A Method for Propagation of Image Editing Based on Improved Convolutional Neural Networks

The method combines an improved convolutional neural network with image editing technology and applies to the field of image editing propagation. It addresses problems such as poor model generalization, color overflow, and poor image coloring.

Embodiment Construction

[0041] The present invention is further described below with reference to the accompanying drawings:

[0042] A method for image editing propagation based on an improved convolutional neural network, comprising the following steps:

[0043] 1) For an image to be processed, add color strokes to the image interactively to obtain the stroke map shown in Figure 1;

[0044] 2) Extract a training set and a test set from the image obtained in step 1), used for training and testing the model, respectively;

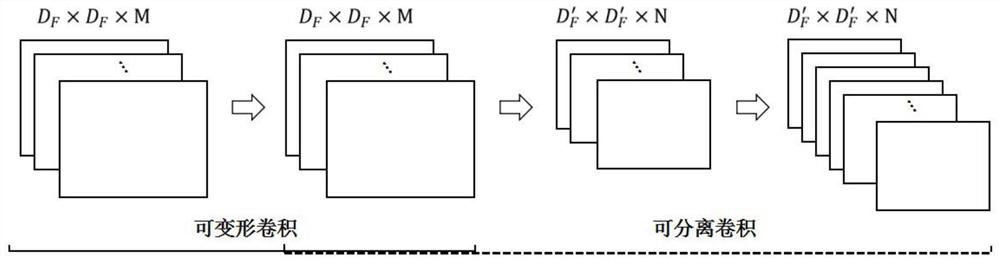

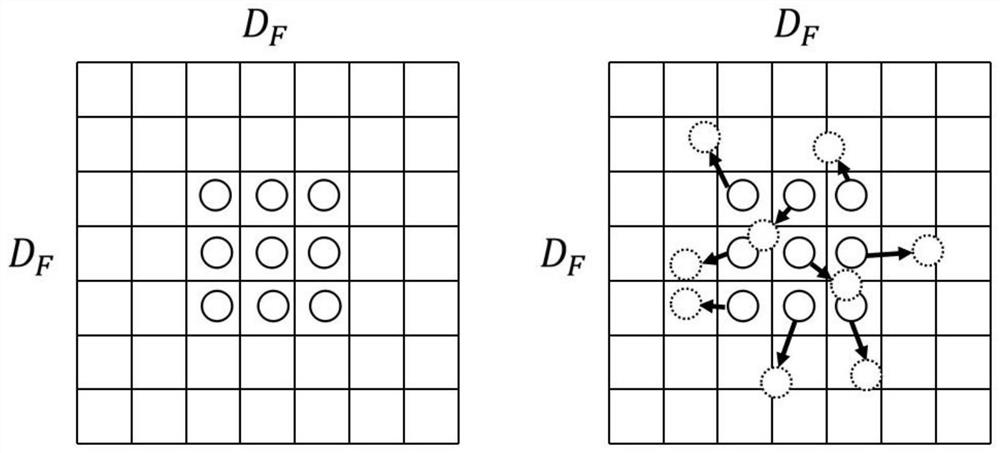

[0045] 3) Use the combined convolution structure in Figure 2 to construct the two-branch convolutional neural network in Figure 5, and train it on the training set; the combined convolution is composed of the deformable convolution in Figure 3 and the separable convolution in Figure 4;

[0046] 4) Test the trained network on the test set to achieve the edit propagation effect shown in Figure 6;
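As an illustration of the separable convolution named in step 3), the following is a minimal sketch in plain Python, not the patented implementation. It assumes the common depthwise separable form (a per-channel k x k filter followed by a 1 x 1 pointwise convolution), which the text above does not spell out. The function names `conv_param_counts` and `depthwise_separable_conv` are hypothetical, and the deformable convolution half of the combined structure is not covered here.

```python
def conv_param_counts(c_in, c_out, k):
    """Weight counts (biases ignored) for a k x k standard convolution
    versus a depthwise separable one with the same channel sizes."""
    standard = k * k * c_in * c_out
    # Depthwise part: one k x k kernel per input channel.
    # Pointwise part: a 1 x 1 convolution mixing c_in channels into c_out.
    separable = k * k * c_in + c_in * c_out
    return standard, separable


def depthwise_separable_conv(x, depthwise, pointwise):
    """Assumed depthwise separable convolution (stride 1, no padding).

    x:         input indexed as [c_in][h][w]
    depthwise: kernels indexed as [c_in][k][k]
    pointwise: weights indexed as [c_out][c_in]
    Returns the output indexed as [c_out][h - k + 1][w - k + 1].
    """
    c_in = len(x)
    h, w = len(x[0]), len(x[0][0])
    k = len(depthwise[0])
    out_h, out_w = h - k + 1, w - k + 1

    # Depthwise step: each channel is filtered only by its own kernel
    # (cross-correlation, as in most deep learning frameworks).
    mid = [
        [
            [
                sum(
                    x[c][i + di][j + dj] * depthwise[c][di][dj]
                    for di in range(k)
                    for dj in range(k)
                )
                for j in range(out_w)
            ]
            for i in range(out_h)
        ]
        for c in range(c_in)
    ]

    # Pointwise step: a 1 x 1 convolution combines the channels per pixel.
    return [
        [
            [sum(pw[c] * mid[c][i][j] for c in range(c_in)) for j in range(out_w)]
            for i in range(out_h)
        ]
        for pw in pointwise
    ]


if __name__ == "__main__":
    print(conv_param_counts(64, 128, 3))  # (73728, 8768)
```

With 64 input channels, 128 output channels, and 3 x 3 kernels, the separable form needs 8,768 weights instead of 73,728. Savings of this kind are the usual reason for choosing a separable convolution inside a larger block.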

[0047] The function of this method is basically the same as that of the existing editing propagation method. Its improve...
