Feature extraction method and apparatus, computer program, storage medium and electronic device

A feature extraction technology based on global features, applied in the field of computer vision. It addresses the problems that the importance of information is not considered and that detection accuracy is low, and it achieves the effect of improving the accuracy of image processing.


Examples

Embodiment 1

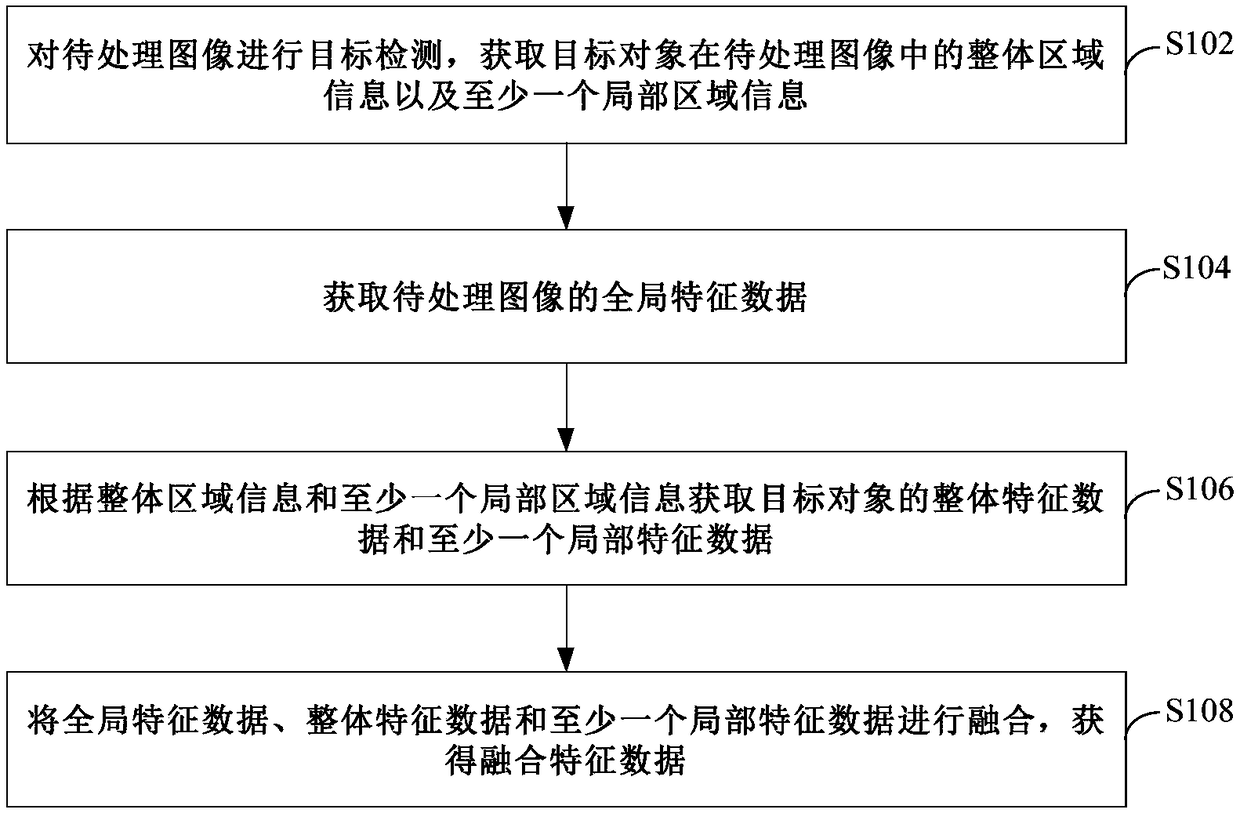

[0038] Referring to Figure 1, a flowchart of the steps of a feature extraction method according to Embodiment 1 of the present invention is shown.

[0039] The feature extraction method of the present embodiment comprises the following steps:

[0040] Step S102: Perform target detection on the image to be processed, and obtain the overall area information and at least one piece of local area information of the target object in the image to be processed.

[0041] The image to be processed may be an image of any scene that has been photographed, drawn, or captured. The image to be processed contains a target object, which can be any object such as a person, an animal, or a vehicle. The overall area information of the target object is information such as the position and size of the entire area containing the target object in the image to be processed, and the local area information is information such as the position and size of at least one local area containing the t...
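The output of step S102 can be pictured as a small data structure. The following is a minimal sketch, not the patent's own representation: the names `Region` and `DetectionResult`, the pixel-based `(x, y, width, height)` encoding, and the example coordinates are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class Region:
    """Position and size of a rectangular area, in pixels (assumed encoding)."""
    x: int        # left edge
    y: int        # top edge
    width: int
    height: int


@dataclass
class DetectionResult:
    """Hypothetical container for step S102: one overall area, one or more local areas."""
    overall: Region
    local: List[Region] = field(default_factory=list)

    def __post_init__(self) -> None:
        # The method requires at least one piece of local area information.
        if not self.local:
            raise ValueError("at least one local area is required")


# Made-up example: a person as the target object, with two local areas.
result = DetectionResult(
    overall=Region(40, 20, 100, 240),
    local=[Region(70, 20, 40, 40), Region(50, 60, 80, 100)],
)
```

Keeping position and size together in one immutable record makes it straightforward to pass the same area information to whichever later step extracts features from that area.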

Embodiment 2

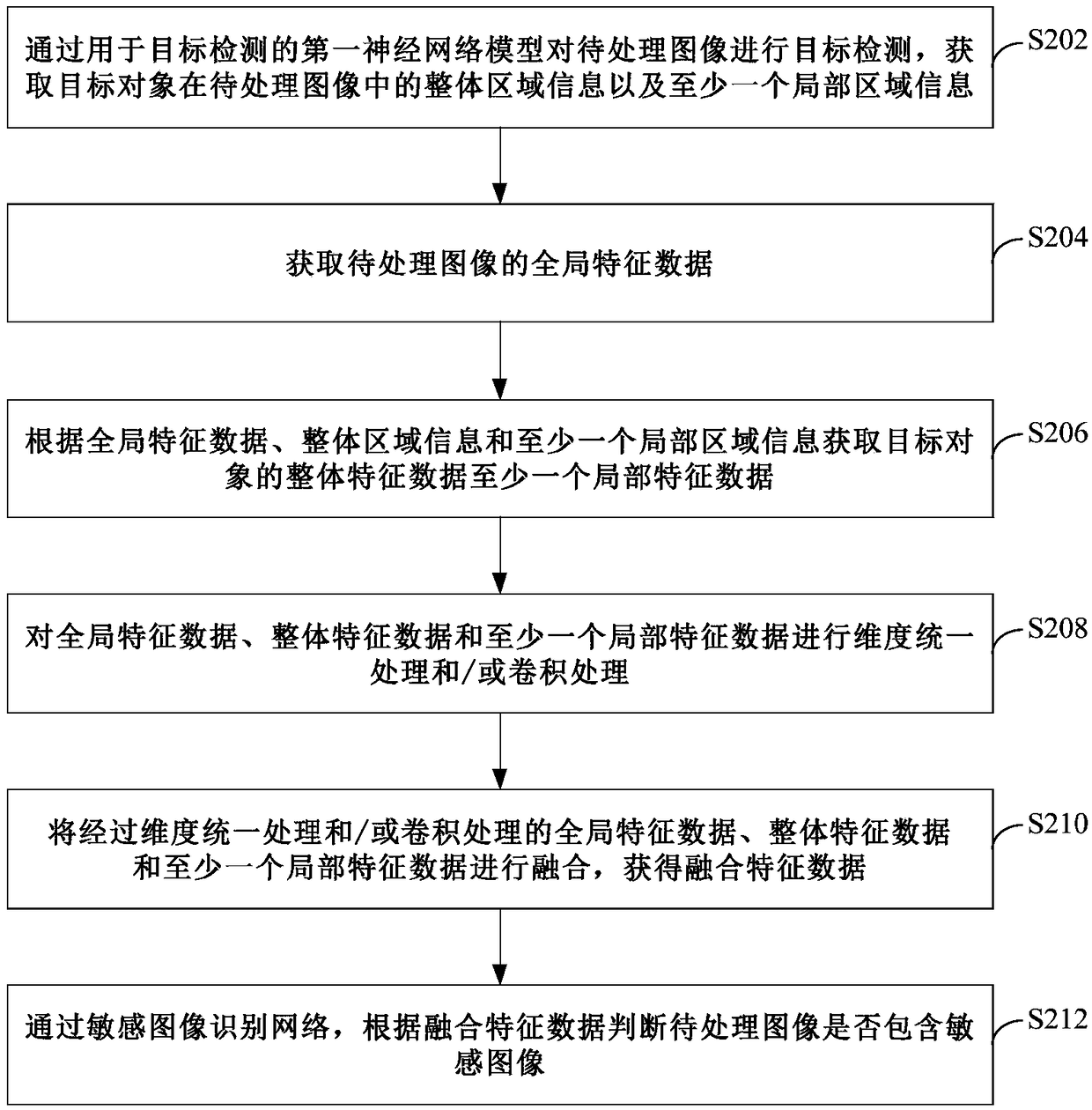

[0055] Referring to Figure 2, a flowchart of the steps of a feature extraction method according to Embodiment 2 of the present invention is shown.

[0056] The feature extraction method of the present embodiment comprises the following steps:

[0057] Step S202: Perform target detection on the image to be processed by using the first neural network model for target detection, and obtain the overall area information and at least one piece of local area information of the target object in the image to be processed.

[0058] In this embodiment, after the image to be processed is acquired, it is input to the first neural network model for target detection. The first neural network model performs target detection on the image to be processed to obtain the overall area information and at least one piece of local area information of the target object in the image to be processed.

[0059] Step S204: Obtain global feature data of the image to be processed.

[006...

Embodiment 3

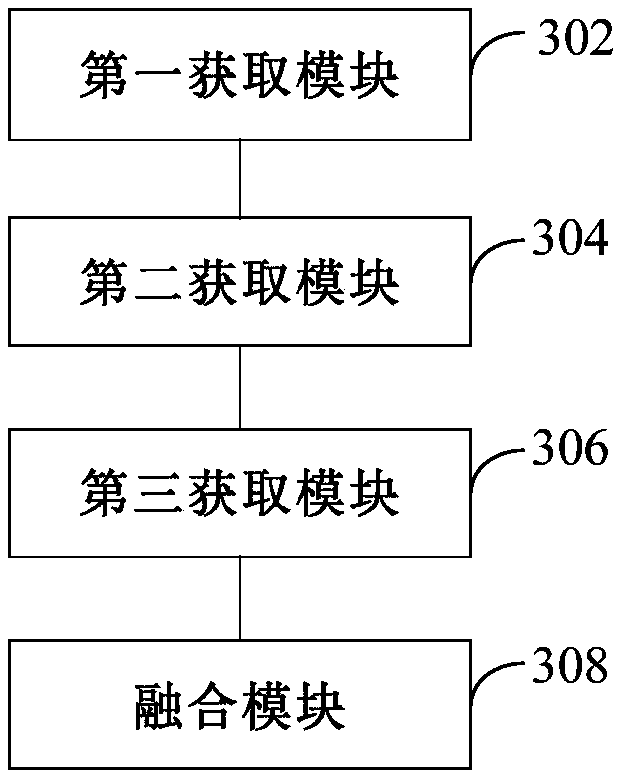

[0083] Referring to Figure 3, a structural block diagram of a feature extraction device according to Embodiment 3 of the present invention is shown.

[0084] The feature extraction device in this embodiment of the present invention includes: a first acquisition module 302, configured to perform target detection on the image to be processed and acquire the overall area information and at least one piece of local area information of the target object in the image to be processed; a second acquisition module 304, configured to acquire the global feature data of the image to be processed; a third acquisition module 306, configured to acquire the overall feature data and at least one piece of local feature data of the target object according to the overall area information and the at least one piece of local area information; and a fusion module 308, configured to fuse the global feature data, the overall feature data, and the at least one piece of local feature data to obtain fused feature data.
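The data flow through modules 302 to 308 can be sketched in a few lines. This is an illustrative sketch only: the text above does not say how features are extracted or fused, so the mean-pixel extractor, the fusion by simple concatenation, the stub detector, and every function name below are assumptions.

```python
from typing import Callable, List, Tuple

Box = Tuple[int, int, int, int]   # (x, y, width, height), assumed encoding
Image = List[List[float]]         # grayscale image as rows of pixel values
Features = List[float]


def crop(image: Image, box: Box) -> Image:
    """Cut the rectangular area described by `box` out of the image."""
    x, y, w, h = box
    return [row[x:x + w] for row in image[y:y + h]]


def mean_feature(image: Image) -> Features:
    """Placeholder extractor: the mean pixel value as a 1-element feature vector."""
    pixels = [p for row in image for p in row]
    return [sum(pixels) / len(pixels)]


def extract_fused_features(
    image: Image,
    detect: Callable[[Image], Tuple[Box, List[Box]]],
    extract: Callable[[Image], Features] = mean_feature,
) -> Features:
    overall_box, local_boxes = detect(image)            # role of module 302
    global_feat = extract(image)                        # role of module 304
    overall_feat = extract(crop(image, overall_box))    # role of module 306
    local_feats = [extract(crop(image, b)) for b in local_boxes]
    # Role of module 308; concatenation is an assumed fusion strategy.
    fused = list(global_feat) + list(overall_feat)
    for feat in local_feats:
        fused.extend(feat)
    return fused


def stub_detect(image: Image) -> Tuple[Box, List[Box]]:
    """Hypothetical detector returning fixed boxes instead of running a model."""
    return (1, 1, 2, 2), [(1, 1, 1, 1)]


image = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 4.0, 8.0, 0.0],
    [0.0, 8.0, 4.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
fused = extract_fused_features(image, stub_detect)
print(fused)  # [1.5, 6.0, 4.0]
```

Passing the detector and extractor in as callables mirrors the modular structure of the device: each module can be swapped (for example, for a neural network model) without touching the fusion step.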

[0085] Optionally, the first ac...
