Method for identifying sound scenes based on CNN (convolutional neural network) and random forest classification

A sound scene recognition technology that combines random forest classification with a convolutional neural network, applicable to speech recognition, character and pattern recognition, speech analysis, and related fields. It addresses problems such as models that are difficult to train, the dependence of the recognition effect, and increased model complexity, and achieves an improved recognition rate, lower computing-resource and training-time requirements, and a simple CNN structure.

Embodiment Construction

[0046] The technical solution of the present invention is described in detail below with reference to the accompanying drawings.

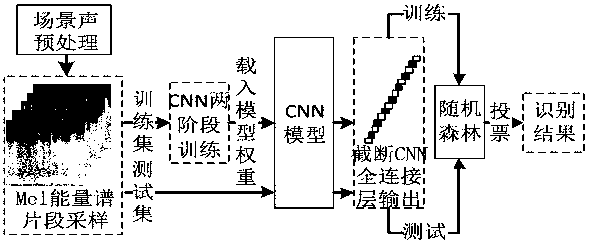

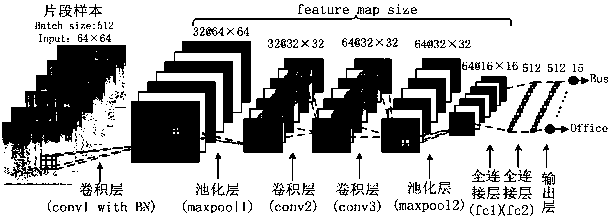

[0047] In the sound scene recognition method of the present invention, which is based on a convolutional neural network and random forest classification, the sound scene recordings are first passed through a Mel filter to generate Mel energy spectra and a segment sample set. The segment sample set is then used to train the CNN in two stages, and the output of the fully connected layer is truncated and taken as the CNN features of the segment samples. Finally, a random forest classifies these CNN features to produce the final recognition result.
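The final stage of [0047], classifying feature vectors with a random forest, can be sketched as below. This is a hedged, NumPy-only illustration rather than the patent's implementation: `X` stands in for CNN features truncated from the fully connected layer (the CNN and its two-stage training are not shown), and the Gini split criterion, square-root feature subsampling, tree count and depth are common defaults assumed here, since the source gives no forest hyperparameters. `RandomForest` and its helpers are hypothetical names.

```python
import numpy as np


def _gini(y, n_classes):
    # Gini impurity of an integer label vector.
    p = np.bincount(y, minlength=n_classes) / len(y)
    return 1.0 - np.sum(p * p)


def _majority(y, n_classes):
    # Most frequent class label; used for leaf nodes.
    return int(np.bincount(y, minlength=n_classes).argmax())


def _grow(X, y, n_classes, depth, max_depth, n_sub, rng):
    # Leaf: the node is pure or the depth limit is reached.
    if depth >= max_depth or np.all(y == y[0]):
        return _majority(y, n_classes)
    best = None
    # Each split considers only a random subset of feature dimensions.
    for f in rng.choice(X.shape[1], size=n_sub, replace=False):
        # Every distinct value except the largest is a candidate threshold,
        # so both children are guaranteed to be non-empty.
        for t in np.unique(X[:, f])[:-1]:
            left = X[:, f] <= t
            n_left = int(left.sum())
            score = (n_left * _gini(y[left], n_classes)
                     + (len(y) - n_left) * _gini(y[~left], n_classes)) / len(y)
            if best is None or score < best[0]:
                best = (score, int(f), float(t), left)
    if best is None:  # no sampled feature can separate this node
        return _majority(y, n_classes)
    _, f, t, left = best
    return (f, t,
            _grow(X[left], y[left], n_classes, depth + 1, max_depth, n_sub, rng),
            _grow(X[~left], y[~left], n_classes, depth + 1, max_depth, n_sub, rng))


def _predict_one(node, x):
    # Internal nodes are (feature, threshold, left, right); leaves are ints.
    while isinstance(node, tuple):
        f, t, left, right = node
        node = left if x[f] <= t else right
    return node


class RandomForest:
    # Hypothetical minimal forest; the default hyperparameters are assumptions.
    def __init__(self, n_trees=25, max_depth=8, seed=0):
        self.n_trees = n_trees
        self.max_depth = max_depth
        self.rng = np.random.default_rng(seed)

    def fit(self, X, y):
        X = np.asarray(X, dtype=float)
        y = np.asarray(y, dtype=int)
        self.n_classes = int(y.max()) + 1
        n_sub = max(1, int(np.sqrt(X.shape[1])))
        self.trees = []
        for _ in range(self.n_trees):
            # Bootstrap: sample training rows with replacement for each tree.
            idx = self.rng.integers(0, len(X), size=len(X))
            self.trees.append(
                _grow(X[idx], y[idx], self.n_classes, 0, self.max_depth, n_sub, self.rng))
        return self

    def predict(self, X):
        X = np.asarray(X, dtype=float)
        # Majority vote over all trees for each sample.
        votes = np.array([[_predict_one(t, x) for t in self.trees] for x in X])
        return np.array([np.bincount(v, minlength=self.n_classes).argmax() for v in votes])


# Usage with synthetic stand-in features (not real CNN outputs).
rng = np.random.default_rng(1)
X_train = np.vstack([rng.normal(-3, 1, (100, 16)), rng.normal(3, 1, (100, 16))])
y_train = np.array([0] * 100 + [1] * 100)
forest = RandomForest().fit(X_train, y_train)
print(forest.predict([[-3.0] * 16, [3.0] * 16]))
```

Bootstrap sampling plus a random feature subset per split is what makes the trees diverse; a library implementation such as scikit-learn's `RandomForestClassifier` would normally replace this hand-written version in practice.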

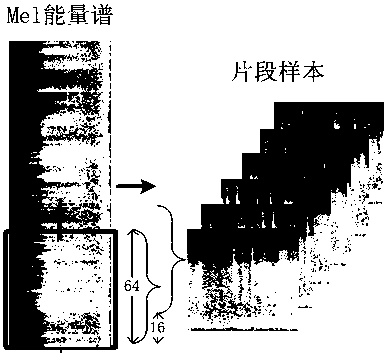

[0048] Generating the Mel energy spectrum and its segment sample set through the Mel filter means that the Mel energy spectrum is extracted from scene sound samples of varying lengths and then sampled in slices, yielding Mel energy spectrum segments of the same size as...
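The extraction and slicing in [0048] can be sketched as below. This is a minimal NumPy sketch under stated assumptions, not the patent's procedure: the HTK-style Mel formula, the frame size (`n_fft`), hop length, number of Mel bands, log compression, and the segment length and hop (`seg_frames`, `hop_frames`) are all assumed, because the source does not give them (and the paragraph is truncated). The function names are hypothetical.

```python
import numpy as np


def hz_to_mel(f):
    # HTK-style Mel scale (assumed; the source does not name a formula).
    return 2595.0 * np.log10(1.0 + np.asarray(f) / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (np.asarray(m) / 2595.0) - 1.0)


def mel_filterbank(n_mels, n_fft, sr):
    # Triangular filters with centres evenly spaced on the Mel scale.
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2.0), n_mels + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sr).astype(int)
    fb = np.zeros((n_mels, n_fft // 2 + 1))
    for m in range(1, n_mels + 1):
        lo, mid, hi = bins[m - 1], bins[m], bins[m + 1]
        for k in range(lo, mid):  # rising edge
            fb[m - 1, k] = (k - lo) / (mid - lo)
        for k in range(mid, hi):  # falling edge
            fb[m - 1, k] = (hi - k) / (hi - mid)
    return fb


def mel_energy_spectrum(signal, sr, n_fft=1024, hop=512, n_mels=40):
    # Returns log Mel energies with shape (n_mels, n_frames).
    # Frame size, hop, band count and the log compression are assumptions.
    signal = np.asarray(signal, dtype=float)
    if len(signal) < n_fft:  # pad very short clips to one full frame
        signal = np.pad(signal, (0, n_fft - len(signal)))
    n_frames = 1 + (len(signal) - n_fft) // hop
    idx = np.arange(n_fft)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = signal[idx] * np.hanning(n_fft)
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2
    mel = power @ mel_filterbank(n_mels, n_fft, sr).T
    return np.log(mel + 1e-10).T


def slice_segments(spec, seg_frames, hop_frames):
    # Cut a variable-length spectrum into equal-size (n_mels, seg_frames)
    # segments; a trailing remainder shorter than seg_frames is dropped.
    starts = range(0, spec.shape[1] - seg_frames + 1, hop_frames)
    segments = [spec[:, s:s + seg_frames] for s in starts]
    if not segments:
        return np.empty((0, spec.shape[0], seg_frames))
    return np.stack(segments)


sr = 16000
clip = np.random.default_rng(0).standard_normal(sr)  # 1 s of noise as a stand-in
spec = mel_energy_spectrum(clip, sr)
segments = slice_segments(spec, seg_frames=10, hop_frames=5)
print(spec.shape, segments.shape)  # (40, 30) (5, 40, 10)
```

With `hop_frames` equal to `seg_frames` the slices do not overlap; a smaller hop, as in the usage above, produces overlapping slices and more segment samples per recording. Either choice is an assumption here.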
