Human action recognition method based on multi-feature depth motion maps

A recognition method using depth motion map technology, applied in character and pattern recognition, instruments, and computing components, that addresses problems such as high feature redundancy, misidentification of similar behaviors, and weak description of local details.


Embodiment Construction

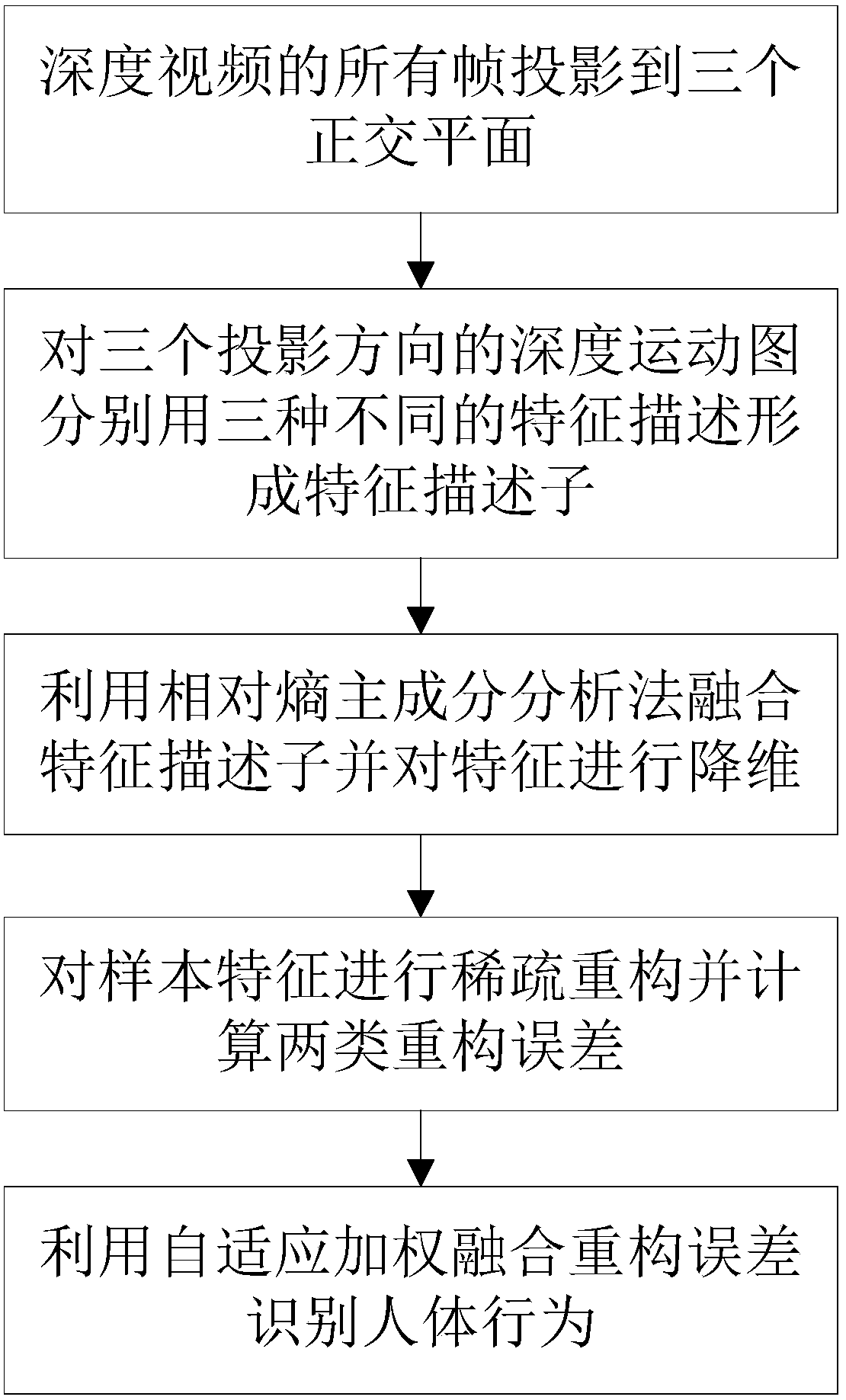

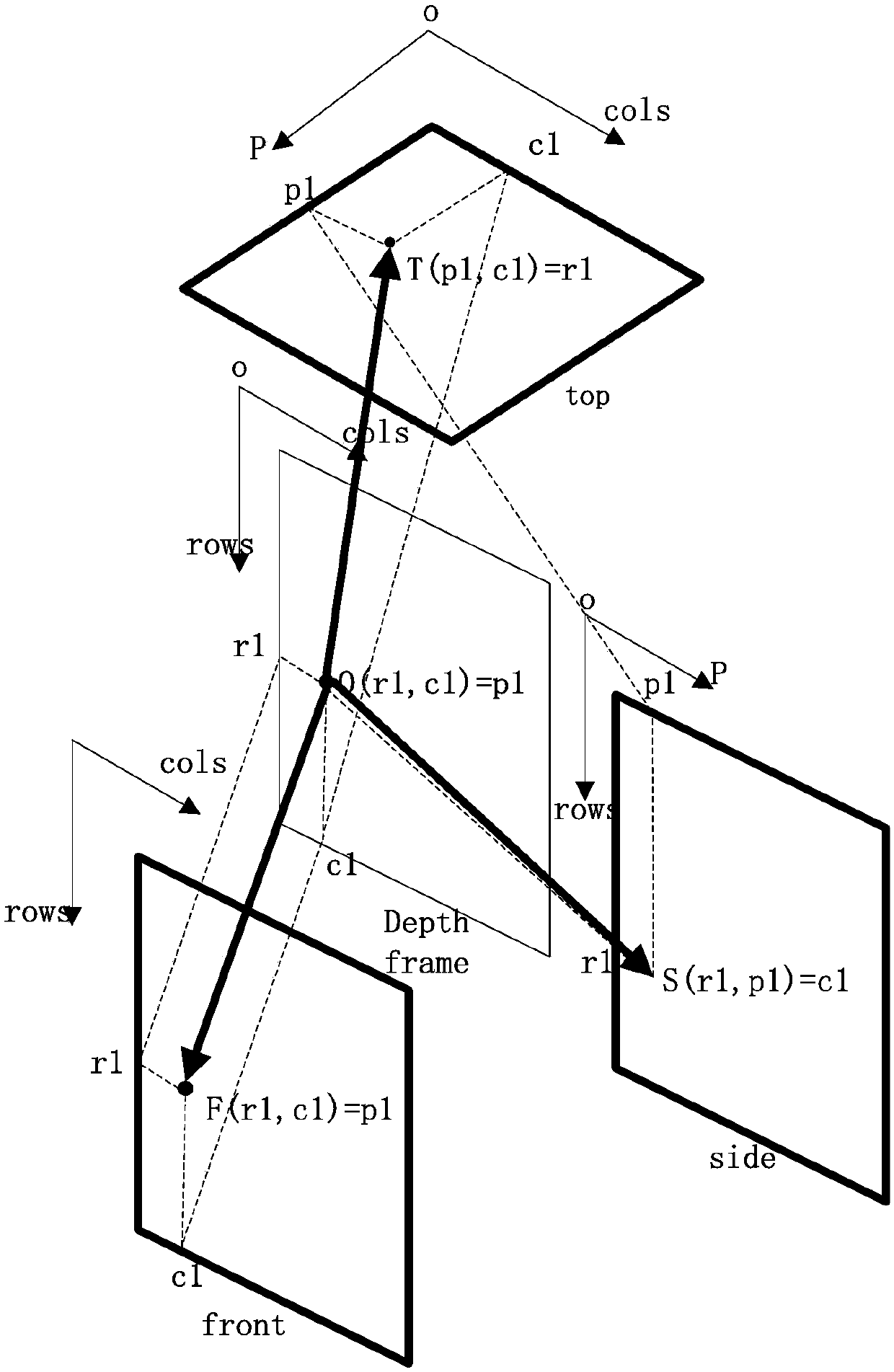

[0081] Specific embodiments of the present invention are described below with reference to the accompanying drawings. Figure 1 is a schematic flow chart of human behavior recognition based on a multi-feature depth motion map in this embodiment. The invention discloses a human behavior recognition method based on a multi-feature depth motion map, implemented in the following steps: (1) project every frame of the depth video onto three orthogonal planes (front, side, and top); (2) for each plane, stack the absolute differences between consecutive projected frames to form a Depth Motion Map $\mathrm{DMM}_v$ ($v \in \{f, s, t\}$), then extract LBP, GIST, and HOG features from each DMM, forming the feature descriptors corresponding to the three directions; (3) perform feature fusion and dimensionality reduction on the three different feature descriptors; (4) for each class of behavior samples, compute the sparse reconstruction errors of the feature vectors based on the $\ell_1$ norm and the $\ell_2$ norm o...
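Steps (1) and (2) can be sketched as follows. This is a minimal NumPy sketch, not the patent's implementation: the function names `project_frame` and `depth_motion_maps` are hypothetical, depth values are assumed to be integers already quantized to `[0, num_bins)`, and the side and top views are assumed to be binary occupancy maps, since the source does not say how they are built.

```python
import numpy as np


def project_frame(depth, num_bins):
    """Project one depth frame onto the front, side and top planes.

    Assumption (not from the source): depth values are integers already
    quantized to [0, num_bins), and the side/top views are binary
    occupancy maps of the foreground (depth > 0) pixels.
    """
    h, w = depth.shape
    front = depth.astype(np.float64)   # front view: the depth map itself
    side = np.zeros((h, num_bins))     # (height, depth) plane
    top = np.zeros((num_bins, w))      # (depth, width) plane
    rows, cols = np.nonzero(depth > 0)
    z = np.clip(depth[rows, cols].astype(int), 0, num_bins - 1)
    side[rows, z] = 1.0
    top[z, cols] = 1.0
    return {"f": front, "s": side, "t": top}


def depth_motion_maps(video, num_bins):
    """DMM_v = sum_i |map_v^(i+1) - map_v^i| for v in {f, s, t}.

    `video` is a sequence of 2-D depth frames of equal shape.
    """
    projections = [project_frame(np.asarray(frame), num_bins) for frame in video]
    dmms = {}
    for v in ("f", "s", "t"):
        acc = np.zeros_like(projections[0][v])
        # Accumulate the absolute difference of each consecutive pair.
        for prev, curr in zip(projections, projections[1:]):
            acc += np.abs(curr[v] - prev[v])
        dmms[v] = acc
    return dmms
```

Because each DMM sums frame-to-frame differences, pixels that never change (for example, a static background) contribute nothing, so the map concentrates on where motion occurred in each view.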
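LBP is the simplest of the three descriptors named in step (2). The sketch below computes a basic radius-1, 8-neighbour LBP histogram over a whole DMM. The function name is hypothetical, and the patent may use a different LBP variant (for example, uniform patterns or block-wise histograms), which the source does not state. GIST and HOG are not sketched here.

```python
import numpy as np


def lbp_histogram(image):
    """Basic 8-neighbour, radius-1 LBP codes -> normalized 256-bin histogram."""
    img = np.asarray(image, dtype=np.float64)
    h, w = img.shape
    if h < 3 or w < 3:
        raise ValueError("LBP needs an image of at least 3x3 pixels")
    center = img[1:-1, 1:-1]
    # Neighbour offsets clockwise from top-left; bit k is set when
    # neighbour k is >= the centre pixel.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros(center.shape, dtype=np.int64)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = img[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        codes |= (neighbour >= center).astype(np.int64) << bit
    hist = np.bincount(codes.ravel(), minlength=256).astype(np.float64)
    return hist / hist.sum()
```

Border pixels are skipped rather than padded, so an `h x w` map yields `(h - 2) * (w - 2)` codes.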
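Step (3) does not say which fusion or dimensionality-reduction method is used. One common choice, assumed here, is serial fusion (per-sample L2 normalization of each descriptor, then concatenation) followed by PCA. The helper names `fuse_descriptors`, `pca_fit` and `pca_transform` are hypothetical.

```python
import numpy as np


def fuse_descriptors(*descriptor_sets):
    """Serial fusion of per-sample descriptors.

    Each argument is an (n_samples, d_i) array, e.g. LBP, GIST or HOG
    descriptors. Each block is L2-normalized per sample first so that no
    descriptor dominates purely because of its scale (an assumption).
    """
    blocks = []
    for X in descriptor_sets:
        X = np.asarray(X, dtype=np.float64)
        norms = np.linalg.norm(X, axis=1, keepdims=True)
        blocks.append(X / np.maximum(norms, 1e-12))
    return np.hstack(blocks)


def pca_fit(X, n_components):
    """Return the data mean and the top principal axes (as rows)."""
    mean = X.mean(axis=0)
    # Right singular vectors of the centred data are the principal axes.
    _, _, vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, vt[:n_components]


def pca_transform(X, mean, components):
    """Project samples onto the retained principal axes."""
    return (X - mean) @ components.T
```

The PCA basis would be fitted on training samples only and then reused to project test samples.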
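Step (4) is truncated in the source, so its exact decision rule is unknown. The sketch below illustrates the general idea of classifying by class-wise reconstruction error, using two standard coders swapped in as assumptions: $\ell_1$-regularized coding solved with ISTA (as in sparse representation classification) and $\ell_2$-regularized (ridge) coding. How the patent combines the two errors is not stated, so each coder is used on its own here. `lam`, `n_iter` and all function names are hypothetical.

```python
import numpy as np


def l2_code(D, y, lam=0.01):
    """Ridge coding: argmin_x ||y - D x||^2 + lam * ||x||^2 (closed form)."""
    k = D.shape[1]
    return np.linalg.solve(D.T @ D + lam * np.eye(k), D.T @ y)


def l1_code(D, y, lam=0.01, n_iter=500):
    """l1 coding via ISTA: argmin_x 0.5 * ||y - D x||^2 + lam * ||x||_1."""
    step = 1.0 / np.linalg.norm(D, 2) ** 2  # 1 / Lipschitz constant of the gradient
    x = np.zeros(D.shape[1])
    for _ in range(n_iter):
        z = x - step * (D.T @ (D @ x - y))                          # gradient step
        x = np.sign(z) * np.maximum(np.abs(z) - step * lam, 0.0)  # soft threshold
    return x


def classify(D, labels, y, coder):
    """Assign y to the class whose training columns reconstruct it best.

    D: (d, n) dictionary whose columns are training feature vectors.
    labels: (n,) class label of each column.
    """
    x = coder(D, y)
    residuals = {}
    for c in np.unique(labels):
        mask = labels == c
        residuals[c] = np.linalg.norm(y - D[:, mask] @ x[mask])
    return min(residuals, key=residuals.get), residuals
```

The dictionary columns (training feature vectors after step (3)) are usually L2-normalized before coding so that the residuals of different classes are comparable.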

