Video motion recognition method based on fusion of sorting pooling and spatial features

An action recognition technique built on basic visual features and applied in the field of video recognition. It can solve problems such as loss, improving recognition accuracy and descriptive performance.

Embodiment Construction

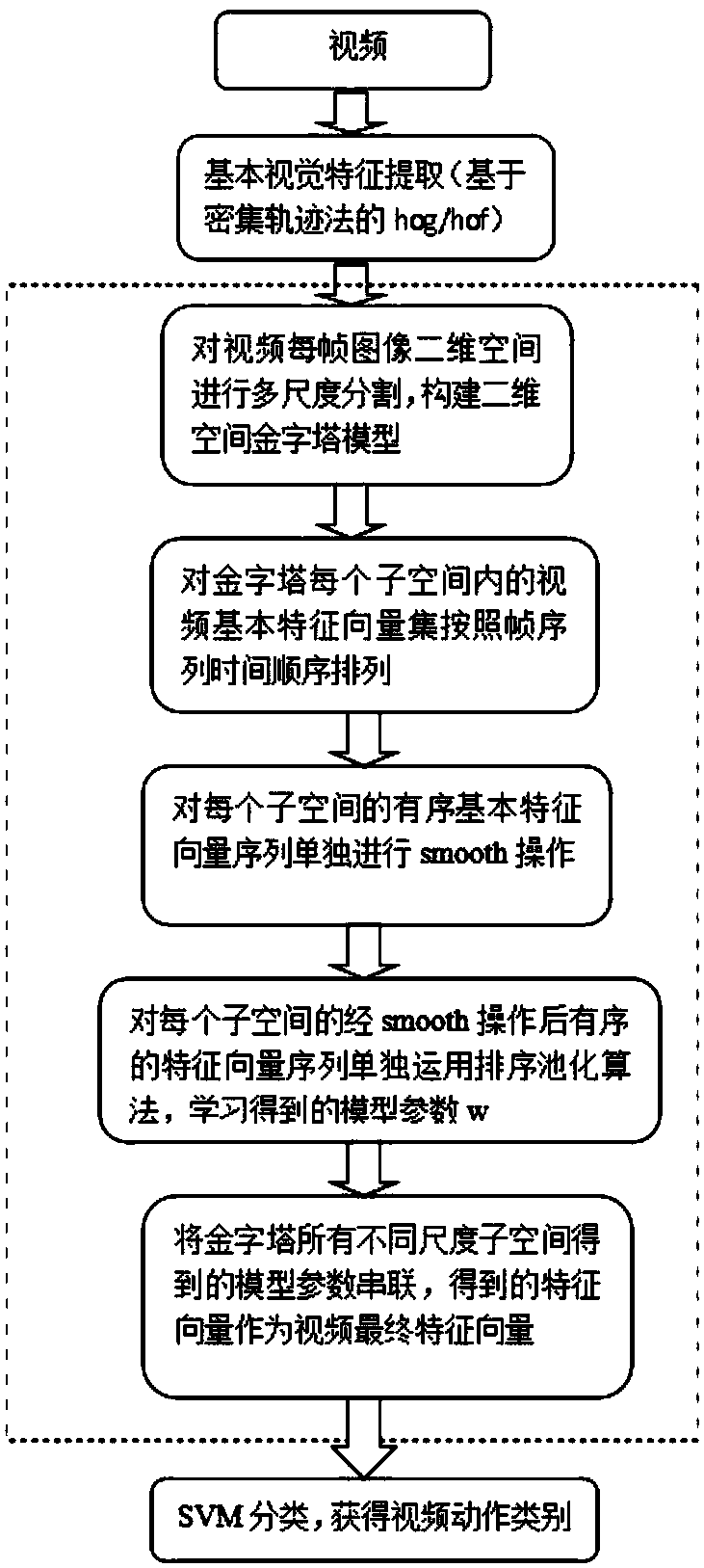

[0016] With reference to Figure 2, a video action recognition method based on sorting pooling fused with spatial features comprises the following steps:

[0017] Step 1, use a video local feature descriptor algorithm to extract a set of basic visual feature vectors from each video;

[0018] Step 2, perform multi-scale segmentation of the two-dimensional space of every frame of each video, and construct a two-dimensional spatial pyramid model;

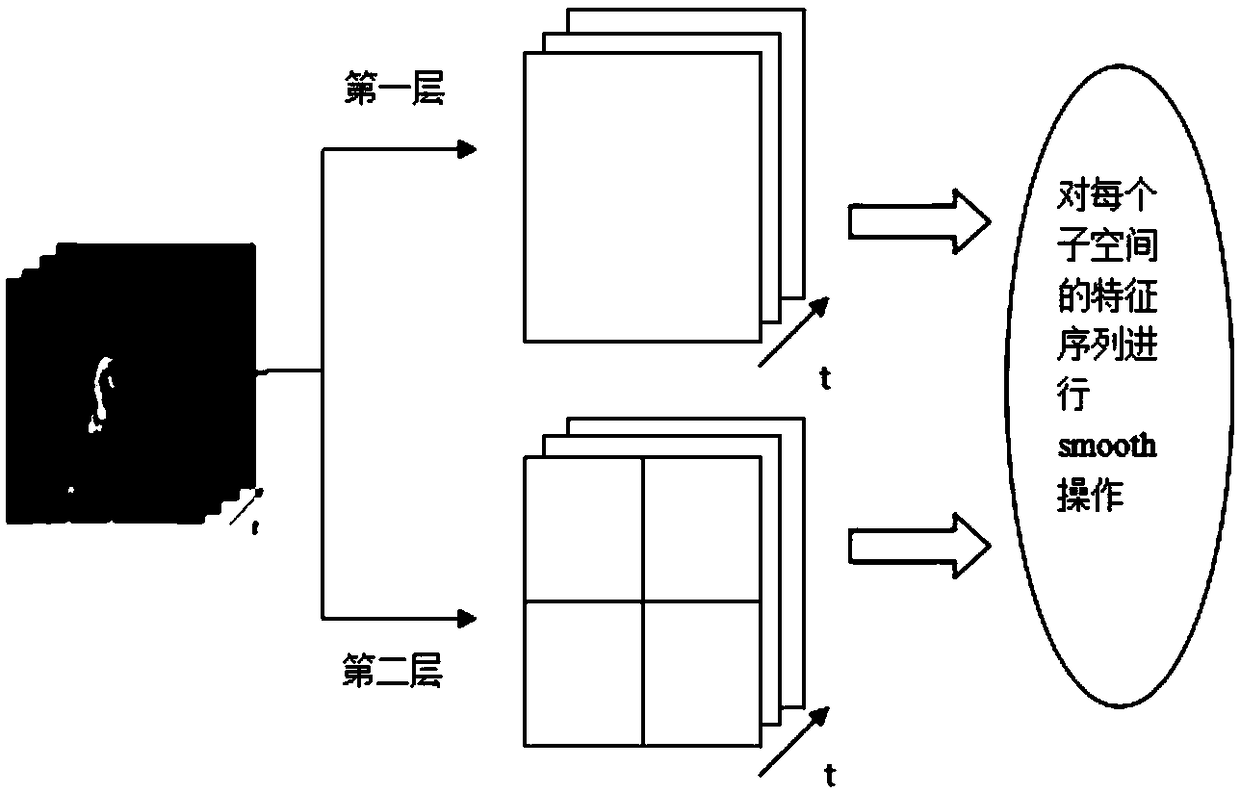

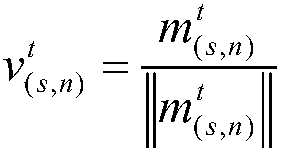

[0019] Step 3, arrange the set of basic video feature vectors in each subspace of the pyramid model in the temporal order of the frame sequence;

[0020] Step 4, perform a smoothing operation on the time-ordered sequence of basic feature vectors in each subspace;

[0021] Step 5, apply the sorting pooling algorithm separately to the smoothed, time-ordered feature vector sequence in each subspace, and learn the model parameters belonging to that subspace;

[0022] Step 6, concatenate the model parameters obtained from all subspa...
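Steps 2 to 6 above can be sketched in plain Python as follows. This is an illustrative sketch under stated assumptions, not the patented implementation: the pyramid levels (1x1 and 2x2), the per-frame mean used to aggregate descriptors within a cell, and the time-varying mean used as the smoothing operation are all assumptions, since the text does not specify them. Sorting pooling is commonly known as rank pooling and is normally learned with a ranking model; here it is replaced by a closed-form approximation, namely one gradient step of the pairwise ranking hinge loss starting from zero, which weights the t-th of T vectors by 2t - T - 1. All function names are hypothetical.

```python
from collections import defaultdict


def pyramid_cells(levels=(1, 2)):
    """Yield (n, row, col) for every cell of an n x n grid at each level (assumed levels)."""
    for n in levels:
        for r in range(n):
            for c in range(n):
                yield n, r, c


def cell_sequence(features, n, r, c, dim):
    """Steps 2-3: descriptors falling in one cell, averaged per frame, in frame order.

    features: list of (frame, x, y, descriptor), with x and y normalised to [0, 1].
    The per-frame mean is an assumption made for this sketch.
    """
    per_frame = defaultdict(list)
    for frame, x, y, desc in features:
        if min(int(y * n), n - 1) == r and min(int(x * n), n - 1) == c:
            per_frame[frame].append(desc)
    seq = []
    for frame in sorted(per_frame):  # temporal order of the frame sequence
        descs = per_frame[frame]
        seq.append([sum(d[i] for d in descs) / len(descs) for i in range(dim)])
    return seq


def smooth(seq):
    """Step 4 (assumed form): time-varying mean v_t = mean(x_1, ..., x_t)."""
    out, running = [], None
    for t, x in enumerate(seq, start=1):
        running = list(x) if running is None else [a + b for a, b in zip(running, x)]
        out.append([a / t for a in running])
    return out


def approx_rank_pool(seq, dim):
    """Step 5 (approximation, not a trained ranker): u = sum_t (2t - T - 1) * v_t."""
    T = len(seq)
    u = [0.0] * dim
    for t, v in enumerate(seq, start=1):
        w = 2 * t - T - 1
        for i in range(dim):
            u[i] += w * v[i]
    return u  # all zeros when the cell contains no features


def video_descriptor(features, dim, levels=(1, 2)):
    """Step 6: concatenate the per-cell parameters into one fixed-length vector."""
    out = []
    for n, r, c in pyramid_cells(levels):
        seq = smooth(cell_sequence(features, n, r, c, dim))
        out.extend(approx_rank_pool(seq, dim))
    return out


# Toy input: three local features with 2-D descriptors (made-up values).
toy = [
    (0, 0.1, 0.1, [1.0, 0.0]),
    (1, 0.1, 0.1, [3.0, 0.0]),
    (2, 0.9, 0.9, [0.0, 2.0]),
]
descriptor = video_descriptor(toy, dim=2)
print(len(descriptor))  # 5 cells x 2 dimensions
```

The descriptor length is fixed by the number of pyramid cells times the descriptor dimension, regardless of video length, which is what makes the concatenated vector usable as input to a standard classifier. A faithful implementation would replace `approx_rank_pool` with a learned ranking or regression model fitted per subspace.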
