Network object processing method and device

A network object processing method and device, applied in the computer field, that address problems such as the high cost and cumbersome process of classifying objects.

Examples

Embodiment 1

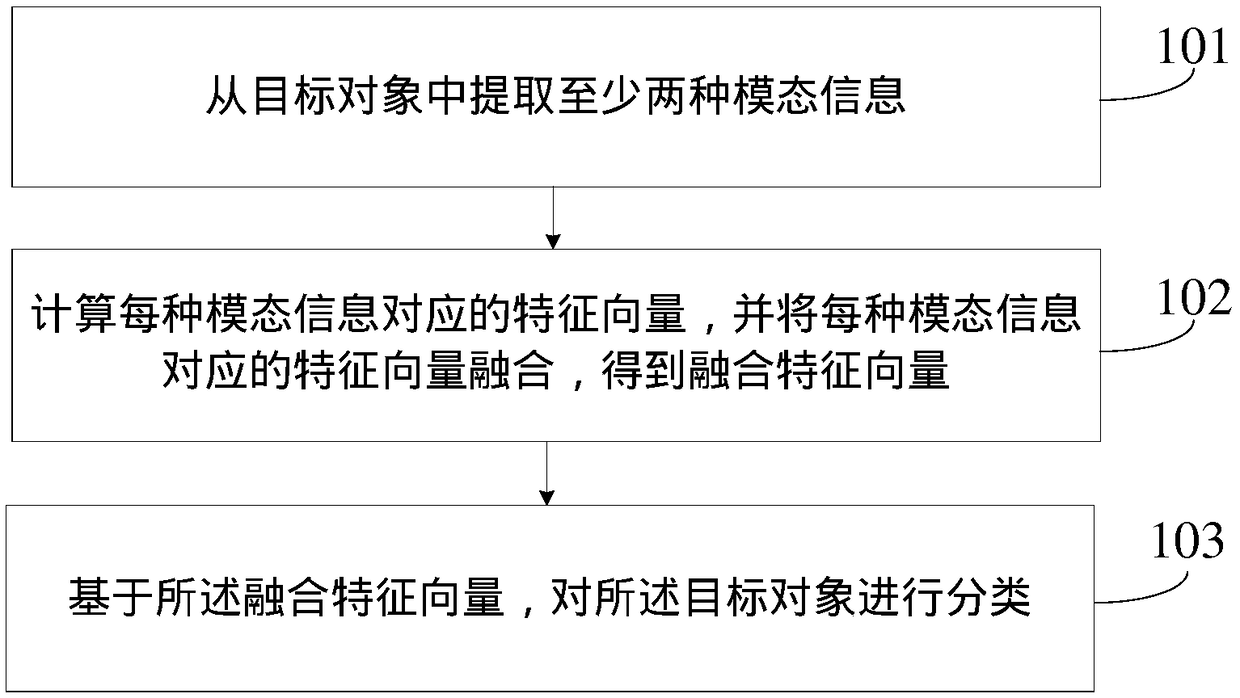

[0065] Figure 1 is a flow chart of the steps of a network object processing method provided in Embodiment 1 of the present invention. As shown in Figure 1, the method may include:

[0066] Step 101. Extract at least two kinds of modality information from the target object.

[0067] In the embodiment of the present invention, the target object may be an object with multimodal information. For example, the target object may be a video, a slideshow file with text content, and so on. Further, the modality information may be text, voice or image, and so on.

[0068] Step 102. Calculate the feature vector corresponding to each kind of modality information, and fuse these feature vectors to obtain a fusion feature vector.

[0069] In the embodiment of the present invention, the terminal calculates the feature vector corresponding to each kind of modality information and then performs feature fusion to obtain the fusion feature vector, so that in the s...
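Step 102 can be illustrated with a minimal sketch. The excerpt does not state how the feature vectors are fused, so the code below assumes plain concatenation of L2-normalized vectors; the function names `l2_normalize` and `fuse_features` and the example values are hypothetical.

```python
from typing import Dict, List, Sequence


def l2_normalize(vec: Sequence[float]) -> List[float]:
    """Scale a vector to unit length; an all-zero vector is returned unchanged."""
    norm = sum(x * x for x in vec) ** 0.5
    return [x / norm for x in vec] if norm > 0 else list(vec)


def fuse_features(features: Dict[str, Sequence[float]]) -> List[float]:
    """Fuse per-modality feature vectors into one fusion feature vector.

    Assumption: fusion is concatenation of L2-normalized vectors, taken in
    sorted modality-name order so the layout of the result is deterministic.
    """
    if len(features) < 2:
        raise ValueError("at least two kinds of modality information are required")
    fused: List[float] = []
    for name in sorted(features):
        fused.extend(l2_normalize(features[name]))
    return fused


# Hypothetical feature vectors for two modalities of one target object.
example = {"text": [3.0, 4.0], "image": [0.0, 2.0, 0.0]}
fused_vector = fuse_features(example)
```

Normalizing before concatenation keeps one modality from dominating the fused vector only because its raw values are larger; other fusion schemes (weighted sums, learned projections) would fit the same interface.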

Embodiment 2

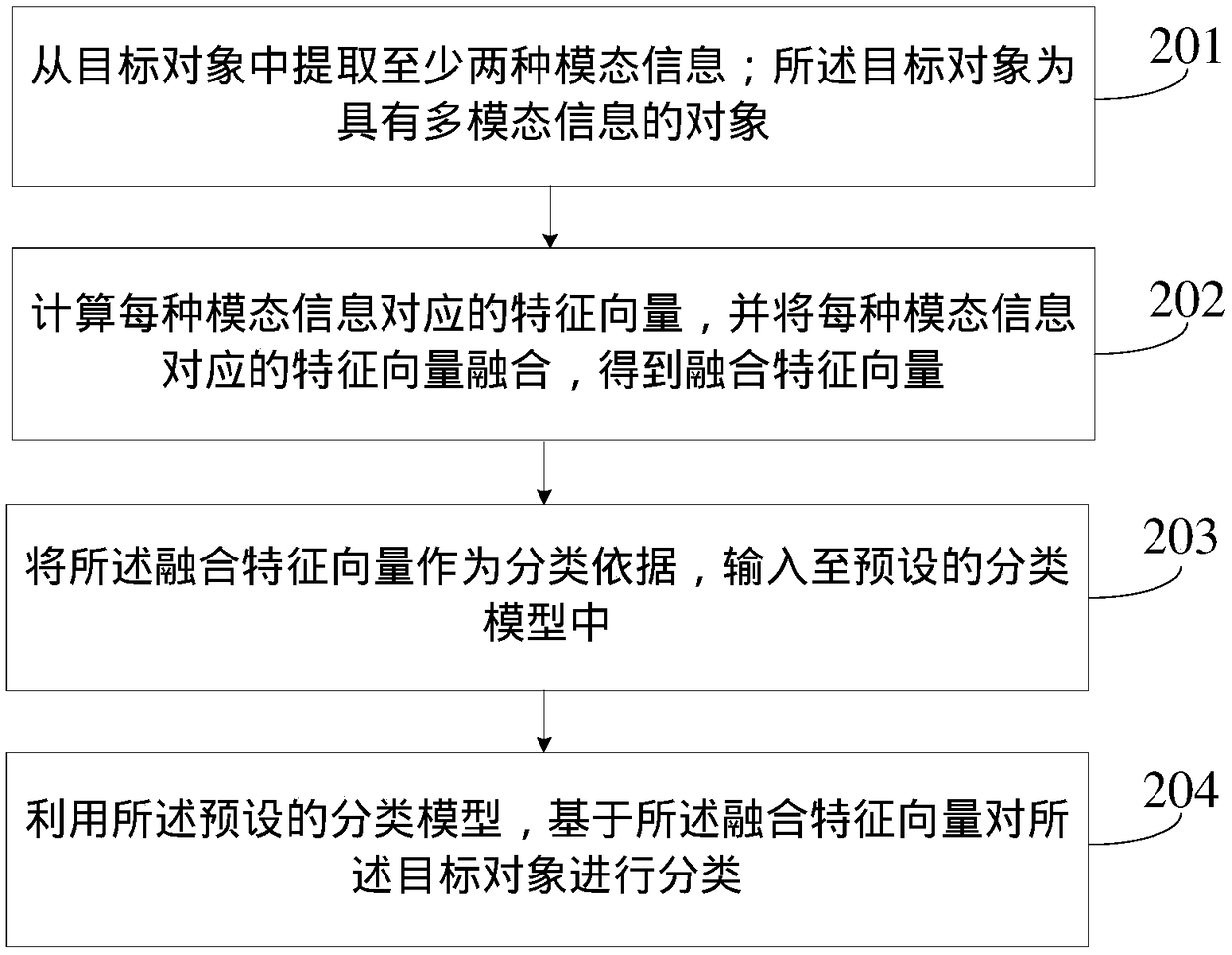

[0074] Figure 2 is a flow chart of the steps of a network object processing method provided in Embodiment 2 of the present invention. As shown in Figure 2, the method may include:

[0075] Step 201. Extract at least two kinds of modal information from a target object; the target object is an object with multi-modal information.

[0076] In this step, the target object can be a target video, and accordingly, step 201 can be realized through the following substeps (1) to (4):

[0077] Sub-step (1): Extract the spectrogram corresponding to the speech information in the target video to obtain the first image.

[0078] In this step, the speech information in the target video refers to the audio contained in the target video, and the spectrogram refers to the spectrogram of that audio. Specifically, the terminal can first extract the audio from the target video, then divide the audio into multiple frames, then calculate the frequency spectrum corresponding to each frame of voice ...
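The framing and per-frame spectrum steps of sub-step (1) might look like the sketch below. It is an illustration only: the frame length, hop size, absence of a window function, and the naive DFT are all assumptions, and the names `split_into_frames`, `magnitude_spectrum`, and `spectrogram` are hypothetical. A synthetic tone stands in for audio extracted from a video.

```python
import cmath
import math
from typing import List, Sequence


def split_into_frames(samples: Sequence[float], frame_len: int, hop: int) -> List[List[float]]:
    """Cut the audio samples into fixed-length frames; a trailing partial frame is dropped."""
    return [list(samples[i:i + frame_len])
            for i in range(0, len(samples) - frame_len + 1, hop)]


def magnitude_spectrum(frame: Sequence[float]) -> List[float]:
    """Magnitude of a naive DFT for frequency bins 0..N/2 of one frame."""
    n = len(frame)
    return [abs(sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(frame)))
            for k in range(n // 2 + 1)]


def spectrogram(samples: Sequence[float], frame_len: int = 64, hop: int = 32) -> List[List[float]]:
    """One row per frame, one column per frequency bin."""
    return [magnitude_spectrum(f) for f in split_into_frames(samples, frame_len, hop)]


# Synthetic stand-in for extracted audio: a tone completing 8 cycles per 64-sample frame.
tone = [math.sin(2 * math.pi * 8 * t / 64) for t in range(256)]
spec = spectrogram(tone)
```

Each row of `spec` is the spectrum of one frame, so the rows can be stacked and rendered as a two-dimensional image. The naive DFT is quadratic in the frame length and is used here only to keep the sketch dependency-free; an FFT routine from a numerical library would normally replace it.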

Embodiment 3

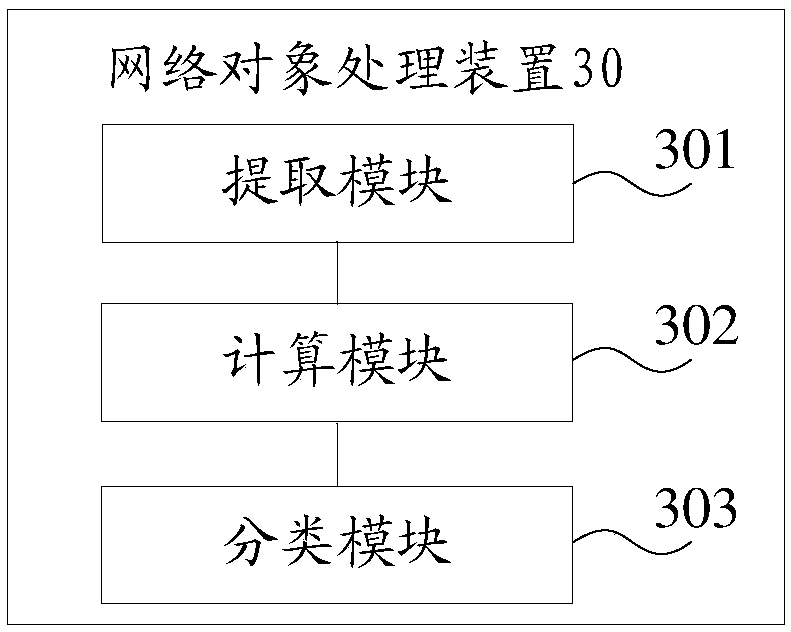

[0115] Figure 3 is a block diagram of a network object processing device provided in Embodiment 3 of the present invention. As shown in Figure 3, the device 30 may include:

[0116] The extraction module 301 is configured to extract at least two kinds of modal information from the target object; the target object is an object with multi-modal information.

[0117] The calculation module 302 is configured to calculate the feature vector corresponding to each kind of modal information, and fuse these feature vectors to obtain a fusion feature vector.

[0118] The classification module 303 is configured to classify the target object based on the fusion feature vector;

[0119] Wherein, the modal information is text, voice or image.

[0120] To sum up, in the network object processing device provided by Embodiment 3 of the present invention, the extraction module can first extract at least two kinds of modal information from the target object, and then t...
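The three-module structure of device 30 can be sketched as three small classes wired together. Everything beyond the module roles is an assumption made for illustration: the extractor and encoder callables, the concatenation-based fusion, and the nearest-centroid classifier are hypothetical placeholders, since the excerpt does not specify how each module is implemented.

```python
from typing import Callable, Dict, List, Sequence

Vector = List[float]


class ExtractionModule:
    """Plays the role of extraction module 301."""

    def __init__(self, extractors: Dict[str, Callable[[dict], object]]):
        if len(extractors) < 2:
            raise ValueError("at least two kinds of modal information are required")
        self.extractors = extractors

    def extract(self, target: dict) -> Dict[str, object]:
        return {name: fn(target) for name, fn in self.extractors.items()}


class CalculationModule:
    """Plays the role of calculation module 302 (fusion by concatenation is assumed)."""

    def __init__(self, encoders: Dict[str, Callable[[object], Vector]]):
        self.encoders = encoders

    def fuse(self, modalities: Dict[str, object]) -> Vector:
        fused: Vector = []
        for name in sorted(modalities):  # fixed order keeps the layout stable
            fused.extend(self.encoders[name](modalities[name]))
        return fused


class ClassificationModule:
    """Plays the role of classification module 303 (nearest centroid is a placeholder)."""

    def __init__(self, centroids: Dict[str, Vector]):
        self.centroids = centroids

    def classify(self, fused: Sequence[float]) -> str:
        def sq_dist(center: Vector) -> float:
            return sum((a - b) ** 2 for a, b in zip(fused, center))
        return min(self.centroids, key=lambda label: sq_dist(self.centroids[label]))


# Toy target object with a text modality and an image modality.
target = {"title": "cooking pasta at home", "frames": [0.9, 0.8, 0.7]}
extraction = ExtractionModule({"text": lambda t: t["title"],
                               "image": lambda t: t["frames"]})
calculation = CalculationModule({
    "text": lambda s: [1.0 if "cooking" in s else 0.0],
    "image": lambda f: [sum(f) / len(f)],
})
classification = ClassificationModule({"food": [0.8, 1.0], "other": [0.2, 0.0]})

label = classification.classify(calculation.fuse(extraction.extract(target)))
```

Keeping the modules separate mirrors the block diagram: each one can be replaced (for example, a different classifier) without touching the other two.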
