Virtual-real fusion blur consistency processing method based on the standard deviation of the line spread function

The technique combines the line spread function with virtual-real fusion and is applied in the field of virtual-real fusion blur consistency processing. It addresses the problems of defocus blur and inaccurate camera focusing.

Embodiment Construction

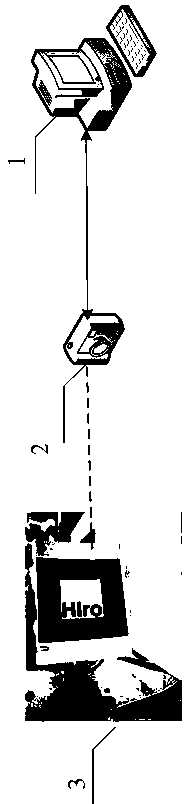

[0031] The present invention is further described below with reference to the accompanying drawing and an embodiment. As shown in Figure 1, in this virtual-real fusion blur consistency processing method based on the standard deviation of the line spread function (LSF), the camera 2 is connected to the computer 1 through a cable, and the real scene 3 contains the Hiro square black-and-white marker card 4 from ARToolKit. The specific steps are as follows:

[0032] Step 1: use the camera 2 to shoot the real scene 3 and obtain the real scene image, denoted $I_1$.

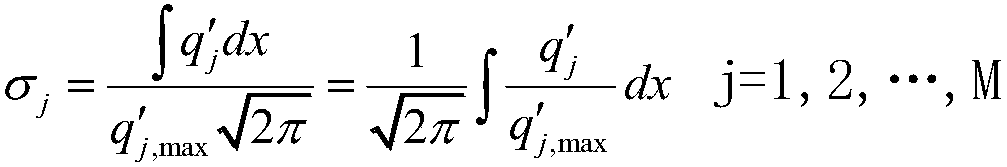

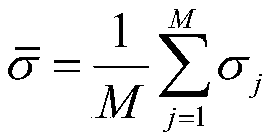

[0033] Step 2: use the rgb2gray function of MATLAB to convert $I_1$ to grayscale, obtaining the real scene grayscale image $I_2$, and according to the formula

[0034] $G_x(f(x,y)) = \dfrac{f(x+1,y) - f(x-1,y)}{2}$

[0035] calculate the gradient $G_x$ of $I_2$ in the horizontal direction, where $(x,y)$ is the pixel of image $I_2$ in row $x$ and column $y$, $f$ is the gray value of image $I_2$ at that pixel, and $f(x+1,y) - f(x-1,y)$ is...
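The grayscale conversion and the central-difference gradient of Step 2 can be illustrated with a minimal pure-Python sketch. It is not the patent's implementation. The function names `rgb_to_gray` and `horizontal_gradient` are hypothetical, the luminance weights are those documented for MATLAB's rgb2gray, the image is assumed to be stored as `gray[y][x]` with `x` running along the horizontal direction, and border pixels are assumed to get a gradient of zero because the text does not say how borders are handled.

```python
def rgb_to_gray(rgb):
    """Convert a nested list of (R, G, B) pixels to gray values.

    Uses the luminance weights documented for MATLAB's rgb2gray:
    0.2989 * R + 0.5870 * G + 0.1140 * B.
    """
    return [[0.2989 * r + 0.5870 * g + 0.1140 * b for (r, g, b) in row]
            for row in rgb]


def horizontal_gradient(gray):
    """Central difference G_x = (f(x+1, y) - f(x-1, y)) / 2.

    Assumptions (not stated in the source): the image is indexed as
    gray[y][x], and the first and last columns are left at 0.0
    because they lack one of the two neighbours.
    """
    height = len(gray)
    width = len(gray[0])
    grad = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(1, width - 1):
            grad[y][x] = (gray[y][x + 1] - gray[y][x - 1]) / 2
    return grad


# Usage on a tiny synthetic image: one row containing a soft edge.
demo_rgb = [[(0, 0, 0), (10, 10, 10), (20, 20, 20), (40, 40, 40)]]
demo_gray = rgb_to_gray(demo_rgb)
demo_grad = horizontal_gradient(demo_gray)
```

Large gradient magnitudes mark edge pixels, which is where a line spread function would later be measured. How the source continues from the gradient is not visible in this excerpt, so the sketch stops here.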

