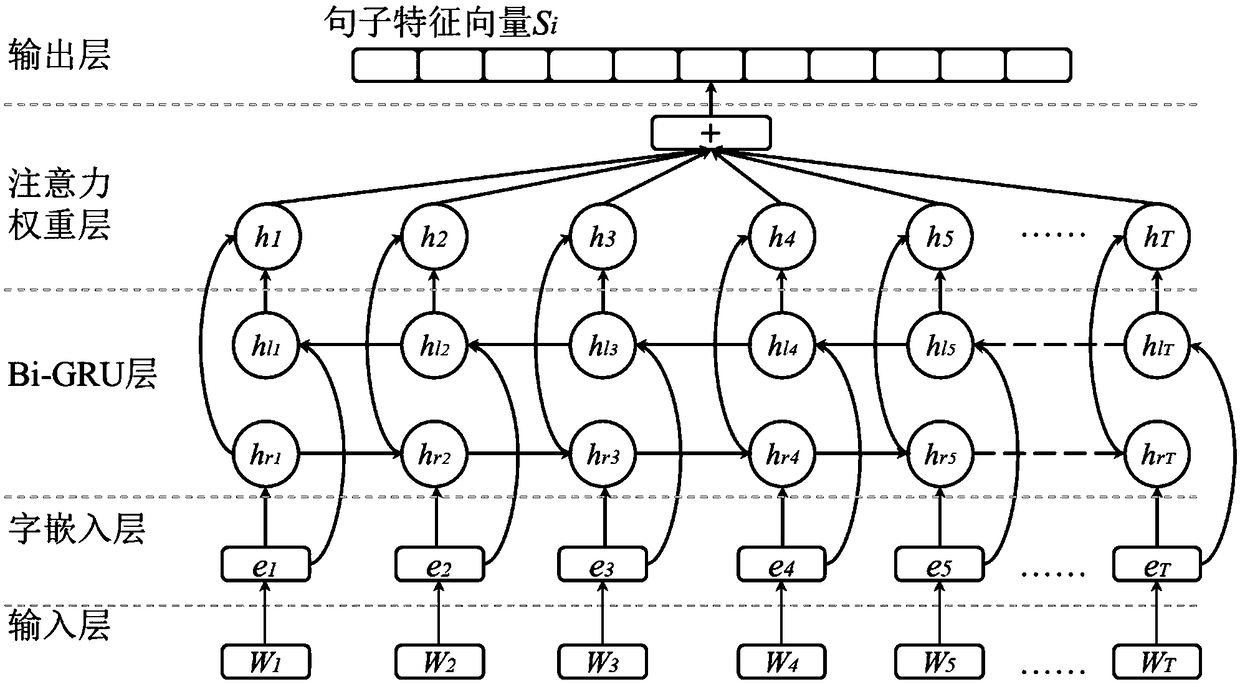

End-to-end classification method of large-scale news text based on Bi-GRU and word vector

An end-to-end classification method for large-scale news text, applied in semantic analysis, electrical digital data processing, biological neural network models, and related fields. It addresses problems such as poor performance on long text and gradient vanishing or explosion in RNN models, and achieves dimensionality reduction together with improved efficiency, accuracy, and performance.

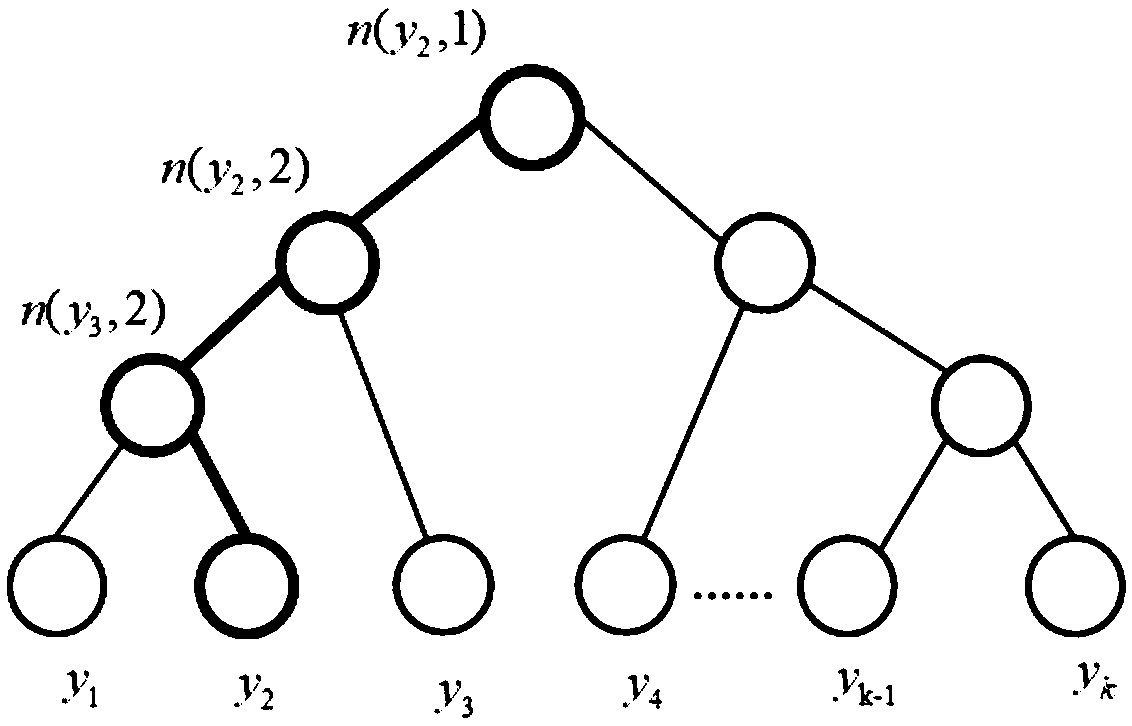

Embodiment

[0062] Transcode the downloaded raw data, label each text with its category, and build the training and test data; then limit the text length, perform word segmentation, and remove punctuation marks. For the news items successfully labeled with one of the 10 categories, count the category distribution, draw 2000 news items from each category, and split them into training and test sets at a ratio of 4:1. The categories are: Finance, IT, Health, Sports, Travel, Military, Culture, Entertainment, Fashion, Auto. Once trained, the model outputs a probability for each category for any piece of news text. For example, for the text "On March 30, Beijing time, according to US media reports, as the number one player in today's NBA, LeBron James always gets cheers from the fans of the away team when he plays in away games.", the classification output is "Sports: 0.76", "Health: 0.12", "Culture: 0.06"..., finally take the one with the highest probability as the classification resul...
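The sampling, 4:1 split, and highest-probability selection described above can be sketched as follows. This is a minimal illustration using only the Python standard library, not the patent's implementation: the function names `sample_and_split` and `top_category`, the `(text, label)` tuple layout, and the fixed random seed are all assumptions introduced here, and the model that produces the probabilities is out of scope.

```python
import random
from collections import defaultdict

# The 10 categories listed in the embodiment.
CATEGORIES = ["Finance", "IT", "Health", "Sports", "Travel",
              "Military", "Culture", "Entertainment", "Fashion", "Auto"]


def sample_and_split(labeled_news, per_category=2000, train_ratio=0.8, seed=0):
    """Draw `per_category` items per label, then split each label 4:1.

    `labeled_news` is assumed to be an iterable of (text, label) pairs.
    Hypothetical helper, not taken from the source.
    """
    by_label = defaultdict(list)
    for text, label in labeled_news:
        by_label[label].append(text)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, test = [], []
    for label, texts in by_label.items():
        if len(texts) < per_category:
            raise ValueError(f"not enough labeled news for {label!r}")
        drawn = rng.sample(texts, per_category)   # sampling without replacement
        cut = round(per_category * train_ratio)   # 1600 of 2000 at a 4:1 ratio
        train += [(t, label) for t in drawn[:cut]]
        test += [(t, label) for t in drawn[cut:]]
    return train, test


def top_category(probabilities):
    """Return the (label, probability) pair with the highest probability."""
    return max(probabilities.items(), key=lambda kv: kv[1])
```

With only the three scores quoted in the example, `top_category({"Sports": 0.76, "Health": 0.12, "Culture": 0.06})` returns `("Sports", 0.76)`. Splitting within each category, rather than over the pooled data, keeps both sets balanced across the 10 categories.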
