Industrial Human-Computer Interaction System and Method Based on Visual and Force-Tactile Augmented Reality

A haptic-enhancement and augmented reality technology, applied to user/computer input and output, mechanical mode conversion, computer components, and related fields. It addresses two problems: the operator cannot tell promptly whether the system has recognized an operation, and existing intelligent interaction methods give no timely feedback on whether an interaction took effect. It also frees human-computer interaction from spatial constraints so that the interface moves with the operator, improving convenience, saving costs, and improving compatibility.


Examples

Embodiment 1

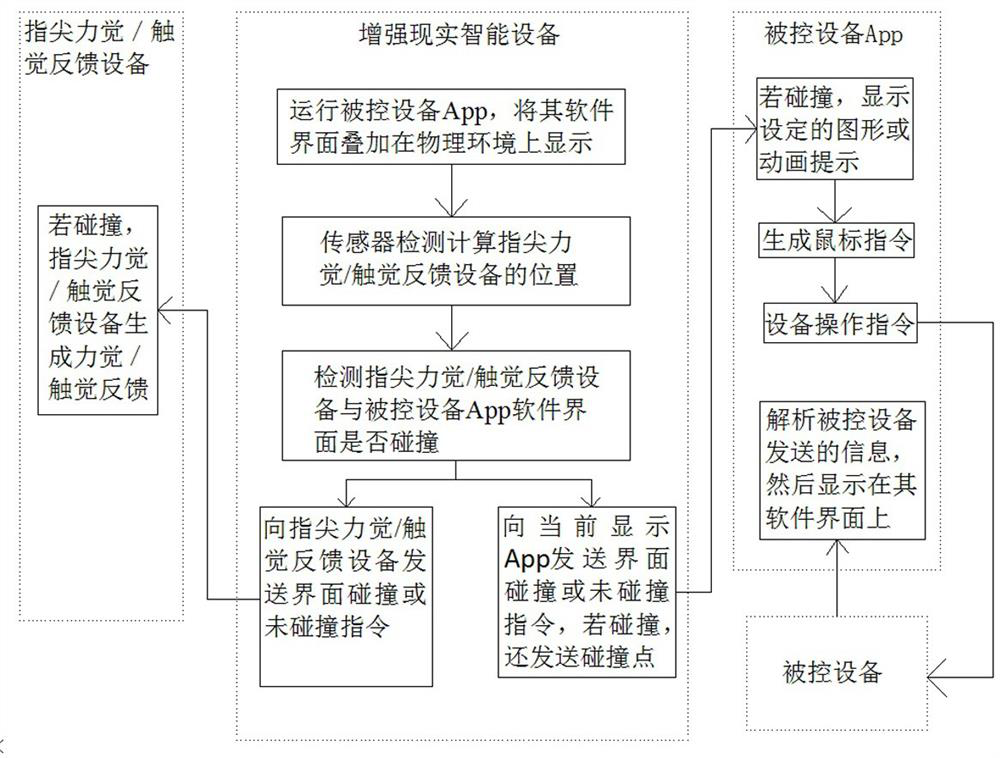

[0050] Referring to Figure 1, an industrial human-computer interaction system based on visual and haptic augmented reality includes controlled equipment, an augmented reality smart device, and a fingertip force/tactile feedback device worn on or held at the operator's fingertips.

[0051] The augmented reality smart device runs the controlled device App and uses augmented reality technology to superimpose the App's software interface on the physical environment. Sensors on the augmented reality smart device detect and calculate the position of the fingertip force/tactile feedback device, and the smart device then judges whether the feedback device collides with the software interface of the controlled device App. If there is no collision, it sends a non-collision command to the controlled device App; if there is a collision, it sends an interface collision command and the collision point. The controlled device App analyzes the collision comm...
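The collision judgment in [0051] can be illustrated with a minimal sketch. It is not the patent's implementation: it assumes the App interface is rendered as a flat rectangular panel, that the fingertip position is already available as a 3D point in the same coordinate frame, and that contact is declared within a small distance of the panel. The names `Panel`, `Command`, `detect_collision`, and `touch_depth` are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


@dataclass(frozen=True)
class Panel:
    """Hypothetical model of the superimposed App interface."""
    origin: Vec3   # one corner of the panel, in metres
    u: Vec3        # unit vector along the panel width
    v: Vec3        # unit vector along the panel height (perpendicular to u)
    width: float
    height: float


@dataclass(frozen=True)
class Command:
    kind: str                                    # "non_collision" or "interface_collision"
    point: Optional[Tuple[float, float]] = None  # collision point in panel coordinates


def detect_collision(panel: Panel, fingertip: Vec3,
                     touch_depth: float = 0.005) -> Command:
    """Return the command to send for one fingertip position (assumed threshold)."""
    rel = sub(fingertip, panel.origin)
    normal = cross(panel.u, panel.v)   # unit length when u and v are orthonormal
    distance = dot(rel, normal)        # signed distance from the panel plane
    x, y = dot(rel, panel.u), dot(rel, panel.v)
    inside = 0.0 <= x <= panel.width and 0.0 <= y <= panel.height
    if abs(distance) <= touch_depth and inside:
        return Command("interface_collision", (x, y))
    return Command("non_collision")
```

Calling `detect_collision` once per tracked fingertip position yields either a non-collision command or an interface collision command carrying the collision point, matching the two branches described in the paragraph.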

Embodiment 2

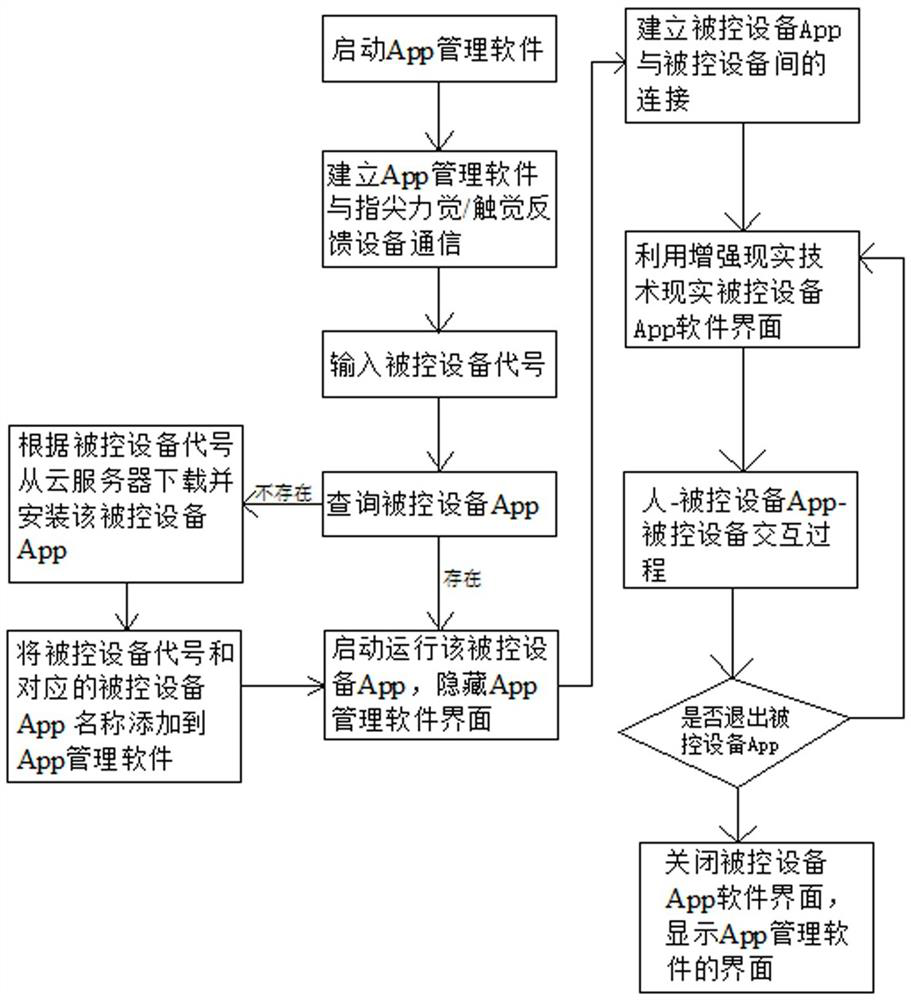

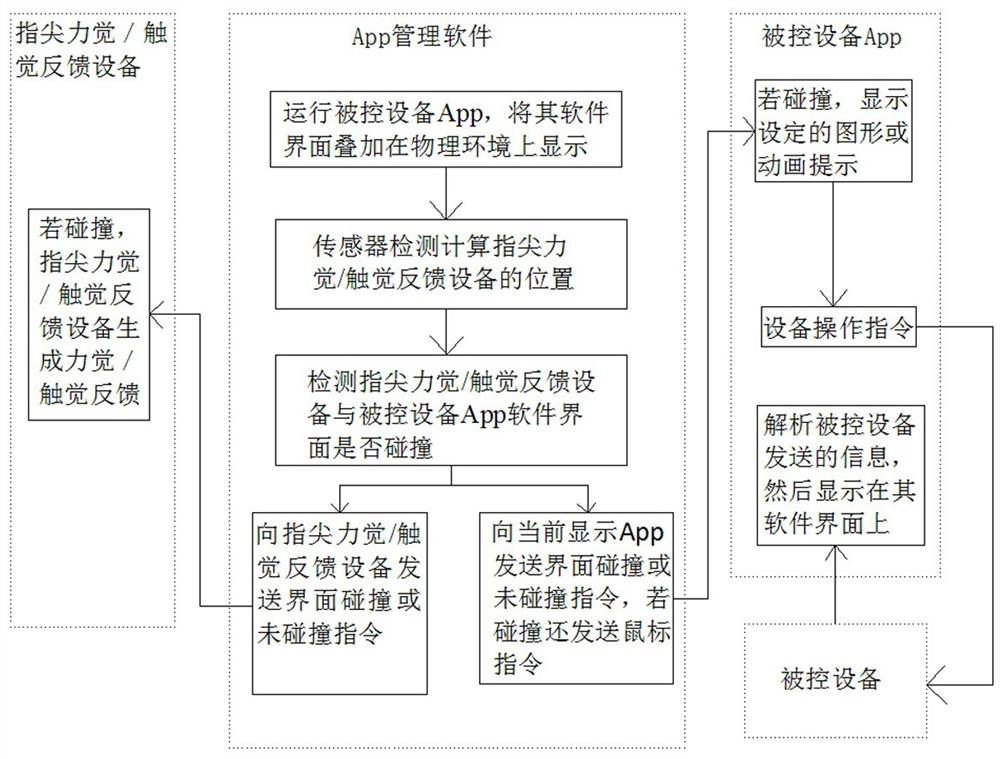

[0064] This embodiment addresses the case in which the augmented reality smart device does not itself provide the controlled device App. It proposes using App management software to manage and control the controlled device App and the fingertip force/tactile feedback device, thereby realizing collision detection and human-computer interaction.

[0065] Referring to Figures 2 and 3, in this embodiment the industrial human-computer interaction system includes a controlled device, an augmented reality smart device, a fingertip force/tactile feedback device, and a cloud server from which the controlled device App is downloaded. The augmented reality smart device also includes App management software: the App management software is run first on the augmented reality smart device, and the controlled device App is then found and run through it. The App management software includes an App data storage table, an augmented reality registration module, interaction...
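As a rough illustration of how App management software might find and run a controlled device App, the sketch below keeps an in-memory table keyed by device identifier and falls back to a download callback when no entry exists. The fallback order, the `AppRecord` fields, and the names `AppManager`, `download`, and `find_and_run` are all assumptions made for illustration; the excerpt above does not specify the layout of the App data storage table or the cloud server interface.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class AppRecord:
    """Hypothetical row of the App data storage table."""
    device_id: str
    app_name: str


@dataclass
class AppManager:
    # Hypothetical stand-in for fetching an App from the cloud server.
    download: Callable[[str], AppRecord]
    # Hypothetical App data storage table, keyed by controlled device id.
    table: Dict[str, AppRecord] = field(default_factory=dict)

    def find_and_run(self, device_id: str) -> str:
        """Look the App up locally, download it if missing, then 'run' it."""
        record = self.table.get(device_id)
        if record is None:
            record = self.download(device_id)   # assumed fallback to the cloud
            self.table[device_id] = record      # cache for later lookups
        # Launching is represented by a status string in this sketch.
        return f"running {record.app_name}"
```

A caller would construct `AppManager(download=...)` with its own fetch function and call `find_and_run` with the identifier of the controlled device; repeated calls for the same device reuse the stored record rather than downloading again.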

Embodiment 3

[0087] Referring to Figure 1, an industrial human-computer interaction method based on visual and haptic augmented reality includes the following steps:

[0088] Step 10. The augmented reality smart device runs the controlled device App, establishes a connection between the controlled device App and the controlled device, and superimposes the software interface of the controlled device App on the physical environment for display.

[0089] Steps 20 to 30 are then executed to carry out the interaction between the operator, the controlled device App, and the controlled device:

[0090] Step 20. Use the sensors on the augmented reality smart device to detect and calculate the position of the fingertip force/tactile feedback device, and then judge whether the fingertip force/tactile feedback device collides with the software interface of the controlled device App. If there is no collision, a non-collision command is sent to the controlled device App and to the fingertip force/tactile feedback device. If there is a collision, the in...
