A measurement algorithm for temporal information differences

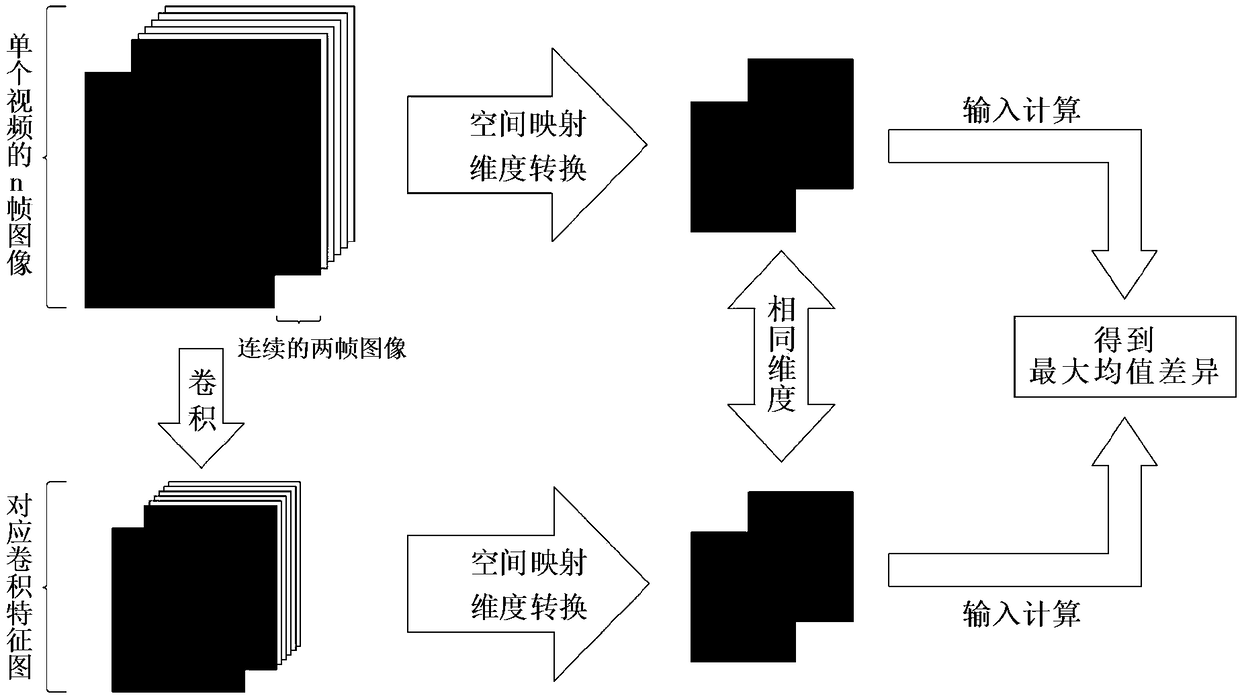

A technology for measuring differences in temporal information, applied in the field of video understanding. It addresses the problems that model accuracy is unsatisfactory and insufficient for application requirements, and that the temporal information of consecutive video frames cannot be extracted accurately. It thereby improves understanding ability, with the effects of reliable data and a reliable process.


Examples

Embodiment 1

[0071] In this embodiment, the distance metric is calculated for a set of consecutive frame images of the original video, shown in Figure 2, and for the corresponding convolutional feature maps, shown in Figure 3. Figure 4 shows the calculated results.

Embodiment 2

[0073] In this embodiment, the distance metric is calculated for a set of consecutive frame images of the original video, shown in Figure 5, and for the corresponding convolutional feature maps, shown in Figure 6. Figure 7 shows the calculated results.

Embodiment 3

[0075] In this embodiment, the distance metric is calculated for a set of consecutive frame images of the original video, shown in Figure 8, and for the corresponding convolutional feature maps, shown in Figure 9. Figure 10 shows the calculated results.
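The embodiments do not state which distance metric is applied to the convolutional feature maps of consecutive frames. Purely as an illustration, the following Python sketch assumes a Euclidean (L2) distance between the flattened feature maps of each pair of adjacent frames, and assumes the feature maps have already been extracted by some convolutional network. The function names `flatten` and `consecutive_frame_distances` are hypothetical and are not taken from the patent.

```python
import math


def flatten(feature_map):
    """Recursively flatten a nested (e.g. C x H x W) feature map into a flat list of floats."""
    if isinstance(feature_map, (int, float)):
        return [float(feature_map)]
    flat = []
    for item in feature_map:
        flat.extend(flatten(item))
    return flat


def consecutive_frame_distances(feature_maps):
    """Distance between the feature maps of each pair of consecutive frames.

    feature_maps: sequence of T nested feature maps, one per frame, all with the same shape.
    Returns a list of T - 1 distances, where entry t compares frame t with frame t + 1.

    Assumption: Euclidean (L2) distance; the source does not specify the metric.
    """
    flat = [flatten(fm) for fm in feature_maps]
    for vec in flat[1:]:
        if len(vec) != len(flat[0]):
            raise ValueError("all feature maps must have the same shape")
    # Compare each frame with the next one: (f0, f1), (f1, f2), ...
    return [math.dist(a, b) for a, b in zip(flat, flat[1:])]


if __name__ == "__main__":
    # Three toy 1 x 2 x 2 "feature maps": frame 1 differs from frame 0, frame 2 repeats frame 1.
    frames = [
        [[[0.0, 0.0], [0.0, 0.0]]],
        [[[1.0, 0.0], [0.0, 0.0]]],
        [[[1.0, 0.0], [0.0, 0.0]]],
    ]
    print(consecutive_frame_distances(frames))  # [1.0, 0.0]
```

A larger distance indicates a larger change between adjacent frames in feature space; under this assumption, a sequence of identical frames would yield all zeros. Other metrics (for example cosine distance) could be substituted in the final list comprehension without changing the pairing of consecutive frames.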

