Three-dimensional reconstruction method based on convolutional neural network

The invention combines a convolutional neural network with three-dimensional reconstruction technology and is applied in the field of three-dimensional scene reconstruction. It addresses the problems of inaccurate feature extraction, long processing time, and poor abstraction ability, and achieves good real-time performance, high retrieval accuracy, and high efficiency.


Embodiment Construction

[0035] Specific implementations of the present invention are further described below with reference to the accompanying drawings and technical solutions.

[0036] The 3D reconstruction method based on convolutional neural network is realized through three modules, and the steps are as follows:

[0037] (1) Preprocessing module

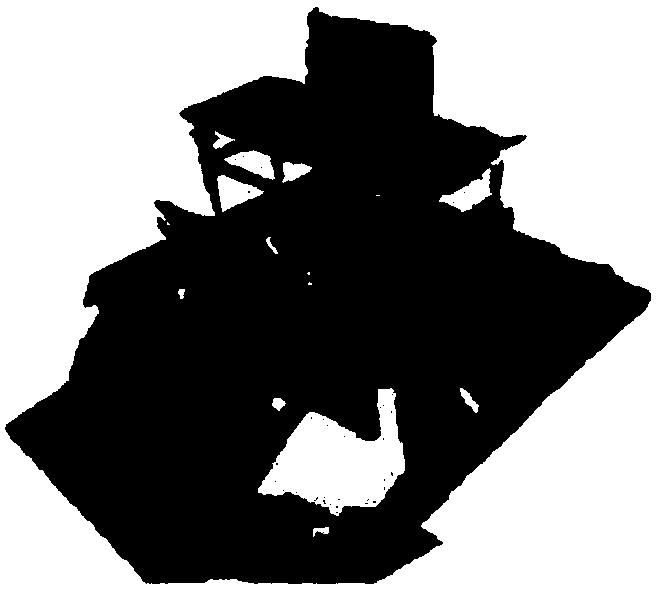

[0038] (1.1) Module input: use an RGBD camera to capture indoor objects and build a 3D scene model; segment the poor-quality objects out of the modeled scene, and use these scanned objects together with the database objects as the input of the preprocessing module;

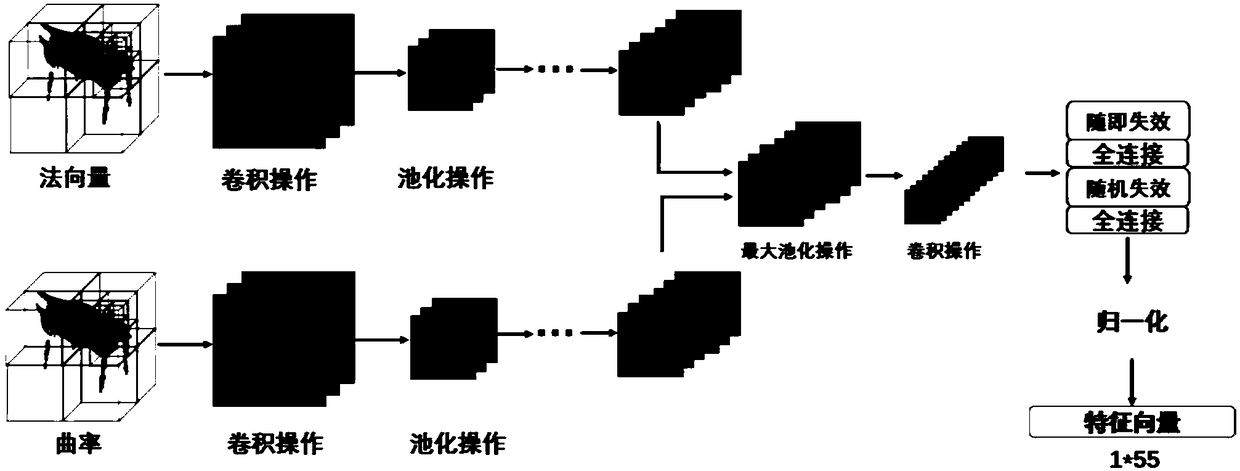

[0039] (1.2) Extract surface information: use virtual scanning to sample the dense regions of the point cloud, select the sampling point with the largest change in normal vector direction as the feature point, and use the normal vector and curvature information of the feature point as the point cloud area The under...
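As an illustration of the feature-point selection in step (1.2), the following is a minimal sketch and not the patented implementation. It assumes that normals are estimated by PCA over the `k` nearest neighbours, that "change in normal vector direction" is measured as the mean angular deviation between a point's normal and its neighbours' normals, and that curvature is approximated by the common surface-variation ratio of covariance eigenvalues. The function names (`estimate_normals`, `normal_variation`, `select_feature_point`) and the parameter `k` are hypothetical, and the virtual scanning step itself is not modelled.

```python
import numpy as np


def estimate_normals(points, k=8):
    """Estimate per-point normals and a curvature proxy by local PCA.

    Assumption: the normal is the eigenvector of the smallest eigenvalue of
    the neighbourhood covariance; curvature is approximated by the
    "surface variation" ratio lambda_0 / (lambda_0 + lambda_1 + lambda_2).
    """
    n = points.shape[0]
    # Brute-force pairwise squared distances (fine for small sample sets).
    d2 = np.sum((points[:, None, :] - points[None, :, :]) ** 2, axis=-1)
    # Indices of the k nearest neighbours, the point itself included.
    nbrs = np.argsort(d2, axis=1)[:, :k]
    normals = np.empty((n, 3))
    curvature = np.empty(n)
    for i in range(n):
        cov = np.cov(points[nbrs[i]].T)
        eigvals, eigvecs = np.linalg.eigh(cov)  # eigenvalues in ascending order
        normals[i] = eigvecs[:, 0]
        curvature[i] = eigvals[0] / max(eigvals.sum(), 1e-12)
    return normals, curvature, nbrs


def normal_variation(normals, nbrs):
    """Mean of 1 - |cos(angle)| between each normal and its neighbours' normals.

    The absolute value removes the sign ambiguity of PCA normals.
    """
    dots = np.abs(np.einsum("ij,ikj->ik", normals, normals[nbrs]))
    return 1.0 - dots.mean(axis=1)


def select_feature_point(points, k=8):
    """Return (index, normal, curvature) of the sample whose normal changes most."""
    normals, curvature, nbrs = estimate_normals(points, k)
    idx = int(np.argmax(normal_variation(normals, nbrs)))
    return idx, normals[idx], curvature[idx]
```

Calling `select_feature_point(samples)` on an `(N, 3)` array of sampled points returns the index of the selected feature point together with its normal and curvature proxy, which could then serve as the descriptor inputs mentioned in the text. On a cloud made of two perpendicular planes, the selected point is expected to lie near the shared edge, where the normal direction changes most.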
