Hand-eye calibration method based on 3D vision

Embodiment Construction

[0017] The present invention is further described below with reference to the accompanying drawings and specific embodiments, so that those skilled in the art can better understand and implement it. The embodiments given are not intended to limit the present invention.

[0018] It should be understood that the KUKA manipulator referred to below may be replaced by another type of manipulator; the present invention is not limited in this respect.

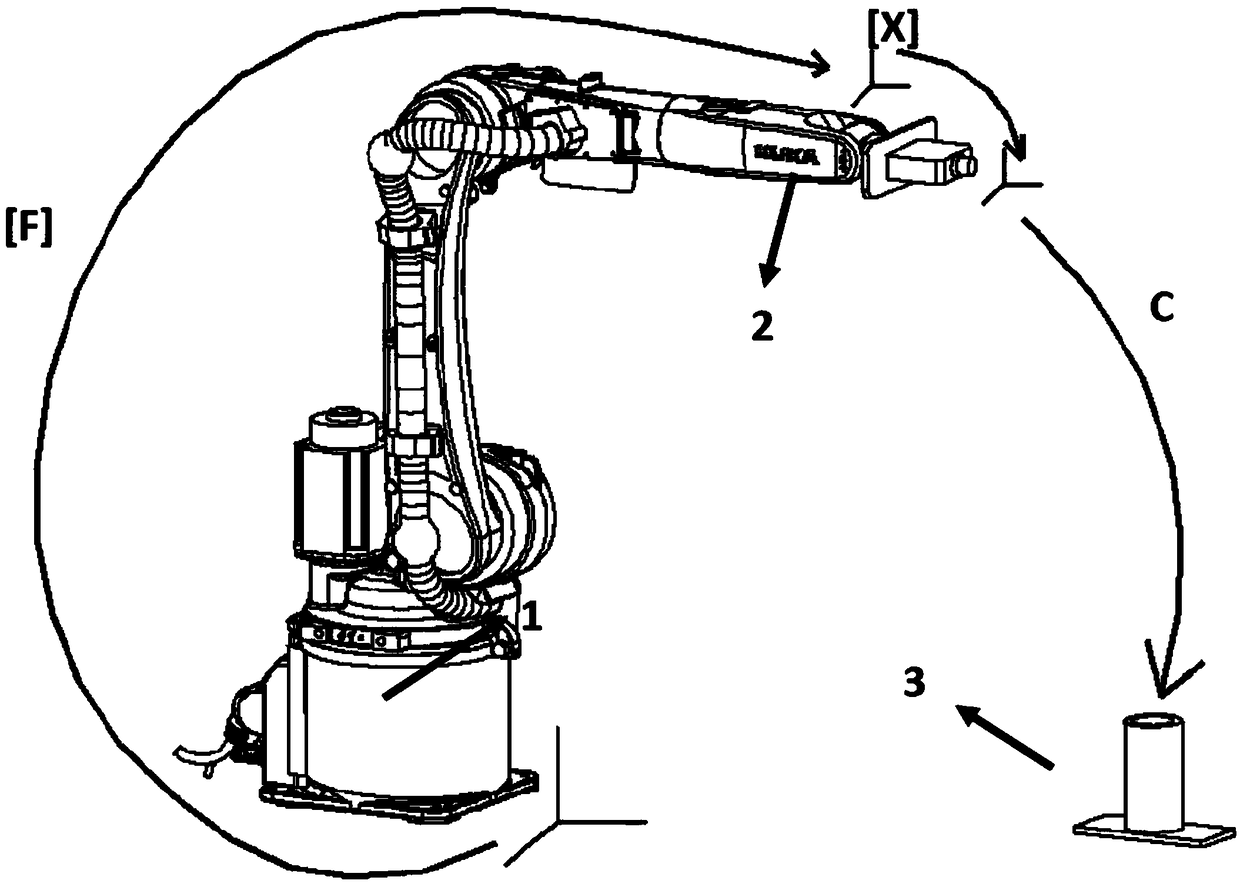

[0019] Referring to Figure 1, the hand-eye calibration system based on 3D vision provided by the present invention consists of a KUKA manipulator 1, a 3D vision sensor 2, and a measuring part 3. The 3D vision sensor 2 is mounted on the flange of the KUKA manipulator 1, and the measuring part 3 is placed on the ground and kept in a fixed position.
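The arrangement in [0019] (sensor on the flange, measuring part fixed on the ground) is commonly called an eye-in-hand configuration. The following is a minimal sketch of the geometric constraint such a setup generally relies on, not the procedure of the present invention: for every manipulator pose, chaining the base-to-flange pose, the unknown flange-to-sensor transform, and the sensor's measurement of the part should give the same part pose in the base frame. All numeric poses, the transform `X_TRUE`, and the helper names are hypothetical values chosen for illustration.

```python
import math

def mat_mul(a, b):
    """Multiply two 4x4 homogeneous transforms given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def rigid_inverse(t):
    """Invert a rigid transform [R p; 0 1] as [R^T, -R^T p; 0 1]."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [t[i][3] for i in range(3)]
    new_p = [-sum(r_t[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [r_t[i] + [new_p[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]

def rot_z(angle, x, y, z):
    """Rotation about Z by `angle` radians plus a translation (x, y, z)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0, x], [s, c, 0.0, y], [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]

def rot_x(angle, x, y, z):
    """Rotation about X by `angle` radians plus a translation (x, y, z)."""
    c, s = math.cos(angle), math.sin(angle)
    return [[1.0, 0.0, 0.0, x], [0.0, c, -s, y], [0.0, s, c, z],
            [0.0, 0.0, 0.0, 1.0]]

# Hypothetical ground truth, used only to simulate sensor readings.
X_TRUE = rot_z(0.3, 0.05, 0.0, 0.10)       # flange -> sensor (the hand-eye transform)
T_BASE_PART = rot_z(1.0, 0.8, 0.2, 0.0)    # fixed measuring part in the base frame

# Hypothetical flange poses reported by the manipulator controller.
FLANGE_POSES = [rot_z(0.0, 0.5, 0.0, 0.6), rot_x(0.4, 0.6, 0.1, 0.5)]

def simulate_sensor_reading(t_base_flange):
    """Part pose as the sensor would see it: inv(X) * inv(T_base_flange) * T_base_part."""
    t_sensor_base = mat_mul(rigid_inverse(X_TRUE), rigid_inverse(t_base_flange))
    return mat_mul(t_sensor_base, T_BASE_PART)

def part_in_base(t_base_flange, x, t_sensor_part):
    """Chain base -> flange -> sensor -> part."""
    return mat_mul(mat_mul(t_base_flange, x), t_sensor_part)

# With the correct hand-eye transform, every pose yields the same part pose.
estimates = [part_in_base(p, X_TRUE, simulate_sensor_reading(p))
             for p in FLANGE_POSES]
max_diff = max(abs(estimates[0][i][j] - estimates[1][i][j])
               for i in range(4) for j in range(4))
```

With a wrong candidate for the flange-to-sensor transform, the two estimates would disagree, and that disagreement is the residual a calibration routine typically minimizes over many poses.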

[0020] First, install the 3D vision sensor at the end of the KUKA manipulator, and connect it to the computer through a network cable. Enter the IP address in the IE browser to displ...
