Method, device and system for preventing cache breakdown

A caching and data request technology, applied in the fields of instrumentation, computing, and electrical digital data processing, that addresses problems such as database connection pool exhaustion, sudden load on the database, and frequent errors, with the effects of reducing avalanches, reducing access pressure, and avoiding duplicate loading.

Embodiment Construction

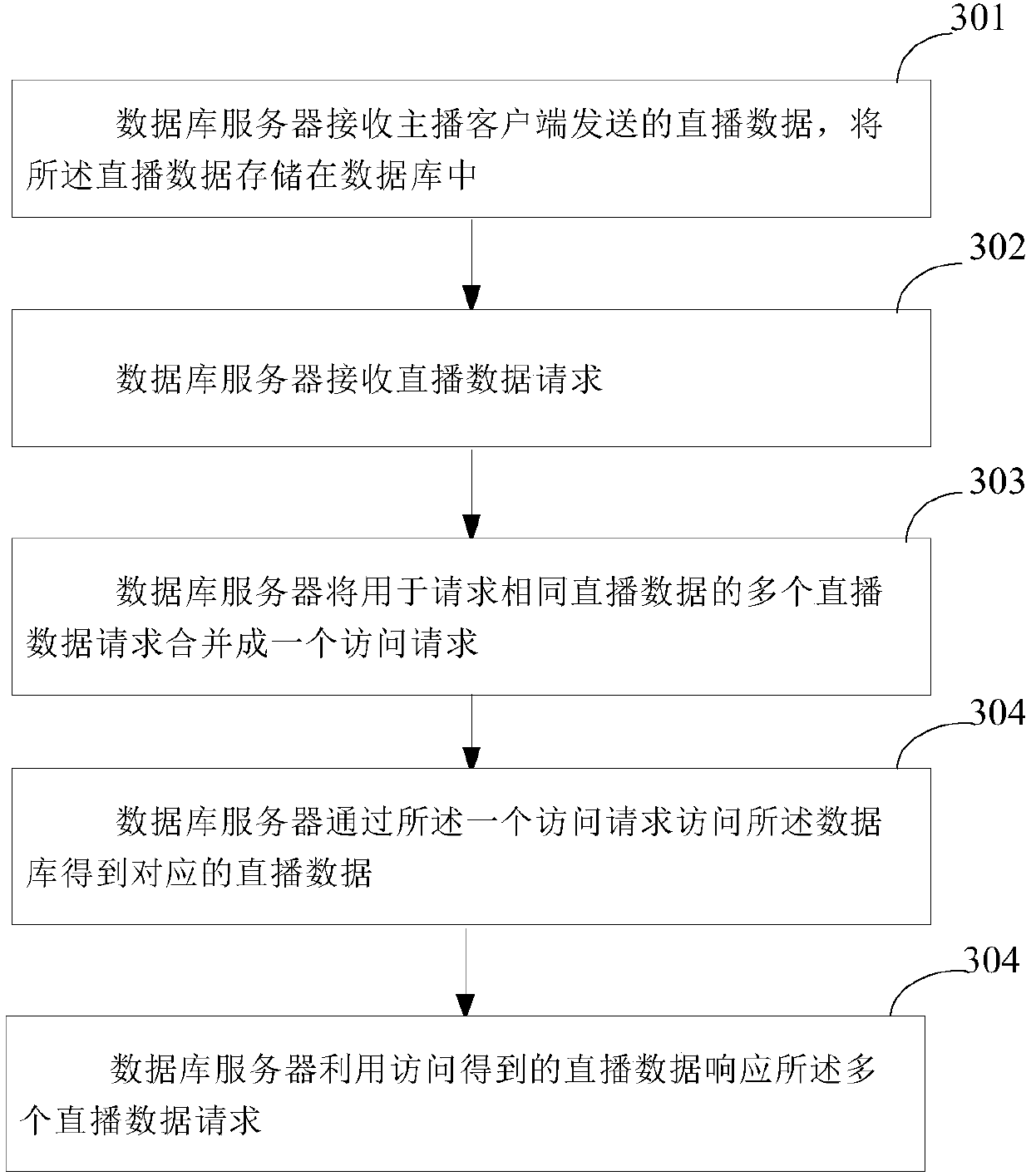

[0043] The technical solutions in the embodiments of this application are described clearly and completely below with reference to the drawings in those embodiments. Obviously, the described embodiments are only some of the embodiments of this application, not all of them. All other embodiments obtained by persons of ordinary skill in the art based on the embodiments in this application, without creative effort, fall within the scope of protection of this application.

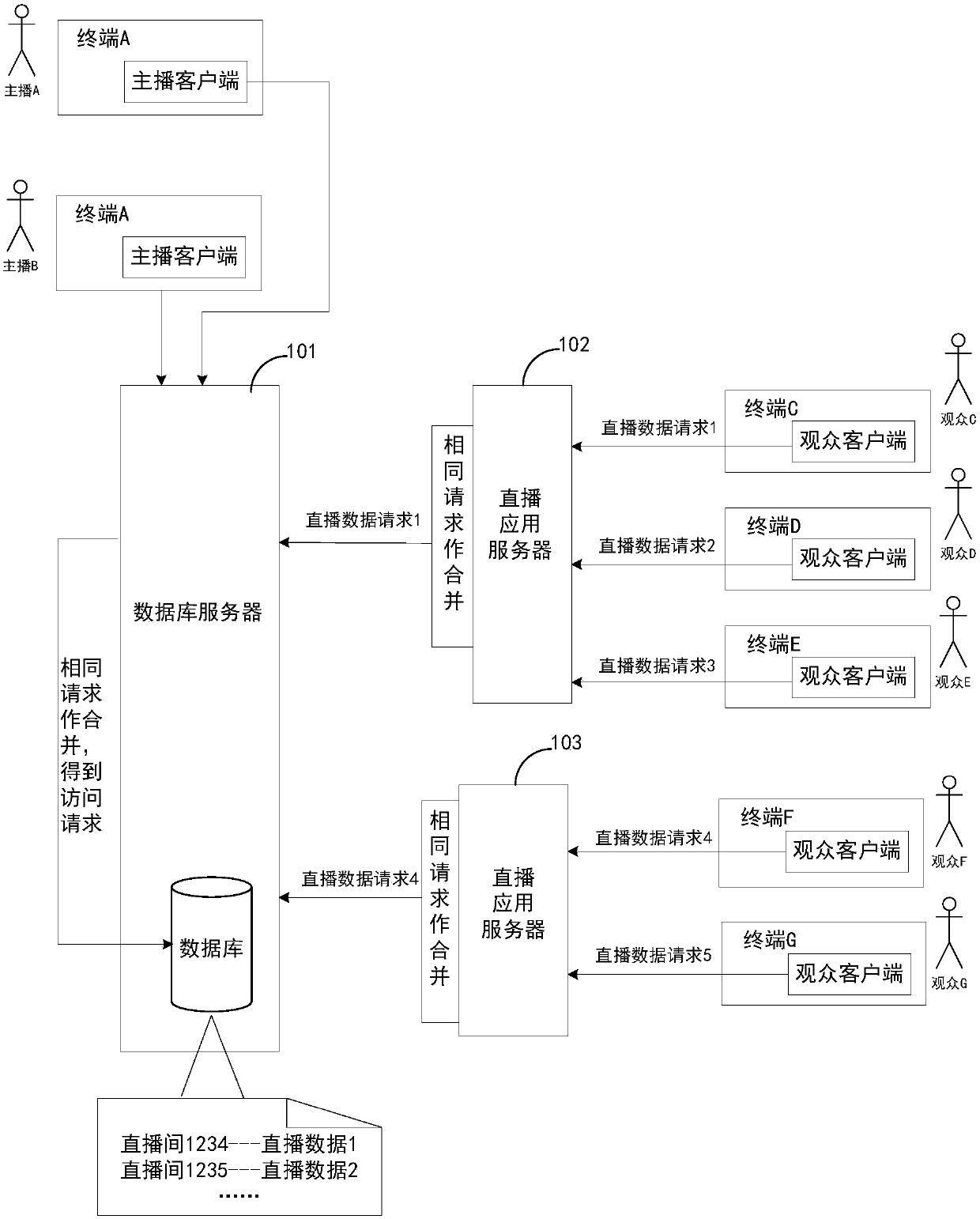

[0044] In practical applications, webcast services often face highly concurrent data loading requests. A large number of these requests are likely to penetrate the cache and reach the database concurrently, placing a huge load on the database in an instant, which can paralyze the business.

[0045] For example, during the online live broadcast of the Tmall Double 11 Gala, hundreds of thousands, millions, or even tens of millions of people will enter the live broadcast room at the same time...
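The failure mode described in [0044] can be illustrated with a small sketch. The code below is not taken from the application and is not necessarily the method it claims; it shows one commonly used mitigation, request coalescing, in which concurrent requests for the same missing key share a single load instead of each reaching the database. The names `SingleFlightCache`, `slow_loader`, `demo`, and the key `"room-1"` are hypothetical, and the loader is a stand-in for a real database query.

```python
import threading
import time


class SingleFlightCache:
    """Coalesces concurrent loads of the same missing key into one loader call."""

    def __init__(self, loader):
        self._loader = loader      # hypothetical stand-in for a database query
        self._cache = {}           # key -> cached value
        self._inflight = {}        # key -> Event set when the current load ends
        self._lock = threading.Lock()

    def get(self, key):
        with self._lock:
            if key in self._cache:
                return self._cache[key]
            event = self._inflight.get(key)
            leader = event is None
            if leader:
                # First requester for this key: register the in-flight load.
                event = threading.Event()
                self._inflight[key] = event

        if leader:
            try:
                value = self._loader(key)   # only the leader reaches the backend
                with self._lock:
                    self._cache[key] = value
                return value
            finally:
                with self._lock:
                    del self._inflight[key]
                event.set()                 # wake every waiting follower

        # Followers wait for the leader, then re-check the cache.
        # If the leader failed, one follower becomes the new leader.
        event.wait()
        return self.get(key)


def demo(n_threads=50):
    """Simulates many viewers requesting the same room data at once."""
    calls = []

    def slow_loader(key):
        calls.append(key)          # record each simulated database hit
        time.sleep(0.05)           # simulate query latency
        return f"room-data-for-{key}"

    cache = SingleFlightCache(slow_loader)
    results = []

    def worker():
        results.append(cache.get("room-1"))

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return len(calls), results


if __name__ == "__main__":
    n_calls, results = demo()
    print(f"{len(results)} requests served with {n_calls} loader call(s)")
```

In this sketch the in-flight table is held in process memory, so it only coalesces requests within a single process; a deployment with several service instances would need a shared coordination mechanism, which is outside what this excerpt describes.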
