A 3D Model Reconstruction Method Based on Mesh Deformation

A mesh-based 3D model reconstruction technique, applied in the field of 3D reconstruction, that addresses problems such as limited geometric priors and the inability to reconstruct geometric structures accurately, and achieves high flexibility.


Embodiment Construction

[0032] To help those of ordinary skill in the art understand and implement the present invention, the invention is described in further detail below with reference to the accompanying drawings and embodiments. It should be understood that the embodiments described here serve only to illustrate and explain the present invention and are not intended to limit it.

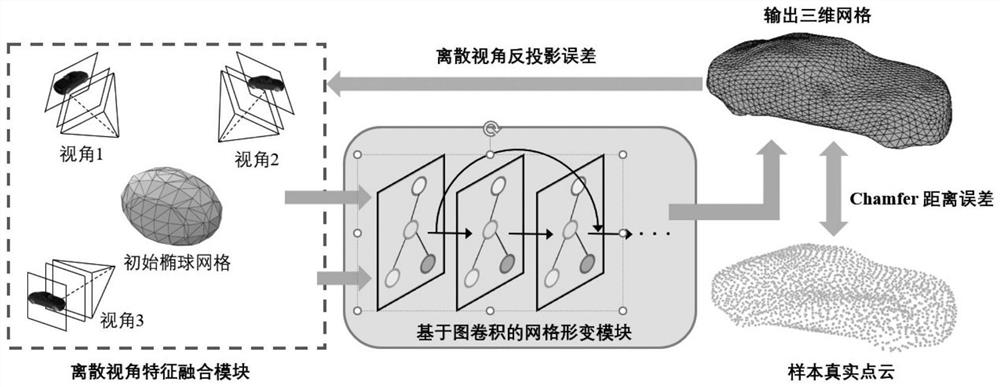

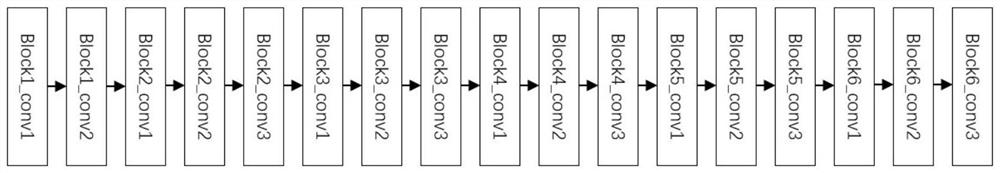

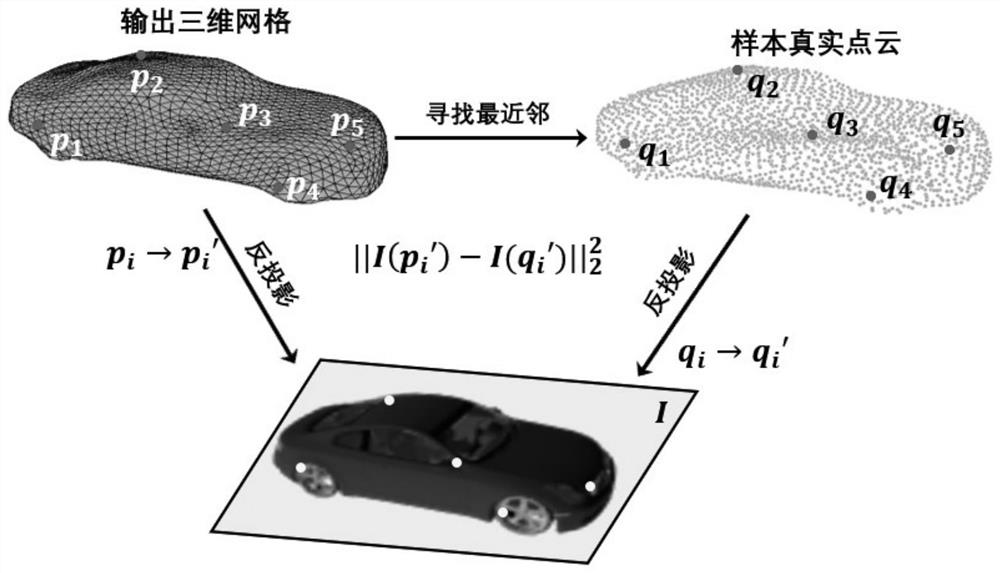

[0033] The deep learning network of the present invention takes only images from several viewpoints as input and outputs a reconstructed three-dimensional mesh model. As shown in Figure 1, the basic modules of the network comprise two parts: (1) a mesh deformation module based on a graph convolutional neural network, and (2) a discrete-view image feature fusion module. As the network continues to learn, the graph-convolution-based mesh deformation module gradually deforms the initial 3D shape ...
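The two modules named in paragraph [0033] can be illustrated with a minimal NumPy sketch. It is not the patented network. The pooling rule (mean and max over views), the graph convolution form (a self term plus an averaged-neighbor term), the layer sizes, and all names (`fuse_views`, `graph_conv`, `deform_step`, `params`) are assumptions chosen for illustration, and the per-view features are random placeholders rather than real image features.

```python
import numpy as np


def normalized_adjacency(num_vertices, edges):
    """Row-normalized adjacency matrix (no self loops) from undirected edges."""
    adj = np.zeros((num_vertices, num_vertices))
    for i, j in edges:
        adj[i, j] = 1.0
        adj[j, i] = 1.0
    degree = adj.sum(axis=1, keepdims=True)
    return adj / np.maximum(degree, 1.0)


def fuse_views(per_view_features):
    """Assumed fusion rule: pool per-vertex features over views.

    per_view_features has shape (views, vertices, channels); the result has
    shape (vertices, 2 * channels), so it does not depend on the view count.
    """
    mean = per_view_features.mean(axis=0)
    peak = per_view_features.max(axis=0)
    return np.concatenate([mean, peak], axis=1)


def graph_conv(features, adj_norm, w_self, w_neigh):
    """Assumed layer form: own features plus the average of neighbor features."""
    return features @ w_self + adj_norm @ features @ w_neigh


def deform_step(vertices, fused, adj_norm, params):
    """Predict a per-vertex offset and add it to the current vertex positions."""
    x = np.concatenate([vertices, fused], axis=1)
    hidden = graph_conv(x, adj_norm, params["w_self1"], params["w_neigh1"])
    hidden = np.maximum(hidden, 0.0)  # ReLU
    offsets = graph_conv(hidden, adj_norm, params["w_self2"], params["w_neigh2"])
    return vertices + offsets


# Toy initial shape: a tetrahedron (4 vertices, 6 edges).
vertices = np.array([[0.0, 0.0, 0.0],
                     [1.0, 0.0, 0.0],
                     [0.0, 1.0, 0.0],
                     [0.0, 0.0, 1.0]])
edges = [(0, 1), (0, 2), (0, 3), (1, 2), (1, 3), (2, 3)]
adj_norm = normalized_adjacency(len(vertices), edges)

rng = np.random.default_rng(0)
num_views, channels, hidden_dim = 3, 8, 32
# Placeholder for image features sampled at each vertex in each view.
per_view = rng.normal(size=(num_views, len(vertices), channels))
fused = fuse_views(per_view)

in_dim = vertices.shape[1] + fused.shape[1]
params = {
    "w_self1": rng.normal(scale=0.1, size=(in_dim, hidden_dim)),
    "w_neigh1": rng.normal(scale=0.1, size=(in_dim, hidden_dim)),
    "w_self2": rng.normal(scale=0.1, size=(hidden_dim, 3)),
    "w_neigh2": rng.normal(scale=0.1, size=(hidden_dim, 3)),
}

deformed = deform_step(vertices, fused, adj_norm, params)
print(deformed.shape)
```

In this sketch the mesh connectivity is fixed and only the vertex positions move, so calling `deform_step` repeatedly refines the same initial shape. In a trained network the weights in `params` would be learned rather than drawn at random.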
