Human behavior recognition method based on a convolutional neural network

A recognition method based on a convolutional neural network, applied in biological neural network models, neural architectures, character and pattern recognition, etc., to achieve accurate results and reduce computational complexity.


Embodiment Construction

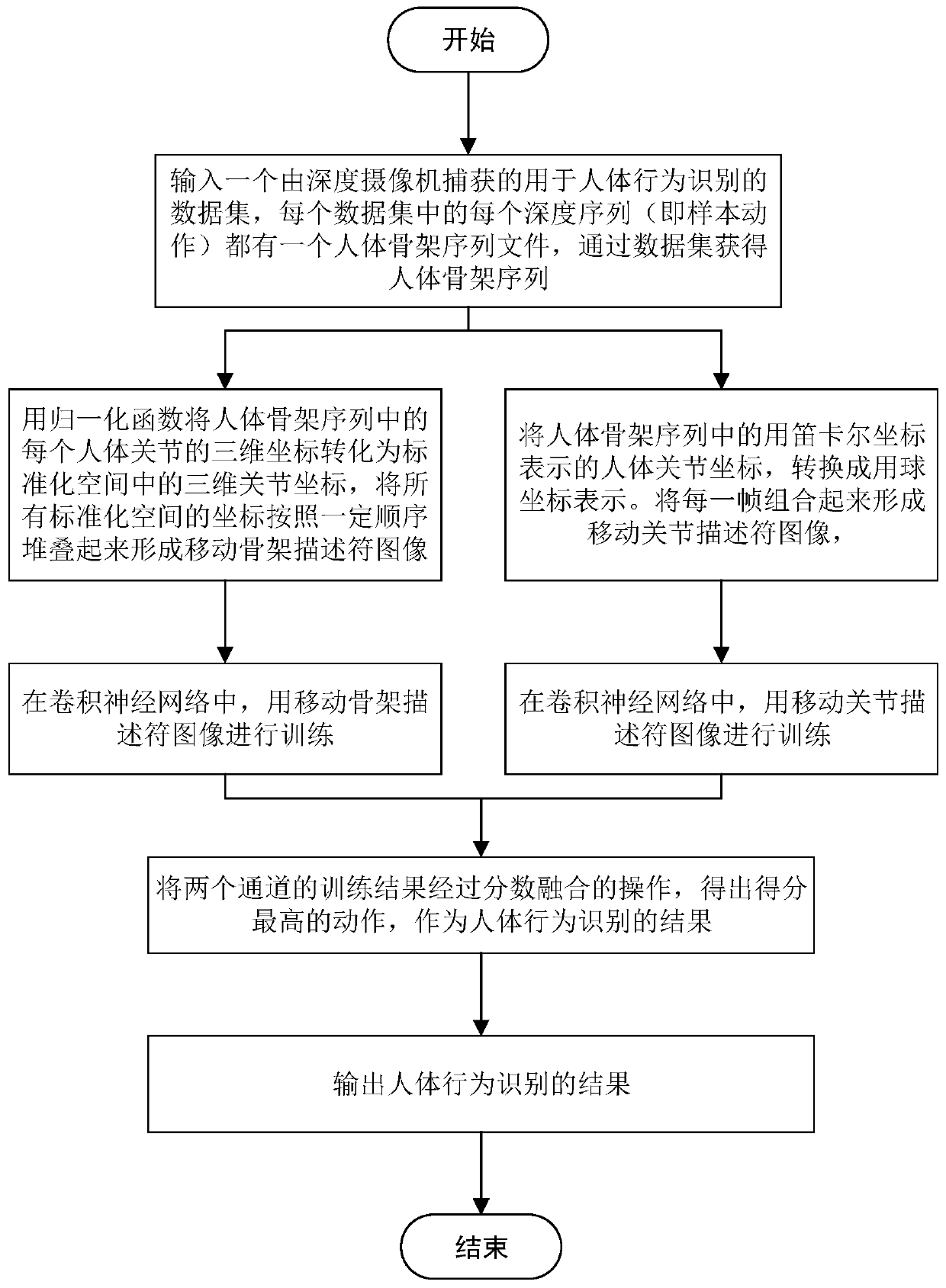

[0039] In a specific implementation, Figure 1 shows the flow of the human behavior recognition method based on a convolutional neural network.

[0040] This example uses the MSRAction3D dataset, which is captured by the Microsoft Kinect v1 depth camera and contains 20 actions.

[0041] First, the system sequentially acquires the human skeleton sequences in the dataset. For a received joint pose sequence, given the N frames $[F_1, F_2, \ldots, F_N]$ of a human skeleton sequence $s$, let $(x_i, y_i, z_i)$ be the 3D coordinates of each human joint in the $n$-th frame $F_n \in s$, where $n \in [1, N]$.

[0042] Next, the 3D coordinates of each human joint in $s$ are transformed into 3D joint coordinates $(x'_i, y'_i, z'_i)$ in the normalized space $s'$. The normalized coordinates of all frames are stacked to form a time series $[F'_1, F'_2, \ldots, F'_N]$ that represents the entire action sequence, and these elements are quantized into the RGB color space. In the order of two arms, one torso, and...
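The normalization and RGB quantization described in [0042] can be sketched as follows. This is a minimal illustration, not the patent's implementation. The source does not state the normalization formula, so the sketch assumes min-max scaling over the whole sequence to the range [0, 255]. The function name `skeleton_to_rgb_image`, the `(N, J, 3)` array layout, and the optional `joint_order` parameter are hypothetical. Because the joint ordering is truncated in the text, the ordering is left to the caller.

```python
import numpy as np


def skeleton_to_rgb_image(seq, joint_order=None):
    """Encode a skeleton sequence as an RGB image (illustrative sketch).

    seq: array of shape (N, J, 3) holding (x, y, z) for J joints over N frames.
    joint_order: optional list of joint indices giving the row order
                 (hypothetical parameter; the source's ordering is truncated).
    Returns a uint8 array of shape (J, N, 3): rows are joints, columns are
    frames, and the three channels hold the quantized x, y, z values.
    """
    seq = np.asarray(seq, dtype=np.float64)
    if seq.ndim != 3 or seq.shape[2] != 3:
        raise ValueError("seq must have shape (N, J, 3)")

    if joint_order is not None:
        # Reorder joints along the joint axis as requested by the caller.
        seq = seq[:, list(joint_order), :]

    # ASSUMPTION: min-max normalization over all coordinates of the sequence.
    c_min = seq.min()
    span = seq.max() - c_min
    if span == 0:
        normalized = np.zeros_like(seq)
    else:
        normalized = (seq - c_min) / span  # values in [0, 1]

    # Quantize to 8-bit color values: x -> R, y -> G, z -> B.
    pixels = np.rint(normalized * 255.0).astype(np.uint8)

    # Stack frames along the width: (N, J, 3) -> (J, N, 3).
    return pixels.transpose(1, 0, 2)


if __name__ == "__main__":
    # Synthetic data only: 30 frames, 20 joints (MSRAction3D skeletons have 20 joints).
    rng = np.random.default_rng(0)
    demo = rng.normal(size=(30, 20, 3))
    image = skeleton_to_rgb_image(demo)
    print(image.shape, image.dtype)
```

The resulting array can be resized and passed to an image-based convolutional network. Scaling each axis separately, rather than using a single global range, is an equally plausible reading of the text.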
