A Depth Camera Localization Method Based on Convolutional Neural Network

A method combining a convolutional neural network with depth camera technology, applied in the field of robot visual positioning. It addresses problems such as positioning failure, loss of information, and the inability to fully extract features from unstructured point clouds, and it improves the convenience of deployment and use.


Embodiment Construction

[0038] To aid further understanding of the present invention, preferred embodiments are described below with reference to examples. It should be understood that these descriptions serve only to further illustrate the features and advantages of the present invention and do not limit its claims.

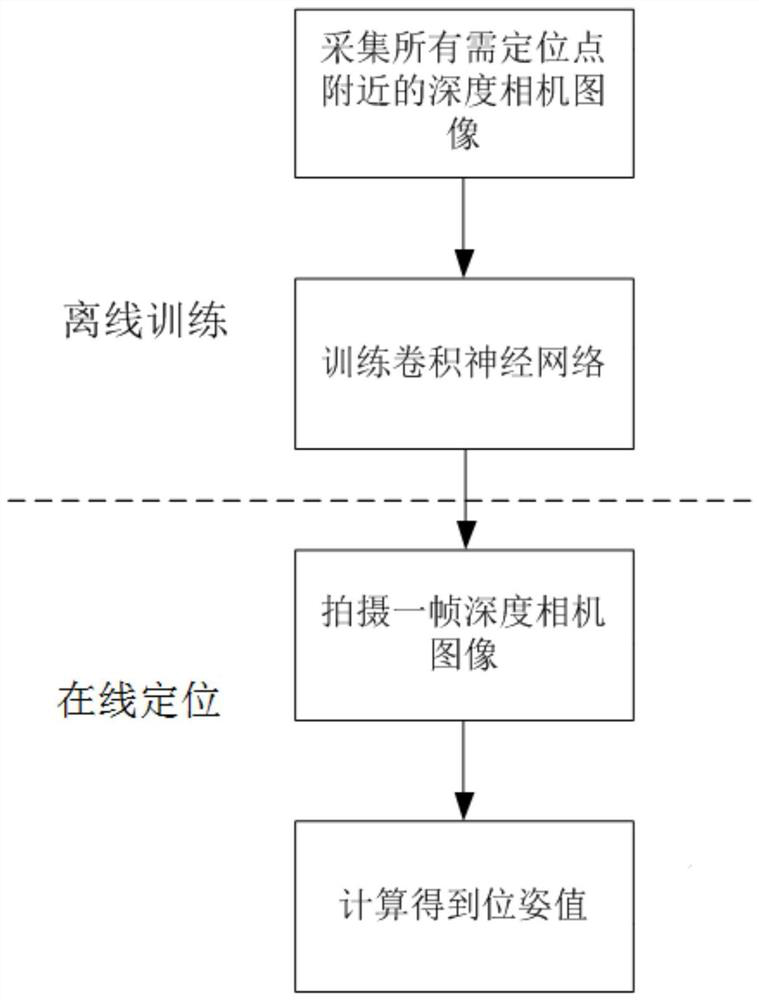

[0039] An embodiment of the present invention provides a depth camera positioning method based on a convolutional neural network. The color image and the depth image acquired by the depth camera are fused and used as the input, from which the pose of the camera is calculated.
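The passage does not say how the two images are fused. One common way to combine a registered color image and depth image is to back-project each depth pixel through the pinhole camera model and attach its color, giving an unordered colored point cloud. The sketch below is an illustration under stated assumptions, not the patent's procedure: it assumes pixel-aligned images and known intrinsics `fx`, `fy`, `cx`, `cy`, and the function name `rgbd_to_point_cloud` and the `depth_scale` parameter are hypothetical.

```python
from typing import List, Sequence, Tuple

# A colored point: (X, Y, Z, R, G, B), coordinates in the camera frame.
ColoredPoint = Tuple[float, float, float, float, float, float]


def rgbd_to_point_cloud(
    color: Sequence[Sequence[Tuple[float, float, float]]],
    depth: Sequence[Sequence[float]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
    depth_scale: float = 1.0,
) -> List[ColoredPoint]:
    """Back-project every valid depth pixel and attach its color (hypothetical helper).

    Assumes `color` and `depth` are registered (same resolution, pixel-aligned)
    and uses the standard pinhole model with intrinsics fx, fy, cx, cy.
    Pixels whose scaled depth is <= 0 are treated as missing and skipped.
    """
    if len(color) != len(depth):
        raise ValueError("color and depth must have the same height")
    points: List[ColoredPoint] = []
    for v, (color_row, depth_row) in enumerate(zip(color, depth)):
        if len(color_row) != len(depth_row):
            raise ValueError("color and depth must have the same width")
        for u, (rgb, raw_d) in enumerate(zip(color_row, depth_row)):
            z = raw_d * depth_scale  # convert raw sensor units to metric depth
            if z <= 0:
                continue  # no depth measurement at this pixel
            x = (u - cx) * z / fx  # pinhole back-projection, column -> X
            y = (v - cy) * z / fy  # pinhole back-projection, row -> Y
            r, g, b = rgb
            points.append((x, y, z, r, g, b))
    return points
```

Each returned point carries six channels (XYZ plus color). Because the result is an unordered set rather than a pixel grid, ordinary 2-D convolutions cannot be applied to it directly; this is the kind of unstructured point cloud data whose features the summary says are hard to extract fully.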

[0040] In this embodiment, a convolutional neural network is first established that fuses the information in the input color image and depth image and outputs the pose at which the image was captured.
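This passage does not state how the output pose is parameterized or how the network is trained. As an illustrative assumption only, the sketch below treats the network output as a 7-vector (translation `tx, ty, tz` followed by a quaternion `qw, qx, qy, qz`), converts it into a 4x4 homogeneous camera pose, and scores it with a PoseNet-style loss that normalizes the predicted quaternion and weights the rotation error by `beta`. All function names here are hypothetical.

```python
import math
from typing import List, Sequence


def normalize_quaternion(q: Sequence[float]) -> List[float]:
    """Scale a raw network output (w, x, y, z) to a unit quaternion."""
    n = math.sqrt(sum(c * c for c in q))
    if n == 0:
        raise ValueError("zero quaternion cannot represent a rotation")
    return [c / n for c in q]


def quaternion_to_matrix(q: Sequence[float]) -> List[List[float]]:
    """3x3 rotation matrix of a unit quaternion given in (w, x, y, z) order."""
    w, x, y, z = q
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


def pose_matrix(output: Sequence[float]) -> List[List[float]]:
    """Assemble a 4x4 pose [R | t; 0 0 0 1] from (tx, ty, tz, qw, qx, qy, qz)."""
    t = output[:3]
    r = quaternion_to_matrix(normalize_quaternion(output[3:7]))
    return [r[i] + [t[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def posenet_loss(pred: Sequence[float], target: Sequence[float], beta: float = 1.0) -> float:
    """||t_pred - t_true|| + beta * ||q_pred / ||q_pred|| - q_true||.

    Assumes the ground-truth quaternion is already unit length. Note that q and
    -q encode the same rotation; this simple loss does not account for that.
    """
    t_err = math.dist(pred[:3], target[:3])
    q_err = math.dist(normalize_quaternion(pred[3:7]), target[3:7])
    return t_err + beta * q_err
```

Normalizing the predicted quaternion before both the loss and the matrix conversion lets the network emit an unconstrained 4-vector while still producing a valid rotation; `beta` balances metres of translation error against the unitless quaternion error.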

[0041] Specifically, the convolutional neural network proposed in this embodiment is composed of a graph fusion generation module and a graph neural network module.
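The passage names the two modules without describing their internals. Purely as an assumption, a graph fusion generation step could connect each fused point to its k nearest neighbours in 3-D space, and a graph neural network layer could then update each node from its own feature concatenated with the mean of its neighbours' features; a global max pool then reduces the node features to one order-invariant vector that a regression head could map to a pose. This is a generic message-passing sketch, not the patent's architecture; it assumes each point's first three channels are XYZ, and `knn_graph`, `message_passing`, `global_max_pool`, and the weight layout are hypothetical.

```python
import math
from typing import List, Sequence


def knn_graph(points: Sequence[Sequence[float]], k: int) -> List[List[int]]:
    """For each node, the indices of its k nearest neighbours by XYZ distance.

    Only the first three channels (X, Y, Z) are used for distances; any further
    channels (e.g. R, G, B) are left for node features. Brute force, O(N^2 log N);
    ties are broken by index because list.sort is stable.
    """
    n = len(points)
    if not 0 < k < n:
        raise ValueError("k must satisfy 0 < k < number of points")
    neighbours: List[List[int]] = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        others.sort(key=lambda j: math.dist(points[i][:3], points[j][:3]))
        neighbours.append(others[:k])
    return neighbours


def message_passing(
    features: Sequence[Sequence[float]],
    neighbours: Sequence[Sequence[int]],
    weight: Sequence[Sequence[float]],
) -> List[List[float]]:
    """One mean-aggregation layer: h_i' = ReLU(W [h_i ; mean_{j in N(i)} h_j]).

    `weight` has one row per output channel; each row must have length 2 * d,
    where d is the input feature dimension. Every node needs >= 1 neighbour.
    """
    out: List[List[float]] = []
    for i, nbrs in enumerate(neighbours):
        d = len(features[i])
        # Average the neighbours' features channel by channel.
        mean = [sum(features[j][c] for j in nbrs) / len(nbrs) for c in range(d)]
        x = list(features[i]) + mean  # concatenate self and neighbourhood features
        row_out: List[float] = []
        for row in weight:
            if len(row) != len(x):
                raise ValueError("each weight row must have length 2 * feature dim")
            row_out.append(max(0.0, sum(w * v for w, v in zip(row, x))))  # ReLU
        out.append(row_out)
    return out


def global_max_pool(features: Sequence[Sequence[float]]) -> List[float]:
    """Channel-wise maximum over all nodes, independent of node order."""
    return [max(channel) for channel in zip(*features)]
```

The max pool is what makes the final descriptor independent of the order in which points arrive, which matters because a point cloud has no canonical ordering. For real depth frames with many thousands of points, the brute-force neighbour search would normally be replaced by a spatial index such as a k-d tree.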

[0042] In this e...
