Gesture recognition method, device, intelligent equipment and computer readable storage medium

A gesture recognition technology in the field of human-computer interaction. It addresses problems such as the lack of adaptability to changes in spatial scale, and achieves the effects of narrowing differences and accurate gesture recognition.

Examples

Embodiment 1

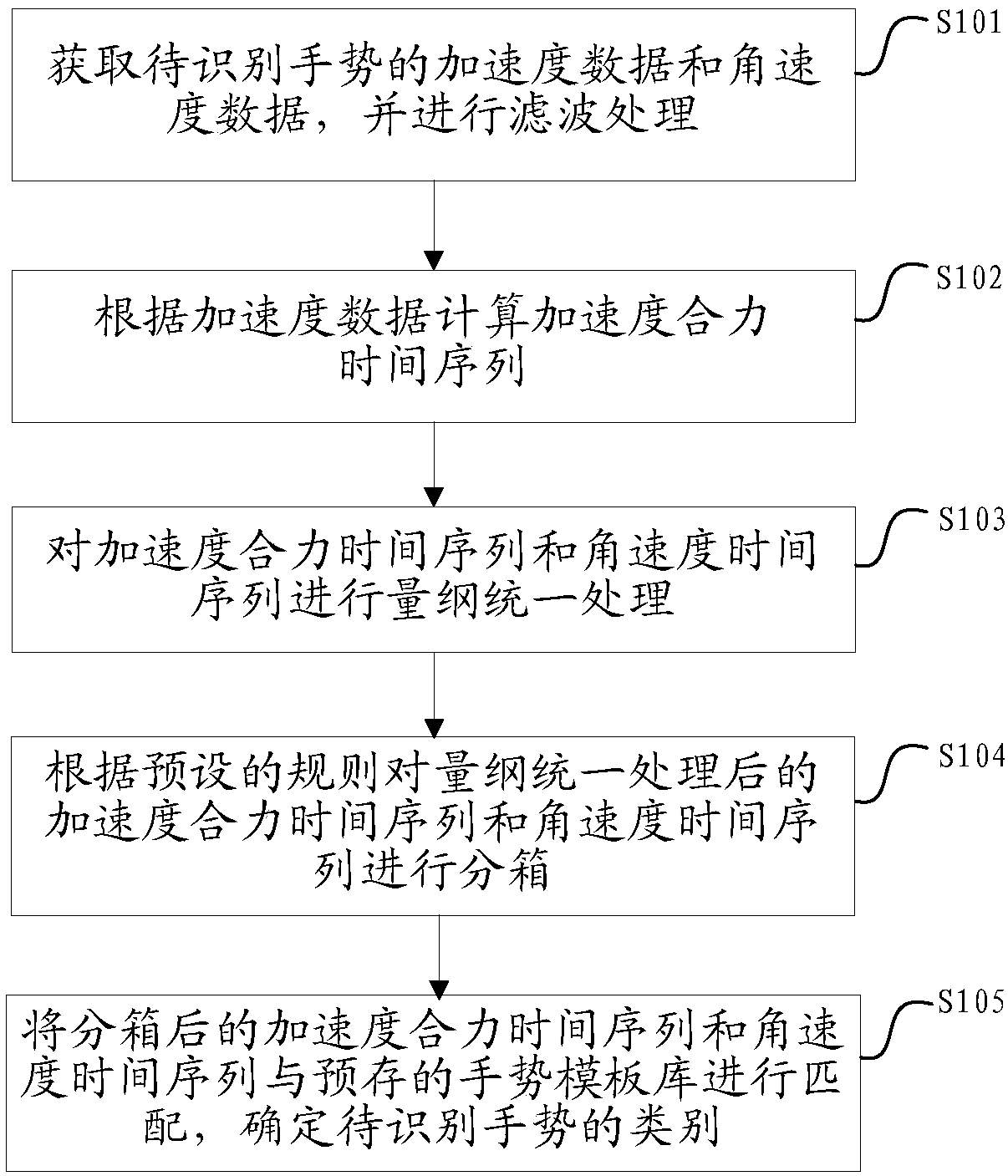

[0030] As shown in Figure 1, a gesture recognition method provided by an embodiment of the present invention includes:

[0031] S101. Acquire the acceleration and angular velocity data of the gesture to be recognized, and filter the data.

[0032] Specifically, the acceleration and angular velocity data are generally collected by an acceleration sensor and a gyroscope built into a smart device, and they describe the gesture behavior. However, non-target gesture signals get mixed in during acquisition, so the data must be filtered to remove their influence. Many filtering methods are possible. Experiments and research show that the frequency of gesture behavior is generally below 3.5 Hz, so a low-pass filter with a cutoff frequency of 3.5 Hz is preferably used to filter the acceleration and angular velocity data.
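The text fixes only the cutoff frequency (3.5 Hz), not the filter type or the sampling rate. The sketch below assumes a simple first-order IIR low-pass filter (the discrete form of an RC filter) applied independently to each sensor axis; the function names, the axis dictionary layout and the 50 Hz sampling rate in the usage note are hypothetical.

```python
import math


def low_pass(samples, fs_hz, cutoff_hz=3.5):
    """First-order IIR low-pass filter for one sensor axis.

    samples:   list of floats sampled at fs_hz (assumed uniform sampling).
    cutoff_hz: 3.5 Hz per the text; the first-order design is an assumption.
    """
    if not samples:
        return []
    dt = 1.0 / fs_hz
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)  # RC time constant for the cutoff
    alpha = dt / (rc + dt)                  # smoothing factor in (0, 1)
    out = [samples[0]]
    for x in samples[1:]:
        # Each output moves a fraction alpha toward the newest input sample.
        out.append(out[-1] + alpha * (x - out[-1]))
    return out


def filter_axes(axes, fs_hz, cutoff_hz=3.5):
    """Apply the same filter to every axis, e.g. {"ax": [...], "gx": [...]}."""
    return {name: low_pass(vals, fs_hz, cutoff_hz) for name, vals in axes.items()}
```

For example, `filter_axes({"ax": ax, "ay": ay, "az": az}, fs_hz=50)` would smooth three accelerometer axes sampled at an assumed 50 Hz. A higher-order design (for instance a Butterworth filter) gives a sharper cutoff if the first-order roll-off lets too much noise through.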

[0033] S102. Calculate the acceleration resultant force time series according to...

Embodiment 2

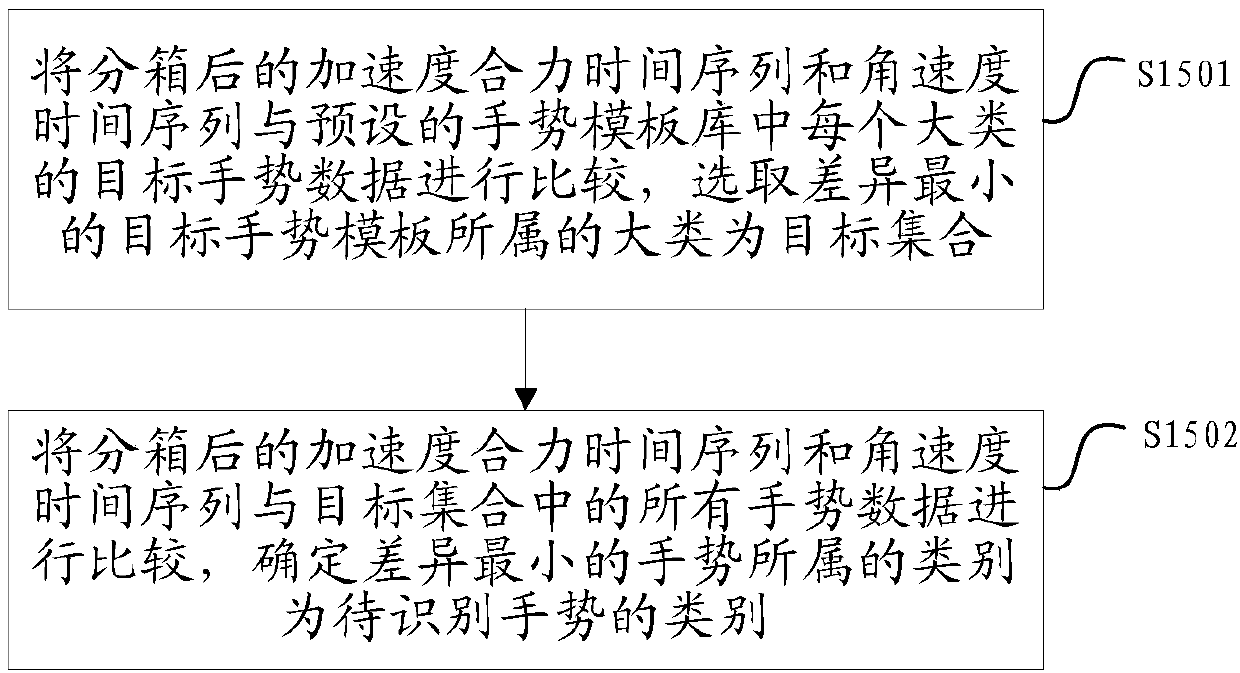

[0060] As shown in Figure 2, the gesture template matching method provided by the embodiment of the present invention includes:

[0061] S1051. Compare the binned acceleration resultant force time series and angular velocity time series with the target gesture data of each category in the preset gesture template library, and select, as the target set, the category to which the target gesture template with the smallest difference belongs.
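Step S1051 does not say how the "difference" is measured. The sketch below assumes that binning gives every series the same number of bins, so a plain Euclidean distance per channel can be summed over the two channels; the library layout (`{category: [template, ...]}` with hypothetical `"acc"` and `"gyro"` keys) and all function names are illustrative only. It covers only the category (target set) selection; the later matching inside the target set is not visible in this excerpt.

```python
import math


def series_distance(a, b):
    """Euclidean distance between two equal-length binned series."""
    if len(a) != len(b):
        raise ValueError("binned series must have the same number of bins")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def gesture_distance(sample, template):
    """Combined difference over the acceleration and angular velocity channels."""
    return (series_distance(sample["acc"], template["acc"])
            + series_distance(sample["gyro"], template["gyro"]))


def select_target_set(sample, library):
    """Return the category whose closest template differs least from the sample.

    library: {category_name: [template, ...]} (hypothetical layout).
    """
    best_cat, best_d = None, math.inf
    for category, templates in library.items():
        for tpl in templates:
            d = gesture_distance(sample, tpl)
            if d < best_d:
                best_cat, best_d = category, d
    return best_cat
```

If the binned series could differ in length, a DTW distance would be the natural replacement for `series_distance`.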

[0062] The preset gesture template library is a two-layer classified template library pre-stored on the smart device. To ensure recognition accuracy, a large number of samples are usually collected in advance for each gesture and processed through steps S101 to S104 of Embodiment 1. The DTW algorithm is then used to find the sample with the smallest average Euclidean distance to the other samples of the same gesture, and that sample is stored in the template library as a template. Multiple gesture templates can be ge...
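The template selection described above can be sketched as follows: classic dynamic time warping (DTW) with a Euclidean local cost, and the template chosen as the sample with the smallest average DTW distance to the other samples of the same gesture (the medoid). Representing each time step as a tuple of features (for example one acceleration and one angular velocity value per bin) and the function names are assumptions, not the patent's own data format.

```python
import math


def dtw_distance(s, t):
    """Classic DTW between two sequences of feature tuples, using the
    Euclidean distance between time steps as the local cost."""
    n, m = len(s), len(t)
    # cost[i][j]: minimal accumulated cost of aligning s[:i] with t[:j]
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(s[i - 1], t[j - 1])
            cost[i][j] = d + min(cost[i - 1][j],      # step in s only
                                 cost[i][j - 1],      # step in t only
                                 cost[i - 1][j - 1])  # step in both
    return cost[n][m]


def pick_template(samples):
    """Return the sample with the smallest average DTW distance to the
    other samples of the same gesture."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    best, best_avg = None, math.inf
    for i, s in enumerate(samples):
        total = sum(dtw_distance(s, t) for j, t in enumerate(samples) if j != i)
        avg = total / (len(samples) - 1)
        if avg < best_avg:
            best, best_avg = s, avg
    return best
```

Each DTW comparison costs O(n·m) time, so picking a template from k samples needs k(k−1) comparisons; this happens offline when the library is built, not at recognition time.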

Embodiment 3

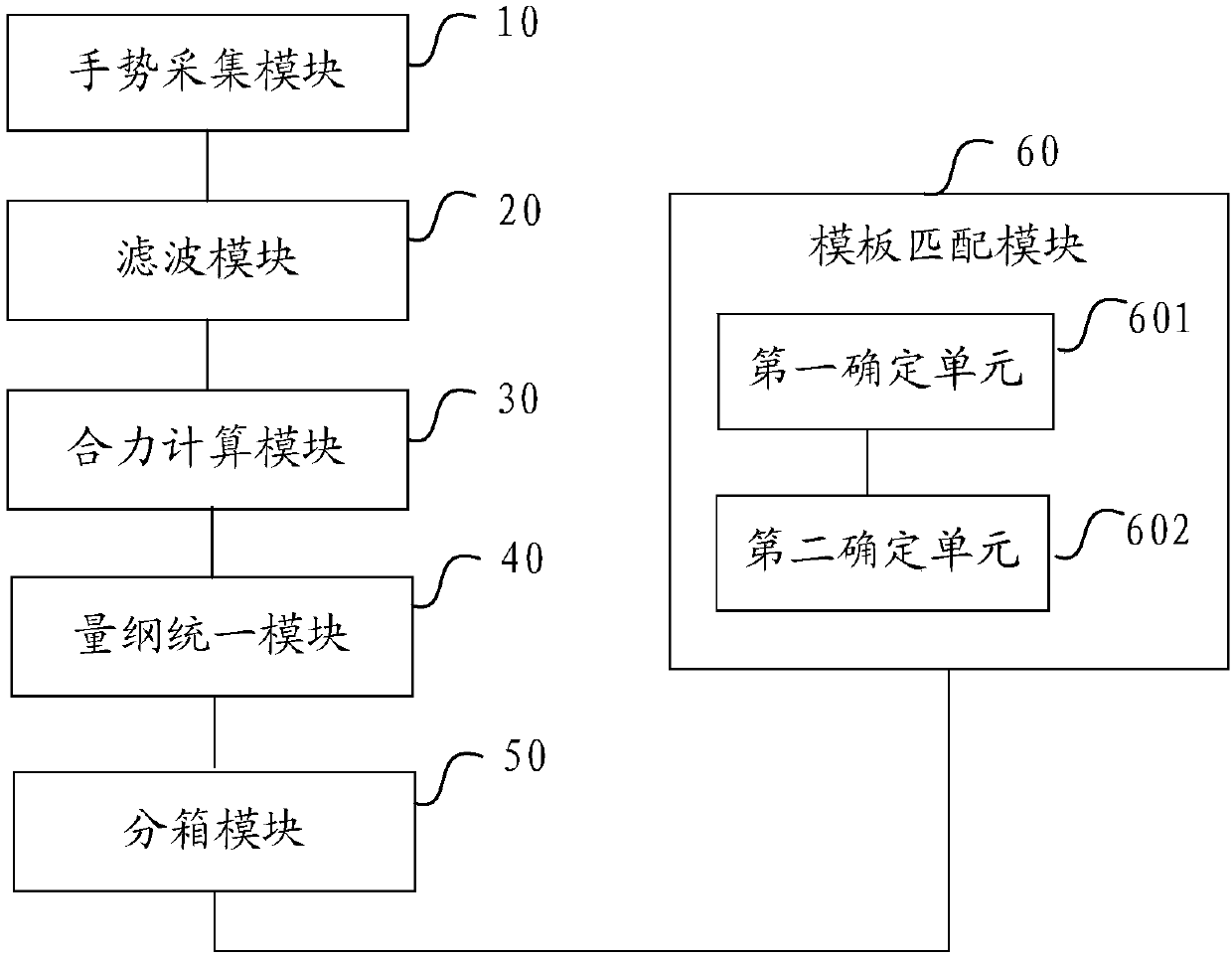

[0068] As shown in Figure 3, a gesture recognition device provided by an embodiment of the present invention includes a gesture acquisition module 10, a filtering module 20, a resultant force sequence calculation module 30, a dimension unification module 40, a binning module 50, and a template matching module 60, wherein:

[0069] The gesture acquisition module 10 is used to acquire the acceleration and angular velocity data of the gesture to be recognized.

[0070] The filtering module 20 is used for filtering the acceleration and angular velocity data.

[0071] The resultant force sequence calculation module 30 is configured to calculate the acceleration resultant force time series according to the acceleration data.
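The visible text does not define the acceleration resultant force. A common reading, assumed here, is the per-sample magnitude of the three-axis acceleration vector, sqrt(ax² + ay² + az²). The function name is hypothetical.

```python
import math


def resultant_series(ax, ay, az):
    """Per-sample magnitude of the 3-axis acceleration: sqrt(ax^2 + ay^2 + az^2)."""
    if not (len(ax) == len(ay) == len(az)):
        raise ValueError("axis series must have equal length")
    return [math.sqrt(x * x + y * y + z * z) for x, y, z in zip(ax, ay, az)]
```

Because a vector's length does not change under rotation, this series does not depend on how the device is oriented, which makes it a convenient single-channel summary of the three axes.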

[0072] The dimension unification module 40 is used for unifying the dimensions of the acceleration resultant force time series and the angular velocity time series.
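The excerpt does not say how the dimensions are unified. Acceleration (m/s²) and angular velocity (rad/s) have different units and value ranges, so one plausible reading, assumed here, is to rescale each series to a dimensionless range. The sketch uses min-max normalization to [0, 1]; z-score standardization would be an equally reasonable substitute. The function names are hypothetical.

```python
def min_max_normalize(series):
    """Rescale a series to [0, 1]; a constant series maps to all zeros."""
    if not series:
        return []
    lo, hi = min(series), max(series)
    span = hi - lo
    if span == 0:
        return [0.0] * len(series)
    return [(v - lo) / span for v in series]


def unify_dimensions(acc_resultant, gyro):
    """Normalize both series so that neither unit dominates later distances."""
    return min_max_normalize(acc_resultant), min_max_normalize(gyro)
```

Normalizing each gesture by its own range also removes the overall amplitude, so a larger or smaller version of the same gesture produces a similar normalized series.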

[0073] The binning module 50 is configured to bin the acceleration resultant force time ...
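The binning rule is cut off in this excerpt. A minimal sketch, assuming binning means splitting each time series into a fixed number of contiguous, nearly equal-sized bins and averaging each bin (the technique known as piecewise aggregate approximation), would let gestures of different durations be compared as equal-length vectors. The bin count and function name are hypothetical.

```python
def bin_series(series, n_bins):
    """Split a series into n_bins contiguous, nearly equal-sized chunks and
    return the mean of each chunk."""
    n = len(series)
    if n_bins <= 0 or n < n_bins:
        raise ValueError("need 0 < n_bins <= len(series)")
    out = []
    for k in range(n_bins):
        # Integer bin boundaries spread any remainder across the bins.
        start = k * n // n_bins
        end = (k + 1) * n // n_bins
        chunk = series[start:end]
        out.append(sum(chunk) / len(chunk))
    return out
```

For instance, a 6-sample series and a 9-sample series both binned with `n_bins=3` yield 3-value vectors that can be compared element by element.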
