A Human Action Classification Method Based on Fusion Features

A human action classification method, applied in the fields of neural learning methods, instruments, and biological neural network models. It addresses problems such as the difficulty of extracting discriminative features and the small temporal and spatial differences between action classes, with the effect of improving effectiveness and classification accuracy and simplifying the data collection process.

Embodiment Construction

[0086] The present invention is further described below with reference to the accompanying drawings and specific embodiments, using the classification of a collected set of 500 action videos covering 5 action types as the example. It should be understood that these embodiments only illustrate the present invention and do not limit its scope. After reading the present invention, modifications in various equivalent forms made by those skilled in the art all fall within the scope defined by the appended claims of the present application.

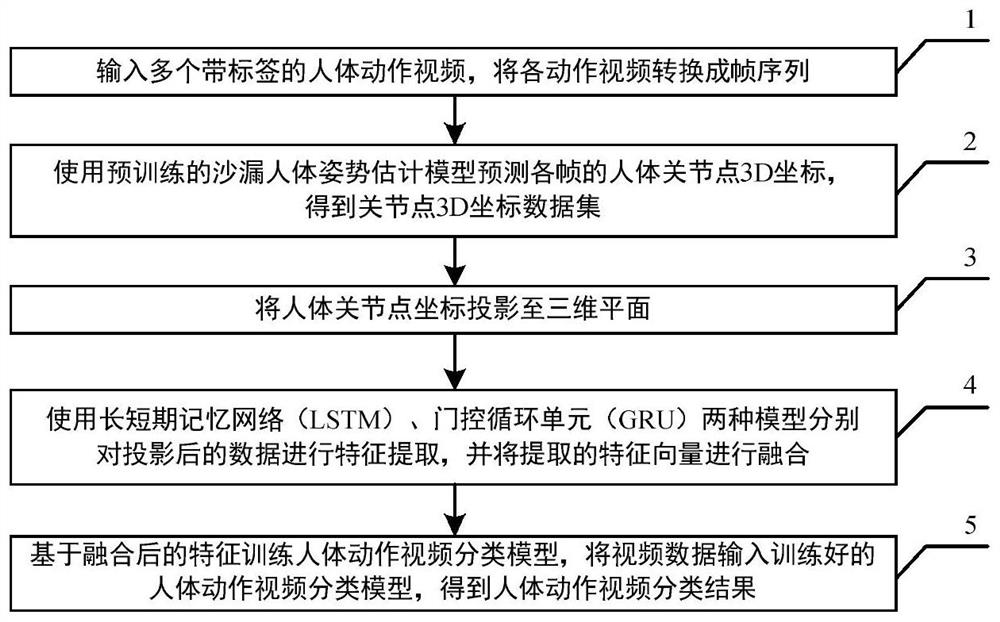

[0087] As shown in Figure 1, the action classification method based on fusion features of the present invention comprises the following steps:

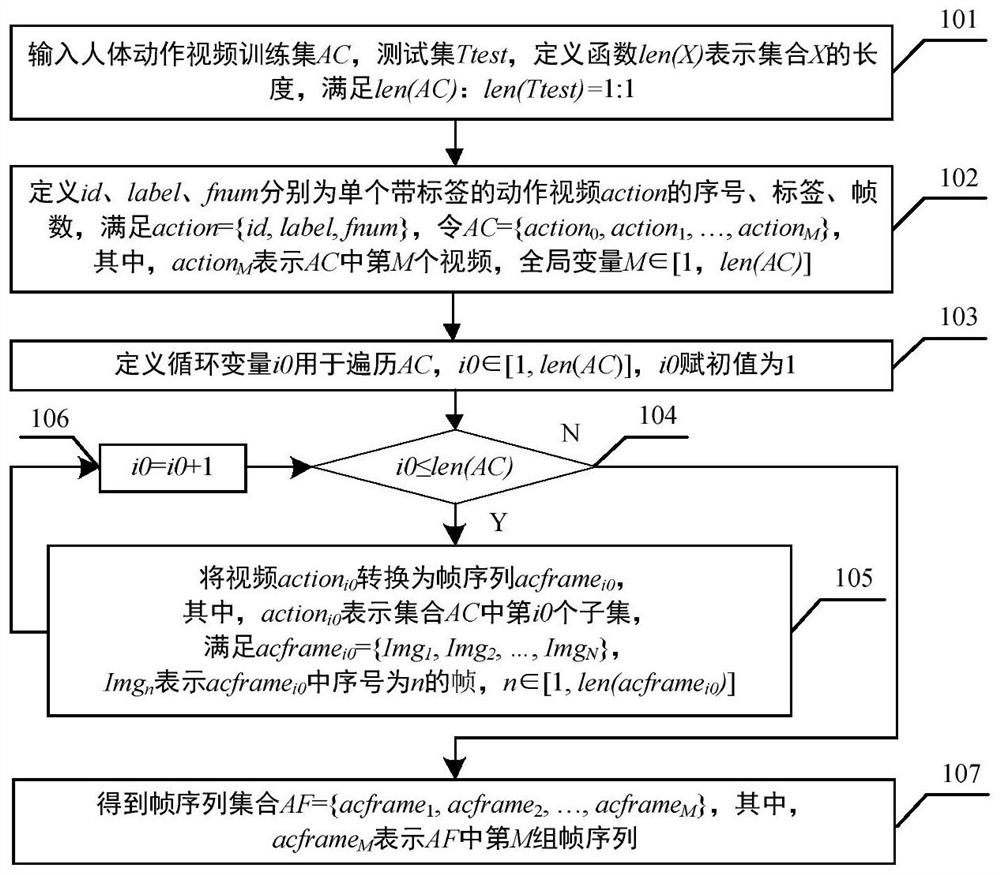

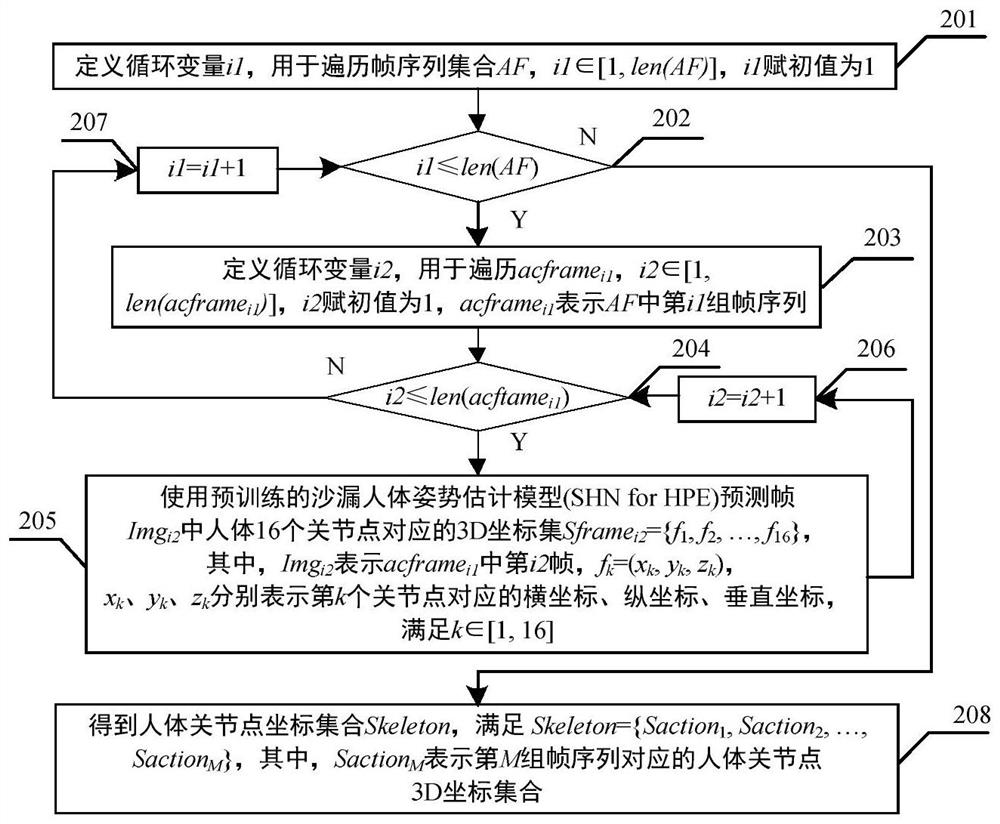

[0088] (1) Input multiple labeled human action videos and convert each human action video into a frame sequence. As shown in Figure 2, this specifically includes the following steps:

[0089] (101) Input the human action video training set AC and the test set Tte...
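Step (1) above, turning each labeled video into a frame sequence, can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the patent's implementation: the function names `to_frame_sequences` and `fake_decode`, the file names, and the label strings are all hypothetical, and the video decoder is passed in as a callable because the text does not say which decoding library is used.

```python
from typing import Callable, Iterable, Iterator, List, Tuple, TypeVar

Frame = TypeVar("Frame")


def to_frame_sequences(
    labeled_videos: Iterable[Tuple[str, str]],
    decode: Callable[[str], Iterator[Frame]],
) -> List[Tuple[List[Frame], str]]:
    """Convert (video_path, label) pairs into (frame_list, label) pairs.

    `decode` is an assumed, caller-supplied function that yields the
    frames of one video in temporal order.
    """
    dataset: List[Tuple[List[Frame], str]] = []
    for path, label in labeled_videos:
        frames = list(decode(path))  # keep every frame, in order
        if not frames:
            raise ValueError(f"no frames decoded from {path!r}")
        dataset.append((frames, label))
    return dataset


def fake_decode(path: str) -> Iterator[str]:
    """Stand-in decoder for illustration only: yields three placeholder frames."""
    for i in range(3):
        yield f"{path}#frame{i}"


# Hypothetical usage with two labeled videos.
train = to_frame_sequences(
    [("wave_01.avi", "wave"), ("jump_01.avi", "jump")],
    fake_decode,
)
```

Keeping the decoder as a parameter lets the same routine serve both the training set and the test set, and lets a real video reader be substituted without changing the labeling logic.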
