Query method based on distributed search engine, server and storage medium

A query method based on a distributed search engine, applied in the computer field, addresses the problems of low query efficiency and long response time, and achieves load balancing and a shorter response time.

Examples

Embodiment 1

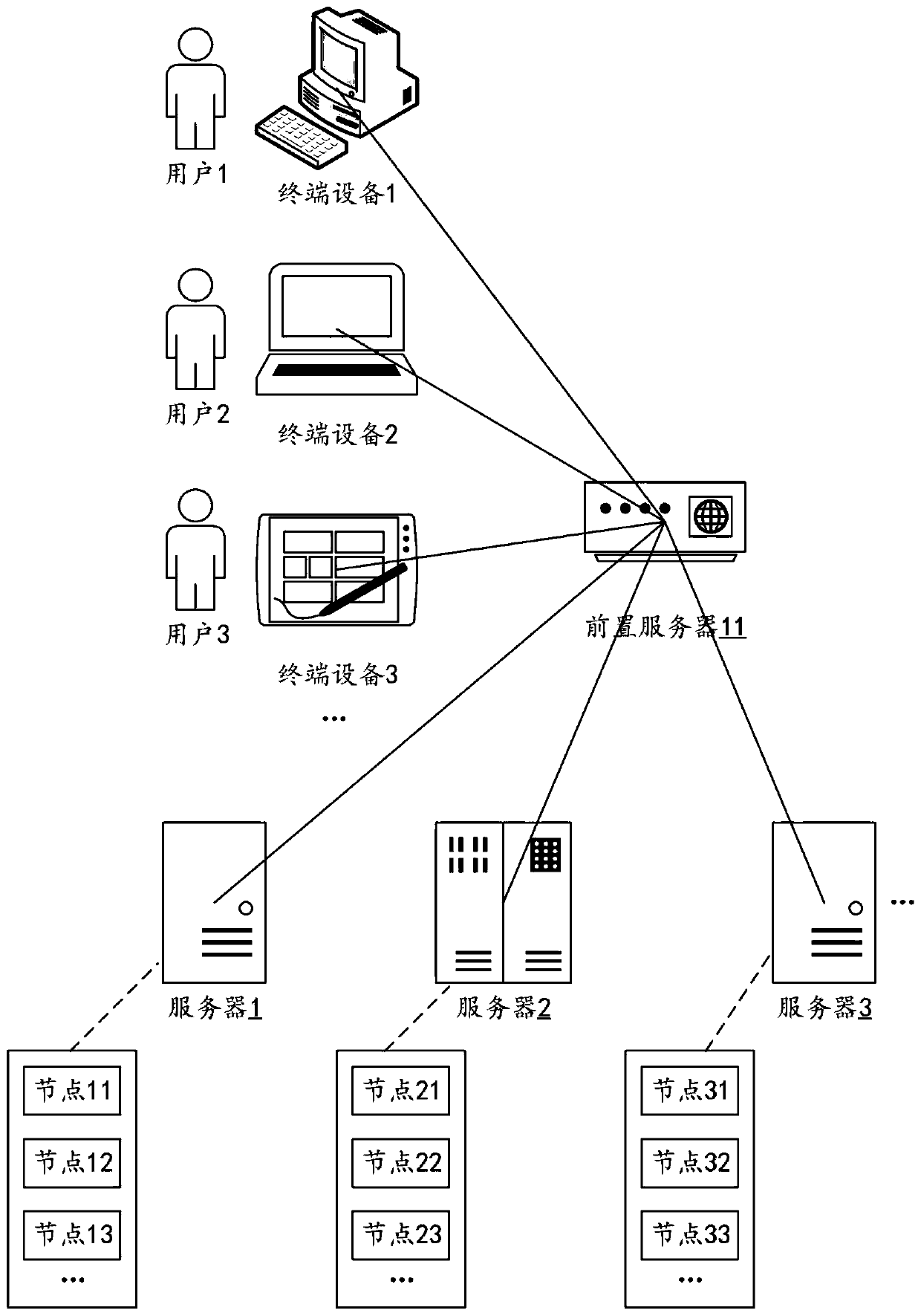

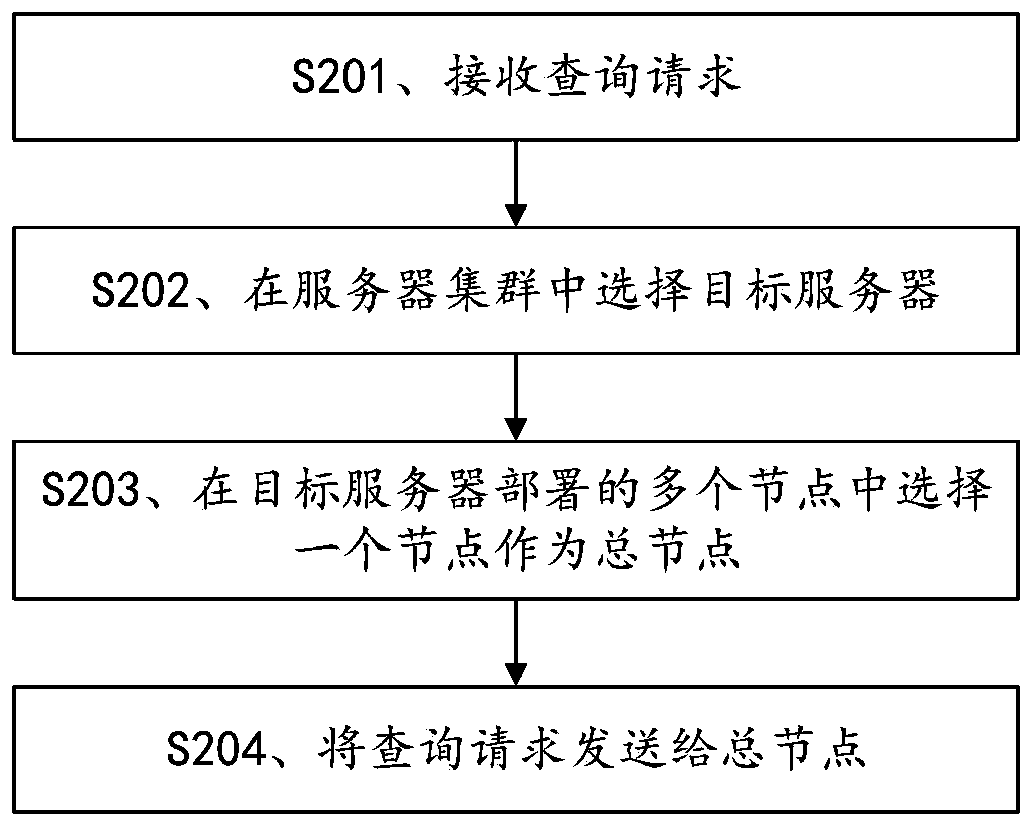

[0177] Embodiment 1: The device 7 is a front-end server.

[0178] The receiving unit 301 is configured to receive a query request.

[0179] The processing unit 302 is configured to select a target server in the server cluster; wherein, multiple nodes are deployed in the target server.

[0180] The processing unit 302 is further configured to select a node among the multiple nodes deployed on the target server as the general node;

[0181] A sending unit 303, configured to send the query request to the general node; wherein the query request is used to instruct the general node to send the query request to each server in the first server set for processing, and the first server set includes the servers in the server cluster other than the target server.

[0182] Optionally, the processing unit 302 selecting a target server in the server cluster includes:

[0183] Randomly select a server in the server cluster as the target server; or

[0184] Monitoring the load information of...
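The front-end routing in paragraphs [0179] to [0183] can be sketched as follows. This is a minimal illustration, not the patent's implementation: the `Server` class, the `route_query` function, and the node names are hypothetical, and choosing the general node at random is an assumption, since the text does not say how that node is picked. Only the random selection of [0183] is shown; the load-based alternative in [0184] is truncated in the source and is left out.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Server:
    """Hypothetical model of one server in the cluster."""
    name: str
    nodes: list = field(default_factory=list)  # names of nodes deployed on this server


def route_query(cluster, rng):
    """Pick a target server, a general node on it, and the first server set."""
    # [0183] randomly select a server in the cluster as the target server
    target = rng.choice(cluster)
    # [0180] select one of the target server's nodes as the general node
    # (random choice here is an assumption, not stated in the source)
    general_node = rng.choice(target.nodes)
    # [0181] the first server set is every server except the target server
    first_server_set = [s for s in cluster if s is not target]
    return target, general_node, first_server_set


cluster = [
    Server("s1", ["s1-n1", "s1-n2"]),
    Server("s2", ["s2-n1", "s2-n2"]),
    Server("s3", ["s3-n1", "s3-n2"]),
]
target, general_node, first_server_set = route_query(cluster, random.Random(0))
```

Passing the random generator in explicitly keeps the selection reproducible in tests; a real front end would more likely use a shared generator or a load-aware policy.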

Embodiment 2

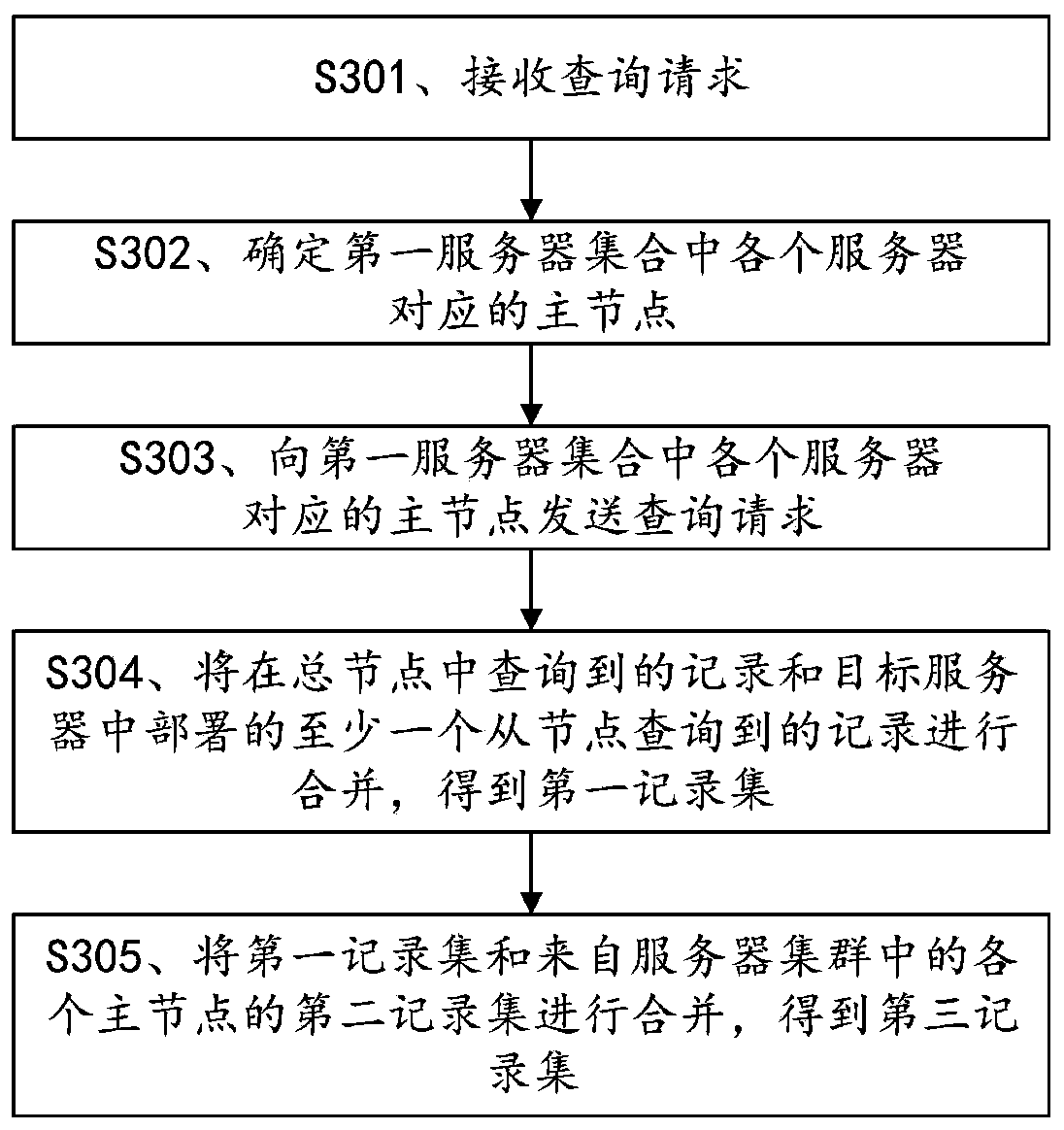

[0190] Embodiment 2: The device 7 is a target server.

[0191] The receiving unit 301 is configured to receive a query request.

[0192] The processing unit 302 is configured to determine a master node corresponding to each server in the first server set; wherein, the target server is located in a server cluster, and the target server is deployed with a master node and at least one slave node.

[0193] A sending unit 303, configured to send a query request to a master node corresponding to each server in the first server set;

[0194] The processing unit 302 is further configured to combine the records queried from the master node and the records queried from at least one slave node deployed in the target server to obtain a first record set.

[0195] The receiving unit 301 is further configured to receive the second record set sent from the master node corresponding to each server in the first server set in response to the query request;

[0196] The processing unit 302 is f...
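The steps stated in [0193] to [0195] can be sketched as below, under clearly hypothetical names: `Record`, `query_node`, `first_record_set`, and `collect_second_record_sets` are illustrative only, the in-memory `index` stands in for node-local search, and remote master nodes are modelled as plain callables. The sketch stops where the source text is truncated in [0196], so it does not show how the first and second record sets are finally combined.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """Hypothetical search hit: a document id and its relevance score."""
    doc_id: str
    score: float


def query_node(index, node, query):
    """Stand-in for a node-local search; `index` maps node name to its records."""
    return list(index.get(node, []))


def first_record_set(index, master, slaves, query):
    """[0194] Combine records from the target server's master and slave nodes."""
    records = query_node(index, master, query)
    for slave in slaves:
        records.extend(query_node(index, slave, query))
    return records


def collect_second_record_sets(remote_masters, query):
    """[0193], [0195] Send the query to each remote master and gather the replies."""
    return {name: handler(query) for name, handler in remote_masters.items()}


index = {"master": [Record("a", 0.9)], "slave-1": [Record("b", 0.4)]}
first = first_record_set(index, "master", ["slave-1"], "example query")

# Each remote master is modelled as a callable that returns its second record set.
remote_masters = {"server-2-master": lambda q: [Record("c", 0.7)]}
second = collect_second_record_sets(remote_masters, "example query")
```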

Embodiment 3

[0213] Embodiment 3: The device 7 is a server.

[0214] The receiving unit 301 is configured to receive a query request from the master node deployed in the target server.

[0215] A processing unit 302, configured to combine the records queried on the master node with the records queried on at least one slave node associated with the master node to obtain a second record set;

[0216] A sending unit 303, configured to send the second record set to the master node deployed in the target server.

[0217] Optionally, the processing unit 302 combining the records queried on the master node with the records queried on at least one slave node associated with the master node to obtain the second record set includes:

[0218] performing a union operation on the records queried on the master node and the records queried on the at least one slave node;

[0219] arranging the records after the union operation in ascending order of score;

[0220] selecting a first preset number of ...
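The union and ordering steps in [0218] and [0219] can be sketched as below. The `Record` type and `merge_records` function are hypothetical, and breaking score ties by `doc_id` is an assumption added only to make the order deterministic. The selection step in [0220] is truncated in the source, so it is not implemented here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """Hypothetical search hit: a document id and its relevance score."""
    doc_id: str
    score: float


def merge_records(master_records, slave_record_lists):
    """Union the master's and slaves' records, then sort ascending by score."""
    # [0218] union operation: identical records returned by several nodes collapse to one
    merged = set(master_records)
    for records in slave_record_lists:
        merged |= set(records)
    # [0219] ascending order by score (doc_id tie-break is an assumption)
    return sorted(merged, key=lambda r: (r.score, r.doc_id))


master = [Record("a", 0.9), Record("b", 0.2)]
slaves = [[Record("b", 0.2), Record("c", 0.5)]]
second_record_set = merge_records(master, slaves)
```

Using a frozen dataclass makes records hashable, so the built-in `set` union removes exact duplicates without any extra bookkeeping.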

