Sound scene classification method based on network model fusion

A scene classification and network model technology, applied in biological neural network models, neural learning methods, speech analysis, and related fields. It addresses the poor robustness of pattern recognition algorithms, whose recognition ability is strongly affected by environmental changes and which are prone to misjudgments and missed judgments, and it improves both the recognition rate and robustness.

Embodiment Construction

[0040] The present invention will be further described below in conjunction with the accompanying drawings.

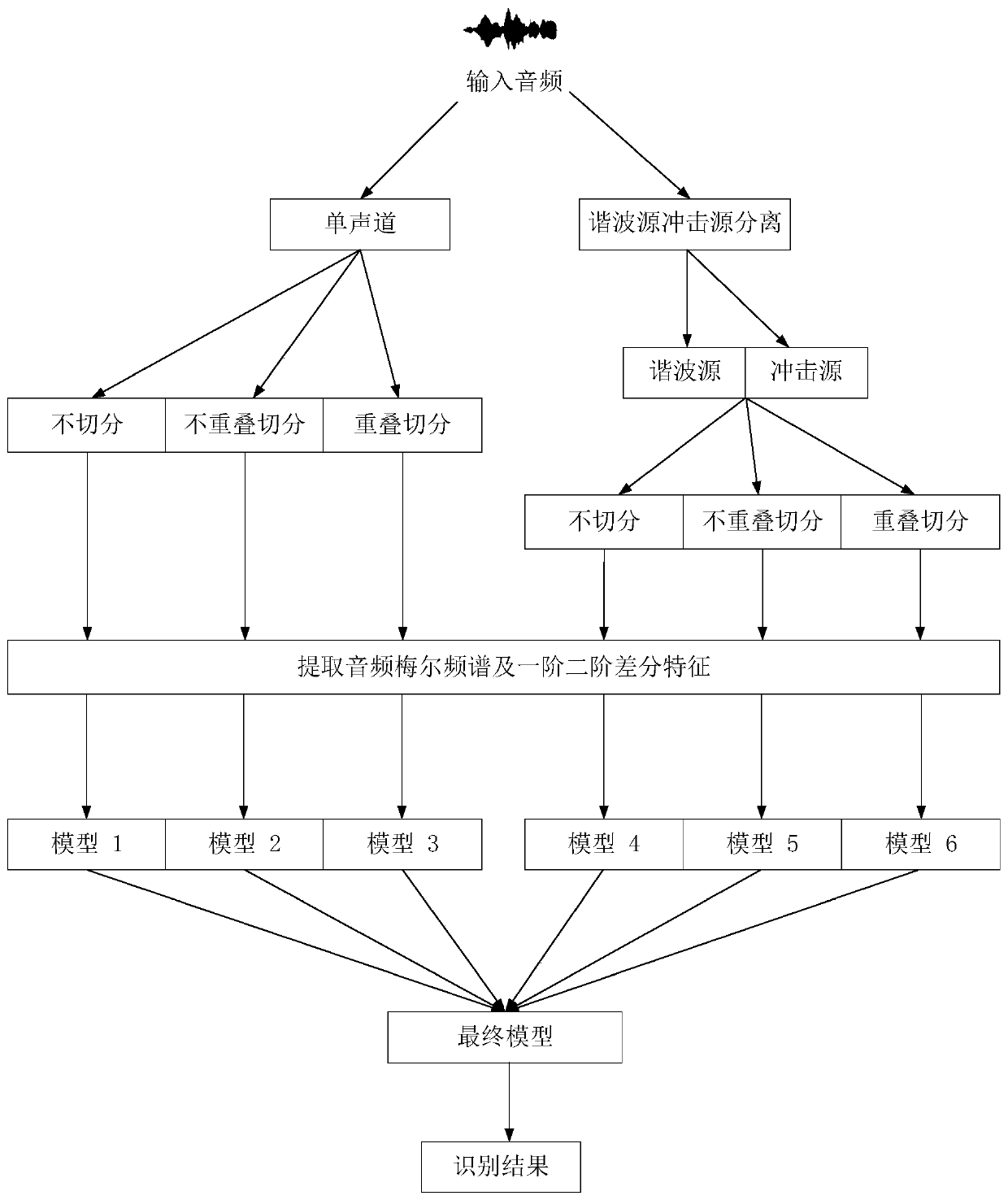

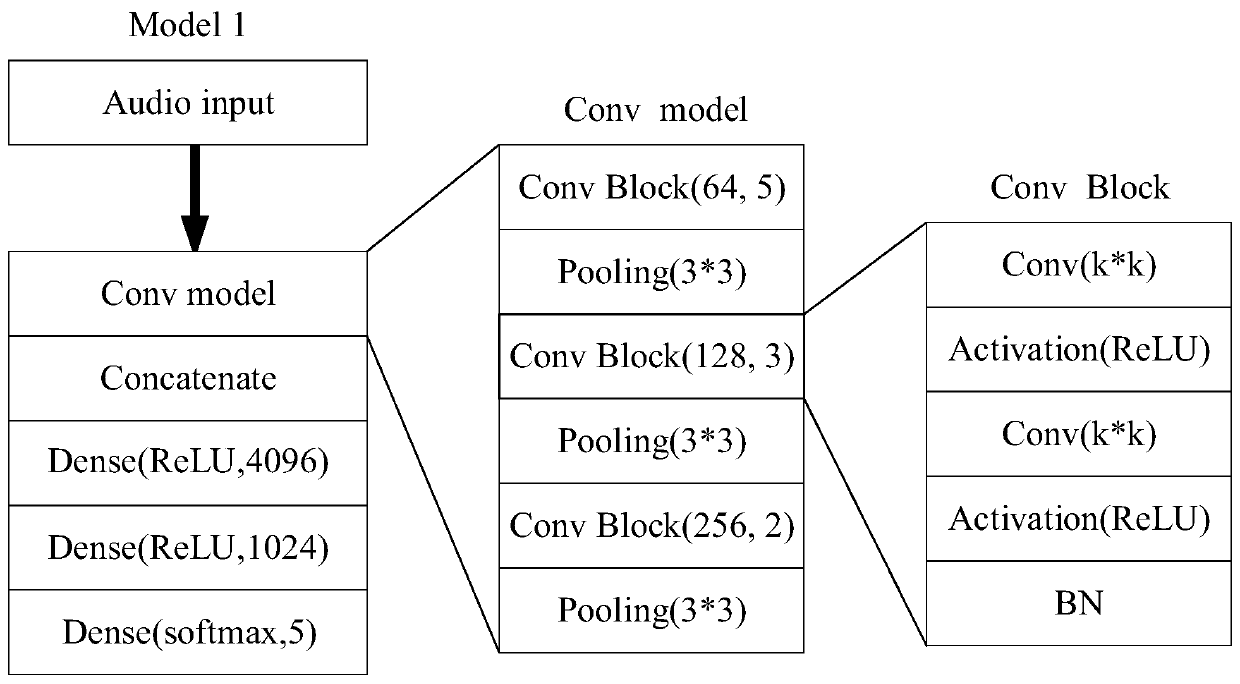

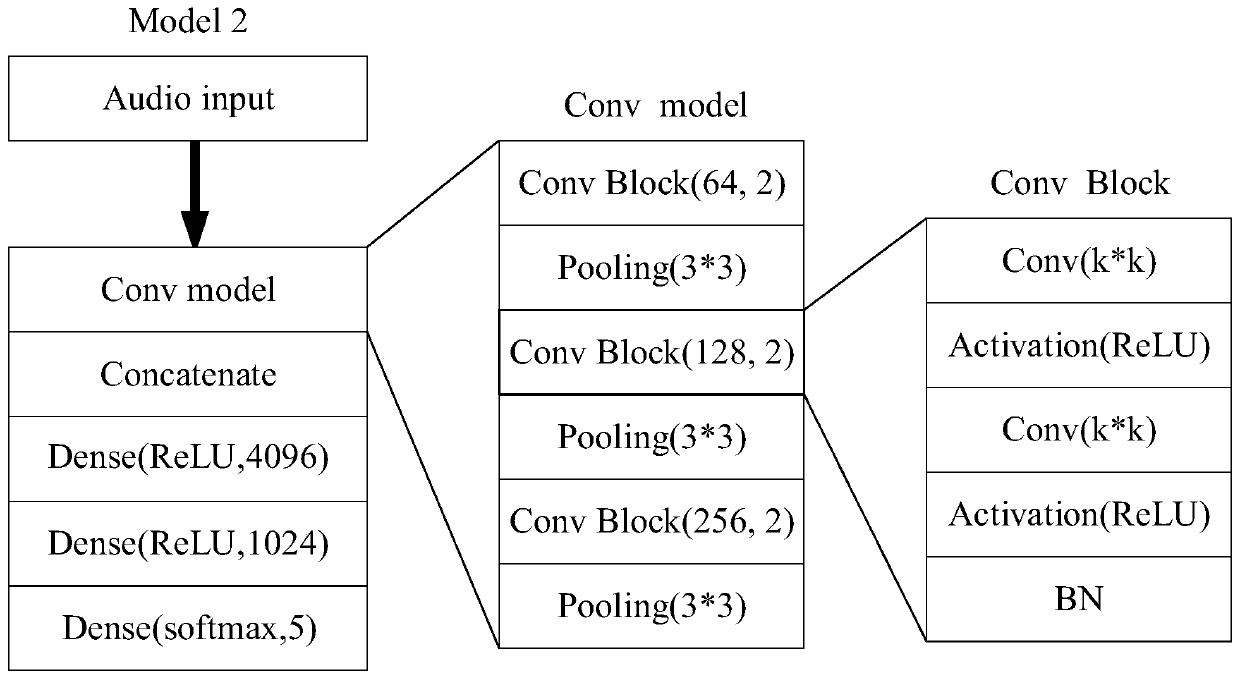

[0041] As shown in Figures 1 to 7, the acoustic scene classification method based on network model fusion of the present invention is introduced below, taking six models as an example. The method includes the following steps.

[0042] Step (1): first, divide each sample into frames, with a frame length of 50 ms and a frame shift of 20 ms; second, compute a 2048-point FFT for each frame; third, use a bank of 80 Gammatone filters to calculate the Gammatone filter cepstral coefficients, and use a Mel filter bank with 80 subband filters to calculate the logarithmic Mel spectrogram; finally, calculate the first-order and second-order differences of the Mel spectrum to obtain the multi-channel input features.
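The framing, FFT, log-Mel, and difference stages of Step (1) can be sketched with NumPy as follows. This is a minimal illustration, not the patented implementation: the 16 kHz sample rate, the Hamming window, the HTK-style Mel formula, and the use of `np.gradient` for the differences are all assumptions that the source does not specify, and every function name is hypothetical. The cepstral-coefficient branch is omitted.

```python
import numpy as np

def frame_signal(x, sr, frame_ms=50, shift_ms=20):
    """Split a 1-D signal into overlapping frames (50 ms long, 20 ms shift)."""
    frame_len = int(sr * frame_ms / 1000)
    shift = int(sr * shift_ms / 1000)
    n_frames = 1 + (len(x) - frame_len) // shift
    idx = np.arange(frame_len)[None, :] + shift * np.arange(n_frames)[:, None]
    return x[idx]

def hz_to_mel(f):
    # HTK-style Mel scale (an assumption; the source gives no formula).
    return 2595.0 * np.log10(1.0 + f / 700.0)

def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)

def mel_filterbank(sr, n_fft=2048, n_mels=80):
    """Triangular Mel filter bank of shape (n_mels, n_fft // 2 + 1)."""
    hz_pts = mel_to_hz(np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2), n_mels + 2))
    fft_freqs = np.fft.rfftfreq(n_fft, d=1.0 / sr)
    fb = np.zeros((n_mels, fft_freqs.size))
    for m in range(n_mels):
        lo, mid, hi = hz_pts[m], hz_pts[m + 1], hz_pts[m + 2]
        rising = (fft_freqs - lo) / (mid - lo)
        falling = (hi - fft_freqs) / (hi - mid)
        fb[m] = np.maximum(0.0, np.minimum(rising, falling))
    return fb

def log_mel_with_deltas(x, sr=16000, n_fft=2048, n_mels=80):
    """Return a (3, n_frames, n_mels) array: log-Mel, 1st and 2nd differences."""
    frames = frame_signal(x, sr)
    frames = frames * np.hamming(frames.shape[1])  # window choice is an assumption
    # Frames shorter than n_fft are zero-padded by rfft.
    power = np.abs(np.fft.rfft(frames, n=n_fft, axis=1)) ** 2
    mel = power @ mel_filterbank(sr, n_fft, n_mels).T
    log_mel = np.log(mel + 1e-10)  # small offset avoids log(0)
    d1 = np.gradient(log_mel, axis=0)  # first-order difference along time
    d2 = np.gradient(d1, axis=0)       # second-order difference along time
    return np.stack([log_mel, d1, d2], axis=0)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    feats = log_mel_with_deltas(rng.standard_normal(16000))  # 1 s of noise
    print(feats.shape)
```

Stacking the log-Mel spectrogram with its two differences gives one three-channel input per audio channel. Note that a 2048-point FFT only covers a full 50 ms frame when the frame holds at most 2048 samples, which is why a sample rate of 16 kHz (800 samples per frame) is assumed here.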

[0043] Step (2): construct six different input features through different channel separation methods and audio cutting methods; constructi...
