Video action detection method based on time sequence convolution modeling

A temporal action detection technique, applied in the video processing and image fields, that addresses problems such as the difficult training and poor performance of two-stream networks, and achieves accurate detection while overcoming incompleteness.

Embodiment Construction

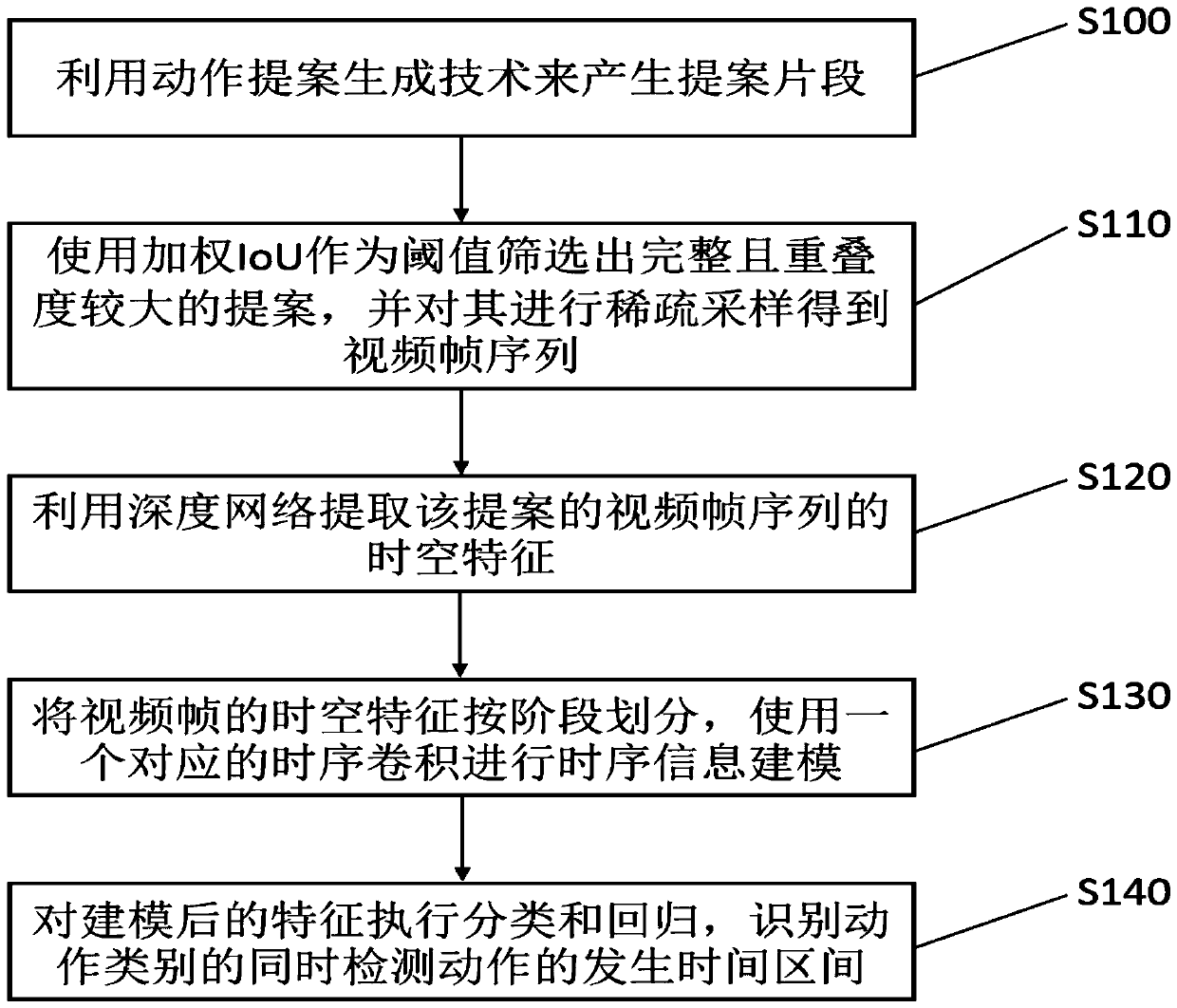

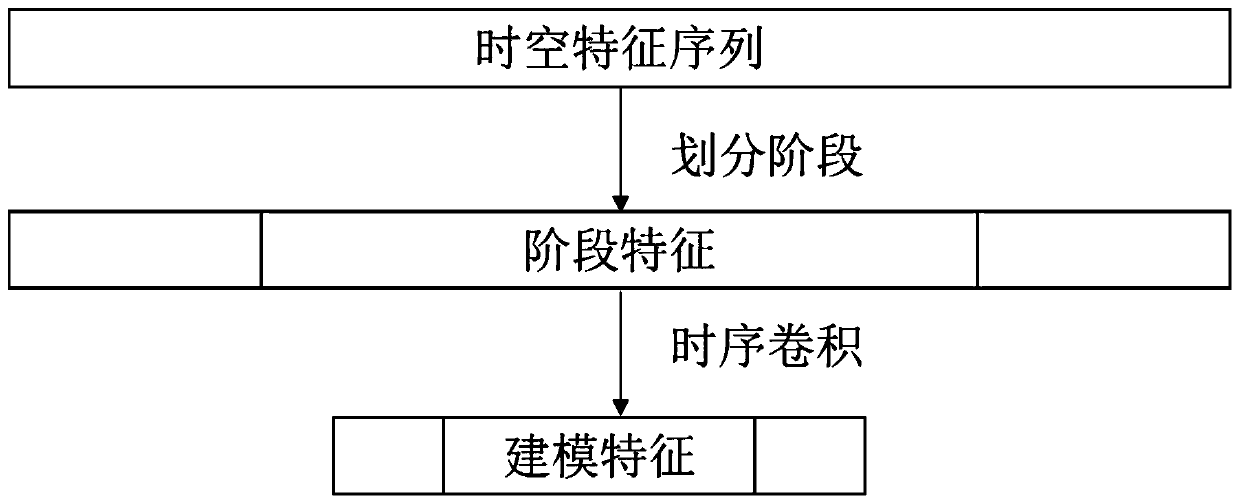

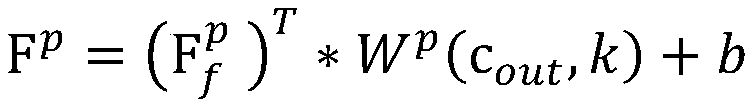

[0031] The technical solutions in the embodiments of the present invention are described below with reference to the accompanying drawings. Figure 1 is a flow chart of the video temporal action detection method based on temporal convolution modeling provided by the present invention. The method includes the following steps:

[0032] S100: Traverse the video stream using an action proposal generation technique to generate proposal segments that contain as much of the action as possible.

[0033] The actions mentioned above are not limited in type or complexity; they may also be activities such as running or horse riding.

[0034] In one implementation, multi-scale action proposals can be generated by sliding windows of different scales over the video sequence. A binary classification model can then be used to remove background segments and retain action segments, thereby improving the quality of the proposals.
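The sliding-window implementation described in [0034] can be sketched as follows. This is a minimal illustration, not the patented implementation: the window sizes, the 50% overlap, the score threshold, and the names `sliding_window_proposals`, `filter_proposals`, and `actionness` are all assumptions introduced here, and the binary classification model is represented only by a caller-supplied scoring function.

```python
from typing import Callable, List, Sequence, Tuple

# A proposal is a (start_frame, end_frame) pair; end_frame is exclusive.
Proposal = Tuple[int, int]


def sliding_window_proposals(
    num_frames: int,
    window_sizes: Sequence[int] = (16, 32, 64),  # assumed scales, in frames
    overlap: float = 0.5,                        # assumed overlap between windows
) -> List[Proposal]:
    """Slide windows of several sizes over a video of num_frames frames."""
    proposals: List[Proposal] = []
    for size in window_sizes:
        if size > num_frames:
            continue  # window longer than the video: no valid placement
        stride = max(1, int(size * (1.0 - overlap)))
        for start in range(0, num_frames - size + 1, stride):
            proposals.append((start, start + size))
    return proposals


def filter_proposals(
    proposals: Sequence[Proposal],
    actionness: Callable[[Proposal], float],  # stand-in for the binary classifier
    threshold: float = 0.5,                   # assumed decision threshold
) -> List[Proposal]:
    """Keep proposals the classifier scores as action; drop background ones."""
    return [p for p in proposals if actionness(p) >= threshold]
```

In use, `actionness` would wrap whatever binary action/background model is available; for example, `filter_proposals(sliding_window_proposals(300), model_score)` with a hypothetical `model_score` function returning a probability per segment.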

[0035] In another impl...
