Optical Remote Sensing Image Retrieval Method Based on Attention and Generative Adversarial Networks

An optical remote sensing image retrieval method based on attention technology, applied in still image data retrieval, neural learning methods, biological neural network models, etc. It addresses the problems of quantization error, large time consumption, and reduced retrieval accuracy, thereby improving retrieval accuracy and reducing quantization error.

Embodiment Construction

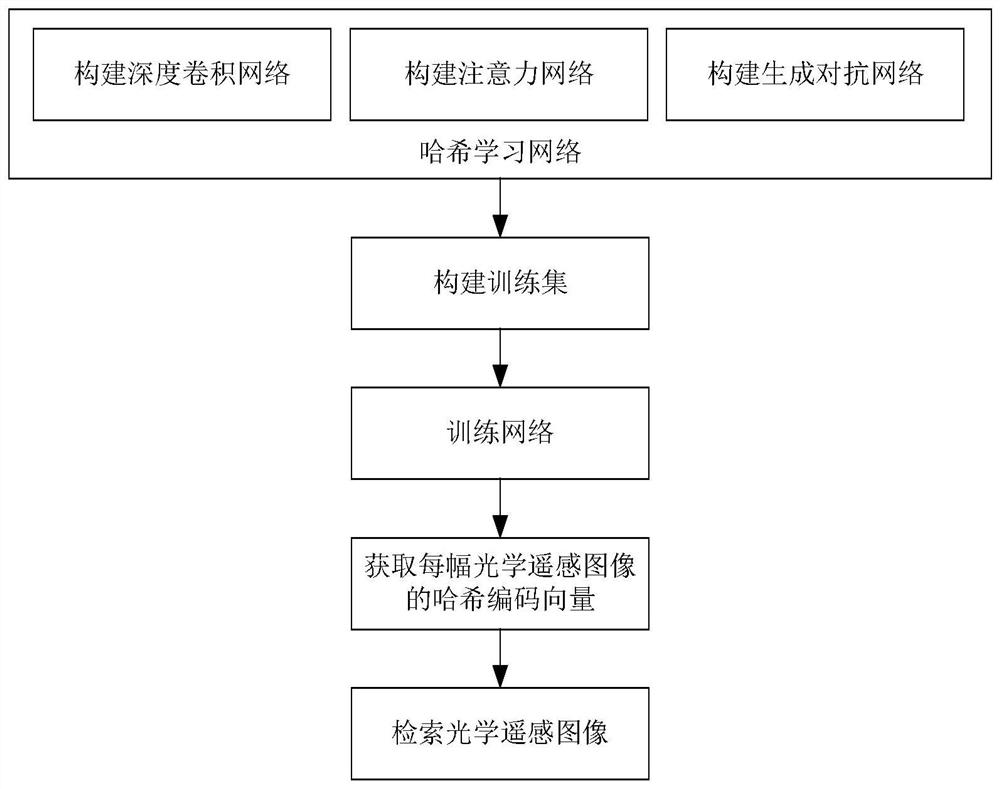

[0062] The present invention will be described in further detail below in conjunction with the accompanying drawings.

[0063] Referring to attached Figure 1, the steps of the present invention are described in further detail.

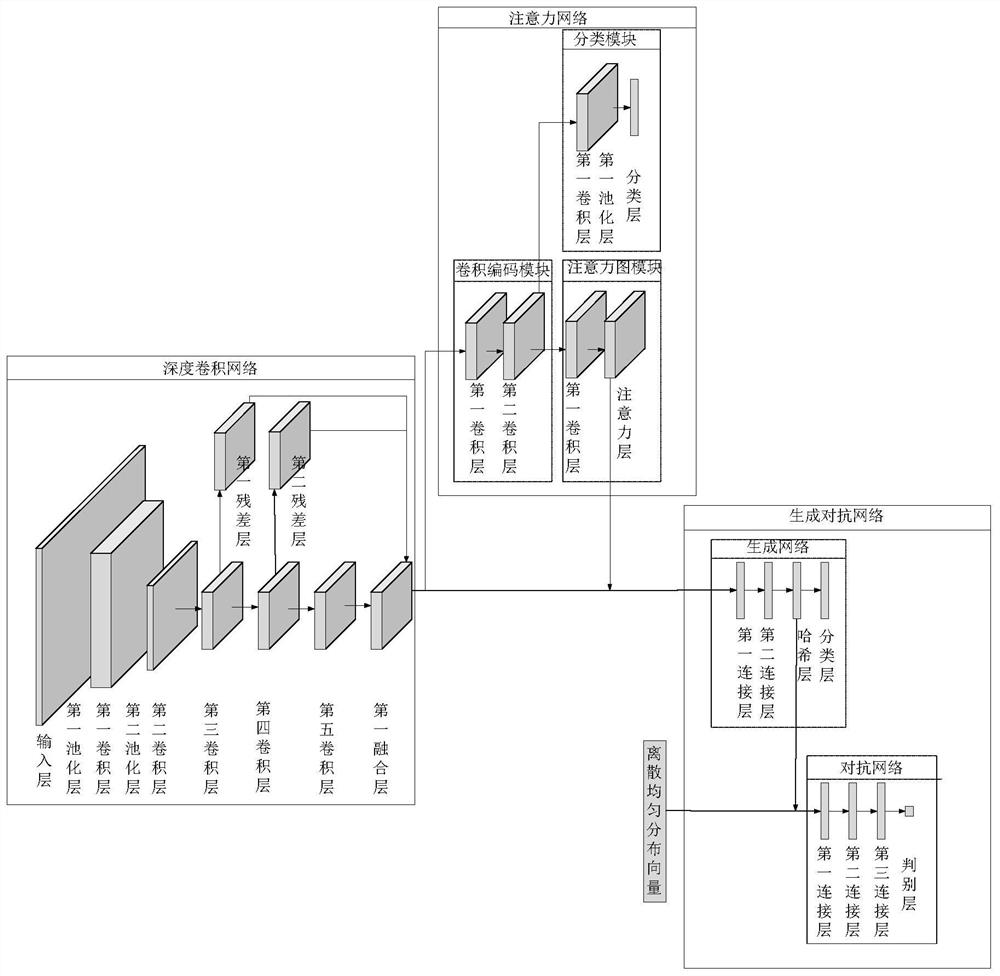

[0064] Step 1, construct a deep convolutional network.

[0065] Build an 11-layer deep convolutional network with the following structure: input layer → first convolutional layer → first pooling layer → second convolutional layer → second pooling layer → third convolutional layer → fourth convolutional layer → fifth convolutional layer → first fusion layer; where the third convolutional layer is connected to the first fusion layer through the first residual layer, and the fourth convolutional layer is connected to the first fusion layer through the second residual layer.
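The connectivity described in [0065] can be written down as a small directed graph, which makes the two residual branches into the fusion layer easier to see. The following is a minimal sketch only: the short layer names (`conv1`, `res1`, `fusion1`, and so on) and the helper functions are illustrative and do not come from the patent, and it assumes that the count of 11 is the nine layers on the main path plus the two residual layers. How the fusion layer combines its inputs is not stated in this excerpt and is not modeled here.

```python
# Topology of the network in [0065] as an adjacency map (source -> destinations).
# Layer names are hypothetical shorthand, not identifiers from the patent.
EDGES = {
    "input": ["conv1"],
    "conv1": ["pool1"],
    "pool1": ["conv2"],
    "conv2": ["pool2"],
    "pool2": ["conv3"],
    "conv3": ["conv4", "res1"],   # third conv layer also feeds the first residual layer
    "conv4": ["conv5", "res2"],   # fourth conv layer also feeds the second residual layer
    "conv5": ["fusion1"],
    "res1": ["fusion1"],
    "res2": ["fusion1"],
    "fusion1": [],
}


def predecessors(edges, node):
    """Return the sorted list of layers that feed directly into `node`."""
    return sorted(src for src, dsts in edges.items() if node in dsts)


def topological_order(edges):
    """Return one valid evaluation order of the layers (Kahn's algorithm)."""
    indegree = {name: 0 for name in edges}
    for dsts in edges.values():
        for dst in dsts:
            indegree[dst] += 1
    ready = [name for name, count in indegree.items() if count == 0]
    order = []
    while ready:
        name = ready.pop(0)
        order.append(name)
        for dst in edges[name]:
            indegree[dst] -= 1
            if indegree[dst] == 0:
                ready.append(dst)
    return order


if __name__ == "__main__":
    print(len(EDGES), "layers")
    print("fusion1 inputs:", predecessors(EDGES, "fusion1"))
    print("evaluation order:", " -> ".join(topological_order(EDGES)))
```

Under these assumptions, the fusion layer receives three inputs: the output of the fifth convolutional layer and the outputs of the two residual layers.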

[0066] Set the parameters of each layer as follows:

[0067] Set the total number of input layer feature maps to 3.

[0068] The total number of feature maps of the fir...
