CNN model compression method and device based on DS structure, and storage medium

A CNN model compression method applied in the field of neural networks. It addresses uneven compression effects and loss of model accuracy, and reduces video memory (GPU memory) usage, the number of model parameters, and the number of floating-point operations.


Embodiment Construction

[0032] It should be understood that the specific embodiments described here are intended only to explain the present invention, not to limit it.

[0033] Existing neural-network compression methods usually convert a large, complex pre-trained model into a streamlined small model. However, this approach not only produces uneven compression results, but the compressed model also suffers a serious loss of accuracy.

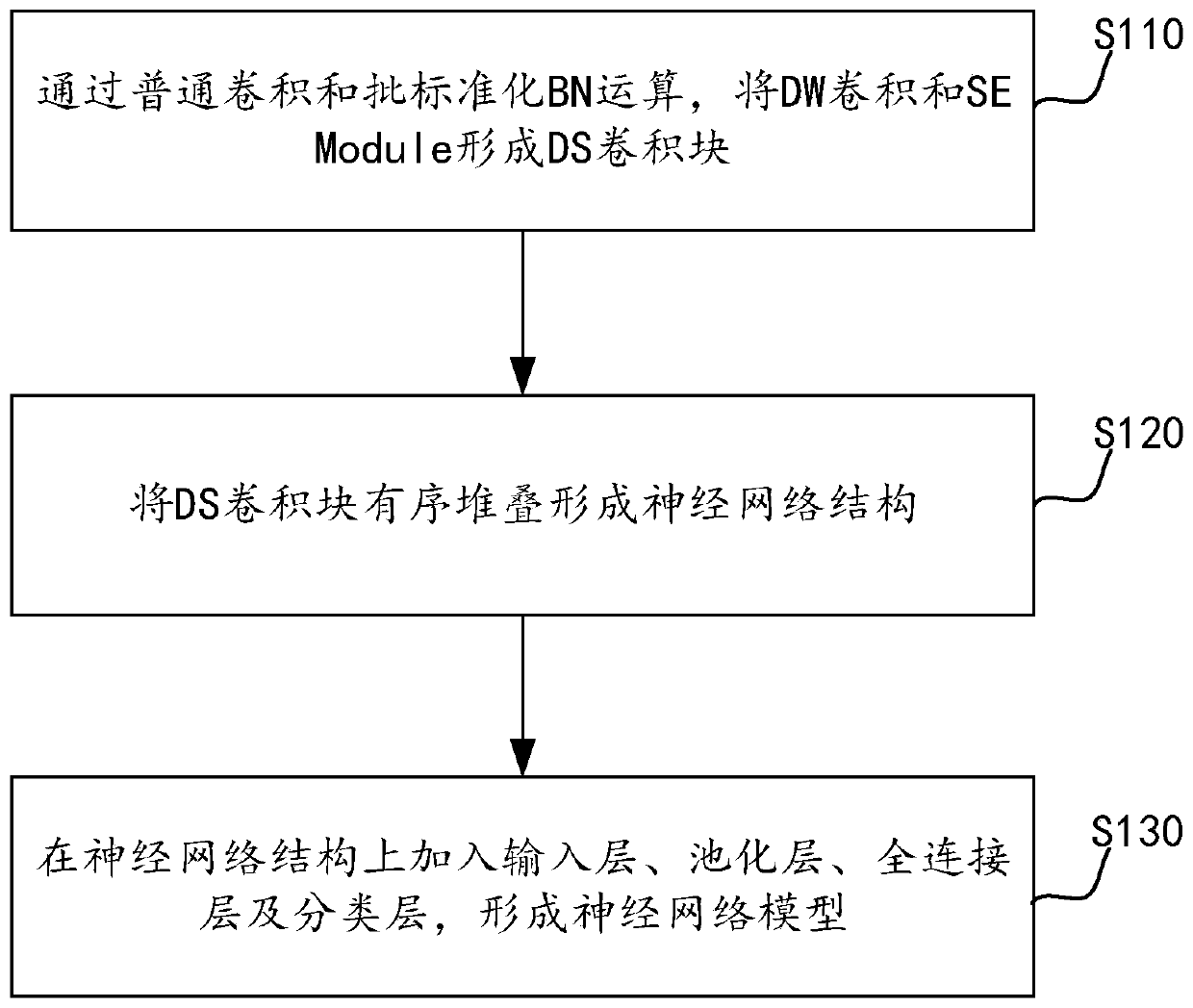

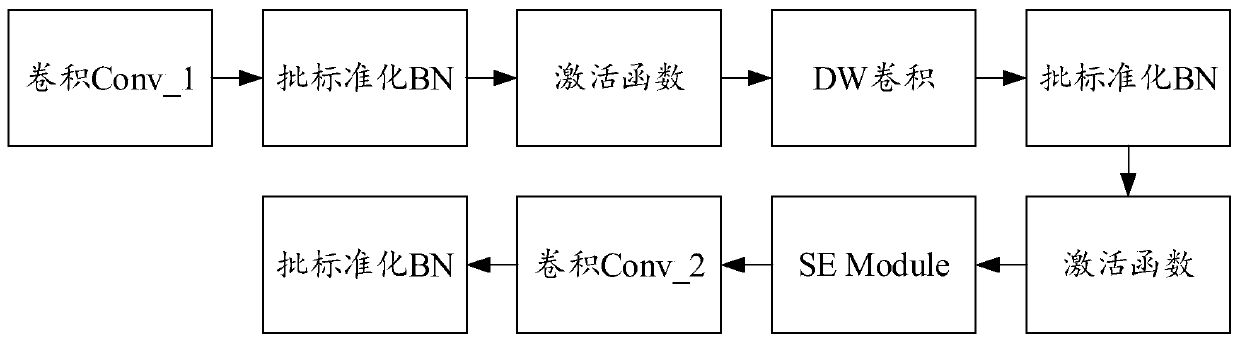

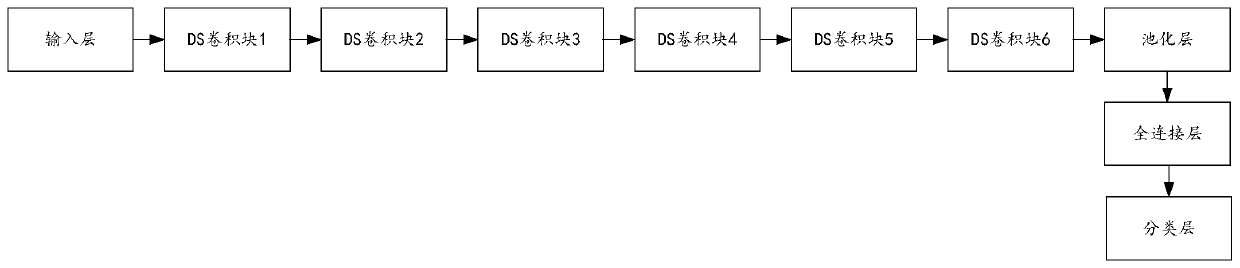

[0034] The present invention combines depthwise separable convolution (Depthwise-Pointwise) and a lightweight attention structure (Squeeze-Excitation) with ordinary convolution and BN (BatchNorm) operations to design a compact CNN component, the DS convolution block. Stacking DS convolution blocks in order yields a model with fewer parameters and essentially no loss of accuracy, thereby greatly reducing the model's memory usage and floating-point operations, and f...
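To make the claimed savings concrete, the sketch below counts weights and multiply-accumulates (MACs) for a standard convolution versus a depthwise-separable convolution with BatchNorm and Squeeze-Excitation. It is an illustration under stated assumptions, not the patent's DS block: the available text does not give the layer order, kernel size, channel widths, or SE reduction ratio, so the shape (64 to 128 channels, 3×3 kernel, 56×56 output), the reduction ratio of 16, and the helper names (`ConvShape`, `ds_block_params`, etc.) are all hypothetical. The ordinary convolution and activation functions mentioned above are omitted.

```python
"""Parameter and multiply-accumulate (MAC) counts for a standard convolution
versus a depthwise-separable convolution with BatchNorm and Squeeze-Excitation.

All layer sizes and the SE reduction ratio are hypothetical; the layer order
inside the patent's DS block is not specified in the available text.
"""
from dataclasses import dataclass


@dataclass(frozen=True)
class ConvShape:
    c_in: int    # input channels
    c_out: int   # output channels
    k: int       # square kernel size
    h_out: int   # output feature-map height
    w_out: int   # output feature-map width


def standard_conv_params(s: ConvShape) -> int:
    # One k x k x c_in filter per output channel (biases ignored).
    return s.k * s.k * s.c_in * s.c_out


def depthwise_separable_params(s: ConvShape) -> int:
    depthwise = s.k * s.k * s.c_in  # one k x k filter per input channel
    pointwise = s.c_in * s.c_out    # 1 x 1 convolution mixes channels
    return depthwise + pointwise


def se_params(channels: int, reduction: int) -> int:
    # Squeeze-Excitation: FC c -> c/r, then FC c/r -> c (biases ignored).
    hidden = max(1, channels // reduction)
    return 2 * channels * hidden


def bn_params(channels: int) -> int:
    # Learnable per-channel scale (gamma) and shift (beta).
    return 2 * channels


def ds_block_params(s: ConvShape, reduction: int) -> int:
    # Hypothetical DS block: depthwise conv + BN, pointwise conv + BN, SE.
    return (depthwise_separable_params(s)
            + bn_params(s.c_in) + bn_params(s.c_out)
            + se_params(s.c_out, reduction))


def conv_macs(conv_params: int, s: ConvShape) -> int:
    # Each convolution weight is used once per output spatial position.
    return conv_params * s.h_out * s.w_out


if __name__ == "__main__":
    shape = ConvShape(c_in=64, c_out=128, k=3, h_out=56, w_out=56)
    std = standard_conv_params(shape)
    ds = ds_block_params(shape, reduction=16)
    print("standard conv params:", std)          # 73728
    print("DS block params:", ds)                # 11200
    print(f"parameter ratio: {std / ds:.2f}x")   # 6.58x
    print("standard conv MACs:", conv_macs(std, shape))
    print("depthwise-separable MACs:",
          conv_macs(depthwise_separable_params(shape), shape))
```

The depthwise-separable weight count equals the standard count multiplied by (1/c_out + 1/k²), so with 3×3 kernels the convolution weights and MACs shrink by roughly 8 to 9 times; the BN and SE layers add back only a small per-channel overhead.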
