Vehicle detection method based on monocular vision and laser radar fusion

A vehicle detection technology based on laser radar (lidar), applied in character and pattern recognition, instruments, computer components, etc. It addresses inaccurate vehicle position estimates and missed lidar detections, with the effects of eliminating overlapping detection results, suppressing sample imbalance, and reducing time.


Embodiment

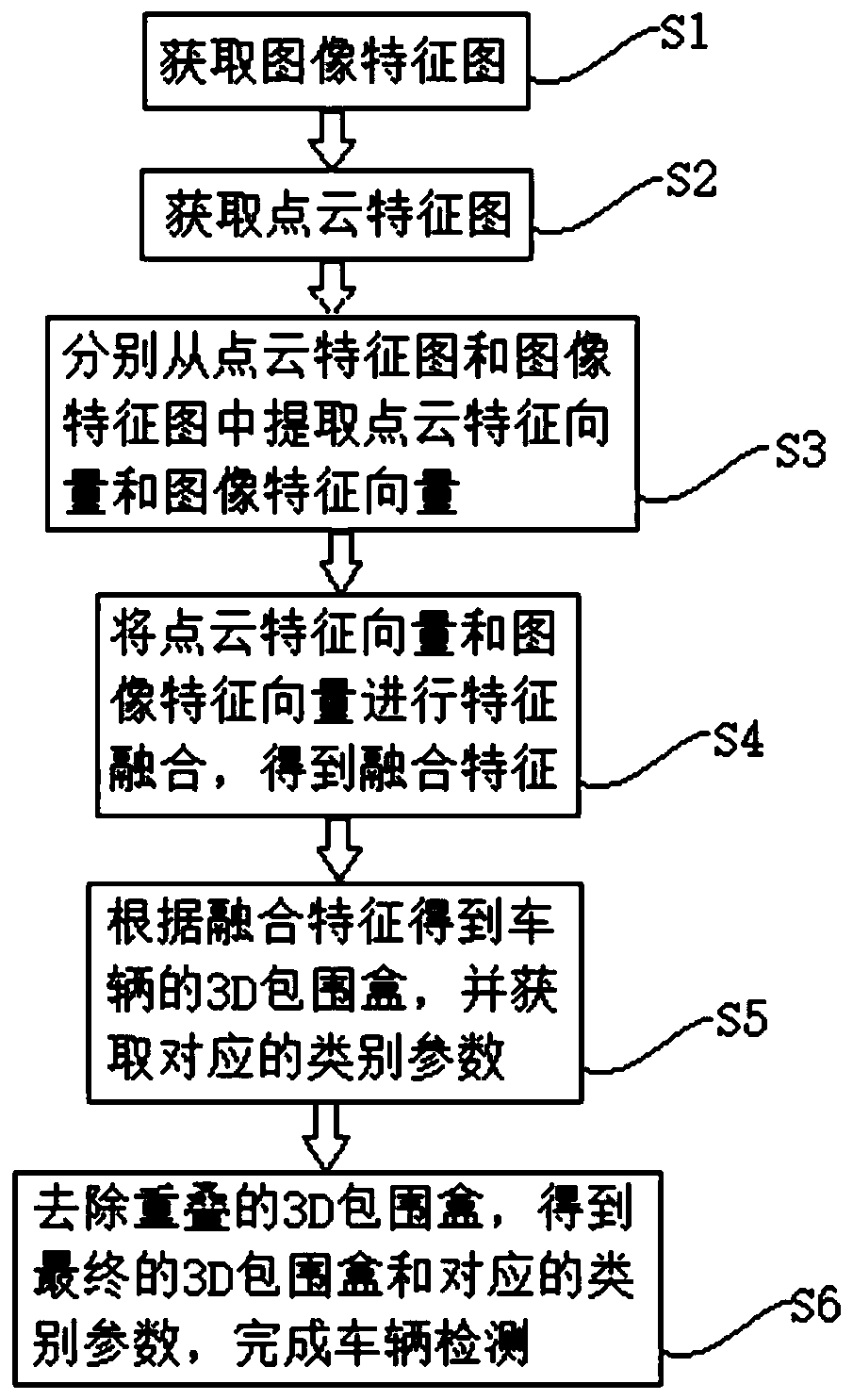

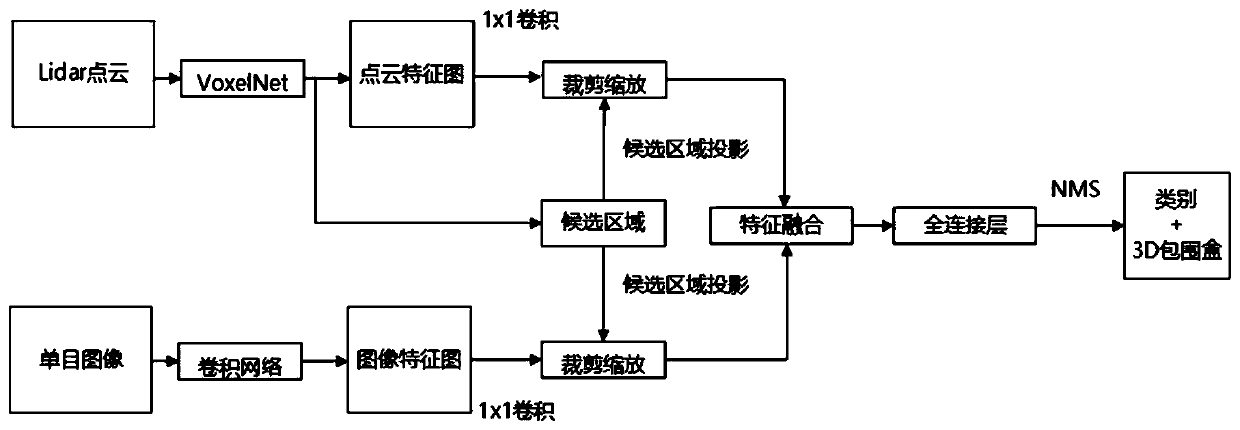

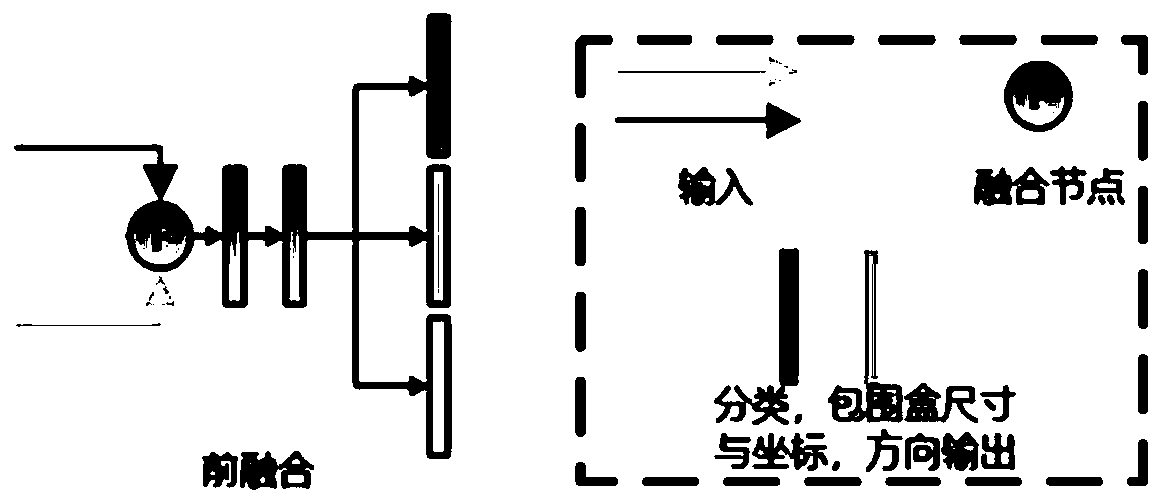

[0049] A vehicle detection method based on the fusion of monocular vision and lidar includes the following steps: using a feature pyramid network to extract image feature maps; using a VoxelNet network to obtain point cloud feature maps and extract 3D candidate regions; extracting the point cloud features and image features of the regions of interest based on the candidate regions; fusing the image features and point cloud features with the pre-fusion strategy to obtain fused features; using the fused features to estimate target categories and 3D bounding boxes; and using non-maximum suppression to remove overlapping, redundant bounding boxes. The overall process is shown in Figure 2 and includes:

[0050] Step 1: Normalize the input image. First, calculate the mean value of the training set images on each of the R, G, and B channels. During both training and detection, subtract the corresponding channel mean from the image's pixel values on each of the R, G, and B channels;
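The normalization in Step 1 can be sketched as follows. This is a minimal illustration, not the patent's implementation: it assumes each image is a nested list of rows of `(R, G, B)` tuples, and the function names `channel_means` and `subtract_means` are hypothetical.

```python
def channel_means(images):
    """Return the mean R, G, B values over every pixel of every image.

    Assumed layout: each image is a list of rows, each row a list of
    (r, g, b) tuples. This layout is an illustration, not from the source.
    """
    sums = [0.0, 0.0, 0.0]
    count = 0
    for image in images:
        for row in image:
            for pixel in row:
                for c in range(3):
                    sums[c] += pixel[c]
                count += 1
    if count == 0:
        raise ValueError("training set contains no pixels")
    return tuple(s / count for s in sums)


def subtract_means(image, means):
    """Subtract the per-channel training means from every pixel of one image."""
    return [
        [tuple(pixel[c] - means[c] for c in range(3)) for pixel in row]
        for row in image
    ]


# The means are computed once on the training set, then reused unchanged
# for every image at both training time and detection time.
train_images = [[[(10, 20, 30), (30, 40, 50)]]]
means = channel_means(train_images)
normalized = subtract_means(train_images[0], means)
```

Computing the means only from the training set, and reusing them at detection time, keeps the input distribution seen by the network consistent between the two phases.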

[0051] Step 2: For th...
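The non-maximum suppression step named in the overview in [0049] can be sketched as follows. This is a simplified illustration under stated assumptions, not the patent's procedure: it uses axis-aligned 2D boxes `(x1, y1, x2, y2)` instead of the 3D bounding boxes of the method, and the function names `iou` and `nms` and the 0.5 overlap threshold are hypothetical choices.

```python
def iou(a, b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: return indices of the kept boxes, highest score first.

    A box is kept only if its overlap with every already-kept box does
    not exceed iou_threshold (an assumed value, not from the source).
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


# Two heavily overlapping detections of one vehicle plus a separate one.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)
```

Here the second box overlaps the first with an IoU of about 0.68, so it is suppressed, while the third box does not overlap and is kept.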
