One-dimensional convolution acceleration device and method for complex neural network

A neural network acceleration device technology, applied in the field of hardware acceleration design, that addresses problems such as reduced computing performance and unsupported cross-channel convolution calculation, and achieves the effect of reducing utilization rate.

Examples

Embodiment 1

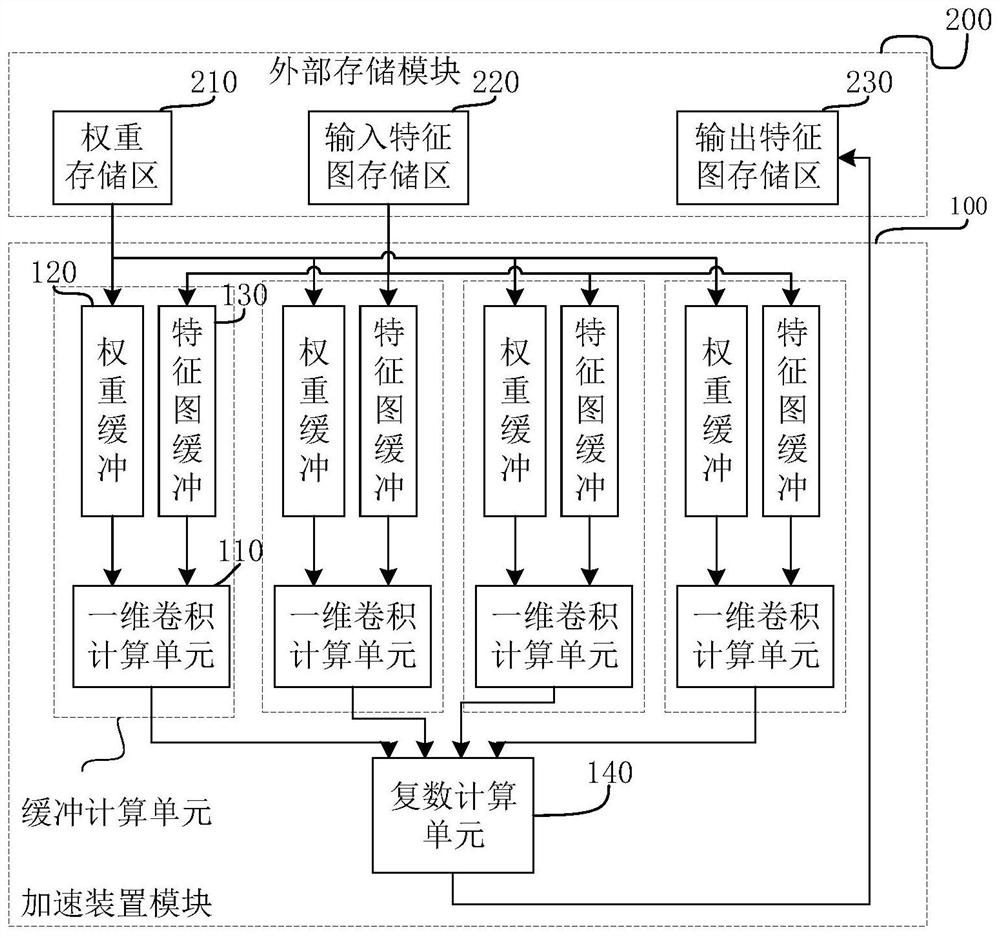

[0053] The first embodiment of the present invention provides a one-dimensional convolution calculation acceleration device for a complex neural network; its structure is shown in Figure 1. The acceleration device 100 is connected to the external storage 200. The external storage 200 contains a weight storage area 210 and an input feature map storage area 220, which hold the inputs to the calculation, and an output feature map storage area 230, which holds the calculation results.

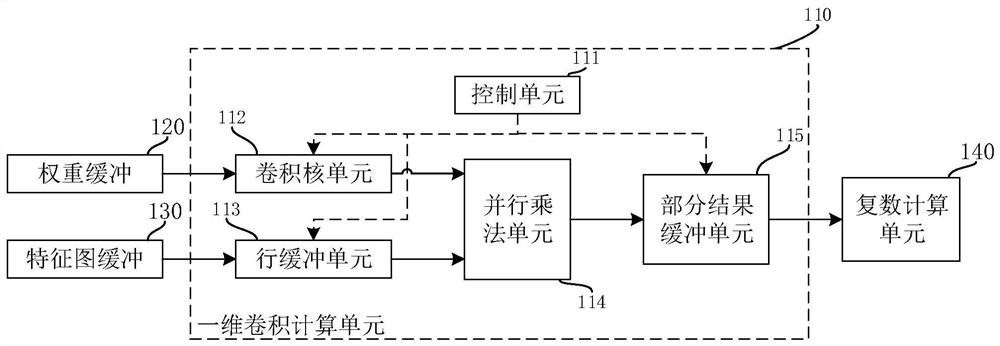

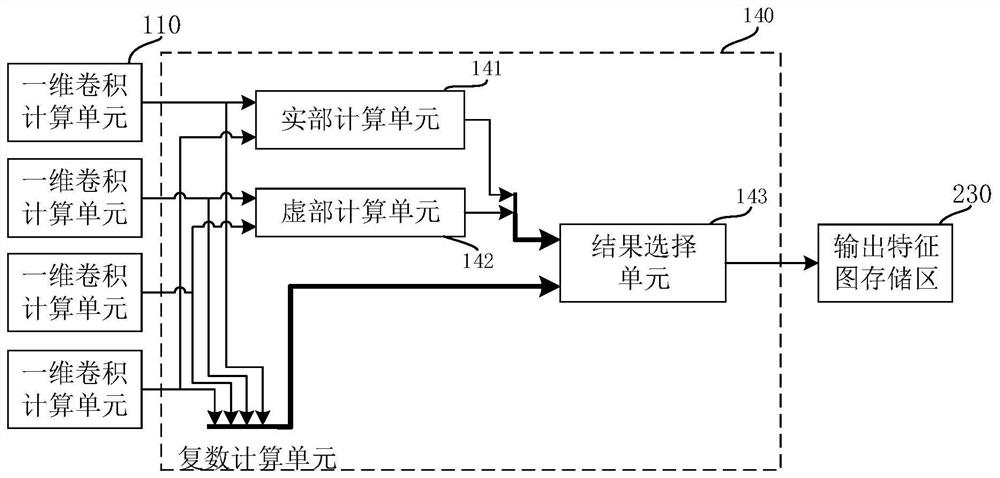

[0054] The acceleration device 100 includes one-dimensional convolution calculation units 110, weight buffers 120, feature map buffers 130, and a complex number calculation unit 140. There are four each of the one-dimensional convolution calculation units 110, the weight buffers 120, and the feature map buffers 130. Each weight buffer 120 is connected to the weight storage area 210 via a bus, and each feature map buffer 130 is connected to the input feature map storage area 220 via a bus. Each weight buf...

Embodiment 2

[0069] The second embodiment of the present invention provides a one-dimensional convolution calculation acceleration method for a complex neural network. As shown in the flow chart of Figure 5, the method includes the following steps:

[0070] S100: The weight data and the input feature map data are transferred from the weight storage area 210 and the input feature map storage area 220 to the weight buffers 120 and the feature map buffers 130, respectively.

[0071] All parameters of the neural network are stored in the weight storage area 210. For a real-valued neural network, the input feature map storage area 220 stores four different input feature maps, and the number of input feature map channels is $C_i$. For a complex neural network, the input feature map storage area 220 stores one input feature map, and the number of input feature map channels is $2C_i$, where the first $C_i$ channels hold the real-part data and the last $C_i$ channels hold the imaginary-part data.
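The channel layout described in [0071] can be illustrated with a minimal Python sketch. It rests on several assumptions that the text above does not confirm: that the weights use the same real-first, imaginary-last channel layout as the feature map, that the four real convolutions correspond to the four partial products of a complex multiplication, and that "convolution" means the sliding dot product (cross-correlation) common in neural networks. The function names `conv1d_real`, `split_complex_channels`, and `complex_conv1d` are hypothetical and chosen only for illustration.

```python
def conv1d_real(x, w):
    """Valid-mode 1-D sliding dot product, summed over channels.

    x: list of C channels, each a list of L numbers.
    w: list of C channels, each a list of K numbers (one output channel).
    """
    length, k = len(x[0]), len(w[0])
    return [
        sum(x[c][n + j] * w[c][j] for c in range(len(x)) for j in range(k))
        for n in range(length - k + 1)
    ]


def split_complex_channels(fmap):
    """Split a 2*C_i-channel map into its real and imaginary halves."""
    c_i = len(fmap) // 2
    return fmap[:c_i], fmap[c_i:]  # first C_i: real part, last C_i: imaginary part


def complex_conv1d(fmap, wmap):
    """Complex 1-D convolution assembled from four real convolutions.

    Assumption: wmap uses the same real-first layout as fmap.
    """
    x_re, x_im = split_complex_channels(fmap)
    w_re, w_im = split_complex_channels(wmap)
    rr = conv1d_real(x_re, w_re)  # real * real
    ii = conv1d_real(x_im, w_im)  # imag * imag
    ri = conv1d_real(x_re, w_im)  # real * imag
    ir = conv1d_real(x_im, w_re)  # imag * real
    # (a + bi)(c + di) = (ac - bd) + (ad + bc)i
    out_re = [a - b for a, b in zip(rr, ii)]
    out_im = [a + b for a, b in zip(ri, ir)]
    return out_re, out_im


# Example with C_i = 2 complex channels, length 4, kernel size 2.
fmap = [[1, 2, 3, 4], [0, 1, 0, -1], [2, 0, 1, 1], [1, 1, -1, 0]]
wmap = [[1, -1], [2, 0], [0, 1], [1, 1]]
out_re, out_im = complex_conv1d(fmap, wmap)
```

Under these assumptions, the four independent calls to `conv1d_real` are the kind of work that four parallel convolution units could share, with the final subtraction and addition left to a separate combining stage. In the real-valued case, the same four calls could instead process four different input feature maps.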

[0072] S200, the one-dimensional con...
