Adversarial sample generation method and device

An iterative adversarial sample generation technique, applied in the computer field, that addresses the problems of weak attacks and blocking and achieves a stronger attack effect.

Embodiment Construction

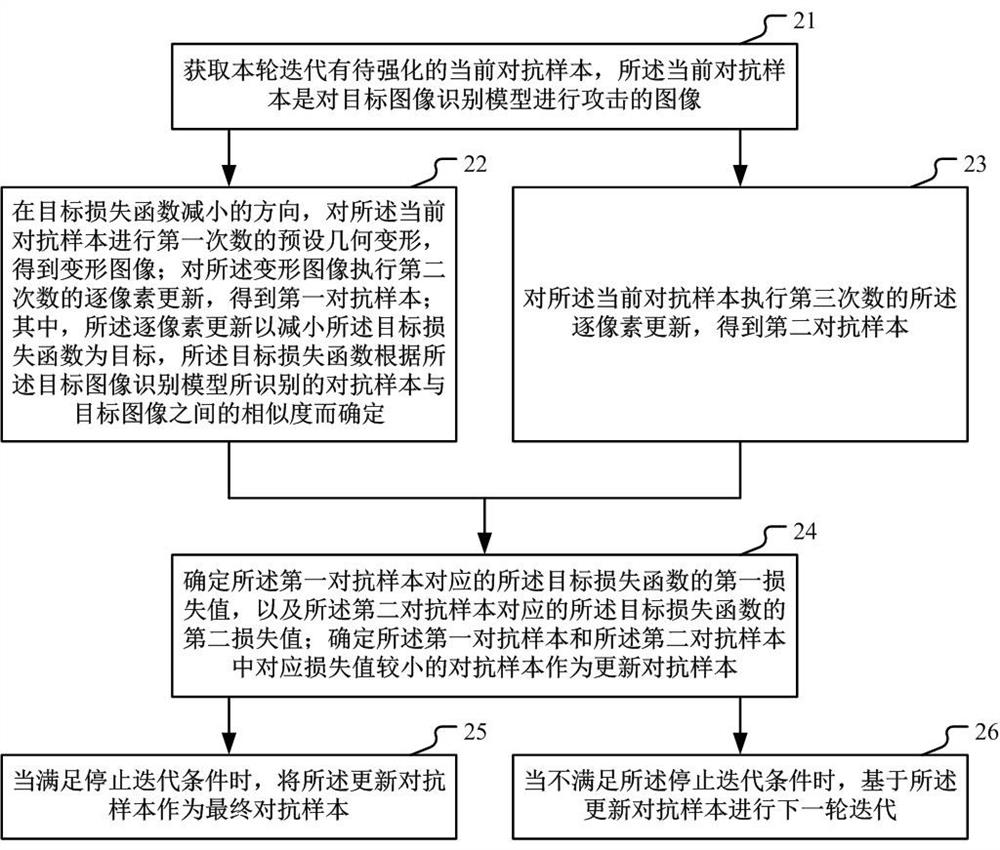

[0051] The solutions provided in this specification will be described below in conjunction with the accompanying drawings.

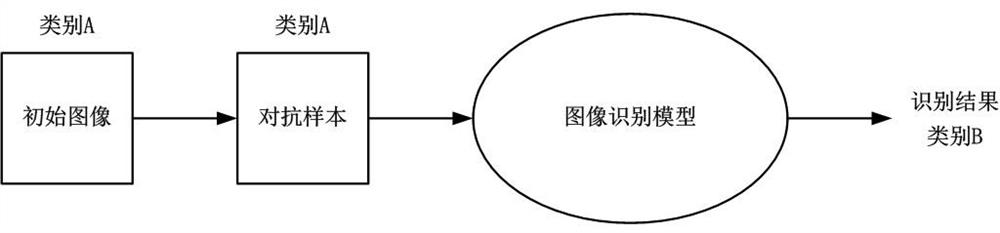

[0052] Figure 1 is a schematic diagram of an implementation scenario of an embodiment disclosed in this specification. This implementation scenario involves the generation of adversarial samples. Referring to Figure 1, the image recognition model is used to classify an input image. The original image belongs to category A. Adding interference to the original image yields an adversarial sample. Because the interference is small and imperceptible to the human eye, the adversarial sample still appears to a human observer to belong to category A; however, when the adversarial sample is input into the image recognition model, the model's recognition result is category B. This kind of attack method that deliberately adds interference to the input sample, causing the model to give a wrong output with a hi...
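The scenario in [0052] can be illustrated with a small toy sketch. Everything in it is an assumption made for illustration: the two-class linear model `W`, the helper names `predict` and `sign_perturbation`, and the single-step sign-based perturbation. The excerpt does not show the specification's own iterative procedure, so this is not that method; it only shows how a small per-pixel change can move an input from category A to category B.

```python
import numpy as np

def predict(W, x):
    """Return the index of the highest-scoring class of a linear model."""
    return int(np.argmax(W @ x))

def sign_perturbation(W, x, source, target, eps):
    """Shift every pixel by at most eps toward the target class (hypothetical helper)."""
    # Gradient of (target score - source score) with respect to x for a linear model.
    grad = W[target] - W[source]
    return x + eps * np.sign(grad)

rng = np.random.default_rng(0)
dim = 784                                  # e.g. a flattened 28x28 image (assumed size)
W = rng.normal(size=(2, dim))              # toy stand-in for an image recognition model
x = rng.uniform(0.2, 0.8, size=dim)        # toy stand-in for the original image

A = predict(W, x)                          # category assigned to the original image
B = 1 - A                                  # the other category

# Smallest per-pixel step (plus 1%) that flips the decision of this linear model.
diff = W[A] - W[B]
margin = diff @ x                          # how strongly the model prefers A over B
eps = 1.01 * margin / np.abs(diff).sum()

x_adv = sign_perturbation(W, x, source=A, target=B, eps=eps)

print("per-pixel change:", eps)
print("original category:", predict(W, x))
print("adversarial category:", predict(W, x_adv))
```

In this sketch every pixel moves by the same small amount `eps`, yet the predicted category changes, which mirrors the A-to-B behaviour described above. A real image model is not linear, which is one reason practical attacks typically repeat such steps iteratively.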
