Intelligent vehicle lane line semantic segmentation method and system

A lane line semantic segmentation method for intelligent vehicles, applied in the field of computer vision. It addresses the poor robustness, low lane line detection accuracy, and misclassified image pixels of existing semantic segmentation techniques, with the effect of improving accuracy.

Examples

Embodiment 1

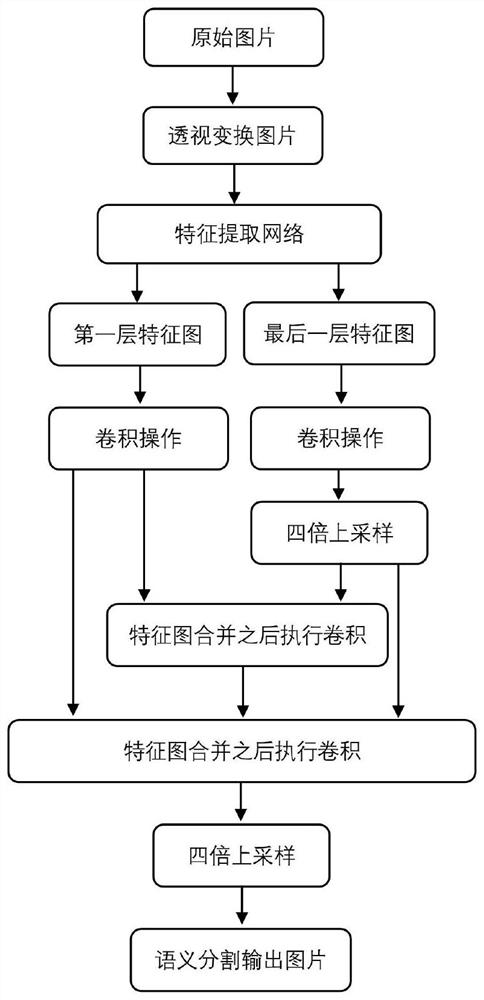

[0044] A method for semantic segmentation of intelligent vehicle lane lines provided according to the present invention comprises:

[0045] Step M1: Select a frame from the video collected by the intelligent vehicle while driving as the input image, and apply a perspective transformation to the input image to obtain a road surface image at a suitable viewing angle;
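
The perspective transformation in Step M1 is commonly expressed as a 3x3 homography. The source does not say how the matrix is obtained, so the following is only a minimal sketch under the assumption that four road-region corners in the camera image are matched to the corners of a rectangle. The coordinates `SRC` and `DST` and all function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def solve_linear(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point configuration")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def homography_from_points(src: Sequence[Point],
                           dst: Sequence[Point]) -> List[List[float]]:
    """Return the 3x3 matrix H (with H[2][2] = 1) mapping src[i] to dst[i]."""
    a, b = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h1*x + h2*y + h3) / (h7*x + h8*y + 1), and likewise for v
        a.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        b.append(u)
        a.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        b.append(v)
    h = solve_linear(a, b)
    return [h[0:3], h[3:6], [h[6], h[7], 1.0]]


def apply_homography(h: List[List[float]], p: Point) -> Point:
    """Map one point through H, including the projective division."""
    x, y = p
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return ((h[0][0] * x + h[0][1] * y + h[0][2]) / w,
            (h[1][0] * x + h[1][1] * y + h[1][2]) / w)


# Hypothetical example: a road trapezoid in the camera image (SRC) is
# mapped onto an upright rectangle (DST), i.e. a top-down road view.
SRC = [(2.0, 3.0), (4.0, 3.0), (6.0, 5.0), (0.0, 5.0)]
DST = [(0.0, 0.0), (4.0, 0.0), (4.0, 8.0), (0.0, 8.0)]
H = homography_from_points(SRC, DST)
```

With these points, the two slanted edges of the trapezoid become the parallel vertical sides of the rectangle, which is the effect a "suitable angle" road view needs. In practice a vision library would normally supply both the estimation and the warp.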

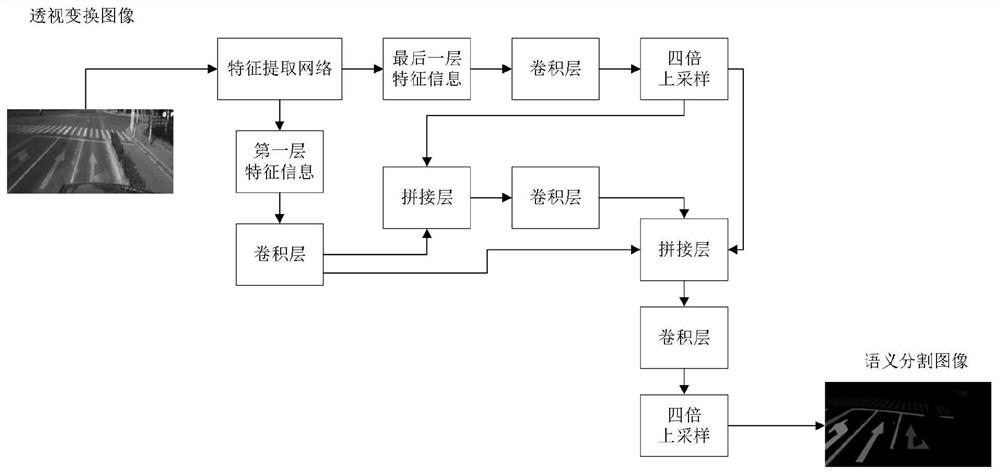

[0046] Step M2: Input the perspective-transformed road surface image into the feature extraction network, and obtain the feature maps of the first layer and the last layer of the feature extraction network;

[0047] Step M3: Perform a 4x upsampling operation on the last-layer feature map, merge it with the first-layer feature map, and apply a convolution operation to obtain the convolved image information;

[0048] Step M4: Perform a 4x upsampling operation on the feat...
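
The fusion described in Step M3 can be illustrated with a small, framework-free sketch. The source does not state the interpolation mode, how the two maps are merged, or the kernel size. This sketch therefore assumes nearest-neighbour upsampling, channel-wise concatenation, and a 1x1 convolution, and every function name is hypothetical. A real implementation would use a deep learning framework and learned weights.

```python
from typing import List

FeatureMap = List[List[List[float]]]  # indexed as [channel][row][col]


def upsample_nearest(fmap: FeatureMap, factor: int = 4) -> FeatureMap:
    """Repeat every pixel `factor` times along both spatial axes."""
    return [
        [[row[c // factor] for c in range(len(row) * factor)]
         for row in channel for _ in range(factor)]
        for channel in fmap
    ]


def concat_channels(a: FeatureMap, b: FeatureMap) -> FeatureMap:
    """Assumed merge: stack the channels of two equally sized maps."""
    if len(a[0]) != len(b[0]) or len(a[0][0]) != len(b[0][0]):
        raise ValueError("spatial sizes must match before merging")
    return a + b


def conv1x1(fmap: FeatureMap, weights: List[List[float]],
            bias: List[float]) -> FeatureMap:
    """Assumed 1x1 convolution; weights are indexed [out_ch][in_ch]."""
    height, width = len(fmap[0]), len(fmap[0][0])
    return [
        [[bias[o] + sum(weights[o][i] * fmap[i][r][c]
                        for i in range(len(fmap)))
          for c in range(width)]
         for r in range(height)]
        for o in range(len(weights))
    ]


def fuse_first_and_last(first: FeatureMap, last: FeatureMap,
                        weights: List[List[float]],
                        bias: List[float]) -> FeatureMap:
    """4x upsample the last-layer map, merge with the first layer, convolve."""
    merged = concat_channels(upsample_nearest(last, 4), first)
    return conv1x1(merged, weights, bias)
```

The 4x factor only works if the first-layer map is exactly four times the spatial size of the last-layer map, which is why `concat_channels` checks the shapes before merging.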

Embodiment 2

[0069] Embodiment 2 is a variation of Embodiment 1.

[0070] The invention provides a method for semantic segmentation of lane lines for intelligent vehicles that makes full use of the importance and redundancy of the feature information in the first and last layers of the feature extraction network to improve the accuracy of lane line semantic segmentation. This embodiment takes the semantic segmentation of a single image as an example. As shown in Figures 1-4, the semantic segmentation method comprises the following steps:

[0071] Step 1: Take a frame from the video collected while the intelligent vehicle is driving as the original image, and apply a perspective transformation to it to obtain an input image that focuses on road surface information. The image is resized as part of the perspective transformation of the original image.

[0072] Step 2: Input the perspective-transformed image obtained in Step 1 into the feature extraction...
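
Step 1 above resizes the image as part of the perspective transformation. One common way to do both at once is inverse mapping: each pixel of an output grid of the desired size looks up its source location through the inverse homography. The sketch below assumes a known 3x3 matrix, a single-channel image stored as nested lists, and nearest-neighbour sampling. None of these details come from the source, and the function names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]
Image = List[List[float]]  # indexed as [row][col], single channel


def invert_3x3(m: Matrix) -> Matrix:
    """Invert a 3x3 matrix via its adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("homography is not invertible")
    return [
        [(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
        [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
        [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det],
    ]


def warp_perspective(image: Image, h: Matrix, out_w: int, out_h: int,
                     fill: float = 0.0) -> Image:
    """Warp `image` by H into an out_h x out_w grid (inverse mapping)."""
    inv = invert_3x3(h)
    src_h, src_w = len(image), len(image[0])
    out = [[fill] * out_w for _ in range(out_h)]
    for v in range(out_h):
        for u in range(out_w):
            # Map the output pixel (u, v) back into the source image.
            w = inv[2][0] * u + inv[2][1] * v + inv[2][2]
            if abs(w) < 1e-12:
                continue  # point at infinity, keep the fill value
            x = (inv[0][0] * u + inv[0][1] * v + inv[0][2]) / w
            y = (inv[1][0] * u + inv[1][1] * v + inv[1][2]) / w
            xi, yi = math.floor(x + 0.5), math.floor(y + 0.5)
            if 0 <= xi < src_w and 0 <= yi < src_h:
                out[v][u] = image[yi][xi]
    return out
```

Because the output size is a parameter of the warp, no separate resize pass is needed. Pixels that map outside the source image keep the fill value.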
