126 results about "Decoder architecture" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

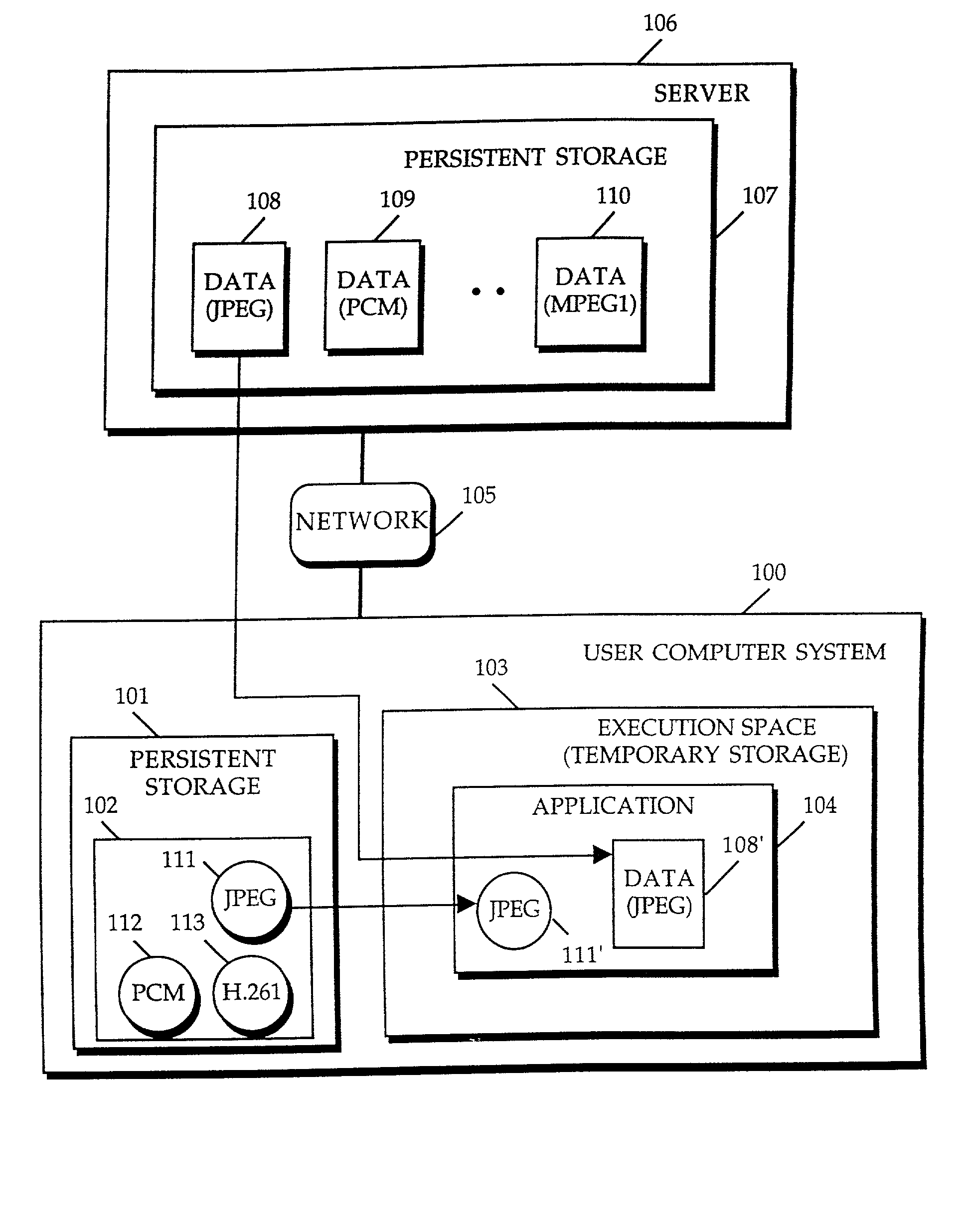

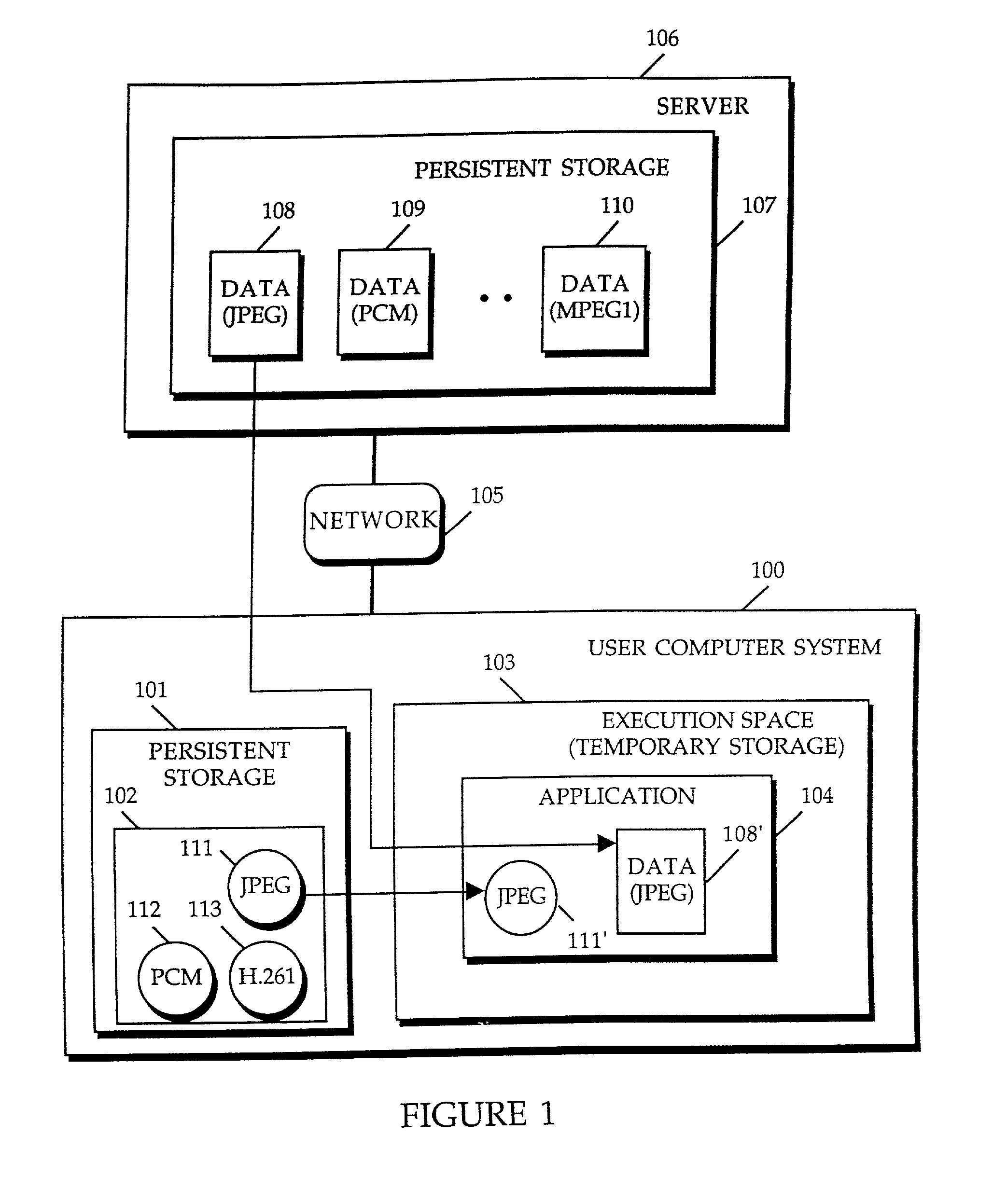

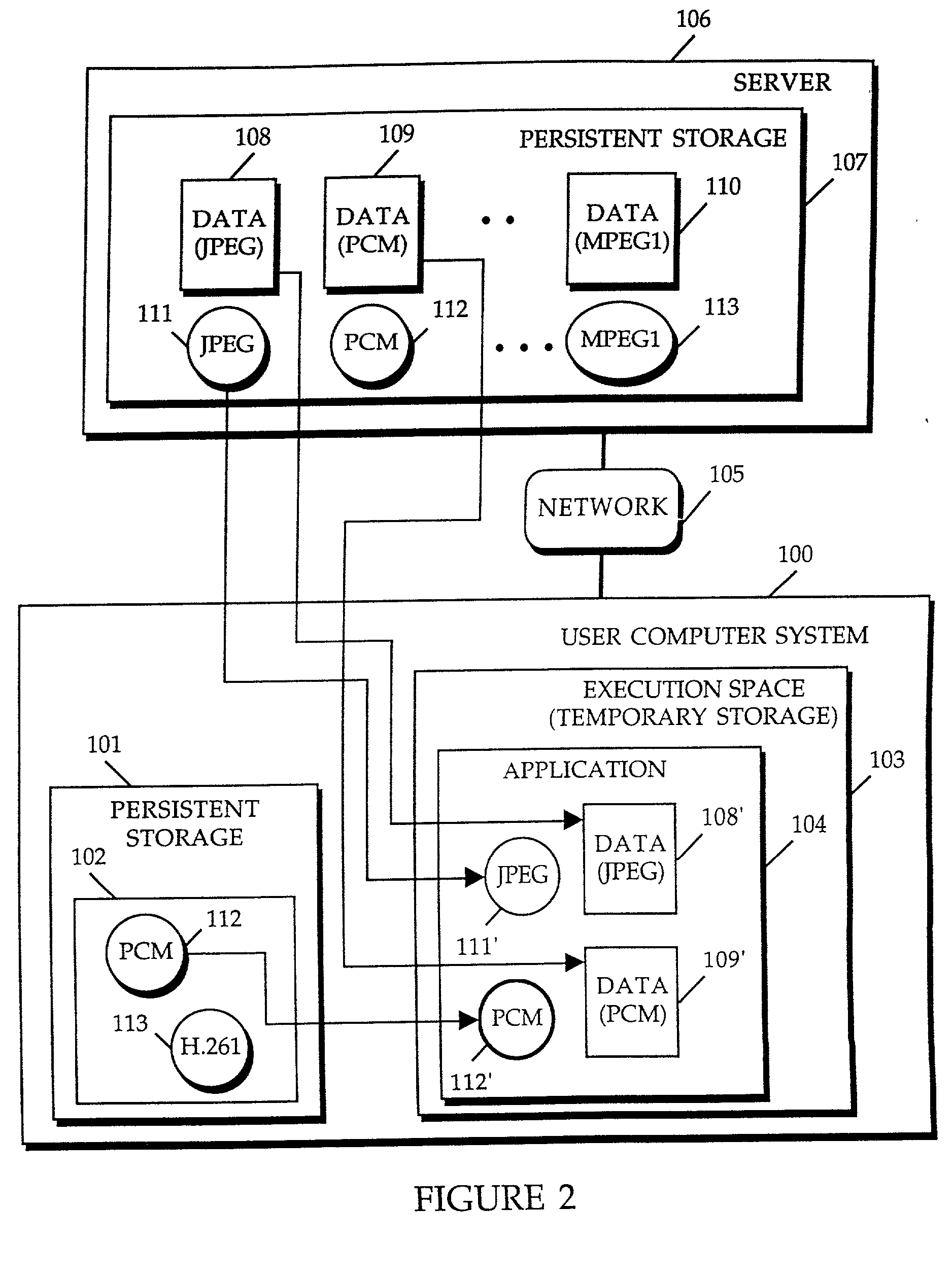

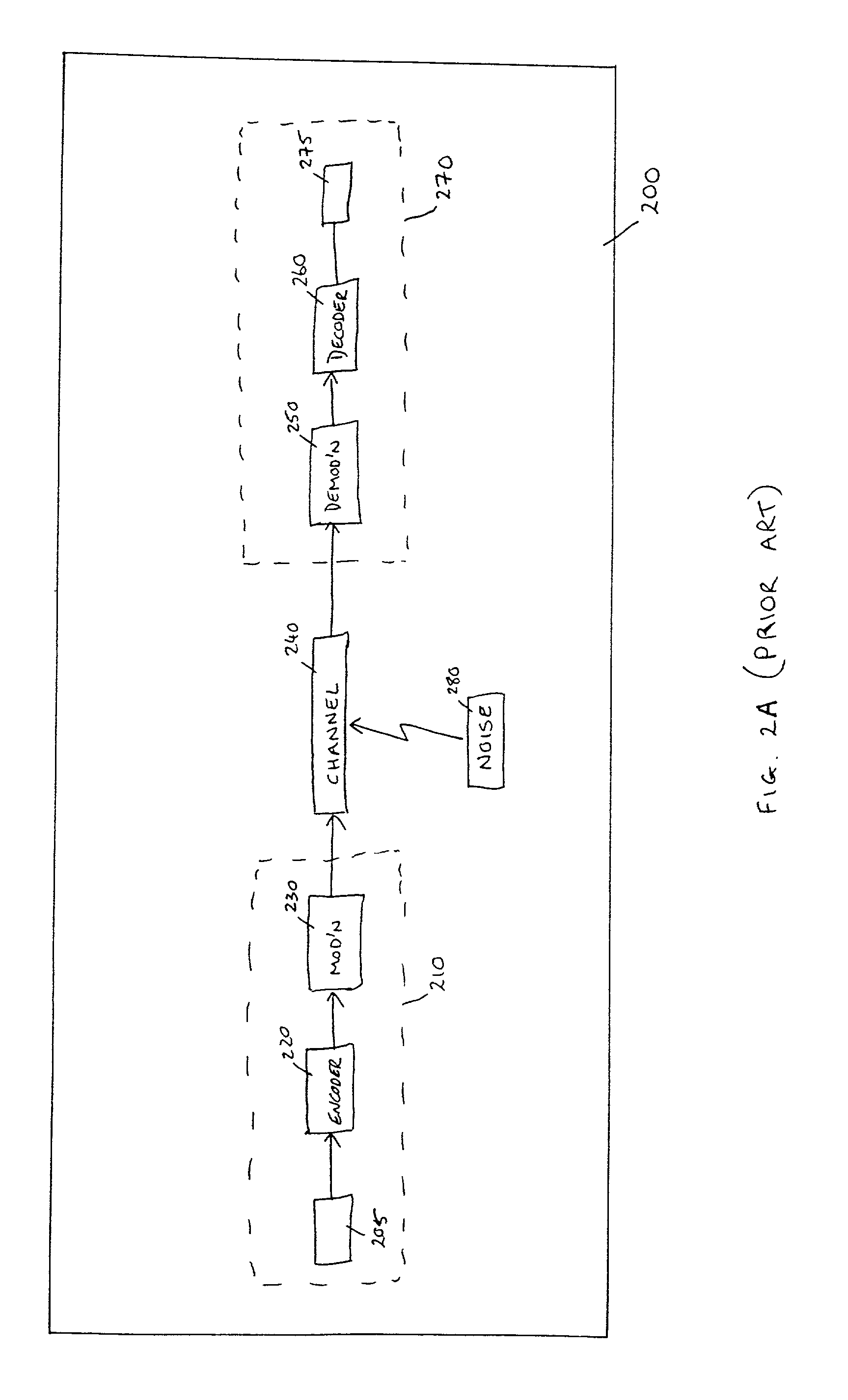

Method and apparatus for providing plug in media decoders

Inactive · US6216152B1 · Data processing applications; Interprogram communication; Object based; Application software

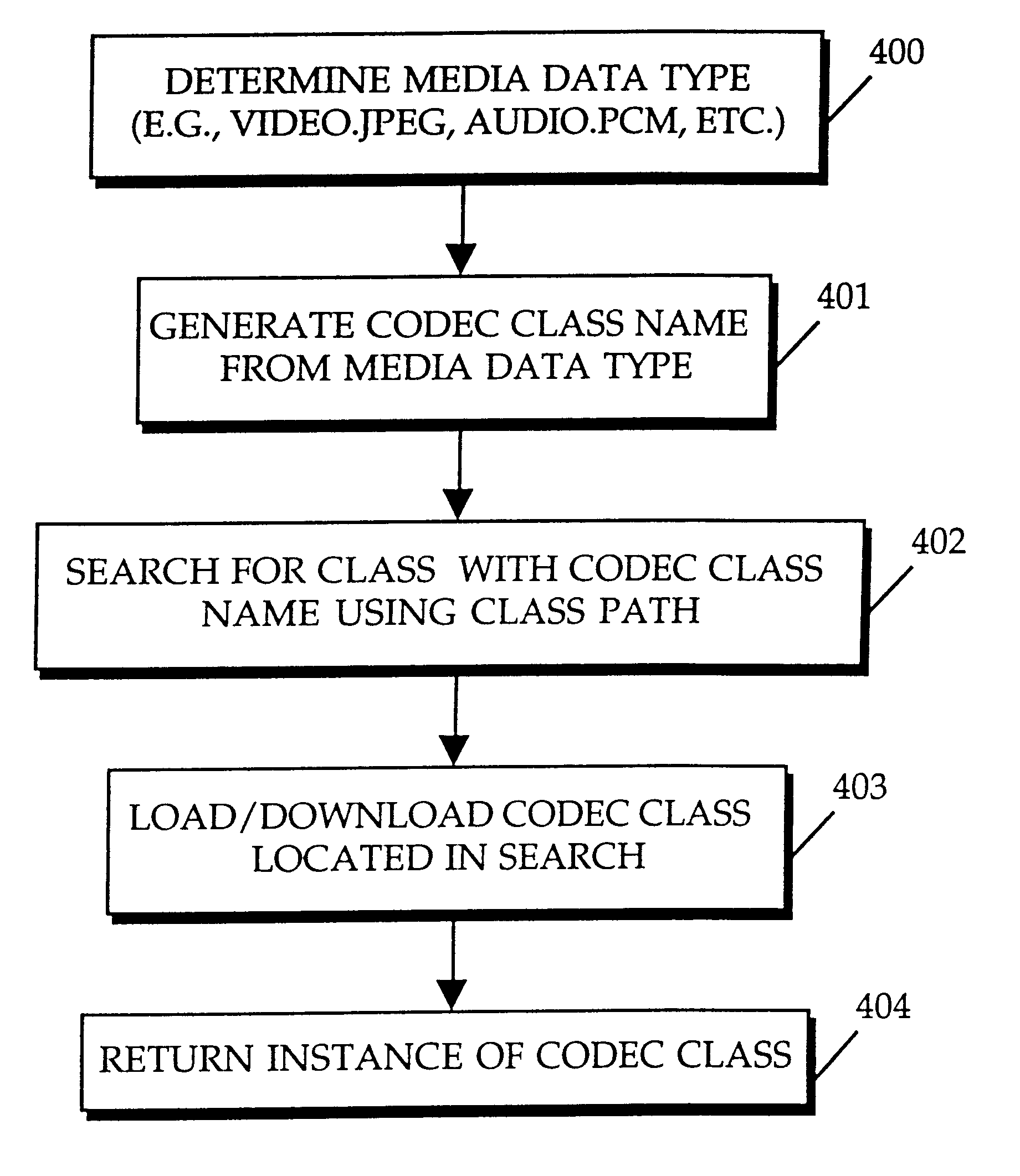

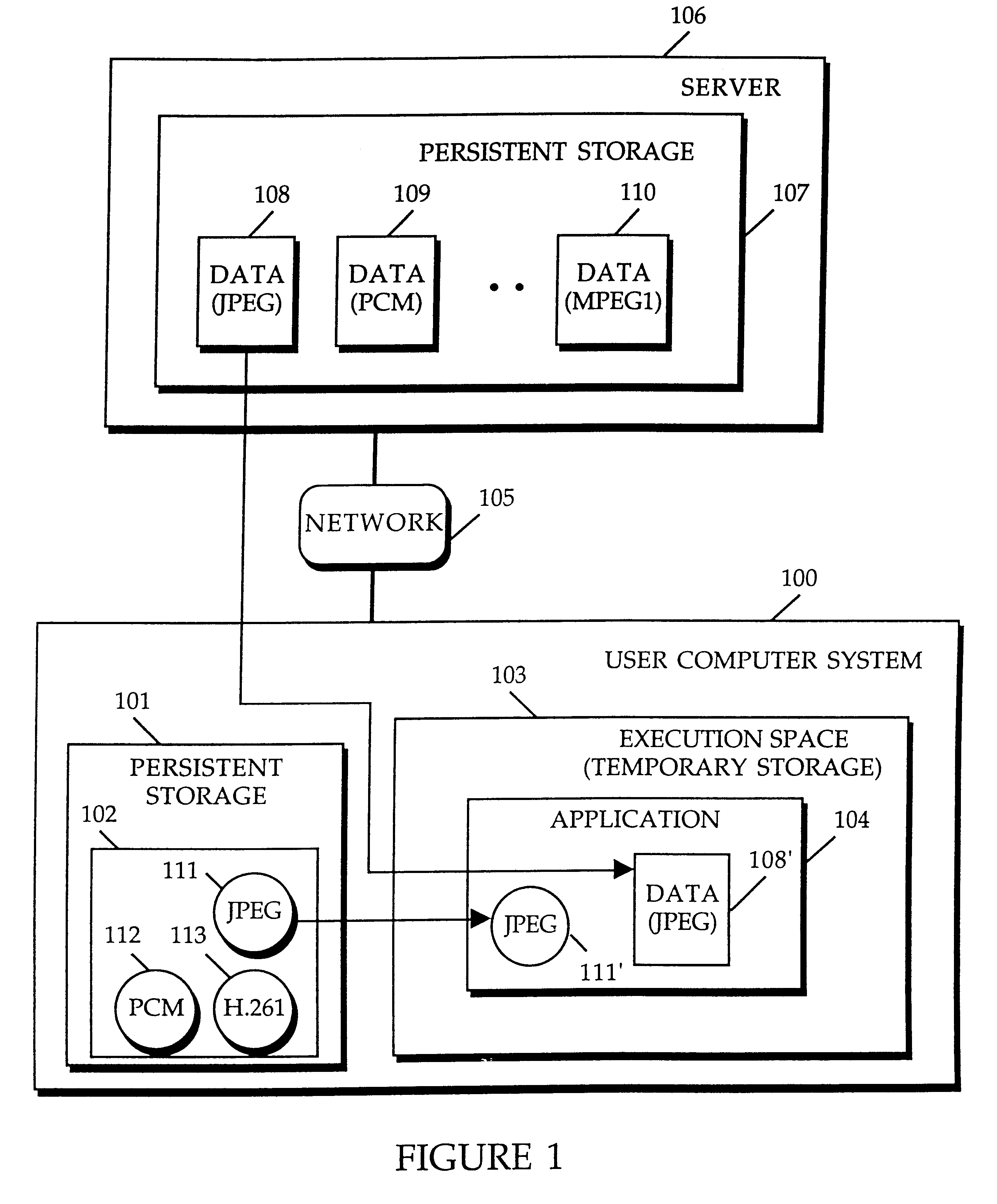

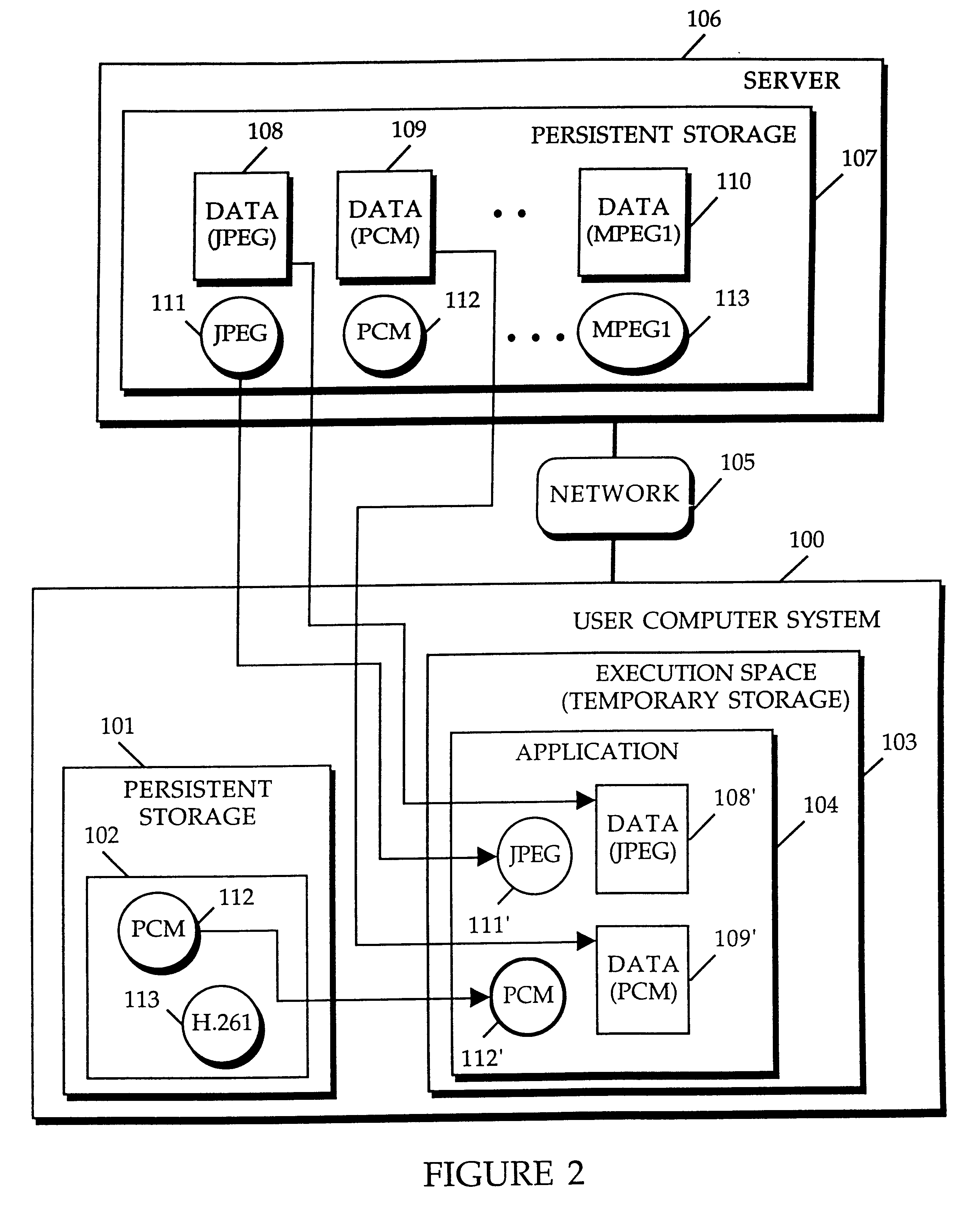

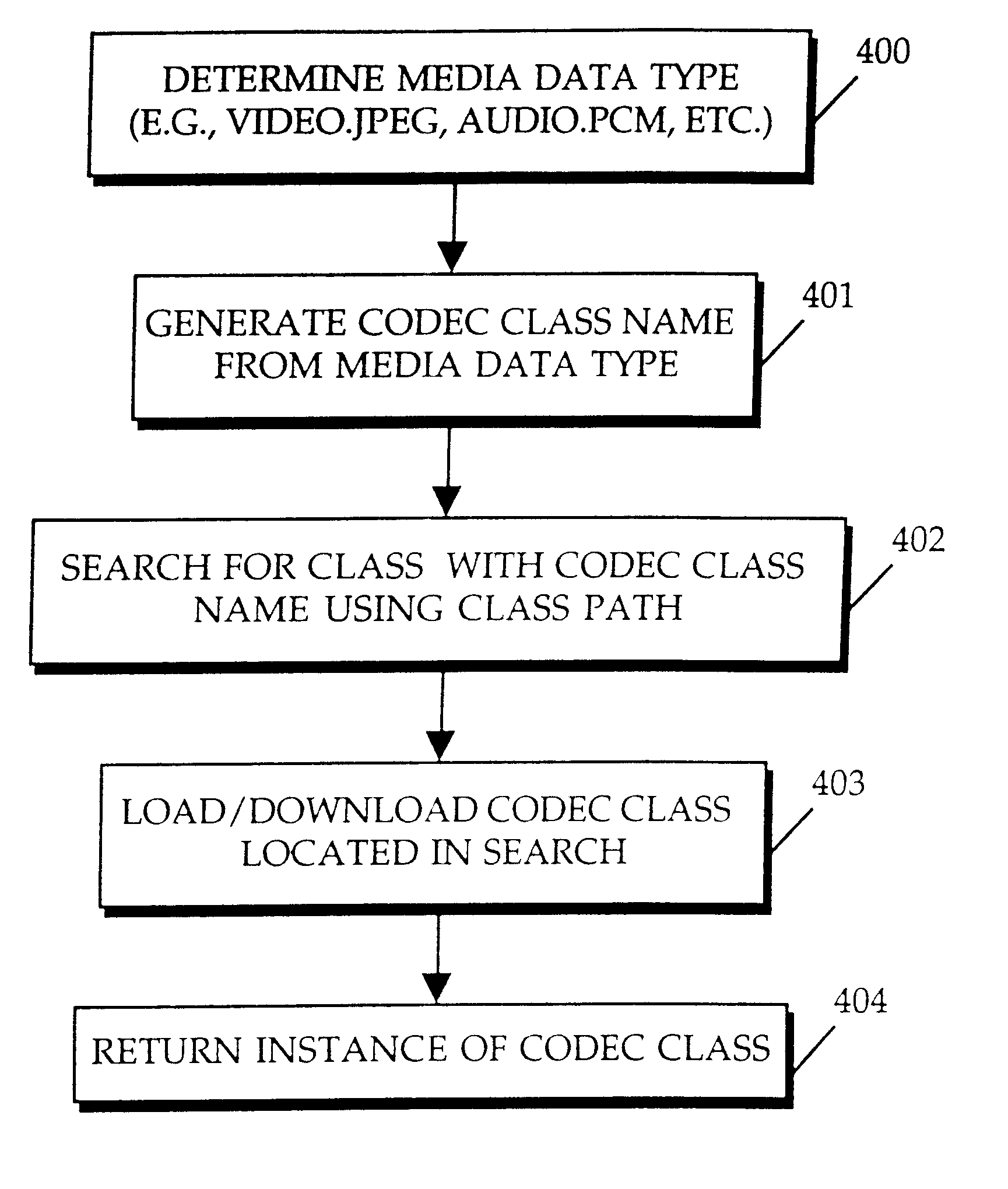

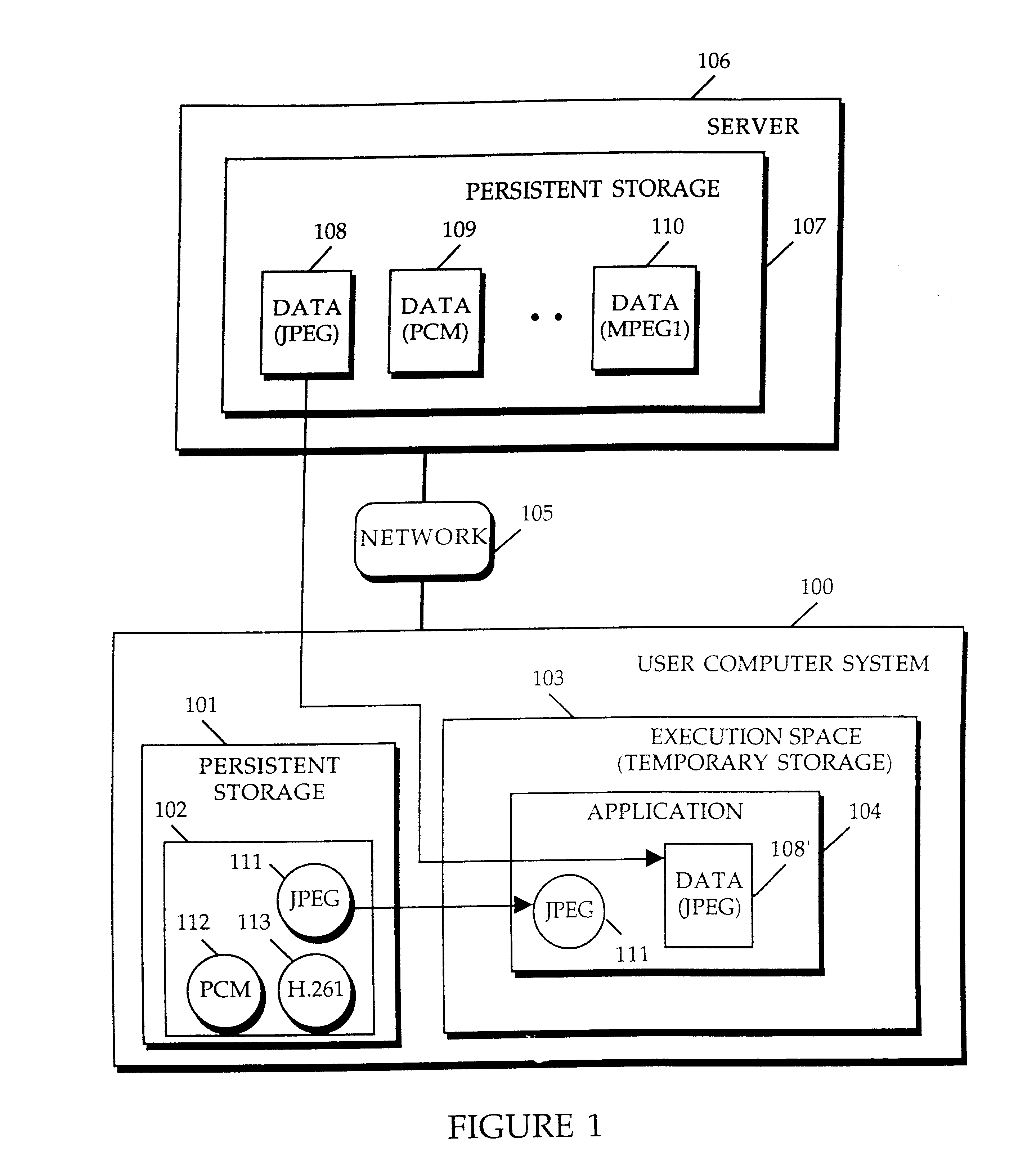

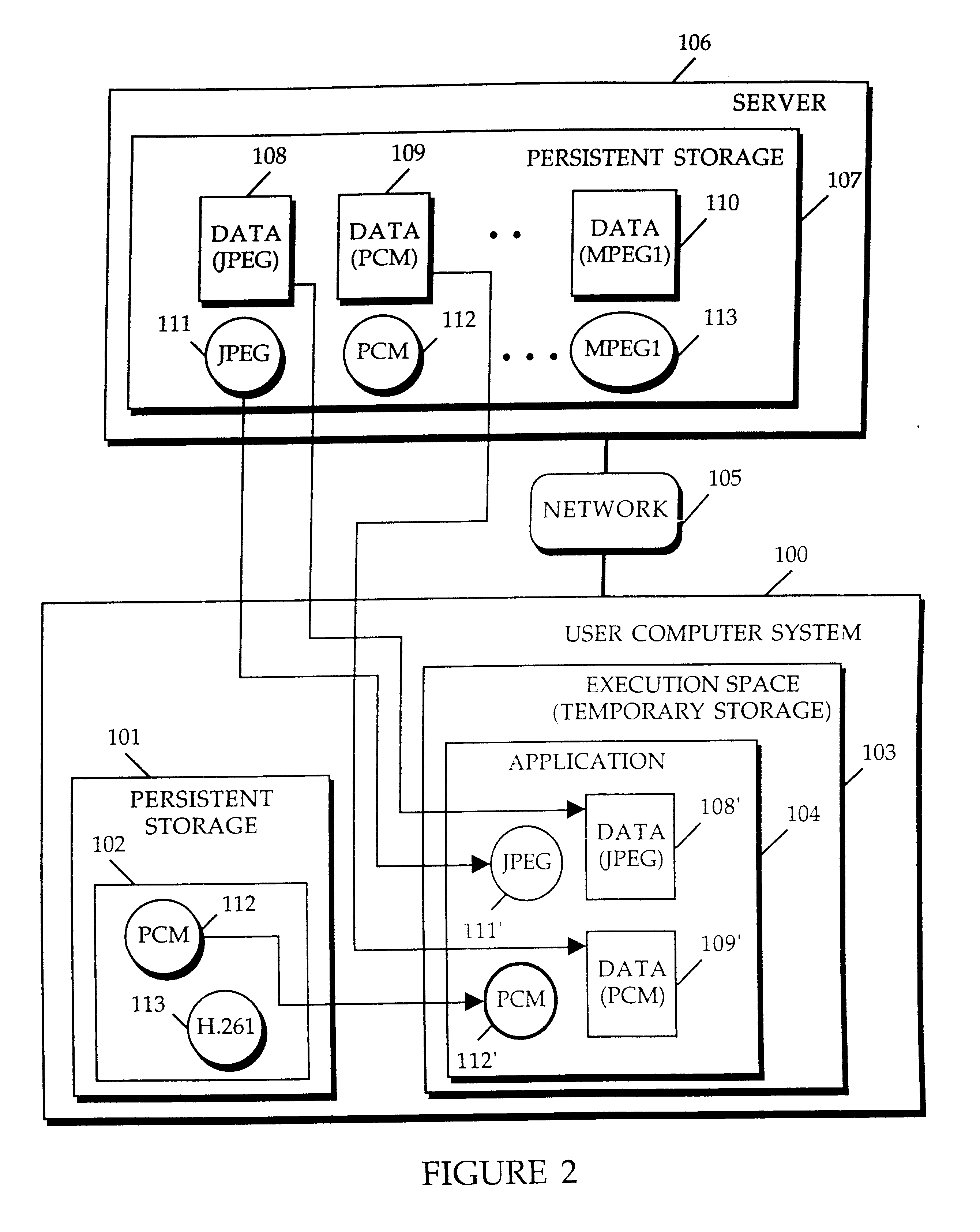

A method and apparatus for providing plug-in media decoders. Embodiments provide a "plug-in" decoder architecture that allows software decoders to be transparently downloaded, along with media data. User applications are able to support new media types as long as the corresponding plug-in decoder is available with the media data. Persistent storage requirements are decreased because the downloaded decoder is transient, existing in application memory for the duration of execution of the user application. The architecture also supports use of plug-in decoders already installed in the user computer. One embodiment is implemented with object-based class files executed in a virtual machine to form a media application. A media data type is determined from incoming media data, and used to generate a class name for a corresponding codec (coder-decoder) object. A class path vector is searched, including the source location of the incoming media data, to determine the location of the codec class file for the given class name. When the desired codec class file is located, the virtual machine's class loader loads the class file for integration into the media application. If the codec class file is located across the network at the source location of the media data, the class loader downloads the codec class file from the network. Once the class file is loaded into the virtual machine, an instance of the codec class is created within the media application to decode/decompress the media data as appropriate for the media data type.

Owner:ORACLE INT CORP
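
The lookup chain in the abstract above (media type, then codec class name, then a search of a class path vector that includes the media source) can be sketched in Python. This is a hypothetical analogue, not the patented Java implementation: the `<Type><Subtype>Codec` naming scheme, the `ClassPathEntry` type, and modeling each location as an in-memory table of factories are all assumptions made for illustration.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class ClassPathEntry:
    """One location on a hypothetical class path vector.

    A real VM would read class files from disk or download them over the
    network; here each location is an in-memory map of class name -> factory.
    """
    location: str
    classes: Dict[str, Callable[[], object]] = field(default_factory=dict)


def codec_class_name(media_type: str) -> str:
    """Derive a codec class name from a MIME-style media type.

    Assumed naming scheme, e.g. "video/mpeg" -> "VideoMpegCodec".
    """
    parts = re.split(r"[^A-Za-z0-9]+", media_type)
    return "".join(p.capitalize() for p in parts if p) + "Codec"


def load_codec(media_type: str, class_path: List[ClassPathEntry],
               source: Optional[ClassPathEntry] = None) -> object:
    """Search the class path, then the media source, and instantiate the codec."""
    name = codec_class_name(media_type)
    search = list(class_path) + ([source] if source is not None else [])
    for entry in search:
        factory = entry.classes.get(name)
        if factory is not None:
            # Analogue of "load the class, then create an instance" in the VM.
            return factory()
    raise LookupError(f"no codec class {name!r} found for {media_type!r}")
```

Searching installed locations before the media source is a choice made for this sketch; the abstract only says the source location is part of the search. In Java, the equivalent step would more naturally go through `java.net.URLClassLoader` with the class path URLs.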

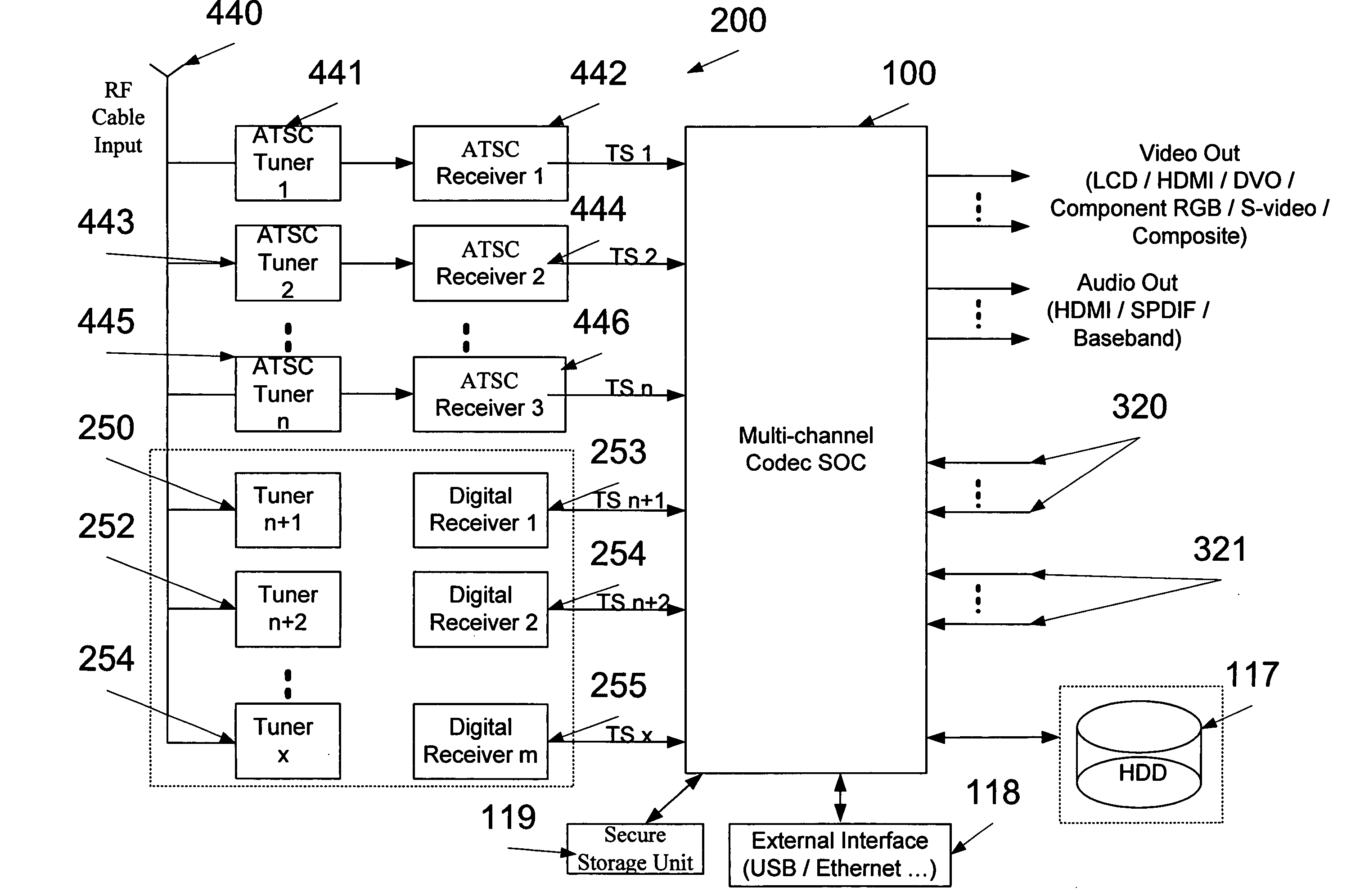

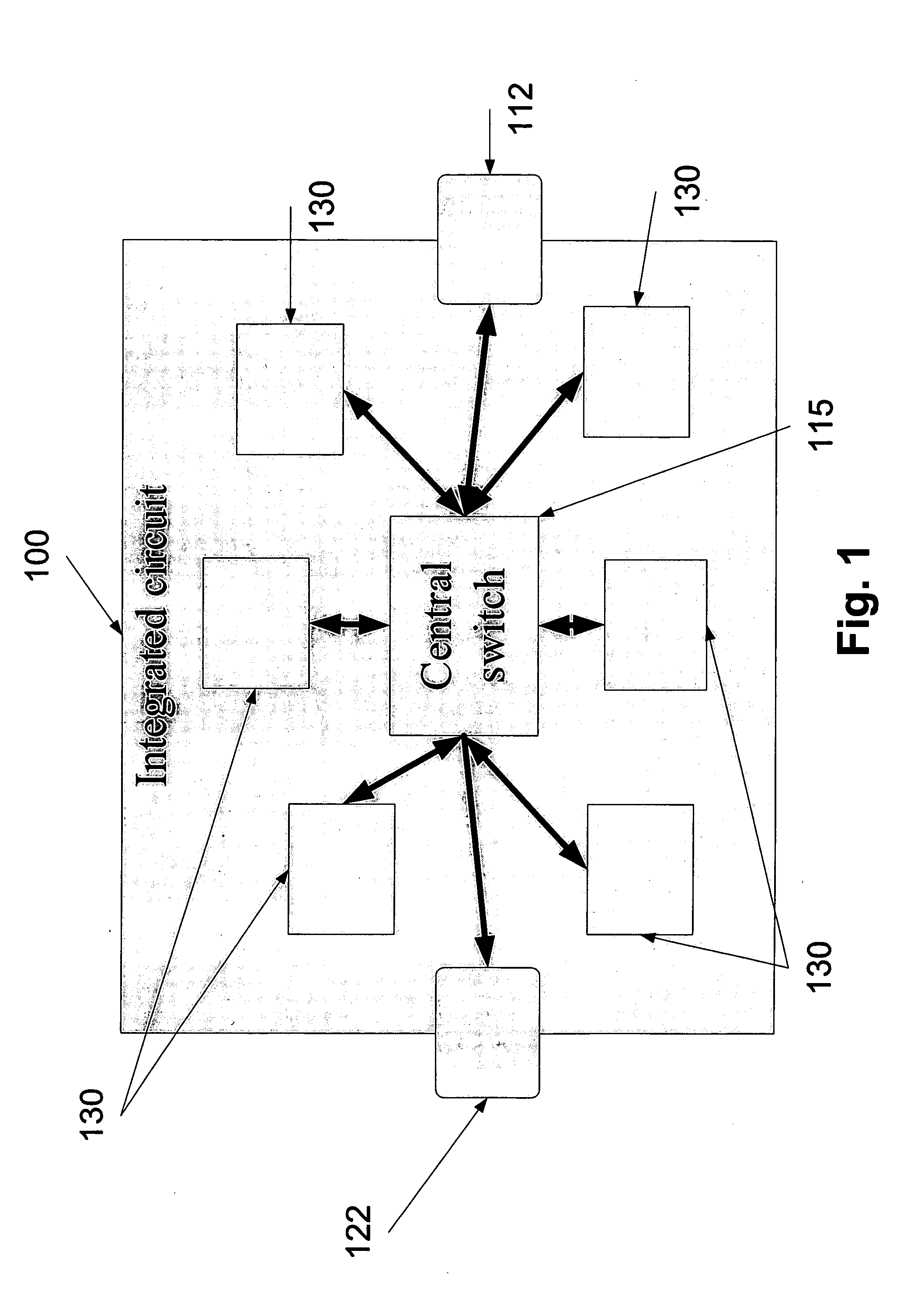

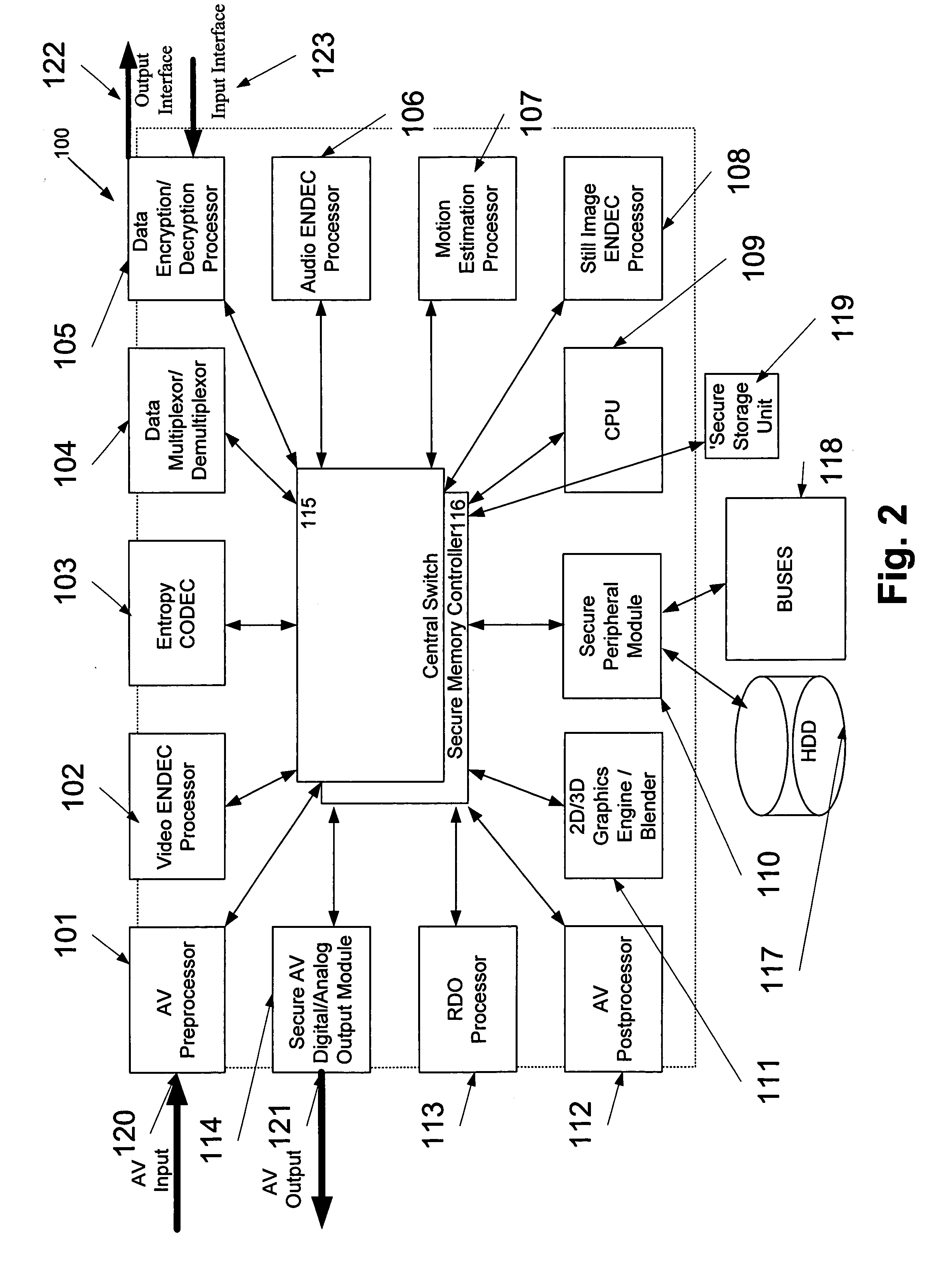

Integrated circuit, an encoder/decoder architecture, and a method for processing a media stream

Inactive · US20080120676A1 · Television system details; Picture reproducers using cathode ray tubes; Memory controller; Embedded system

An integrated circuit for pre-processing, encoding, decoding, transcoding, indexing, blending, post-processing and display of media streams, in accordance with a variety of compression algorithms, DRM schemes, and related industry standards and recommendations such as OCAP, ISMA, DLNA and MPAA. The integrated circuit comprises an input interface configured to receive the media streams from content sources, a plurality of processing units, a system CPU, and a switch and memory controller that is electronically connected to the input interface and directly connected to each of the processing units. The integrated circuit further comprises an output interface, operatively connected to the switch, that outputs the simultaneously processed media streams.

Owner:TESSERA INC

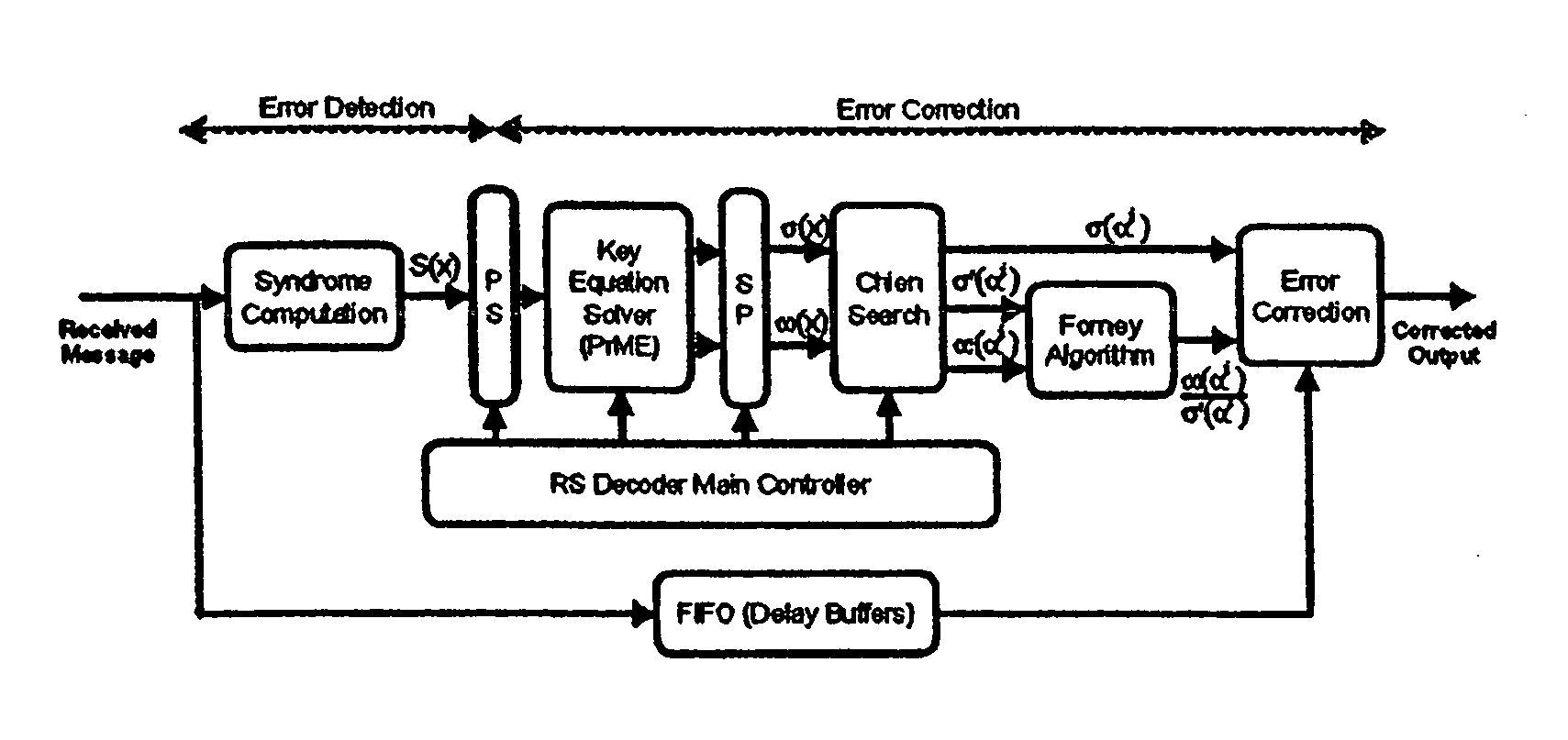

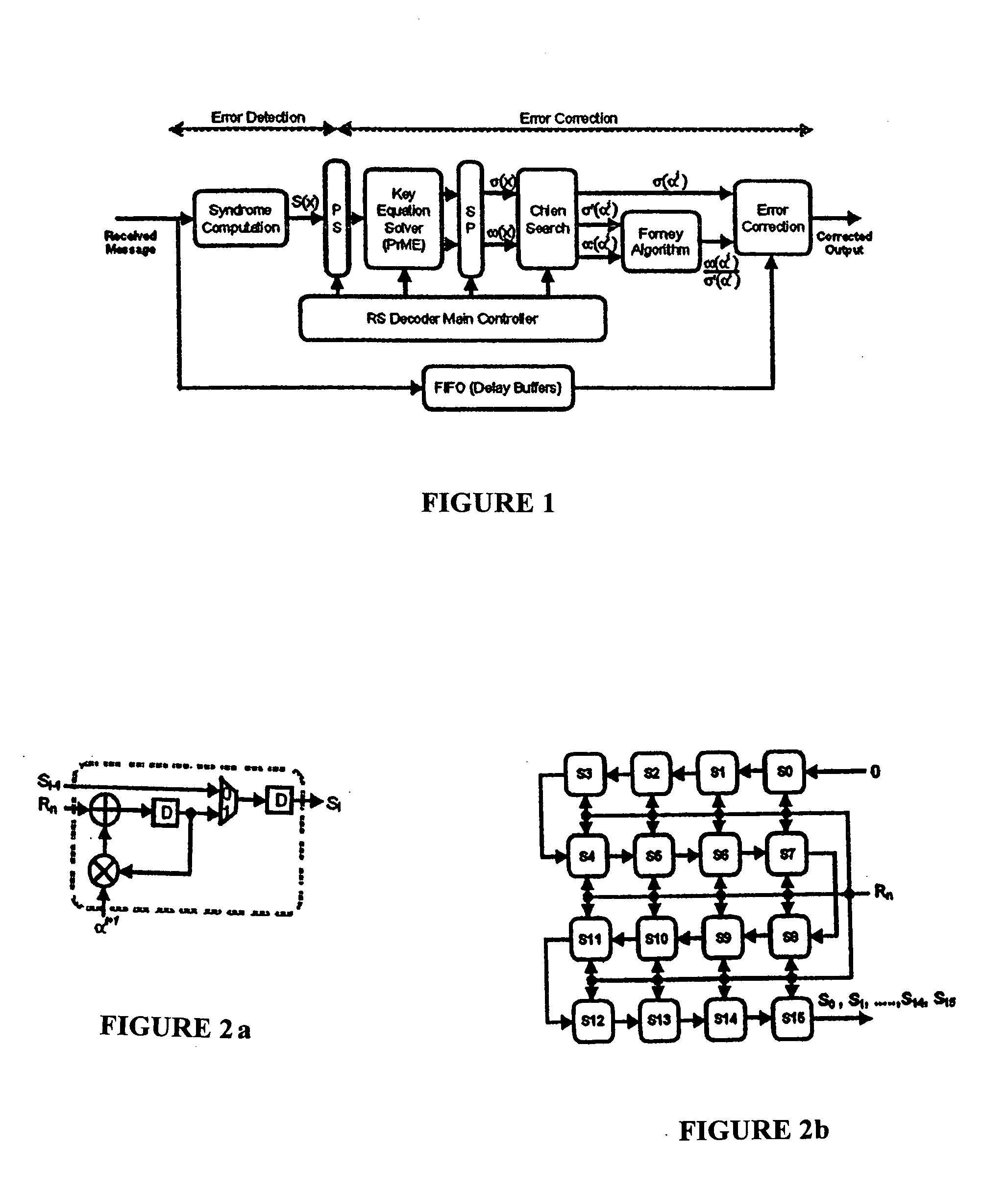

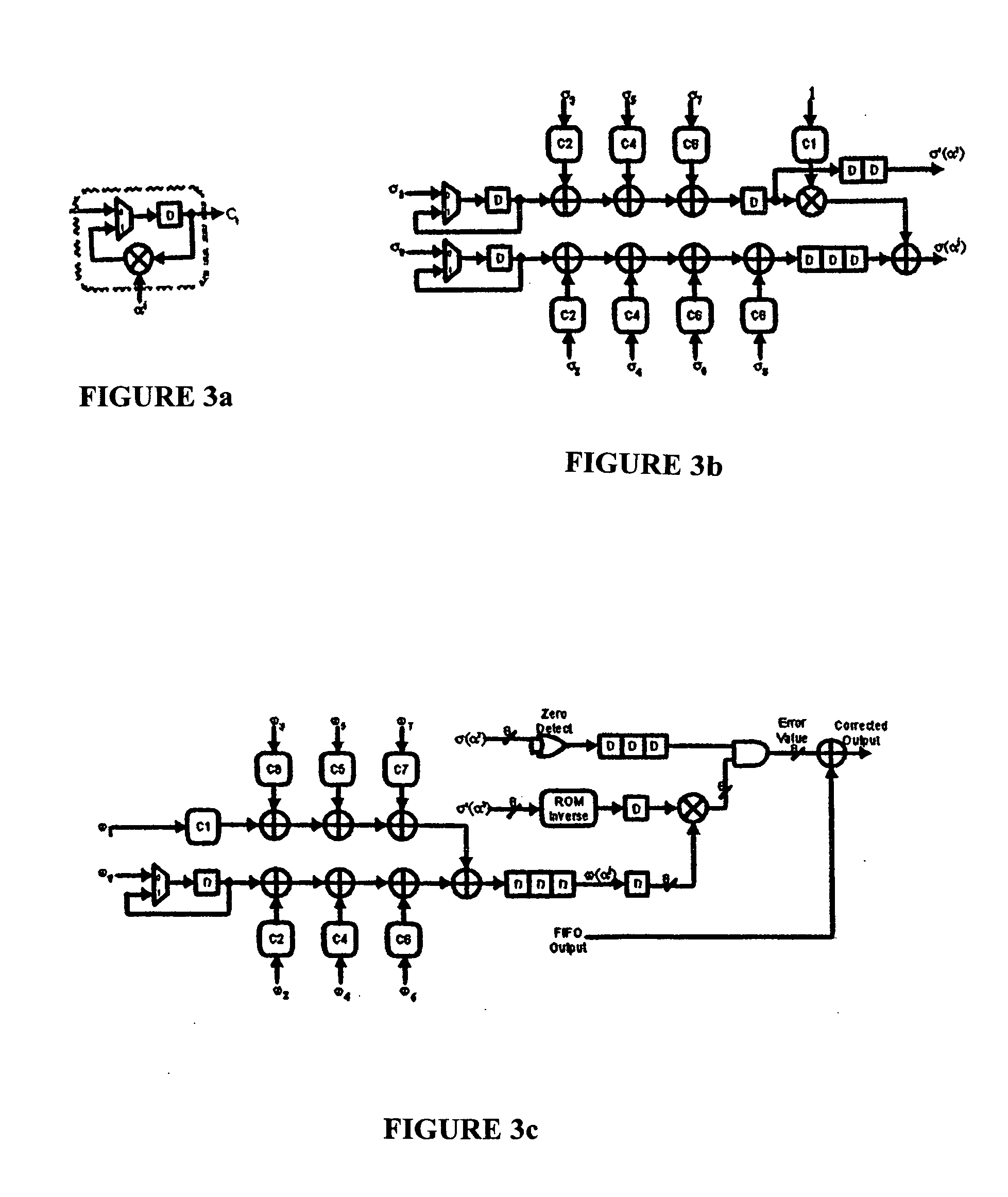

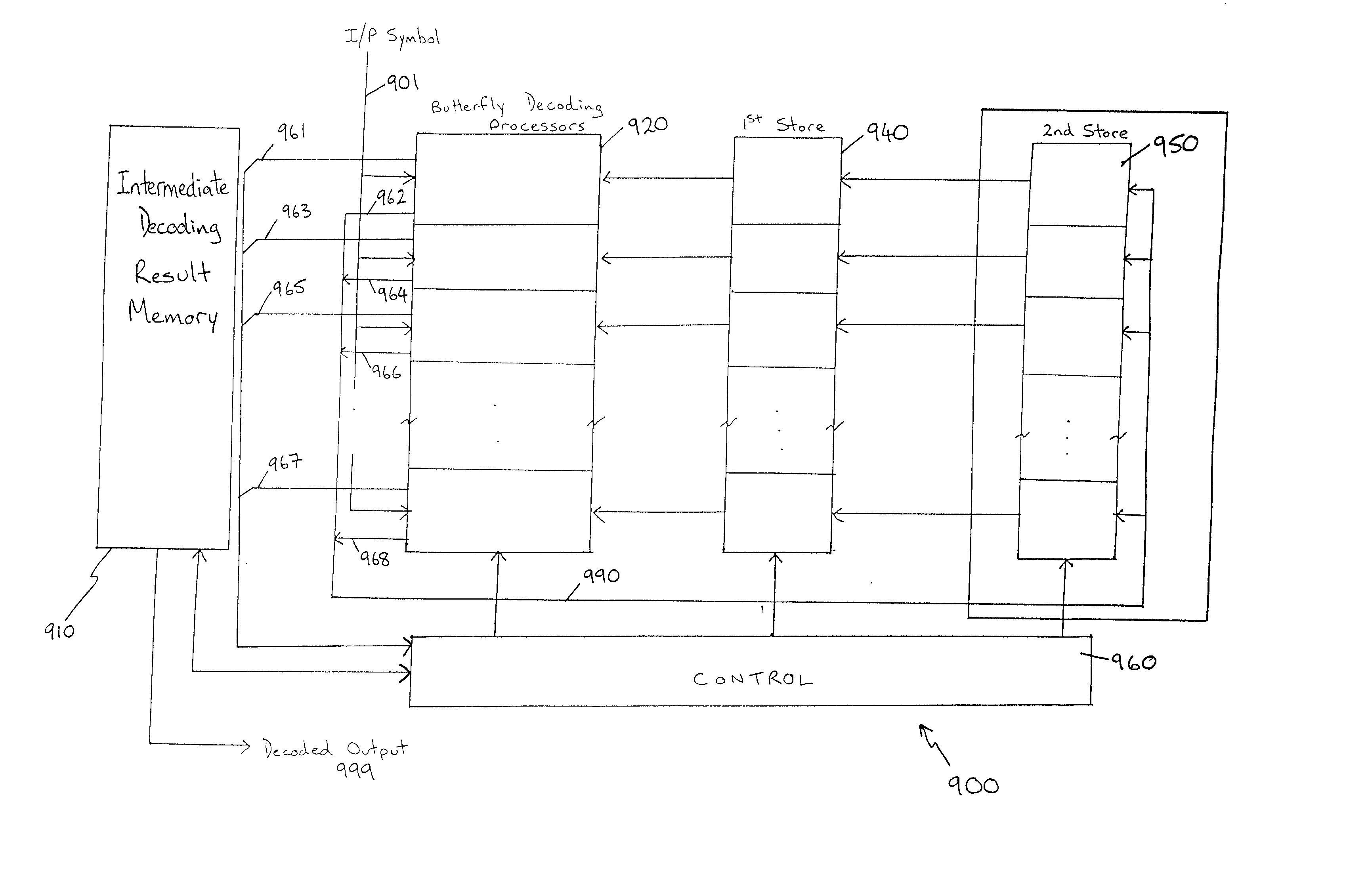

Reed-solomon decoder systems for high speed communication and data storage applications

Inactive · US20060059409A1 · Effective and reliable error correction functionality; Reduce complexity; Code conversion; Coding details; Modem device; High rate

A high-speed, low-complexity Reed-Solomon (RS) decoder architecture for very high-speed optical communications is provided, using a novel pipelined recursive Modified Euclidean (PrME) algorithm block. The RS decoder features a low-complexity Key Equation Solver built from a PrME algorithm block; the recursive structure is what makes the low-complexity PrME block possible. Pipelining and parallelization allow inputs to be received at very high fiber-optic rates and outputs to be delivered at correspondingly high rates with minimal delay. An 80-Gb/s RS decoder architecture in 0.13-μm CMOS technology at a supply voltage of 1.2 V is disclosed; it has a core gate count of 393K and operates at a clock rate of 625 MHz. The RS decoder has a wide range of applications, including fiber-optic telecommunication, hard drive or disk controllers, computational storage systems, CD or DVD controllers, fiber-optic systems, router systems, wireless communication systems, cellular telephone systems, microwave link systems, satellite communication systems, digital television systems, networking systems, high-speed modems and the like.

Owner:UNIV OF CONNECTICUT
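
The quoted figures fix the datapath width. As a quick check, assuming 8-bit RS symbols (the abstract does not state the symbol size), the decoder must absorb 128 bits, or 16 symbols, every clock cycle:

```latex
\frac{80\ \text{Gb/s}}{625\ \text{MHz}}
  = \frac{80 \times 10^{9}\ \text{bit/s}}{625 \times 10^{6}\ \text{cycle/s}}
  = 128\ \text{bits/cycle},
\qquad
\frac{128\ \text{bits/cycle}}{8\ \text{bits/symbol}} = 16\ \text{symbols/cycle}.
```

By the same arithmetic, a symbol-serial datapath at 625 MHz would reach only 625 MHz × 8 bits = 5 Gb/s, which is why pipelining and parallelization are needed.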

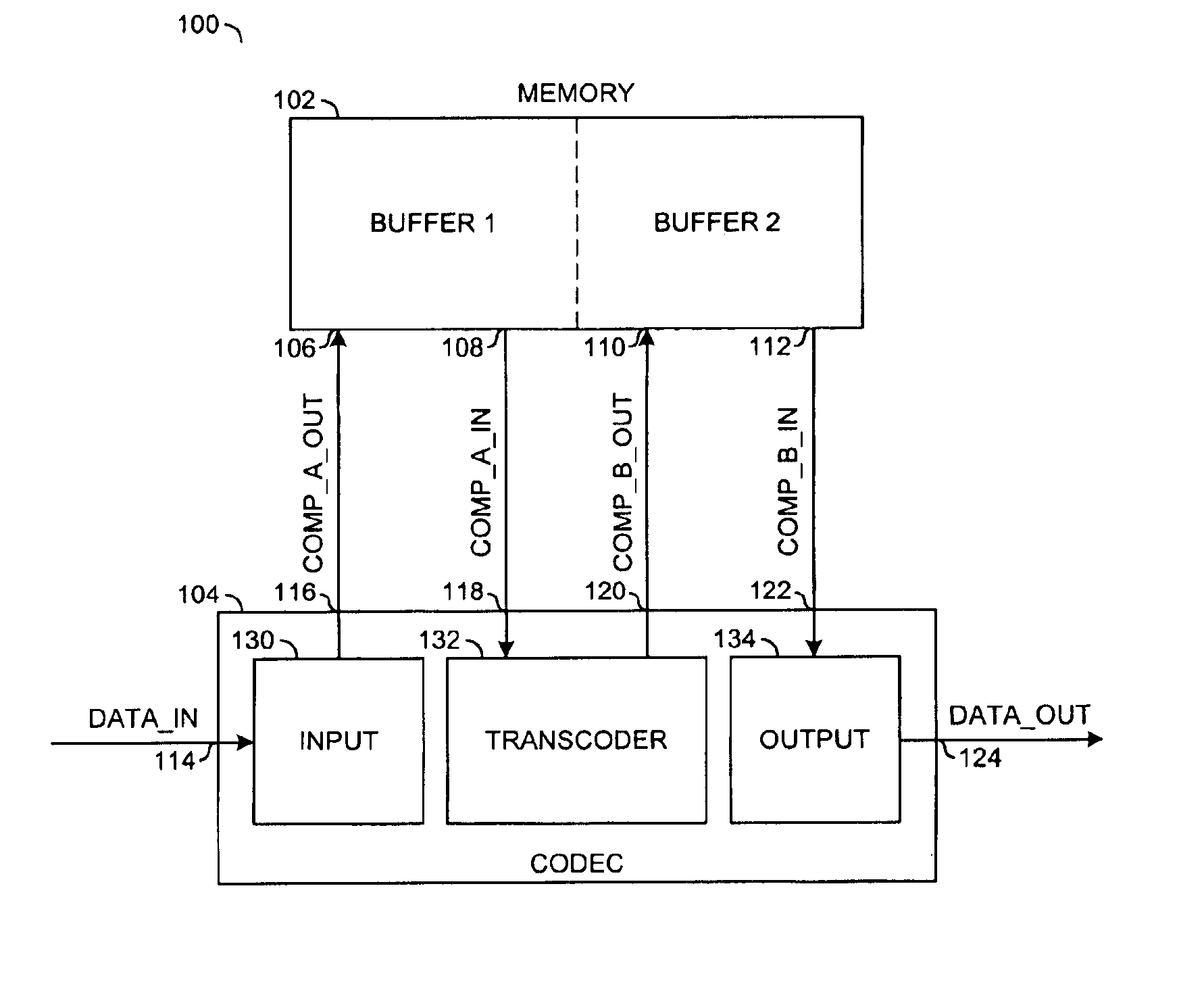

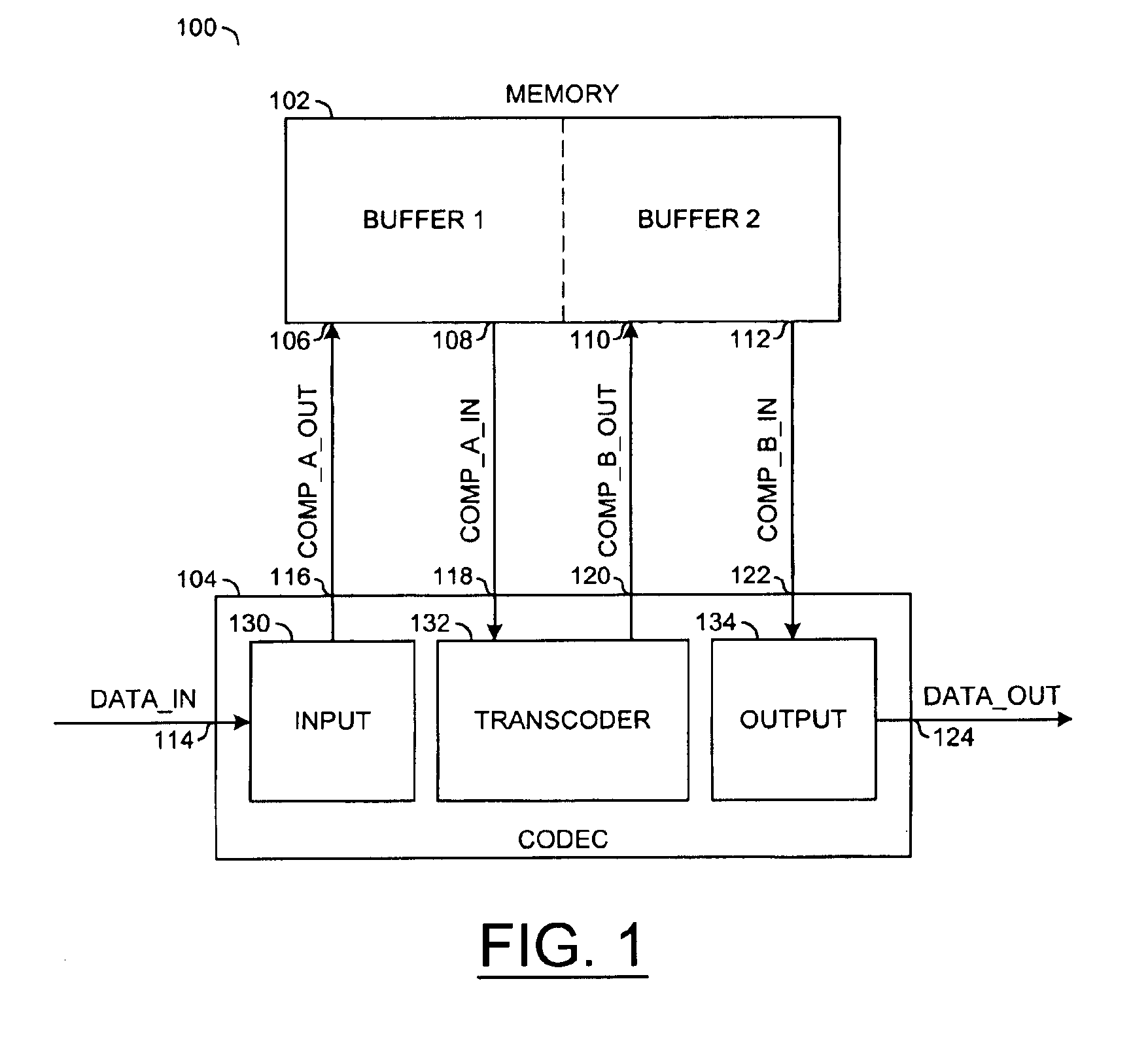

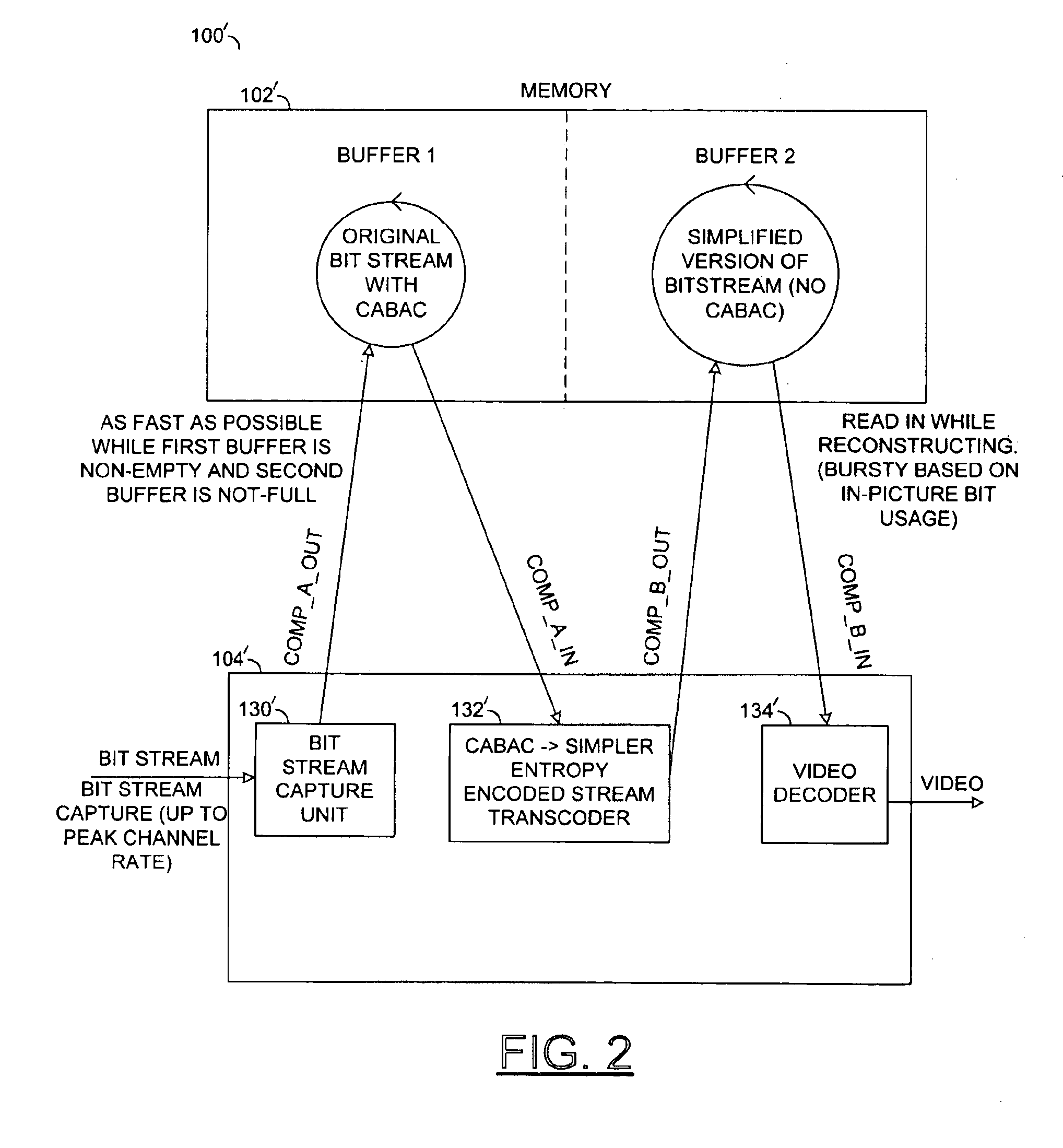

Context based adaptive binary arithmetic CODEC architecture for high quality video compression and decompression

Inactive · US6927710B2 · Quality improvement; Code conversion; Image coding; Algorithm; Theoretical computer science

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

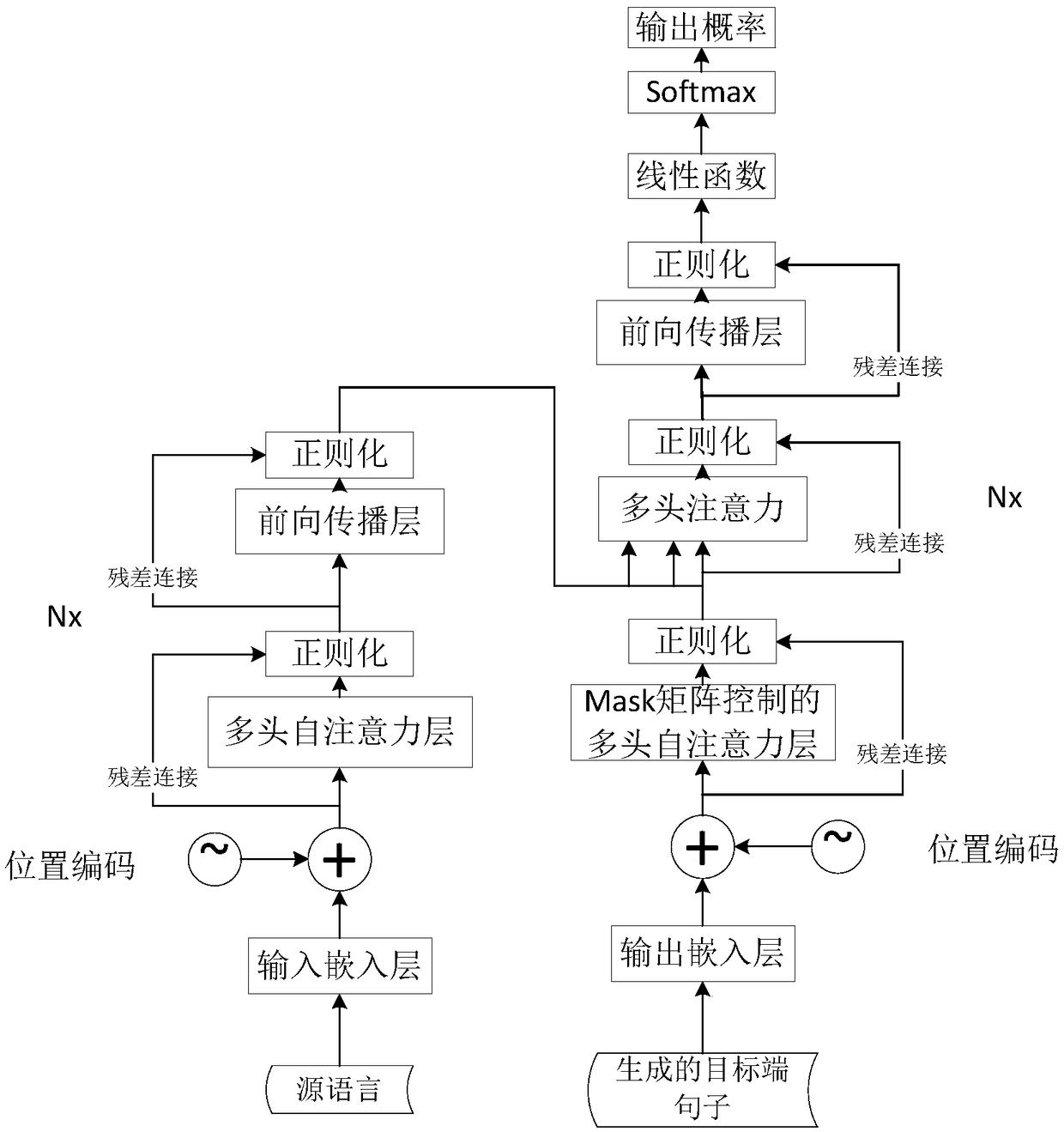

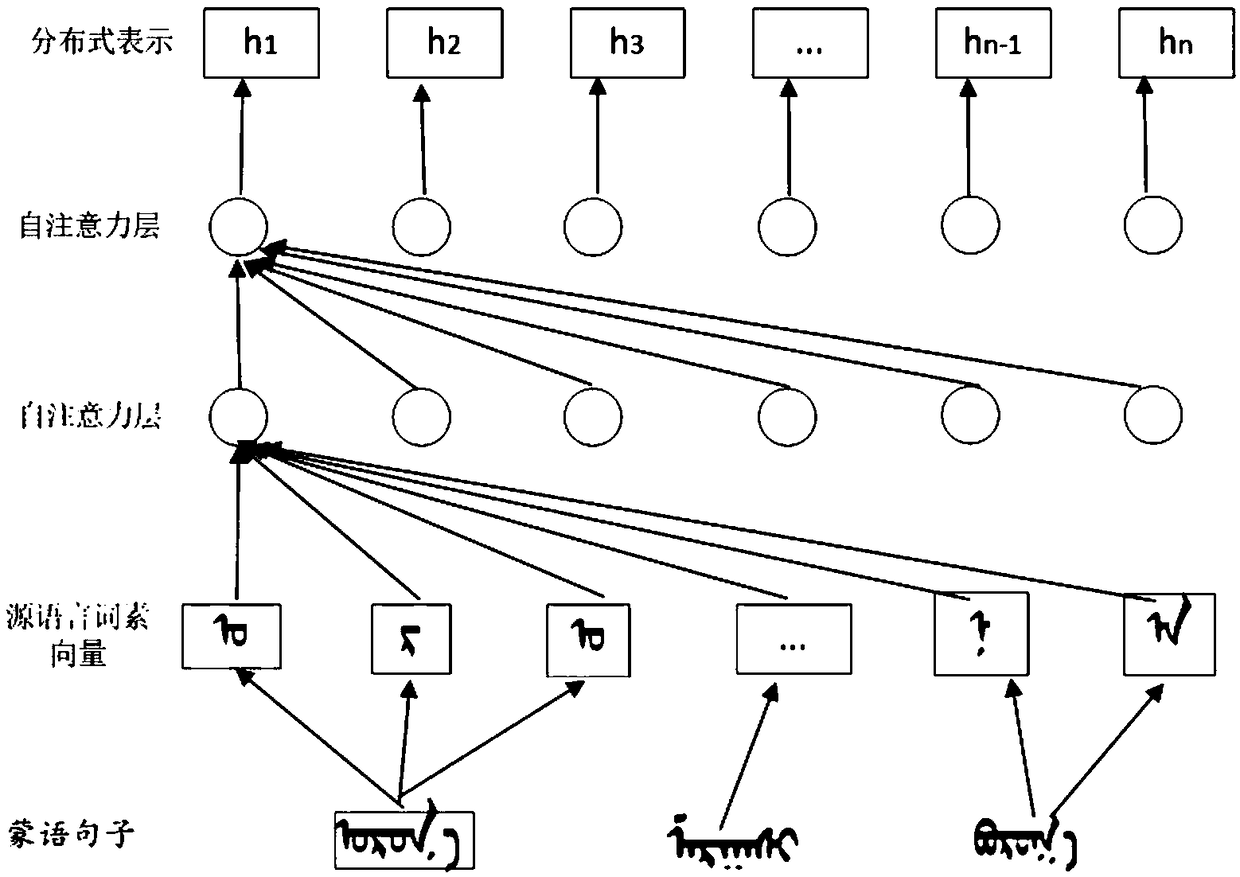

Mongolian-Chinese machine translation method for enhancing semantic feature information based on Transformers

Inactive · CN109492232A · Easy to catch; Reduce sparsity; Natural language translation; Semantic analysis; Transformer; Semantic feature

The invention provides a Mongolian-Chinese machine translation method that enhances semantic feature information on the basis of a Transformer model. First, starting from the linguistic characteristics of Mongolian, the method identifies the features of Mongolian additional components (stems, affixes and case markers) and incorporates them into model training. Second, taking distributed word representations for measuring the similarity between two words as the research background, it comprehensively analyzes how depth, density and semantic overlap affect concept-level semantic similarity. Translation uses a Transformer model: a multi-layer encoder that performs position encoding with trigonometric functions and is built on an enhanced multi-head attention mechanism, together with a decoder architecture that relies entirely on attention to model global dependencies between input and output, dispensing with recurrence and convolution.

Owner:INNER MONGOLIA UNIV OF TECH
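
The abstract says the encoder performs position encoding with a trigonometric function. The standard sinusoidal scheme from the original Transformer is sketched below as an assumption, since the patent's exact formula is not given: even dimension 2i uses sin(pos / 10000^(2i/d_model)) and odd dimension 2i+1 uses the matching cosine.

```python
import math
from typing import List


def positional_encoding(pos: int, d_model: int) -> List[float]:
    """Sinusoidal position encoding for a single position (d_model must be even)."""
    if d_model % 2:
        raise ValueError("d_model must be even")
    enc: List[float] = []
    for i in range(0, d_model, 2):
        # i runs over even indices, so i / d_model equals 2k / d_model.
        angle = pos / (10000 ** (i / d_model))
        enc.append(math.sin(angle))  # even dimension
        enc.append(math.cos(angle))  # odd dimension
    return enc
```

Because each dimension pair rotates at a different frequency, relative offsets between positions correspond to fixed linear transformations of these vectors, which is the usual motivation for the sinusoidal choice.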

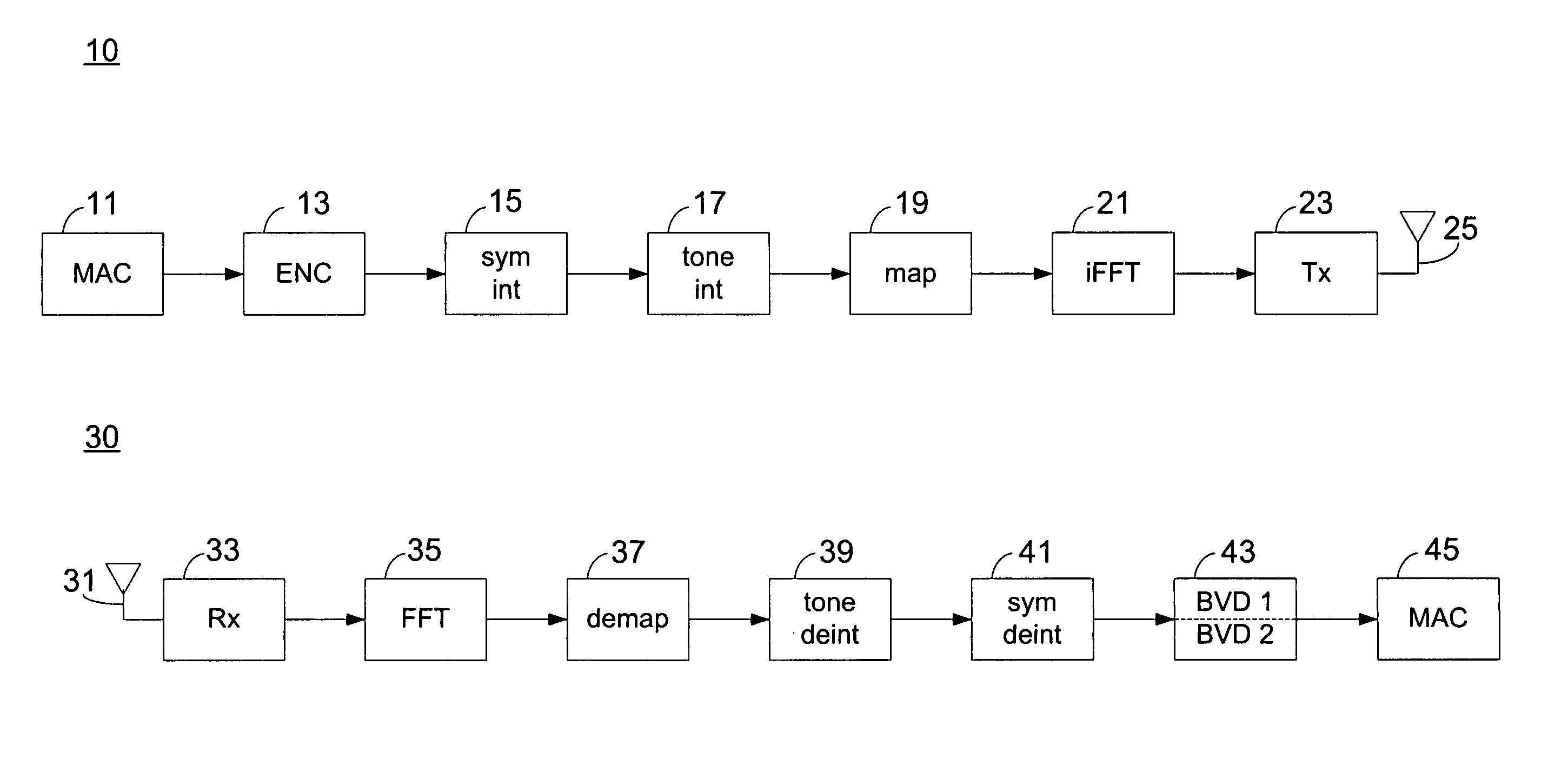

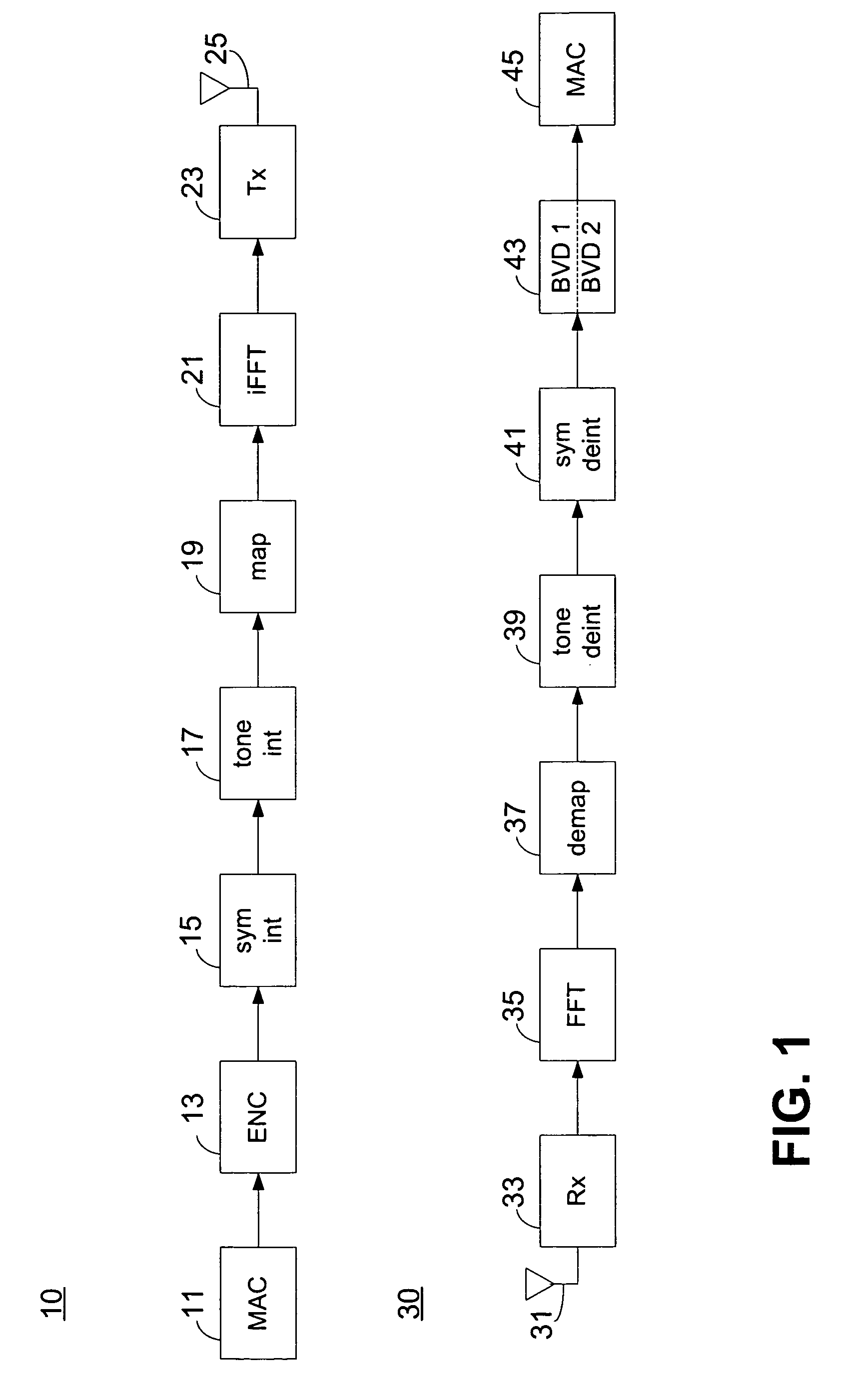

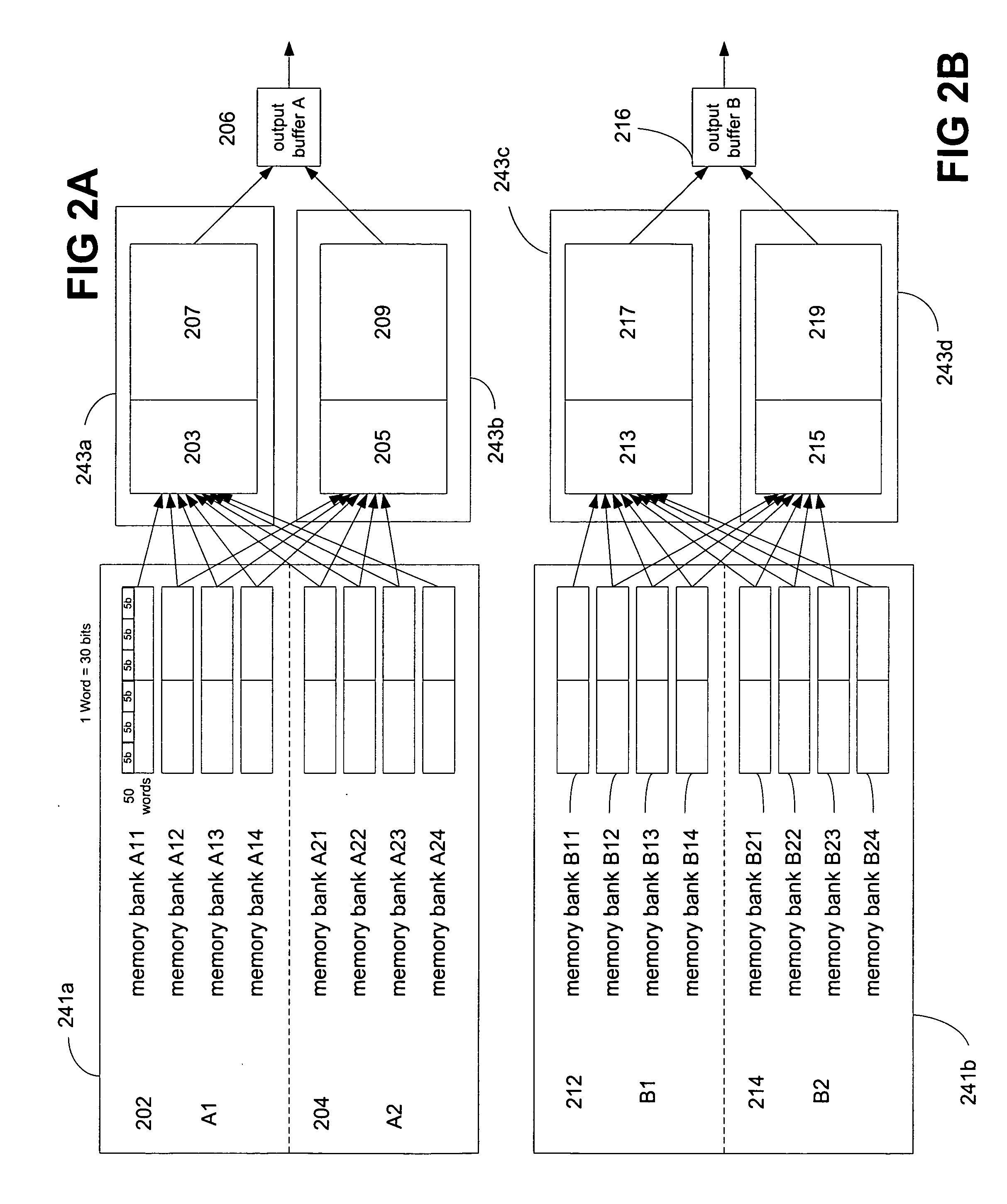

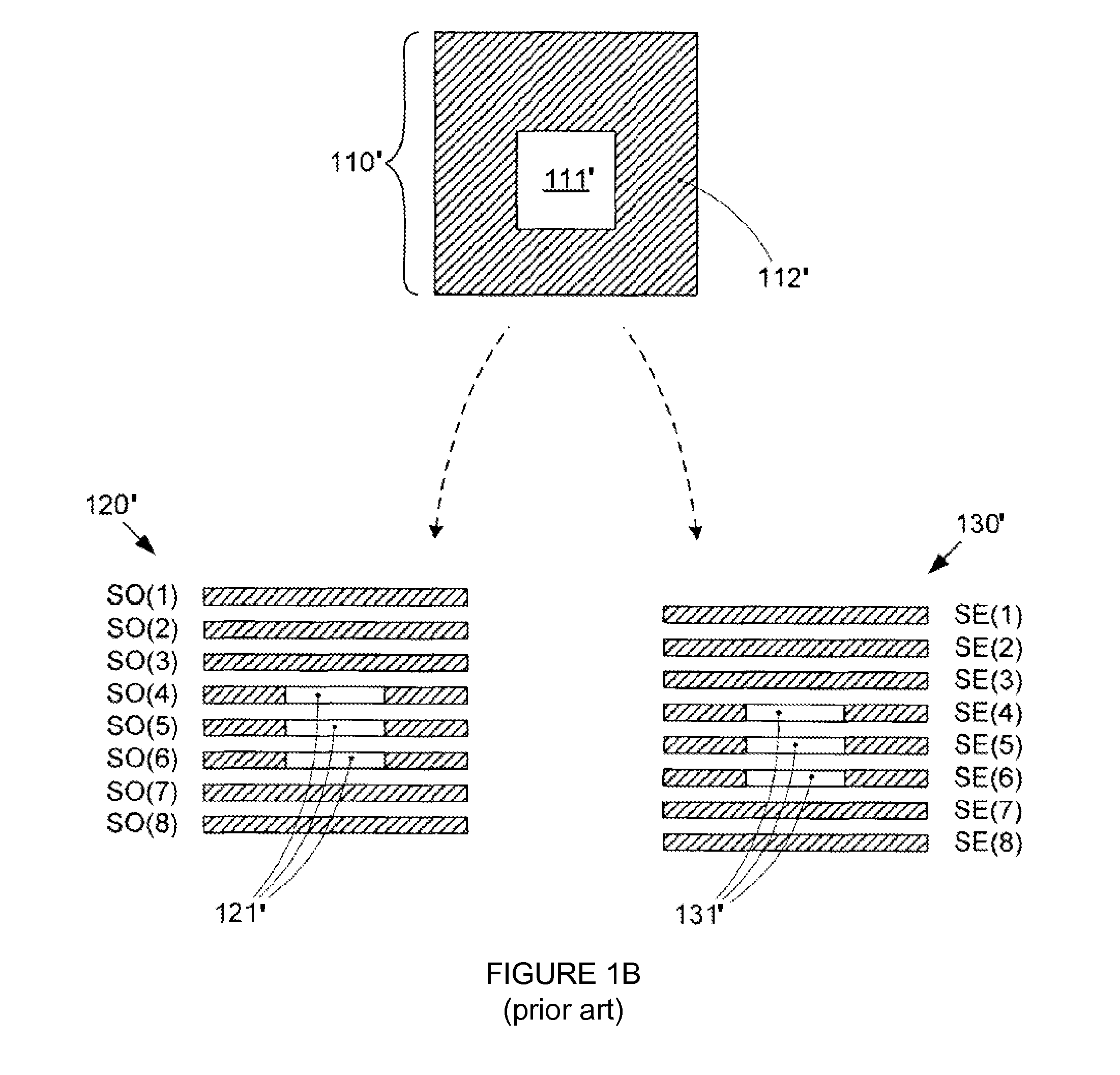

Deinterleaver and dual-viterbi decoder architecture

Active · US20070067704A1 · Fully comprehended; Data representation error detection/correction; Other decoding techniques; Viterbi decoder; Memory bank

Pairs of parallel Viterbi decoders use windowed block data to decode data at rates above 320 Mbps. The memory banks of the deinterleavers feeding the decoders operate so that some banks are receiving data while others are sending data to the decoders. The parallel input streams to each pair of decoders overlap by several traceback lengths of the decoder, so that the data input to the first decoder at the end of its input stream is the same as the data input to the second decoder of the same pair at the beginning of its input stream. The first decoder can then post-synchronize its path metric with the second decoder, and the second decoder can pre-synchronize its path metric with the first. Either the deinterleaver data length is an integer multiple of the traceback length, or padding is applied only to the data input to the first block of the first interleaver.

Owner:REALTEK SEMICON CORP +1
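
The overlap rule can be made concrete with a small window calculation for one decoder pair. This is a hypothetical sketch: the split point at the middle of the block and the default overlap of two traceback lengths are assumed values, and deinterleaving, padding and the path-metric exchange itself are not modeled.

```python
from typing import Tuple

Window = Tuple[int, int]  # half-open symbol range [start, end)


def split_with_overlap(n: int, traceback: int, overlap_tb: int = 2
                       ) -> Tuple[Window, Window]:
    """Split symbols [0, n) between a pair of Viterbi decoders.

    The first decoder's window extends `overlap` symbols past the split
    point, so the last `overlap` symbols it sees are exactly the first
    `overlap` symbols the second decoder sees. Over that shared span the
    two decoders can synchronize their path metrics.
    """
    overlap = overlap_tb * traceback
    split = n // 2
    first = (0, min(n, split + overlap))
    second = (split, n)
    return first, second
```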

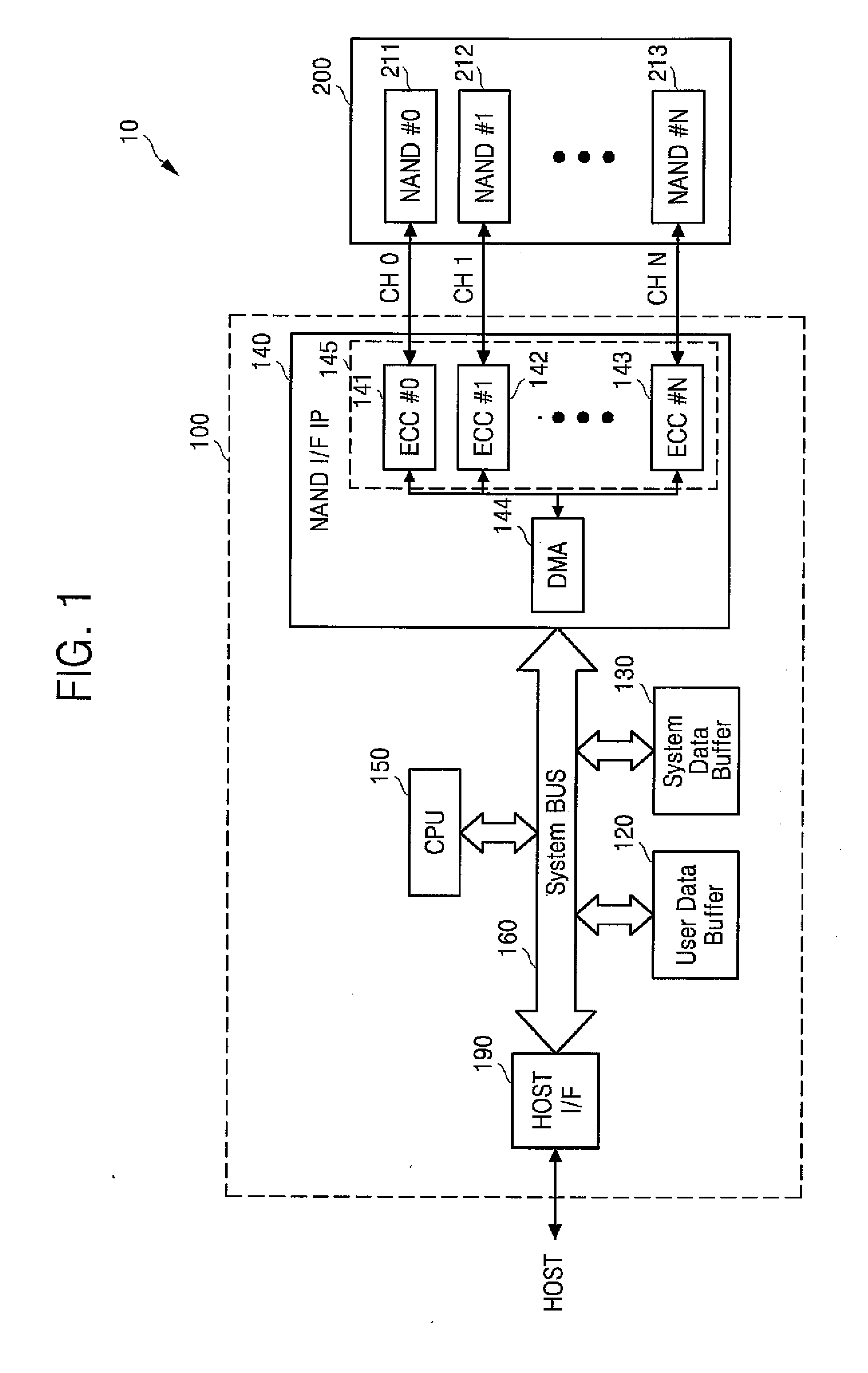

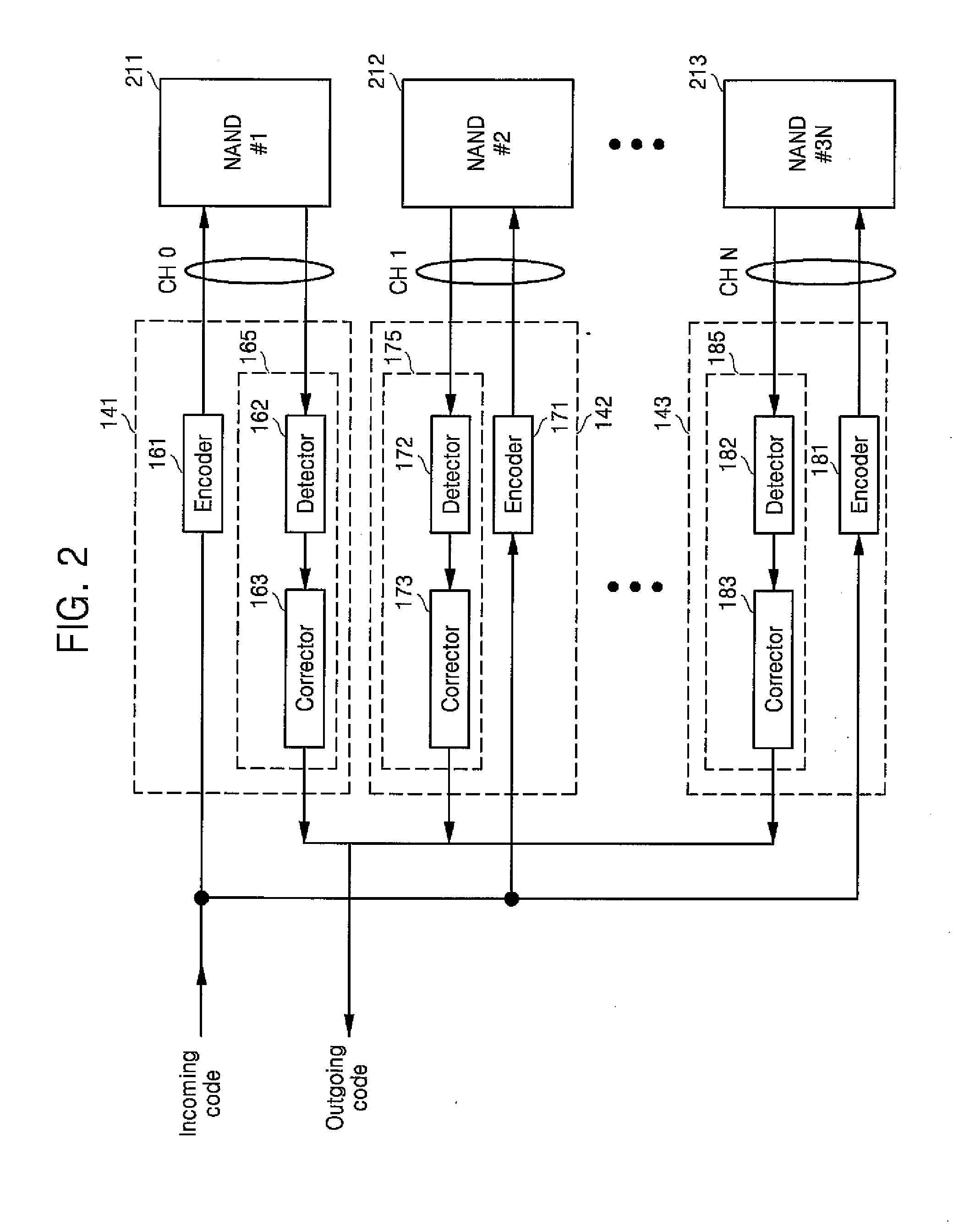

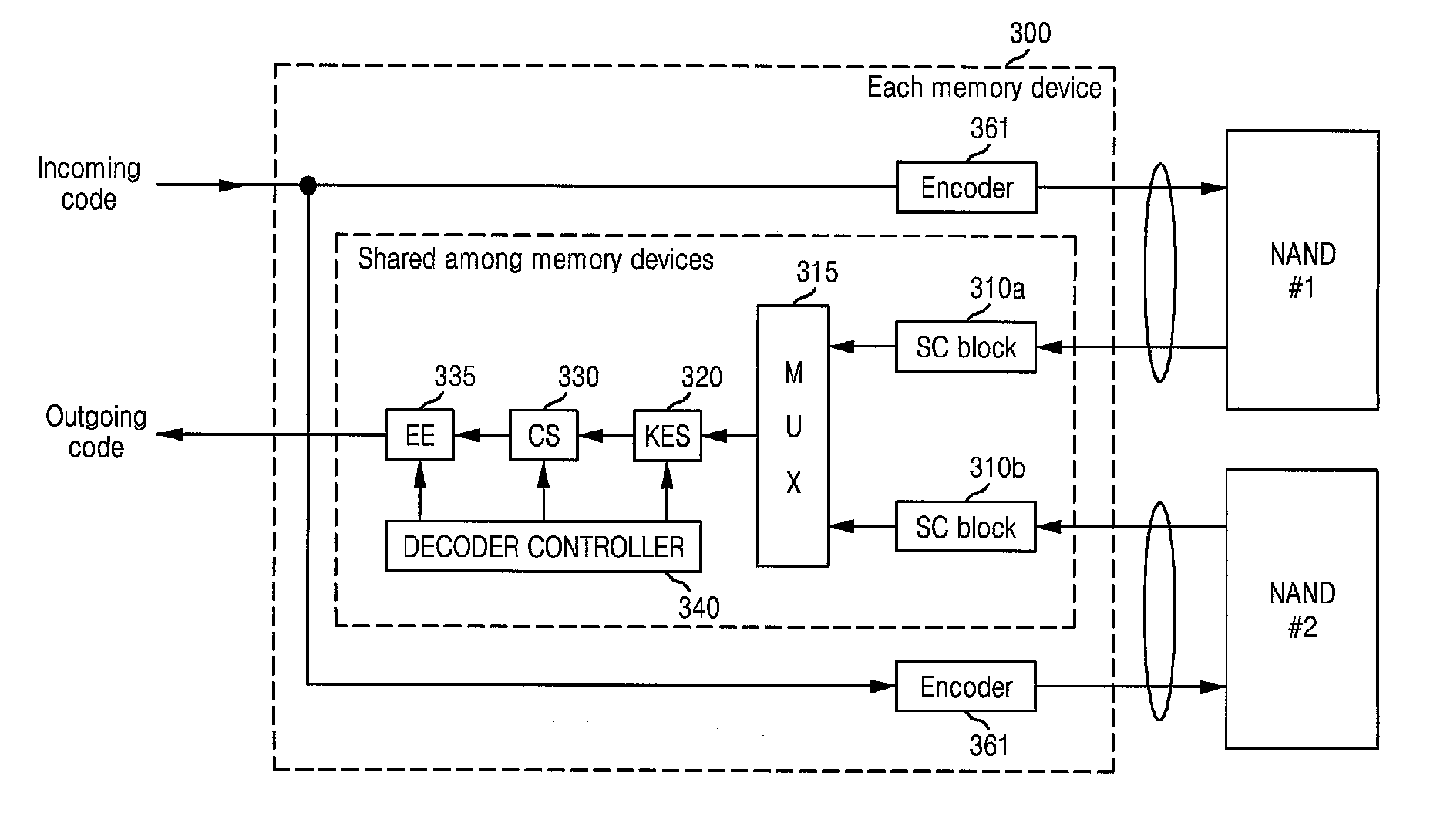

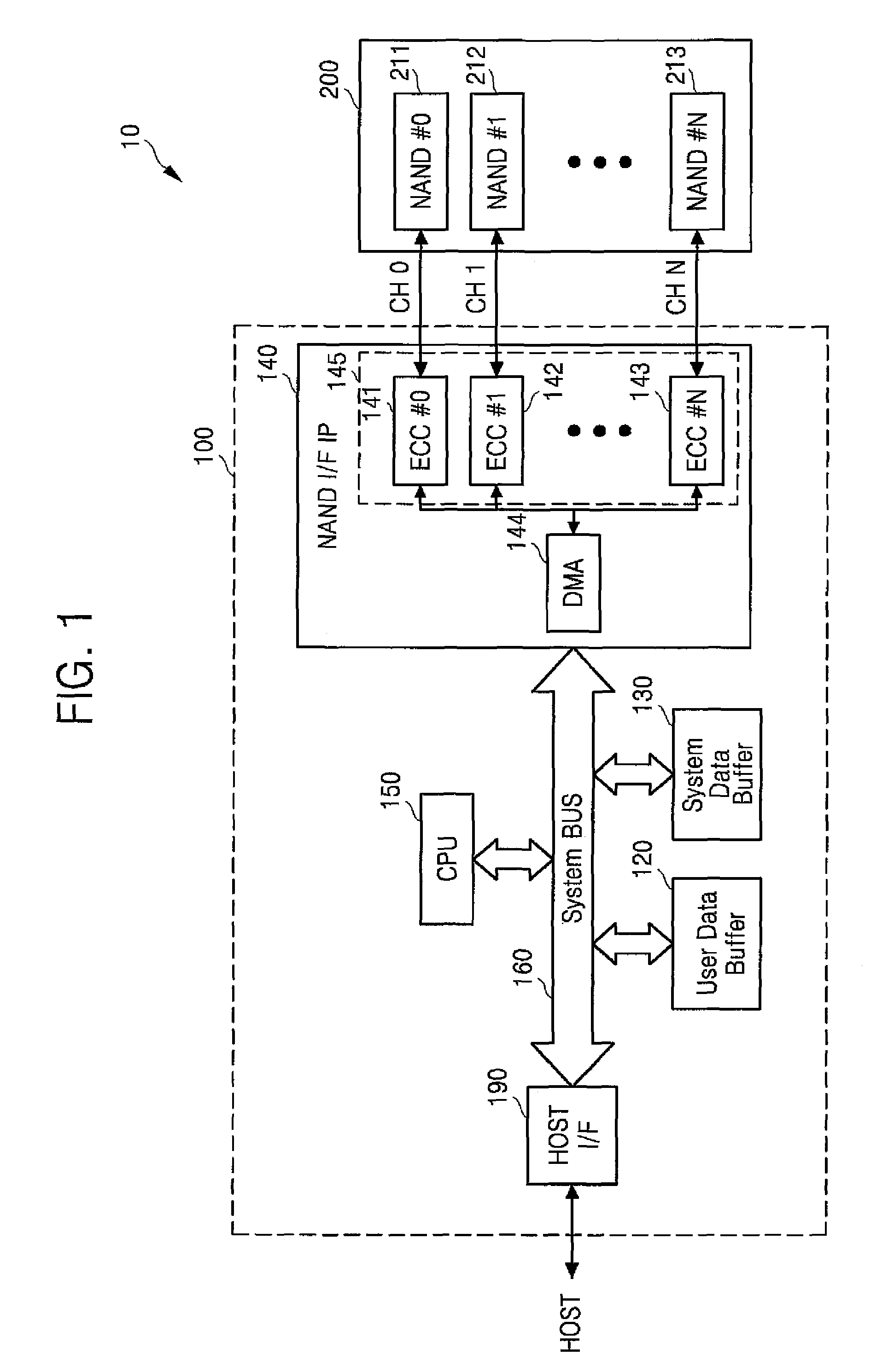

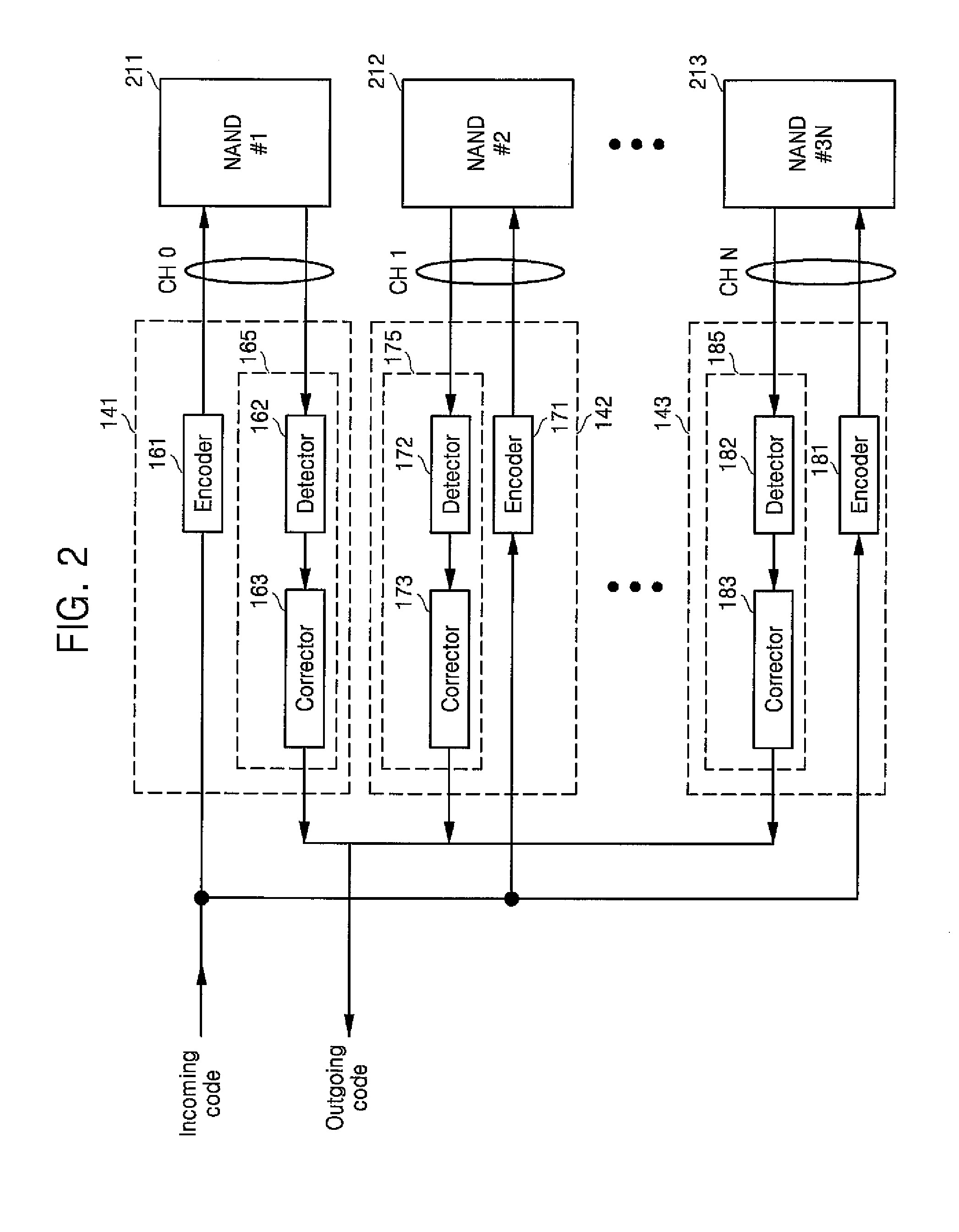

Multi-channel memory system including error correction decoder architecture with efficient area utilization

A memory system includes: a memory controller including an error correction decoder. The error correction decoder includes: a demultiplexer adapted to receive data and demultiplex the data into a first set of data and a second set of data; first and second buffer memories for storing the first and second sets of data, respectively; an error detector; an error corrector; and a multiplexer adapted to multiplex the first set of data and the second set of data and to provide the multiplexed data to the error corrector. While the error corrector corrects errors in the first set of data, the error detector detects errors in the second set of data stored in the second buffer memory.

Owner:SAMSUNG ELECTRONICS CO LTD

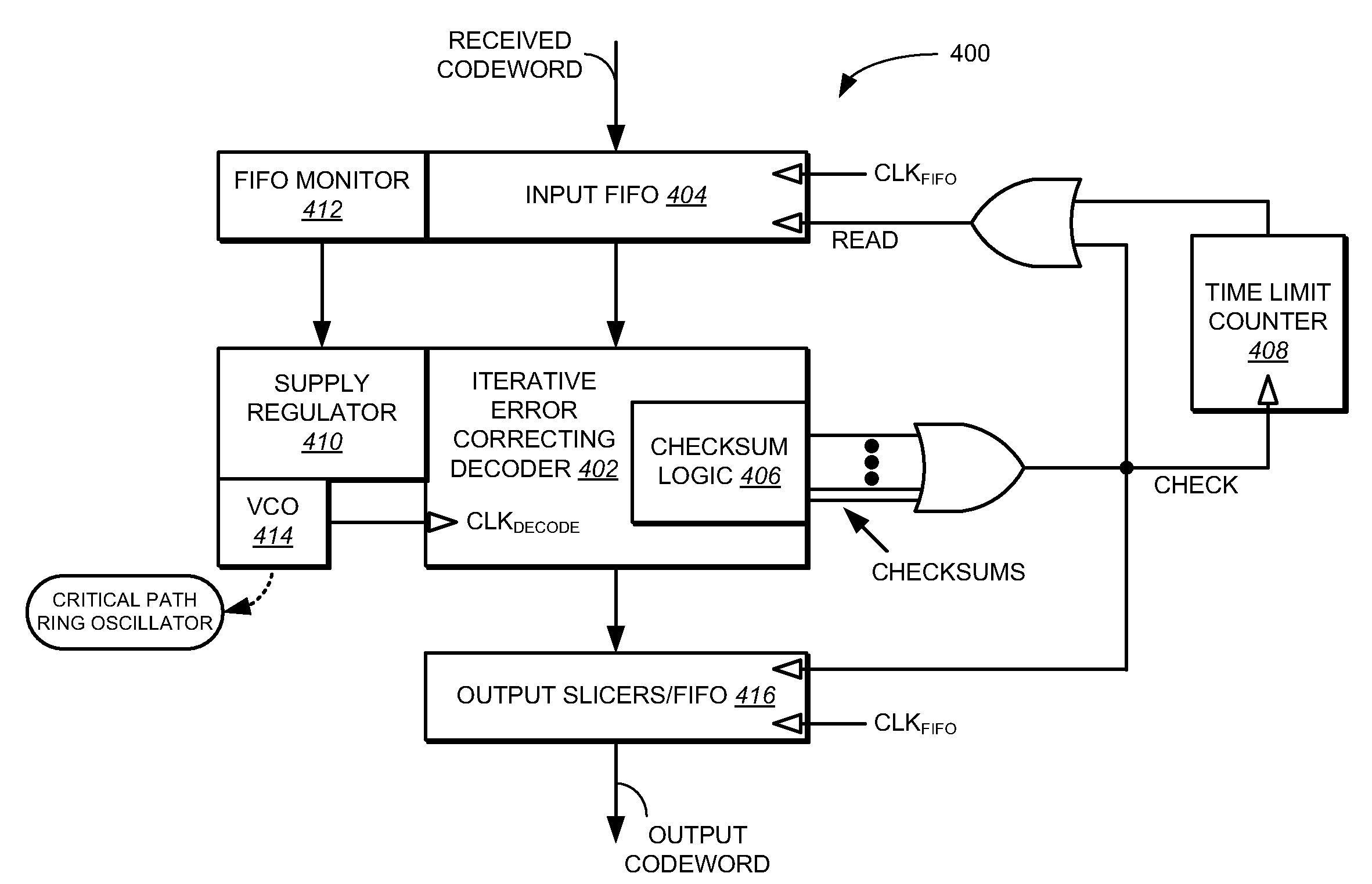

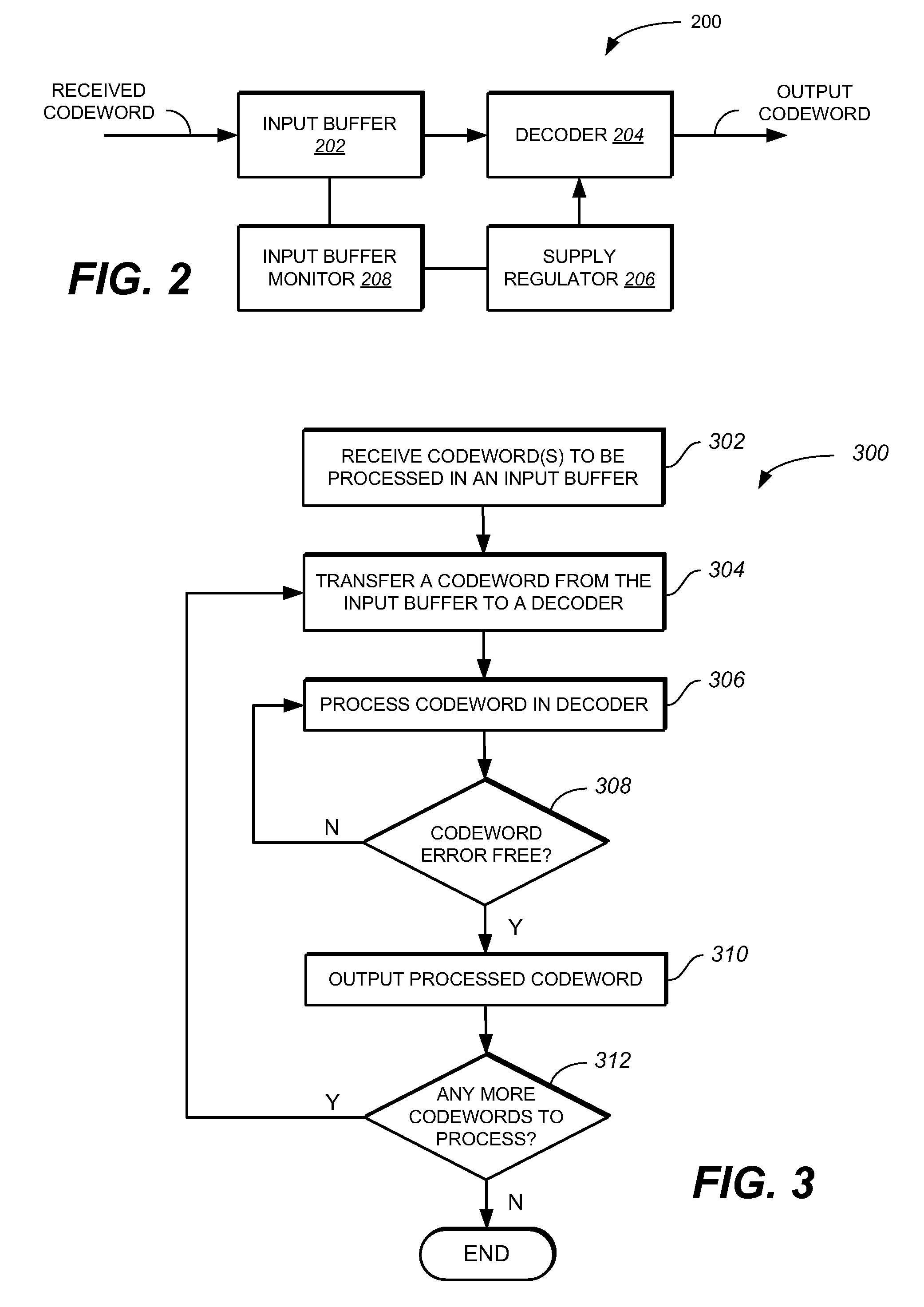

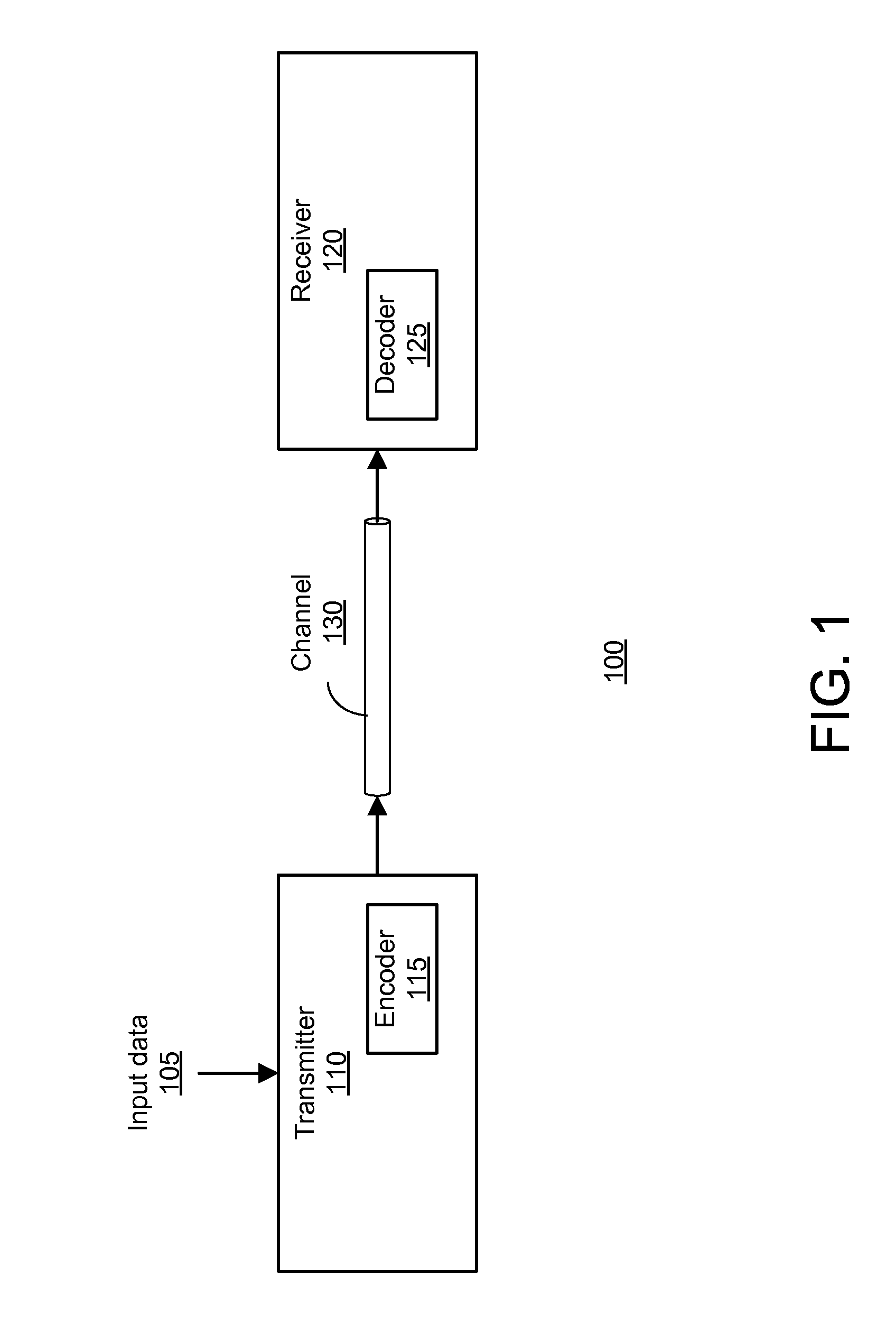

Low power iterative decoder using input data pipelining and voltage scaling

Active · US7805642B1 · Decoding time also increases; Reduce area; Other decoding techniques; Error detection/correction; Power conditioner; Computer science

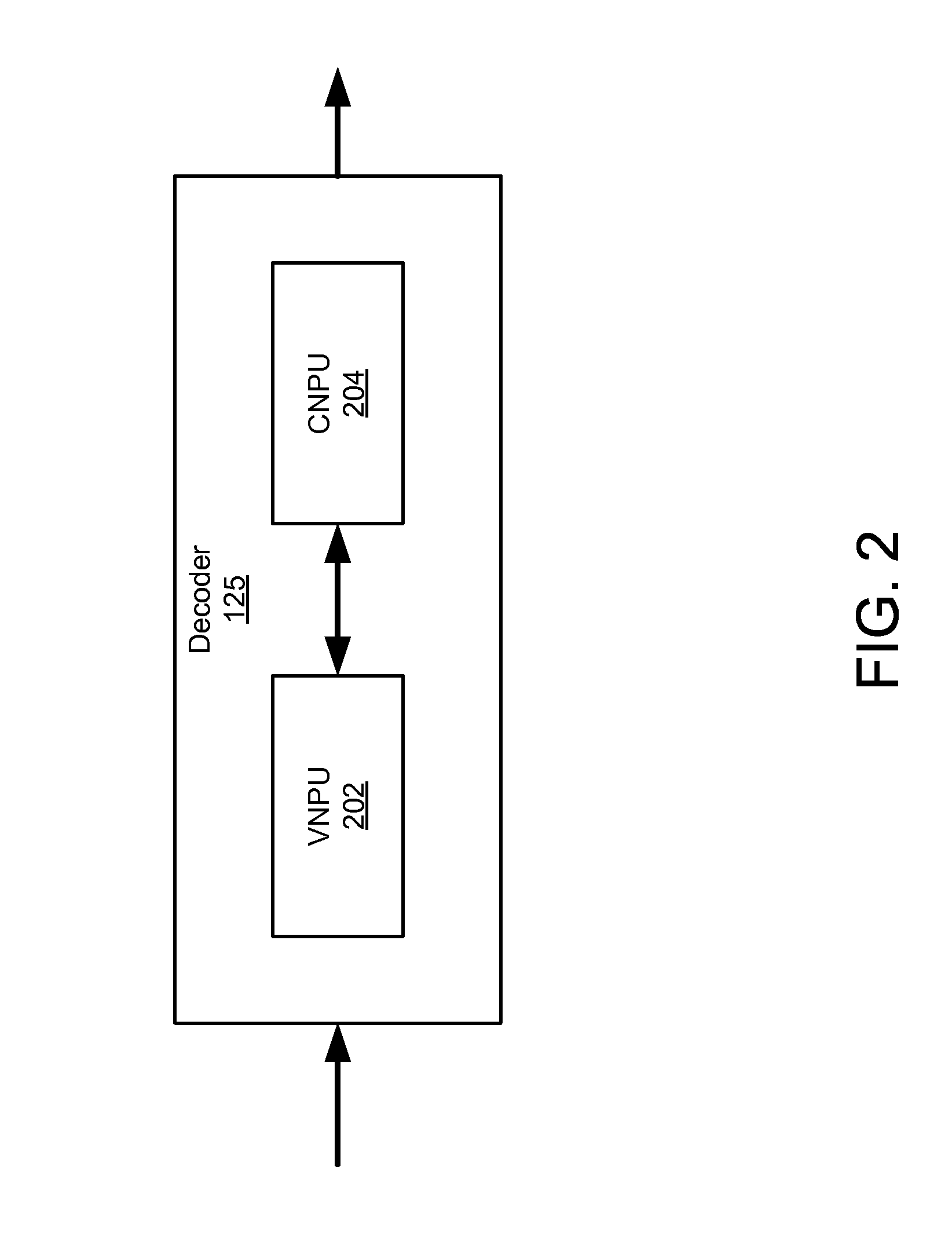

A decoder architecture and method for processing codewords are provided. In one implementation, the decoder architecture includes an input buffer configured to receive and store one or more codewords to be processed, and a decoder configured to receive codewords one at a time from the input buffer. The decoder processes each codeword only for the minimum amount of time needed for the codeword to become error free. The decoder architecture further includes an input buffer monitor and a supply regulator configured to change the supply voltage to the decoder in response to the average amount of time taken for each codeword to become error free.

Owner:MARVELL ASIA PTE LTD
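
The input buffer monitor and supply regulator can be pictured as a feedback loop: when codewords take longer on average to become error free, the supply voltage is raised so the decoder runs faster; otherwise it is lowered to save power. The voltage limits, step size, target decode time and moving-average window below are assumed values, and this simple step rule is a hypothetical stand-in for whatever control law the patent actually claims.

```python
from collections import deque


class SupplyRegulator:
    """Hypothetical voltage controller driven by average per-codeword decode time."""

    def __init__(self, v_min: float = 0.8, v_max: float = 1.2, step: float = 0.05,
                 target_cycles: float = 10.0, window: int = 16):
        self.v_min, self.v_max, self.step = v_min, v_max, step
        self.target = target_cycles           # desired average decode time
        self.history = deque(maxlen=window)   # recent per-codeword decode times
        self.voltage = v_max                  # start at the safe, fast setting

    def record(self, decode_cycles: float) -> float:
        """Log one codeword's decode time and return the updated supply voltage."""
        self.history.append(decode_cycles)
        avg = sum(self.history) / len(self.history)
        if avg > self.target:
            # Decoding is falling behind: raise the voltage (clamped at v_max).
            self.voltage = min(self.v_max, self.voltage + self.step)
        else:
            # Slack available: lower the voltage (clamped at v_min).
            self.voltage = max(self.v_min, self.voltage - self.step)
        return self.voltage
```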

Method and apparatus for providing plug-in media decoders

Inactive · US20020007357A1 · Data processing applications; Digital data processing details; Object based; Data source

A method and apparatus for providing plug-in media decoders. Embodiments provide a "plug-in" decoder architecture that allows software decoders to be transparently downloaded, along with media data. User applications are able to support new media types as long as the corresponding plug-in decoder is available with the media data. Persistent storage requirements are decreased because the downloaded decoder is transient, existing in application memory for the duration of execution of the user application. The architecture also supports use of plug-in decoders already installed in the user computer. One embodiment is implemented with object-based class files executed in a virtual machine to form a media application. A media data type is determined from incoming media data, and used to generate a class name for a corresponding codec (coder-decoder) object. A class path vector is searched, including the source location of the incoming media data, to determine the location of the codec class file for the given class name. When the desired codec class file is located, the virtual machine's class loader loads the class file for integration into the media application. If the codec class file is located across the network at the source location of the media data, the class loader downloads the codec class file from the network. Once the class file is loaded into the virtual machine, an instance of the codec class is created within the media application to decode/decompress the media data as appropriate for the media data type.

Owner:SUN MICROSYSTEMS INC

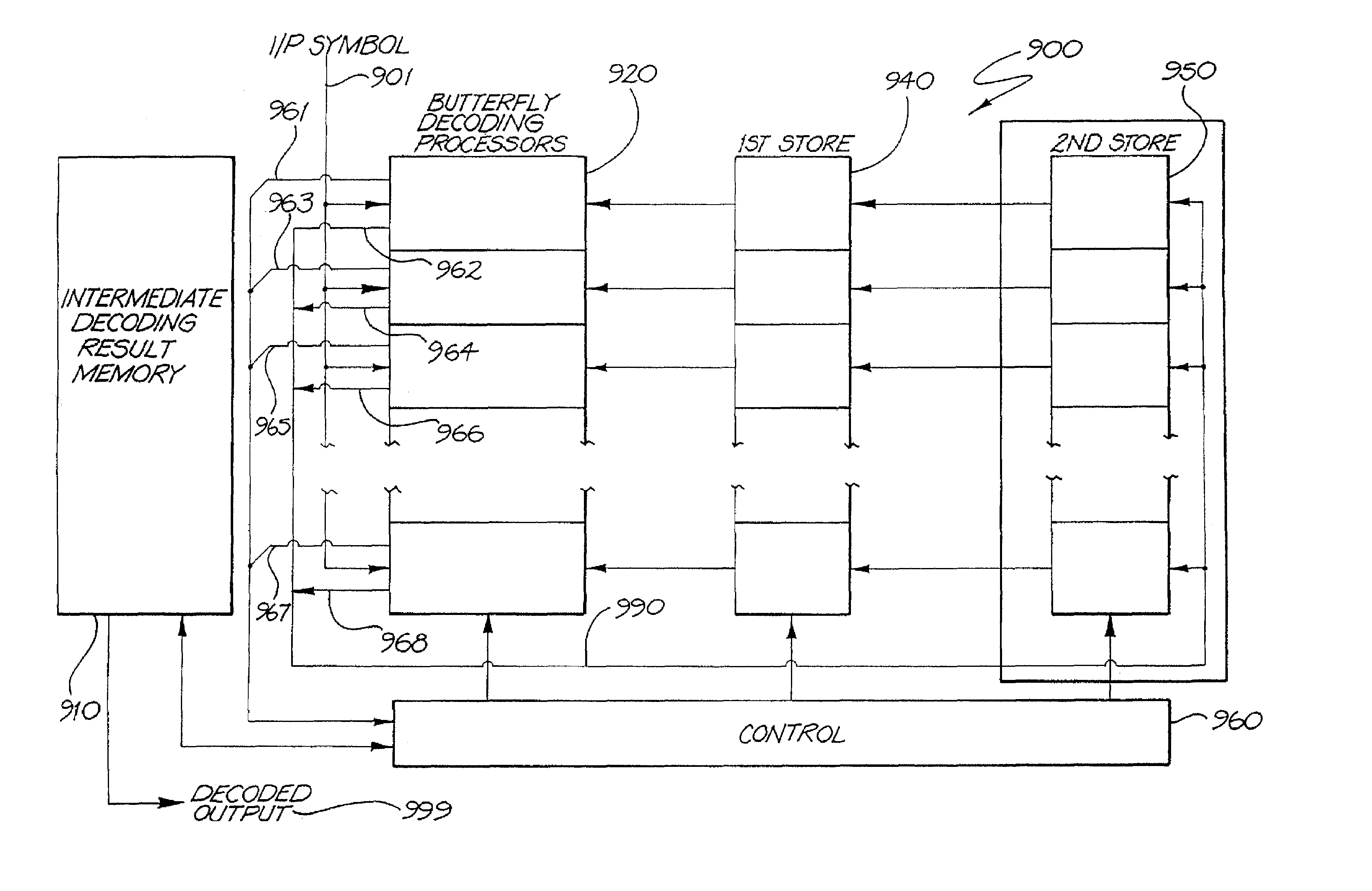

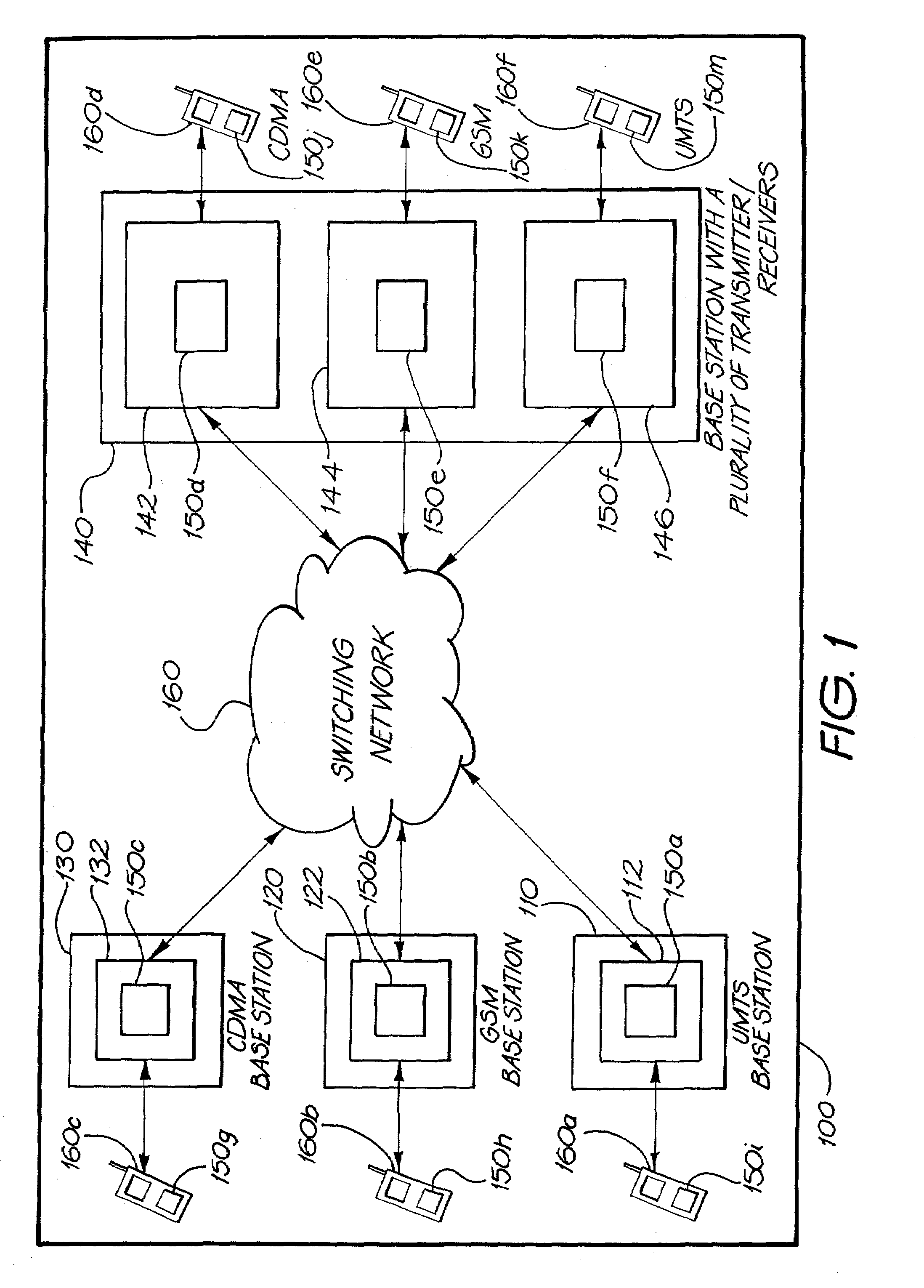

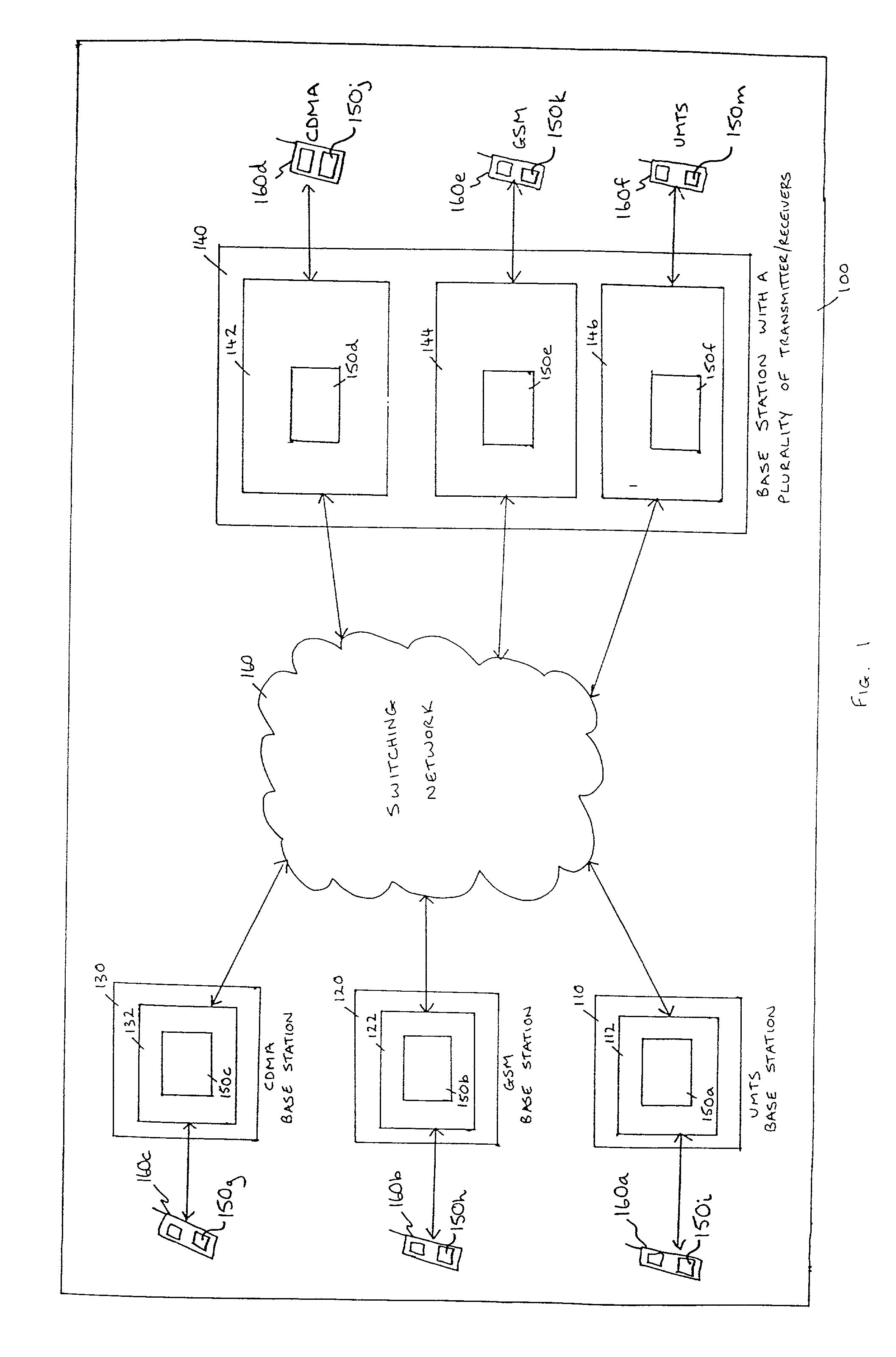

Reconfigurable architecture for decoding telecommunications signals

Inactive · US7127664B2 · Improve scalability; Easy to handle; Error correction/detection using convolutional codes; Error prevention; CDMA2000; Computer hardware

The present invention discloses a single unified decoder for performing both convolutional decoding and turbo decoding within one architecture. The unified decoder can be partitioned dynamically to perform the required decoding operations on varying numbers of data streams at different throughput rates. It also supports simultaneous decoding of voice (convolutional decoding) and data (turbo decoding) streams. The invention forms the basis of a decoder that can decode all of the TDMA, IS-95, GSM, GPRS, EDGE, UMTS and CDMA2000 standards. Processors are stacked together and interconnected so that they can operate separately as individual decoders or in harmony as a single high-speed decoder. The unified decoder architecture can support multiple data streams and multiple voice streams simultaneously. Furthermore, the decoder can be dynamically partitioned as required to decode voice streams for different standards.

Owner:WSOU INVESTMENTS LLC

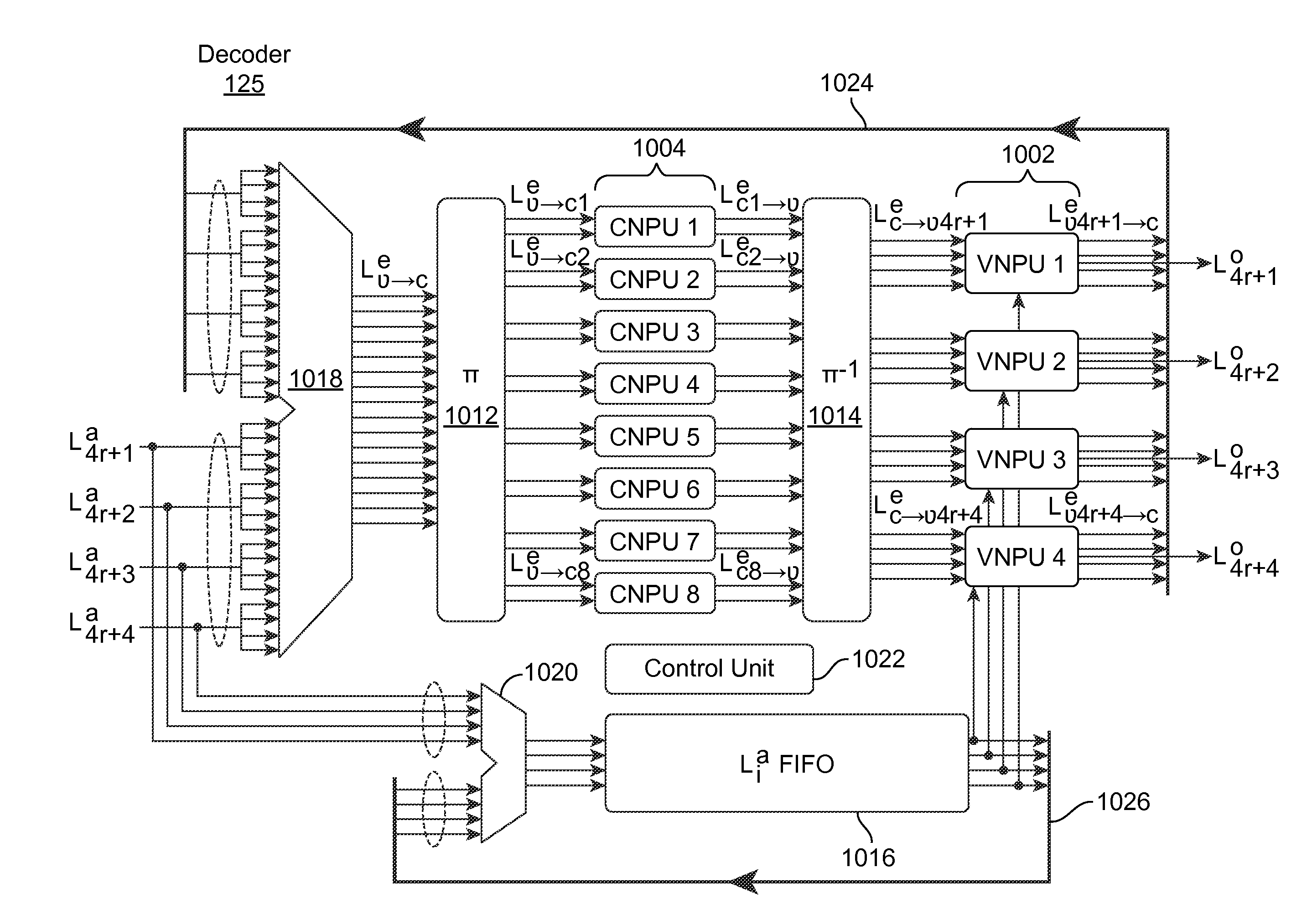

Non-Concatenated FEC Codes for Ultra-High Speed Optical Transport Networks

Active · US20120221914A1 · Improves decoding process; Avoid delay; Error prevention; Checking code calculations; Ultra high speed; Parallel computing

A decoder performs forward error correction based on quasi-cyclic regular column-partition low density parity check codes. A method for designing the parity check matrix reduces the number of short-cycles of the matrix to increase performance. An adaptive quantization post-processing technique further improves performance by eliminating error floors associated with the decoding. A parallel decoder architecture performs iterative decoding using a parallel pipelined architecture.

Owner:MARVELL ASIA PTE LTD
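
Quasi-cyclic LDPC codes are usually specified by a small base (exponent) matrix whose entries are cyclic-shift amounts of a Z×Z identity, with -1 marking an all-zero block. The expansion below is the generic construction only, not this patent's column-partition code or its short-cycle-reducing design procedure; the example base matrix in the test is made up.

```python
from typing import List

Matrix = List[List[int]]


def expand_qc(base: Matrix, z: int) -> Matrix:
    """Expand a QC-LDPC exponent matrix into a binary parity-check matrix H.

    Entry s >= 0 becomes the z-by-z identity with its columns cyclically
    shifted by s; entry -1 becomes the z-by-z zero matrix.
    """
    rows, cols = len(base), len(base[0])
    h = [[0] * (cols * z) for _ in range(rows * z)]
    for bi, brow in enumerate(base):
        for bj, shift in enumerate(brow):
            if shift < 0:
                continue  # all-zero block
            for r in range(z):
                # Row r of the shifted identity has its 1 in column (r + shift) mod z.
                h[bi * z + r][bj * z + (r + shift) % z] = 1
    return h
```

Storing only the base matrix and Z is what makes QC codes attractive for parallel hardware: each nonzero block can be processed by a bank of Z units connected through a cyclic shifter.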

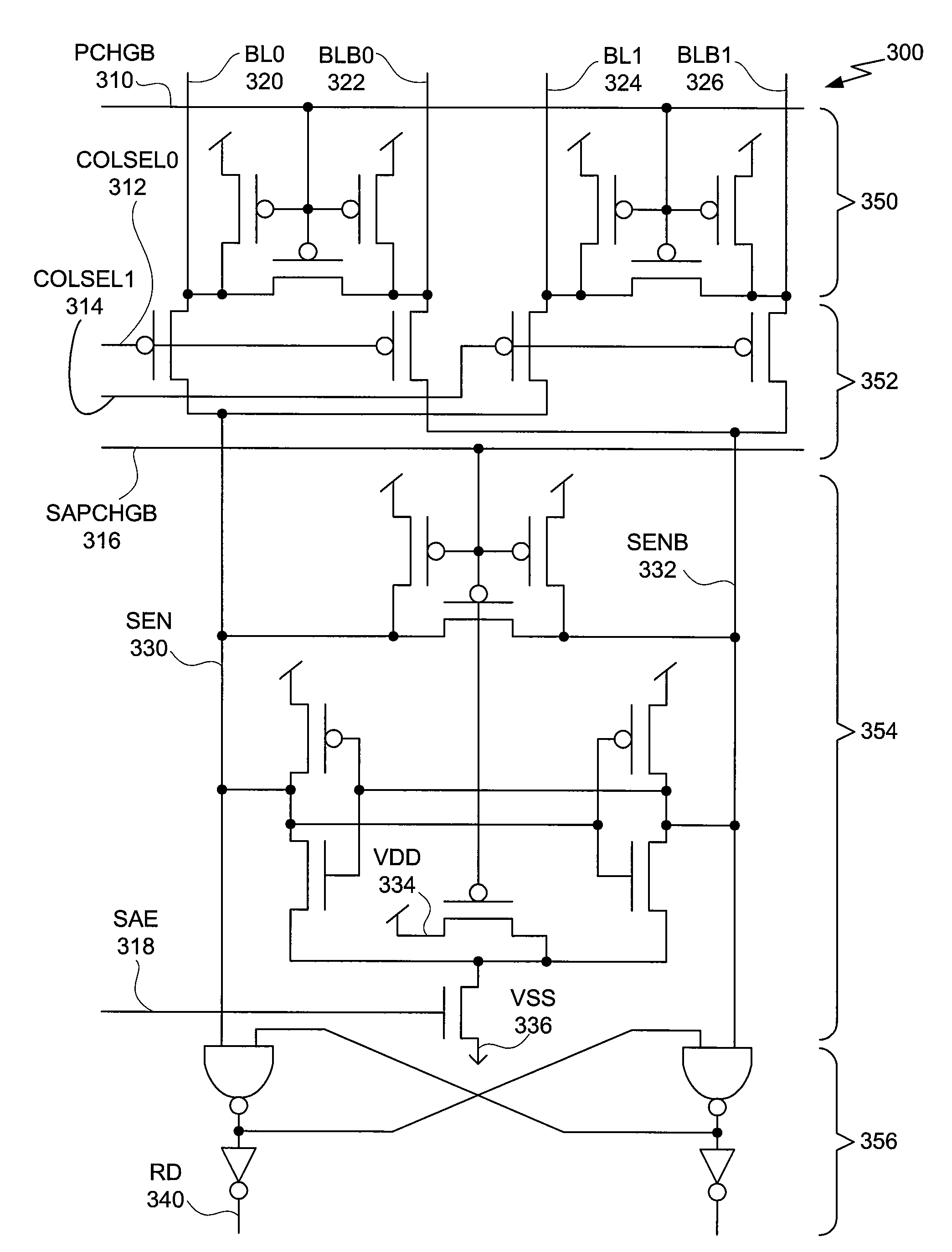

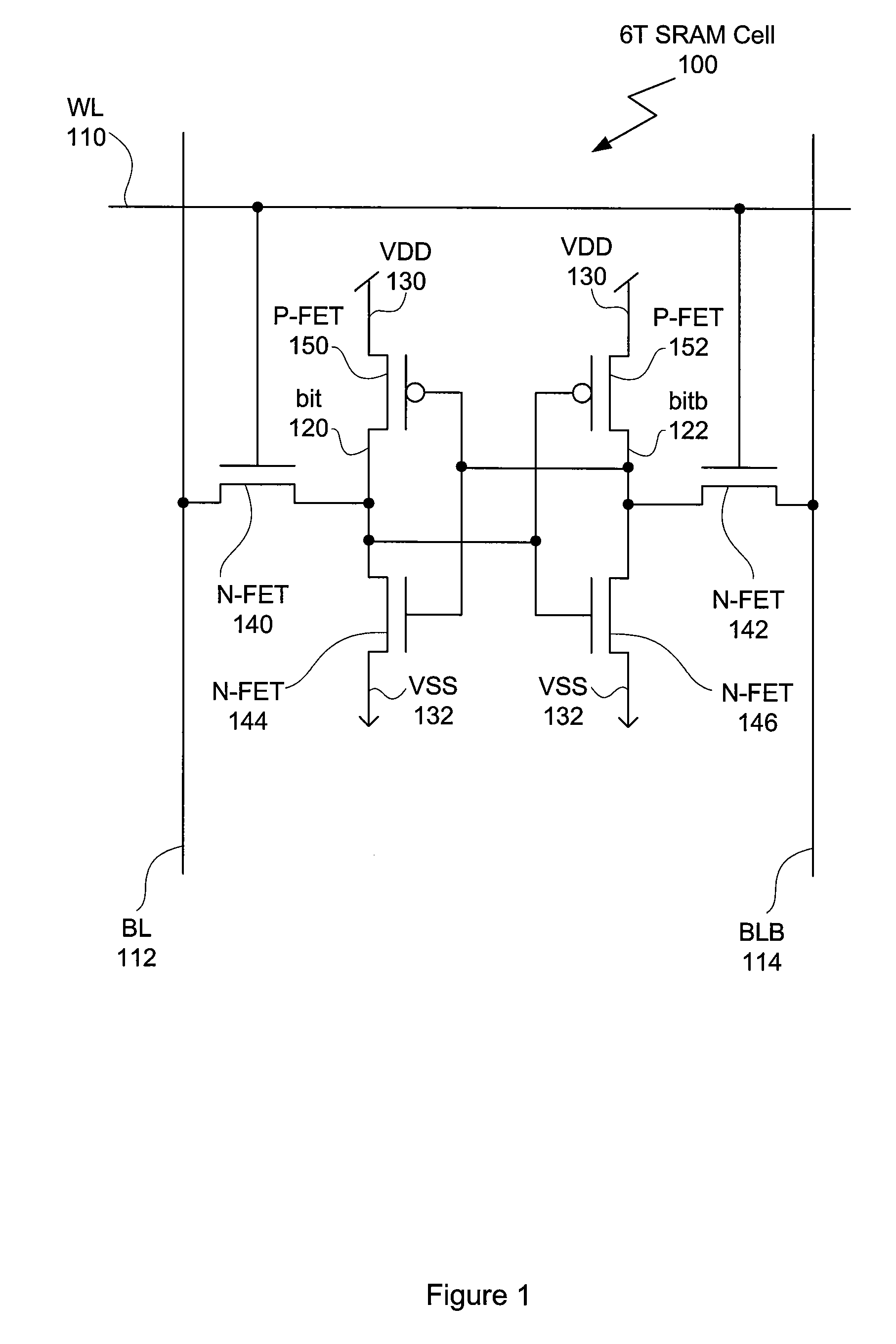

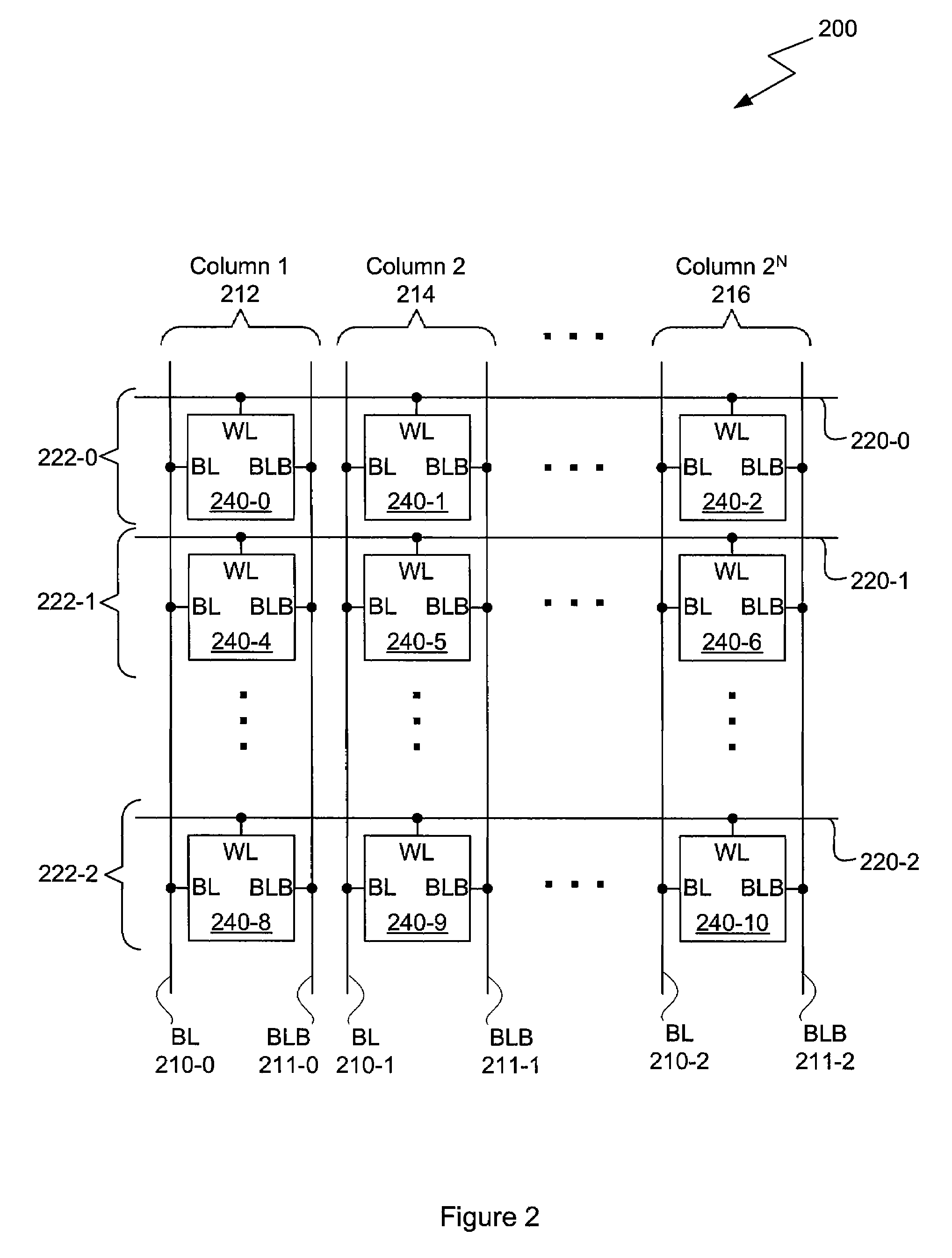

Sequentially-accessed 1R/1W double-pumped single port SRAM with shared decoder architecture

One embodiment of the present invention sets forth a synchronous two-port static random access memory (SRAM) design with the area efficiency of a one-port SRAM. By restricting both access ports to an edge-triggered, synchronous clocking regime, the internal timing of the SRAM can be optimized to allow high-performance double-pumped access to the SRAM storage cells. By double-pumping the SRAM storage cells, one read access and one write access are possible per clock cycle, allowing the SRAM to present two external ports, each capable of performing one transaction per clock cycle.

Owner:NVIDIA CORP
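
Functionally, double pumping splits each external clock cycle into two internal phases on the single physical port, one for the read and one for the write. The model below is a behavioral sketch only; it assumes read-before-write ordering within a cycle (so a same-address read returns the old data), which the abstract does not specify, and it ignores all timing.

```python
from typing import List, Optional, Tuple


class DoublePumpedSRAM:
    """Behavioral model of a 1R/1W memory built on a single-port array."""

    def __init__(self, depth: int, width_mask: int = 0xFFFFFFFF):
        self.cells: List[int] = [0] * depth
        self.mask = width_mask

    def _port_access(self, addr: int, data: Optional[int] = None) -> Optional[int]:
        """The one physical port: a read (data is None) or a write per phase."""
        if data is None:
            return self.cells[addr]
        self.cells[addr] = data & self.mask
        return None

    def clock(self, read_addr: Optional[int] = None,
              write: Optional[Tuple[int, int]] = None) -> Optional[int]:
        """One external cycle = two internal phases on the same port."""
        result = None
        if read_addr is not None:   # phase 1: read (assumed to come first)
            result = self._port_access(read_addr)
        if write is not None:       # phase 2: write
            addr, value = write
            self._port_access(addr, value)
        return result
```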

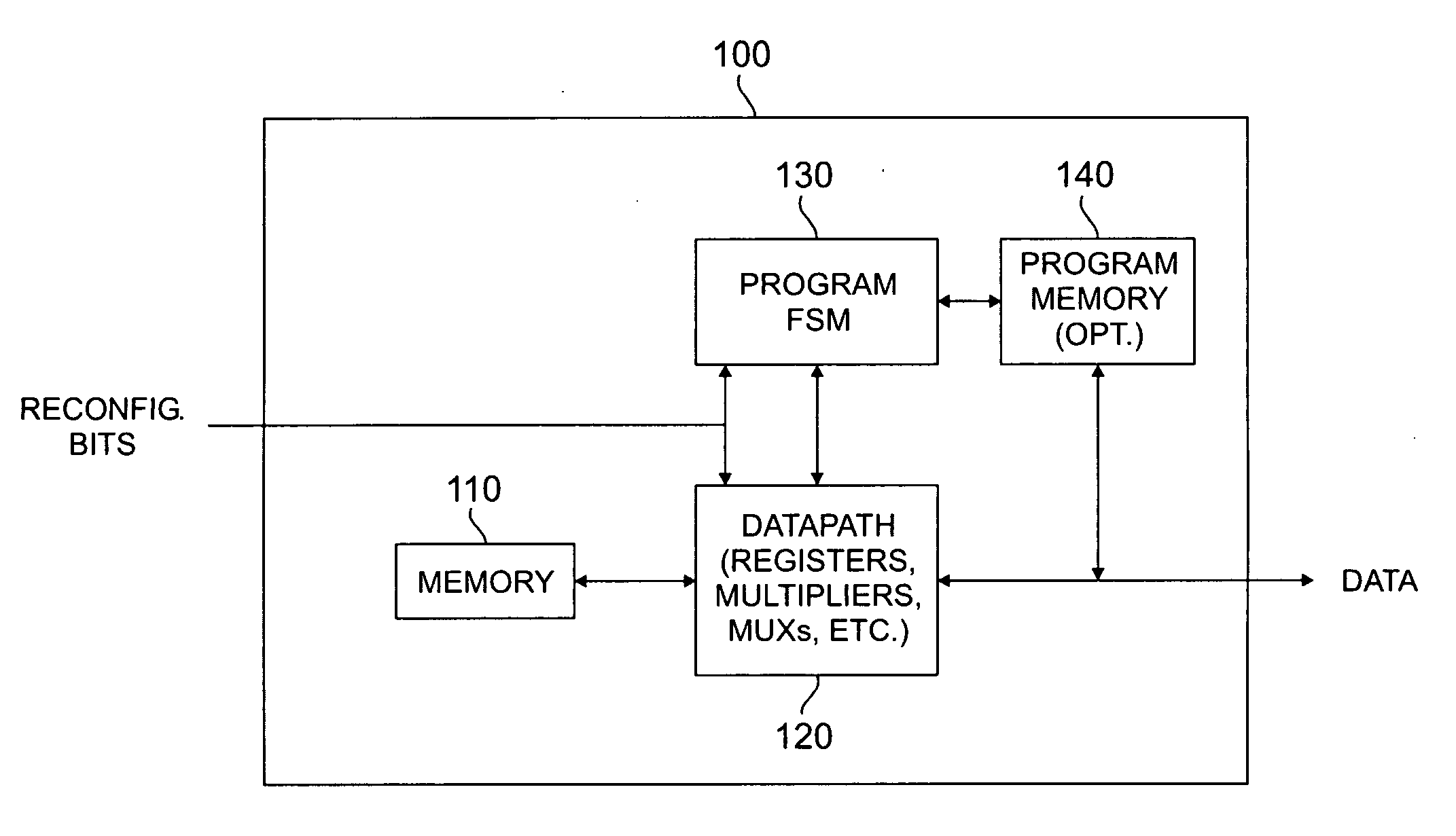

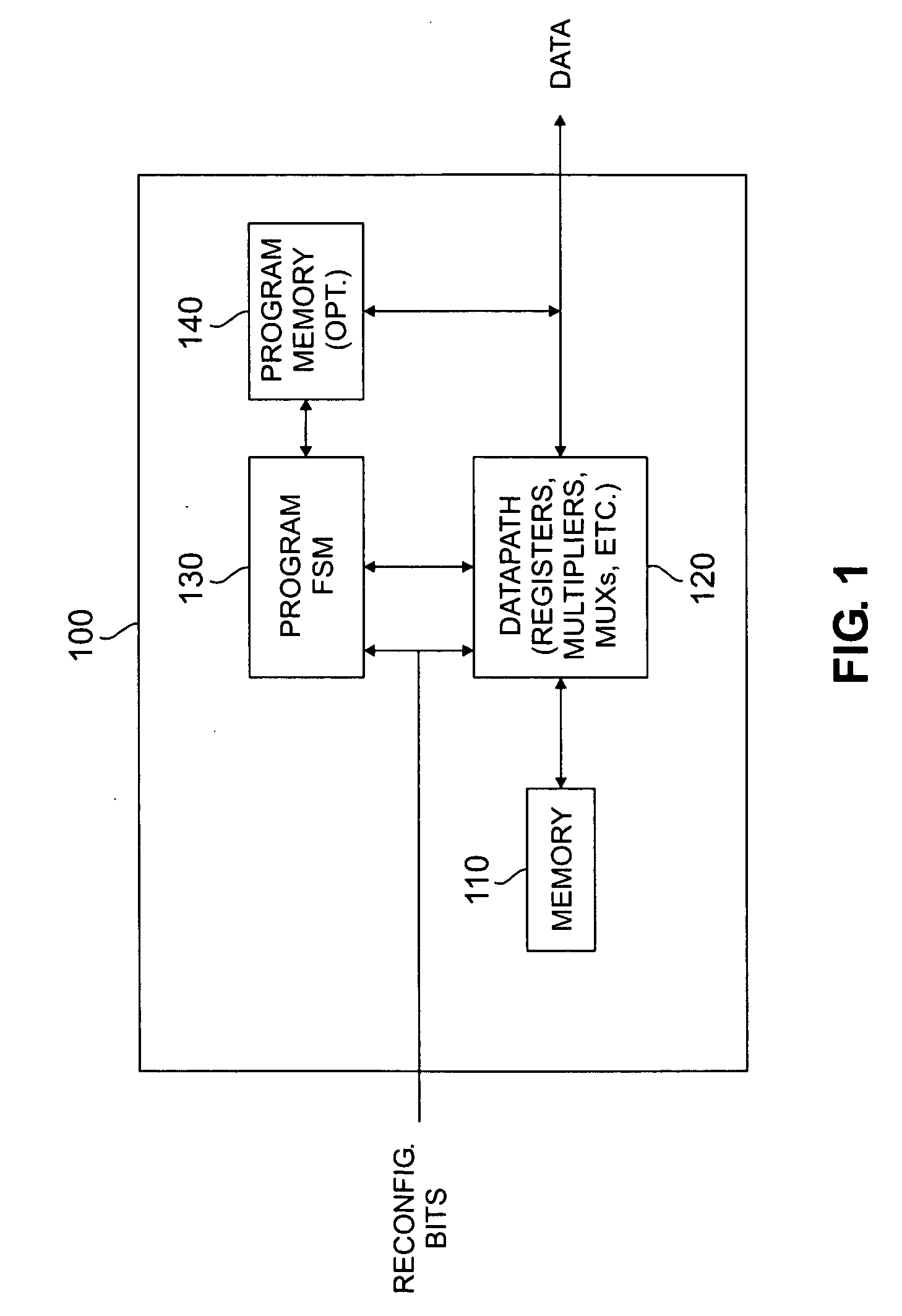

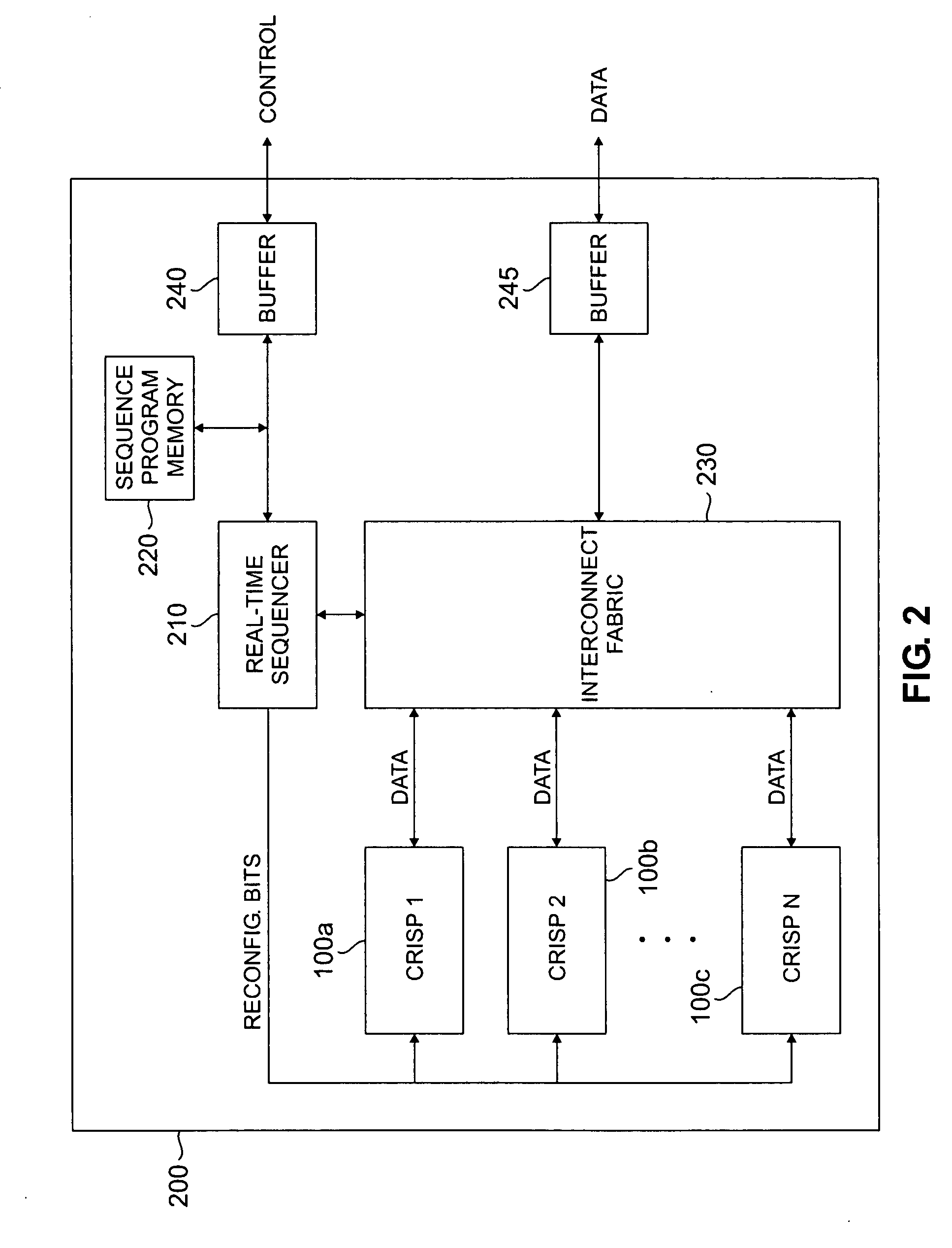

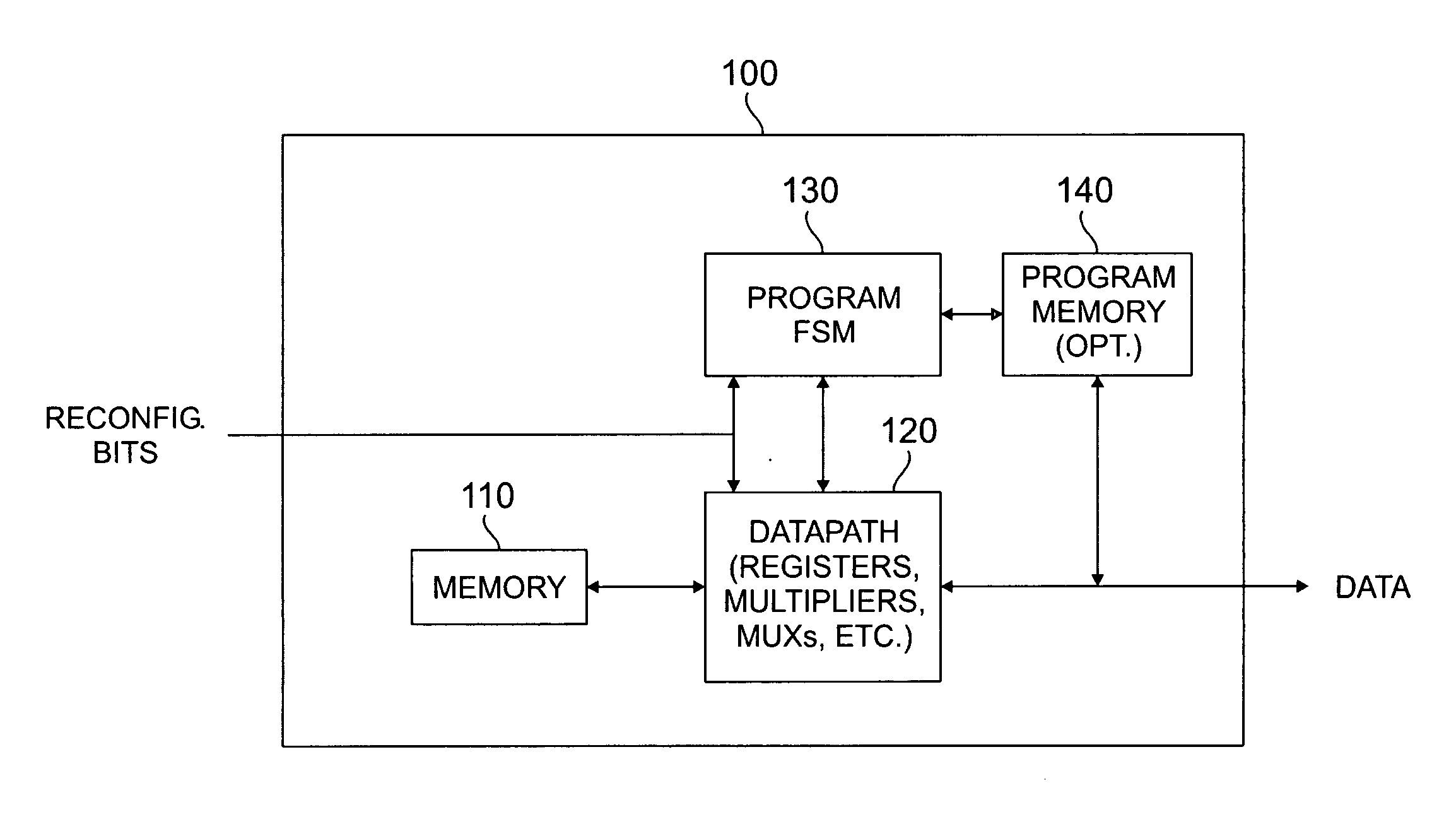

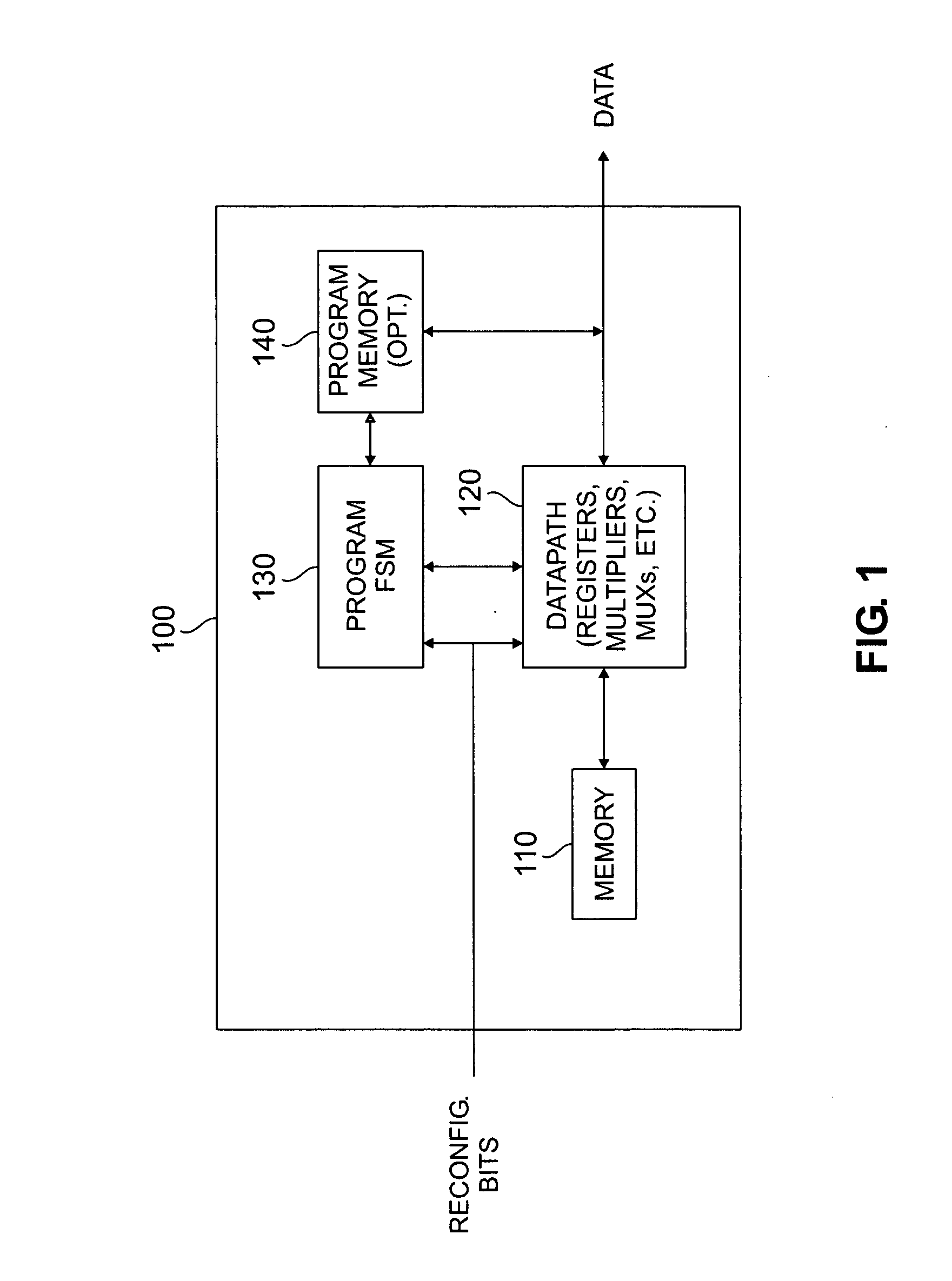

Viterbi decoder architecture for use in software-defined radio systems

Inactive · US20060195773A1 · Data representation error detection/correction; Error prevention; Multiple context; Computer architecture

A reconfigurable Viterbi decoder comprising a reconfigurable data path and a programmable finite state machine that controls the reconfigurable data path. The reconfigurable data path comprises a plurality of reconfigurable functional blocks including: i) a reconfigurable branch metric calculation block; and ii) a reconfigurable add-compare-select and path metric calculation block. The programmable finite state machine executes a plurality of context-related instructions associated with the reconfigurable Viterbi decoder.

Owner:SAMSUNG ELECTRONICS CO LTD
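
To show what the branch metric and add-compare-select blocks compute, here is a fixed (not reconfigurable) hard-decision Viterbi decoder in Python. The code parameters, constraint length 3 with generators 7 and 5 (octal), are an assumed example; the patent's programmable finite state machine and context switching are not modeled.

```python
from typing import List, Tuple

K = 3                   # constraint length (assumed example)
GENS = (0b111, 0b101)   # generator polynomials 7 and 5 (octal), assumed
N_STATES = 1 << (K - 1)


def _outputs(state: int, bit: int) -> Tuple[int, ...]:
    """Encoder output bits when `bit` enters a shift register holding `state`."""
    reg = (bit << (K - 1)) | state
    return tuple(bin(reg & g).count("1") & 1 for g in GENS)


def encode(bits: List[int]) -> List[int]:
    """Rate-1/2 convolutional encoder starting from the all-zero state."""
    state, out = 0, []
    for b in bits:
        out.extend(_outputs(state, b))
        state = ((b << (K - 1)) | state) >> 1
    return out


def viterbi_decode(received: List[int]) -> List[int]:
    """Hard-decision Viterbi decoding with Hamming-distance branch metrics."""
    inf = float("inf")
    metrics = [0.0] + [inf] * (N_STATES - 1)  # encoder starts in state 0
    history = []                              # survivor (prev_state, bit) per step
    for t in range(0, len(received), len(GENS)):
        r = received[t:t + len(GENS)]
        new_metrics = [inf] * N_STATES
        survivors = [(0, 0)] * N_STATES
        for state in range(N_STATES):
            if metrics[state] == inf:
                continue
            for bit in (0, 1):
                nxt = ((bit << (K - 1)) | state) >> 1
                # Branch metric: Hamming distance to the expected output pair.
                bm = sum(a != b for a, b in zip(_outputs(state, bit), r))
                cand = metrics[state] + bm
                # Add-compare-select: keep the better path into `nxt`.
                if cand < new_metrics[nxt]:
                    new_metrics[nxt] = cand
                    survivors[nxt] = (state, bit)
        metrics = new_metrics
        history.append(survivors)
    # Traceback from the best final state.
    state = min(range(N_STATES), key=lambda s: metrics[s])
    bits = []
    for survivors in reversed(history):
        prev, bit = survivors[state]
        bits.append(bit)
        state = prev
    return bits[::-1]
```

In a reconfigurable design, the generator polynomials, constraint length and metric widths above would become run-time parameters of the data path rather than constants.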

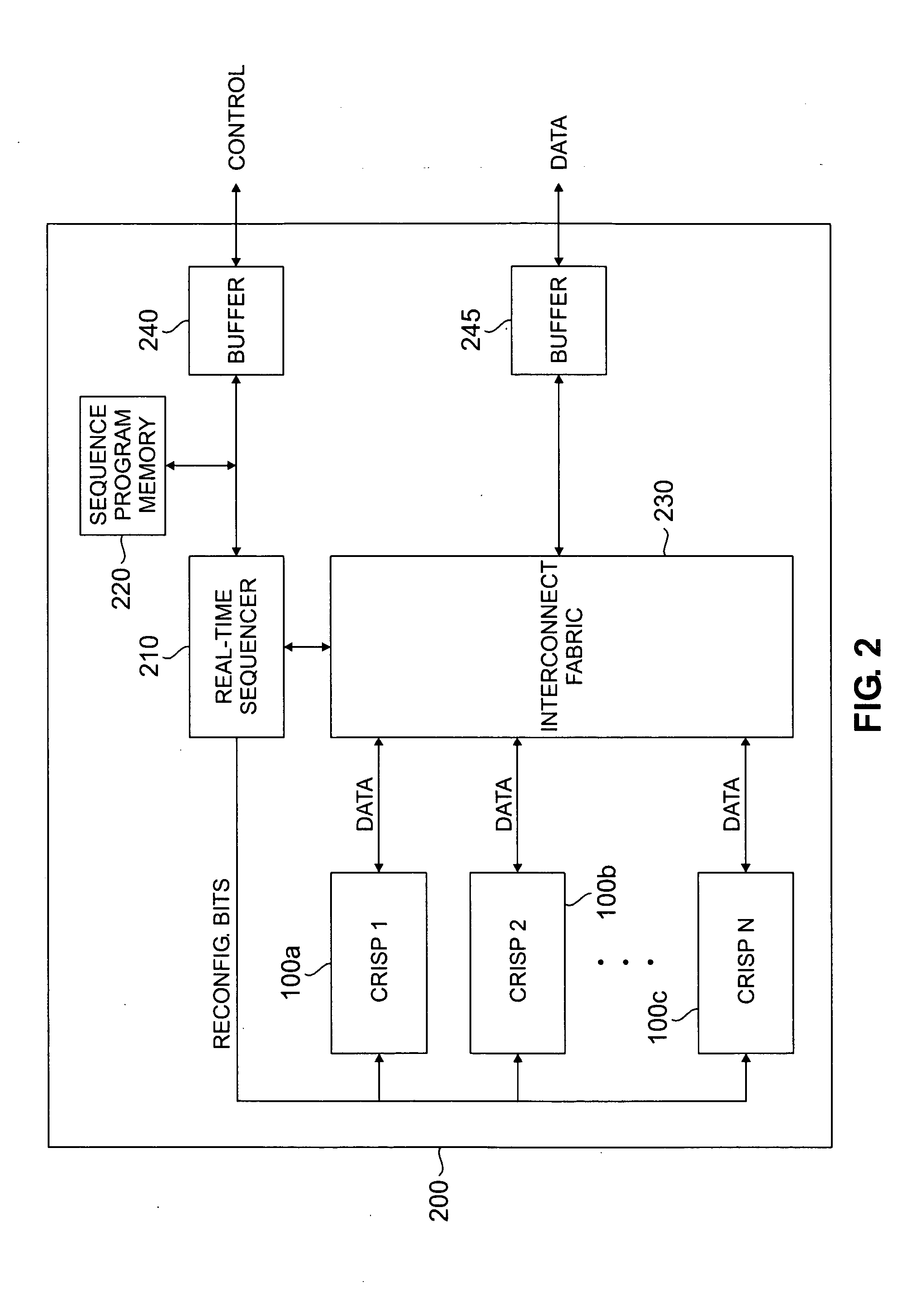

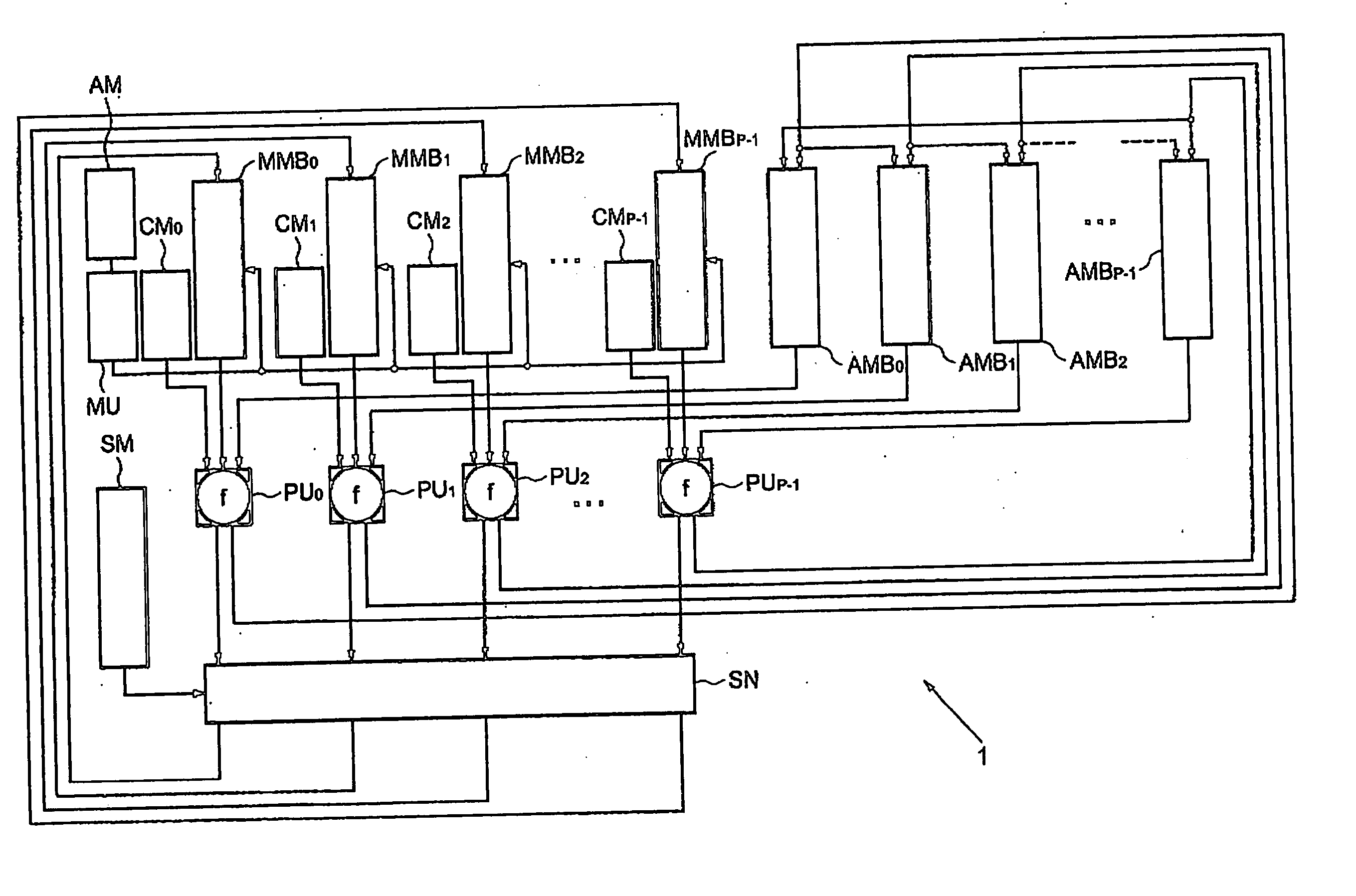

Turbo decoder architecture for use in software-defined radio systems

Active · US20060184855A1 · Data representation error detection/correction; Other decoding techniques; Software defined radio; Data rate

A reconfigurable turbo decoder comprising N processing units. Each of the N processing units receives soft input data samples and decodes the received soft input data samples. The N processing units operate independently such that a first processing unit may be selected to decode the received soft input data samples while a second processing unit may be disabled. The number of processing units selected to decode the soft input data samples is determined by a data rate of the received soft input data samples. The reconfigurable turbo decoder also comprises N input data memories that store the received soft input data samples and N extrinsic information memories that store extrinsic information generated by the N processing units. Each of the N processing units is capable of reading from and writing to each of the N input data memories and each of the N extrinsic information memories.

Owner:SAMSUNG ELECTRONICS CO LTD
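
The rule that the number of active processing units follows the input data rate can be sketched as a ceiling division capped at N. The per-unit throughput figure is a hypothetical parameter; the abstract does not say how units are selected beyond depending on the data rate.

```python
import math


def active_units(data_rate_mbps: float, unit_throughput_mbps: float, n_units: int) -> int:
    """Number of turbo processing units to enable for a given input data rate.

    Hypothetical rule: enable just enough units to sustain the rate,
    never more than the N units available; the rest stay disabled.
    """
    if data_rate_mbps <= 0:
        return 0
    needed = math.ceil(data_rate_mbps / unit_throughput_mbps)
    return min(n_units, needed)
```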

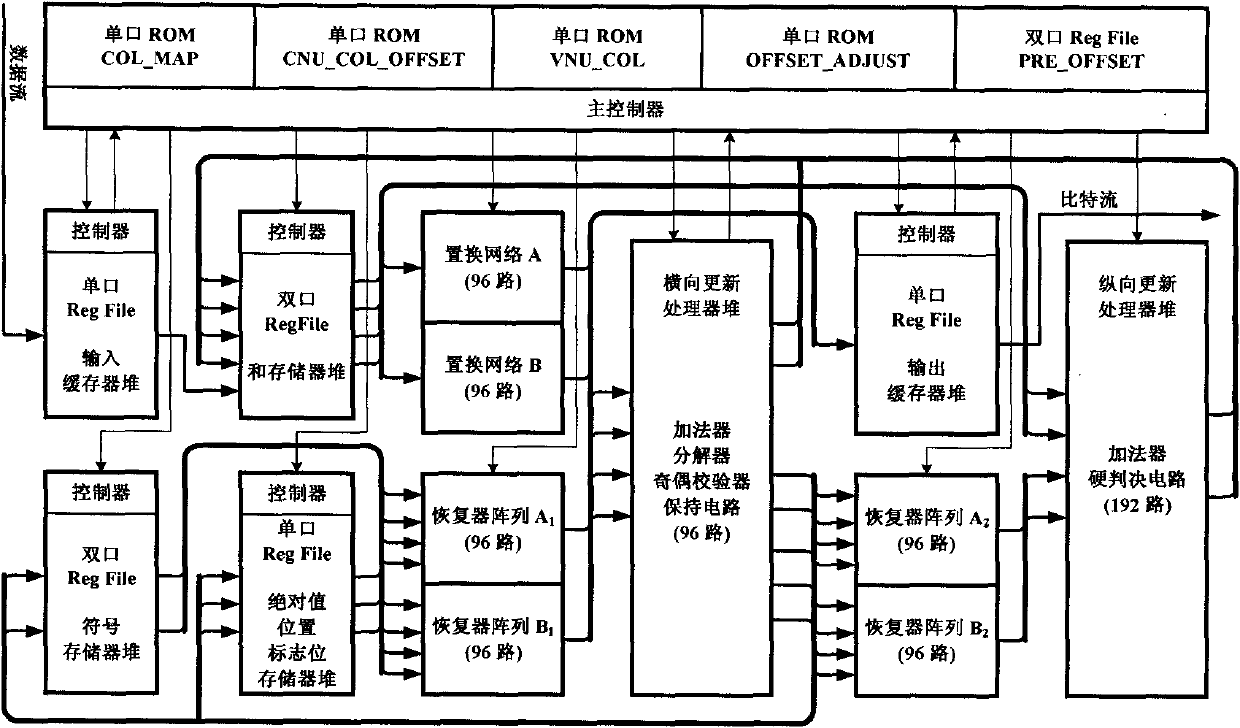

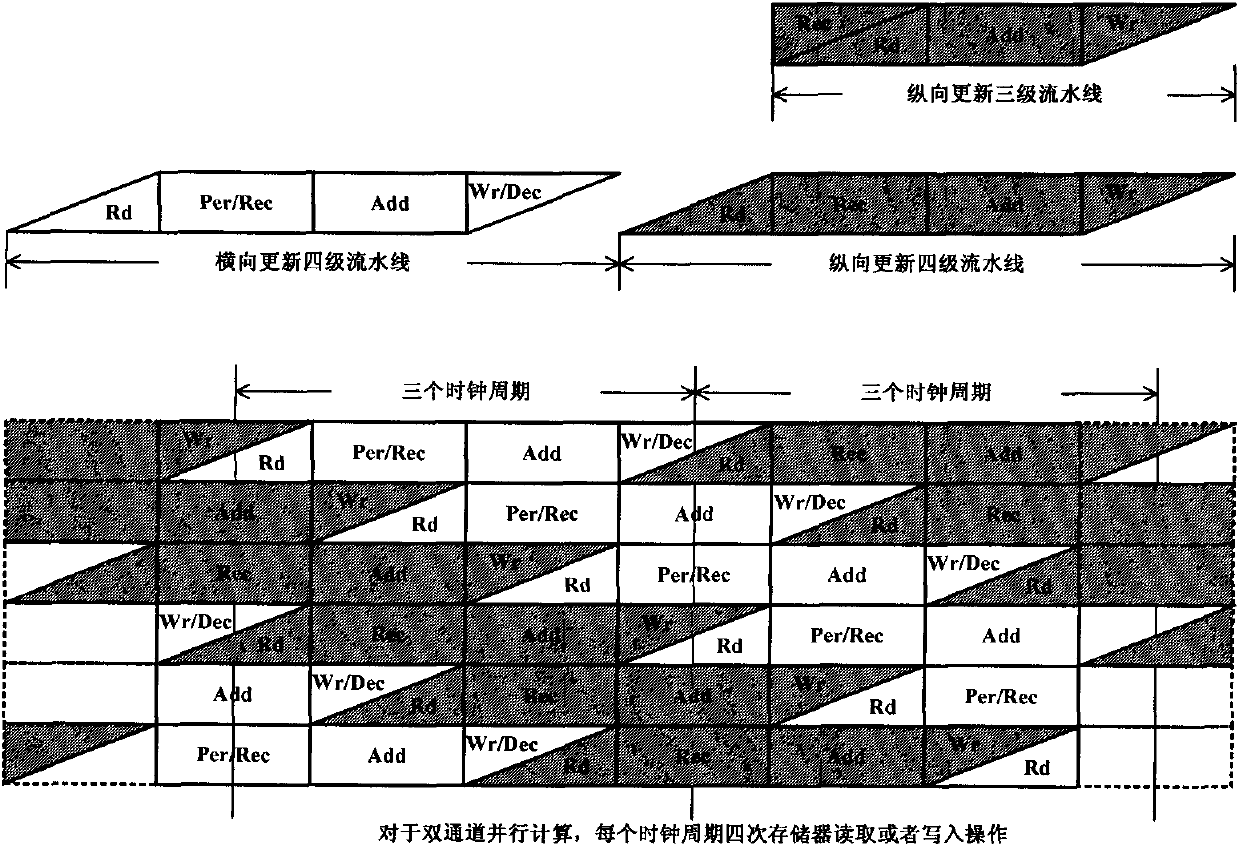

Ultrahigh-speed and low-power-consumption QC-LDPC code decoder based on TDMP

Inactive · CN101771421A · Reduce visits; Avoid access violations; Error correction/detection using multiple parity bits; Computer architecture; Parallel computing

The invention belongs to the technical fields of wireless communication and microelectronics, and specifically concerns an ultrahigh-speed, low-power quasi-cyclic low-density parity-check (QC-LDPC) code decoder based on TDMP. It uses a symmetric six-stage pipeline, interleaving of row blocks and column blocks, reordering of nonzero submatrices, four-quadrant partitioning of the sum-value register file, and read/write bypassing. The decoder scans serially in row order and processes two nonzero submatrices per clock cycle during both horizontal and vertical updating, and the horizontal and vertical updates fully overlap. In particular, the sum-value register file stores the variable-node sum values and also serves as a FIFO for the transient extrinsic information passed between the two phases. The decoder structure is highly configurable and can easily be ported to other regular or irregular QC-LDPC codes. It offers good decoding performance: the peak clock frequency reaches 214 MHz, throughput reaches about 1 Gb/s, and the chip consumes only 397 mW.

Owner:FUDAN UNIV
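
The reported peak figures imply roughly 4.7 decoded bits per clock cycle and about 397 pJ per decoded bit, assuming the throughput and power numbers refer to the same operating point (the abstract does not say so explicitly):

```latex
\frac{1 \times 10^{9}\ \text{bit/s}}{214 \times 10^{6}\ \text{cycle/s}} \approx 4.67\ \text{bits/cycle},
\qquad
E_b = \frac{397 \times 10^{-3}\ \text{W}}{1 \times 10^{9}\ \text{bit/s}}
    = 3.97 \times 10^{-10}\ \text{J/bit} \approx 397\ \text{pJ/bit}.
```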

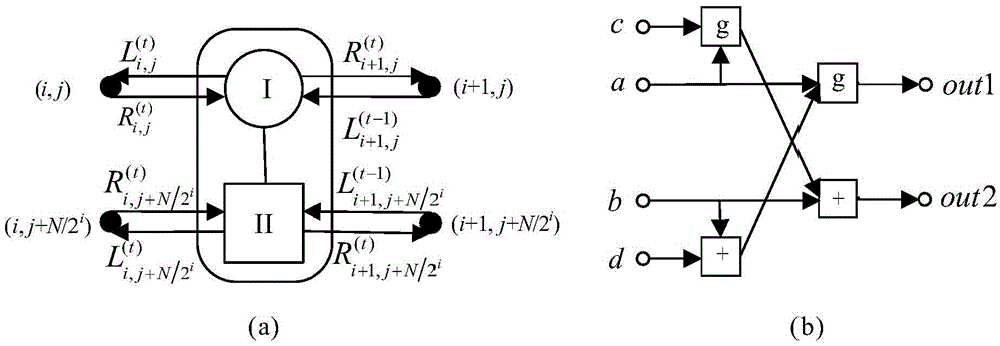

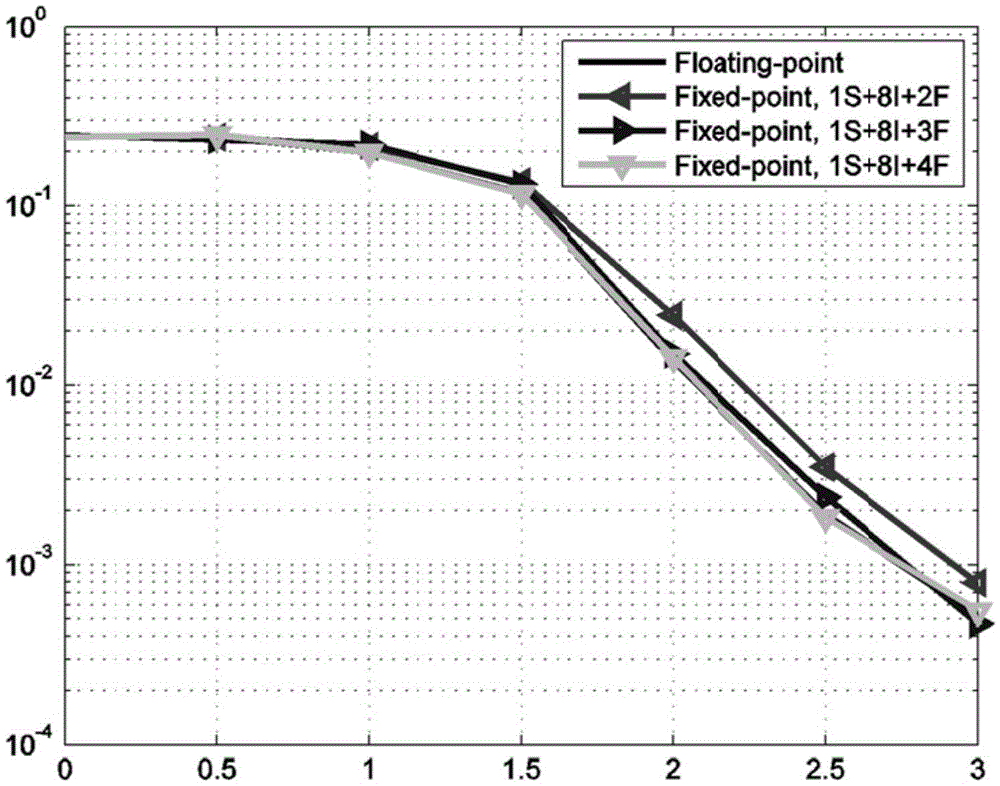

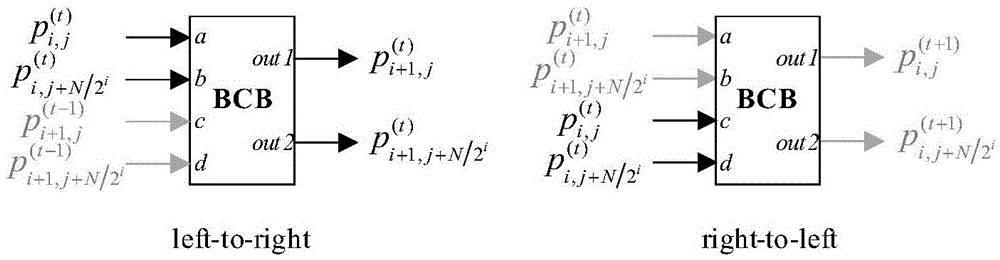

Assembly-line architecture of polarization code belief propagation decoder

Active · CN105634507A · Reduce complexity; Improve throughput; Code conversion; Cyclic codes; NODAL; Round complexity

The invention discloses a pipelined architecture for a polar code belief propagation (BP) decoder. The architecture comprises a BP decoder and a computation module, the BCB. The BP decoding algorithm iterates over an n-stage factor graph containing (n+1)N nodes, where N = 2^n is the code length. Each node holds two likelihood messages: taking the decoder input as the left end and its output as the right end, the first likelihood message is updated and propagated from left to right, and the second from right to left. The BCB performs message updating and propagation among four nodes in adjacent stages that are N/2 positions apart. The invention realizes a high-throughput, low-complexity BP decoder architecture for polar codes, reducing hardware implementation complexity and increasing processing speed.

Owner:SOUTHEAST UNIV
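
One processing element of polar-code BP decoding updates four nodes, two in each of two adjacent stages. The sketch below uses the widely used BP update equations with the min-sum approximation of the box-plus function; both the index convention and the min-sum choice are assumptions, since the abstract does not give the BCB's internal arithmetic. R messages travel left to right and L messages right to left, matching the two likelihood messages described above.

```python
import math
from typing import Tuple


def f_min_sum(x: float, y: float) -> float:
    """Min-sum approximation of box-plus, 2*atanh(tanh(x/2) * tanh(y/2))."""
    return math.copysign(1.0, x) * math.copysign(1.0, y) * min(abs(x), abs(y))


def bp_processing_element(l_up: float, l_low: float, r_up: float, r_low: float
                          ) -> Tuple[float, float, float, float]:
    """One butterfly (four nodes, two adjacent stages) of polar-code BP decoding.

    l_up, l_low: right-to-left LLRs arriving at the right-hand node pair.
    r_up, r_low: left-to-right LLRs arriving at the left-hand node pair.
    Returns (L for left upper, L for left lower, R for right upper, R for right lower).
    """
    l_left_up = f_min_sum(l_up, l_low + r_low)
    l_left_low = f_min_sum(r_up, l_up) + l_low
    r_right_up = f_min_sum(r_up, l_low + r_low)
    r_right_low = f_min_sum(r_up, l_up) + r_low
    return l_left_up, l_left_low, r_right_up, r_right_low
```

A full decoder applies this element to every butterfly of every stage per iteration; frozen bits enter as very large positive R values at the leftmost stage.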

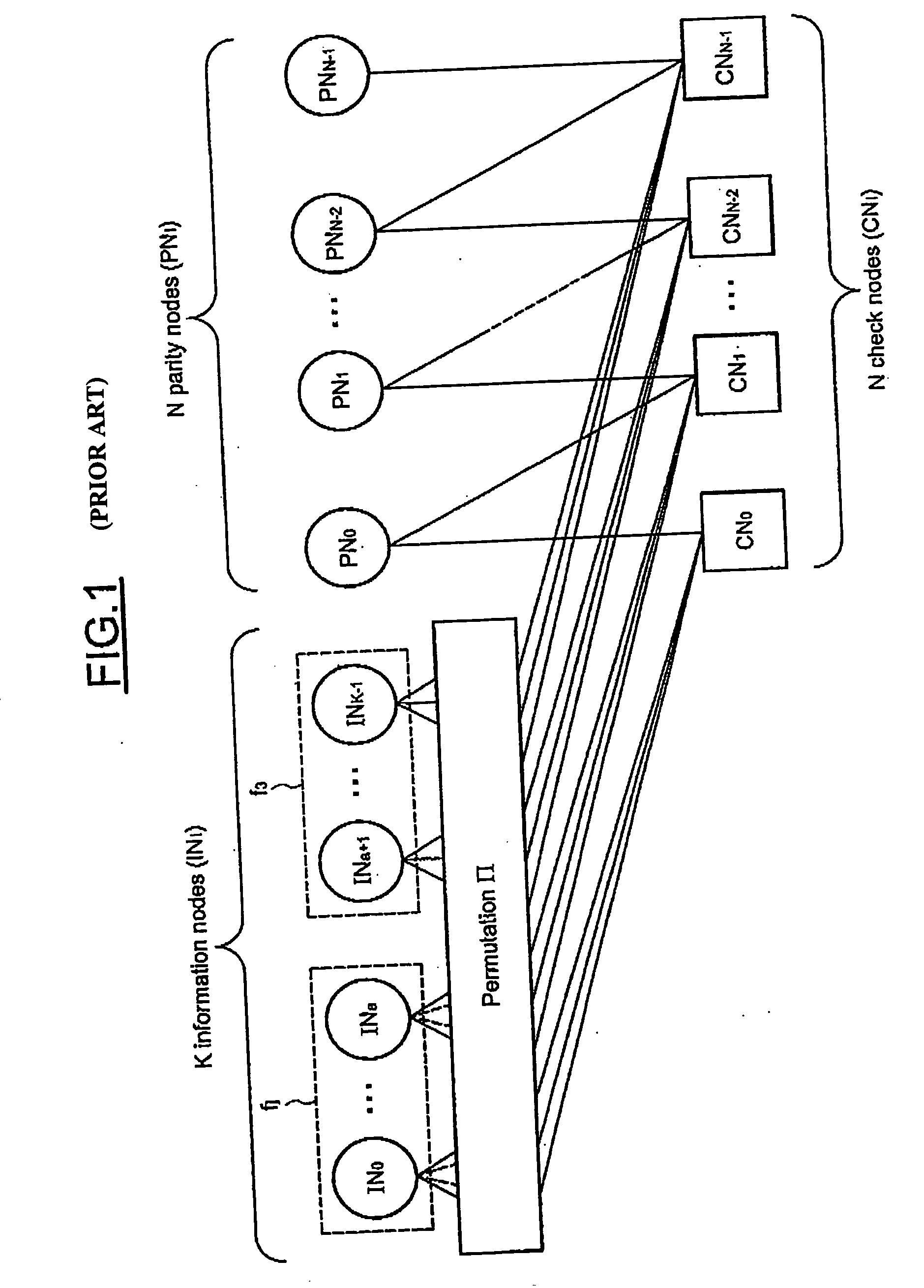

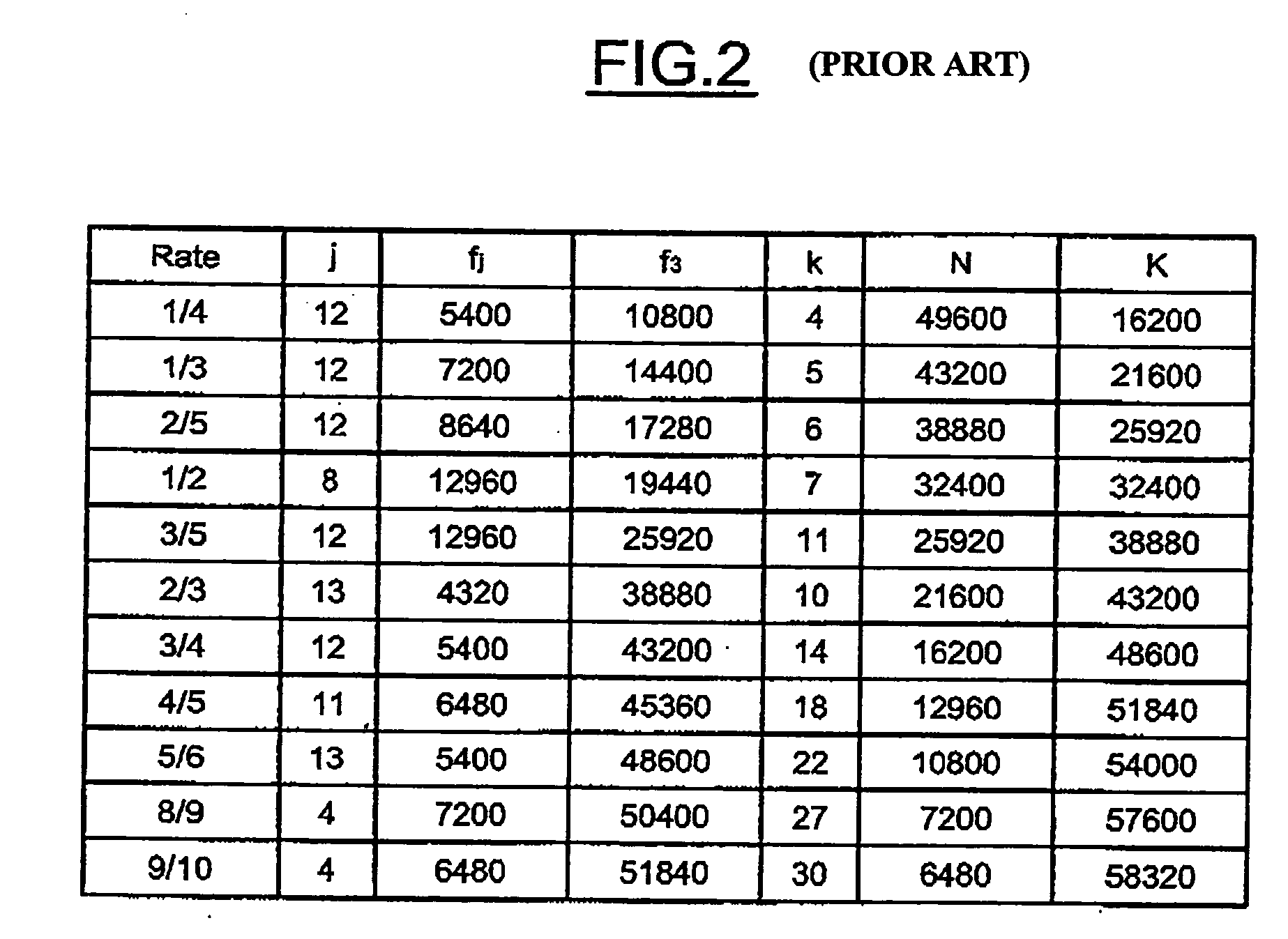

LDPC decoder for DVB-S2 decoding

Active · US20060206778A1 · Avoiding read/write conflict; Overcome problems; Read-only memories; Digital computer details; Computer architecture; Memory bank

The LDPC decoder includes a processor for updating the messages exchanged iteratively between the variable nodes and check nodes of the bipartite graph of the LDPC code. The decoder architecture is a partly parallel architecture clocked by a clock signal. The processor includes P processing units. First variable nodes and check nodes are mapped onto the P processing units along two orthogonal directions. The decoder includes P main memory banks, assigned to the P processing units, for storing all the messages iteratively exchanged between the first variable nodes and the check nodes. Each main memory bank includes at least two single-port memory partitions and one buffer. The decoder also includes a shuffling network and a shift memory.

Owner:STMICROELECTRONICS INT NV
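
The abstract does not state which message-update rule the processing units apply. Min-sum is a common choice in hardware LDPC decoders, so the sketch below assumes it for the check nodes and uses the standard sum rule for the variable nodes, with all messages as log-likelihood ratios.

```python
from typing import List


def check_node_update(incoming: List[float]) -> List[float]:
    """Min-sum check-node update.

    Each outgoing message is the product of the signs of all *other*
    incoming LLRs times the minimum of their magnitudes.
    """
    if len(incoming) < 2:
        raise ValueError("a check node needs at least two edges")
    mags = [abs(v) for v in incoming]
    # Track the two smallest magnitudes so each edge can exclude itself.
    order = sorted(range(len(mags)), key=mags.__getitem__)
    min1, min2 = mags[order[0]], mags[order[1]]
    total_sign = 1.0
    for v in incoming:
        total_sign *= -1.0 if v < 0 else 1.0
    out = []
    for i, v in enumerate(incoming):
        sign = total_sign * (-1.0 if v < 0 else 1.0)  # remove this edge's own sign
        mag = min2 if i == order[0] else min1
        out.append(sign * mag)
    return out


def variable_node_update(channel_llr: float, incoming: List[float]) -> List[float]:
    """Each outgoing message is the channel LLR plus all *other* incoming messages."""
    total = channel_llr + sum(incoming)
    return [total - m for m in incoming]
```

Keeping only the two smallest magnitudes and a sign vector is also what lets hardware compress check-node messages, which reduces the memory traffic the banked architecture above has to serve.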

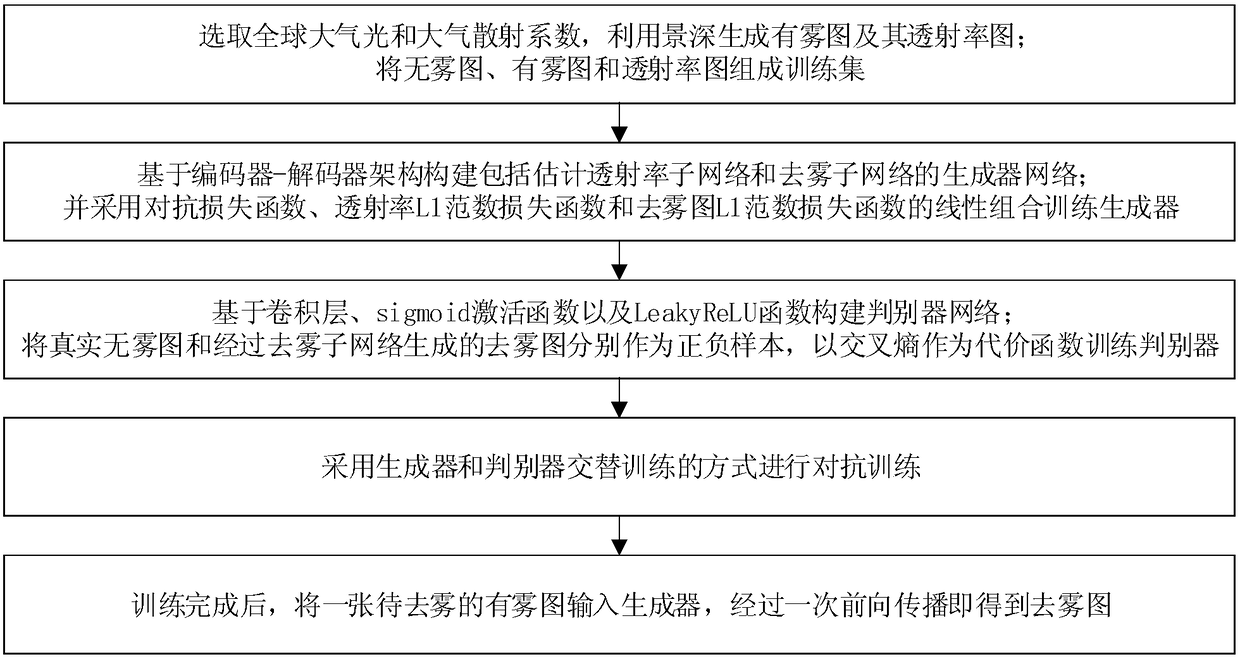

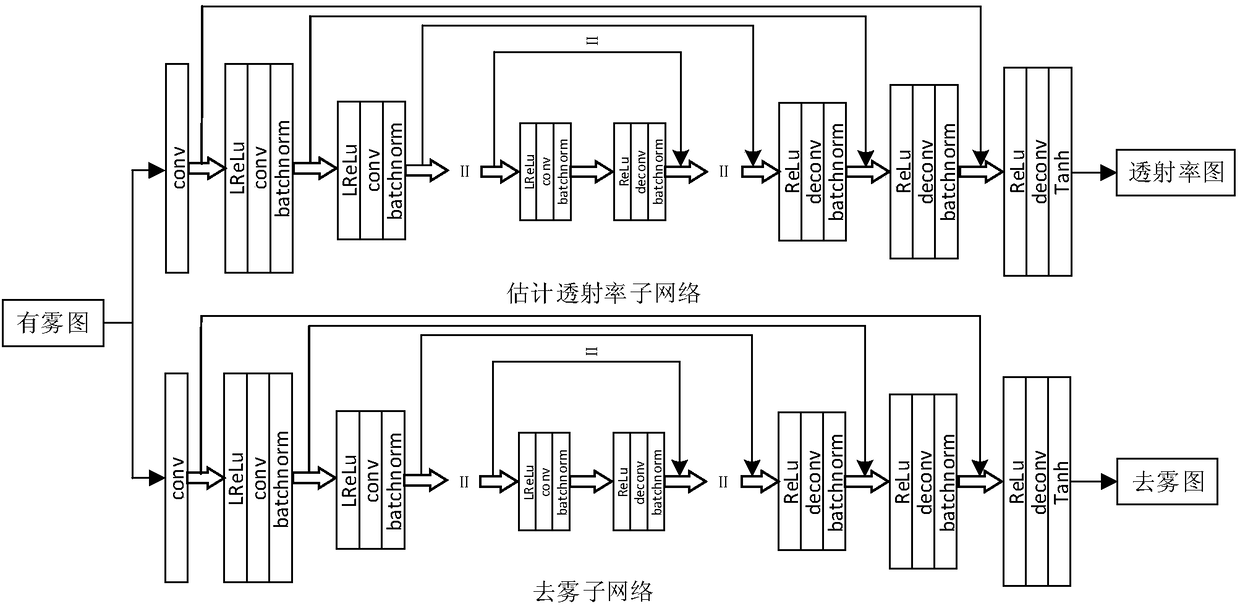

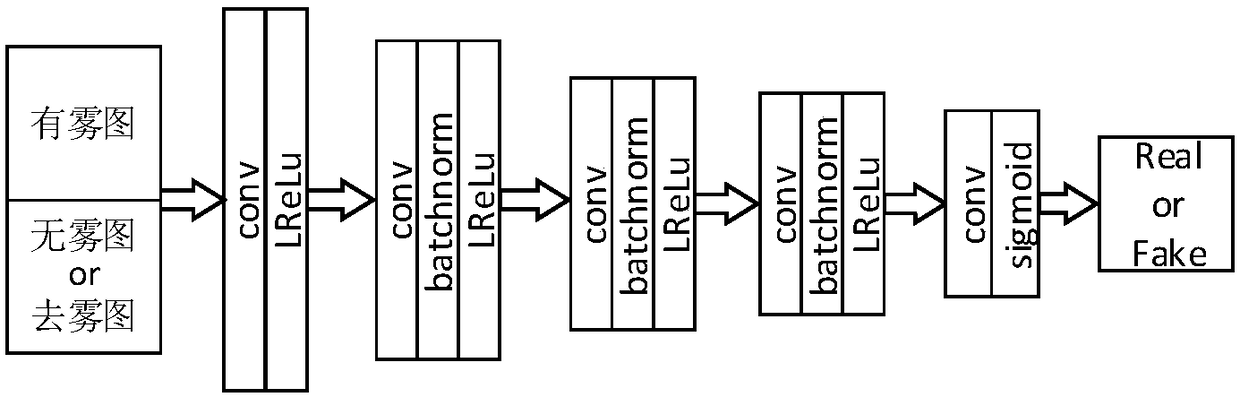

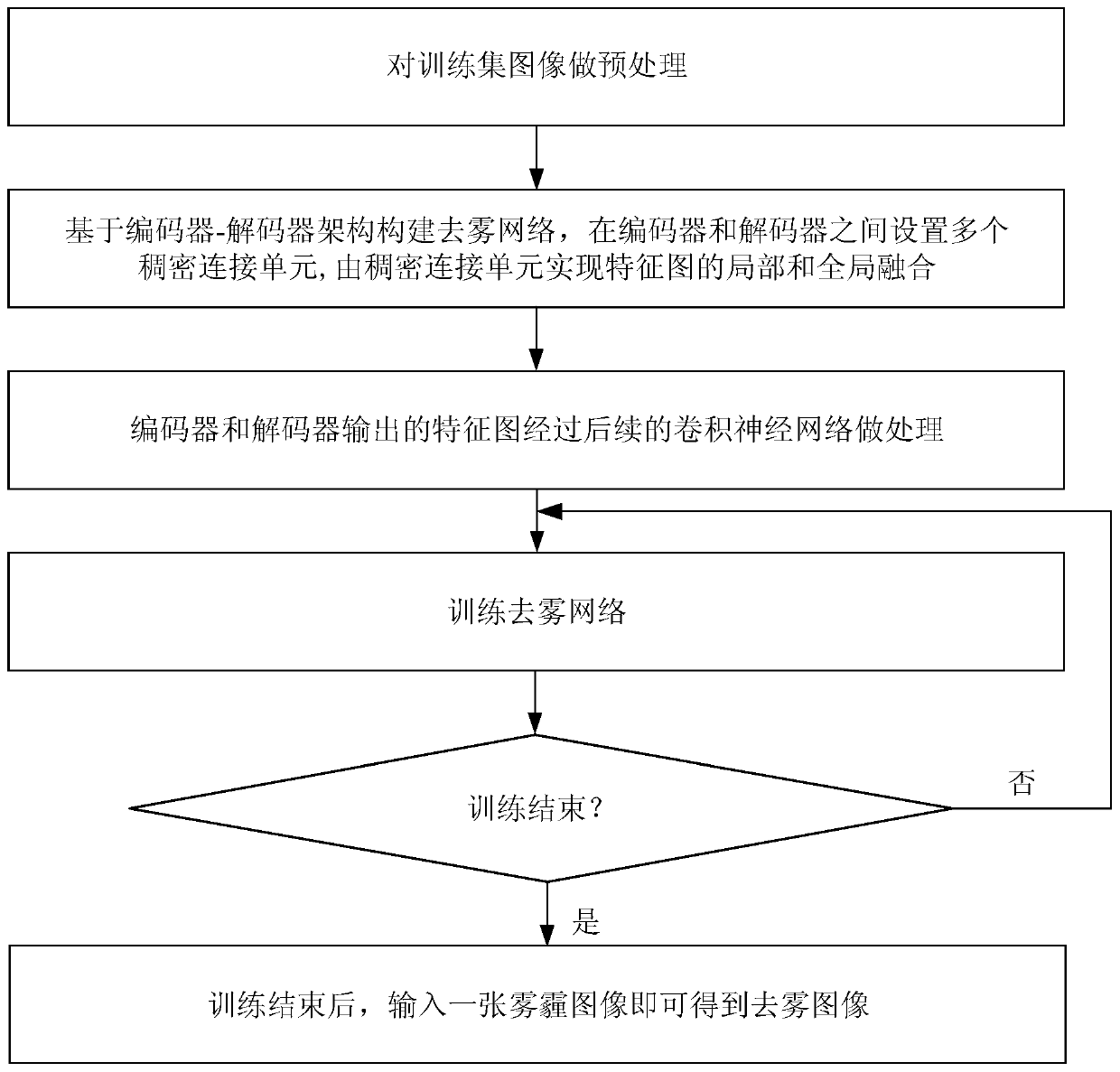

An image de-fog method based on depth neural network

Active · CN109472818A · Simple method; High defogging efficiency; Image enhancement; Image analysis; Discriminator; Depth of field

The invention discloses an image defogging method based on a deep neural network, comprising the following steps. Global atmospheric light and an atmospheric scattering coefficient are selected, and a hazy image and its transmittance map are generated from the scene depth. The training set consists of haze-free images, hazy images and transmittance maps. A generator network based on an encoder-decoder architecture is constructed, containing a transmittance-estimation subnetwork and a defogging subnetwork, and is trained with a linear combination of an adversarial loss, an L1-norm transmittance loss and an L1-norm defogging loss. A discriminator network is built from convolutional layers with sigmoid and LeakyReLU activation functions; real haze-free images and the defogged images produced by the defogging subnetwork serve as positive and negative samples, respectively, and cross-entropy is used as its cost function. The generator and discriminator are trained alternately in an adversarial manner. After training, a hazy image is fed into the generator and the defogged image is obtained with a single forward pass.

Owner:TIANJIN UNIV
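
The fog-synthesis step is most plausibly the standard atmospheric scattering model, I = J·t + A·(1 - t) with transmittance t = exp(-β·d), where A is the global atmospheric light, β the scattering coefficient and d the scene depth. The abstract names these quantities but not the formula, so treat this as the usual model rather than the patent's exact recipe. Pixel values are assumed to be floats in [0, 1], and images are plain nested lists to keep the sketch dependency-free.

```python
import math
from typing import List, Tuple

Image = List[List[float]]  # grayscale, values in [0, 1]


def synthesize_haze(clear: Image, depth: Image, airlight: float,
                    beta: float) -> Tuple[Image, Image]:
    """Return (hazy image, transmittance map) from a clear image and its depth map."""
    hazy, trans = [], []
    for row_j, row_d in zip(clear, depth):
        t_row = [math.exp(-beta * d) for d in row_d]
        hazy.append([j * t + airlight * (1.0 - t) for j, t in zip(row_j, t_row)])
        trans.append(t_row)
    return hazy, trans


def dehaze(hazy: Image, trans: Image, airlight: float, t_min: float = 0.1) -> Image:
    """Invert the model given a transmittance map: J = (I - A) / max(t, t_min) + A."""
    return [[(i - airlight) / max(t, t_min) + airlight for i, t in zip(ri, rt)]
            for ri, rt in zip(hazy, trans)]
```

The `t_min` floor is a common safeguard against amplifying noise in dense haze; in the patented method, the network learns both the transmittance estimate and the defogged output instead of applying this closed-form inversion.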

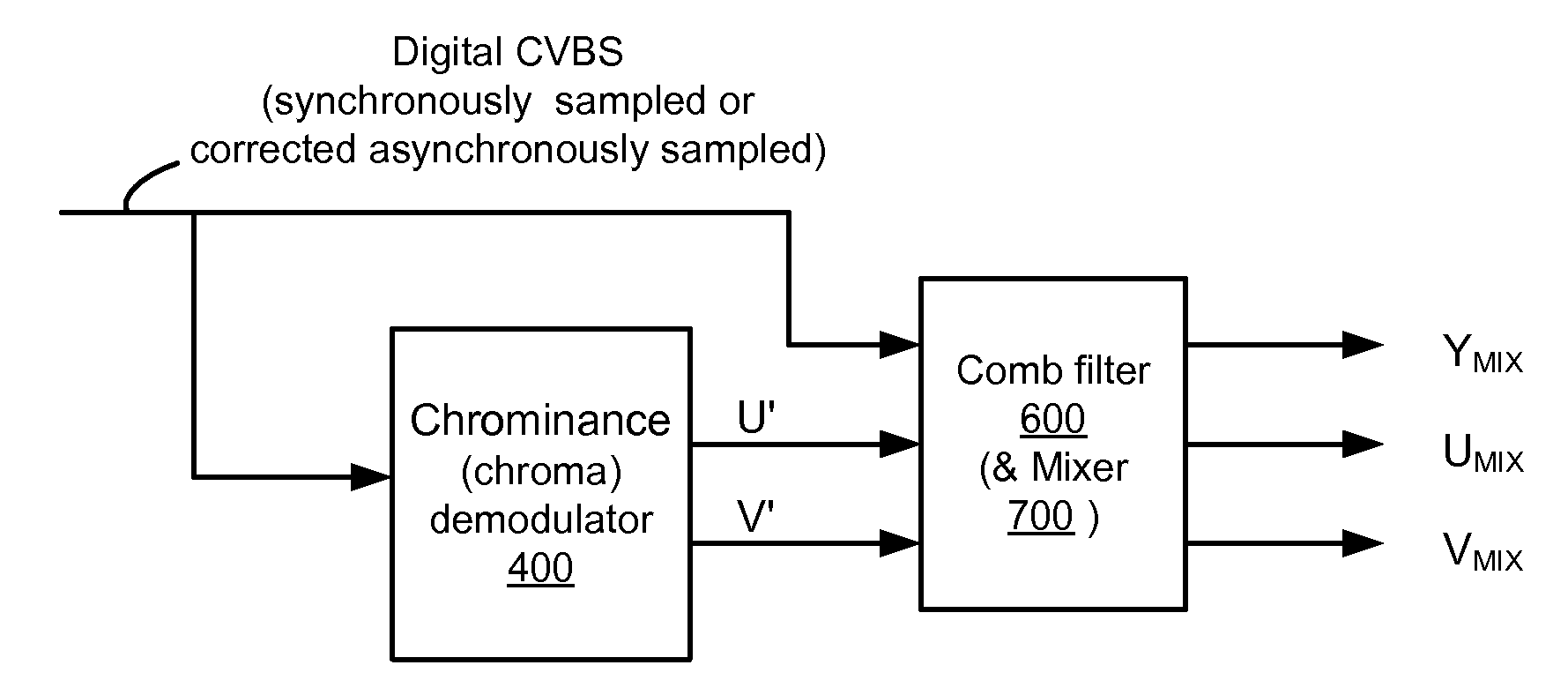

Digital Video Decoder Architecture

Active · US20090180027A1 · Color signal processing circuits; Brightness and chrominance signal processing circuits; Digital video; Computer graphics (images)

A digital video decoder architecture is provided wherein chrominance values are determined first, then luminance values are determined, in part, based on the previously determined chrominance values. In this architecture, luminance separation occurs after and based on

Owner:ZHANGJIAGANG KANGDE XIN OPTRONICS MATERIAL

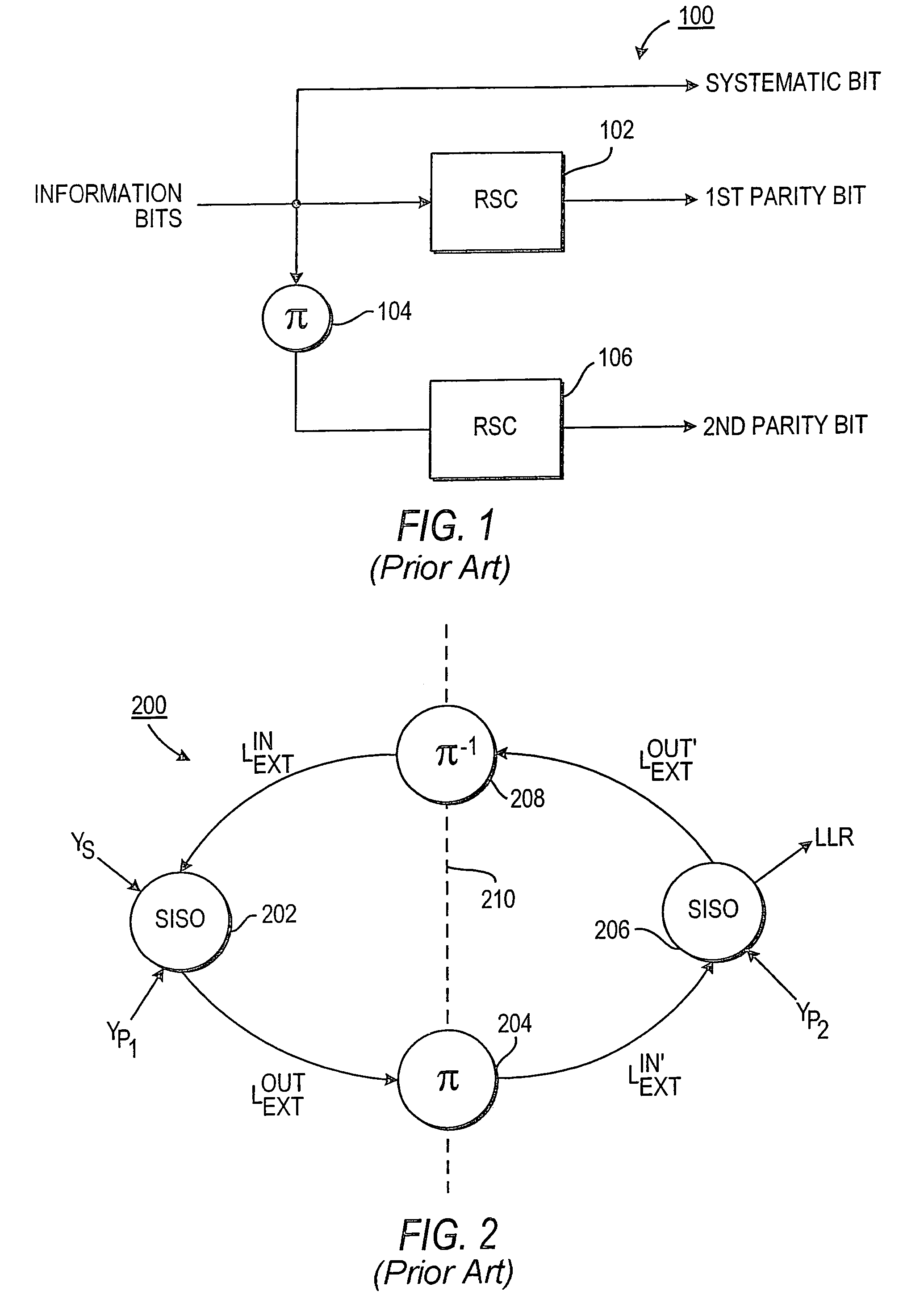

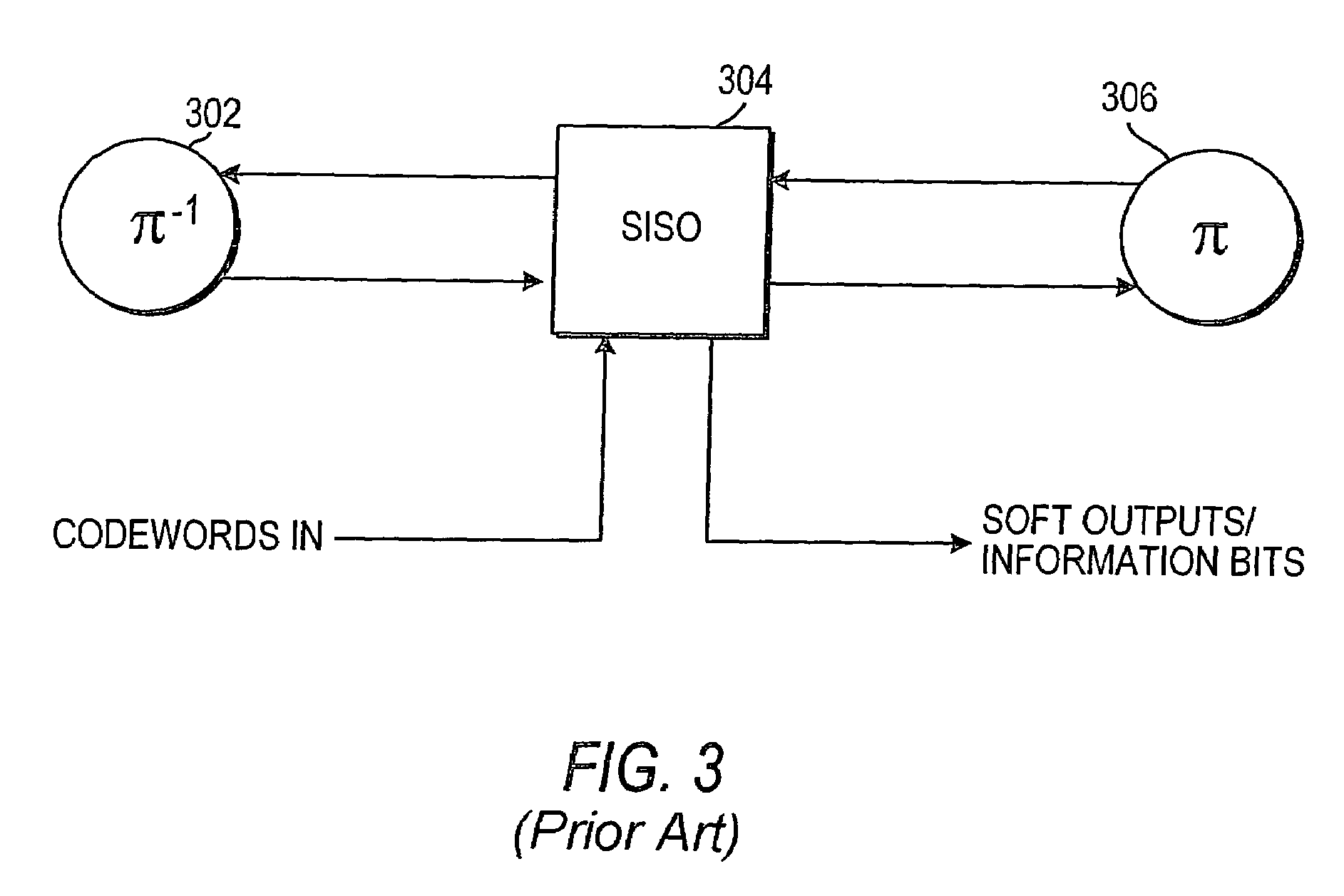

Unified serial/parallel concatenated convolutional code decoder architecture and method

Active · US7200798B2 · Data representation error detection/correction; Other decoding techniques; Computer hardware; Convolutional code

Owner:ALCATEL-LUCENT USA INC +1

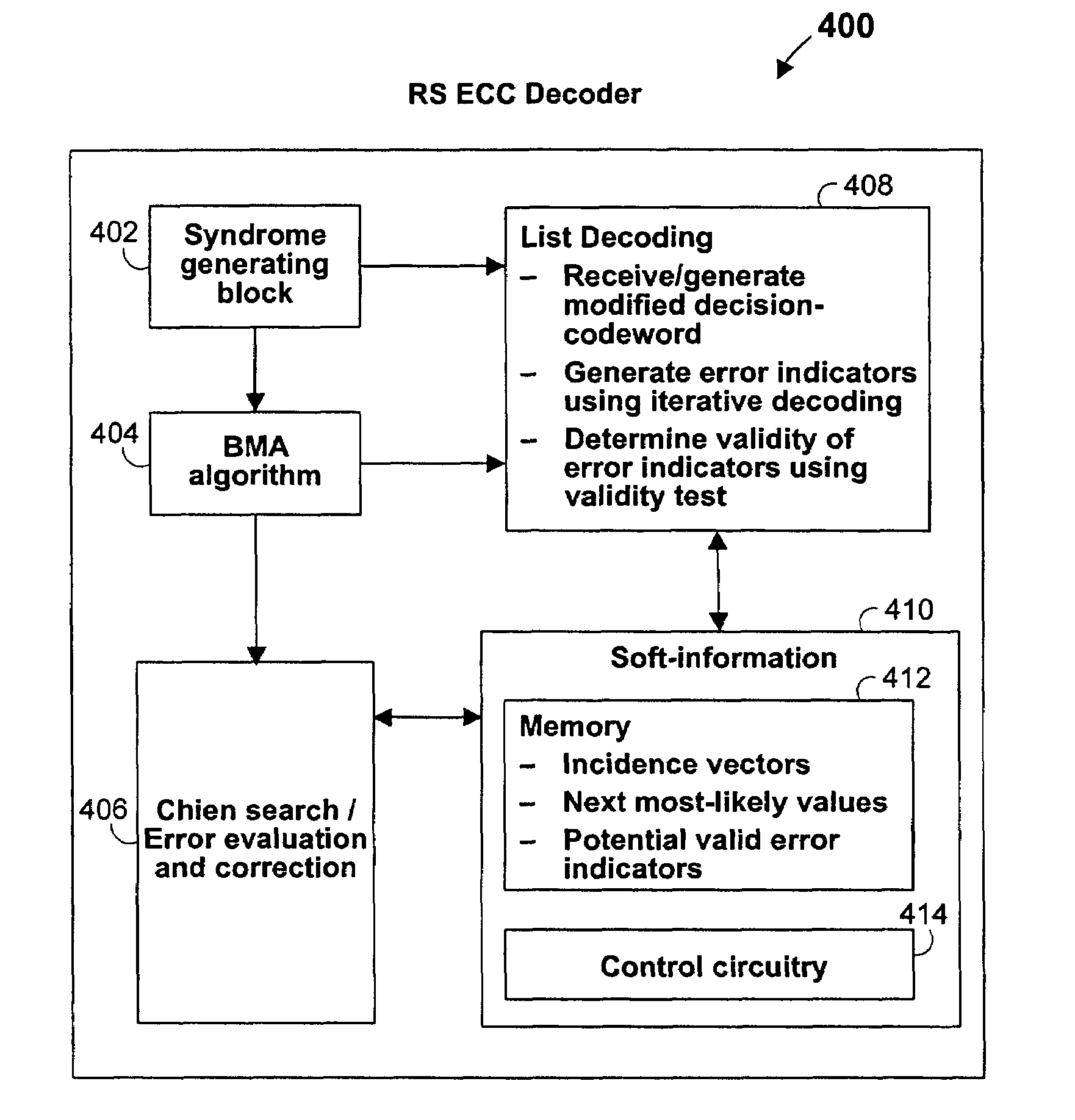

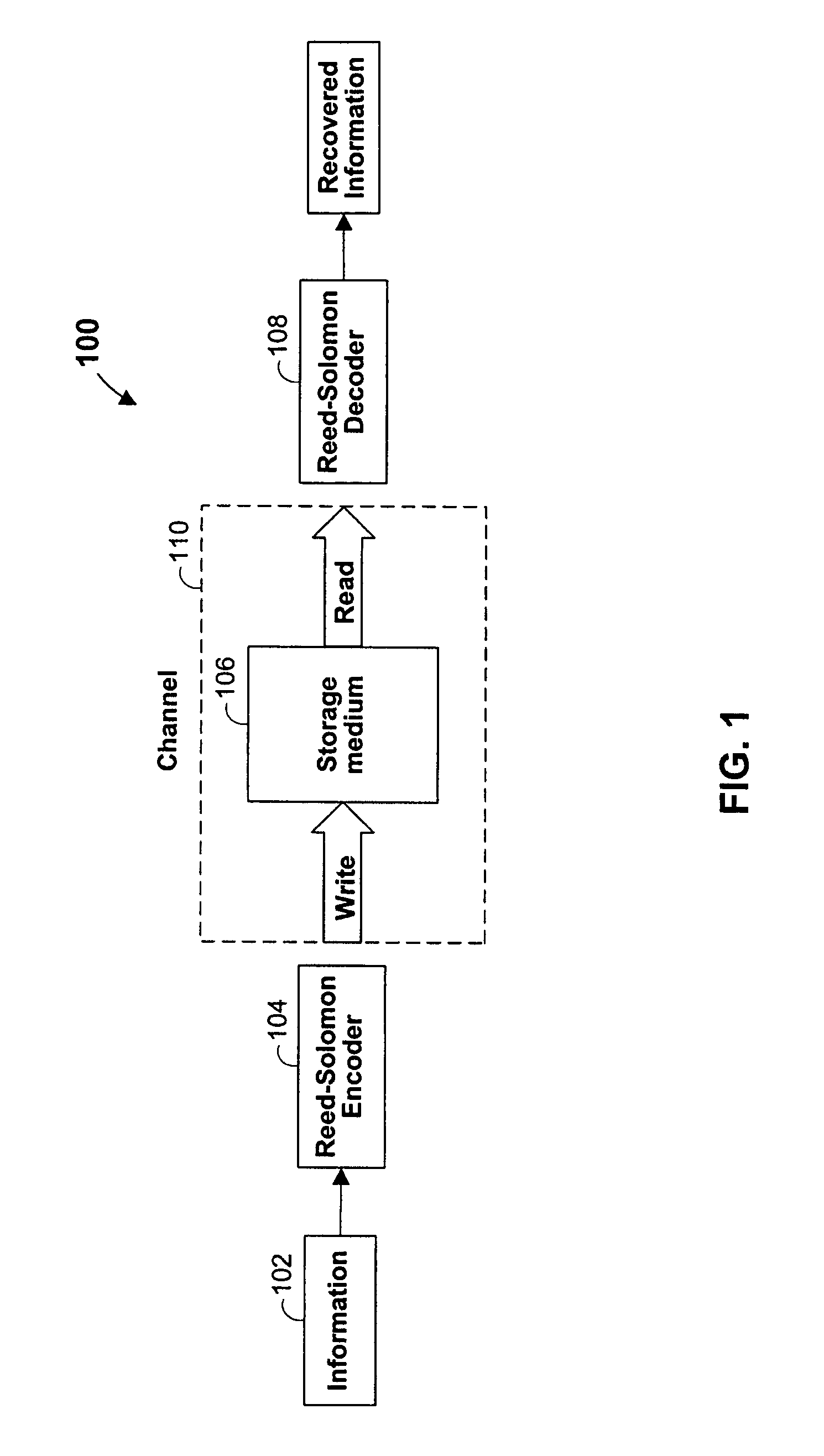

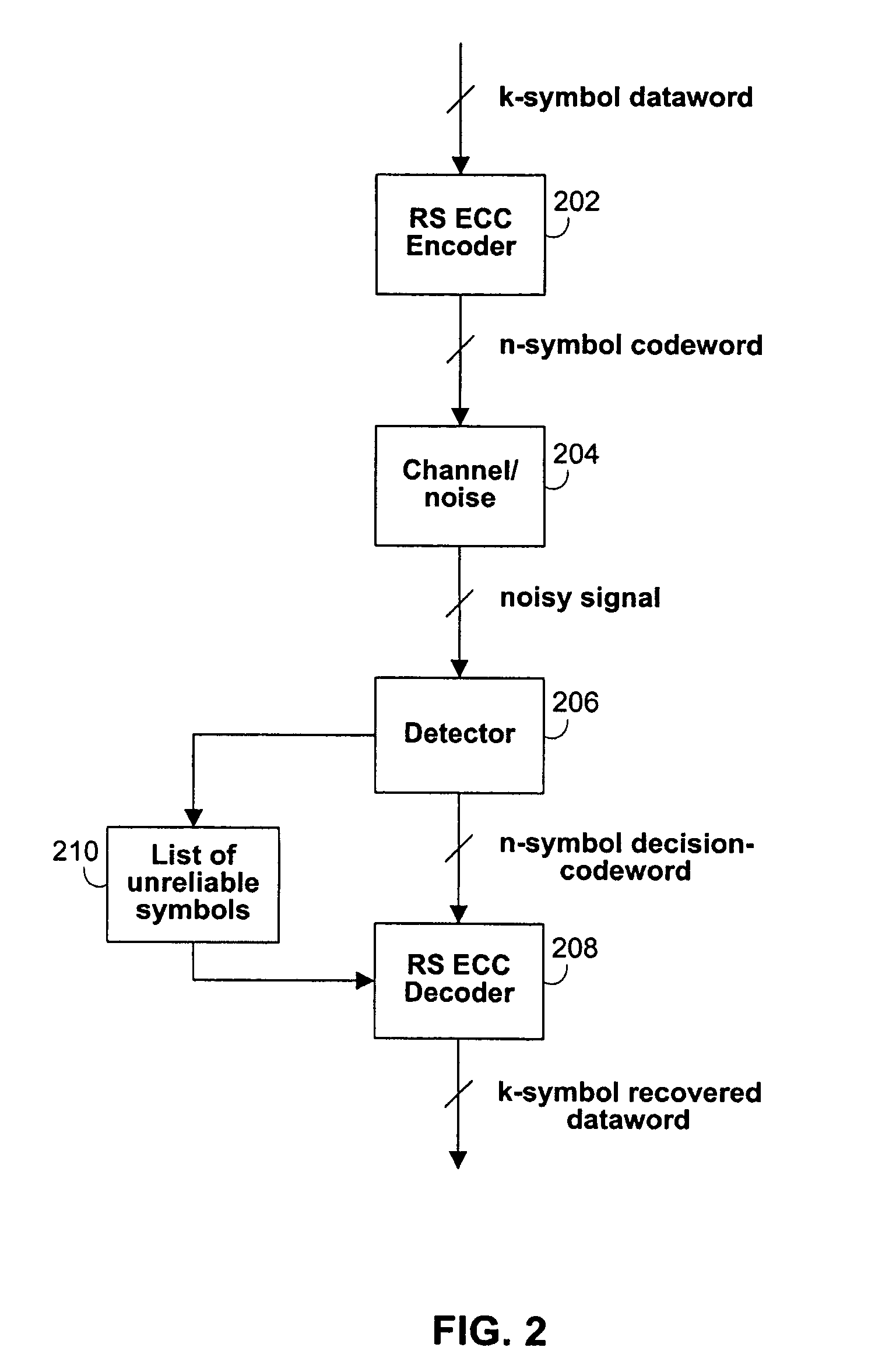

Architecture and control of reed-solomon list decoding

Active · US7454690B1 · Reduce the amount of memory; Reduce the amount required; Joint error correction; Other decoding techniques; Theoretical computer science; Soft information

Systems and methods are provided for implementing list decoding in a Reed-Solomon (RS) error-correction system. A detector can provide a decision-codeword from a channel and can also provide soft-information for the decision-codeword. The soft-information can be organized into an order of combinations of error events for list decoding. An RS decoder can employ a list decoder that uses a pipelined list decoder architecture. The list decoder can include one or more syndrome modification circuits that can compute syndromes in parallel. A long division circuit can include multiple units that operate to compute multiple quotient polynomial coefficients in parallel. The list decoder can employ iterative decoding and a validity test to generate error indicators. The iterative decoding and validity test can use the lower syndromes.

Owner:MARVELL ASIA PTE LTD
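
List decoding starts from the syndromes of the decision codeword. A minimal sketch of syndrome computation over GF(2^8) follows. It assumes the field polynomial 0x11D, 8-bit symbols, and syndromes S_i = r(α^i) for i = 0 … 2t-1; these conventions vary between standards, and the patent's syndrome-modification and long-division circuits are not modeled.

```python
from typing import List

PRIM = 0x11D  # x^8 + x^4 + x^3 + x^2 + 1 (assumed field polynomial)

# Exponent/log tables for GF(2^8) with primitive element alpha = 2.
EXP = [0] * 512
LOG = [0] * 256
_v = 1
for _i in range(255):
    EXP[_i] = _v
    LOG[_v] = _i
    _v <<= 1
    if _v & 0x100:
        _v ^= PRIM
for _i in range(255, 512):
    EXP[_i] = EXP[_i - 255]  # duplicate so LOG[a] + LOG[b] never needs a modulo


def gf_mul(a: int, b: int) -> int:
    if a == 0 or b == 0:
        return 0
    return EXP[LOG[a] + LOG[b]]


def poly_eval(poly: List[int], x: int) -> int:
    """Horner evaluation; poly[0] is the highest-degree coefficient."""
    y = 0
    for c in poly:
        y = gf_mul(y, x) ^ c
    return y


def syndromes(received: List[int], nsym: int) -> List[int]:
    """S_i = r(alpha^i) for i = 0 .. nsym-1; all zero when no error is detected."""
    return [poly_eval(received, EXP[i]) for i in range(nsym)]


def generator_poly(nsym: int) -> List[int]:
    """g(x) = prod_{i=0}^{nsym-1} (x - alpha^i); g itself is a valid codeword."""
    g = [1]
    for i in range(nsym):
        # Multiply by (x + alpha^i); subtraction equals addition (XOR) in GF(2^m).
        nxt = g + [0]
        for j, c in enumerate(g):
            nxt[j + 1] ^= gf_mul(c, EXP[i])
        g = nxt
    return g
```

Each syndrome is an independent polynomial evaluation, which is why hardware can compute them in parallel, one evaluator per syndrome.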

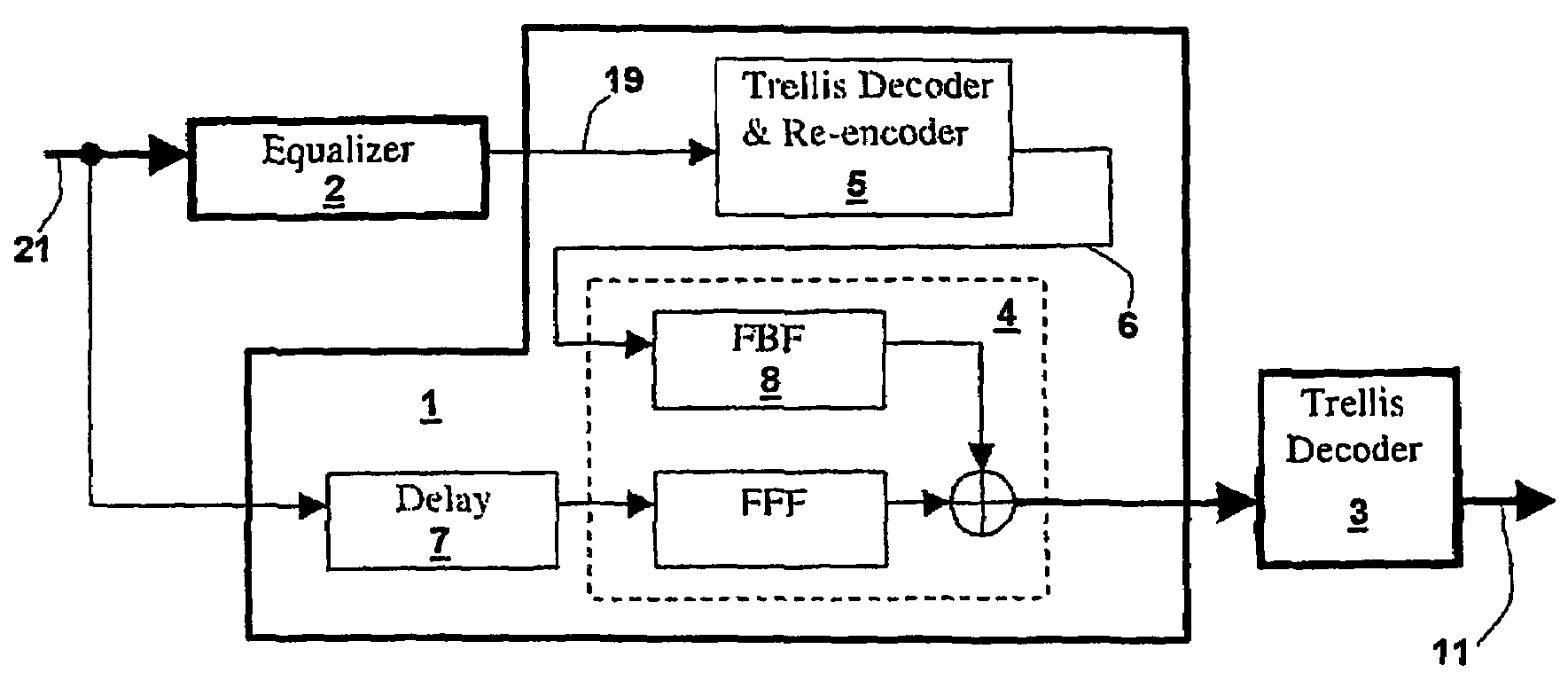

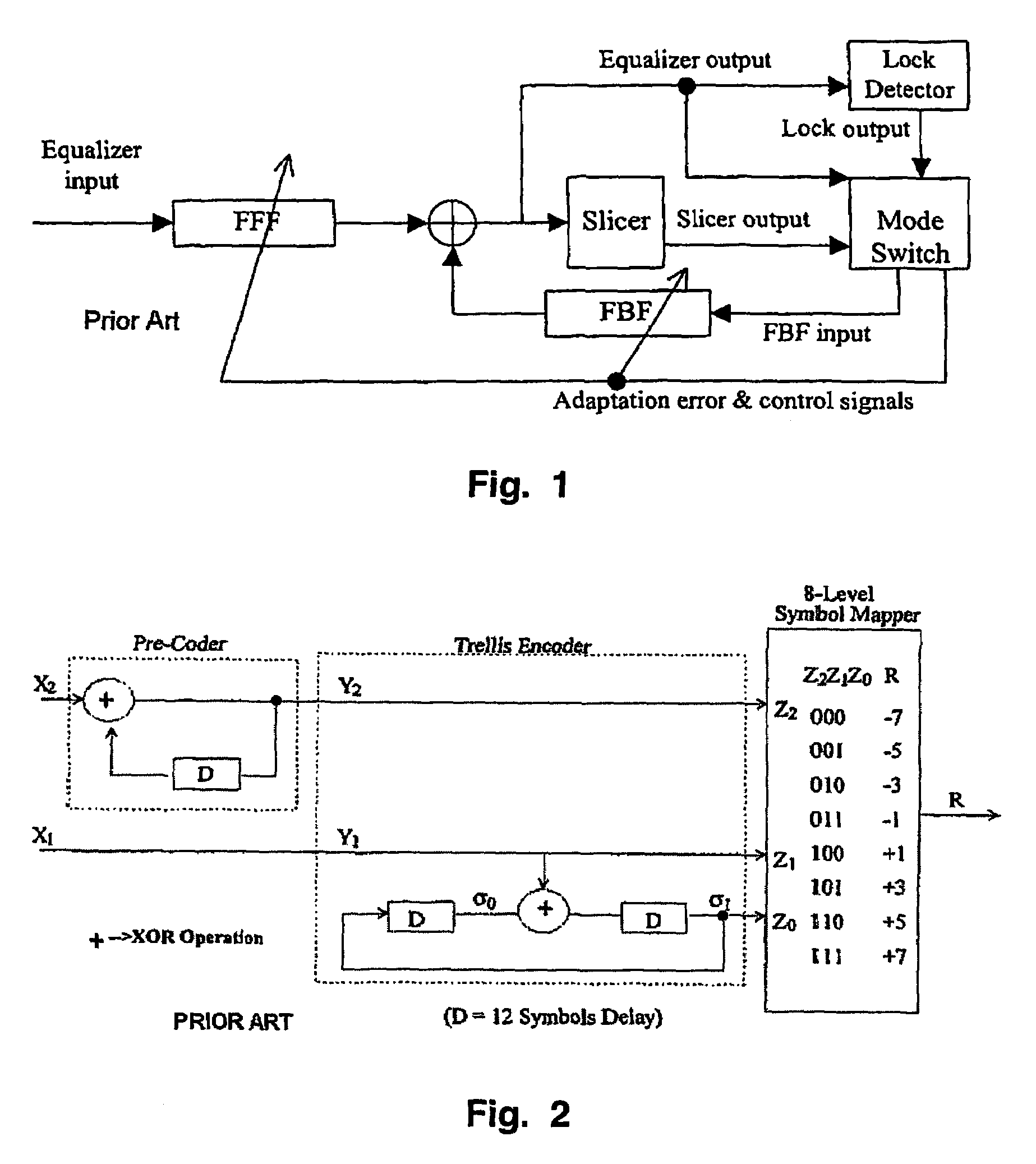

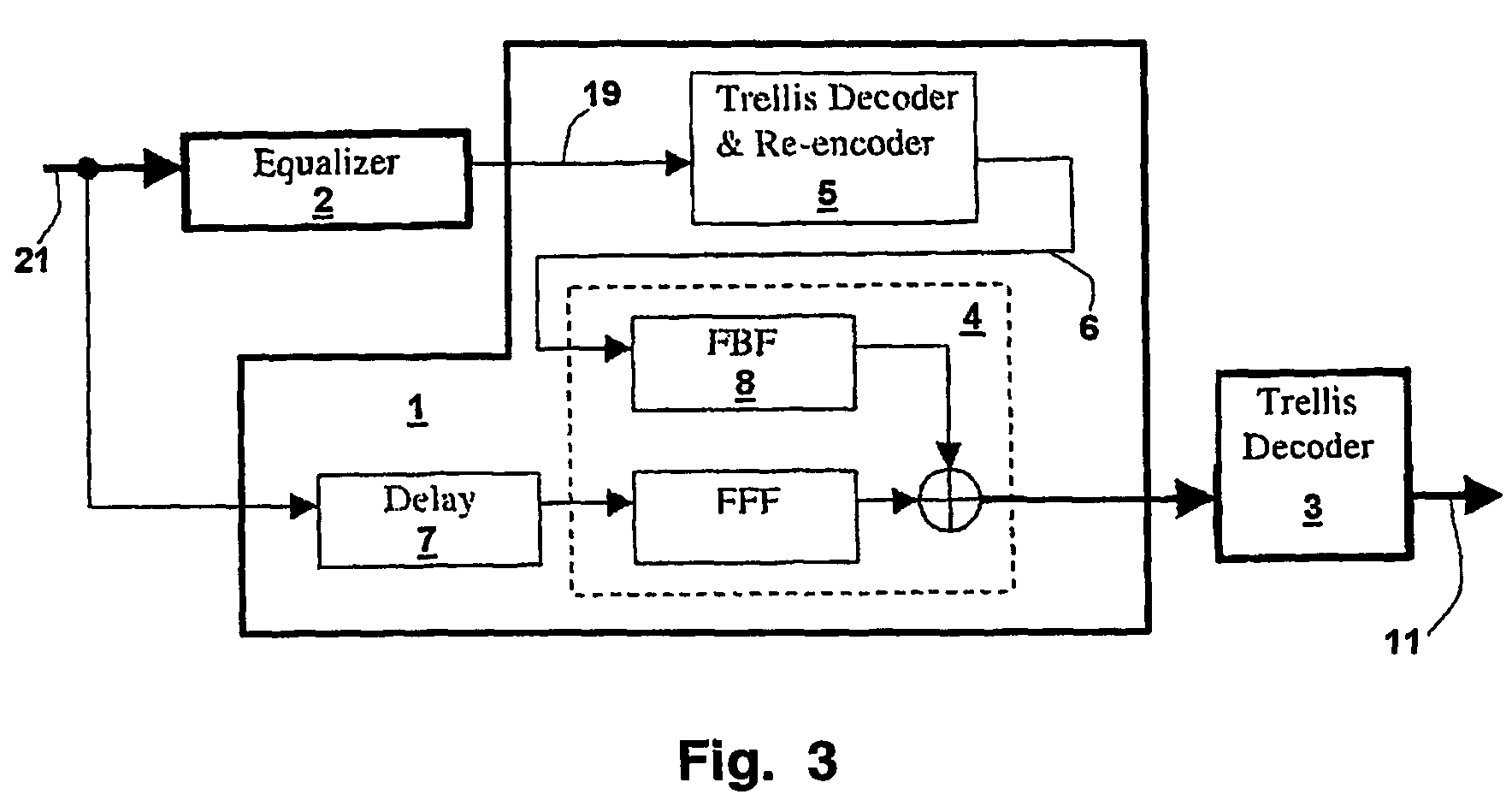

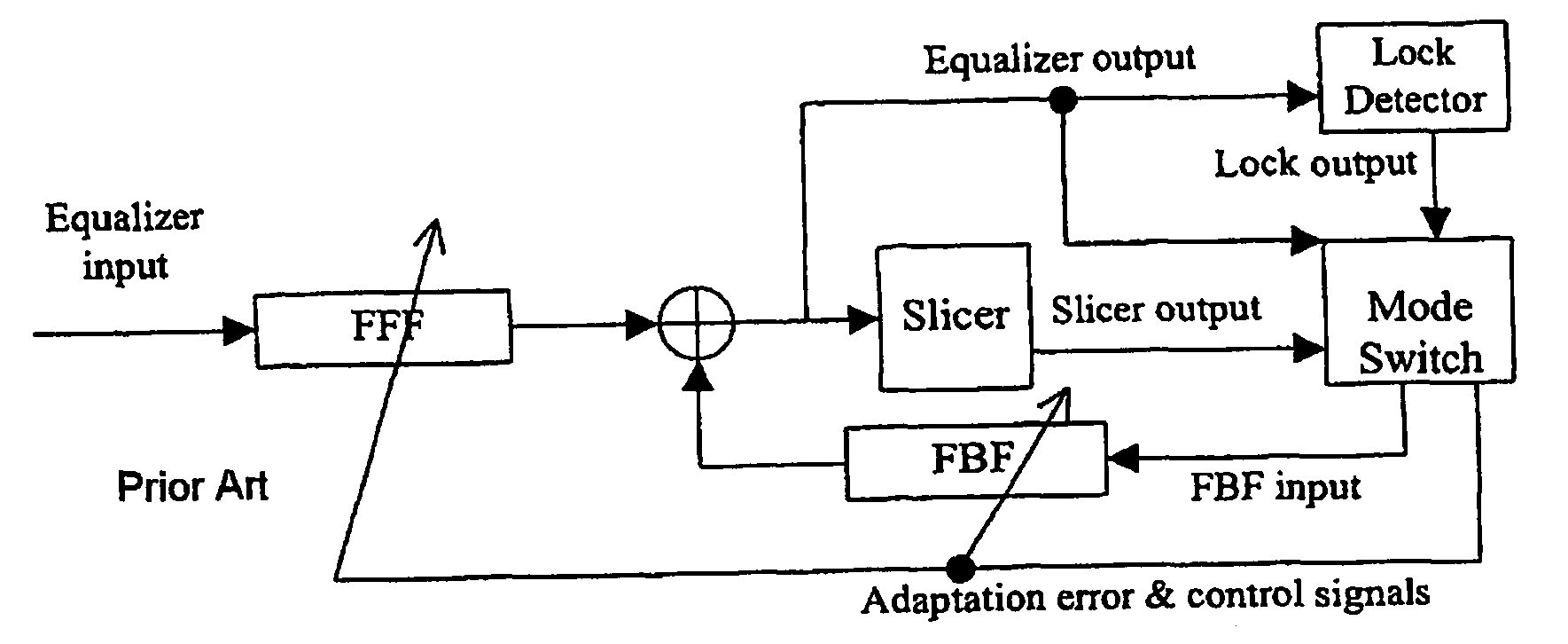

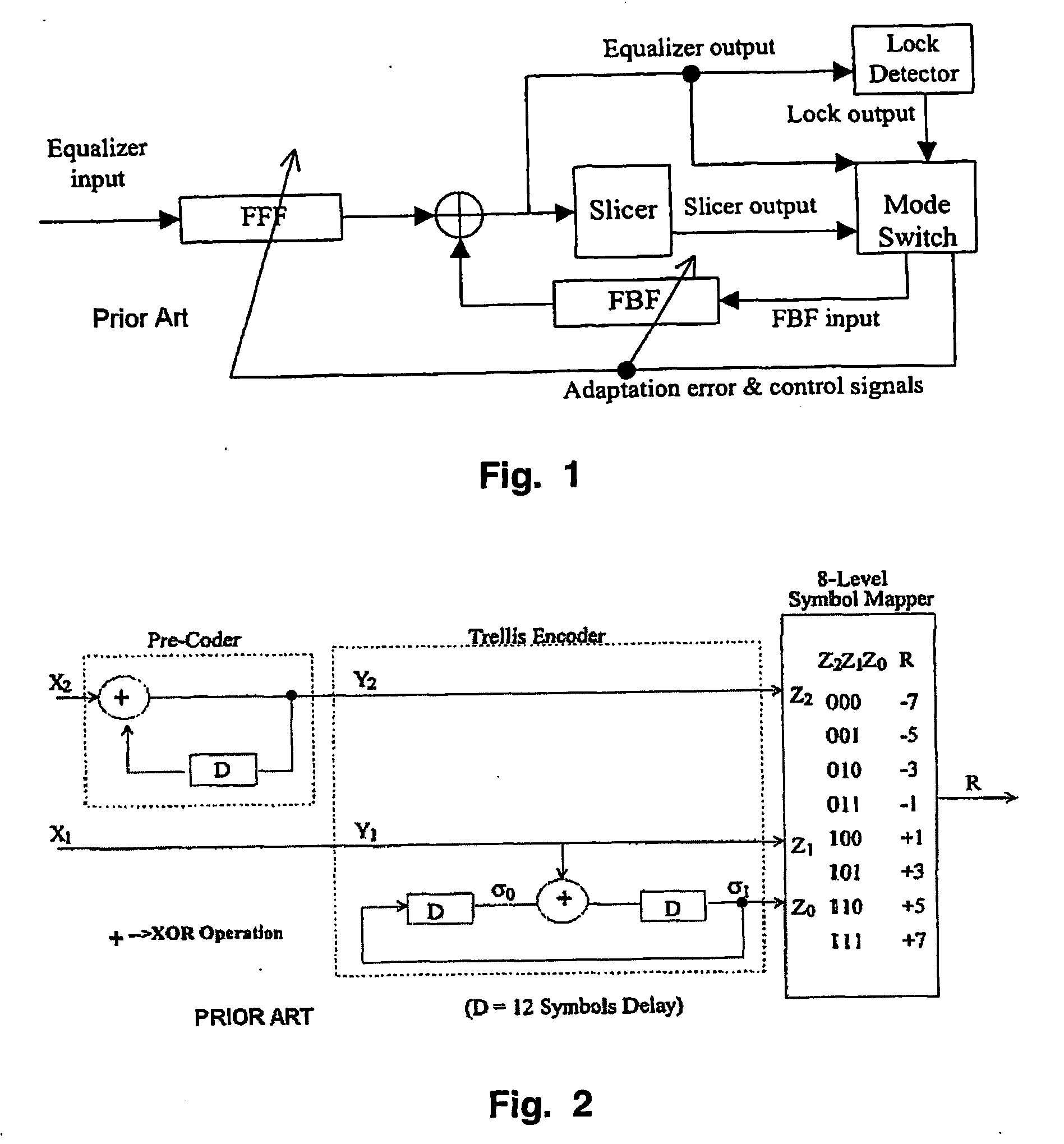

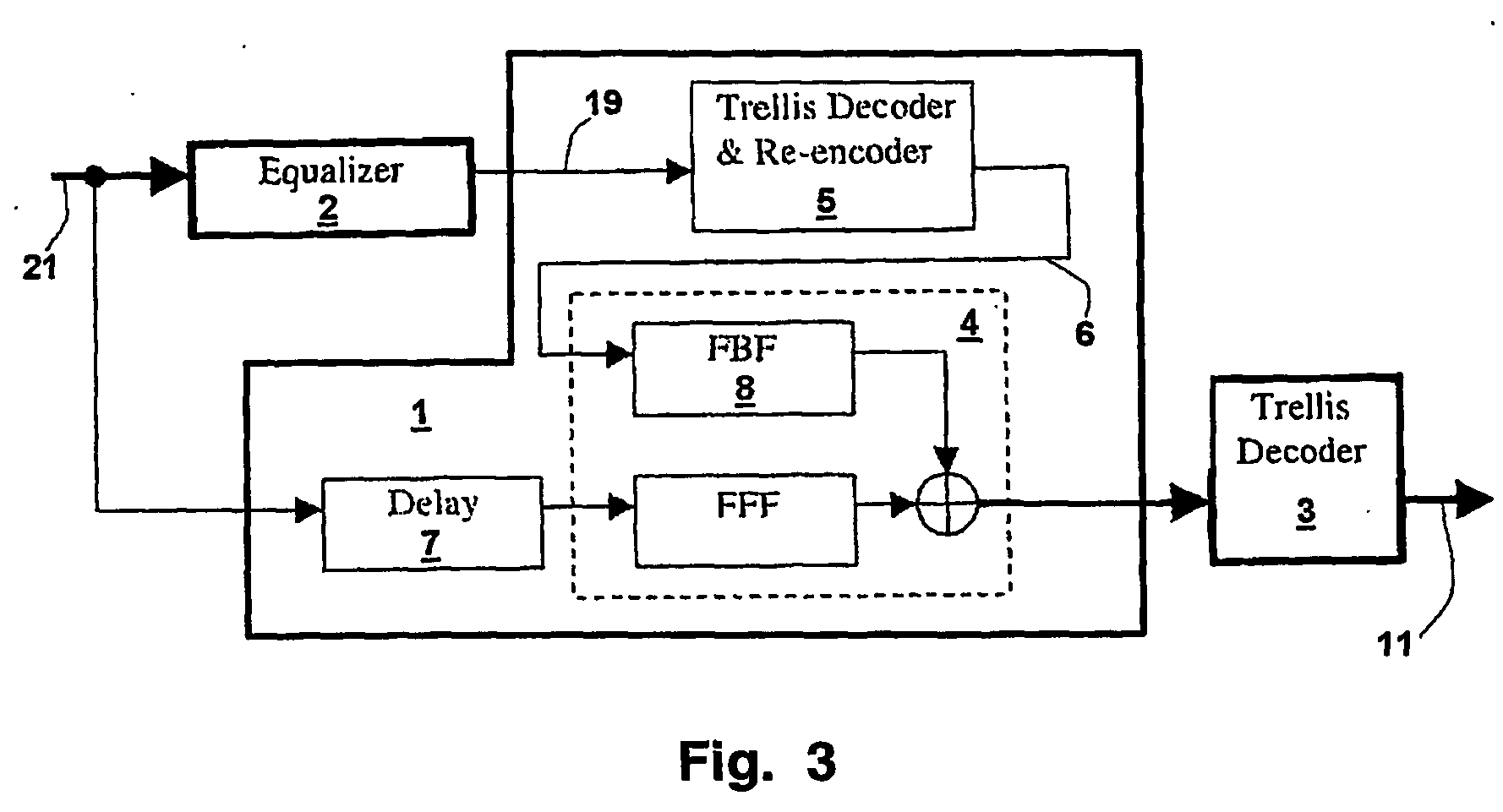

Concatenated equalizer/trellis decoder architecture for an HDTV receiver

Inactive · US7389470B2 · Multiple-port networks; Television system details; High-definition television; High definition tv

A concatenated equalizer / trellis decoding system for use in processing a High Definition Television signal. The re-encoded trellis decoder output, rather than the equalizer output, is used as an input to the feedback filter of the decision feedback equalizer. Hard or soft decision trellis decoding may be applied. In order to account for the latency associated with trellis decoding and the presence of twelve interleaved decoders, feedback from the trellis decoder to the equalizer is performed by replicating the trellis decoder and equalizer hardware in a module that can be cascaded in as many stages as needed to achieve the desired balance between complexity and performance. The present system offers an improvement of between 0.6 and 1.9 decibels. Cascading of two modules is usually sufficient to achieve most of the potential performance improvement.

Owner:MAGNOLIA LICENSING LLC
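
In a decision feedback equalizer, the feedback filter subtracts postcursor intersymbol interference estimated from past symbol decisions; the patent's change is to feed back re-encoded trellis-decoder outputs instead of the equalizer's own decisions. The sketch below shows only the DFE feedback structure with a pluggable decision function. The 8-level alphabet mirrors 8-VSB, but the channel and tap values in the test are made up, and there is no feedforward filter or trellis decoder.

```python
from typing import Callable, List, Sequence

LEVELS = (-7, -5, -3, -1, 1, 3, 5, 7)  # 8-VSB-style symbol alphabet


def slicer(y: float) -> float:
    """Nearest-level hard decision."""
    return min(LEVELS, key=lambda s: abs(y - s))


def dfe(received: Sequence[float], fb_taps: Sequence[float],
        decide: Callable[[float], float] = slicer) -> List[float]:
    """Decision feedback equalization: y[n] = x[n] - sum_k fb_taps[k] * d[n-1-k].

    `decide` maps an equalized sample to a symbol decision. In the patent's
    scheme the fed-back decisions would instead come from the re-encoded
    trellis decoder output (not modeled here).
    """
    decisions: List[float] = []
    for x in received:
        isi = sum(c * decisions[-1 - k]
                  for k, c in enumerate(fb_taps) if k < len(decisions))
        decisions.append(decide(x - isi))
    return decisions
```

Feeding back more reliable trellis-decoded symbols reduces error propagation in the feedback loop, at the cost of the decoder latency that the patent's cascaded modules are designed to absorb.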

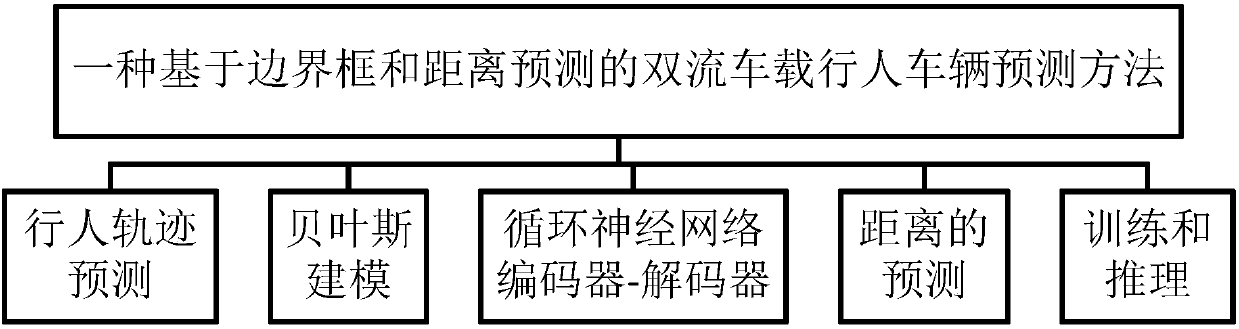

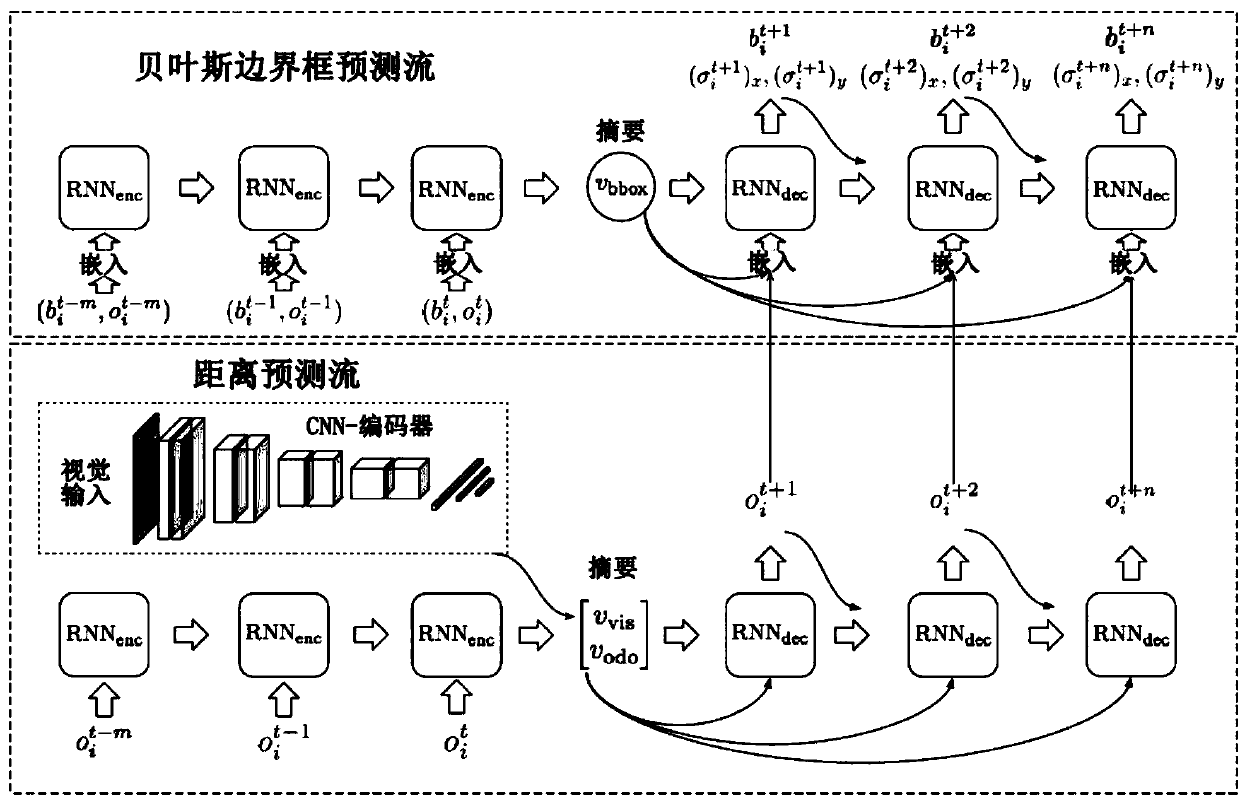

Double-flow vehicle-mounted pedestrian and vehicle prediction method based on boundary frame and distance prediction

Inactive · CN108267123A · Picture taking arrangements; Picture interpretation; Machine learning; Decoder architecture

The invention provides a two-stream on-board pedestrian and vehicle prediction method based on bounding-box and distance prediction. Its main components are pedestrian trajectory prediction, Bayesian modeling, a recurrent neural network (RNN) encoder-decoder, distance prediction, and training and inference. A distance-prediction stream predicts the most likely sequence of vehicle distances, while the bounding-box stream, built on a Bayesian RNN encoder-decoder, predicts the distribution of the pedestrian trajectory and captures both epistemic and aleatoric uncertainty. Because the distance stream produces point estimates, it is trained by minimizing the mean squared error over the training set; the Bayesian bounding-box stream is trained by minimizing the KL divergence of an approximate weight distribution. The two-stream structure, combining pedestrian bounding-box prediction with vehicle distance prediction, greatly reduces prediction time, and uncertainty estimation significantly improves the model's prediction accuracy.

Owner:SHENZHEN WEITESHI TECH
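
A Bayesian encoder-decoder is commonly approximated by several stochastic forward passes (for example, Monte Carlo dropout), each predicting a mean and a variance for an output such as a bounding-box coordinate. A standard decomposition then splits the predictive variance into an aleatoric part (the average predicted variance) and an epistemic part (the variance of the predicted means). The sampling method and the numbers in the test are assumptions; the abstract does not say how the patent computes these quantities.

```python
from statistics import fmean, pvariance
from typing import List, Tuple


def decompose_uncertainty(samples: List[Tuple[float, float]]) -> Tuple[float, float, float]:
    """Split predictive uncertainty from T stochastic forward passes.

    Each sample is (predicted_mean, predicted_variance) for one output.
    Returns (prediction, aleatoric variance, epistemic variance).
    """
    means = [m for m, _ in samples]
    variances = [v for _, v in samples]
    prediction = fmean(means)
    aleatoric = fmean(variances)  # noise the model attributes to the data itself
    epistemic = pvariance(means)  # disagreement between the sampled models
    return prediction, aleatoric, epistemic
```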

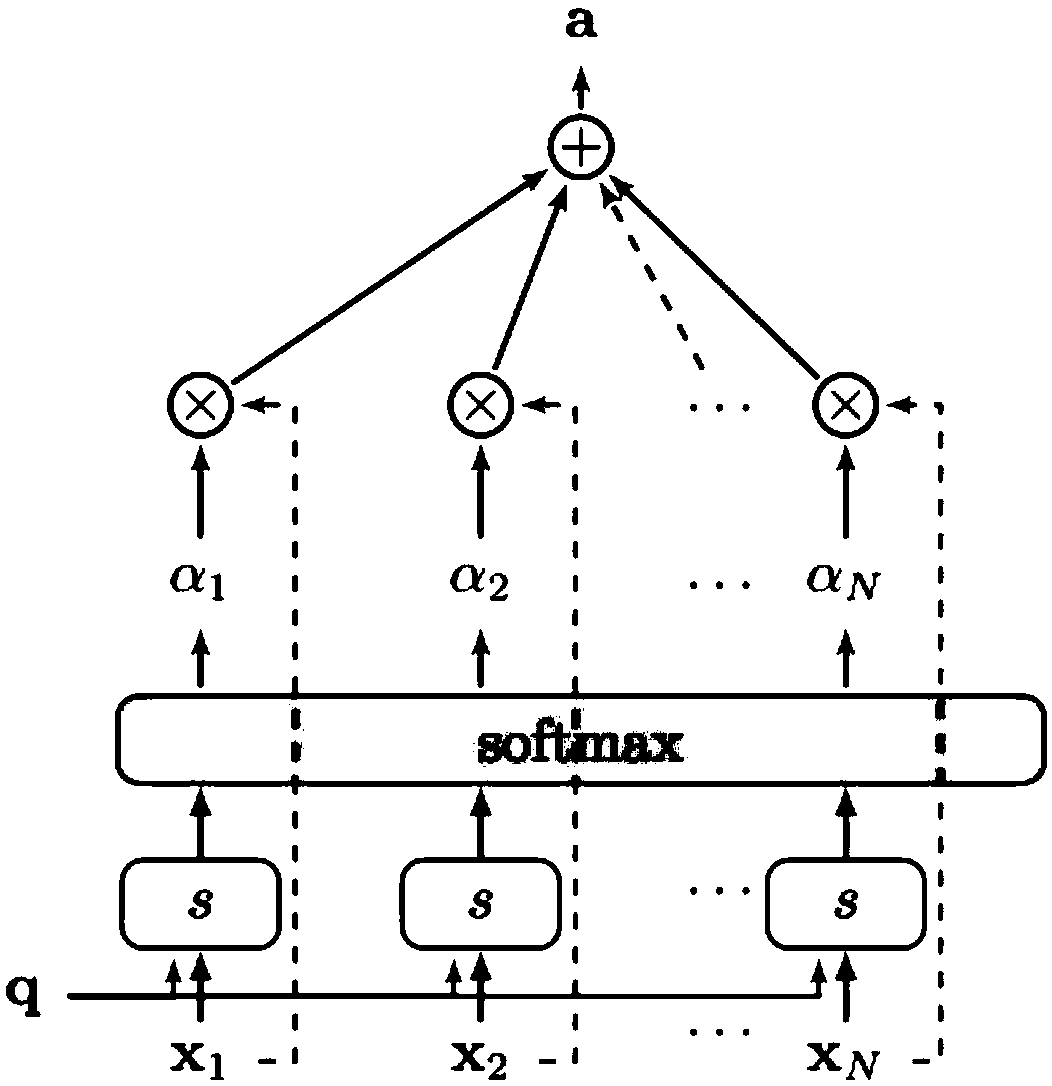

Mongolian and Chinese inter-translation method based on reinforced learning

Active · CN108920468A · Solve problems such as shortage and scarcity of resources; Efficient use of; Natural language translation; Special data processing applications; Data mining; Reinforcement learning

Neural machine translation (NMT) with an encoder-decoder architecture achieves state-of-the-art results on current standard machine translation benchmarks, but training the model requires large amounts of parallel corpus data. In minority-language translation, insufficient bilingual aligned corpora are a common problem and resources are scarce, so the invention provides a Mongolian-Chinese inter-translation method based on reinforcement learning. The system receives a Mongolian sentence, translates it into a Chinese sentence, and obtains a scalar score as feedback; reinforcement learning is then used to learn effectively from that feedback. The problem is formalized as a Markov decision process, and the aim is to find a policy that maximizes expected translation quality. During training, if a given action leads to a large reward from the environment, the tendency to choose that action in the future is reinforced; ultimately an optimal policy is found that maximizes the expected sum of discounted rewards and improves translation quality.

Owner:INNER MONGOLIA UNIV OF TECH
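
The objective of maximizing the expected sum of discounted rewards can be made concrete for one translated sentence. When the only feedback is a sentence-level score delivered at the end, each generated token is credited with the discounted return from its position onward. The discount factor and the single terminal reward in the test are assumed values; a REINFORCE-style update would then scale each token's log-probability gradient by its return (usually minus a baseline), which is not shown.

```python
from typing import List


def discounted_returns(rewards: List[float], gamma: float) -> List[float]:
    """G_t = r_t + gamma * G_{t+1}, computed backwards over one episode."""
    returns = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns
```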

Concatenated equalizer/trellis decoder architecture for an hdtv receiver

Inactive · US20050154967A1 · Multiple-port networks; Television system details; High-definition television; Computer science

A concatenated equalizer / trellis decoding system for use in processing a High Definition Television signal. The re-encoded trellis decoder output, rather than the equalizer output, is used as an input to the feedback filter of the decision feedback equalizer. Hard or soft decision trellis decoding may be applied. In order to account for the latency associated with trellis decoding and the presence of twelve interleaved decoders, feedback from the trellis decoder to the equalizer is performed by replicating the trellis decoder and equalizer hardware in a module that can be cascaded in as many stages as needed to achieve the desired balance between complexity and performance. The present system offers an improvement of between 0.6 and 1.9 decibels. Cascading of two modules is usually sufficient to achieve most of the potential performance improvement.

Owner:MAGNOLIA LICENSING LLC

Architecture for a communications device

Inactive · US20020129317A1 · Easy to handle; Reduce hardware costs; Error correction/detection using convolutional codes; Error prevention; CDMA2000; Computer hardware

The present invention discloses a single unified decoder that performs both convolutional decoding and turbo decoding within one architecture. The unified decoder can be partitioned dynamically to perform the required decoding operations on varying numbers of data streams at different throughput rates. It also supports simultaneous decoding of voice (convolutional decoding) and data (turbo decoding) streams. This invention forms the basis of a decoder that can decode all of the TDMA, IS-95, GSM, GPRS, EDGE, UMTS and CDMA2000 standards. Processors are stacked together and interconnected so that they can operate either as separate decoders or together as a single high-speed decoder. The unified decoder architecture can support multiple data streams and multiple voice streams simultaneously. Furthermore, the decoder can be dynamically partitioned as required to decode voice streams for different standards.

Owner:WSOU INVESTMENTS LLC
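The abstract assigns convolutional decoding to voice streams. As a generic illustration of that decoding step only, here is a minimal hard-decision Viterbi decoder, assuming a rate-1/2, constraint-length-3 code with octal generators (7, 5) and zero tail bits. This code choice is an assumption for illustration; it is not the patent's unified or partitioned hardware architecture, and the standards listed above use their own code parameters.

```python
import random

G = (0b111, 0b101)       # assumed generator polynomials (7, 5 octal)
K = 3                    # constraint length
N_STATES = 1 << (K - 1)  # 4 trellis states


def parity(x):
    return bin(x).count("1") & 1


def encode(bits):
    """Rate-1/2 convolutional encoder, flushed with K-1 zero tail bits."""
    state, out = 0, []
    for b in list(bits) + [0] * (K - 1):
        reg = (b << (K - 1)) | state  # newest input bit in the MSB
        out.extend(parity(reg & g) for g in G)
        state = reg >> 1
    return out


def viterbi_decode(received, n_bits):
    """Hard-decision Viterbi decoding with Hamming branch metrics."""
    inf = float("inf")
    metrics = [0] + [inf] * (N_STATES - 1)  # encoder starts in state 0
    paths = [[] for _ in range(N_STATES)]
    for t in range(n_bits + K - 1):
        r = received[2 * t: 2 * t + 2]
        new_metrics = [inf] * N_STATES
        new_paths = [None] * N_STATES
        for state in range(N_STATES):
            if metrics[state] == inf:
                continue  # state not yet reachable
            for b in (0, 1):
                reg = (b << (K - 1)) | state
                expected = [parity(reg & g) for g in G]
                m = metrics[state] + sum(e != x for e, x in zip(expected, r))
                nxt = reg >> 1
                if m < new_metrics[nxt]:  # keep the survivor path
                    new_metrics[nxt] = m
                    new_paths[nxt] = paths[state] + [b]
        metrics, paths = new_metrics, new_paths
    # Tail bits drive the encoder back to state 0.
    return paths[0][:n_bits]


if __name__ == "__main__":
    rng = random.Random(2)
    bits = [rng.randint(0, 1) for _ in range(64)]
    coded = encode(bits)
    coded[10] ^= 1  # inject one channel bit error
    print(viterbi_decode(coded, 64) == bits)
```

Because the free distance of the (7, 5) code is 5, an isolated single bit error is always corrected.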

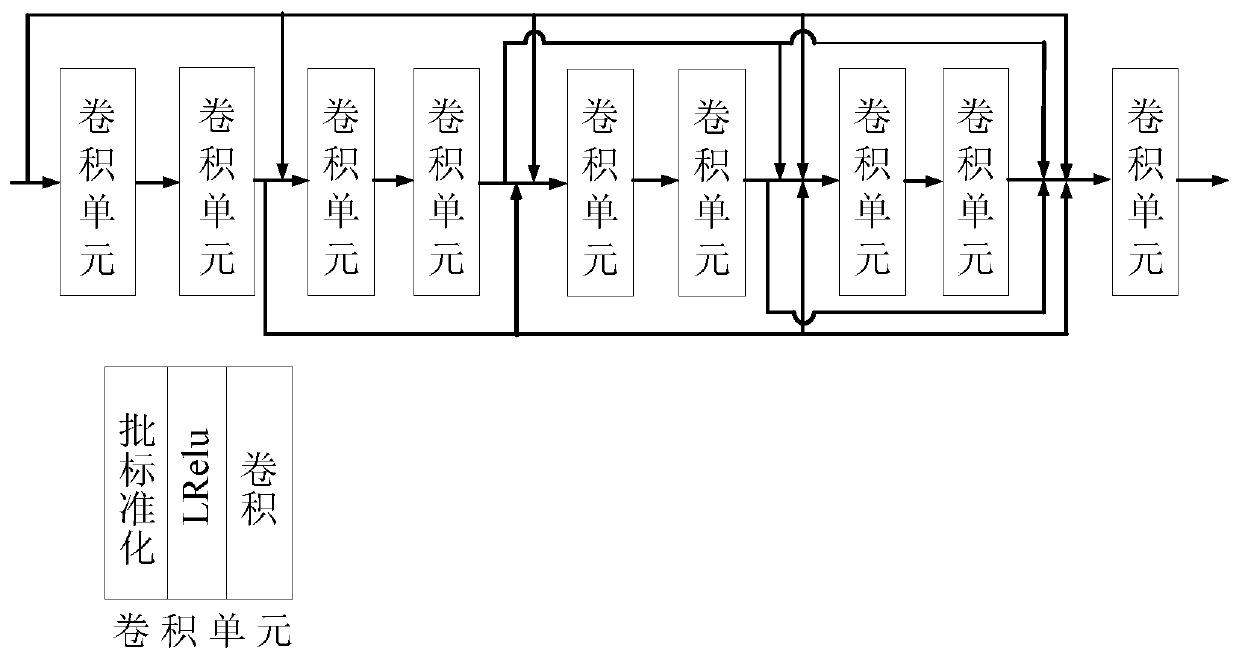

Image defogging method based on global and local feature fusion

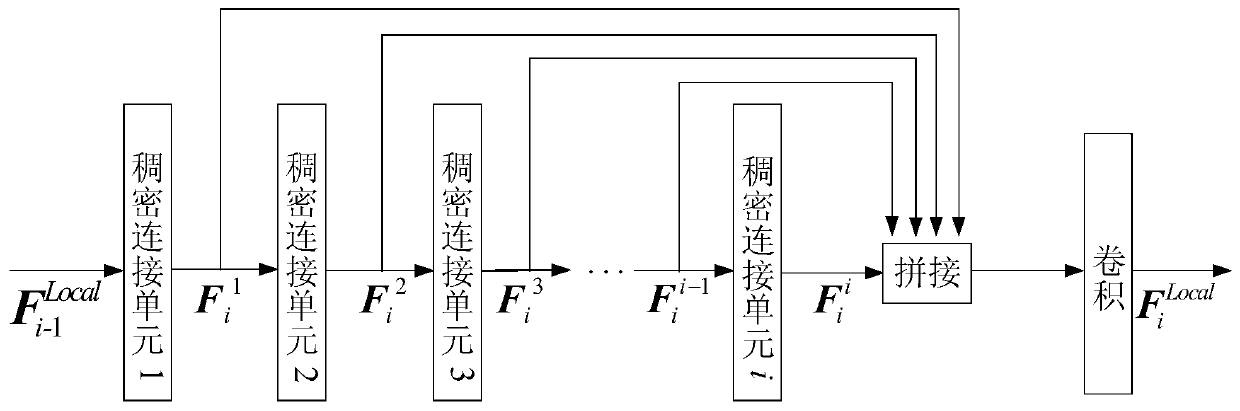

Active | CN110544213A | Avoid color distortion, halo and other phenomena; Restoring image detail loss; Image enhancement; Image analysis; Encoder decoder; Feature fusion

The invention discloses an image defogging method based on global and local feature fusion. The method comprises the following steps: constructing a defogging network based on an encoder-decoder architecture, placing a plurality of dense connection units between the encoder and the decoder, and achieving local and global fusion of the feature map through the dense connection units; passing the feature map output by the encoder-decoder architecture through a subsequent convolutional neural network to obtain a defogged image; training the defogging network with a linear combination of an L1-norm loss, a perceptual loss and a gradient loss; and, after training is finished, inputting a hazy image to obtain a defogged image. With this method, a defogged image can be obtained directly from a single hazy image, without prior information about the image or estimation of the transmittance.

Owner:TIANJIN UNIV
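The training objective above is a linear combination of three losses. A minimal sketch of such a combined loss, assuming single-channel images stored as nested lists (at least 2x2 pixels), mean absolute error for the L1 and gradient terms, and mean squared error on features for the perceptual term. The weights `w_l1`, `w_perc`, `w_grad` and the 2x2 average-pooling `features` function are hypothetical stand-ins; a real perceptual loss would compare activations of a pretrained CNN, and the patent does not state these values.

```python
def _mean_abs_diff(a, b):
    diffs = [abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb)]
    return sum(diffs) / len(diffs)


def _mean_sq_diff(a, b):
    diffs = [(p - q) ** 2 for ra, rb in zip(a, b) for p, q in zip(ra, rb)]
    return sum(diffs) / len(diffs)


def image_gradients(img):
    """Horizontal and vertical finite differences."""
    dx = [[row[j + 1] - row[j] for j in range(len(row) - 1)] for row in img]
    dy = [[img[i + 1][j] - img[i][j] for j in range(len(img[0]))]
          for i in range(len(img) - 1)]
    return dx, dy


def gradient_loss(pred, target):
    pdx, pdy = image_gradients(pred)
    tdx, tdy = image_gradients(target)
    return _mean_abs_diff(pdx, tdx) + _mean_abs_diff(pdy, tdy)


def avg_pool_2x2(img):
    """Hypothetical stand-in for a pretrained feature extractor."""
    return [
        [(img[i][j] + img[i][j + 1] + img[i + 1][j] + img[i + 1][j + 1]) / 4.0
         for j in range(0, len(img[0]) - 1, 2)]
        for i in range(0, len(img) - 1, 2)
    ]


def perceptual_loss(pred, target, features=avg_pool_2x2):
    return _mean_sq_diff(features(pred), features(target))


def defog_loss(pred, target, w_l1=1.0, w_perc=0.1, w_grad=0.5,
               features=avg_pool_2x2):
    """Linear combination of L1, perceptual and gradient losses."""
    return (w_l1 * _mean_abs_diff(pred, target)
            + w_perc * perceptual_loss(pred, target, features)
            + w_grad * gradient_loss(pred, target))


if __name__ == "__main__":
    target = [[0.0] * 4 for _ in range(4)]
    pred = [[0.5] * 4 for _ in range(4)]
    print(defog_loss(pred, target))
```

A uniform brightness offset is penalized by the L1 and perceptual terms but not by the gradient term, which only reacts to differences in edges and local structure.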

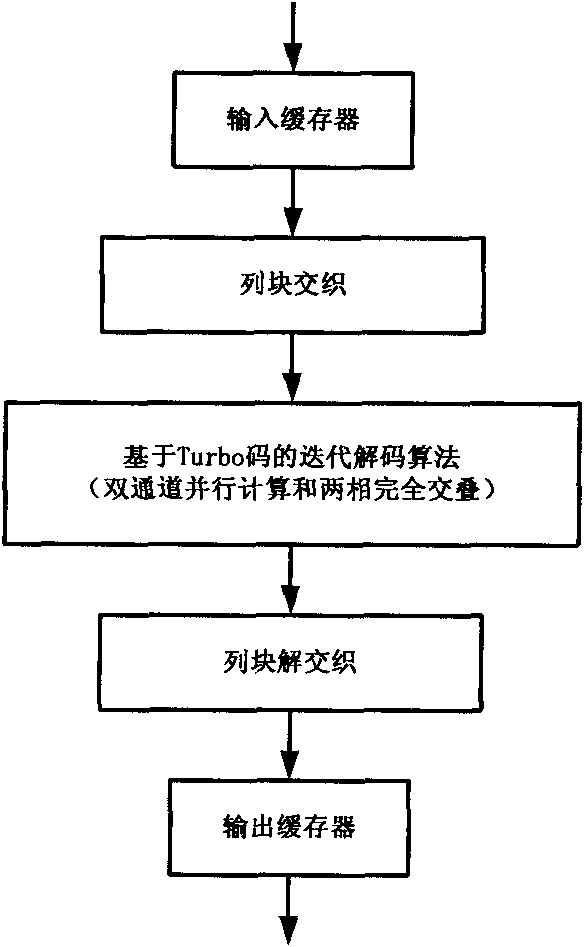

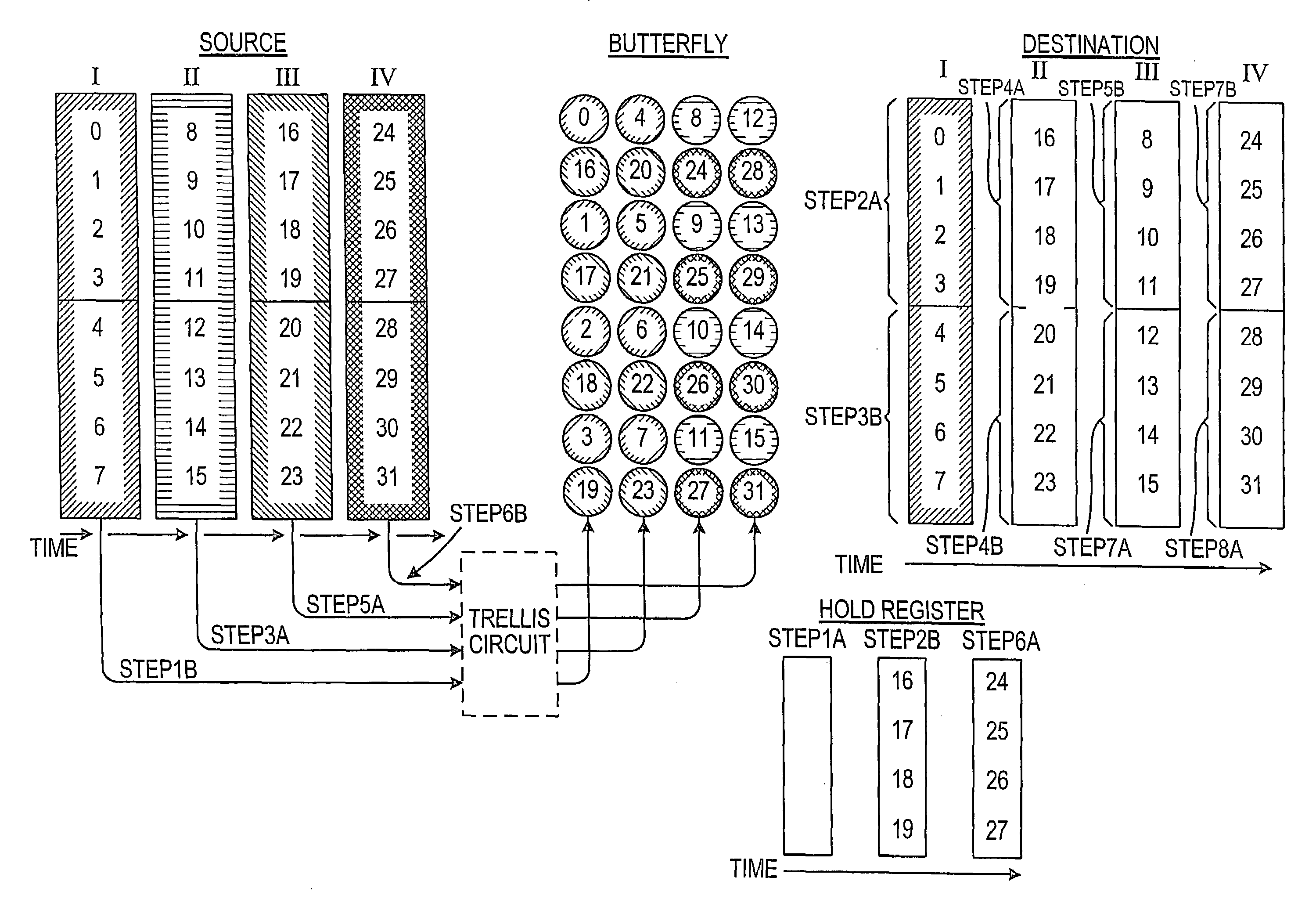

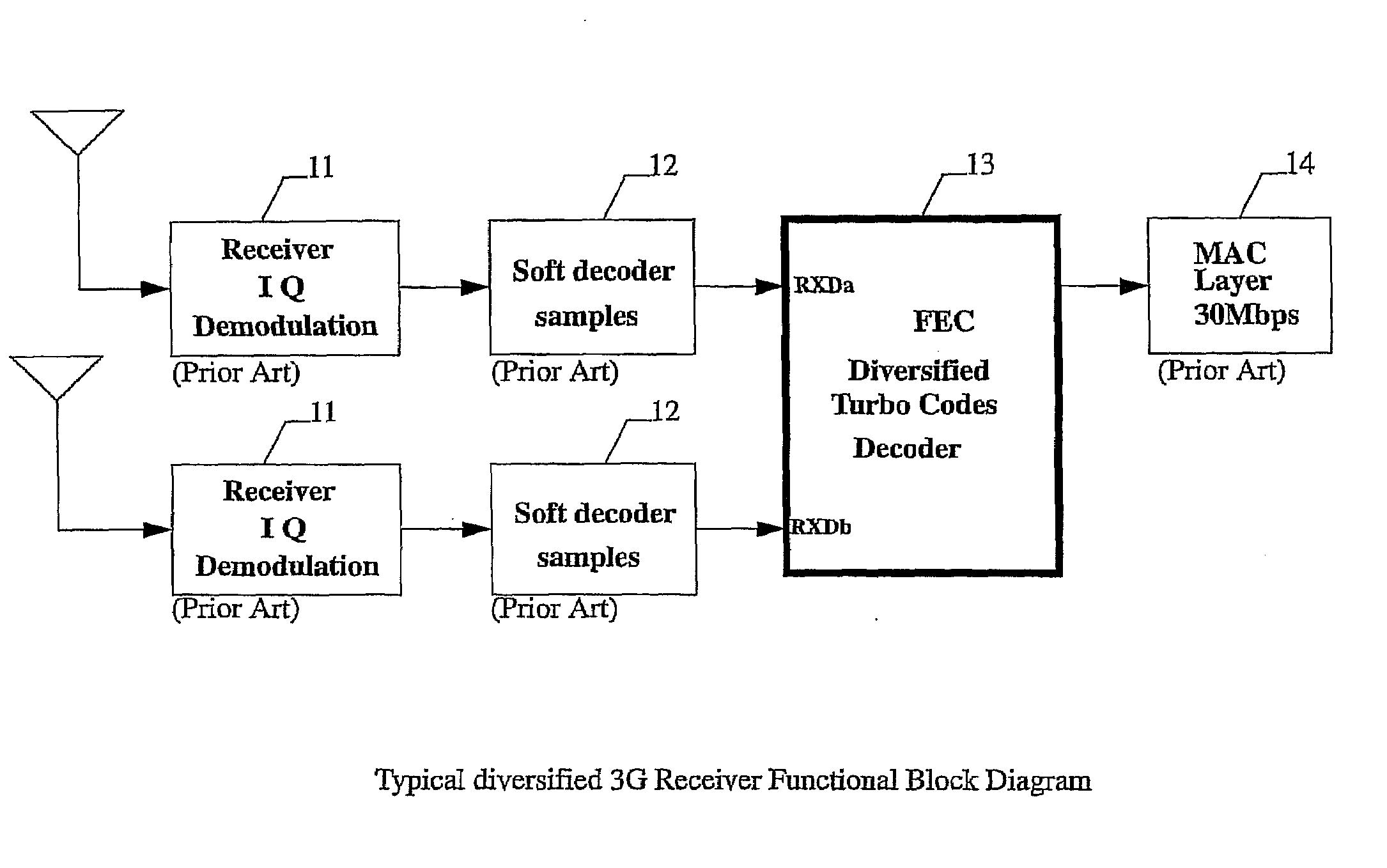

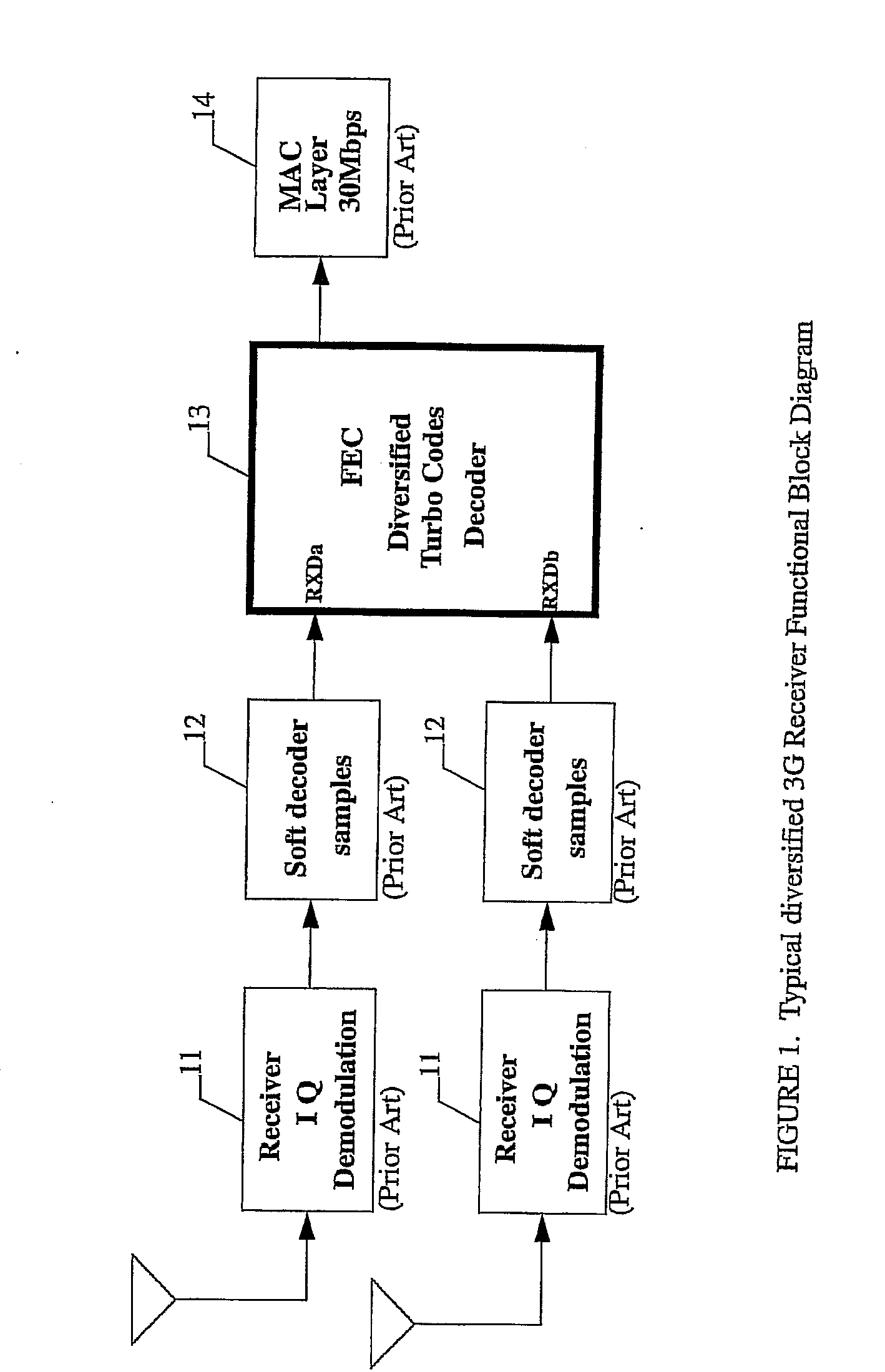

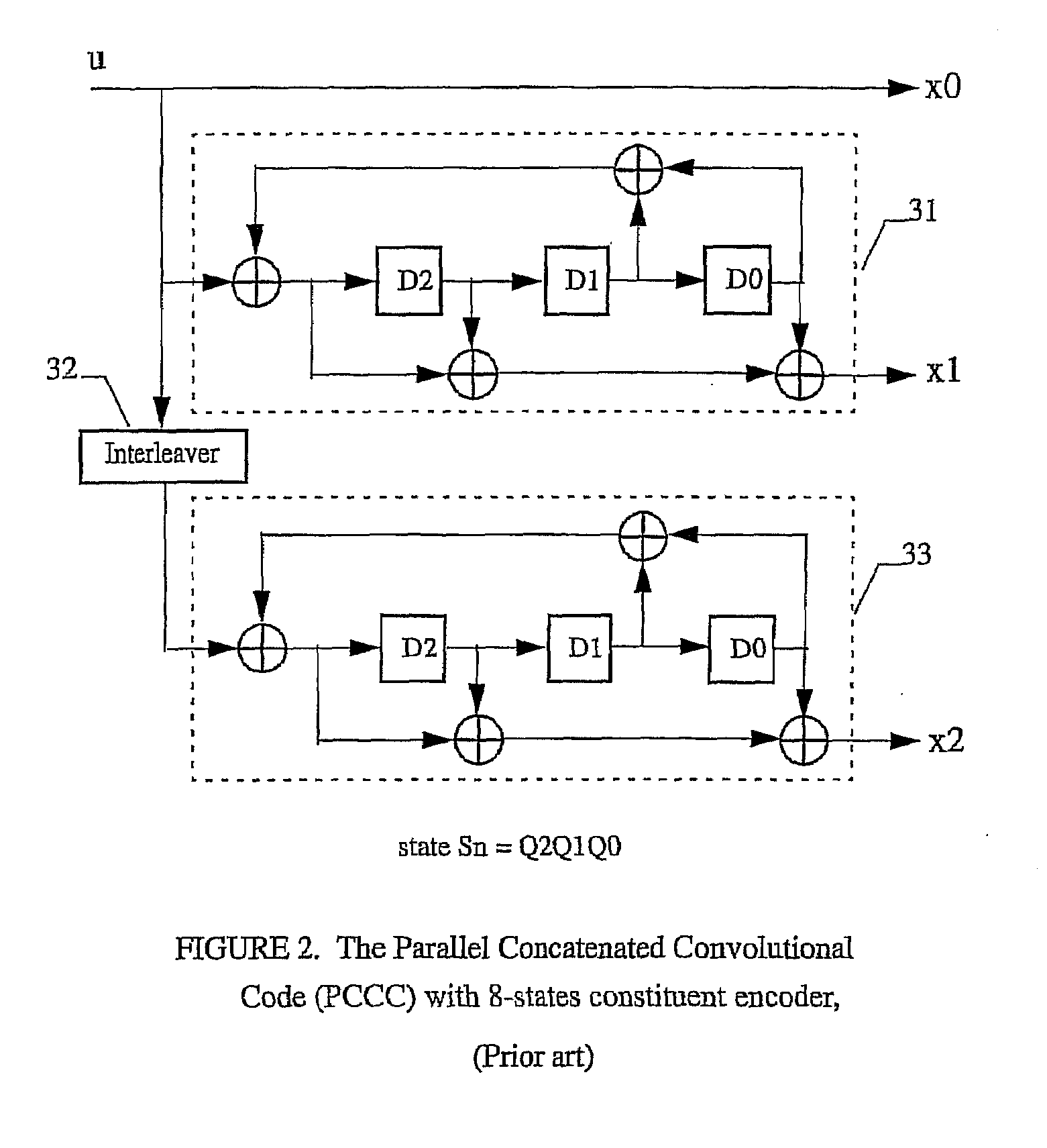

High speed turbo codes decoder for 3G using pipelined SISO Log-MAP decoders architecture

Inactive | US20090094505A1 | High speed data throughput; Reduce power consumption; Data representation error detection/correction; Other decoding techniques; Third generation; Diversity scheme

A baseband processor is provided having Turbo Codes Decoders with Diversity processing for computing baseband signals from multiple separate antennas. The invention decodes multipath signals that have arrived at the terminal via different routes after being reflected from buildings, trees or hills. The Turbo Codes Decoder with Diversity processing increases the signal-to-noise ratio (SNR) by more than 6 dB, which enables 3rd Generation wireless systems to deliver data rates of up to 2 Mbit/s. The invention provides several improved Turbo Codes Decoder methods and devices that offer a more suitable, practical and simpler way to implement a Turbo Codes Decoder in an ASIC (Application Specific Integrated Circuit) or in DSP code. A plurality of parallel Turbo Codes Decoder blocks is provided to compute baseband signals from multiple different receiver paths. Several pipelined max-Log-MAP decoders are used for iterative decoding of the received data. A sliding window of block N data is used for pipeline operations. In pipeline mode, a first decoder A decodes block N data from a first source while a second decoder B decodes block N data from a second source during the same clock cycle. The pipelined max-Log-MAP decoders provide high-speed data throughput and one output per clock cycle.

Owner:TURBOCODE LLC
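The abstract relies on max-Log-MAP decoders. What distinguishes max-Log-MAP from exact Log-MAP is how log-domain sums are combined: Log-MAP uses the Jacobian logarithm max*(a, b) = max(a, b) + ln(1 + e^(-|a - b|)), while max-Log-MAP keeps only max(a, b), which is cheaper in hardware. A minimal sketch of both operators and of a bit log-likelihood ratio computed from hypothetical branch metrics follows; it does not model the patent's pipelining, sliding window, or diversity combining.

```python
import math
from functools import reduce


def max_star(a, b):
    """Jacobian logarithm: exactly ln(e^a + e^b), as used in Log-MAP."""
    return max(a, b) + math.log1p(math.exp(-abs(a - b)))


def max_log(a, b):
    """max-Log-MAP approximation: keep max(a, b), drop the correction term."""
    return max(a, b)


def log_sum(values, op=max_star):
    """Fold a pairwise operator over log-domain metrics
    (e.g. all trellis branches that hypothesise one bit value)."""
    return reduce(op, values)


def bit_llr(metrics_bit1, metrics_bit0, op=max_star):
    """LLR = log-sum over bit=1 branches minus log-sum over bit=0 branches."""
    return log_sum(metrics_bit1, op) - log_sum(metrics_bit0, op)


if __name__ == "__main__":
    ones, zeros = [1.0, 0.5], [-0.5, 0.2]  # hypothetical branch metrics
    print("Log-MAP LLR:    ", bit_llr(ones, zeros))
    print("max-Log-MAP LLR:", bit_llr(ones, zeros, op=max_log))
```

For two terms the approximation error of max-Log-MAP is at most ln 2, reached when the two metrics are equal; this small loss in accuracy is traded for a simpler compare-select operation per clock cycle.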


© 2025 PatSnap. All rights reserved. Contact us: help@patsnap.com