Multi-mode comprehensive information recognition mobile double-arm robot device, system and method

A technology in the field of comprehensive information and robotics, applied to multi-mode comprehensive information recognition mobile dual-arm robot devices, systems and methods. It addresses the difficulty of recognizing equipment locations, the lack of voice interaction, and the high labor intensity of placement work.



Examples

Embodiment 1

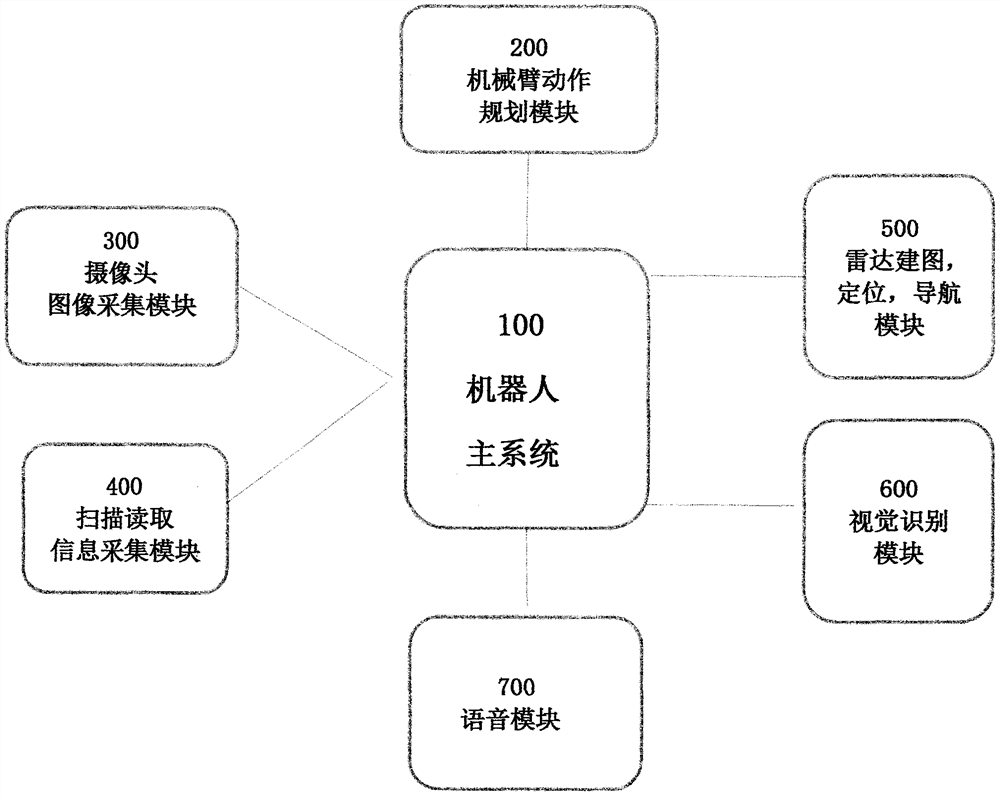

[0080] As shown in Figure 1, an embodiment of an artificial intelligence robot for campus use comprises:

[0081] The main control system 10 of the robot. This module implements main control of the robot and connects data acquisition devices such as the camera 40 and the information collection and reading device 70. The main control system 10 is paired with the robot arms 20 and 30 and performs motion planning for them, so that the arms can pick up and move items in school scenes (classrooms, laboratories, libraries), retail scenes (supermarkets, shopping malls), medical scenes (outpatient clinics, wards), factories, and warehouses. The main control system 10 also communicates with the voice device, enabling voice interaction between the robot and the user.
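The paragraph does not say how motion planning for the robot arms 20 and 30 is carried out. As a minimal sketch, assuming a simple joint-space planner (a stand-in, not the patent's method), both arms can be given the same number of linearly interpolated waypoints so that they start and finish together. The function names, the left/right assignment of arms 20 and 30, and the three-joint arms in the usage are hypothetical.

```python
from typing import List, Sequence, Tuple

def interpolate_joint_path(start: Sequence[float], goal: Sequence[float],
                           steps: int) -> List[List[float]]:
    """Return steps + 1 joint configurations evenly spaced from start to goal."""
    if len(start) != len(goal):
        raise ValueError("start and goal must have the same number of joints")
    if steps < 1:
        raise ValueError("steps must be at least 1")
    path = []
    for k in range(steps + 1):
        t = k / steps  # interpolation parameter in [0, 1]
        path.append([s + t * (g - s) for s, g in zip(start, goal)])
    return path

def plan_dual_arm(left_start: Sequence[float], left_goal: Sequence[float],
                  right_start: Sequence[float], right_goal: Sequence[float],
                  steps: int = 10) -> Tuple[List[List[float]], List[List[float]]]:
    """Plan both arms (hypothetically: left = arm 20, right = arm 30) with the
    same waypoint count, so waypoint k of each arm can be sent together."""
    left = interpolate_joint_path(left_start, left_goal, steps)
    right = interpolate_joint_path(right_start, right_goal, steps)
    return left, right

if __name__ == "__main__":
    left, right = plan_dual_arm([0.0, 0.0, 0.0], [1.0, 0.5, -0.5],
                                [0.0, 0.0, 0.0], [-1.0, 0.5, 0.5], steps=4)
    print(left[2])  # [0.5, 0.25, -0.25], the midpoint of the left arm
```

Linear joint interpolation ignores collisions and joint limits; it only illustrates how two arms can share one timeline.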

[0082] The main control system module 100 of the robot is connected to the voice device 90 and communicates with the voice module 900; the robot interacts with the user by voice, collects voice information, and issues voice comma...
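The voice path is only outlined here. A minimal sketch, assuming a separate speech recognizer already turns audio into a text transcript, shows how a main control system could map that transcript to a command and a destination. The keyword tables, command names, and priority order below are hypothetical and not taken from the source.

```python
import re
from typing import Optional, Tuple

# Hypothetical vocabularies; the source does not list the actual voice commands.
COMMAND_KEYWORDS = {
    "stop": ("stop", "halt"),
    "navigate": ("go to", "move to", "navigate"),
    "fetch": ("pick", "grab", "fetch", "take"),
    "place": ("place", "put", "drop"),
}
# Checked in this order, so a safety command such as "stop" always wins.
PRIORITY = ("stop", "navigate", "fetch", "place")
LOCATIONS = ("classroom", "laboratory", "library", "supermarket", "ward", "warehouse")

def _contains_phrase(text: str, phrase: str) -> bool:
    """Whole-word match, so 'put' does not fire inside 'computer'."""
    return re.search(r"\b" + re.escape(phrase) + r"\b", text) is not None

def parse_voice_command(transcript: str) -> Tuple[Optional[str], Optional[str]]:
    """Return (command, location) found in a recognized transcript, or None for each."""
    text = transcript.lower()
    command = next((name for name in PRIORITY
                    if any(_contains_phrase(text, k) for k in COMMAND_KEYWORDS[name])),
                   None)
    location = next((loc for loc in LOCATIONS if _contains_phrase(text, loc)), None)
    return command, location

if __name__ == "__main__":
    print(parse_voice_command("Please go to the library"))  # ('navigate', 'library')
```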

Embodiment 2

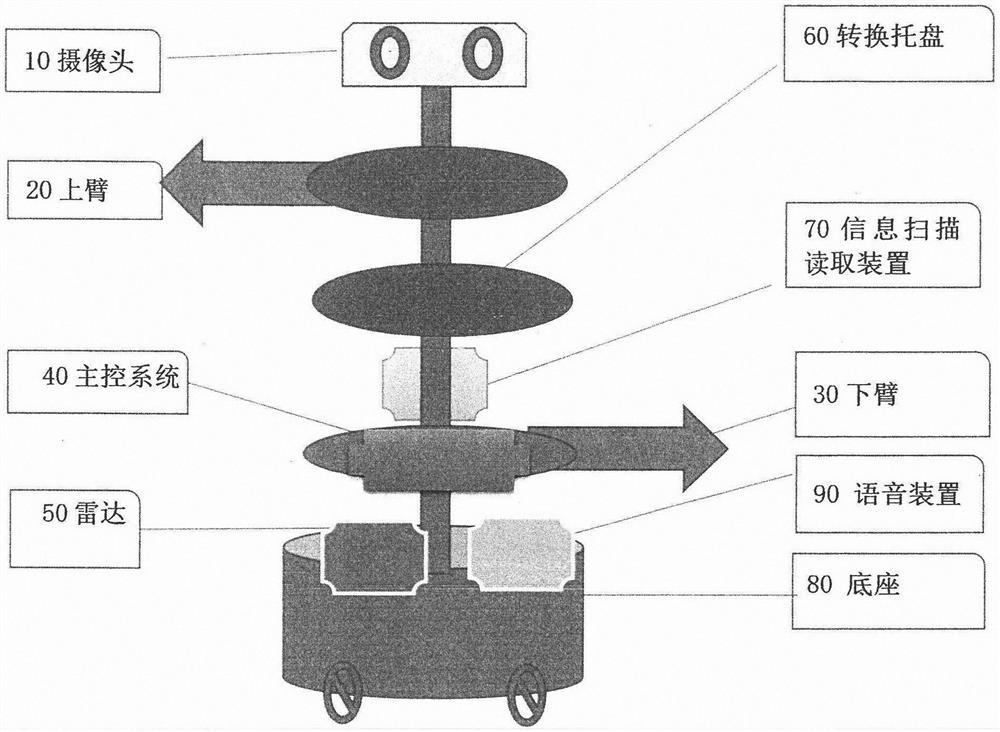

[0088] Building on Embodiment 1, the main control system module 100 of the robot, the visual recognition module 600, and the radar 50 build a map; the map-building, positioning, and navigation method is shown in Figure 2:
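How the radar 50 contributes to the map is not specified. A simplified sketch, assuming a sparse 2D grid in which each radar return increments a hit counter for the cell it lands in, looks like this. It omits the free-space ray tracing and probabilistic (log-odds) updates a real mapping stack would use, and the resolution and maximum range are assumed values.

```python
import math
from typing import Dict, Iterable, Tuple

Cell = Tuple[int, int]

def world_to_cell(x: float, y: float, resolution: float) -> Cell:
    """Map metric coordinates in the map frame to integer grid indices."""
    return math.floor(x / resolution), math.floor(y / resolution)

def integrate_scan(grid: Dict[Cell, int], pose: Tuple[float, float, float],
                   scan: Iterable[Tuple[float, float]], resolution: float = 0.1,
                   max_range: float = 8.0) -> Dict[Cell, int]:
    """Add one radar scan to a sparse hit-count grid (updated in place and returned).

    pose: robot (x, y, heading) in the map frame, heading in radians.
    scan: (bearing, range) pairs relative to the robot, in radians and metres.
    """
    x, y, heading = pose
    for bearing, rng in scan:
        if not 0.0 < rng < max_range:
            continue  # discard invalid or out-of-range returns
        angle = heading + bearing
        hit = world_to_cell(x + rng * math.cos(angle), y + rng * math.sin(angle), resolution)
        grid[hit] = grid.get(hit, 0) + 1
    return grid
```

Cells whose counters grow across many scans are likely obstacles; visual landmarks from module 600 could be stored alongside them, though the source does not say how the two are fused.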

[0089] Set the campus scene planning parameters and the environment module. Input the comprehensive features, such as colors, numbers, letters, text, and special logos. Extract the image features corresponding to the logo contour and transform them into input data. Build the image feature model and input the feature values of the detected item. Improve the weight optimizer, train on the images quickly, and obtain the output values. Based on the special-identification results, accurately identify the target and locate the target position...
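The text mentions an improved weight optimizer but does not name it. As an illustration only, the sketch below trains a binary logistic-regression classifier ("is this the target logo or not") with full-batch gradient descent plus classical momentum. Momentum is a stand-in for whatever optimizer the patent uses, and the two-feature inputs are hypothetical normalized match scores.

```python
import math
from typing import List, Sequence, Tuple

def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def train_logistic_momentum(X: Sequence[Sequence[float]], y: Sequence[int],
                            lr: float = 0.5, momentum: float = 0.9,
                            epochs: int = 500) -> Tuple[List[float], float]:
    """Full-batch gradient descent with heavy-ball momentum on the log loss."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    vw, vb = [0.0] * d, 0.0  # velocity terms kept by the momentum optimizer
    for _ in range(epochs):
        gw, gb = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                gw[j] += err * xi[j] / n
            gb += err / n
        vw = [momentum * v - lr * g for v, g in zip(vw, gw)]
        vb = momentum * vb - lr * gb
        w = [wj + v for wj, v in zip(w, vw)]
        b += vb
    return w, b

def predict(w: Sequence[float], b: float, x: Sequence[float]) -> bool:
    """True if the feature vector is classified as the target."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) >= 0.5

if __name__ == "__main__":
    # Hypothetical samples: [color-match score, contour-match score].
    X = [[0.9, 0.8], [0.8, 0.9], [0.1, 0.2], [0.2, 0.1]]
    y = [1, 1, 0, 0]
    w, b = train_logistic_momentum(X, y)
    print([predict(w, b, x) for x in X])
```

Momentum accumulates a velocity across steps, which typically speeds convergence along consistent gradient directions compared with plain gradient descent.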

[0090] The robot moves to the target position: the navigation target is specified through the main control system 10, and the parameters frame_id, goal_id, PoseStamped, and PositionPose are set, together with the target composition of the Q...
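The parameter names frame_id, goal_id, and PoseStamped echo ROS conventions, where a navigation goal carries a geometry_msgs/PoseStamped (a header with a frame_id, plus a pose made of a position and an orientation quaternion). The sketch below mirrors that layout in plain Python dataclasses so it runs without ROS. It is not the rospy API; the "map" frame and the yaw-only orientation are assumptions, and PositionPose from the source is not modeled.

```python
import math
from dataclasses import dataclass

# Plain-Python mirrors of the ROS message layout; NOT the rospy/geometry_msgs API.
@dataclass
class Point:
    x: float
    y: float
    z: float = 0.0

@dataclass
class Quaternion:
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0
    w: float = 1.0

@dataclass
class Pose:
    position: Point
    orientation: Quaternion

@dataclass
class Header:
    frame_id: str
    stamp: float = 0.0  # seconds; ROS uses its own time type here

@dataclass
class PoseStamped:
    header: Header
    pose: Pose

@dataclass
class NavGoal:
    goal_id: str  # hypothetical identifier used to track or cancel the goal
    target_pose: PoseStamped

def yaw_to_quaternion(yaw: float) -> Quaternion:
    """Unit quaternion for a rotation of `yaw` radians about the vertical (z) axis."""
    half = yaw / 2.0
    return Quaternion(0.0, 0.0, math.sin(half), math.cos(half))

def make_nav_goal(goal_id: str, x: float, y: float, yaw: float,
                  frame_id: str = "map") -> NavGoal:
    """Build a planar navigation goal expressed in the given reference frame."""
    pose = Pose(Point(x, y), yaw_to_quaternion(yaw))
    return NavGoal(goal_id, PoseStamped(Header(frame_id), pose))
```

For example, `make_nav_goal("shelf_A", 2.0, 3.5, math.pi / 2)` describes a goal 2.0 m and 3.5 m along the map axes with the robot facing the +y direction.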

Embodiment 3

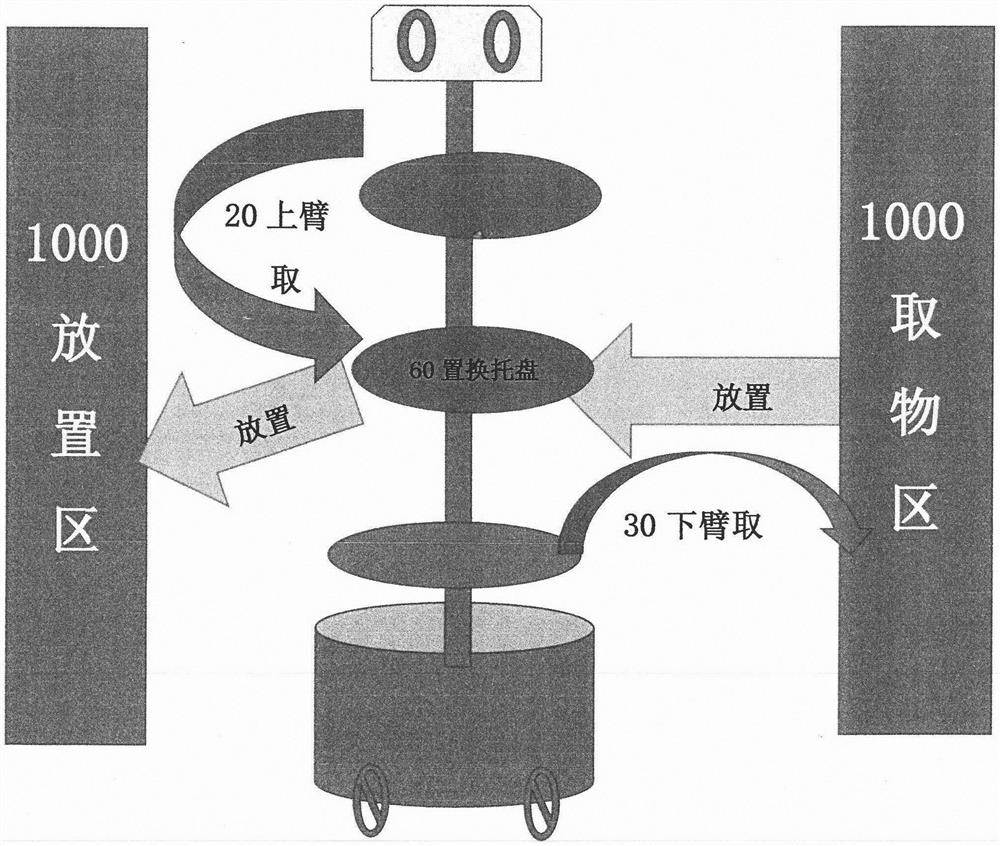

[0093] Building on Embodiment 1, the main control system module of the robot interacts with the visual recognition module and the robot arms 20 and 30. The target setting, target recognition, target positioning, and action planning methods are shown in Figure 3:

[0094] In the picking and placing area 1000, the visual recognition module is used to create and identify the target (setting the size, pose, and color of the target object), and a mathematical model is created according to the characteristics of the equipment, objects, and scene. Color, contour, numeric code, two-dimensional code, text, and image features corresponding to special logo images are extracted, and the grasping targets are classified and identified.
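As a sketch of how a target could be defined by color and size and then matched against a detection, assuming an RGB color sample and bounding-box dimensions have already been measured, the code below classifies color by hue with Python's standard `colorsys` module and checks each dimension against a relative tolerance. The hue bands, saturation and brightness thresholds, and the 15% tolerance are hypothetical, and the target pose is not modeled.

```python
import colorsys
from dataclasses import dataclass
from typing import Dict, List, Optional, Sequence, Tuple

# Hypothetical hue bands (colorsys hue is in [0, 1)); red wraps around 0.
HUE_BANDS: Dict[str, List[Tuple[float, float]]] = {
    "red": [(0.0, 0.05), (0.95, 1.0)],
    "yellow": [(0.12, 0.20)],
    "green": [(0.25, 0.45)],
    "blue": [(0.55, 0.72)],
}

def classify_color(rgb: Tuple[int, int, int], min_saturation: float = 0.3,
                   min_value: float = 0.2) -> Optional[str]:
    """Name the dominant color of an 8-bit RGB sample, or None if grey, dark or unlisted."""
    h, s, v = colorsys.rgb_to_hsv(*(c / 255.0 for c in rgb))
    if s < min_saturation or v < min_value:
        return None  # too grey or too dark to trust the hue
    for name, bands in HUE_BANDS.items():
        if any(lo <= h <= hi for lo, hi in bands):
            return name
    return None

@dataclass
class TargetSpec:
    name: str
    color: str
    size_mm: Tuple[float, float, float]  # expected length, width, height
    size_tolerance: float = 0.15         # allowed relative deviation per axis

def matches_target(spec: TargetSpec, rgb: Tuple[int, int, int],
                   measured_mm: Sequence[float]) -> bool:
    """True if the detected object's color and size agree with the target spec."""
    if classify_color(rgb) != spec.color:
        return False
    return all(abs(m - e) <= spec.size_tolerance * e
               for m, e in zip(measured_mm, spec.size_mm))
```

Hue is used instead of raw RGB because it changes less under uneven lighting, which matters in classrooms and warehouses alike.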

[0095] Convert the feature values of colors, numbers, letters, text, special identification values, etc. into input data. Establish a mathematical model of the image features, and input the feature value of th...
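One way to turn heterogeneous features into input data is to concatenate one-hot vectors for categorical features (color, special logo) with a fixed-length numeric code for printed digits and letters. This is a hypothetical encoding chosen for illustration: the vocabularies and the ordinal character code are assumptions, and a production system would more likely use learned embeddings for text.

```python
from typing import List, Sequence

# Hypothetical vocabularies; the source lists feature kinds, not concrete values.
COLOR_VOCAB = ("red", "green", "blue", "yellow")
LOGO_VOCAB = ("none", "biohazard", "fragile", "qr_code")
ALPHABET = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ"

def one_hot(value: str, vocab: Sequence[str]) -> List[float]:
    """One-hot vector; an unknown value yields all zeros."""
    return [1.0 if value == item else 0.0 for item in vocab]

def encode_label_text(text: str, max_len: int = 4) -> List[float]:
    """Ordinal code in (0, 1] for each digit/letter, truncated or zero-padded to max_len."""
    codes = []
    for ch in text.upper()[:max_len]:
        idx = ALPHABET.find(ch)
        codes.append((idx + 1) / len(ALPHABET) if idx >= 0 else 0.0)
    return codes + [0.0] * (max_len - len(codes))

def encode_features(color: str, label_text: str, logo: str) -> List[float]:
    """Concatenate color, printed-text and logo features into one input vector."""
    return one_hot(color, COLOR_VOCAB) + encode_label_text(label_text) + one_hot(logo, LOGO_VOCAB)
```

A fixed vector length (here 4 + 4 + 4 = 12) lets every detected item feed the same model input regardless of which features it carries.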
