Tracking device, tracking method, and tracking system

A tracking device and its information processing method are applied in the fields of tracking devices, information processing, readable storage media, and electronic equipment, and can solve problems such as insufficient accuracy in determining a person's movement trajectory.

Examples

First embodiment

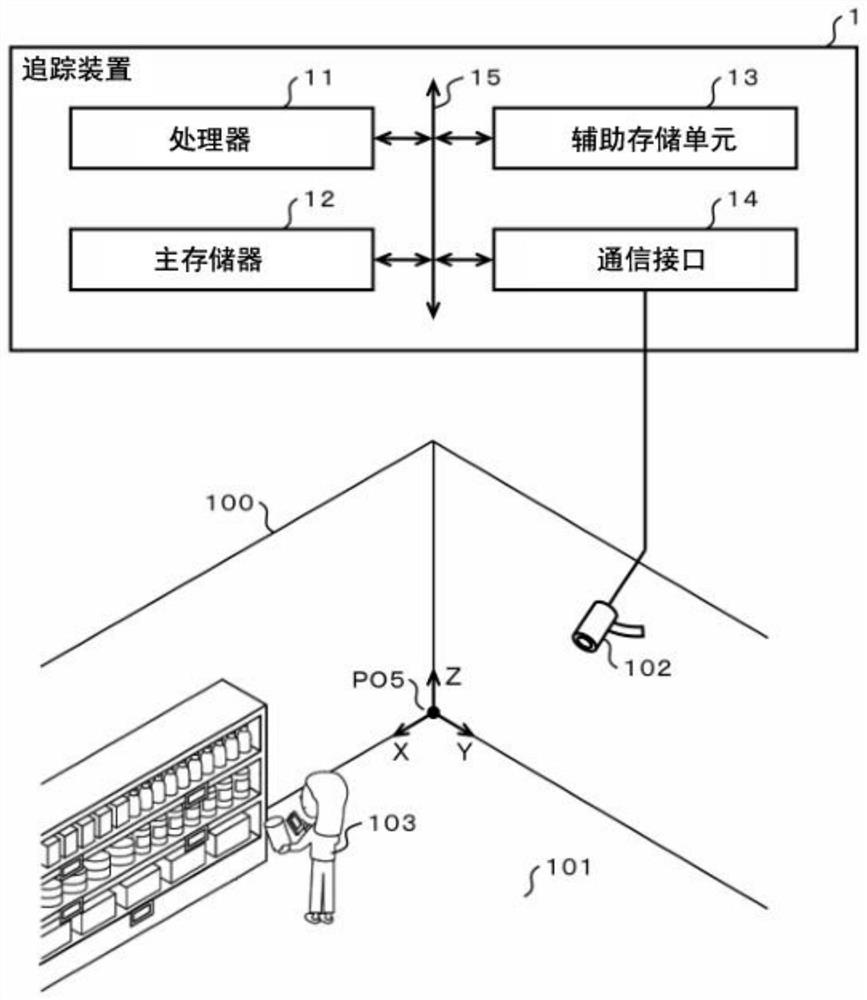

[0044] Figure 1 is a block diagram showing the main circuit configuration of the tracking device 1 according to the first embodiment.

[0045] The tracking device 1 tracks the actions of the person 103 in the store 101 based on the detection results of the person 103 from the smart camera 102, which is installed to capture images of the store 101 of the store 100.

[0046] The smart camera 102 captures a moving image (video). It determines the area in which the person 103 appears in the captured moving image (hereinafter referred to as the recognition area), and measures the distance from the smart camera 102 to the person 103 appearing in that image. Any distance measurement method, such as a stereo camera method or a ToF (Time of Flight) method, can be applied. Every time the smart camera 102 determines a new recognition area, it outputs detection data including area data specifying the recognition area and distance data ind...
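As a rough illustration of the detection data described in [0046], the following minimal sketch models one detection record and the round-trip relation behind ToF ranging. The class `DetectionData`, its field names, the bounding-box form of the area data, the meter unit, and the helpers `tof_distance_m` and `make_detection` are all assumptions made for illustration; the source does not specify the data layout.

```python
from dataclasses import dataclass

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


@dataclass(frozen=True)
class DetectionData:
    """Hypothetical record output each time a new recognition area is determined."""
    # Area data: assumed here to be an axis-aligned box in pixel coordinates.
    x: int
    y: int
    width: int
    height: int
    # Distance data: assumed here to be the camera-to-person distance in meters.
    distance_m: float


def tof_distance_m(round_trip_s: float) -> float:
    """Convert a time-of-flight round-trip time to a one-way distance."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The light travels to the person and back, so halve the path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def make_detection(x: int, y: int, width: int, height: int,
                   round_trip_s: float) -> DetectionData:
    """Bundle a recognition area with a ToF-derived distance (illustrative only)."""
    return DetectionData(x, y, width, height, tof_distance_m(round_trip_s))
```

A stereo camera method would replace `tof_distance_m` with a disparity-based calculation; the record itself would not need to change under these assumptions.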

Second embodiment

[0100] Figure 5 is a block diagram showing the main circuit configuration of the tracking device 2 according to the second embodiment. In Figure 5, elements identical to those shown in Figure 1 are given the same symbols, and their detailed descriptions are omitted.

[0101] The tracking device 2 tracks the actions of the person 103 in the store 101 based on the detection results of the person 103 captured by each of the two smart cameras 102 installed for taking pictures of the store 101 of the store 100 .

[0102] The two smart cameras 102 are installed so that the directions of their imaging centers differ from each other and so that both can image one person 103 simultaneously.
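The installation condition in [0102] can be pictured geometrically. The sketch below uses a simplified 2D floor plan to test whether two cameras point in different directions and whether a given position lies inside both horizontal fields of view. The `Camera` class, the 2D model, the field-of-view parameter, and `both_can_image` are hypothetical and are not taken from the source.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Camera:
    """Hypothetical 2D camera pose on a floor plan."""
    x: float
    y: float
    heading_deg: float  # direction of the imaging center
    fov_deg: float      # full horizontal field of view (assumed parameter)

    def sees(self, px: float, py: float) -> bool:
        """Return True if point (px, py) lies within the horizontal field of view."""
        bearing = math.degrees(math.atan2(py - self.y, px - self.x))
        # Smallest signed angle between bearing and heading, in [-180, 180).
        diff = (bearing - self.heading_deg + 180.0) % 360.0 - 180.0
        return abs(diff) <= self.fov_deg / 2.0


def both_can_image(cam_a: Camera, cam_b: Camera, px: float, py: float) -> bool:
    """Check that headings differ and that both cameras cover the point."""
    headings_differ = (cam_a.heading_deg - cam_b.heading_deg) % 360.0 != 0.0
    return headings_differ and cam_a.sees(px, py) and cam_b.sees(px, py)
```

For example, cameras at (0, 0) facing 45° and at (10, 0) facing 135°, each with a 90° field of view, both cover a person standing at (5, 5) in this model.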

[0103] The hardware configuration of the tracking device 2 is the same as that of the tracking device 1 . The tracking device 2 differs from the tracking device 1 in that the main memory 12 or the auxiliary storage unit 13 stores an information processing program for pe...
