Autonomous robot navigation method and system based on multi-angle visual perception

An autonomous robot navigation technique based on visual perception, applied in the field of robot navigation. It addresses problems such as increased model-training difficulty, redundant image input information, and over-reliance, and improves path planning and obstacle avoidance capabilities.

Examples

Embodiment 1

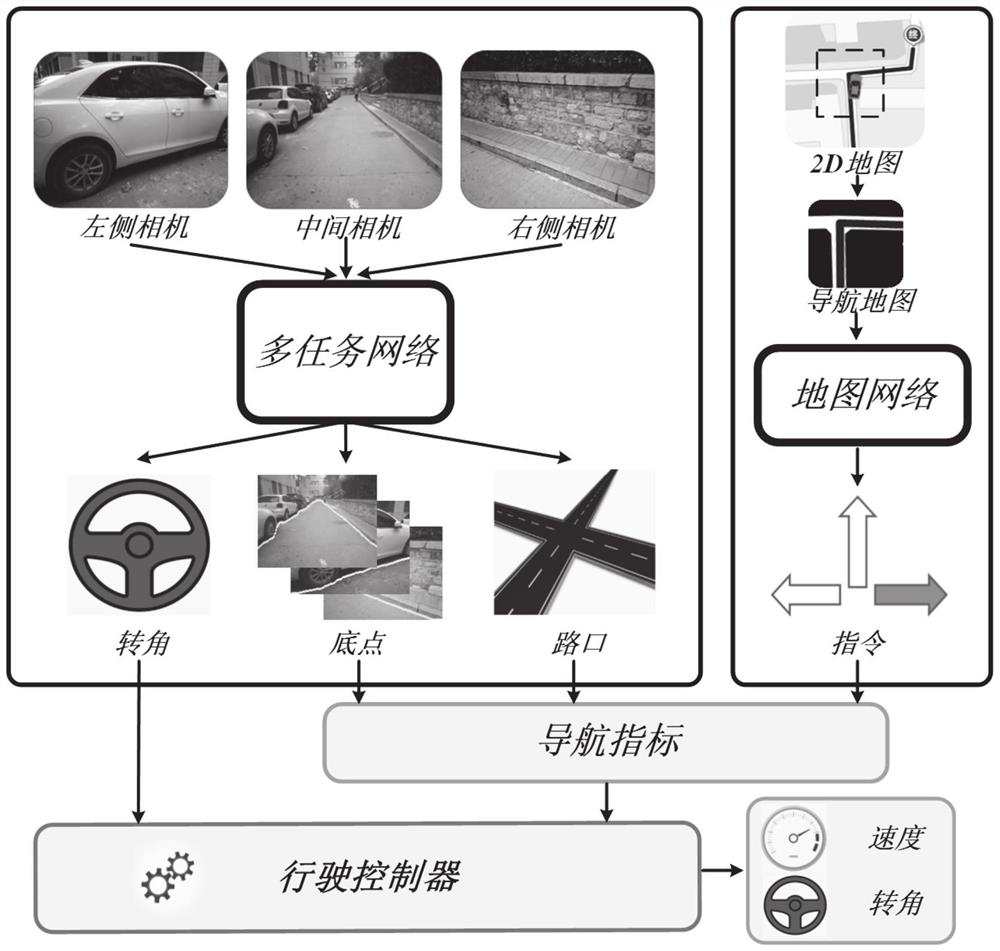

[0037] Referring to Figure 1, the autonomous robot navigation method based on multi-angle visual perception of this embodiment includes:

[0038] Step 1: Obtain the image of the robot's forward direction and the images of its left and right sides in real time, and input them to the multi-task network.

[0039] In a specific implementation, cameras or video cameras mounted at the front of the robot and on both of its sides may be used to acquire the images and obtain the corresponding visual information.
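Step 1 can be illustrated with a minimal sketch that reads one image from each of the three cameras and bundles them into a single input. The names `MultiViewFrame` and `grab_views`, and the use of nested lists as images, are assumptions made for illustration and do not come from the patent text.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# An "image" here is a nested list of pixel rows; a real system would use
# whatever array type its camera driver returns.
Image = List[List[int]]


@dataclass(frozen=True)
class MultiViewFrame:
    """One set of views taken together (hypothetical container)."""
    front: Image
    left: Image
    right: Image


def grab_views(cameras: Dict[str, Callable[[], Image]]) -> MultiViewFrame:
    """Read one image from each of the three cameras and bundle them."""
    missing = {"front", "left", "right"} - set(cameras)
    if missing:
        raise KeyError(f"missing camera(s): {sorted(missing)}")
    return MultiViewFrame(
        front=cameras["front"](),
        left=cameras["left"](),
        right=cameras["right"](),
    )


# Stand-in cameras that return constant 2x2 images.
fake_cameras = {
    "front": lambda: [[0, 0], [0, 0]],
    "left": lambda: [[1, 1], [1, 1]],
    "right": lambda: [[2, 2], [2, 2]],
}
frame = grab_views(fake_cameras)
```

Bundling the three views in one object keeps them together as a single input to the network, so a missing side camera is reported at acquisition time rather than inside the network.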

[0040] Step 2: Predict the robot's freely drivable area, the intersection location, and the steering direction at the intersection through the multi-task network.

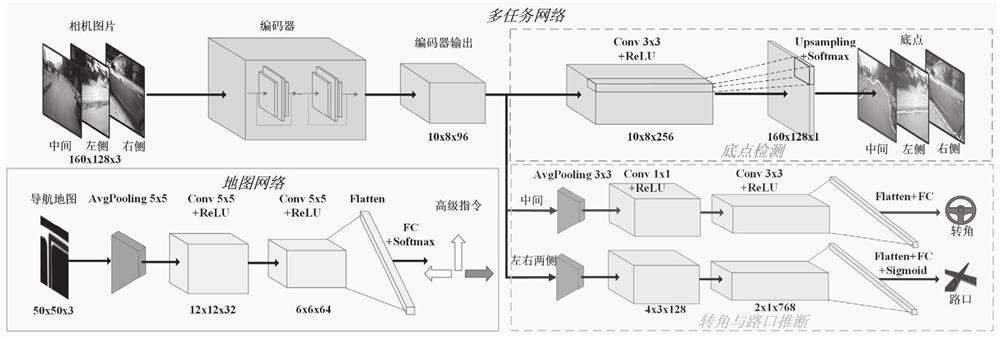

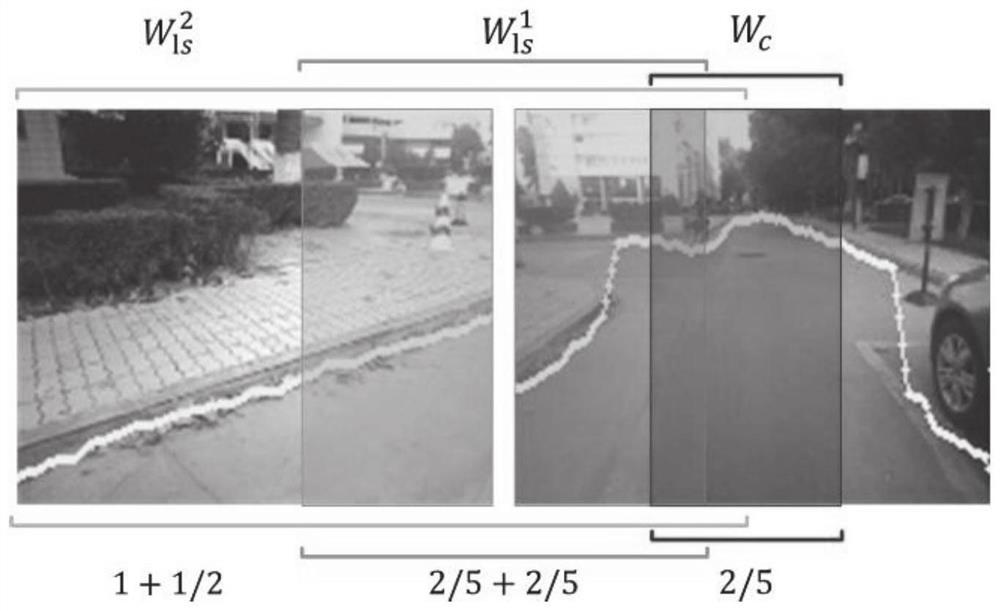

[0041] As can be seen from Figure 2, the multi-task network of this embodiment includes an encoder, a bottom point detection network, and a corner and intersection inference network; the encoder is used to extract features from the image of the robot's forward direction and from the images on the left and right si...
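The three components named above can be sketched structurally as a shared encoder feeding two task heads. This is only an illustration of the data flow: the class name `MultiTaskNetwork`, the output keys, and the toy stand-in functions are hypothetical. The paragraph above is truncated, so how features are actually routed to each sub-network is not visible, and the sketch simply assumes both heads receive the same encoder output.

```python
from typing import Callable, Dict, List

Image = List[List[float]]
Features = List[float]


class MultiTaskNetwork:
    """Shared encoder feeding two task heads (structure only, no learning)."""

    def __init__(
        self,
        encoder: Callable[[Image, Image, Image], Features],
        bottom_point_head: Callable[[Features], object],
        corner_intersection_head: Callable[[Features], object],
    ) -> None:
        self.encoder = encoder
        self.bottom_point_head = bottom_point_head
        self.corner_intersection_head = corner_intersection_head

    def predict(self, front: Image, left: Image, right: Image) -> Dict[str, object]:
        # Features are computed once and reused by both heads (an assumption).
        features = self.encoder(front, left, right)
        return {
            "bottom_points": self.bottom_point_head(features),
            "corner_intersection": self.corner_intersection_head(features),
        }


def mean_pixel(img: Image) -> float:
    """Average pixel value of an image, used as a toy feature."""
    pixels = [p for row in img for p in row]
    return sum(pixels) / len(pixels)


# Toy stand-ins for the three sub-networks; real ones would be trained models.
net = MultiTaskNetwork(
    encoder=lambda f, l, r: [mean_pixel(f), mean_pixel(l), mean_pixel(r)],
    bottom_point_head=lambda feats: feats[0],
    corner_intersection_head=lambda feats: max(range(3), key=lambda i: feats[i]),
)
out = net.predict([[0.0, 1.0]], [[4.0, 4.0]], [[2.0, 2.0]])
```

Sharing one encoder across the heads is the usual motivation for a multi-task design: the image features are extracted once per frame instead of once per task.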

Embodiment 2

[0095] This embodiment provides an autonomous robot navigation system based on multi-angle visual perception, which includes:

[0096] (1) An image acquisition module, which is used to acquire the image of the robot's forward direction and the images of its left and right sides in real time, and to input them to the multi-task network.

[0097] In a specific implementation, cameras or video cameras mounted at the front of the robot and on both of its sides may be used to acquire the images and obtain the corresponding visual information.

[0098] (2) A navigation prediction module, which is used to predict the robot's freely drivable area, the intersection location, and the steering direction at the intersection through the multi-task network.

[0099] As can be seen from Figure 2, the multi-task network of this embodiment includes an encoder, a bottom point detection network, and a corner and intersection inference network; the encoder is used to e...
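The two modules of this embodiment can be sketched as small classes wired into one system. The class names, the `step` method, and the stub network are hypothetical. Only the two-module split and the three predicted quantities are taken from the text above.

```python
from typing import Callable, Dict, Tuple

Image = list  # placeholder image type for this sketch


class ImageAcquisitionModule:
    """Module (1): reads the front, left, and right views."""

    def __init__(self, read_front: Callable[[], Image],
                 read_left: Callable[[], Image],
                 read_right: Callable[[], Image]) -> None:
        self._readers = (read_front, read_left, read_right)

    def acquire(self) -> Tuple[Image, Image, Image]:
        front, left, right = (read() for read in self._readers)
        return front, left, right


class NavigationPredictionModule:
    """Module (2): runs the multi-task network on the acquired views."""

    def __init__(self, network: Callable[[Image, Image, Image], Dict[str, object]]) -> None:
        self._network = network

    def predict(self, views: Tuple[Image, Image, Image]) -> Dict[str, object]:
        return self._network(*views)


class NavigationSystem:
    """Wires acquisition into prediction for one control step."""

    def __init__(self, acquisition: ImageAcquisitionModule,
                 prediction: NavigationPredictionModule) -> None:
        self.acquisition = acquisition
        self.prediction = prediction

    def step(self) -> Dict[str, object]:
        return self.prediction.predict(self.acquisition.acquire())


def stub_network(front: Image, left: Image, right: Image) -> Dict[str, object]:
    """Placeholder for a trained network; returns fixed dummy predictions."""
    return {
        "drivable_area": "unknown",
        "intersection_location": None,
        "intersection_steering": None,
        "views_seen": len([front, left, right]),
    }


system = NavigationSystem(
    ImageAcquisitionModule(lambda: [0], lambda: [1], lambda: [2]),
    NavigationPredictionModule(stub_network),
)
result = system.step()
```

Keeping acquisition and prediction behind separate interfaces lets the camera setup or the network be swapped independently, for example replacing the stub with a real model during testing.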

Embodiment 3

[0135] This embodiment provides a computer-readable storage medium on which a computer program is stored. When executed by a processor, the program implements the steps of the above-mentioned autonomous robot navigation method based on multi-angle visual perception.

