Complex scene recognition method and system based on multispectral image fusion

A multispectral image and complex scene technology, applied to neural learning methods, character and pattern recognition, instruments, and related fields. It addresses the problems of inaccurate information extraction, poor feature extraction ability, and long calculation time, with the effects of enabling intelligent scene recognition, enhancing feature extraction capability, and reducing computational cost.

Examples

Embodiment 1

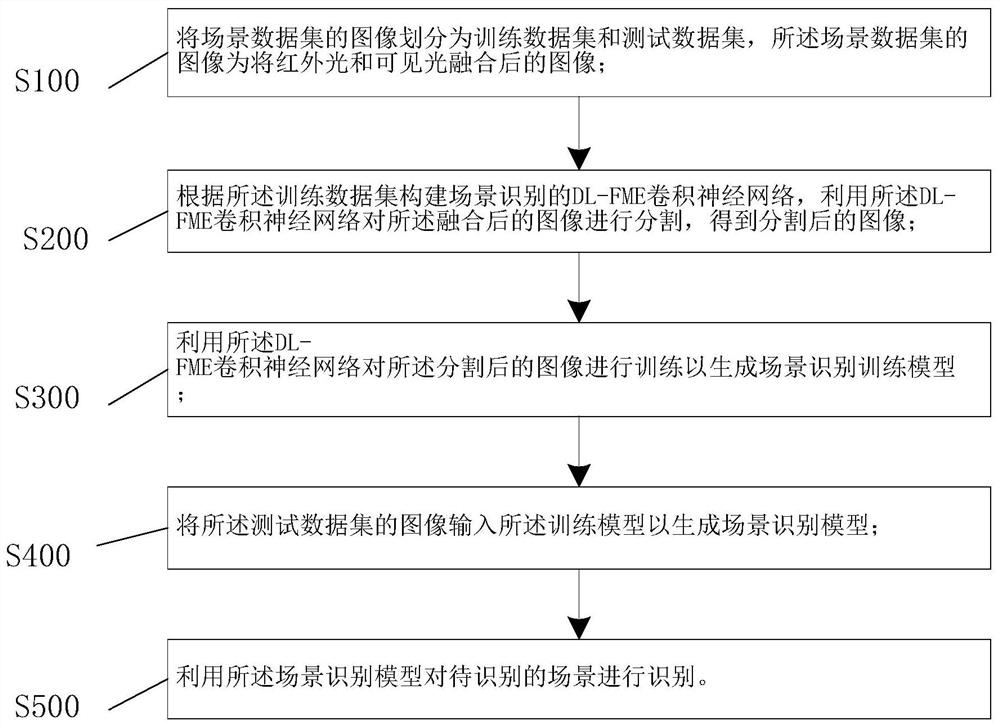

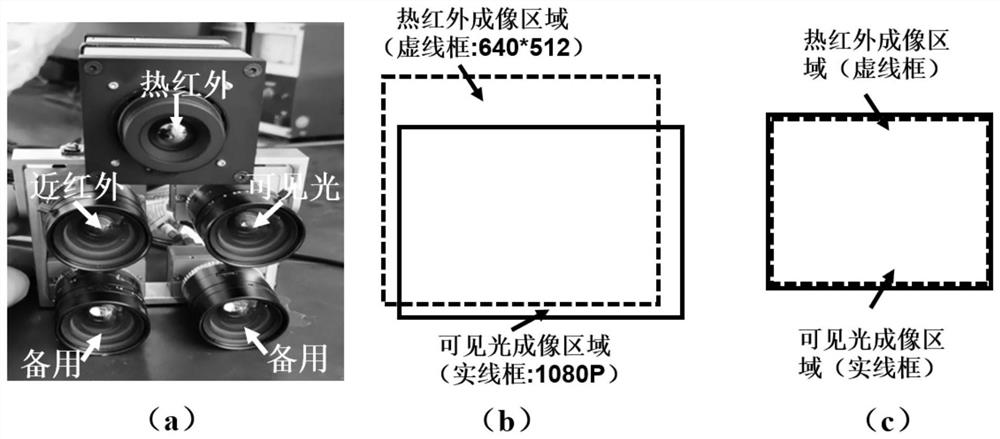

[0067] See Figure 1, a schematic flow chart of a complex scene recognition method based on multispectral image fusion provided by an embodiment of the present invention.

[0068] The method includes the following steps:

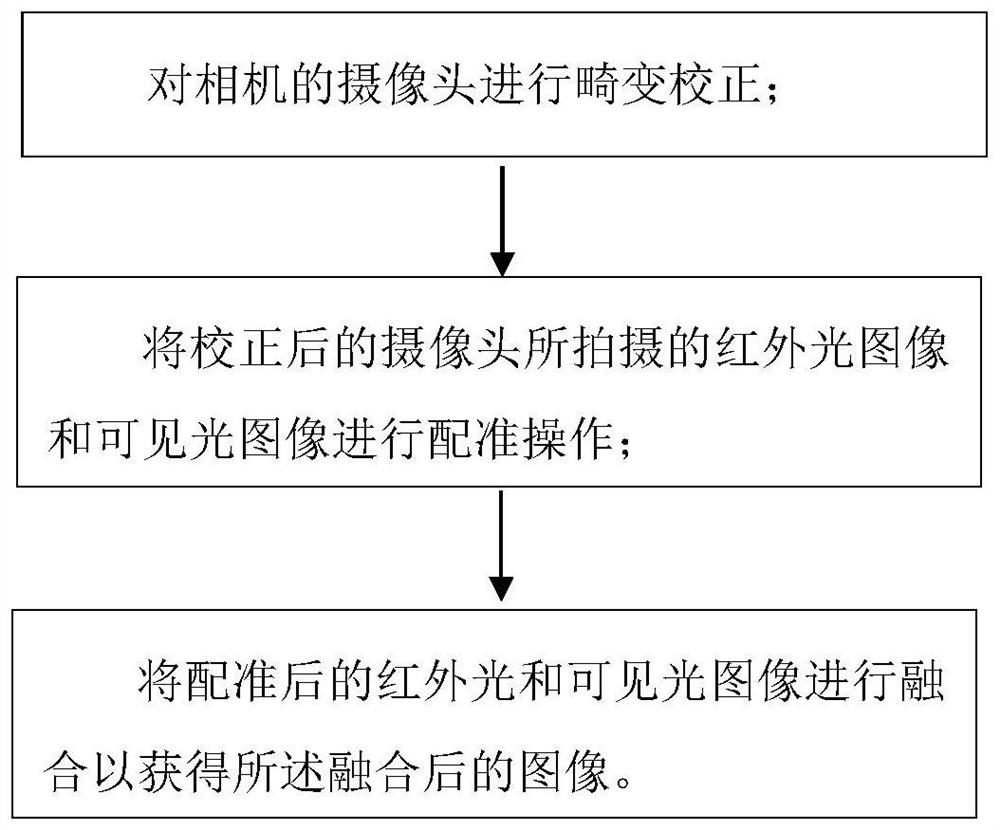

[0069] S100: Divide the images of the scene data set into a training data set and a test data set, where each image in the scene data set is obtained by fusing infrared light and visible light;

[0070] S200: Construct a DL-FME convolutional neural network for scene recognition according to the training data set, and use the DL-FME convolutional neural network to segment the fused image to obtain a segmented image;

[0071] S300: Use the DL-FME convolutional neural network to train on the segmented images to generate a scene recognition training model;

[0072] S400: Input the images of the test data set into the scene recognition training model to generate a scene recognition model;

[0073] S500: Use the scene recognition model to recogni...
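Step S100 can be illustrated with a minimal sketch. The excerpt does not say how the infrared and visible-light images are fused or what split ratio is used, so the pixel-wise weighted average, the `alpha` weight, the 80/20 split, and the function names `fuse_pixelwise` and `split_dataset` below are all assumptions chosen for illustration, not the patent's method. The DL-FME network itself is not described here and is not sketched.

```python
import random
from typing import List, Sequence, Tuple

# A grayscale image as rows of pixel intensities (illustrative representation).
Image = List[List[float]]


def fuse_pixelwise(infrared: Image, visible: Image, alpha: float = 0.5) -> Image:
    """Fuse two same-sized images by a weighted average.

    ASSUMPTION: the source does not specify the fusion rule; a weighted
    average is used only as a simple stand-in.
    """
    same_shape = len(infrared) == len(visible) and all(
        len(a) == len(b) for a, b in zip(infrared, visible)
    )
    if not same_shape:
        raise ValueError("infrared and visible images must have the same shape")
    return [
        [alpha * ir + (1.0 - alpha) * vis for ir, vis in zip(row_ir, row_vis)]
        for row_ir, row_vis in zip(infrared, visible)
    ]


def split_dataset(
    samples: Sequence, test_fraction: float = 0.2, seed: int = 0
) -> Tuple[list, list]:
    """Split samples into (train, test) with a seeded random shuffle.

    ASSUMPTION: the split ratio and the random strategy are hypothetical.
    """
    if not 0.0 < test_fraction < 1.0:
        raise ValueError("test_fraction must be strictly between 0 and 1")
    indices = list(range(len(samples)))
    random.Random(seed).shuffle(indices)
    # Always hold out at least one sample for testing.
    n_test = max(1, round(len(samples) * test_fraction))
    test_idx = set(indices[:n_test])
    train = [s for i, s in enumerate(samples) if i not in test_idx]
    test = [s for i, s in enumerate(samples) if i in test_idx]
    return train, test
```

A fixed seed keeps the split reproducible, so the training and test sets stay the same between runs when results are compared.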

Embodiment 2

[0146] See Figure 6, a schematic structural diagram of a complex scene recognition system based on multispectral image fusion provided by an embodiment of the present invention, including:

[0147] A fusion module, for dividing the images of the scene data set into a training data set and a test data set, where each image in the scene data set is obtained by fusing infrared light and visible light;

[0148] A training module, for using the DL-FME convolutional neural network to train on the segmented images to generate a scene recognition training model;

[0149] An extraction module, an important part of the training stage, located within the DL-FME convolutional neural network for scene recognition that is constructed according to the training data set; it uses the DL-FME convolutional neural network to perform feature extraction on the fused image to obtain the features of the fused image;

[0150] An enhancement module, for inputting the images...

