Cross-camera road space fusion and vehicle target detection tracking method and system

A space fusion and target detection technology, applied in the field of intelligent transportation, that addresses problems such as the limited monitoring range of camera sensors.

Examples

Embodiment 1

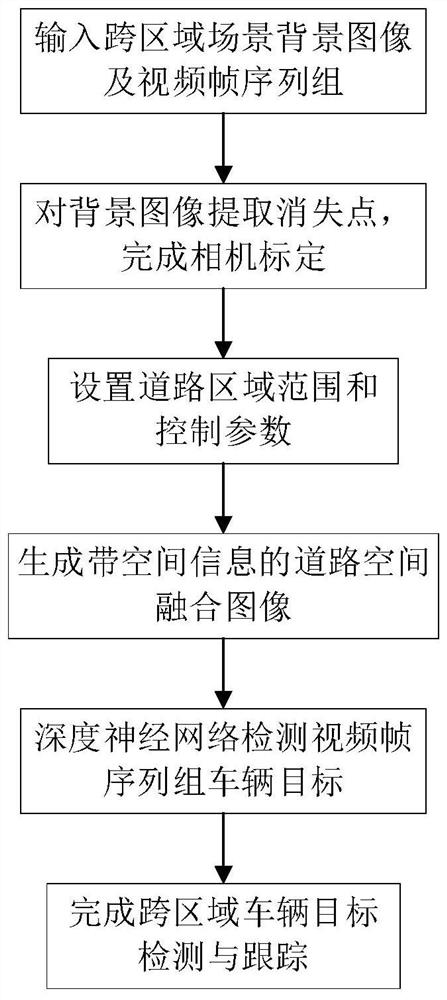

[0047] As shown in Figures 1 to 7, the present invention discloses a method and system for cross-camera road space fusion and vehicle target detection and tracking. The detailed steps are as follows:

[0048] Step 1: input the traffic scene background images p1 and p2 of scene 1 and scene 2, and the video frame image sequence groups s1 and s2. Here, a background image is an image that contains no vehicle targets, and a video frame image is an image extracted from the original video captured by the camera.

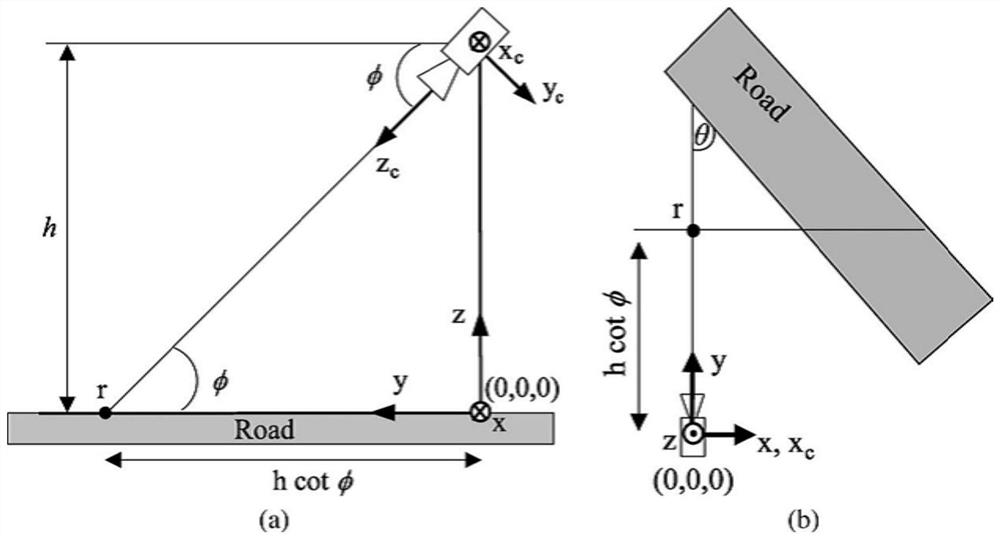

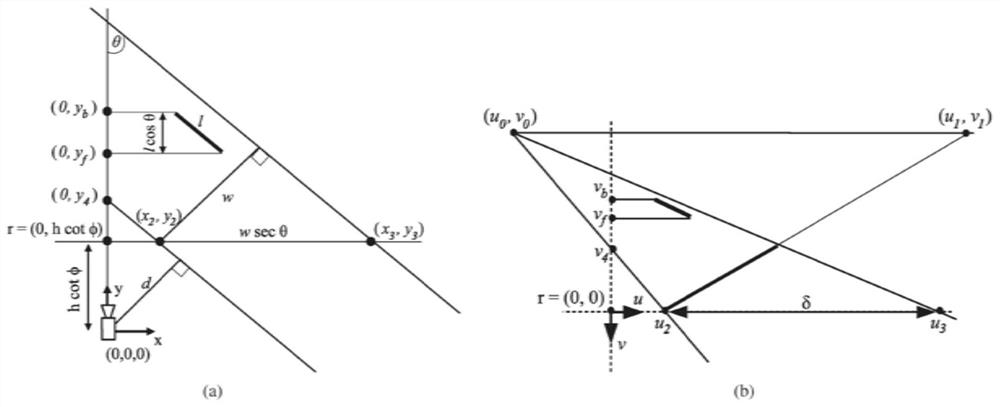

[0049] Step 2: construct the coordinate systems and models, and complete the camera calibration. From the background images p1 and p2 of step 1, extract the vanishing point, establish the camera model and coordinate systems (world coordinate system, image coordinate system) and the two-dimensional enveloping frame model of the vehicle target in the image coordinate system, and combine the vanishing point for camera calibration to obtai...
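Step 2 relies on a vanishing point extracted from the background images. The text above does not say how that point is extracted, so the following is only a minimal sketch of one common approach, assuming two straight lane markings have already been selected in the image: each marking is converted into a homogeneous line and the two lines are intersected. The function names and the sample pixel coordinates are hypothetical and are not taken from the patent.

```python
def line_through(p, q):
    """Homogeneous line (a, b, c) through two image points,
    i.e. the cross product of (x1, y1, 1) and (x2, y2, 1)."""
    (x1, y1), (x2, y2) = p, q
    return (y1 - y2, x2 - x1, x1 * y2 - x2 * y1)


def vanishing_point(segment_1, segment_2, eps=1e-9):
    """Intersect the lines carrying two lane-marking segments.

    Each segment is a pair of (x, y) pixel coordinates.
    Returns (x, y), or None when the lines are parallel in the image
    and therefore have no finite intersection.
    """
    a1, b1, c1 = line_through(*segment_1)
    a2, b2, c2 = line_through(*segment_2)
    w = a1 * b2 - a2 * b1  # third component of the cross product of the lines
    if abs(w) < eps:
        return None
    x = (b1 * c2 - b2 * c1) / w
    y = (a2 * c1 - a1 * c2) / w
    return (x, y)


# Hypothetical lane markings that converge towards the top of the image.
left_marking = ((0, 100), (50, 50))
right_marking = ((200, 100), (150, 50))
print(vanishing_point(left_marking, right_marking))
```

In practice more than two markings would usually be available; a least-squares intersection over all of them is a natural extension, but that is outside what the text above specifies.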

Embodiment 2

[0084] This embodiment provides a cross-camera road space fusion and vehicle target detection and tracking system, the system includes:

[0085] The data input module is used to input multiple traffic scene background images that need to be spliced and the video frame image sequence group corresponding to the scene that contains the vehicle;

[0086] The camera calibration module is used to establish the camera model and coordinate system, the two-dimensional envelope model of the vehicle target in the image coordinate system, perform camera calibration, obtain camera calibration parameters and the final scene two-dimensional-three-dimensional transformation matrix;

[0087] The control-point road area identification module is used to set two control points in each of p1 and p2 to identify the range of the road area. The control points are located on the centerline of the road. Set the world coordinates and image coordinates of the control points in sc...
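The modules above depend on a two-dimensional to three-dimensional transformation and on control points that carry both image and world coordinates. As a hedged illustration only, the sketch below assumes the road is planar, so that the mapping between ground-plane world coordinates and image coordinates can be written as a 3x3 homography `H`. The matrix values, the control-point positions, and the helper names are all hypothetical; the patent's actual calibration parameters are not given in this excerpt. The range check (projecting a point onto the segment between the two control points) is likewise an assumption about how "the range of the road area" could be tested.

```python
def apply_h(H, x, y):
    """Apply a 3x3 homography to a 2D point and dehomogenise."""
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return (u / w, v / w)


def invert_3x3(M):
    """Inverse of a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = M
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("matrix is singular")
    return [
        [(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
        [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
        [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det],
    ]


def within_road_range(point, cp_a, cp_b):
    """True if the point's projection onto segment cp_a -> cp_b
    falls between the two control points (all in world coordinates)."""
    dx, dy = cp_b[0] - cp_a[0], cp_b[1] - cp_a[1]
    length_sq = dx * dx + dy * dy
    if length_sq == 0:
        raise ValueError("control points must be distinct")
    t = ((point[0] - cp_a[0]) * dx + (point[1] - cp_a[1]) * dy) / length_sq
    return 0.0 <= t <= 1.0


# Hypothetical world -> image homography for one camera (not from the patent).
H = [[2.0, 0.0, 100.0],
     [0.0, 1.0, 50.0],
     [0.0, 0.001, 1.0]]
H_inv = invert_3x3(H)

# Two hypothetical control points on the road centerline, in metres.
cp_a, cp_b = (0.0, 0.0), (0.0, 60.0)

pixel = apply_h(H, *cp_b)          # world -> image
recovered = apply_h(H_inv, *pixel)  # image -> world
print(pixel, recovered, within_road_range((1.5, 30.0), cp_a, cp_b))
```

Expressing each camera's road area in the same world coordinate system is what allows the two scenes to be compared directly; a full 3x4 projection matrix would be needed for points that are not on the road plane.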

Embodiment 3

[0092] In order to verify the validity of the method proposed by the present invention, an embodiment of the present invention uses the set of real road traffic scene images shown in Figure 4, in which a single vanishing point along the road direction is identified and the camera is calibrated. Figure 5 shows the road space fusion result obtained with the proposed method. On this basis, the vehicle targets in the video frame sequence groups are detected by a deep network method and combined with the road space fusion results to complete cross-camera vehicle target detection and tracking; the results are shown in Figure 7.
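The paragraph above combines per-camera detections with the fused road space but does not state the association rule. One simple possibility, shown here purely as an assumption, is to express each vehicle's position in the shared world coordinate system and greedily pair the nearest positions from the two cameras within a distance gate. The function name `associate`, the 2-metre gate, and the sample positions are hypothetical.

```python
import math


def associate(tracks_a, tracks_b, max_dist=2.0):
    """Greedy nearest-neighbour pairing of vehicles seen by two cameras.

    tracks_a, tracks_b: dict mapping a track id to its (X, Y) position
    in the shared world coordinate system, in metres.
    Returns a list of (id_a, id_b) pairs, closest pairs first.
    """
    candidates = sorted(
        (math.dist(pa, pb), ia, ib)
        for ia, pa in tracks_a.items()
        for ib, pb in tracks_b.items()
        if math.dist(pa, pb) <= max_dist
    )
    used_a, used_b, pairs = set(), set(), []
    for _, ia, ib in candidates:
        # Each track may be matched at most once.
        if ia not in used_a and ib not in used_b:
            used_a.add(ia)
            used_b.add(ib)
            pairs.append((ia, ib))
    return pairs


# Hypothetical world positions of vehicles in the overlap of the two views.
camera_1 = {"a1": (0.0, 10.0), "a2": (3.5, 40.0)}
camera_2 = {"b1": (0.3, 10.4), "b2": (3.4, 41.0), "b3": (0.0, 90.0)}
print(associate(camera_1, camera_2))
```

Greedy matching is easy to follow but not globally optimal; an assignment solver or appearance features could replace it without changing the idea of comparing targets in a common road space.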

[0093] The experimental results show that the road space fusion completed by this method has high precision and can better complete the cross-camera vehicle target detection and tracking. The experimental results are shown in Table 1. The experimental results show that this method c...
