Direct-method unsupervised scene depth estimation for monocular images

A scene depth estimation technique applied in the fields of neural networks, depth estimation, and computer vision. It addresses problems such as the inability to eliminate errors caused by external parameter variation. Its effects are to overcome the strong dependence on radar sensors, widen the range of application, and improve accuracy.


Embodiment Construction

[0035] The present invention is described in further detail below with reference to the accompanying drawings and specific embodiments:

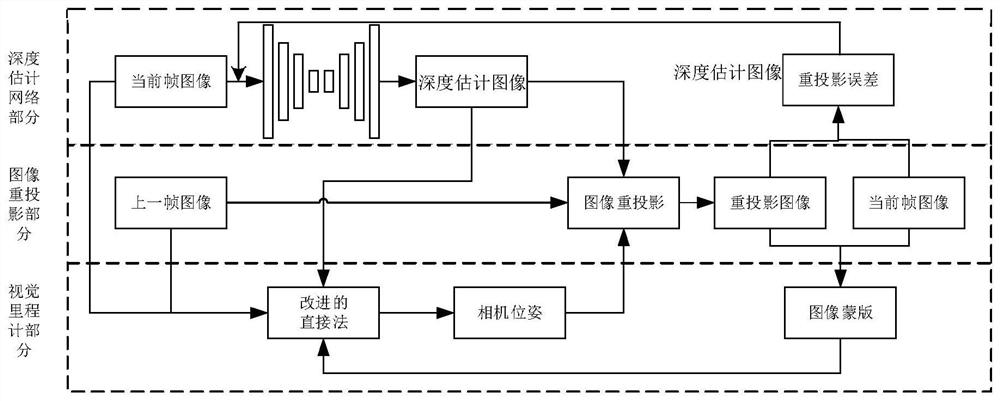

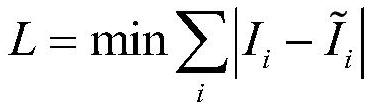

[0036] As shown in Figure 1, the direct-method unsupervised monocular image scene depth estimation method includes:

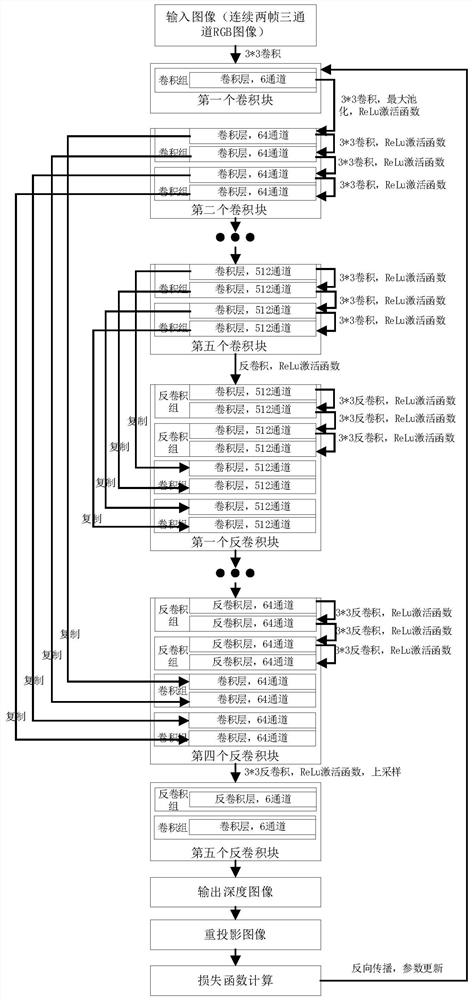

[0037] Step 1: Construct a depth estimation neural network with a fully convolutional, U-shaped structure comprising a convolutional part and a deconvolutional part. The network takes consecutive monocular images as its input and outputs the depth estimation image.

[0038] Step 1 includes the following sub-steps:

[0039] Step 1.1: The convolutional part uses the convolutional network of the ResNet-18 architecture as its backbone. It is composed of several convolution blocks, and each convolution block is connected to the next through a max-pooling operation. Each convolution block contains...
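The resolution bookkeeping implied by Steps 1 and 1.1 can be sketched in plain Python. This is only an illustration, not the patented network: the 2x2 pooling window with stride 2, the number of blocks, the doubling of resolution at each deconvolution stage, the 128x416 input size, and the names `max_pool_2x2` and `trace_u_shape` are all assumptions, since the source does not state them.

```python
# Illustrative sketch only. Window size, stride, block count, and input size
# are assumptions and are not taken from the source text.

def max_pool_2x2(feature_map):
    """Downsample a 2D feature map (list of rows) with a 2x2 window, stride 2."""
    height, width = len(feature_map), len(feature_map[0])
    pooled = []
    for r in range(0, height - 1, 2):
        row = []
        for c in range(0, width - 1, 2):
            # Keep the largest of the four values in the window.
            row.append(max(feature_map[r][c], feature_map[r][c + 1],
                           feature_map[r + 1][c], feature_map[r + 1][c + 1]))
        pooled.append(row)
    return pooled


def trace_u_shape(height, width, num_blocks):
    """Return (stage_name, height, width) tuples for a U-shaped network.

    Adjacent convolution blocks are separated by one assumed 2x2 max pool,
    which halves the resolution. The deconvolutional part mirrors this by
    doubling the resolution once per stage (also an assumption).
    """
    stages = []
    h, w = height, width
    for i in range(num_blocks):
        stages.append((f"conv_block_{i + 1}", h, w))
        if i < num_blocks - 1:
            h, w = h // 2, w // 2  # max pool between consecutive blocks
    for i in range(num_blocks - 1):
        h, w = h * 2, w * 2  # deconvolution restores resolution
        stages.append((f"deconv_stage_{i + 1}", h, w))
    return stages


if __name__ == "__main__":
    for name, h, w in trace_u_shape(128, 416, num_blocks=4):
        print(f"{name}: {h} x {w}")
```

With an input size divisible by 2 at every pooling step, the last deconvolution stage returns to the input resolution, which is what allows a U-shaped network to output a depth image the same size as its input.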

