Real-time real-person virtual hair try-on method based on 3D face tracking

A real-time, real-person virtual hair try-on technology based on 3D face tracking. It addresses the lack of realism in existing approaches, and it improves the user experience, increases realism, and avoids time-consuming computation.

Embodiment Construction

[0037] The invention provides a real-time real-person virtual hair try-on method based on 3D face tracking. Video frames of the user are captured with an ordinary webcam, and the algorithm automatically fits the 3D hair model to the position of the user's face and head in each video frame and renders it in augmented reality, so that the user can watch the try-on effect, which combines the virtual and the real, in real time.
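The fitting step described above can be illustrated with a minimal sketch. It assumes the tracker yields a rigid head pose (a rotation matrix and a translation vector) and that the webcam is modeled as a simple pinhole camera. The function names, the focal length, and the principal point are hypothetical values chosen for illustration and are not taken from the patent.

```python
import math

def rotation_yaw(theta):
    """Return a 3x3 rotation about the vertical (y) axis by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, 0.0, s],
            [0.0, 1.0, 0.0],
            [-s, 0.0, c]]

def transform(vertex, rotation, translation):
    """Apply the rigid head pose to one model vertex: R * v + t."""
    return tuple(
        sum(rotation[i][j] * vertex[j] for j in range(3)) + translation[i]
        for i in range(3)
    )

def project(point, focal, cx, cy):
    """Pinhole projection of a camera-space point to pixel coordinates."""
    x, y, z = point
    return (focal * x / z + cx, focal * y / z + cy)

def place_hair(vertices, rotation, translation,
               focal=500.0, cx=320.0, cy=240.0):
    """Move hair-model vertices with the head pose, then project them.

    focal, cx and cy are assumed camera intrinsics, not patent values.
    """
    return [project(transform(v, rotation, translation), focal, cx, cy)
            for v in vertices]
```

With an identity rotation (`rotation_yaw(0.0)`) and a head placed 5 units in front of the camera, a vertex at `(1, 0, 0)` lands 100 pixels to the right of the assumed principal point. A real renderer would draw the full mesh with depth testing rather than project isolated points.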

[0038] The technical steps adopted by the invention are as follows:

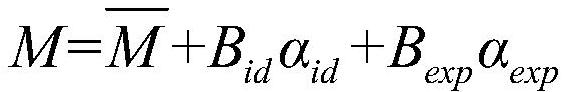

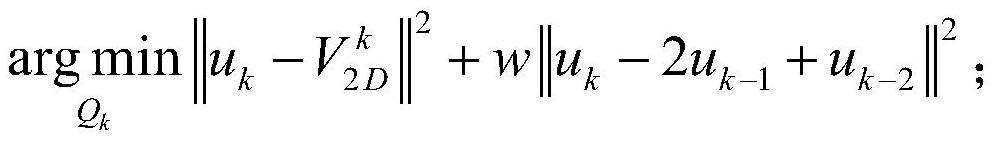

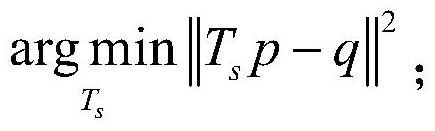

[0039] Part 1: Real-time 3D face tracking for virtual hair try-on

[0040] 1) Use the lightweight MobileNet (a deep neural network architecture) as the backbone network of the 3D face feature point regression algorithm. This network balances accuracy and computational efficiency. The invention adopts 3D face feature points rather than 2D face feature points because they better express the position and pose of the 3D face model in 3D space, and when the fa...
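As background on why a MobileNet backbone is considered lightweight: MobileNet-family networks rely on depthwise separable convolutions, which split a standard convolution into a per-channel spatial filter followed by a 1x1 channel-mixing step. The sketch below compares the multiply-add counts of the two forms. The layer dimensions are arbitrary illustrative values and are not taken from the patent.

```python
def standard_conv_cost(k, c_in, c_out, h, w):
    """Multiply-adds of a k x k standard convolution on an h x w output map."""
    return k * k * c_in * c_out * h * w

def depthwise_separable_cost(k, c_in, c_out, h, w):
    """Multiply-adds of a depthwise k x k step plus a pointwise 1x1 step."""
    depthwise = k * k * c_in * h * w   # one k x k filter per input channel
    pointwise = c_in * c_out * h * w   # 1x1 convolution mixes the channels
    return depthwise + pointwise

# Illustrative layer: 3x3 kernel, 64 -> 128 channels, 56 x 56 feature map.
standard = standard_conv_cost(3, 64, 128, 56, 56)
separable = depthwise_separable_cost(3, 64, 128, 56, 56)
ratio = separable / standard  # equals 1/c_out + 1/k**2
```

For this example layer the separable form needs roughly 12% of the multiply-adds of the standard form, which is the kind of saving that makes per-frame inference on webcam video practical.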
