Automatic abstract generation method and device, electronic equipment and storage medium

An automatic abstract generation and summarization technology, applied in electronic digital data processing, natural language data processing, unstructured text data retrieval, and related fields. It solves the problem that rare named entities cannot be generated, thereby enhancing feature representation ability and improving accuracy.

Examples

Embodiment 1

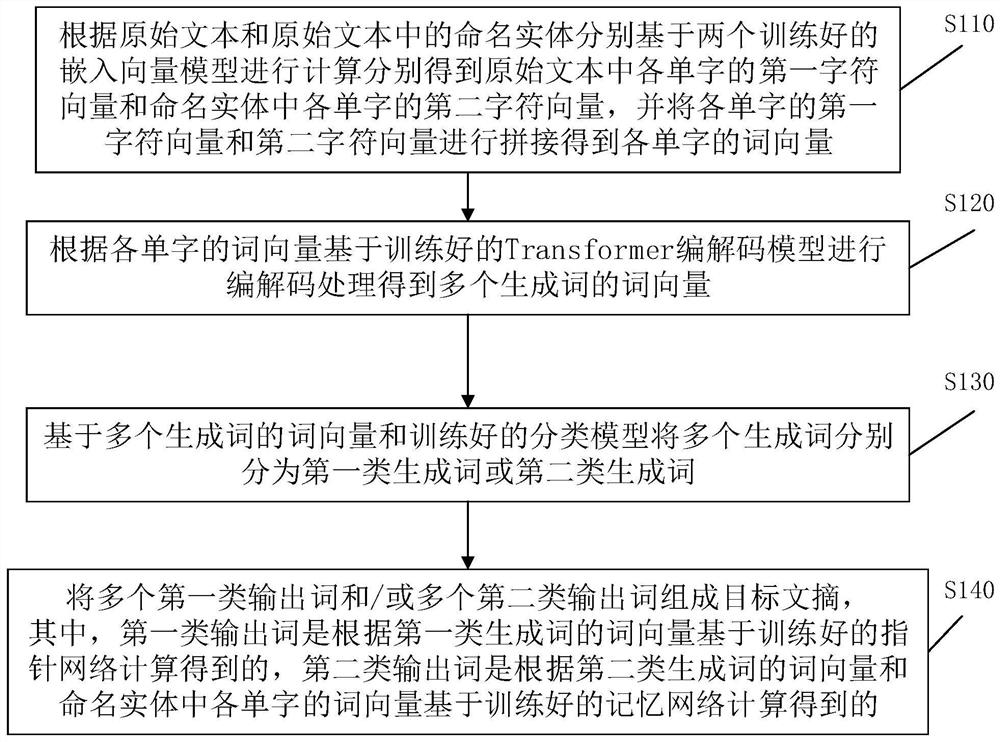

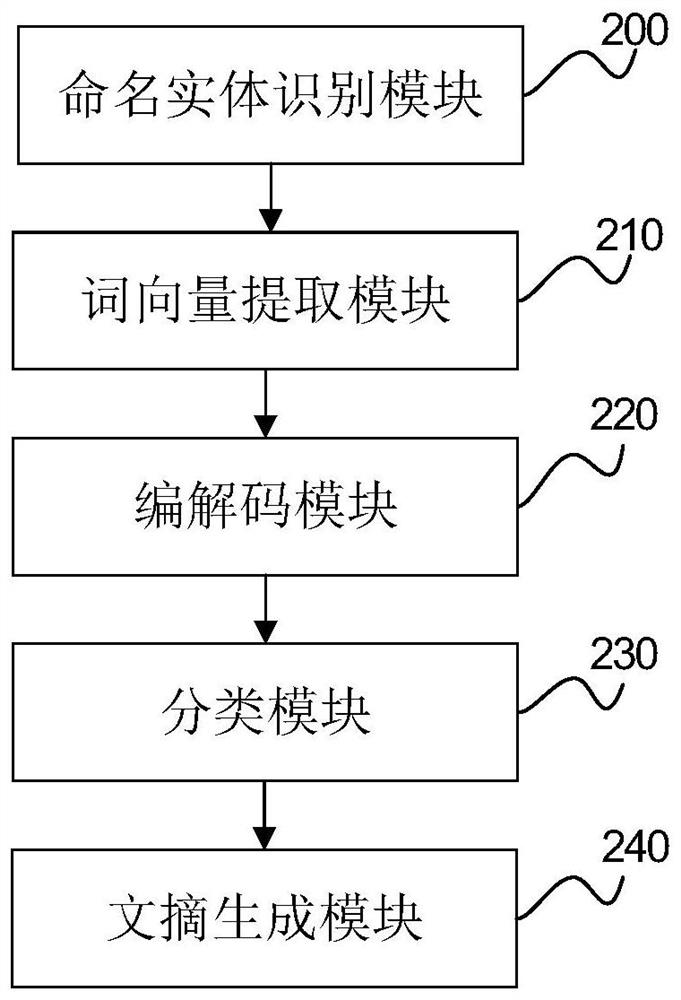

[0050] Embodiment 1 provides a method for automatically generating abstracts which, as shown in Figure 1, includes the following steps:

[0051] S110. Based on the original text and the named entities it contains, use two trained embedding vector models to compute, respectively, a first character vector for each word in the original text and a second character vector for each word in the named entities; then concatenate the first character vector and the second character vector of each word to obtain that word's word vector.

[0052] Named entity information is very important: named-entity words such as person names, place names, times, or organization names in the original text have a high probability of appearing in the corresponding abstract. Therefore, splicing (concatenating) the first character vector and the second character vector of each word makes the word vector of each word in the original text contain the nam...
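A minimal sketch of the splicing in S110, assuming each trained embedding vector model can be reduced to a lookup table from word to vector. The names `build_word_vectors`, `text_embedding`, and `entity_embedding`, and the zero-vector fallback for words outside any named entity, are hypothetical; the excerpt does not say how non-entity words are handled.

```python
from typing import AbstractSet, Dict, List, Sequence

Vector = List[float]


def build_word_vectors(
    words: Sequence[str],
    entity_words: AbstractSet[str],
    text_embedding: Dict[str, Vector],
    entity_embedding: Dict[str, Vector],
    entity_dim: int,
) -> List[Vector]:
    """Splice a text-level vector and an entity-level vector for every word."""
    word_vectors: List[Vector] = []
    for word in words:
        # First character vector: from the (hypothetical) text embedding model.
        first = text_embedding[word]
        # Second character vector: from the (hypothetical) entity embedding model.
        # Assumption: words outside every named entity get a zero vector here.
        if word in entity_words:
            second = entity_embedding[word]
        else:
            second = [0.0] * entity_dim
        # List concatenation builds a new vector of length len(first) + entity_dim.
        word_vectors.append(first + second)
    return word_vectors


if __name__ == "__main__":
    words = ["Alice", "visited", "Paris"]
    text_emb = {"Alice": [0.1, 0.2], "visited": [0.3, 0.4], "Paris": [0.5, 0.6]}
    ent_emb = {"Alice": [1.0, 0.0], "Paris": [0.0, 1.0]}
    vectors = build_word_vectors(words, {"Alice", "Paris"}, text_emb, ent_emb, 2)
    for w, v in zip(words, vectors):
        print(w, v)
```

Concatenation (rather than addition) keeps the two sources of information in separate dimensions, so a downstream layer can learn how much weight to give the entity signal.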

Embodiment 2

[0081] Embodiment 2 is an improvement on Embodiment 1. The trained Transformer encoder-decoder model includes a position encoding layer, an encoder, and a decoder. Because the Transformer relies entirely on the attention mechanism for encoding and decoding, rather than on a sequential model as traditional methods do, word-order (temporal) information is not reflected in the model's input. Therefore, adding the position vector, obtained by passing each word's word vector through the position encoding layer, to that word's embedding vector helps determine the position of each word, or the distance between words in the sequence, and thus better expresses the relationships between words. This specifically includes the following steps:

[0082] Input the word vector of each word into the position encoding layer for position encoding to obtain the position vector of each word; combine the word vector and position vector of each word to obtain ...
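The excerpt does not specify the position encoding function. The sketch below assumes the sinusoidal encoding from the original Transformer paper, in which the position vector depends only on the word's index and the vector width, combined with the element-wise addition described in [0081]. `sinusoidal_position_encoding` and `add_position_vectors` are hypothetical names.

```python
import math
from typing import List


def sinusoidal_position_encoding(seq_len: int, d_model: int) -> List[List[float]]:
    """Position vector for each position: sin on even dimensions, cos on odd ones."""
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Dimensions 2k and 2k+1 share the frequency 1 / 10000^(2k / d_model).
            angle = pos / (10000 ** (2 * (i // 2) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


def add_position_vectors(word_vectors: List[List[float]]) -> List[List[float]]:
    """Add each word's position vector to its word vector element by element."""
    if not word_vectors:
        return []
    pe = sinusoidal_position_encoding(len(word_vectors), len(word_vectors[0]))
    return [[w + p for w, p in zip(wv, pv)] for wv, pv in zip(word_vectors, pe)]


if __name__ == "__main__":
    print(add_position_vectors([[1.0, 1.0, 1.0, 1.0], [0.0, 0.0, 0.0, 0.0]]))
```

Because the sinusoids at each frequency shift smoothly with position, the difference between two position vectors carries information about the distance between the corresponding words, which is the property [0081] relies on.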

Embodiment 3

[0092] Embodiment 3 is an improvement on Embodiment 2. The pointer network uses a probability distribution calculation function to connect the output of the decoder and the output of the encoder in the trained Transformer encoder-decoder model, and its pointer mechanism solves the problem that named entities absent from the dictionary cannot be generated. The model also handles long-distance dependencies effectively and supports parallel computation.
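The exact probability distribution calculation function is not given in this excerpt. One common way to let a Transformer copy out-of-dictionary entities is the pointer-generator mixture, P(w) = p_gen * P_vocab(w) + (1 - p_gen) * (sum of attention weights on source positions holding w). The sketch below assumes that formulation, swapped in for illustration and not confirmed as the patent's function; `final_distribution`, `p_gen`, and the toy inputs are hypothetical.

```python
from typing import Dict, Sequence


def final_distribution(
    p_gen: float,
    vocab_probs: Dict[str, float],
    attention: Sequence[float],
    source_tokens: Sequence[str],
) -> Dict[str, float]:
    """Mix generating from the dictionary with copying from the source (pointer-generator style)."""
    if not 0.0 <= p_gen <= 1.0:
        raise ValueError("p_gen must be a probability")
    # Generation part: scale the decoder's dictionary distribution by p_gen.
    dist = {word: p_gen * p for word, p in vocab_probs.items()}
    # Copy part: attention weights over source positions, scaled by (1 - p_gen).
    # A source token missing from the dictionary (e.g. a rare named entity) still gets mass here.
    for weight, token in zip(attention, source_tokens):
        dist[token] = dist.get(token, 0.0) + (1.0 - p_gen) * weight
    return dist


if __name__ == "__main__":
    dist = final_distribution(0.6, {"the": 0.5, "city": 0.5}, [0.2, 0.8], ["the", "Zhangjiajie"])
    print(dist)
```

In the usage example, "Zhangjiajie" is not in the toy dictionary, yet it receives probability mass purely through the copy term, which is the behavior the pointer mechanism is meant to provide.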

[0093] The trained pointer network includes a linear transformation layer, a normalization layer, and a probability distribution calculation function. The linear transformation layer is a simple fully connected neural network that projects the word vectors produced by the decoder into a much larger vector called the logits vector. Assuming that 10,000 distinct English words in the dictionary are learned from the training set, the logits vector is 10,000 cells long; each cell ...
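A minimal sketch of the linear transformation layer followed by the normalization layer, assuming the normalization is a softmax (as in the standard Transformer output head) and using a toy three-word dictionary in place of 10,000 entries. `linear_projection`, `softmax`, and `greedy_word` are hypothetical helpers.

```python
import math
from typing import List, Sequence


def linear_projection(
    x: Sequence[float], weights: Sequence[Sequence[float]], bias: Sequence[float]
) -> List[float]:
    """Fully connected layer: one weight row, and so one output logit, per dictionary word."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def softmax(logits: Sequence[float]) -> List[float]:
    """Turn logits into probabilities; subtracting the max keeps exp() numerically stable."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def greedy_word(probs: Sequence[float], vocab: Sequence[str]) -> str:
    """Pick the dictionary word whose cell has the highest probability."""
    best = max(range(len(probs)), key=lambda i: probs[i])
    return vocab[best]


if __name__ == "__main__":
    vocab = ["summary", "city", "report"]
    decoder_output = [1.0, 2.0]
    weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    logits = linear_projection(decoder_output, weights, [0.0, 0.0, 0.0])
    probs = softmax(logits)
    print(logits, probs, greedy_word(probs, vocab))
```

With a real 10,000-word dictionary the weight matrix would simply have 10,000 rows, giving one logit per cell; this dictionary distribution is what a copy mechanism such as the one sketched for [0092] would then mix with the attention over the source.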
