210 results about "Encoding (memory)" patented technology

Memory is the ability to encode, store and recall information. Memories give an organism the capacity to learn from and adapt to previous experiences and to build relationships. Encoding converts a perceived item of use or interest into a construct that can be stored in the brain and later recalled from short-term or long-term memory. Working memory holds information for immediate use or manipulation, aided by linking it to items already archived in the individual's long-term memory.

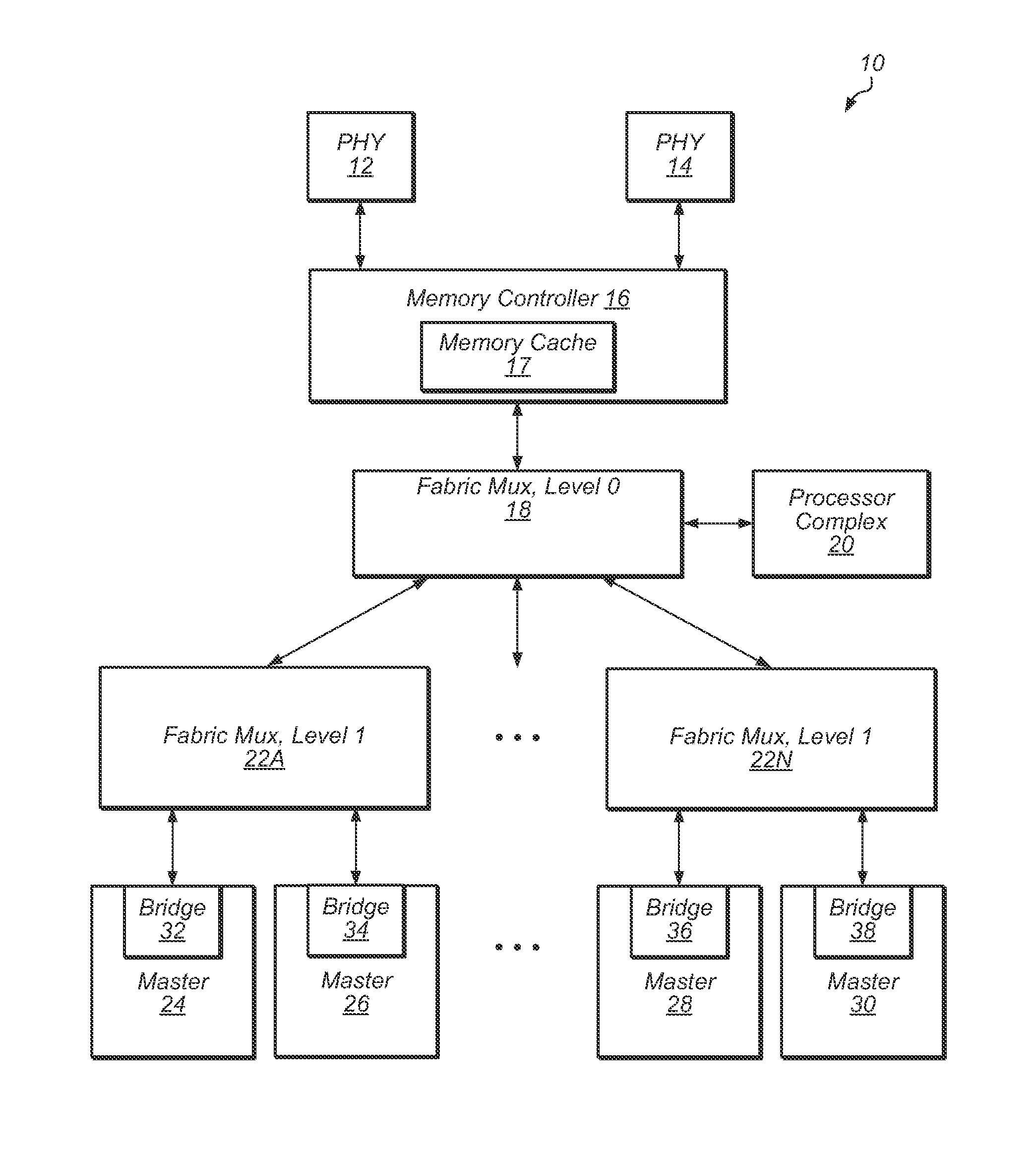

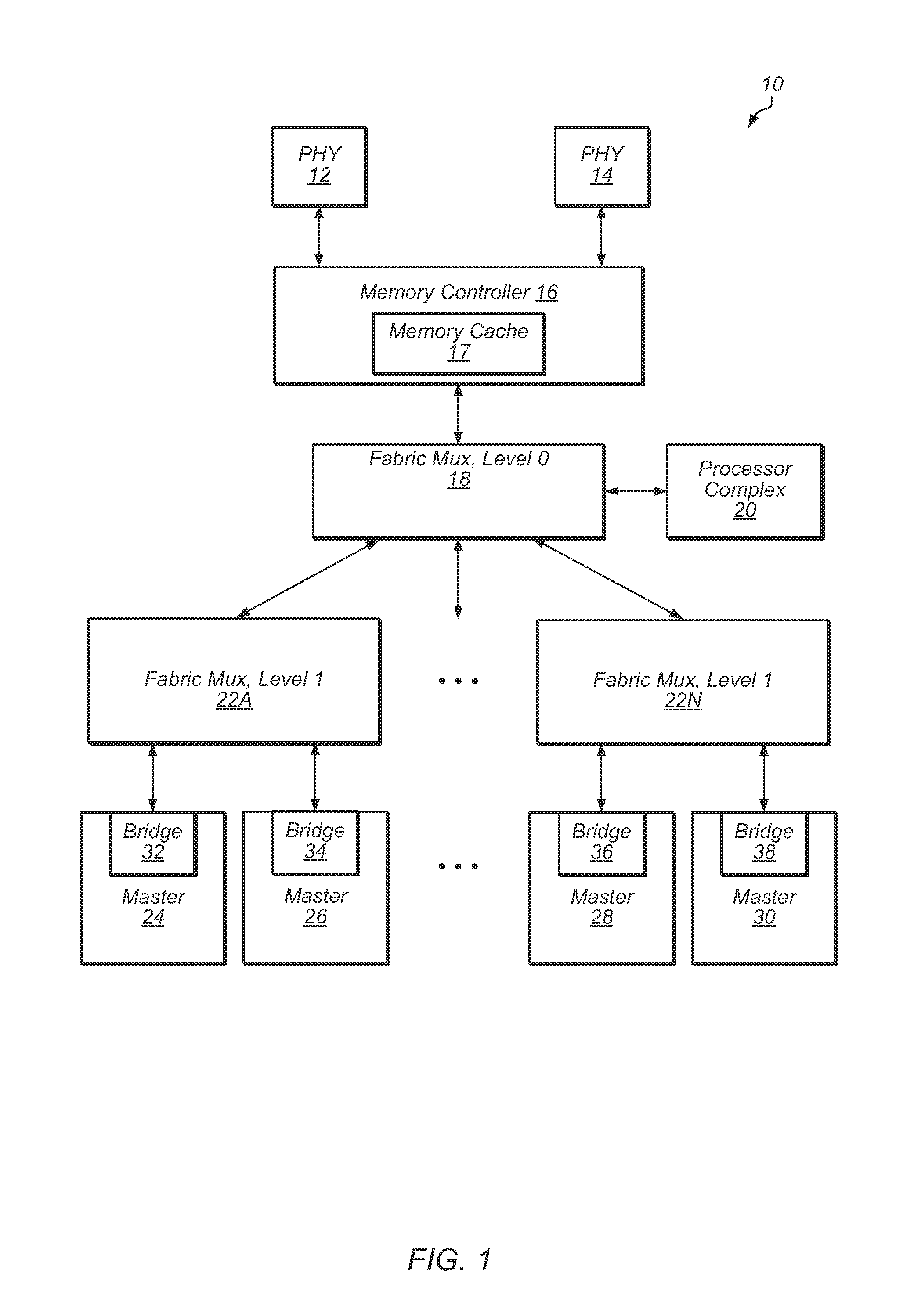

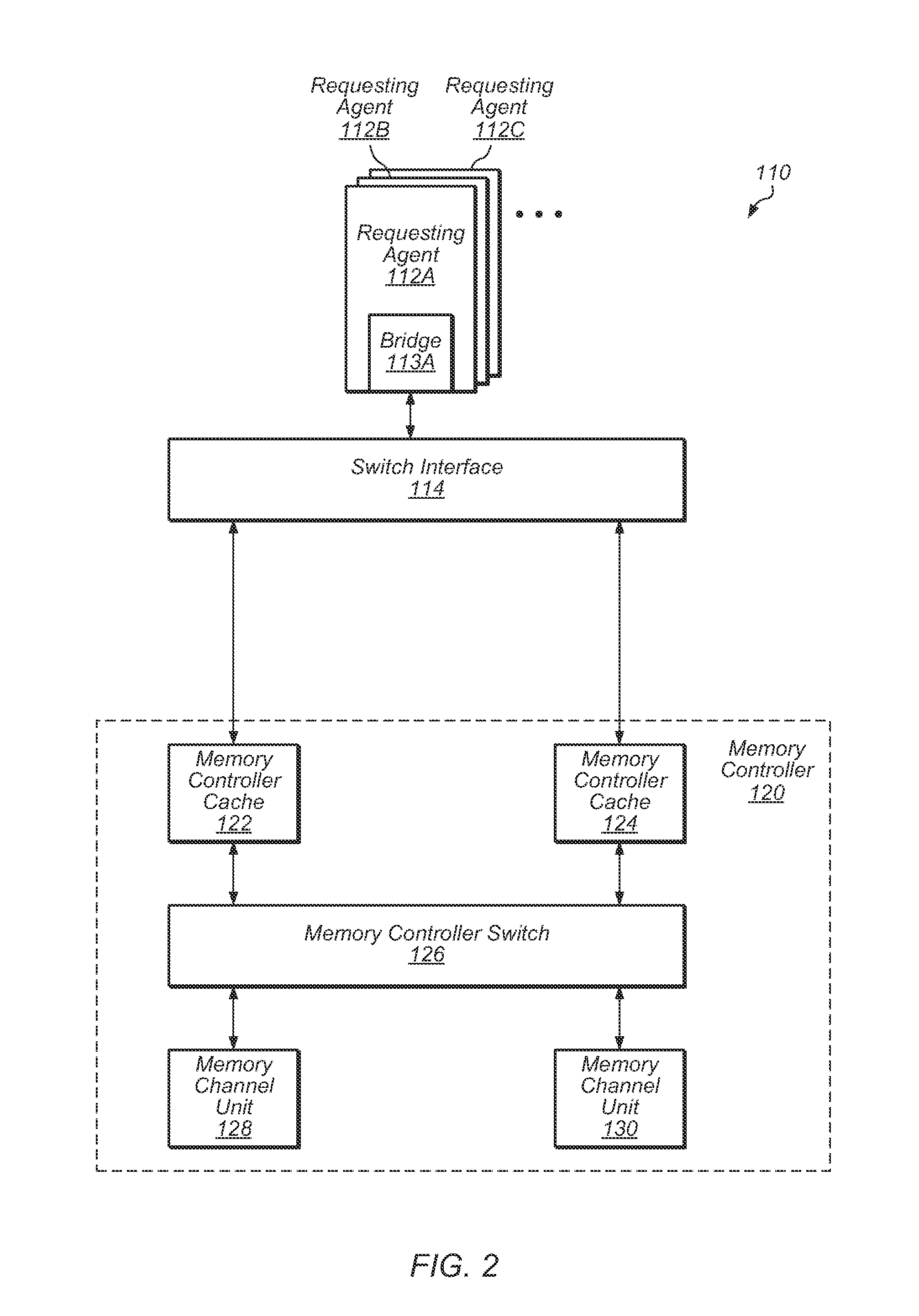

Compressed encoding for repair

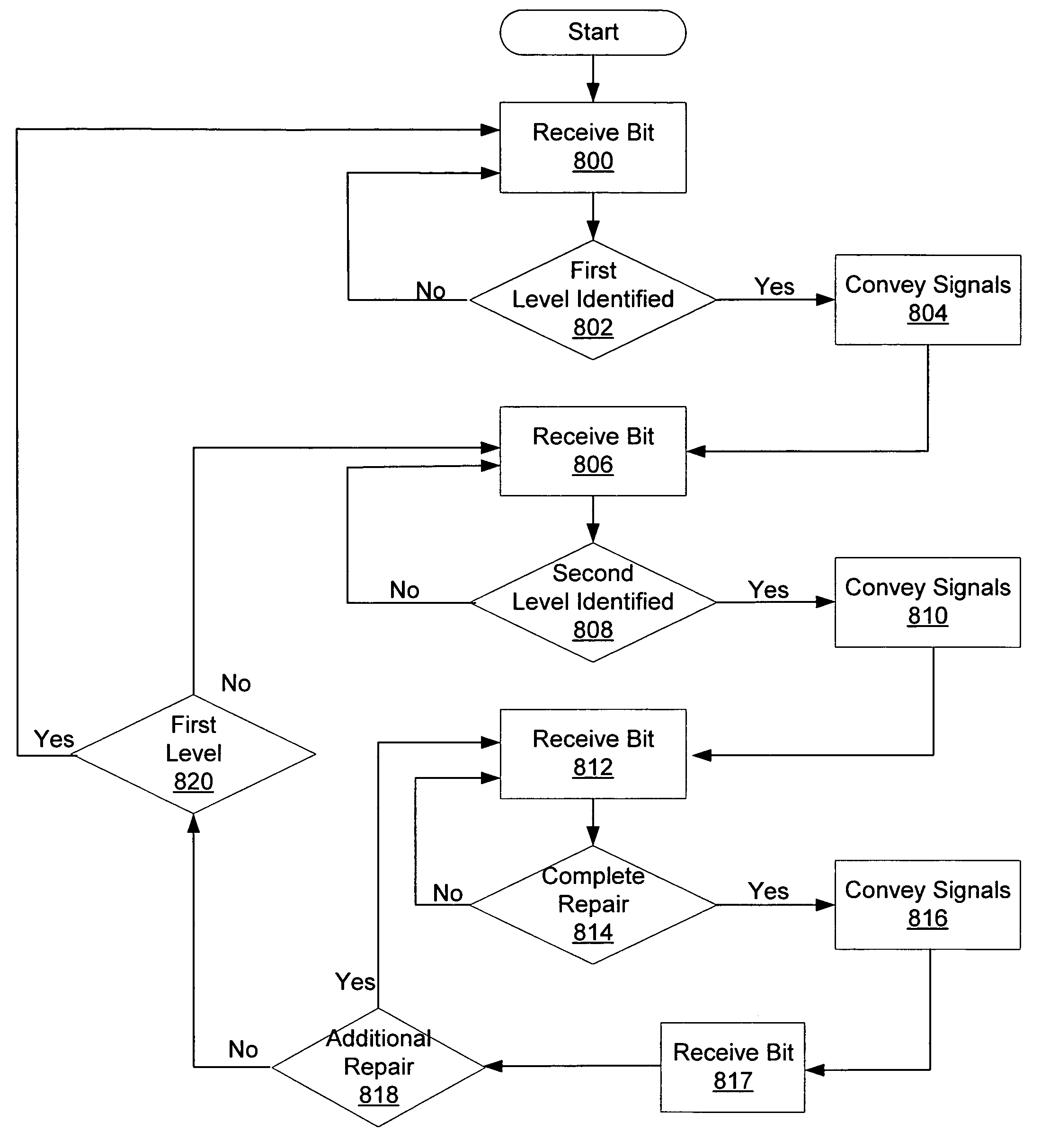

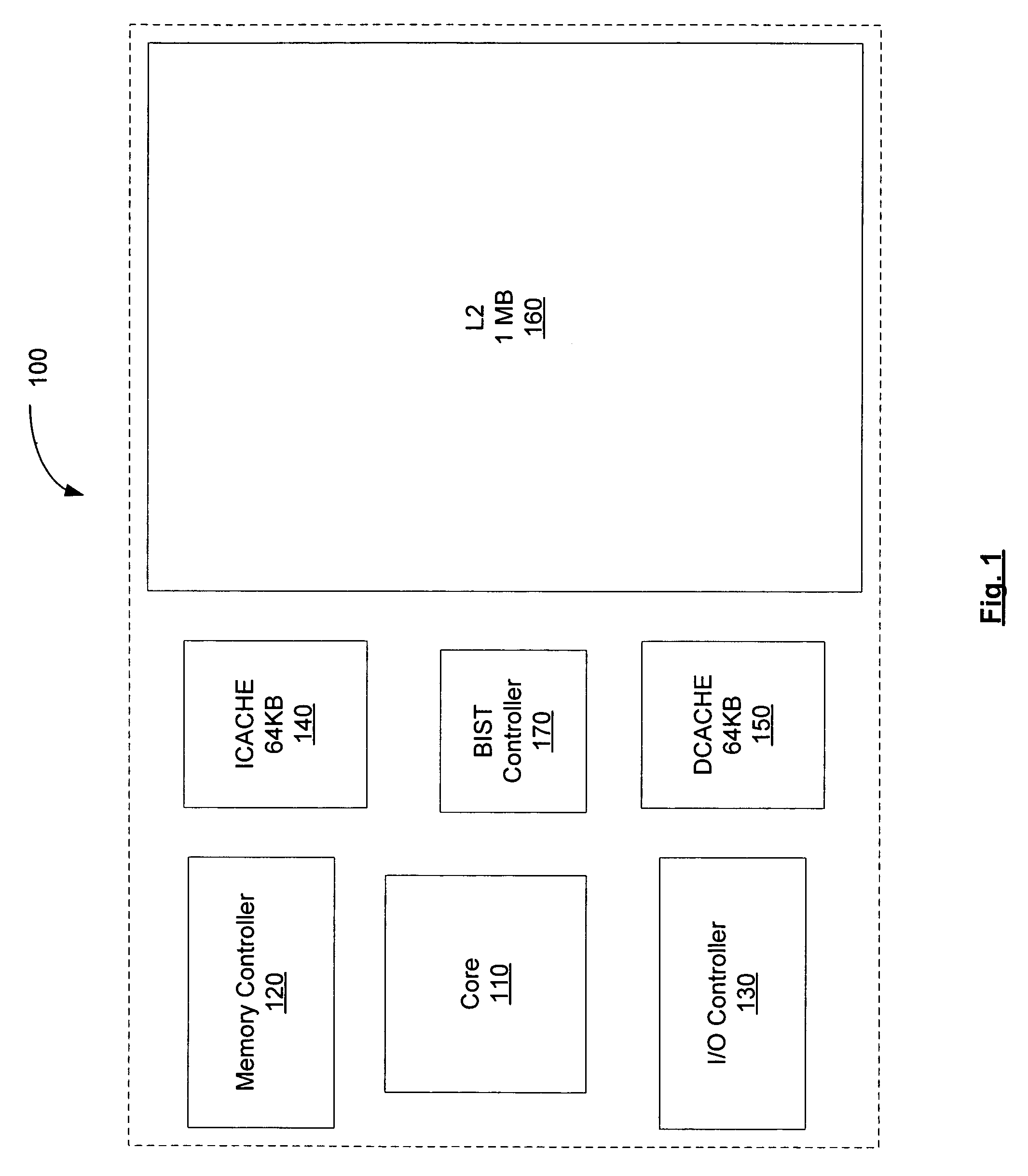

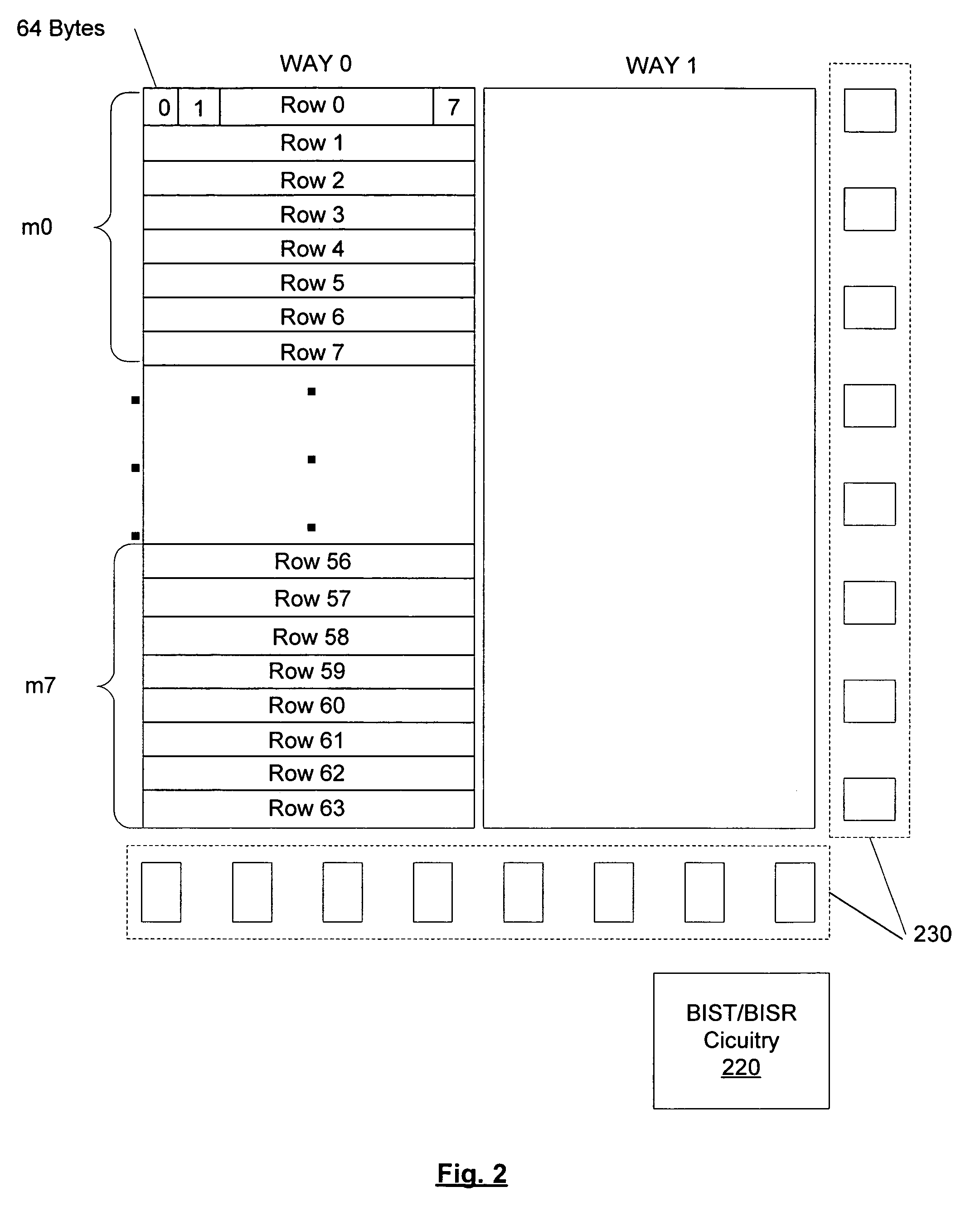

A hierarchical encoding format for coding repairs to devices within a computing system. A device, such as a cache memory, is logically partitioned into a plurality of sub-portions, and different sub-portions are identifiable as different levels of the device's hierarchy. For example, a first sub-portion may correspond to a particular cache, a second sub-portion to a particular way of that cache, and so on. The encoding format comprises a series of bits in which a first portion corresponds to a first level of the hierarchy and a second portion corresponds to a second level. Each of the first and second portions is preceded by a bit of a different value, which identifies the hierarchy level to which the following bits correspond. A sequence of repairs is encoded as a string of bits. The bit that follows a complete repair encoding indicates whether the next repair targets the currently identified cache or a new cache. Therefore, certain repairs can be encoded without respecifying the entire hierarchy.

Owner:ADVANCED MICRO DEVICES INC
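
The repair-encoding format above can be illustrated with a minimal Python sketch. It assumes a two-level hierarchy (cache, then way), hypothetical field widths `CACHE_BITS = 3` and `WAY_BITS = 4`, and a marker convention of `1` before a cache field and `0` before a way field. The abstract specifies none of these values, so they are illustrative choices rather than the patented bit layout.

```python
CACHE_BITS = 3  # hypothetical width of the cache-level field
WAY_BITS = 4    # hypothetical width of the way-level field


def encode_repairs(repairs):
    """Encode (cache, way) repairs; a cache field is emitted only when the cache changes."""
    out = []
    current = None
    for cache, way in repairs:
        if cache != current:
            # Hypothetical marker '1' introduces a cache-level field.
            out.append("1" + format(cache, f"0{CACHE_BITS}b"))
            current = cache
        # Hypothetical marker '0' introduces a way-level field.
        out.append("0" + format(way, f"0{WAY_BITS}b"))
    return "".join(out)


def decode_repairs(bits):
    """Rebuild the (cache, way) list by reading one marker bit before each field."""
    repairs, pos, current = [], 0, None
    while pos < len(bits):
        marker = bits[pos]
        pos += 1
        if marker == "1":
            current = int(bits[pos:pos + CACHE_BITS], 2)
            pos += CACHE_BITS
        else:
            repairs.append((current, int(bits[pos:pos + WAY_BITS], 2)))
            pos += WAY_BITS
    return repairs
```

For the repairs `[(2, 5), (2, 9), (6, 1)]` this sketch emits 23 bits instead of the 27 needed to restate the cache for every repair, because the second repair reuses the cache already identified.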

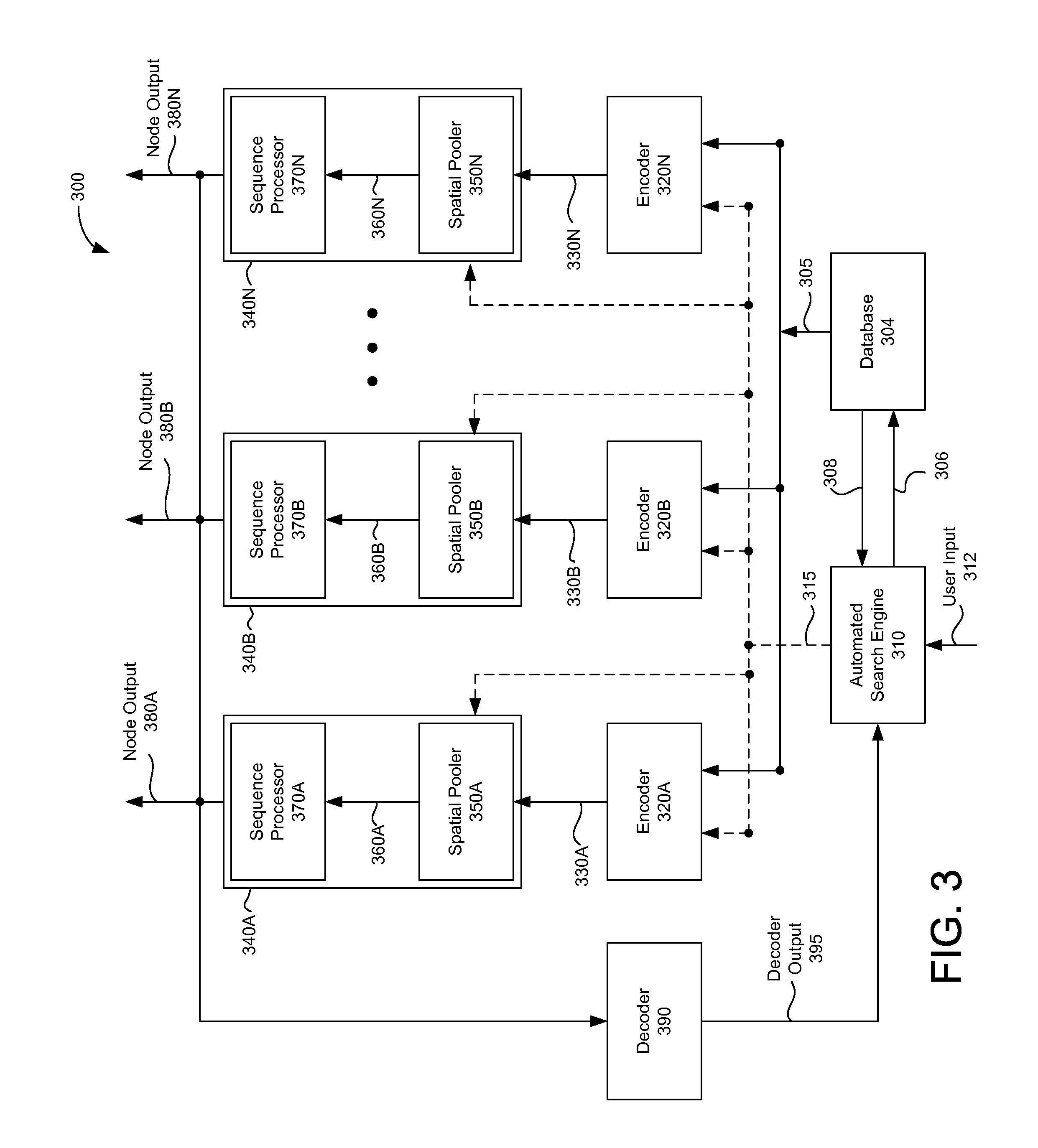

Automated search for detecting patterns and sequences in data using a spatial and temporal memory system

Active | US20130054552A1 | Mathematical models; Digital data processing details; Data mining; Distributed representation

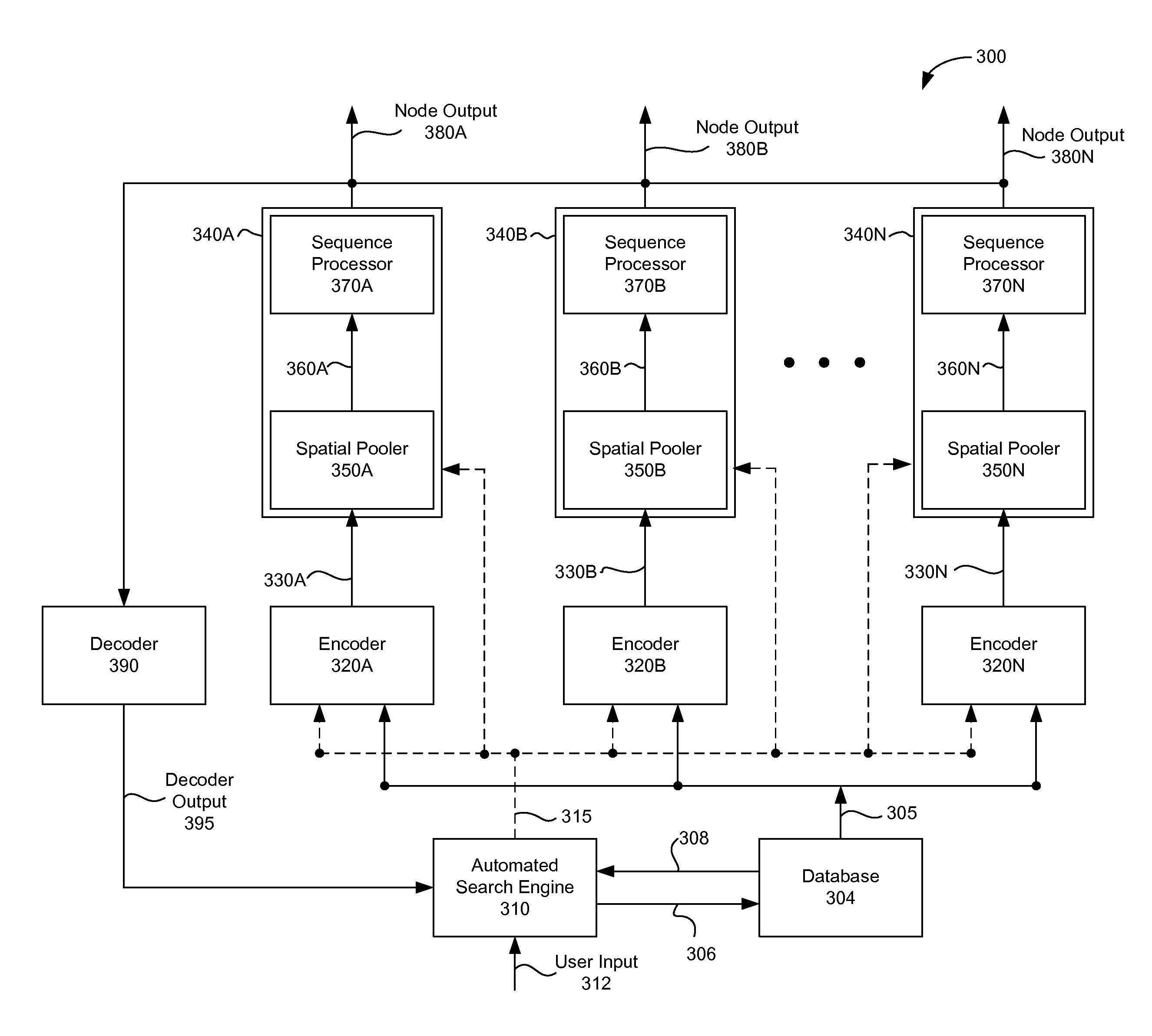

A spatial and temporal memory system (STMS) processes input data to detect whether spatial patterns and/or temporal sequences of spatial patterns exist within the data, and to make predictions about future data. The data processed by the STMS may be retrieved from, for example, one or more database fields, and is encoded into a distributed representation format using a coding scheme. The STMS's performance in predicting future data is evaluated for the coding scheme used and recorded as performance data. The selection and prioritization of STMS experiments to perform may be based on the performance data for an experiment. The best fields, encodings, and time aggregations for generating predictions can be determined by an automated search over, and evaluation of, multiple STMS systems.

Owner:NUMENTA INC
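
As an illustration of encoding a field value into a distributed representation, the sketch below uses a simple scalar encoder: `n` bits with a contiguous run of `w` active bits whose position tracks the value, so nearby values share active bits. This is an assumed, commonly used scheme chosen for illustration; the abstract does not say which coding schemes the STMS search evaluates, and the automated search itself is not shown. The names `scalar_encode` and `overlap` are hypothetical.

```python
def scalar_encode(value, min_val, max_val, n=20, w=4):
    """Hypothetical scalar encoder: n bits, with a contiguous run of w active bits."""
    value = min(max(value, min_val), max_val)  # clip to the encoder's range
    # Position of the active run scales linearly with the value.
    start = round((value - min_val) / (max_val - min_val) * (n - w))
    return [1 if start <= i < start + w else 0 for i in range(n)]


def overlap(a, b):
    """Number of active bits shared by two encodings (a similarity measure)."""
    return sum(x & y for x, y in zip(a, b))
```

With these defaults, 50 and 55 on a 0 to 100 range share three of their four active bits, while 50 and 100 share none. A search such as the one described would compare prediction performance across different `n`, `w`, and range settings.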

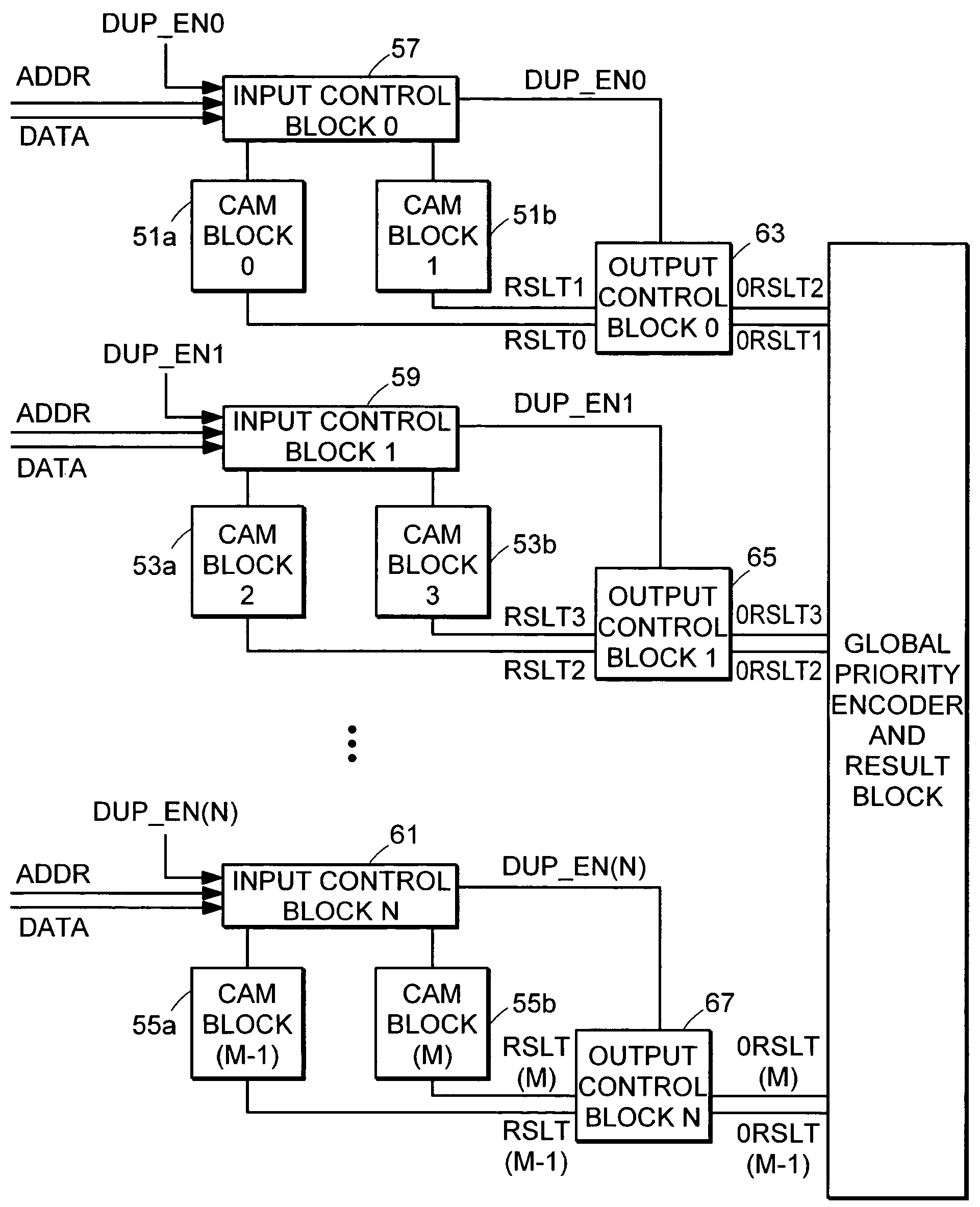

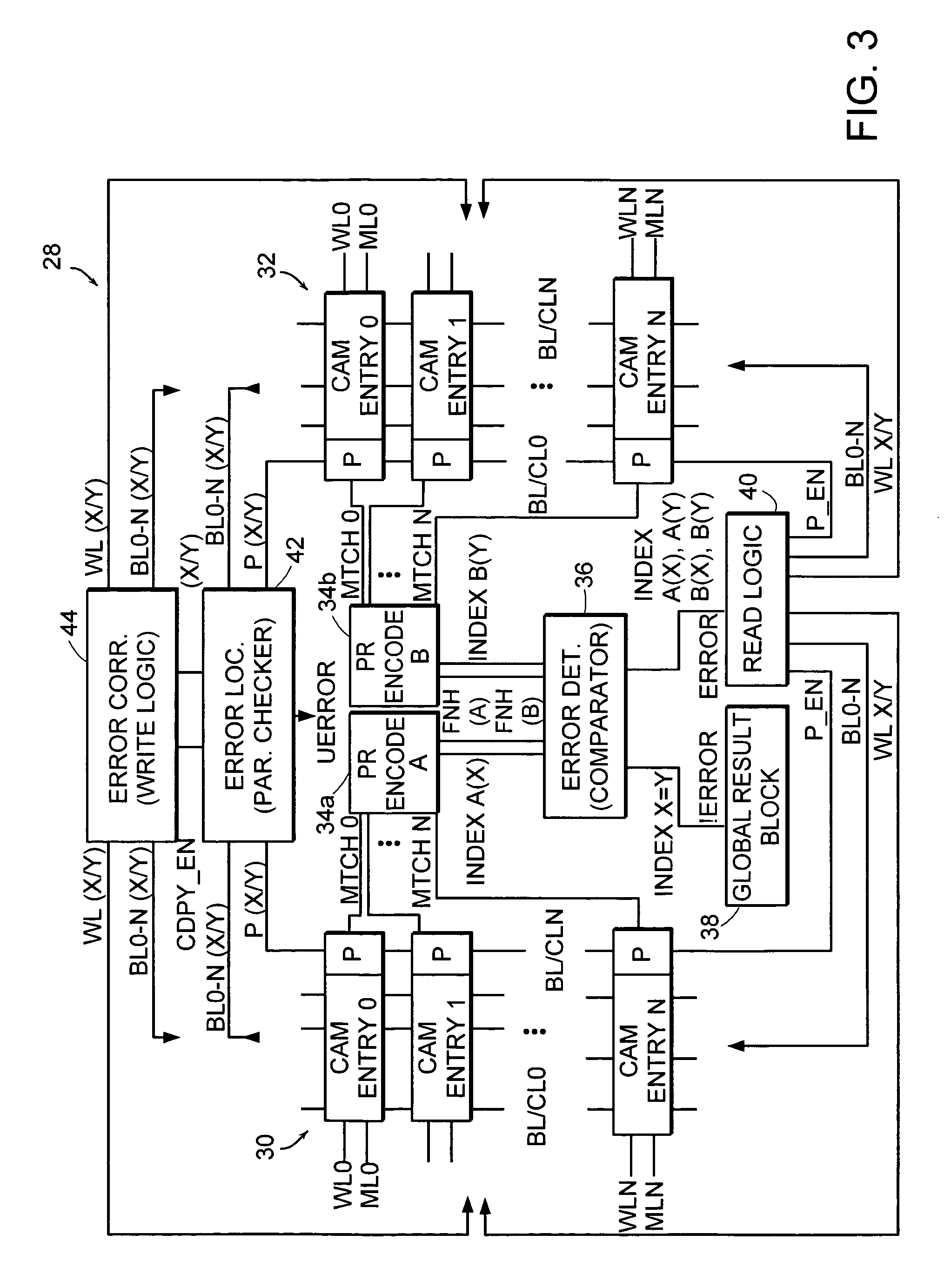

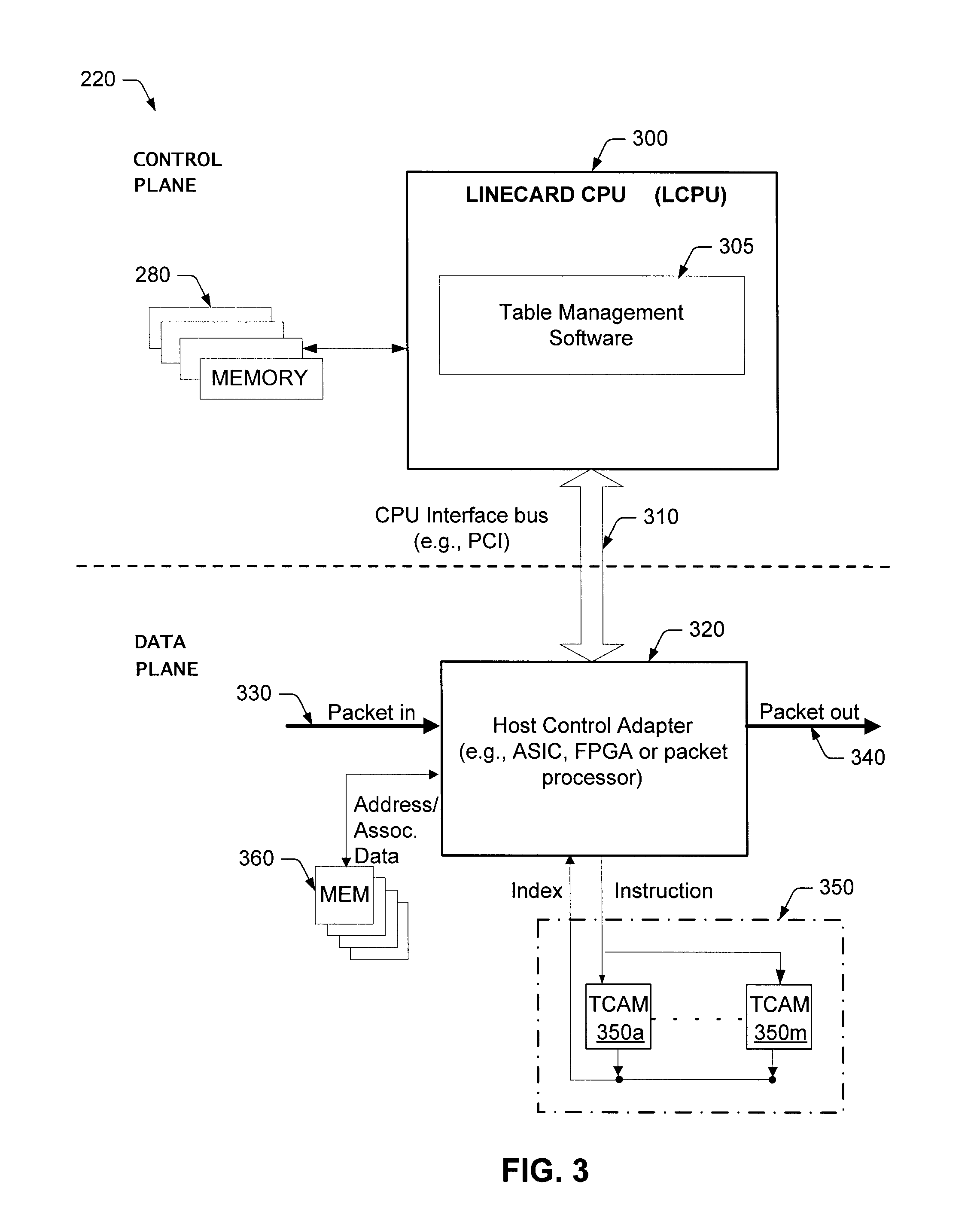

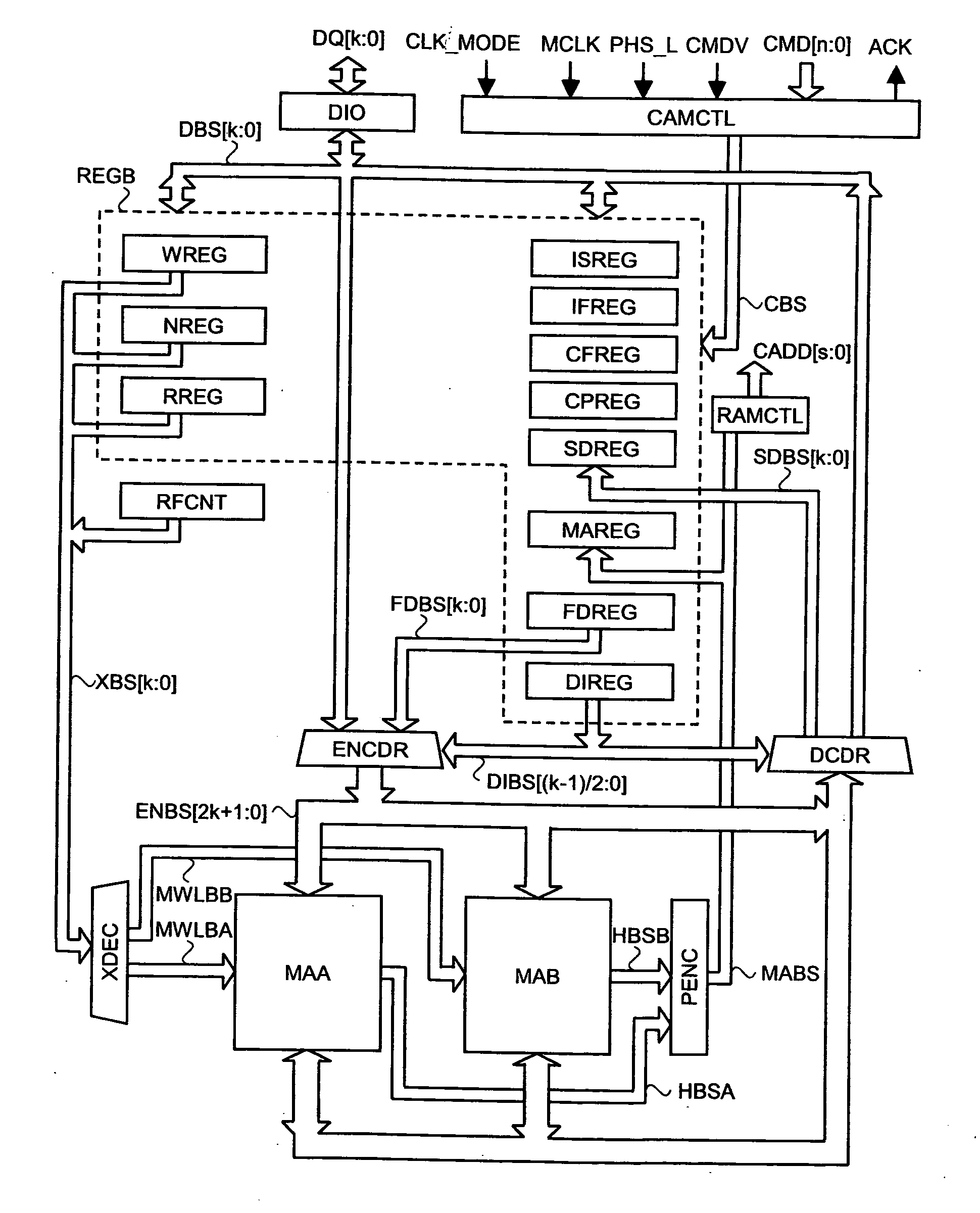

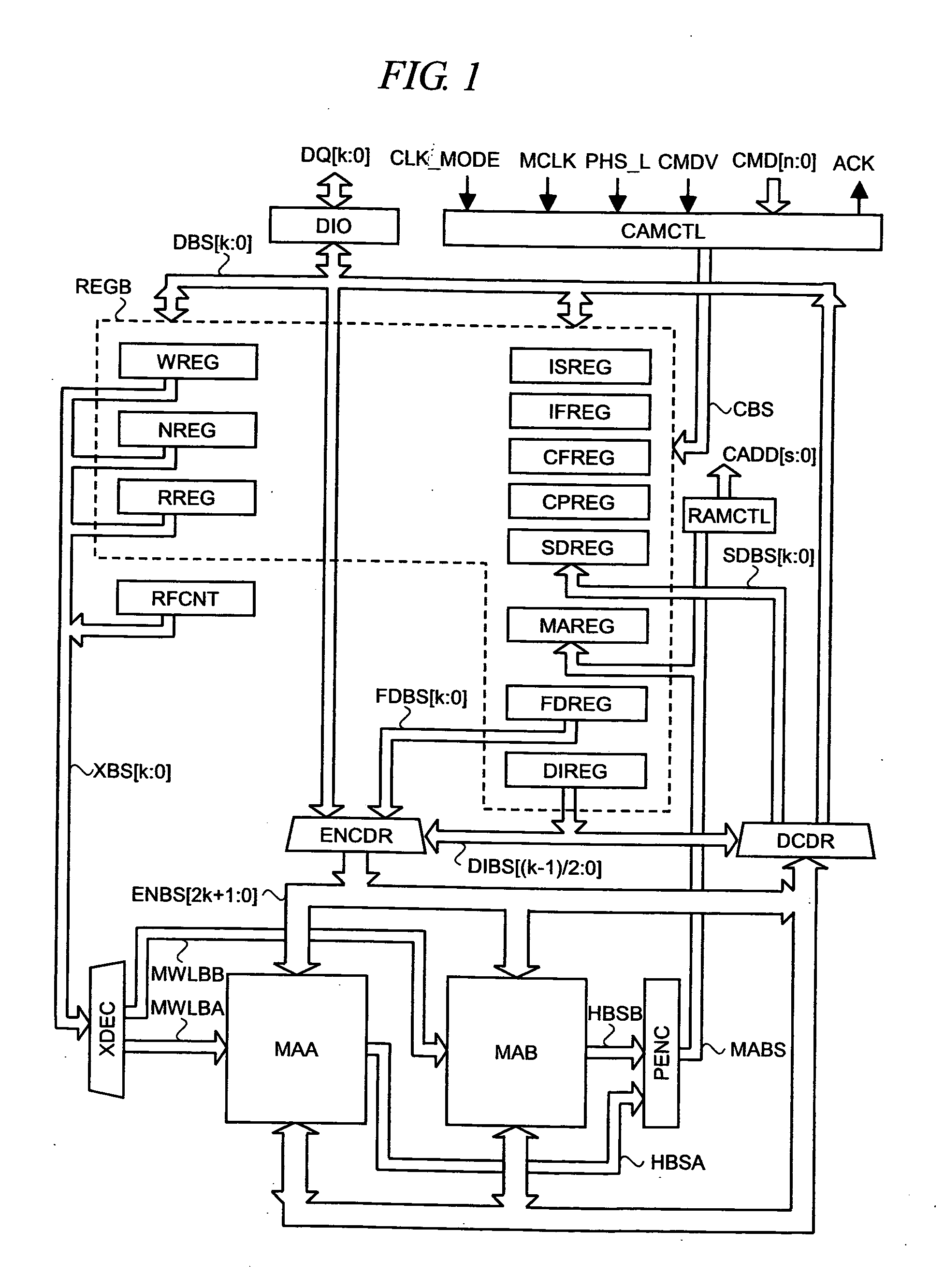

Error correcting content addressable memory

Inactive | US7254748B1 | Maximize usable CAM space; Redundant data error correction; Memory systems; Soft error; Match line

A CAM and a method for operating a CAM are presented. A CAM database is duplicated, with one copy placed in a first set of CAM locations and another in a second set of CAM locations. An error detector is used to identify false matches caused by soft errors in the entries that produce them. Entries that match should have the same index location in both sets, but errors can offset those match lines. If so, the CAM uses its duplicated sets of CAM locations to detect the offset and then examines the values at each index location that produces a match, along with the corresponding parity or error-detection encoding bit(s). If those bits indicate an error in a particular entry, the error is located and the entry at the same index in the other, duplicate set of CAM locations is copied into the erroneous entry. Because duplicate copies are by design placed in the first and second sets of CAM locations, whatever value exists in the opposing entry can be written into the erroneous entry to correct errors at that search location. The first and second sets of CAM locations can be configured to hold duplicate or distinct content, allowing error detection and correction at multiple user-specified granularities. Error detection and correction during search is backward compatible with interim parity scrubbing and ECC scans, as well as with FNH bits set by a user or provider.

Owner:AVAGO TECH INT SALES PTE LTD
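
The duplicate-and-repair search described above can be modeled in software. This is a minimal sketch, assuming one even-parity bit per entry and exactly two duplicate copies; the class name `DuplicatedCAM` is hypothetical, and a real CAM compares all entries in parallel on match lines rather than in a Python loop.

```python
def parity(word: int) -> int:
    """Even-parity bit of an integer word (1 if it has an odd number of 1s)."""
    return bin(word).count("1") & 1


class DuplicatedCAM:
    """Hypothetical software model: database stored twice, one parity bit per entry."""

    def __init__(self, entries):
        # Each copy holds (value, parity computed when the entry was written).
        self.copies = [[(v, parity(v)) for v in entries] for _ in range(2)]

    def _matches(self, copy, key):
        return {i for i, (v, _) in enumerate(self.copies[copy]) if v == key}

    def search(self, key):
        first, second = self._matches(0, key), self._matches(1, key)
        # An index that matches in only one copy signals a possible soft error.
        for idx in first ^ second:
            for c in (0, 1):
                value, stored = self.copies[c][idx]
                if parity(value) != stored:
                    # Overwrite the corrupted entry with its duplicate from the other copy.
                    self.copies[c][idx] = self.copies[1 - c][idx]
        # Report only indices on which both (possibly repaired) copies agree.
        return sorted(self._matches(0, key) & self._matches(1, key))
```

If a single-bit soft error turns entry 12 at index 2 of the first copy into 13, a search for 13 finds the disagreement, detects the parity mismatch, restores the entry from the second copy, and returns no match instead of a false hit.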

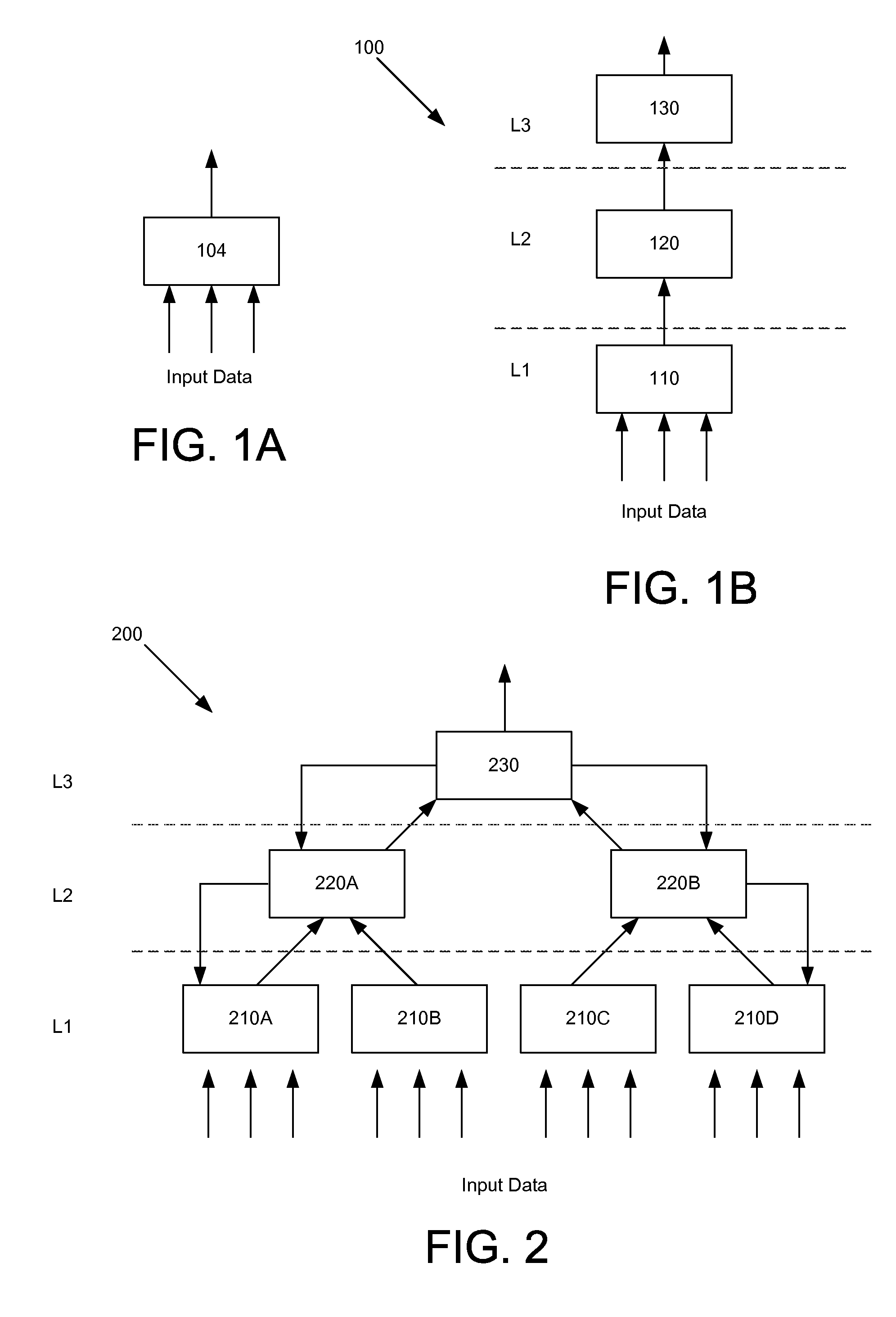

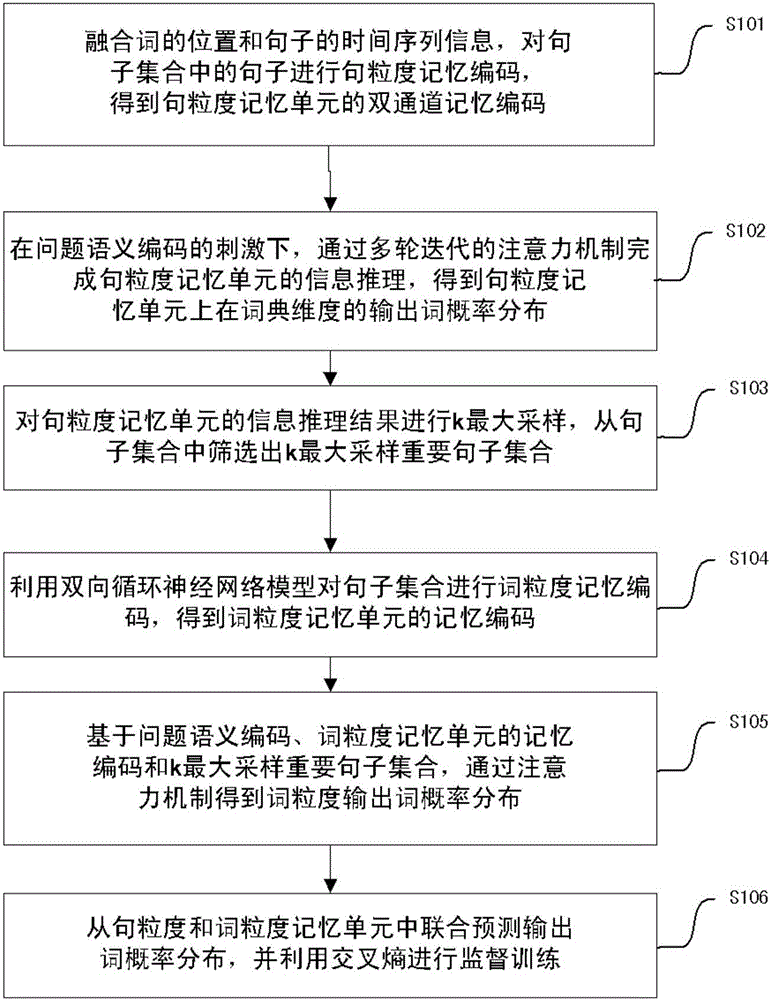

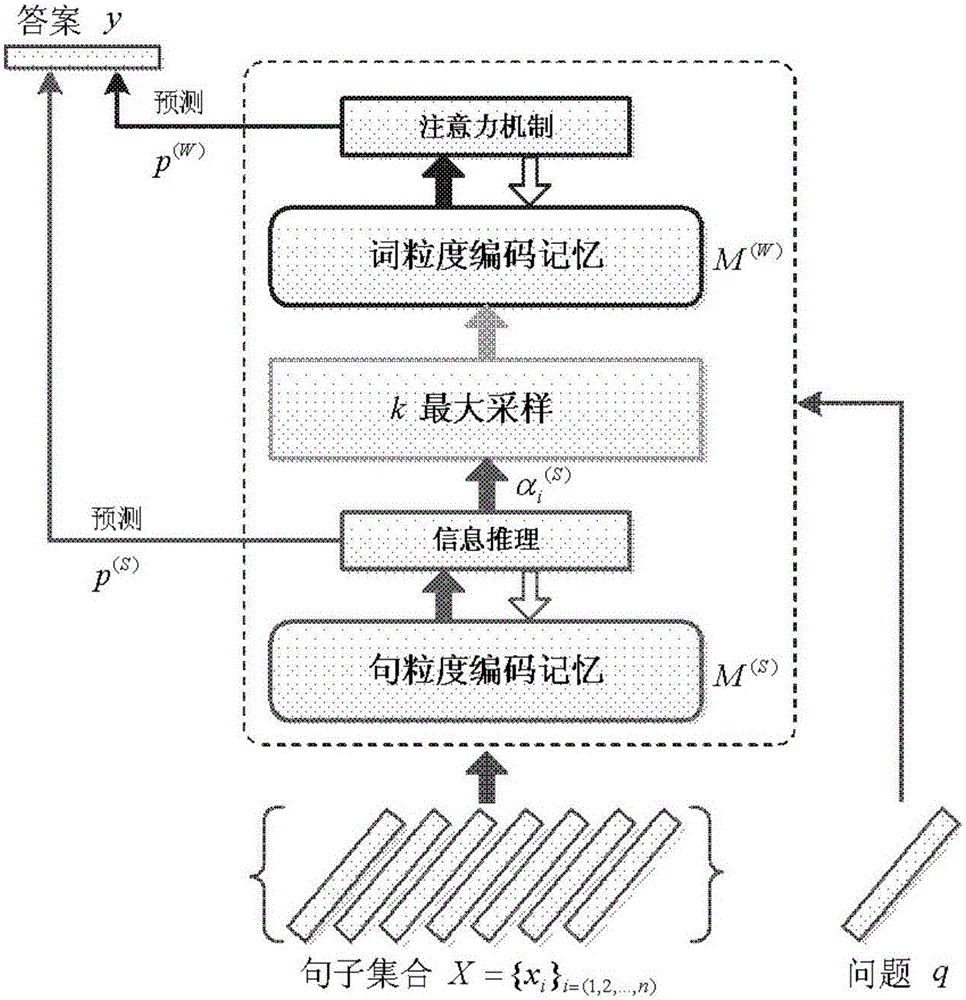

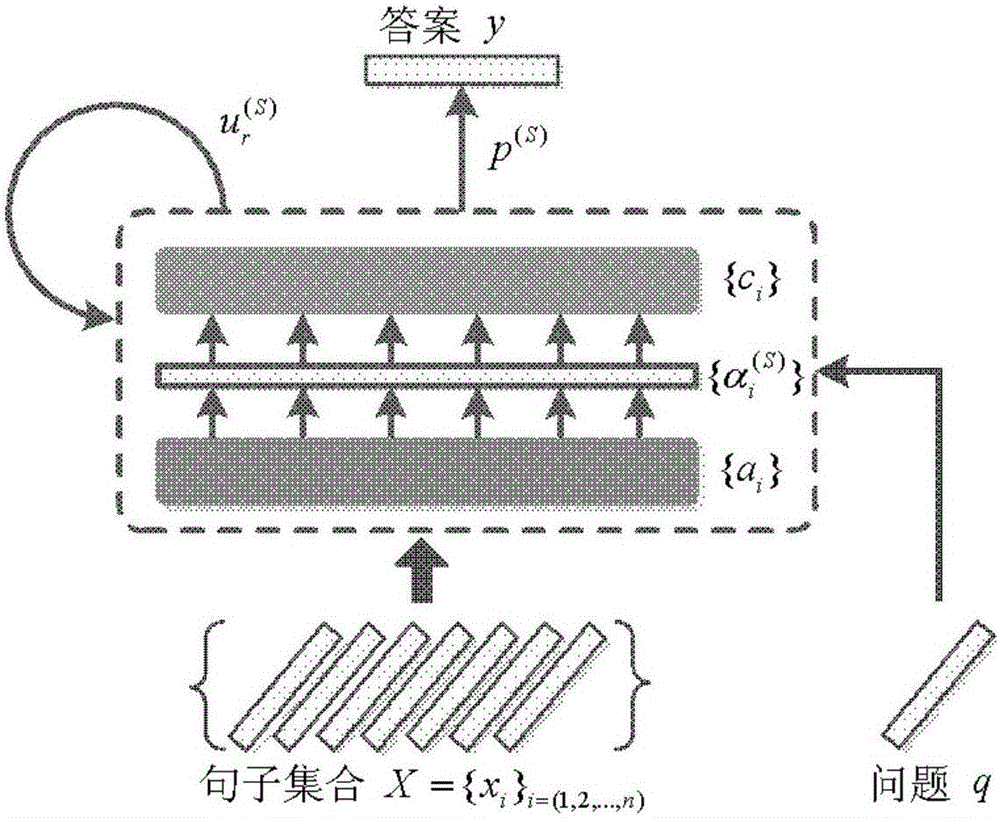

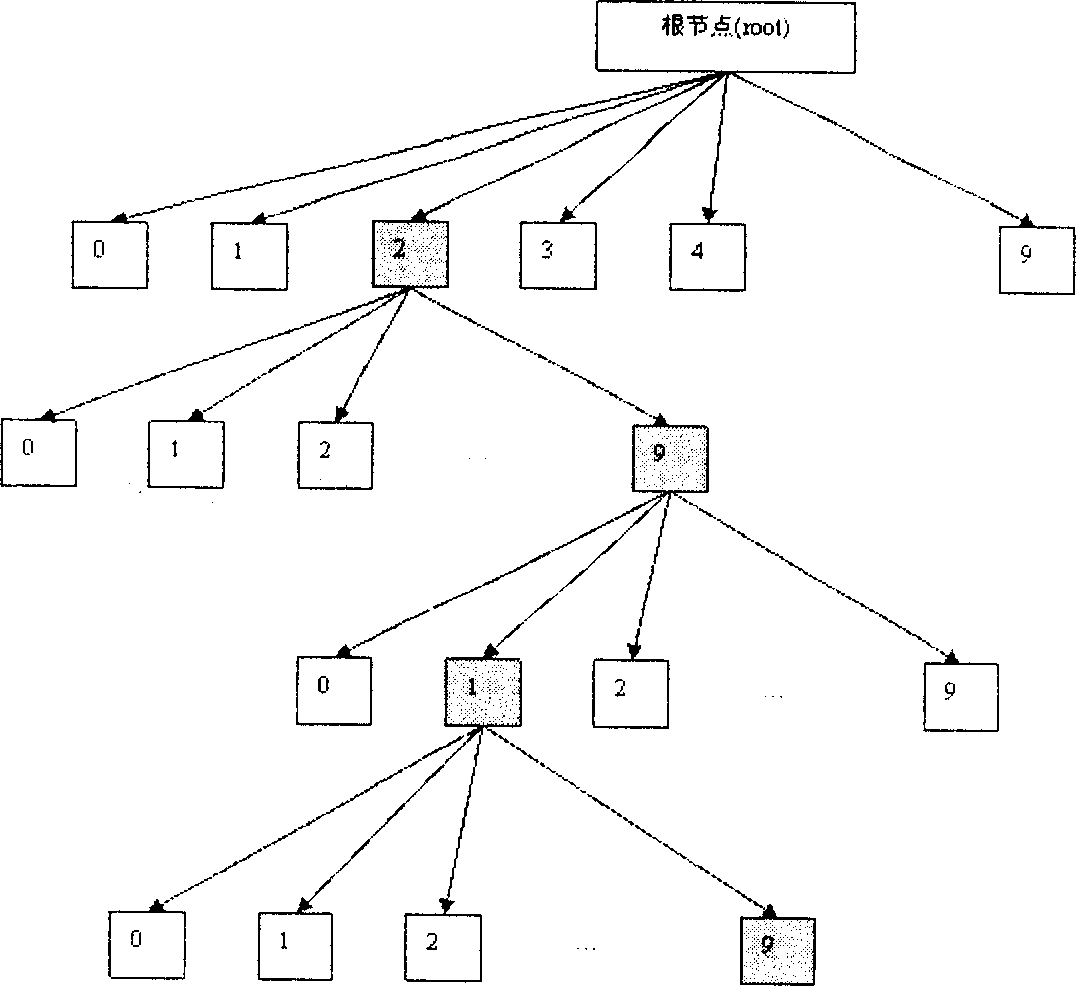

Q&A method based on hierarchical memory network

Active | CN106126596A | Improve accuracy; Improve timeliness; Semantic analysis; Special data processing applications; Algorithm; Granularity

The invention provides a Q&A method based on a hierarchical memory network. The method first performs sentence-granularity memory encoding and, driven by the semantic encoding of the question, completes information inference over the sentence-granularity memory units through an attention mechanism over multiple rounds of iteration. Sentences are screened by k-max sampling, and word-granularity memory encoding is then performed on the basis of the sentence-granularity encoding; memory encoding at these two levels forms the hierarchical memory encoding. The output word probability distribution is predicted by combining the sentence-granularity and word-granularity memory units, which improves the accuracy of automatic Q&A and effectively addresses answer selection for low-frequency and out-of-vocabulary words.

Owner:INST OF AUTOMATION CHINESE ACAD OF SCI

Preferred state encoding in non-volatile memories

Active | US20160314042A1 | Input/output to record carriers; Static storage; Computer architecture; Software engineering

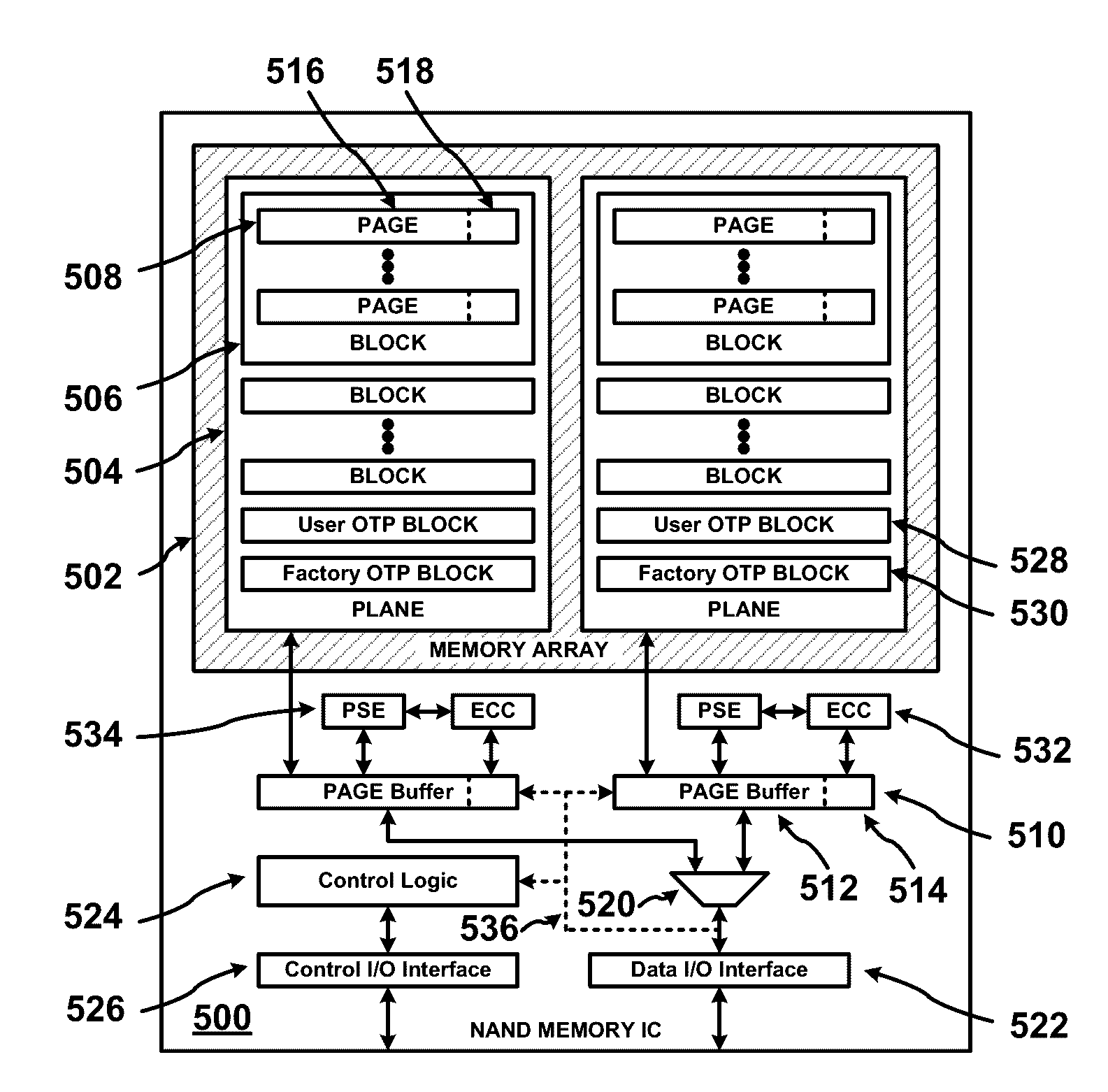

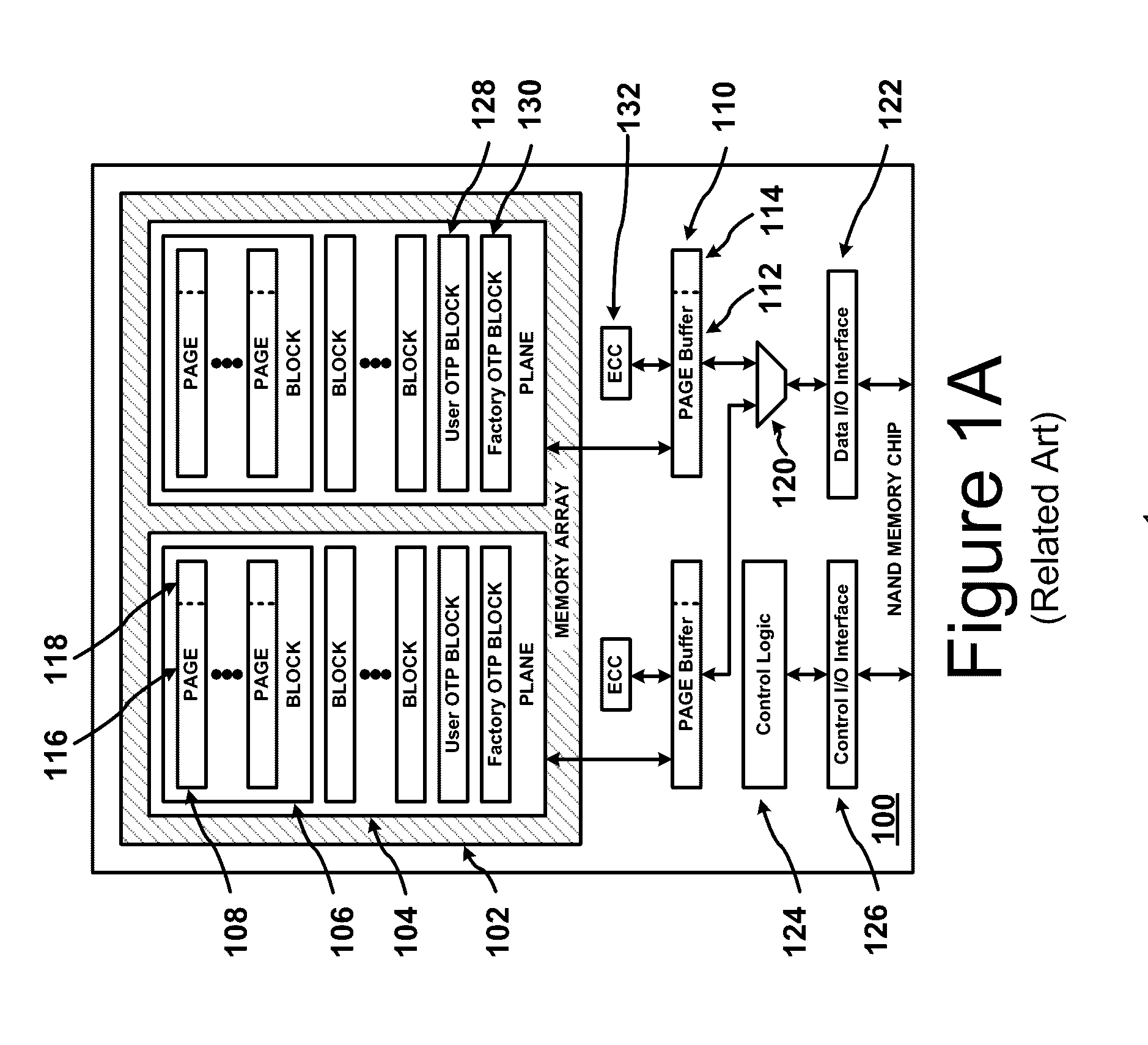

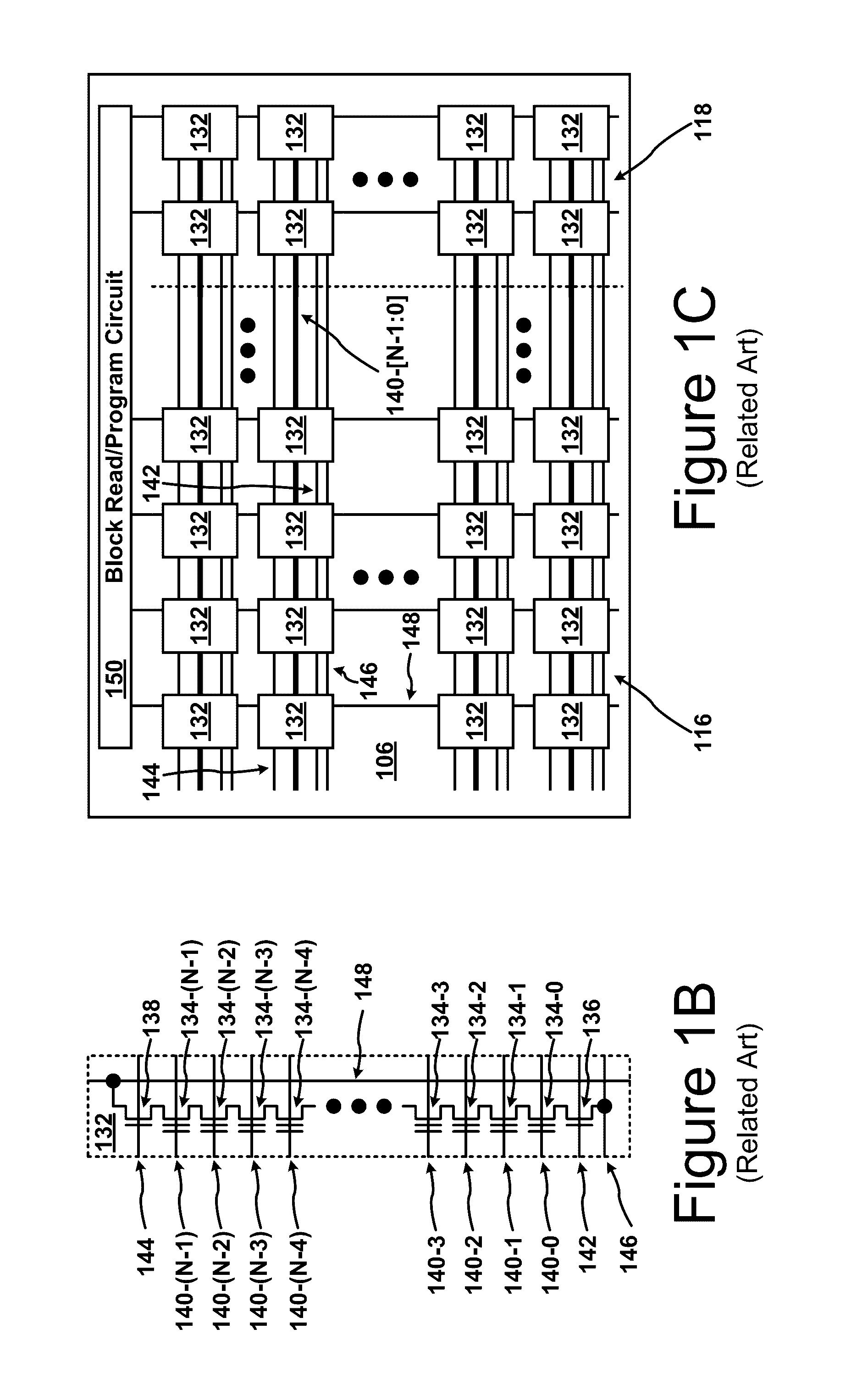

The invention pertains to non-volatile memory devices, and more particularly to advantageously encoding data in non-volatile devices in a flexible manner by both NVM manufacturers and NVM users. Multiple methods of preferred state encoding (PSE) and / or error correction code (ECC) encoding may be used in different pages or blocks in the same NVM device for different purposes which may be dependent on the nature of the data to be stored.

Owner:INVENSAS CORP

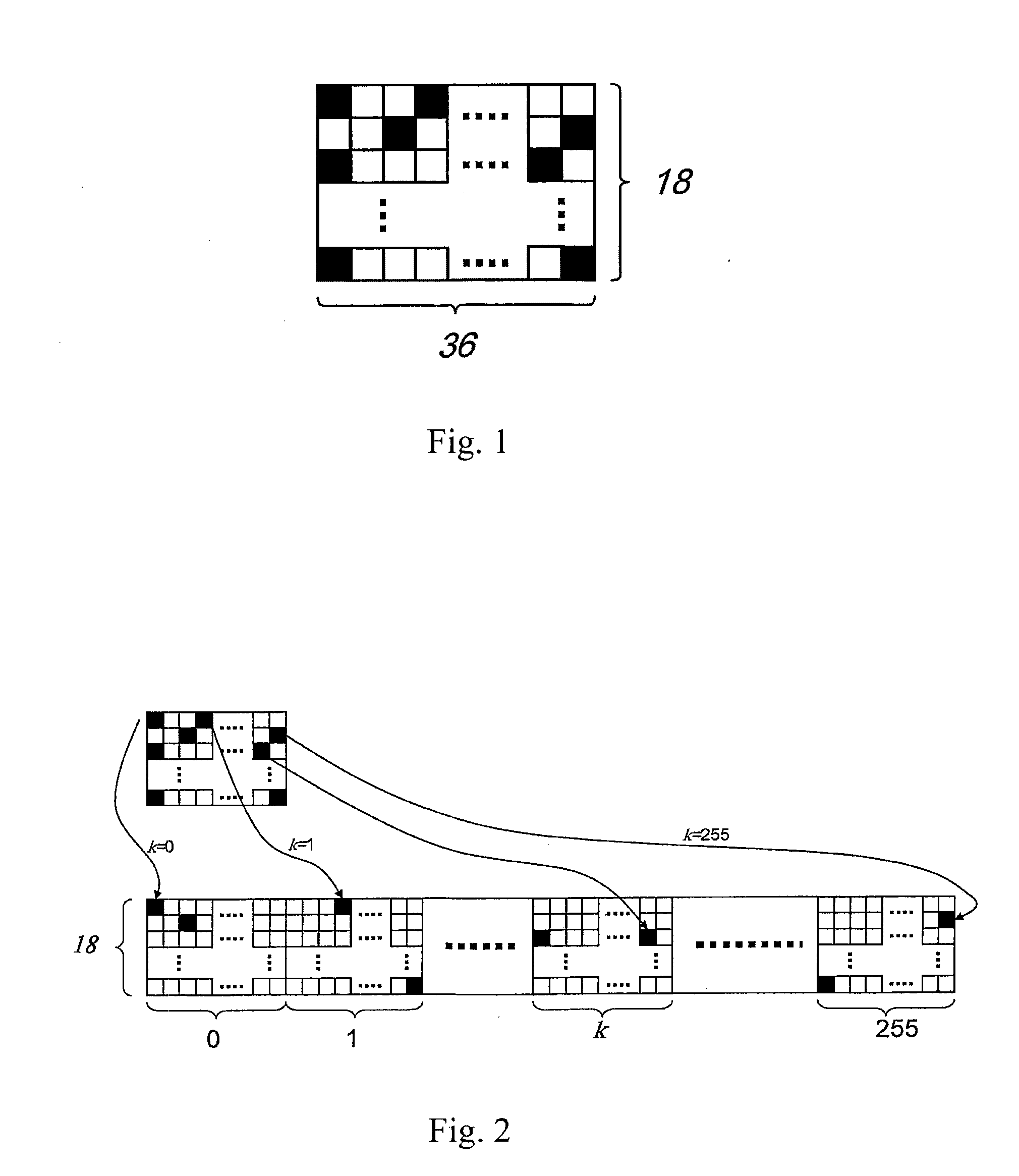

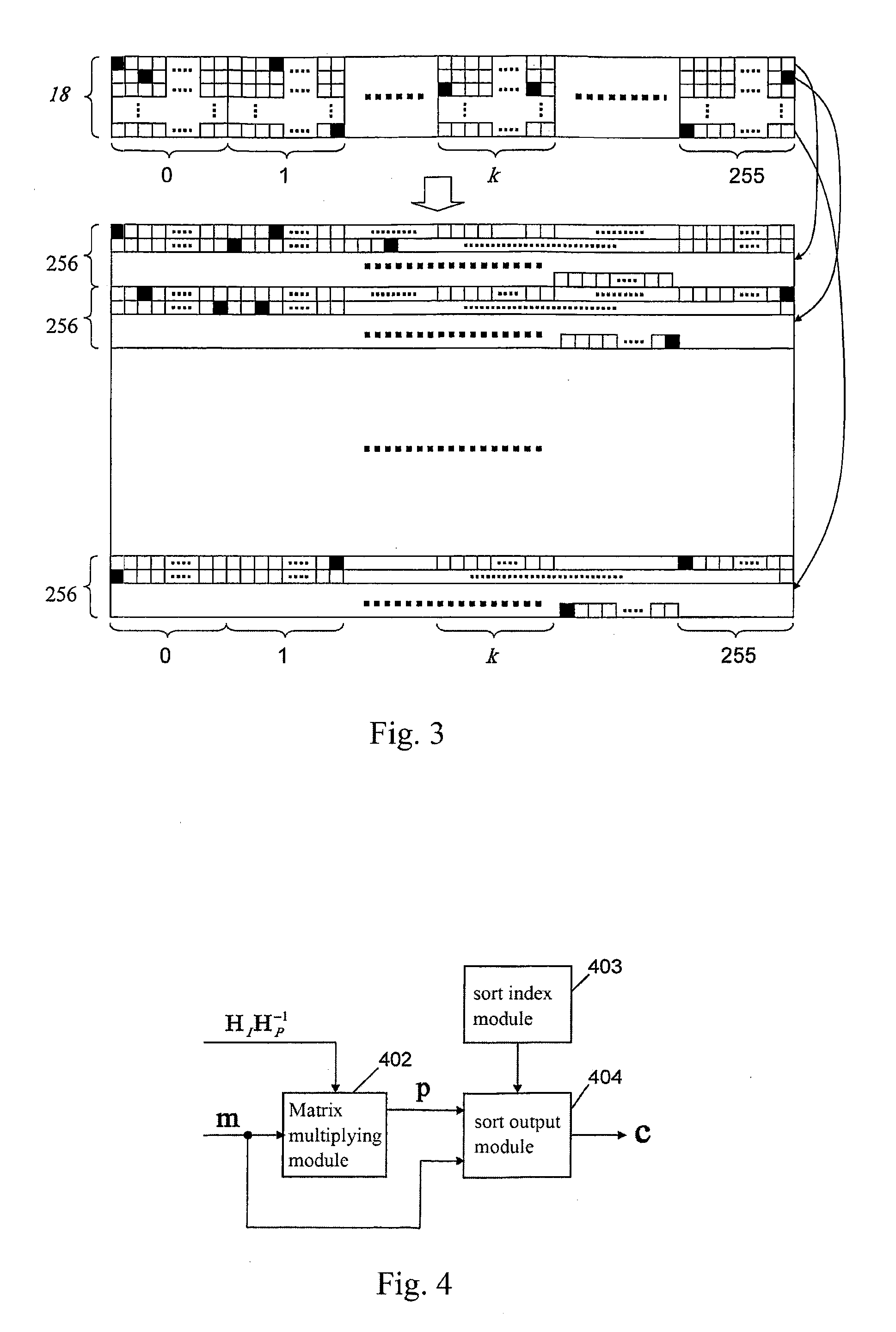

Method for constructing checking matrix of LDPC code and coding and decoding apparatus utilizing the method

Inactive | US20110239077A1 | Improve performance; Less occupied; Error prevention; Code conversion; Algorithm; Engineering

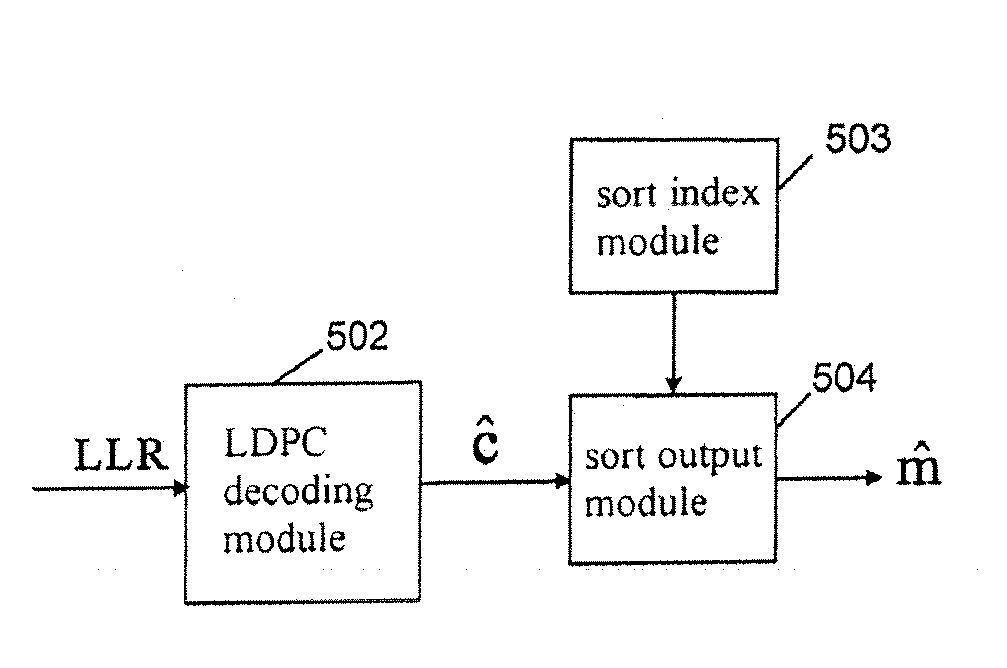

The present invention relates to a method for constructing an LDPC code check matrix and to encoding and decoding devices that use it. The encoding device encodes the input binary information and outputs a position-permuted systematic code sequence. The encoding device comprises: a matrix multiplication module that outputs a check sequence p obtained by multiplying the binary information sequence m by a matrix; a sorting index module having N memory units that store, in order, the index values of a sorting table IDX; and a sorting output module that reorders m and p according to the index values stored in the sorting index table and outputs a code word c. The invention constructs the LDPC check matrix from an algebraic structure, yielding an LDPC code with stable performance. In addition, the encoding and decoding devices occupy less memory, which helps in optimizing the devices.

Owner:TIMI TECH
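
The encoder's three modules (matrix multiplication, index table, sorting output) can be sketched in Python. This is a toy under stated assumptions: the parity part `P` below is the small Hamming(7,4) matrix, not an algebraically constructed LDPC matrix from the patent, and the convention that codeword position `n` takes `systematic[IDX[n]]` is an assumption about how the index table is applied.

```python
def encode(m, P, idx):
    """Compute check bits p = m*P over GF(2), then reorder [m | p] via index table idx."""
    k, r = len(P), len(P[0])
    if len(m) != k:
        raise ValueError("information length must equal the number of rows of P")
    # Matrix multiplication module: each check bit is an XOR (sum mod 2) of selected info bits.
    p = [sum(m[i] & P[i][j] for i in range(k)) % 2 for j in range(r)]
    systematic = m + p
    # Sorting output module (assumed convention): position n takes systematic[idx[n]].
    return [systematic[i] for i in idx]
```

With `P = [[1,1,0],[1,0,1],[0,1,1],[1,1,1]]` and `m = [1,0,1,1]`, the check bits are `[0,1,0]`; an identity index table returns the plain systematic word `[1,0,1,1,0,1,0]`, and any other permutation in `idx` only moves those bits.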

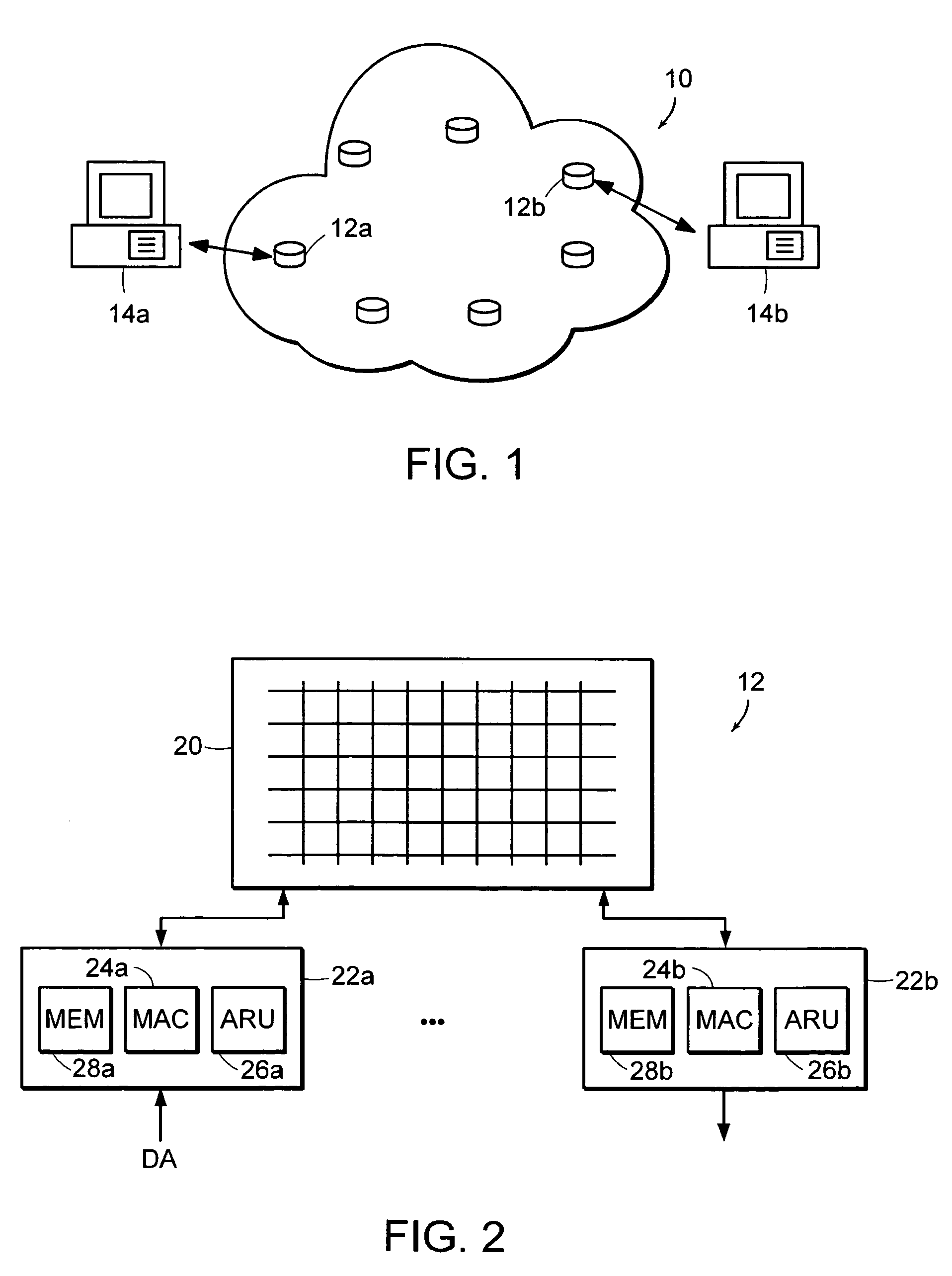

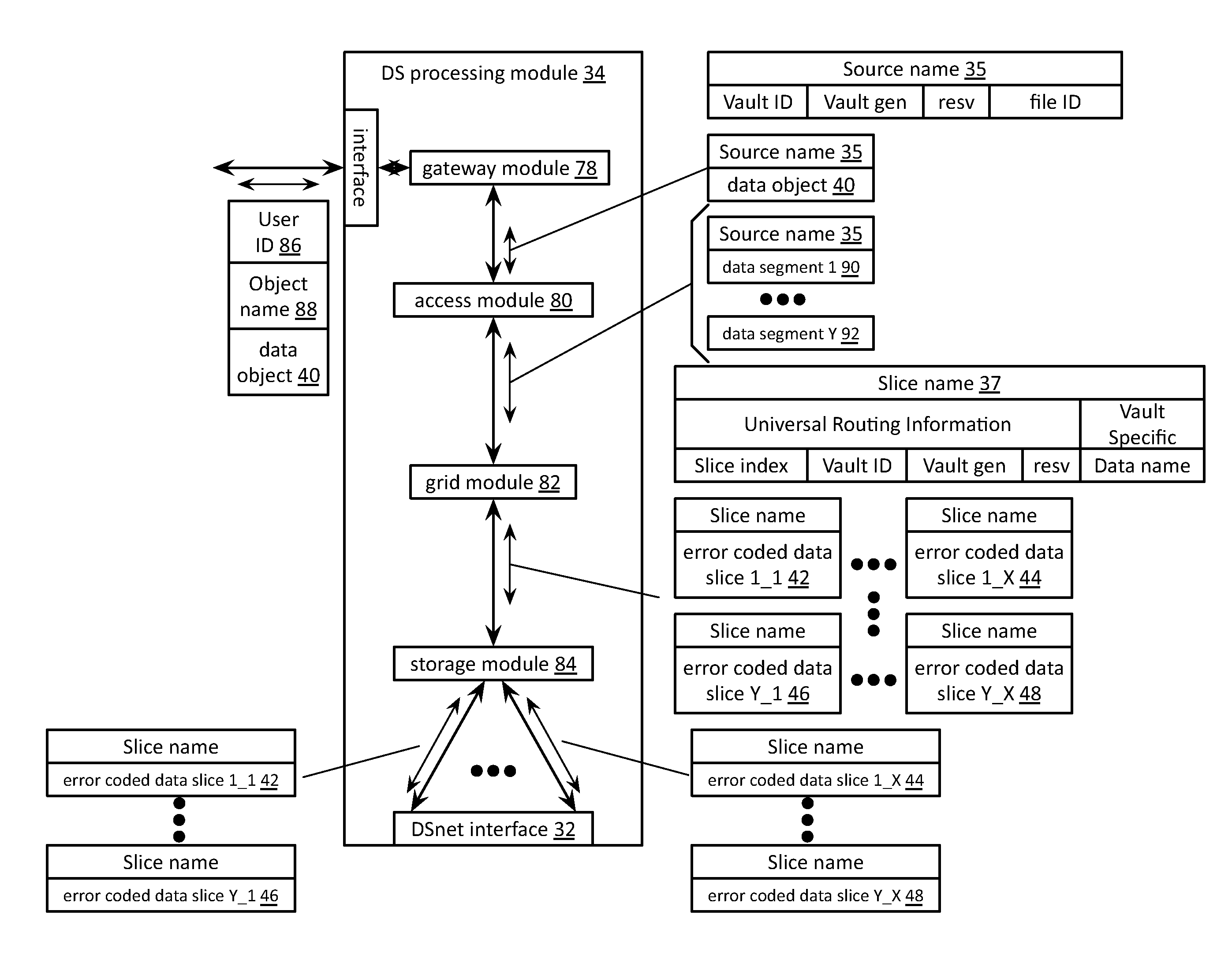

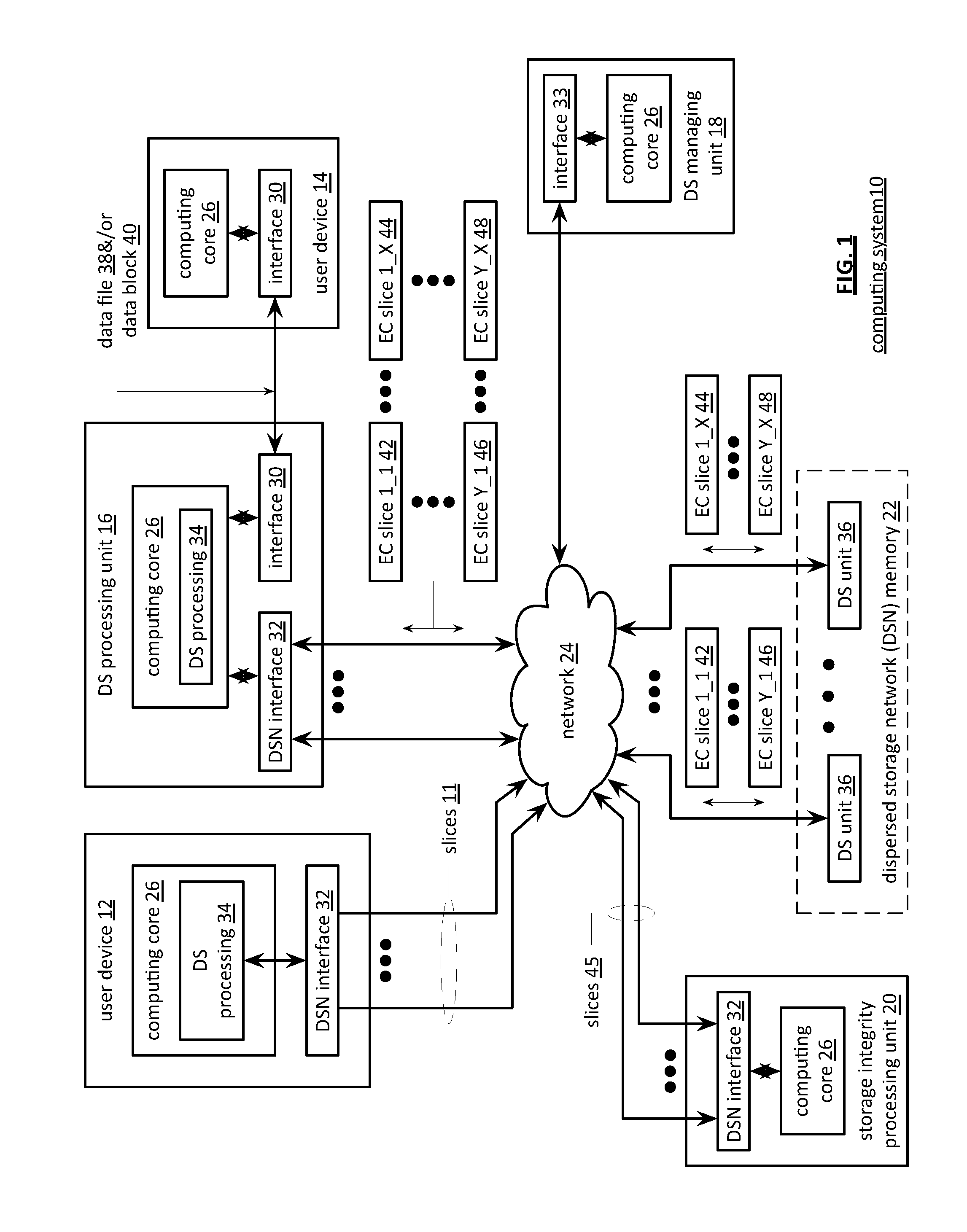

Dispersed storage network slice name verification

Active | US20110264989A1 | Data representation error detection/correction; Code conversion; Algorithm; Computer engineering

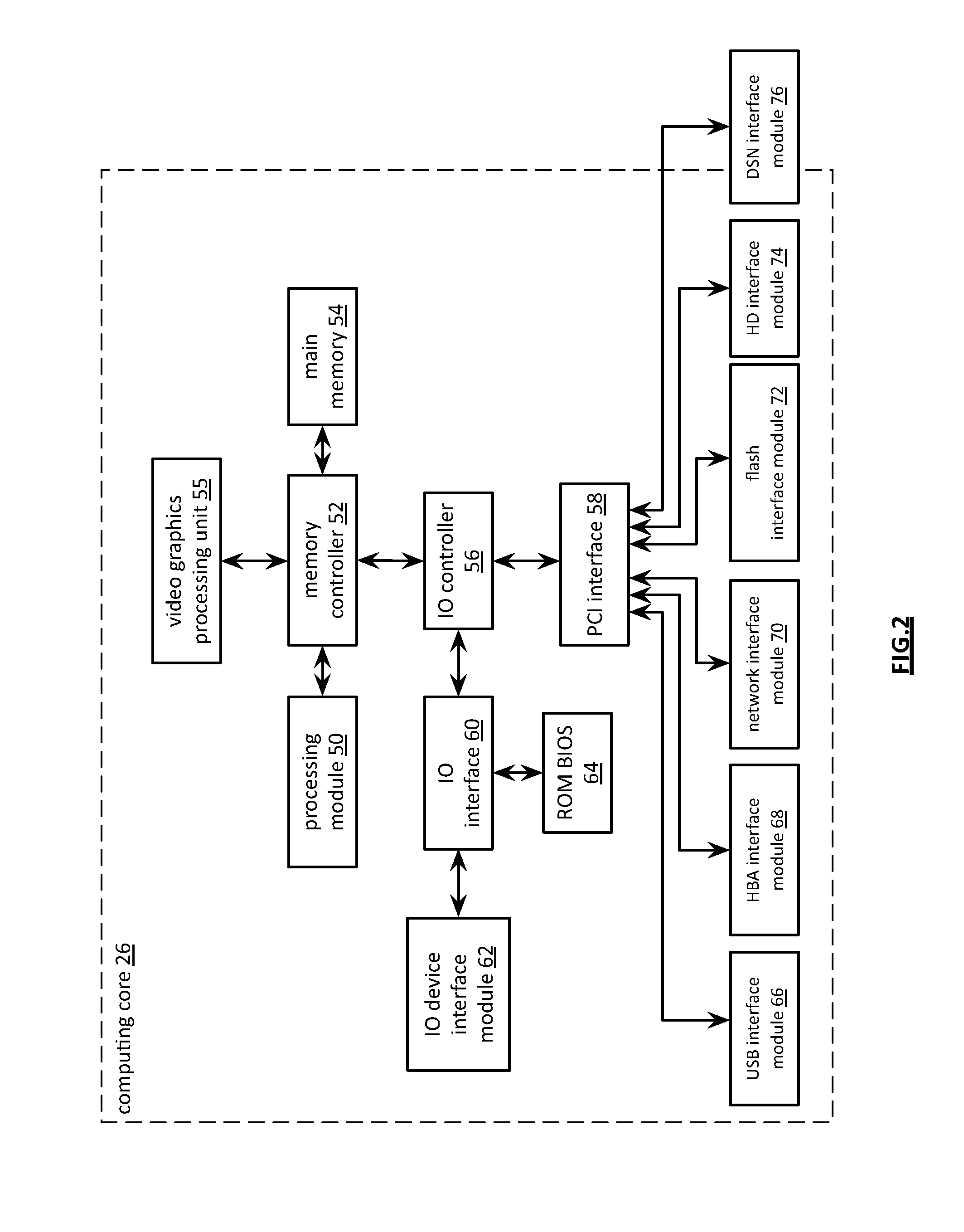

A method begins by a processing module dispersed storage error encoding data to produce a plurality of sets of encoded data slices in accordance with dispersed storage error coding parameters. The method continues with the processing module determining a plurality of sets of slice names corresponding to the plurality of sets of encoded data slices. The method continues with the processing module determining integrity information for the plurality of sets of slice names and sending the plurality of sets of encoded data slices, the plurality of sets of slice names, and the integrity information to a dispersed storage network memory for storage therein.

Owner:PURE STORAGE
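
The slice-name integrity step can be sketched as follows. This is a minimal sketch that covers only the naming and integrity parts; the dispersed error encoding of the data is omitted. The slice-name format `vault/object/segment/pillar` and the choice of one SHA-256 digest per set of names are assumptions for illustration, not the format used in the patent.

```python
import hashlib


def slice_names(vault_id, object_id, segments, width):
    """Hypothetical slice-name format: one name per (segment, pillar) pair."""
    return [[f"{vault_id}/{object_id}/{s}/{p}" for p in range(width)]
            for s in range(segments)]


def integrity_info(name_sets):
    """One SHA-256 digest per set of slice names (assumed integrity scheme)."""
    return [hashlib.sha256("\n".join(names).encode()).hexdigest()
            for names in name_sets]


def verify(name_sets, info):
    """Recompute the digests and compare them with the stored integrity information."""
    return integrity_info(name_sets) == info
```

In this sketch the integrity information travels with the slices and slice names to storage, and any later change to a stored slice name makes `verify` return `False`.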

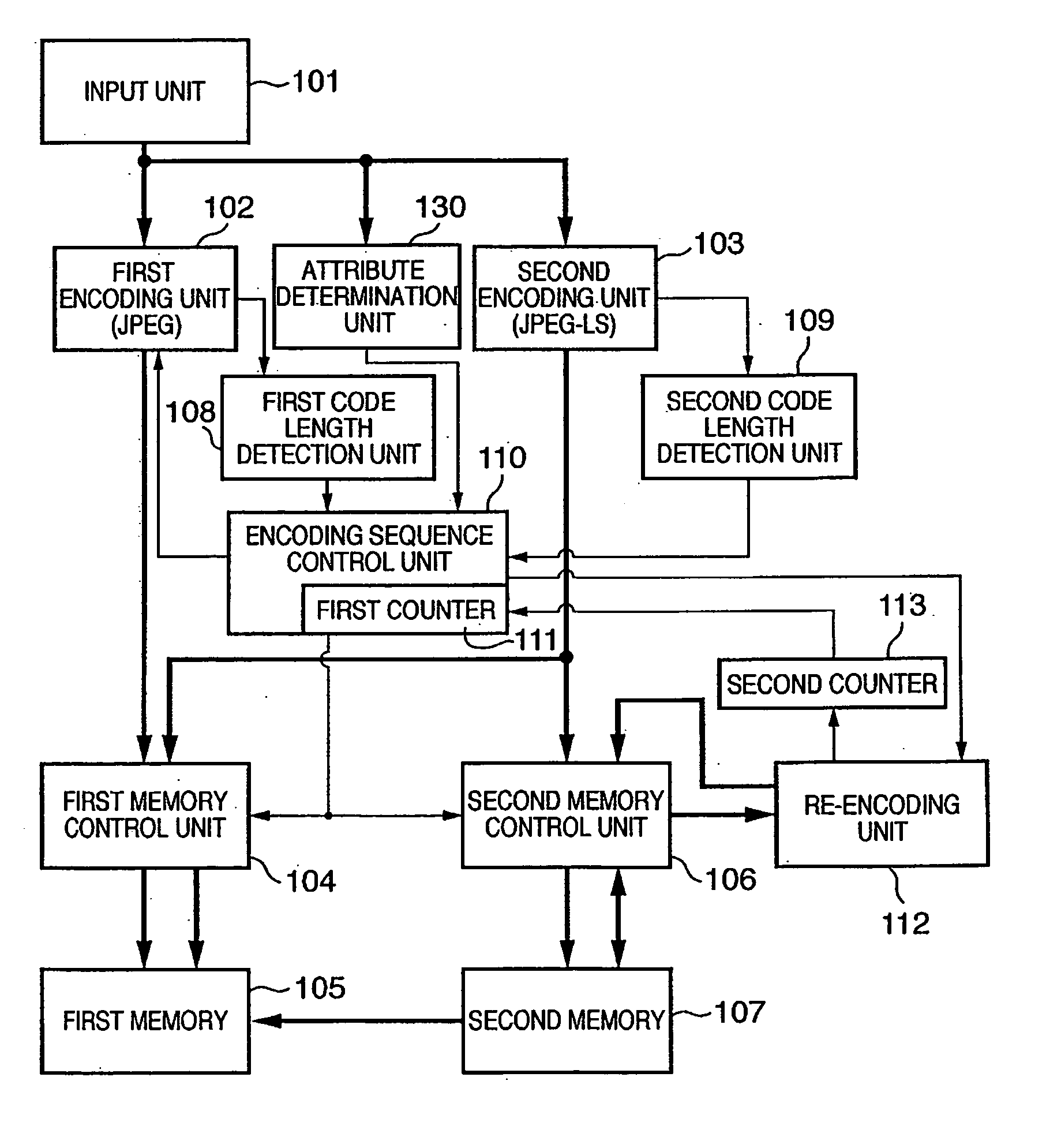

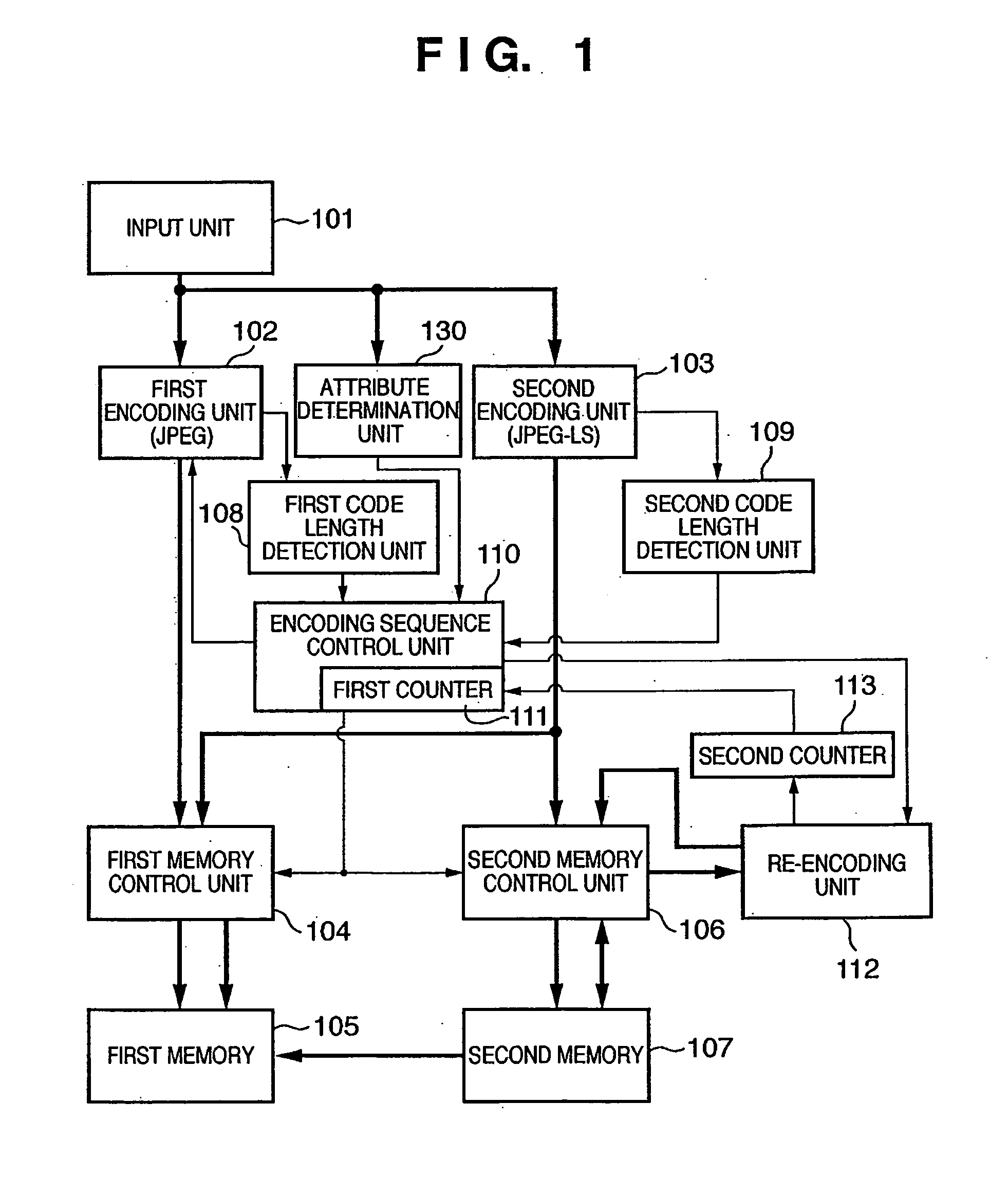

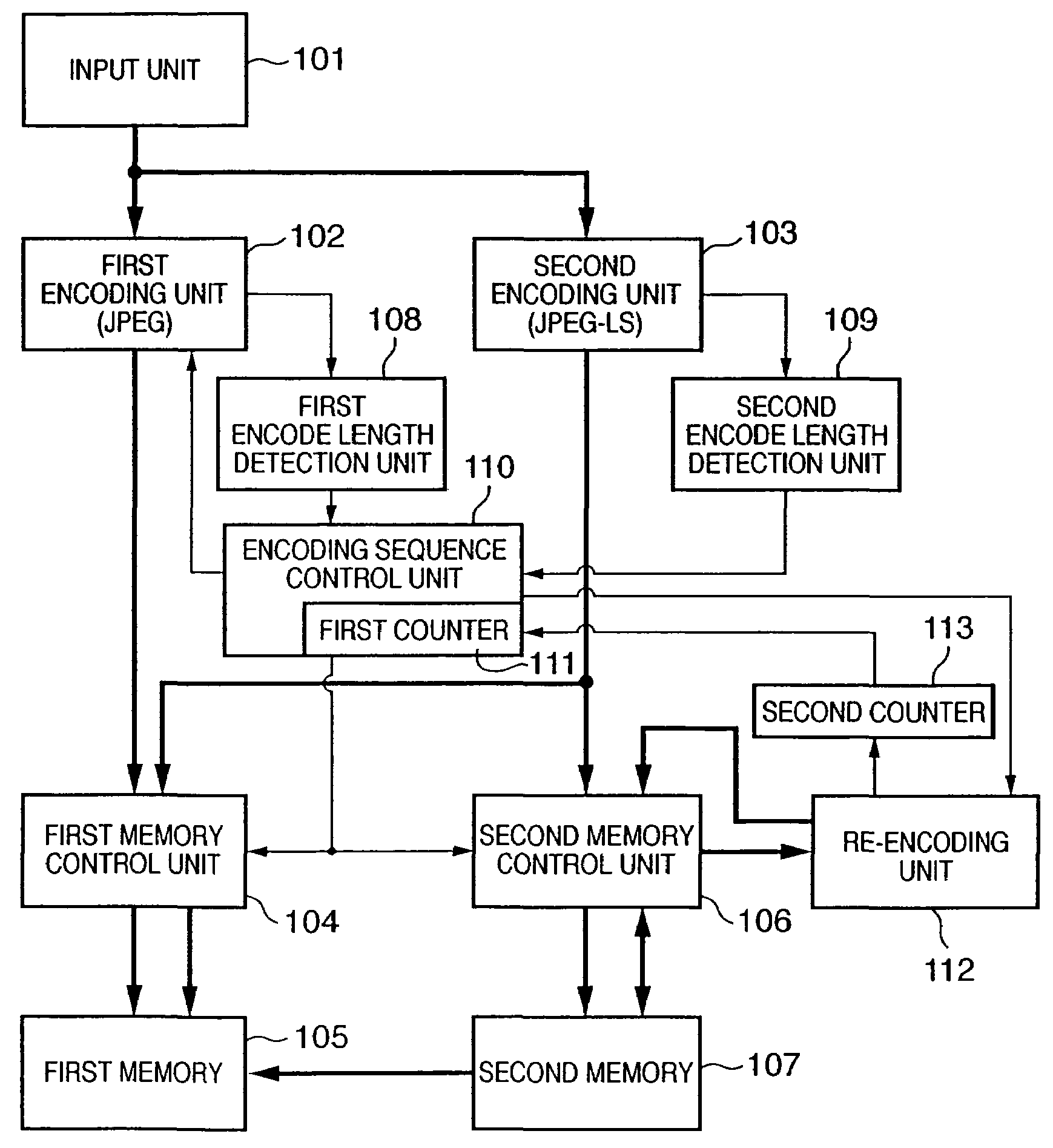

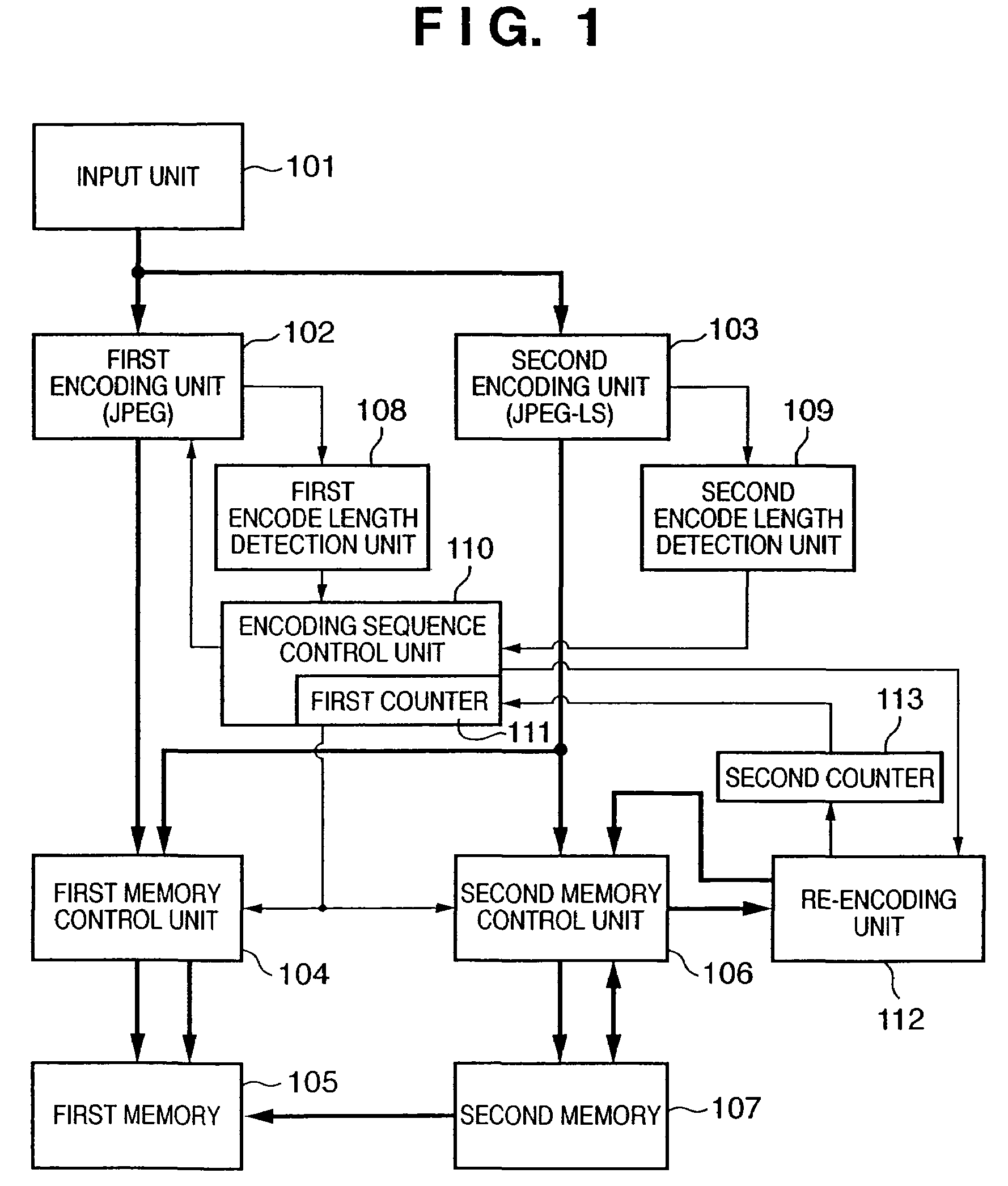

Image encoding apparatus and method, computer program, and computer-readable storage medium

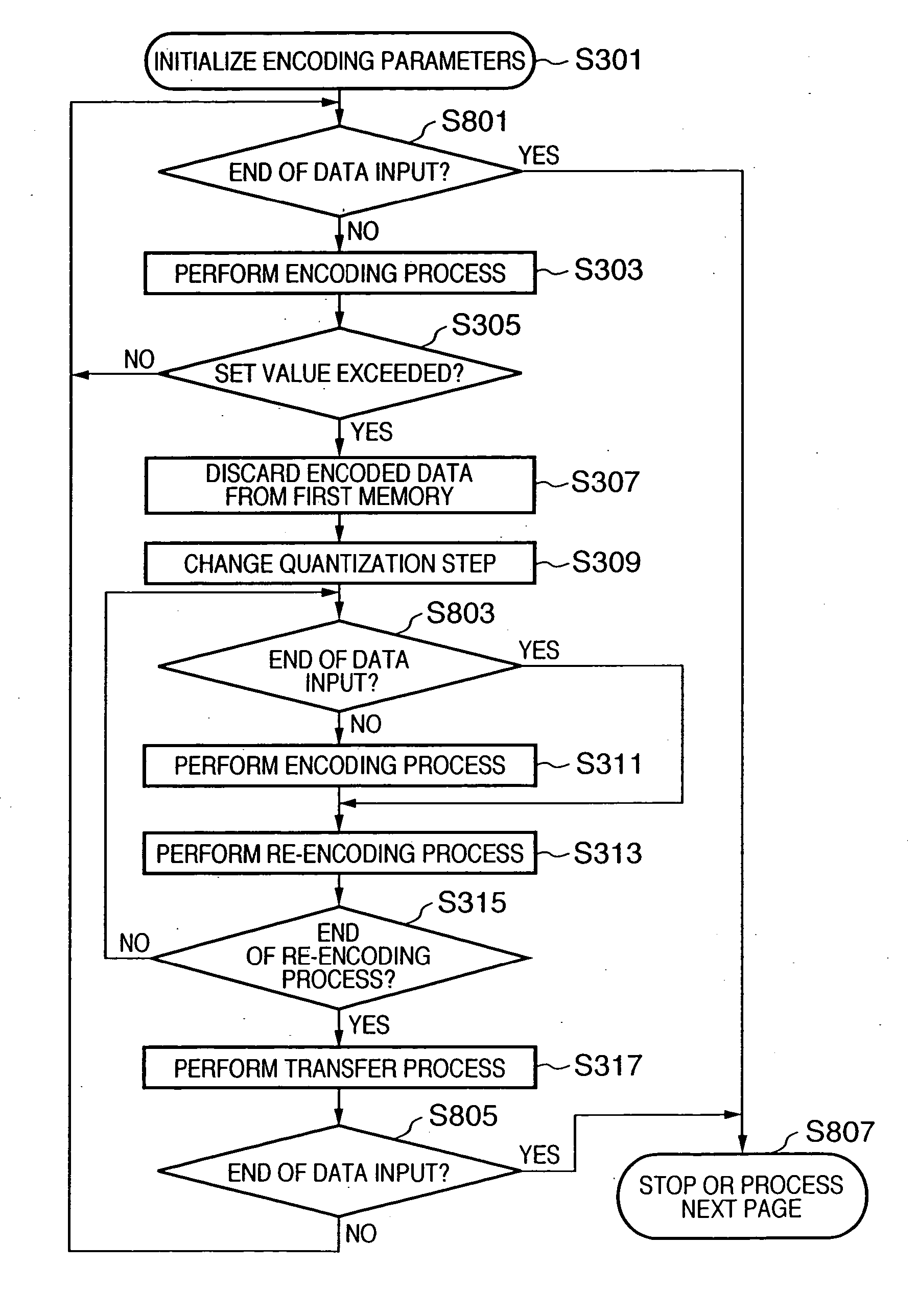

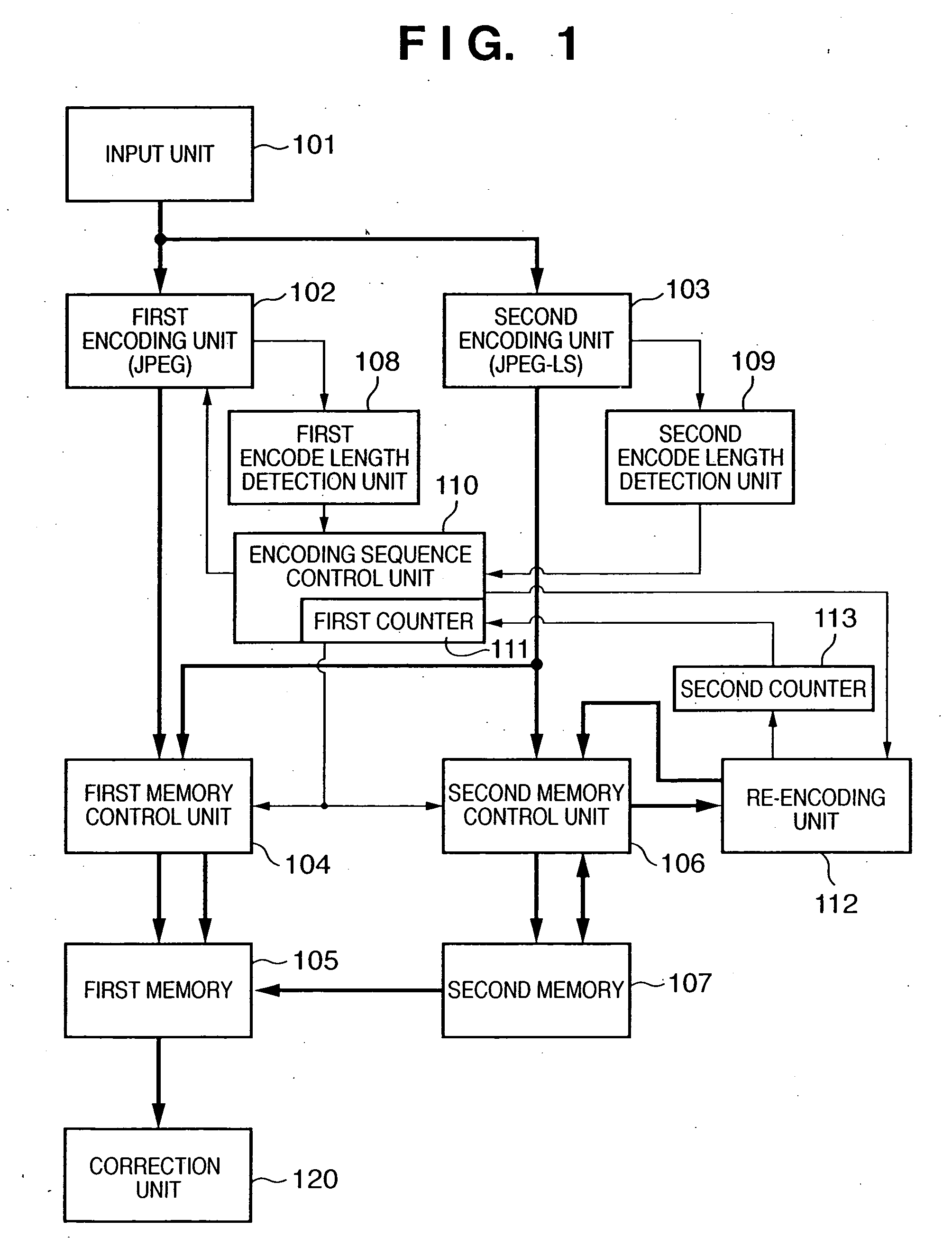

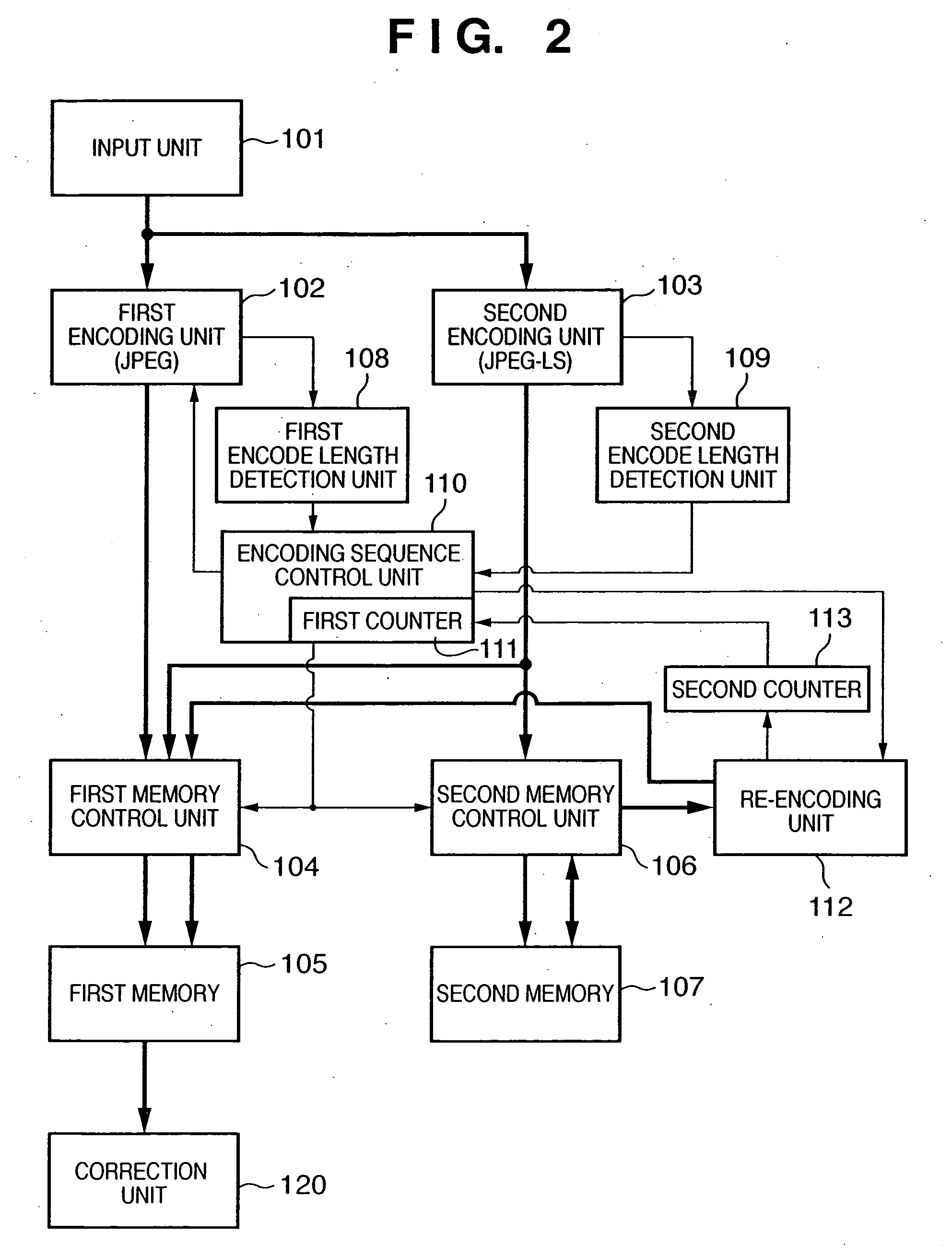

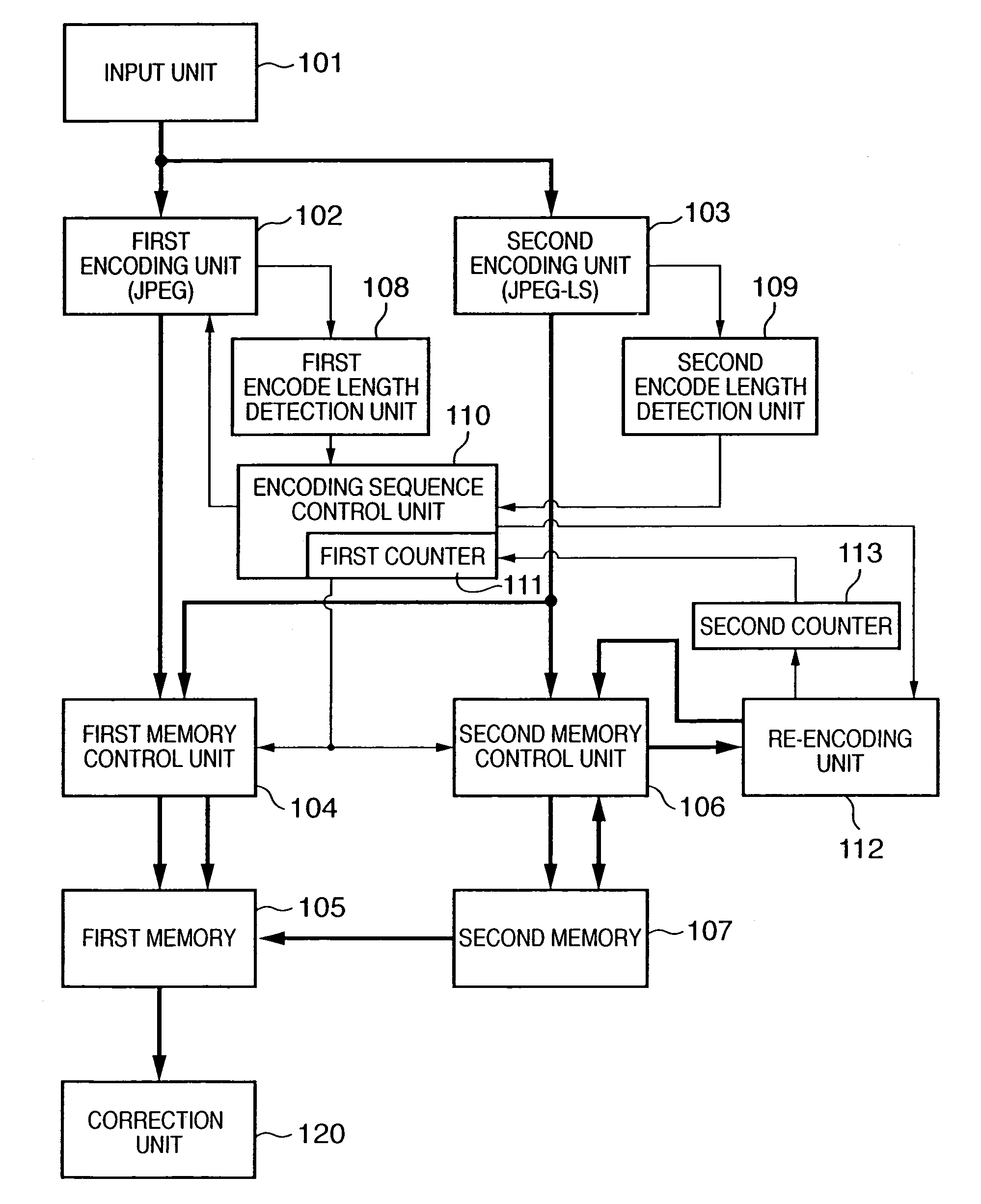

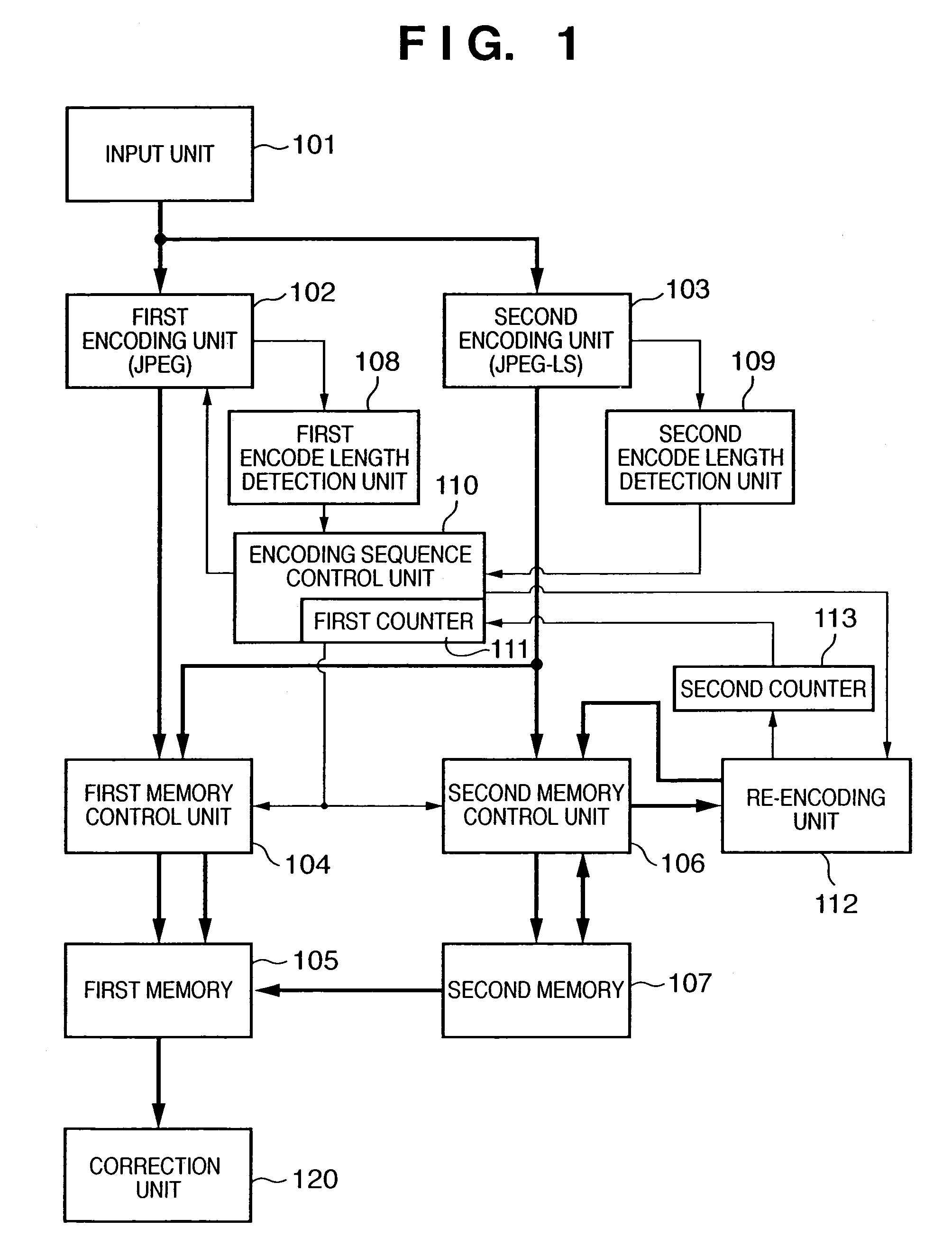

Inactive | US20060050975A1 | Suppress generation of block noise; Character and pattern recognition; Digital video signal modification; Sequence control; Lossless coding

According to this invention, encoded data of a target data amount is generated from a single image input operation while both lossless and lossy encoding are used. For this purpose, an encoding sequence control unit controls a first encoding unit for lossy (JPEG) encoding, a second encoding unit for lossless (JPEG-LS) encoding, first and second memories, and a re-encoding unit, and stores in the first memory encoded data of the target data amount or less that contains both losslessly and lossily encoded data. Among the encoded data stored in the first memory, a correction unit converts isolated encoded data, whose encoding type differs from that of its neighbors, to the type of the neighboring encoded data, and outputs the corrected data.

Owner:CANON KK

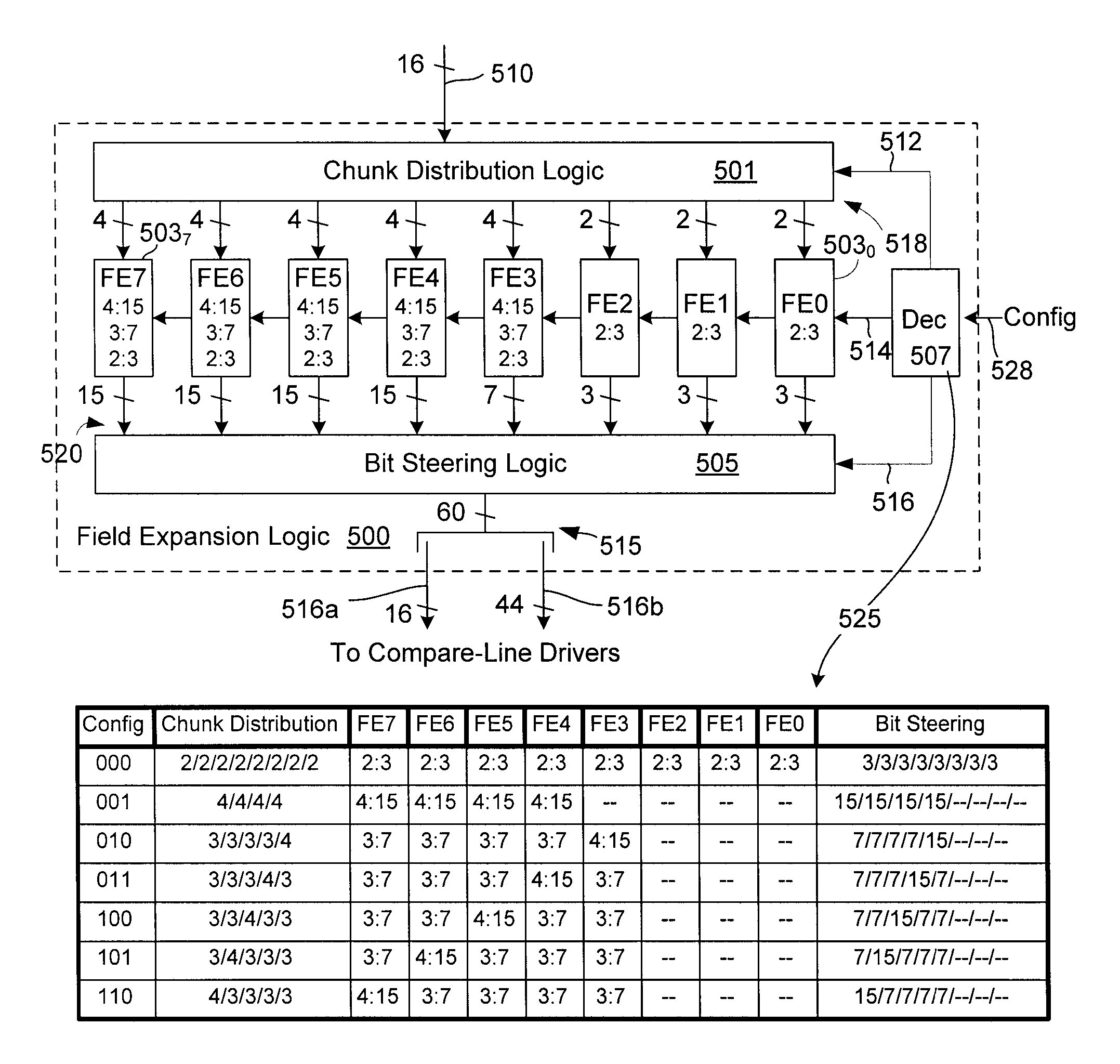

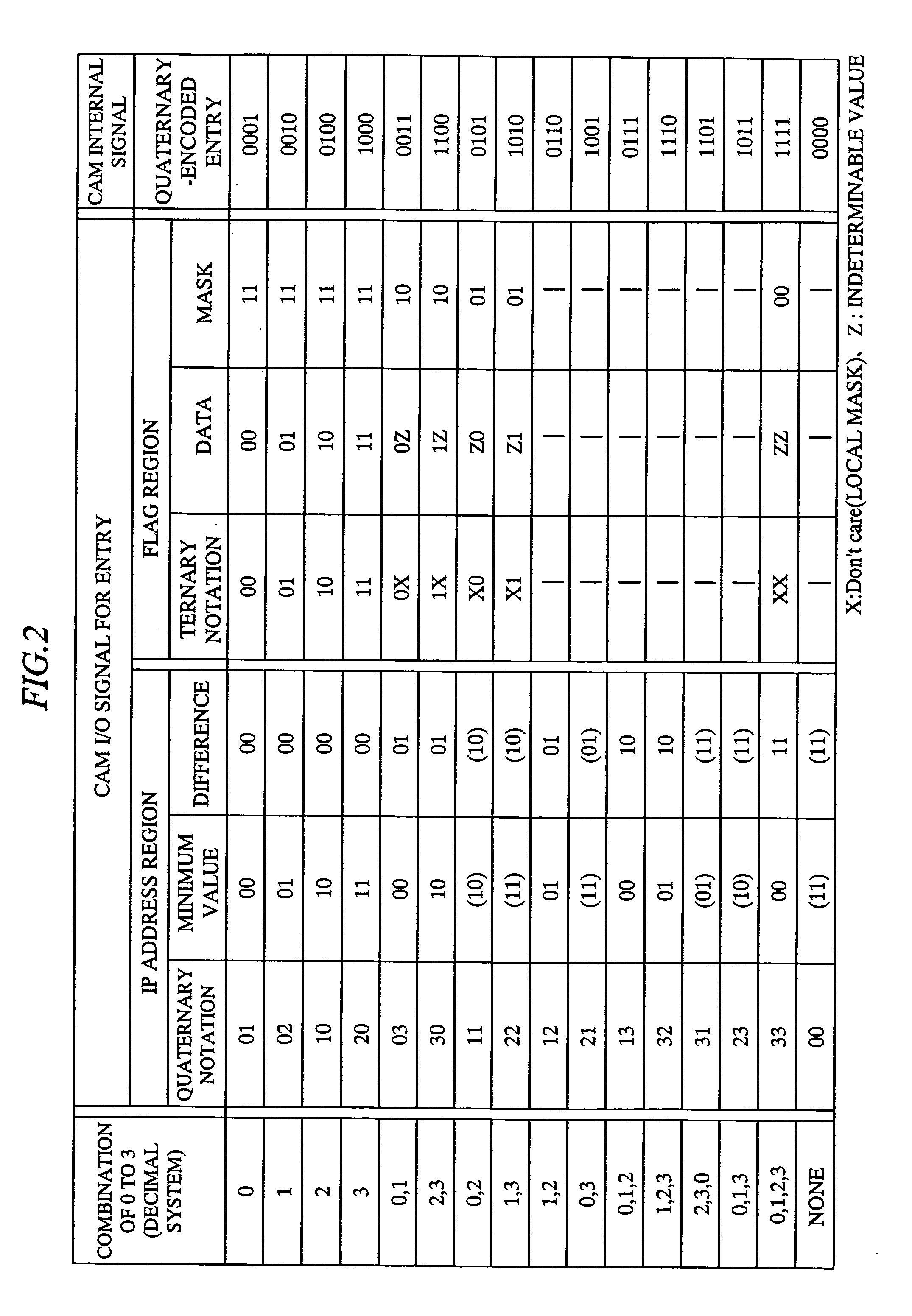

Range representation in a content addressable memory (CAM) using an improved encoding scheme

Owner:AVAGO TECH WIRELESS IP SINGAPORE PTE

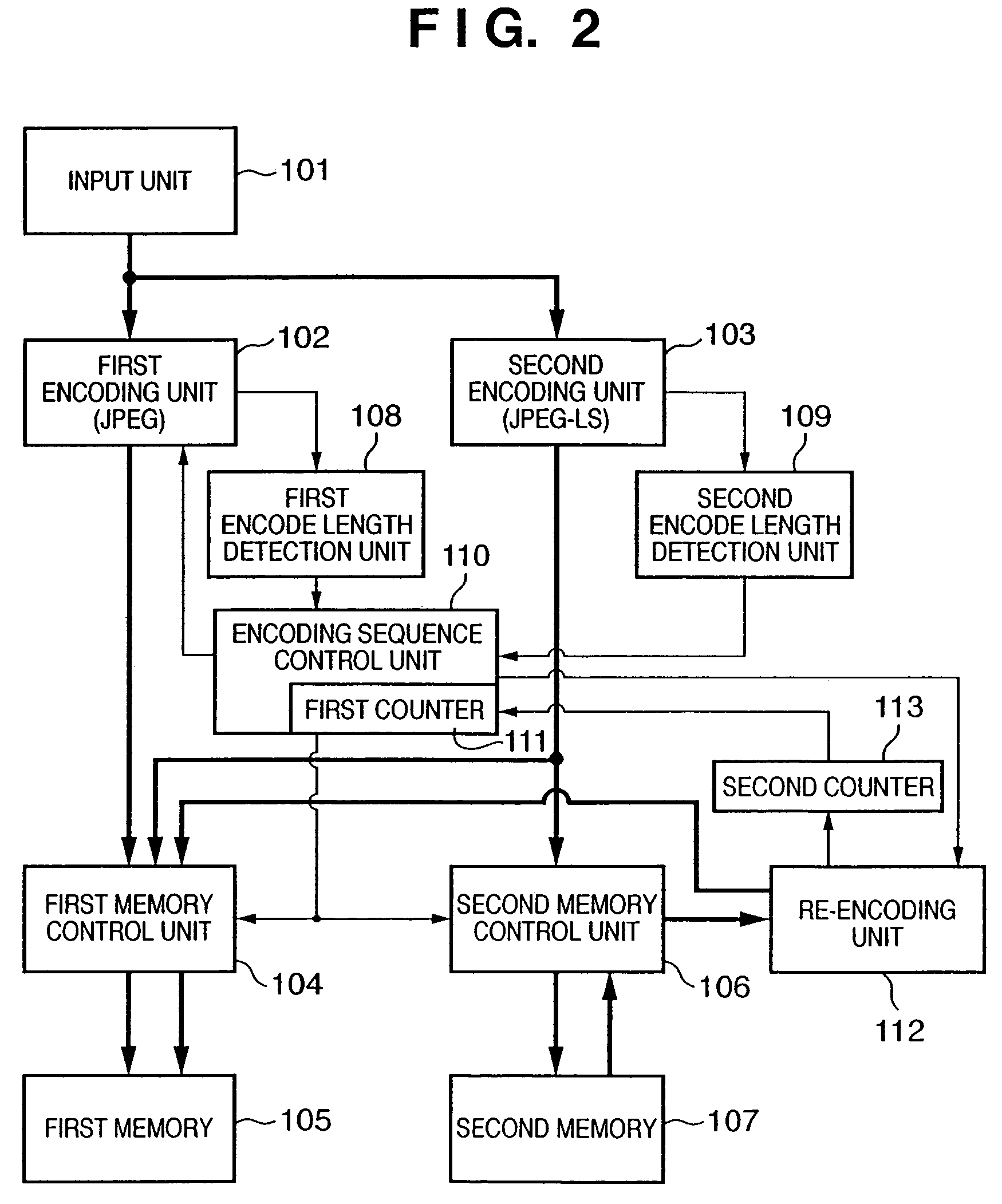

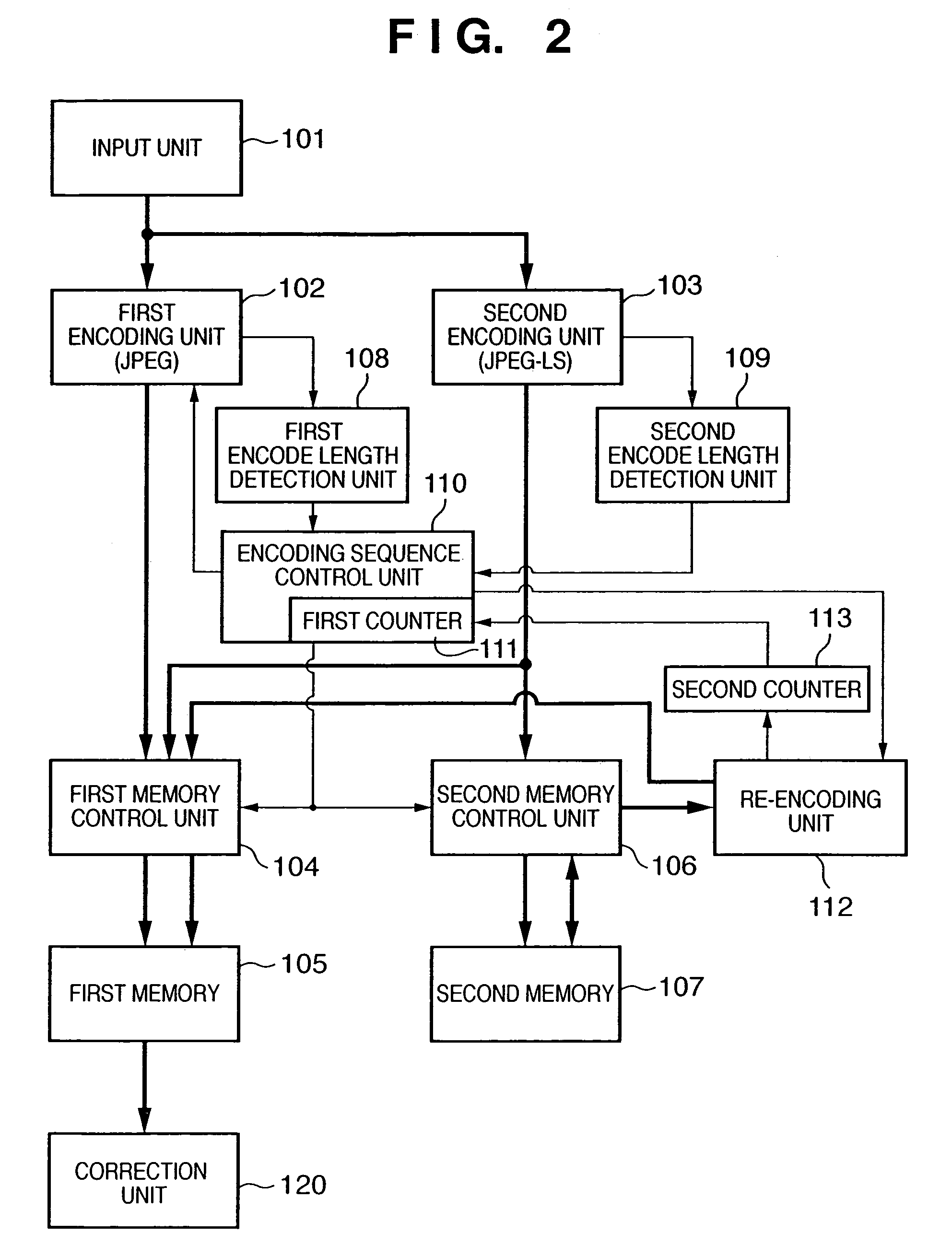

Image encoding apparatus, control method therefor, computer program, and computer-readable storage medium

Inactive | US20060104528A1 | Increase the compression ratio; Image coding; Character and pattern recognition; Sequence control; Lossless coding

According to this invention, encoded data of a target data amount can be generated from a single image input operation while both lossless and lossy encoding are used. For this purpose, a first encoding unit that generates lossy encoded data, a second encoding unit that generates lossless encoded data, and an attribute determination unit that detects the number of colors in a pixel block to be encoded process the same pixel block in parallel. When the number of colors in the pixel block of interest is equal to or smaller than a predetermined number, an encoding sequence control unit stores the lossless encoded data in a first memory. When the number of colors exceeds the predetermined number, the encoding sequence control unit stores whichever of the lossy and lossless encoded data has the shorter code length in the first memory.

Owner:CANON KK
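
The per-block selection rule above can be sketched in Python. This is a minimal sketch under loud assumptions: `zlib` stands in for JPEG-LS as the lossless coder, zeroing the four low bits of each pixel followed by `zlib` stands in for JPEG as the lossy coder, blocks are lists of 8-bit grayscale values, and the threshold `max_colors=4` is a hypothetical value for the "predetermined number".

```python
import zlib


def select_encoding(block, max_colors=4):
    """Choose lossless or lossy data for one block of 8-bit pixel values."""
    lossless = zlib.compress(bytes(block))  # stand-in for the lossless (JPEG-LS) unit
    # Few distinct colors (e.g. text or line art): always keep the lossless data.
    if len(set(block)) <= max_colors:
        return "lossless", lossless
    # Stand-in lossy coder: drop the 4 low bits, then compress.
    lossy = zlib.compress(bytes(v & 0xF0 for v in block))
    # Otherwise keep whichever encoding is shorter (ties go to lossless).
    if len(lossy) < len(lossless):
        return "lossy", lossy
    return "lossless", lossless
```

Both coders run on every block here, mirroring the parallel processing in the abstract; the color count only decides whether the shorter result or the lossless result is stored.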

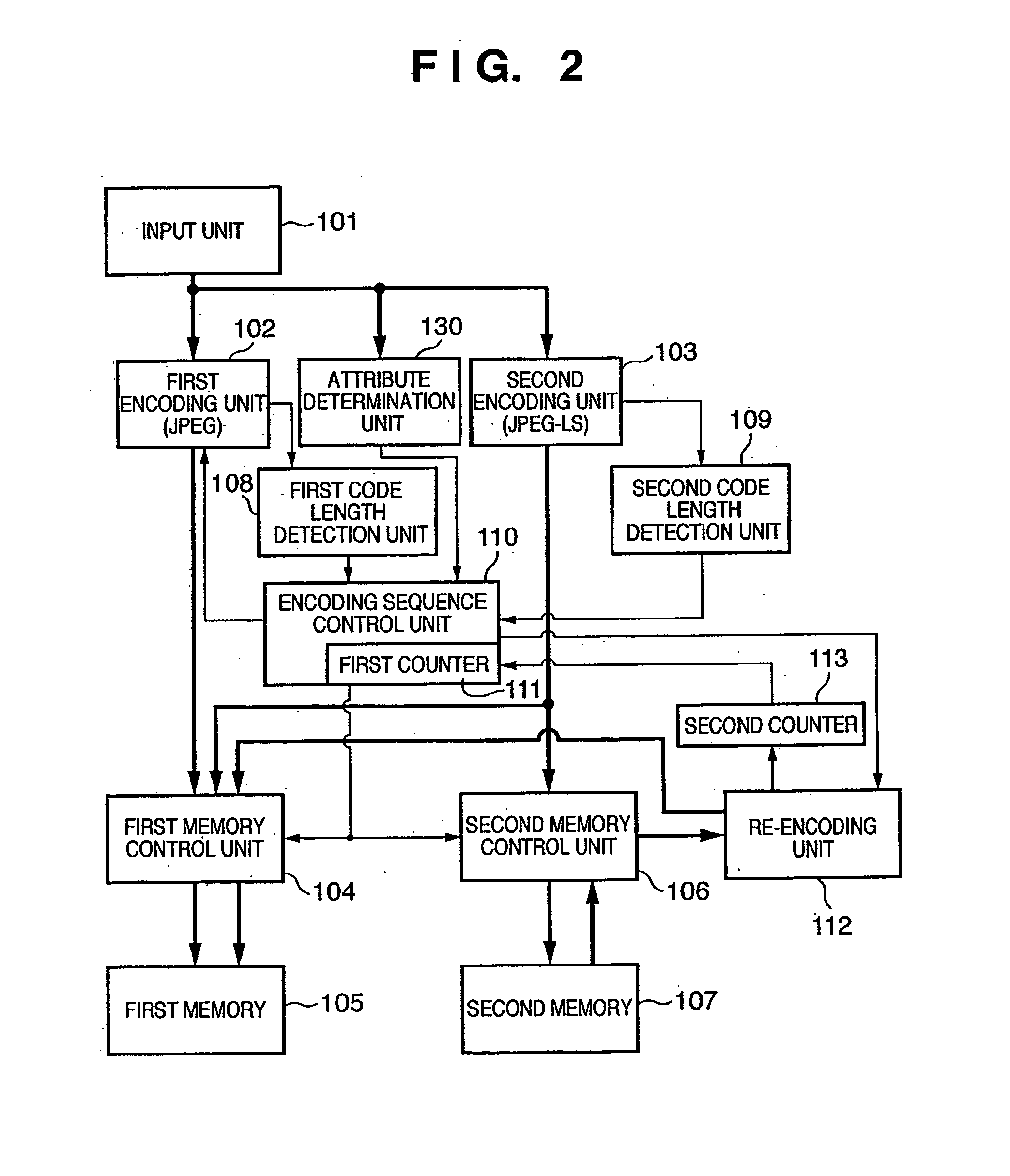

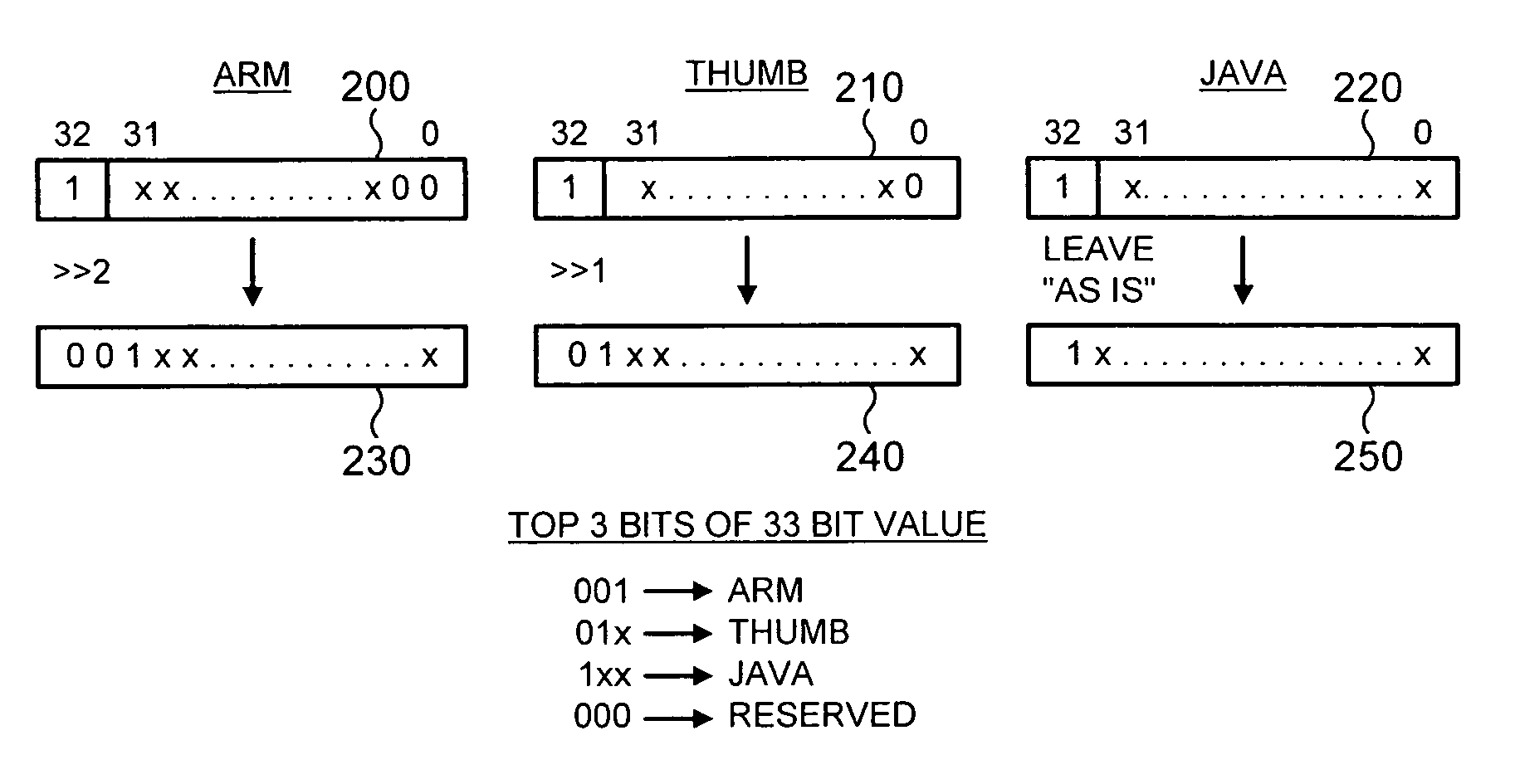

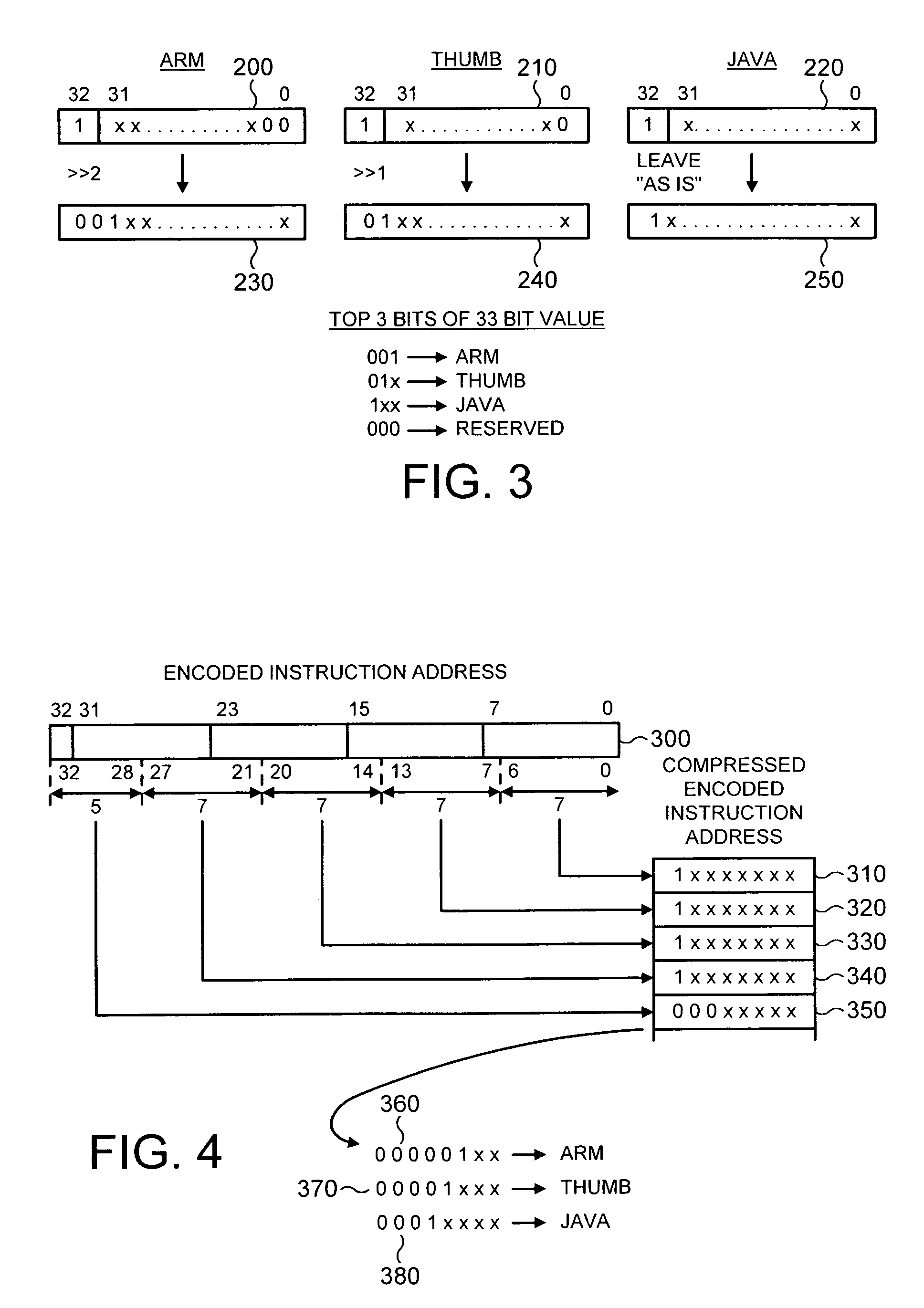

Apparatus and method for efficiently incorporating instruction set information with instruction addresses

Inactive | US7093108B2 | Efficient coding; Efficient representation; Digital computer details; Hardware monitoring; Processing Instruction; Instruction set

The present invention provides an apparatus and method for storing instruction set information. The apparatus comprises a processing circuit for executing processing instructions from any of a plurality of instruction sets, each processing instruction being specified by an instruction address identifying that instruction's location in memory. A different number of instruction address bits needs to be specified for processing instructions in different instruction sets. The apparatus further comprises encoding logic that encodes an instruction address with an indication of the instruction set corresponding to that instruction, generating an n-bit encoded instruction address. The encoding logic performs the encoding by a computation equivalent to extending the specified instruction address bits to n bits by prepending a pattern of bits that depends on the instruction set corresponding to that instruction. Preferably, the encoded instruction address is then compressed. This approach provides a particularly efficient technique for incorporating instruction set information with instruction addresses and is useful in any implementation where such information must be tracked, for example in the tracing mechanisms used to trace the activity of a processing circuit.

Owner:ARM LTD
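
The prepend-a-pattern encoding can be sketched in Python. This is a minimal sketch, assuming three hypothetical instruction sets `A`, `B` and `C` with 30, 31 and 32 specified address bits, an encoded width of `N = 33`, and the prefix-free patterns `001`, `01` and `1`; none of these values come from the abstract, and the later compression step is not shown.

```python
N = 33  # hypothetical width of the encoded instruction address
# Hypothetical prefix-free patterns: fewer specified bits -> longer pattern.
PREFIX = {"A": "001", "B": "01", "C": "1"}
WIDTH = {iset: N - len(p) for iset, p in PREFIX.items()}  # A: 30, B: 31, C: 32 bits


def encode_address(addr_bits, iset):
    """Extend an address to N bits by prepending its instruction set's pattern."""
    width = WIDTH[iset]
    if not 0 <= addr_bits < (1 << width):
        raise ValueError("address does not fit the instruction set's width")
    # Prepending the pattern is equivalent to OR-ing (pattern << width) into the address.
    return (int(PREFIX[iset], 2) << width) | addr_bits


def decode_address(encoded):
    """Recover (instruction set, address bits) by matching the leading pattern."""
    s = format(encoded, f"0{N}b")
    for iset, prefix in PREFIX.items():
        if s.startswith(prefix):
            return iset, int(s[len(prefix):], 2)
    raise ValueError("no instruction-set pattern matches")
```

With these patterns, every encoded value is simply `(1 << width) | address`, so the position of the leading 1 bit identifies the instruction set, which is one example of a computation "equivalent to" prepending bits.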

Image encoding apparatus and method, computer program, and computer-readable storage medium

Owner:CANON KK

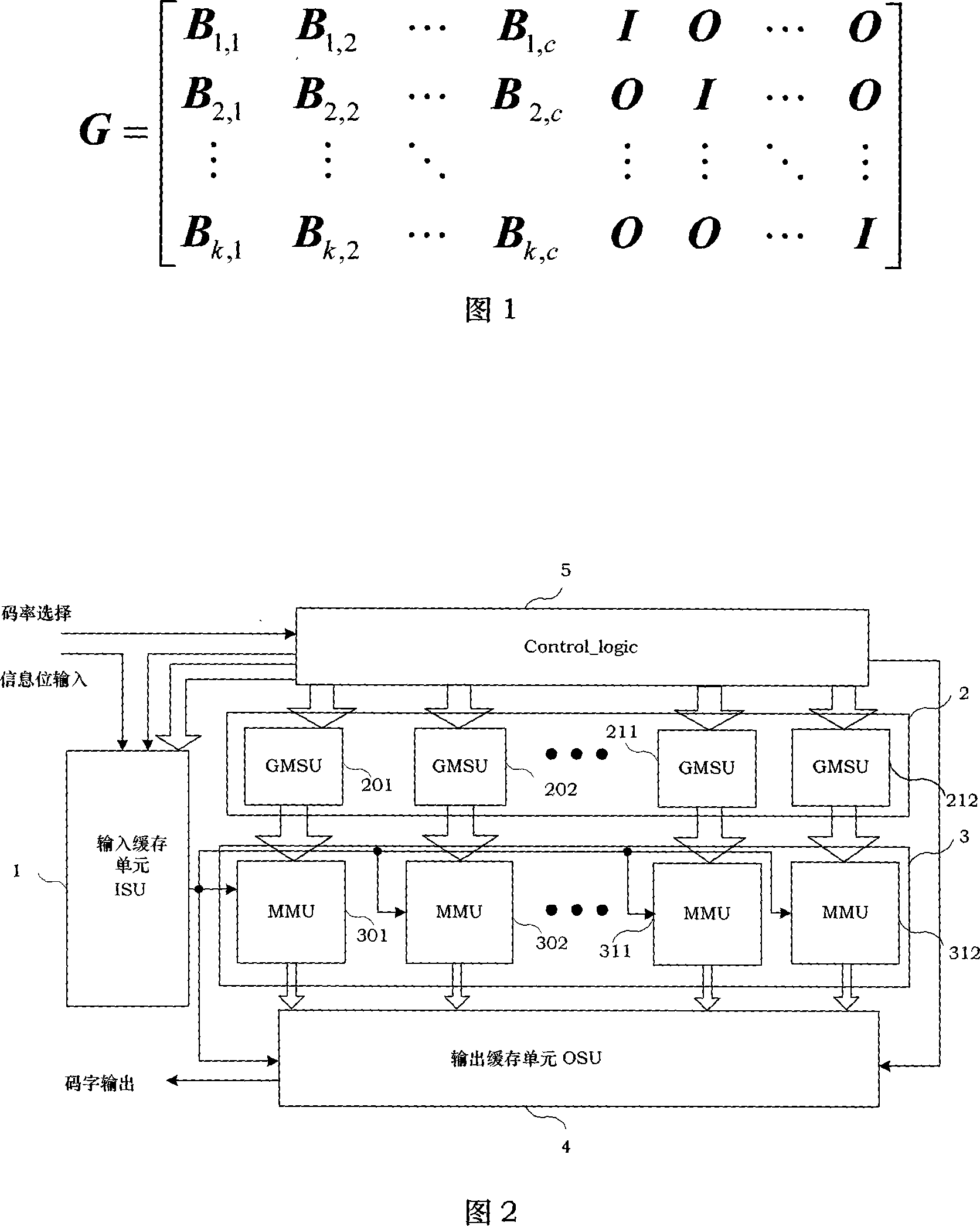

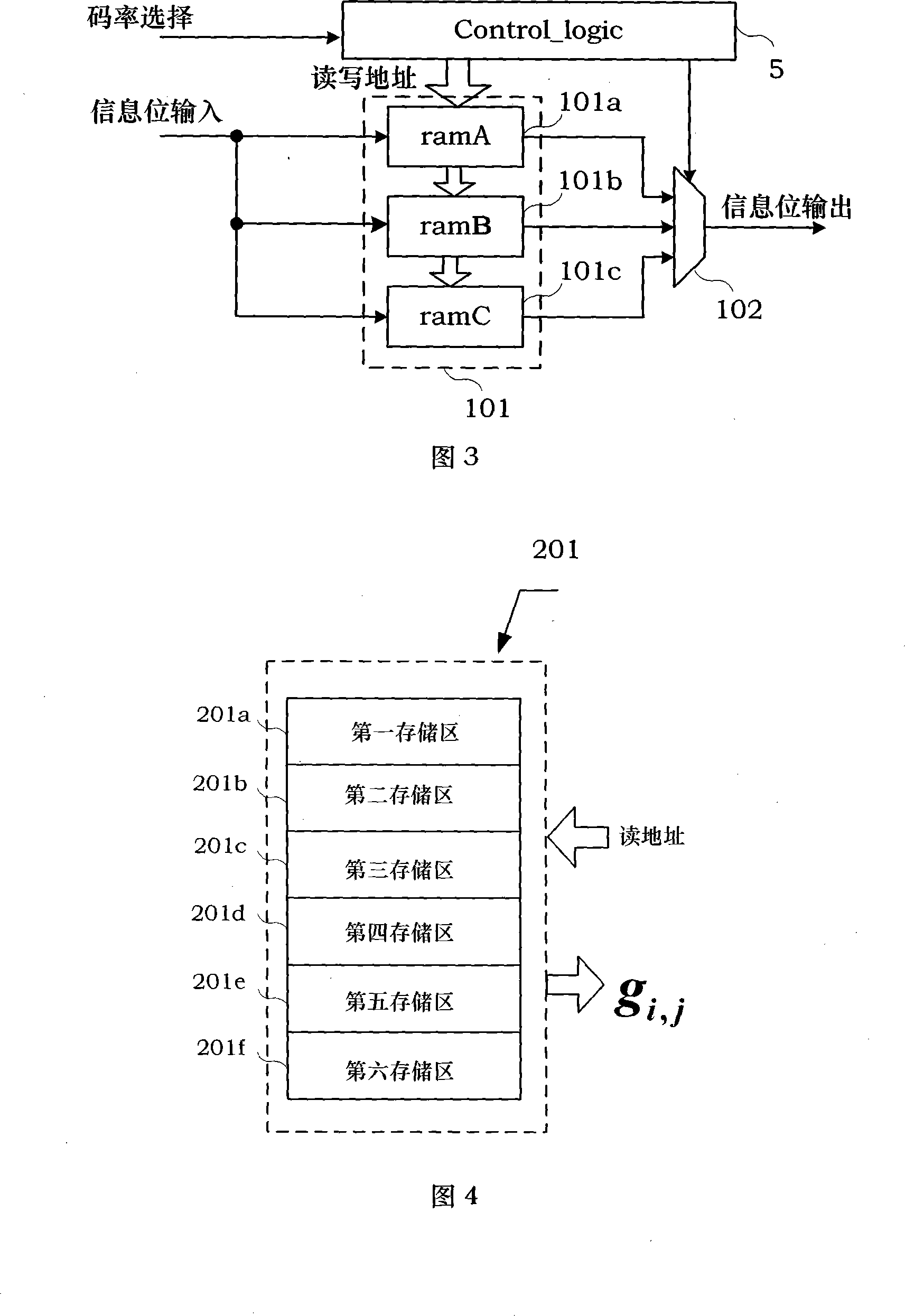

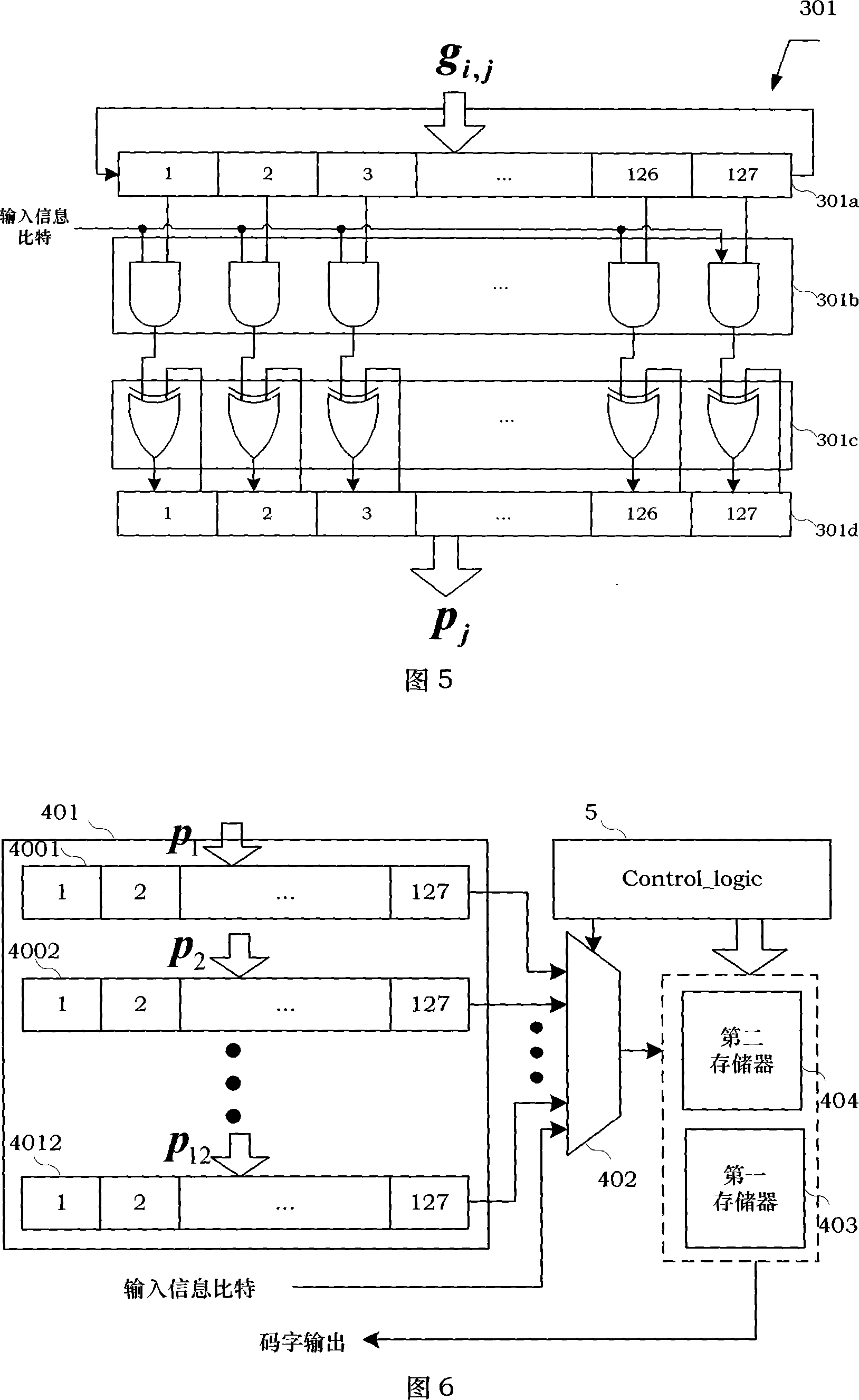

Encoder device and encoding method for LDPC code

Inactive | CN101114834A | Error correction/detection using multiple parity bits; Theoretical computer science; Digital television terrestrial broadcasting

The invention discloses an encoder device and an encoding method for LDPC codes. The generator matrices for different code rates are segmented, and the segmented generator matrices are stored effectively in memory. During encoding, the information to be encoded is buffered in a plurality of small memories, output bit by bit, and multiplied by the pre-stored generator matrix, and the results for each bit are accumulated. When all information bits have been multiplied by all generator matrix blocks, the parity bits are obtained and output in parallel to an output unit, completing the calculation. The encoder device and encoding method can encode LDPC codes of a single code rate or of multiple code rates, which makes them particularly suitable for LDPC encoder designs in the digital terrestrial broadcasting standard.

Owner:BEIHANG UNIV
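
The bit-serial multiply-and-accumulate step can be sketched in Python, with each stored row of the parity part of the generator matrix held as an integer bit vector. This is a minimal sketch, assuming a toy 4×3 parity part rather than a broadcasting-standard LDPC matrix; `segment` is a hypothetical stand-in for the matrix segmentation across small memories.

```python
def serial_parity(info_bits, parity_rows):
    """Bit-serial GF(2) product of information bits with stored parity rows."""
    acc = 0
    for bit, row in zip(info_bits, parity_rows):
        if bit:
            acc ^= row  # adding a row over GF(2) is a bitwise XOR
    return acc


def segmented_parity(info_bits, parity_rows, segment):
    """Same product, processing one hypothetical matrix segment at a time."""
    acc = 0
    for start in range(0, len(info_bits), segment):
        acc ^= serial_parity(info_bits[start:start + segment],
                             parity_rows[start:start + segment])
    return acc
```

Because XOR is associative, accumulating segment by segment gives the same parity bits as one pass over the whole matrix. For example, rows `0b110, 0b101, 0b011, 0b111` with information bits `1, 0, 1, 1` give `0b010` either way.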

Translating cache hints

Active | US20140372699A1 | Facilitate communication; Memory addressing/allocation/relocation; Energy efficient computing; Parallel computing; System identification

Owner:APPLE INC

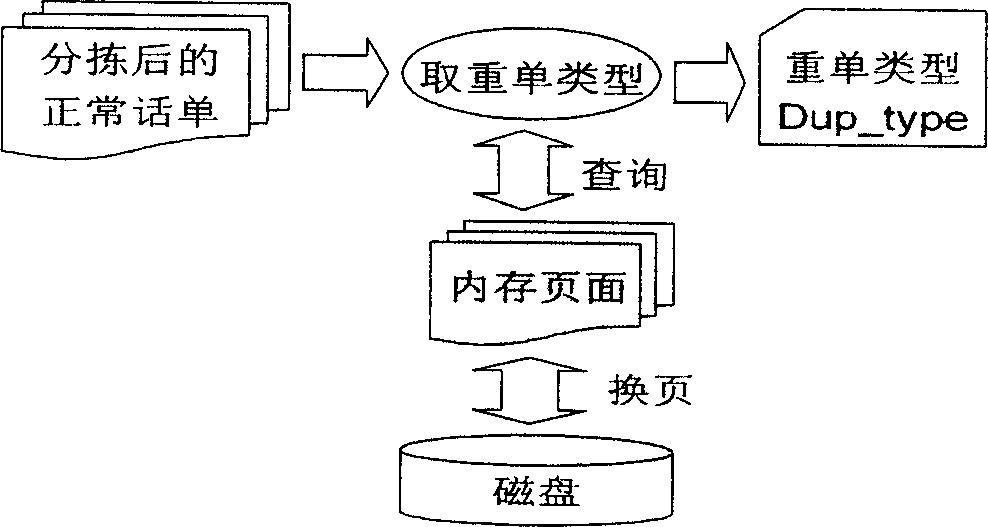

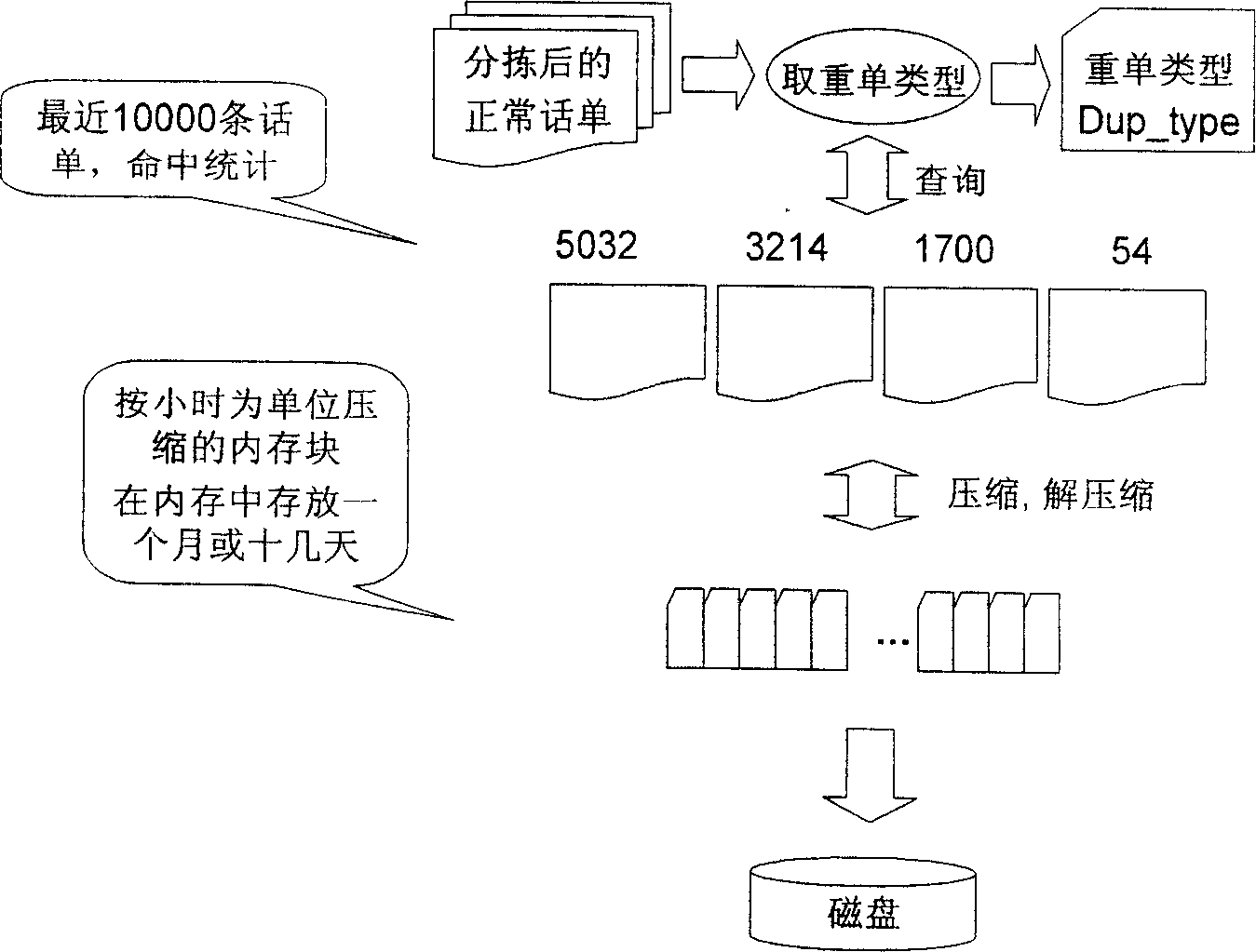

Mass toll-ticket fast cross rearrangement based on memory

Active | CN1897629A | Efficient method; Efficient design; Telephonic communication; Selection arrangements; AVL tree; Theoretical computer science

The invention combines a multi-level memory storage mechanism, a digital-search-tree index based on a balanced binary tree (AVL tree), and a compression technique based on BCD coding and the RLC (run-length coding) algorithm with a time-slice-based cross-rearrangement approach. The multi-level storage mechanism has three levels: the first level serves as the working-set window, the second level holds compressed and rearranged information, and the third level is disk storage. Data exchanged between the first and second levels is encoded and decoded, and data in the first and second levels is stored using memory mapping. The AVL-tree-based digital search tree index is used for the cross rearrangement.

Owner:LINKAGE SYST INTEGRATION
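
The BCD-plus-run-length compression used between the first and second storage levels can be sketched as follows. This is a minimal sketch, assuming numeric ticket fields (such as phone numbers) packed two digits per byte, odd-length fields padded with a hypothetical `0xF` filler nibble, and run-length pairs of `(byte, count)`; the patent's actual record layout is not given in the abstract.

```python
def to_bcd(digits: str) -> bytes:
    """Pack a decimal digit string into BCD, two digits per byte."""
    if not digits.isdigit():
        raise ValueError("BCD packing expects decimal digits only")
    if len(digits) % 2:
        digits += "F"  # hypothetical 0xF filler nibble for odd-length fields
    return bytes((int(digits[i], 16) << 4) | int(digits[i + 1], 16)
                 for i in range(0, len(digits), 2))


def rle_encode(data: bytes) -> list[tuple[int, int]]:
    """Run-length code bytes as (value, run length) pairs."""
    runs: list[tuple[int, int]] = []
    for b in data:
        if runs and runs[-1][0] == b:
            runs[-1] = (b, runs[-1][1] + 1)
        else:
            runs.append((b, 1))
    return runs


def rle_decode(runs) -> bytes:
    """Expand (value, run length) pairs back into bytes."""
    return bytes(b for b, n in runs for _ in range(n))
```

An 11-digit number such as `13800000000` packs into 6 BCD bytes, and its run of three `0x00` bytes collapses into a single run-length pair.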

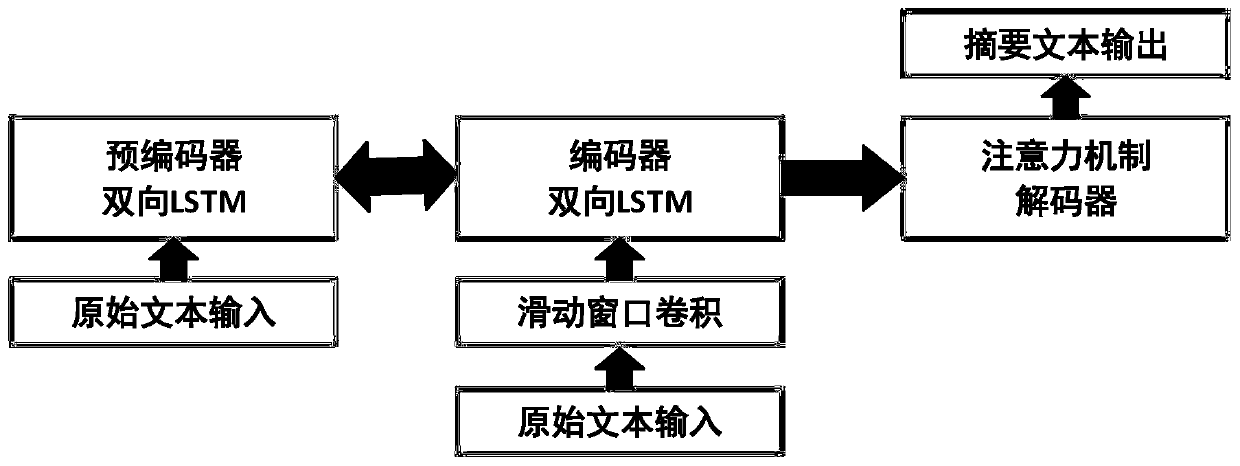

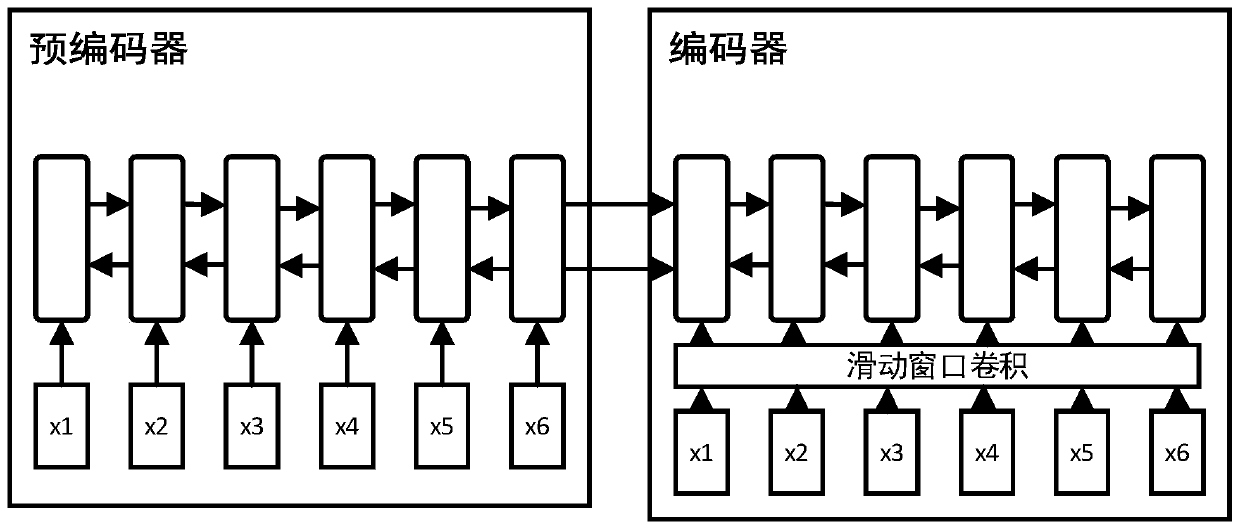

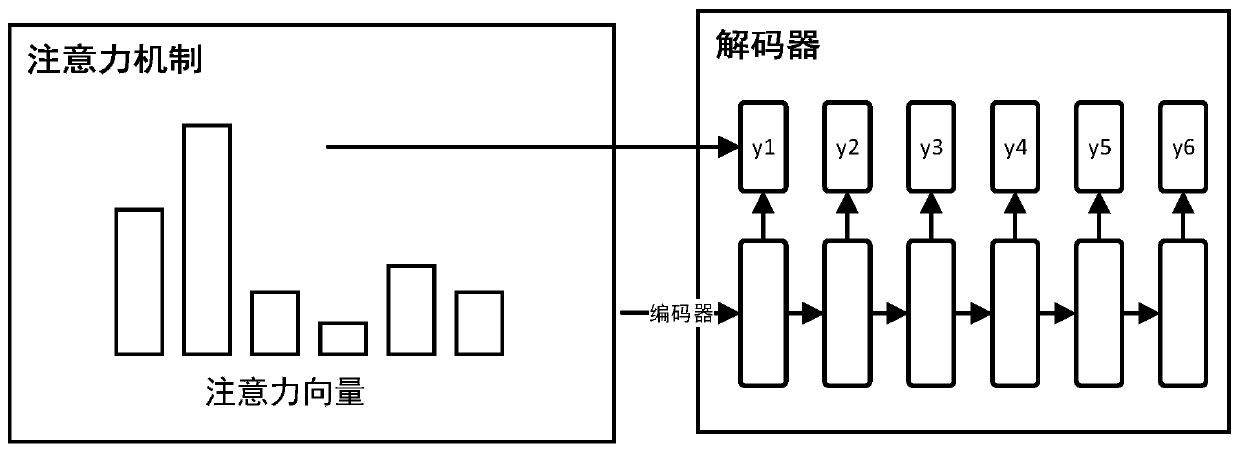

Chinese text abstract generation method based on sequence-to-sequence model

Active | CN111078866A | Difficulty of Simplification; Avoid piecing together duplicated results; Neural architectures; Special data processing applications; Algorithm; Theoretical computer science

The invention discloses a Chinese text abstract generation method based on a sequence-to-sequence model. The method first segments the text into words, pads it to a fixed length, and initializes the word vectors with Gaussian random values; the text is encoded and fed into a bidirectional long short-term memory (LSTM) network, whose final output state is taken as the precoding. A convolutional neural network (CNN) is applied to the word vectors with different window sizes, and its outputs are taken as window word vectors. An encoder is then constructed as a bidirectional LSTM initialized with the precoding and taking the window word vectors from the previous step as input, and a decoder generates text using a unidirectional LSTM combined with an attention mechanism. The method improves the encoder of the traditional sequence-to-sequence model so that the model captures more information from the original text during encoding and finally decodes a better abstract; it also uses finer-grained word vectors, which makes it better suited to Chinese text.

Owner:SOUTH CHINA UNIV OF TECH

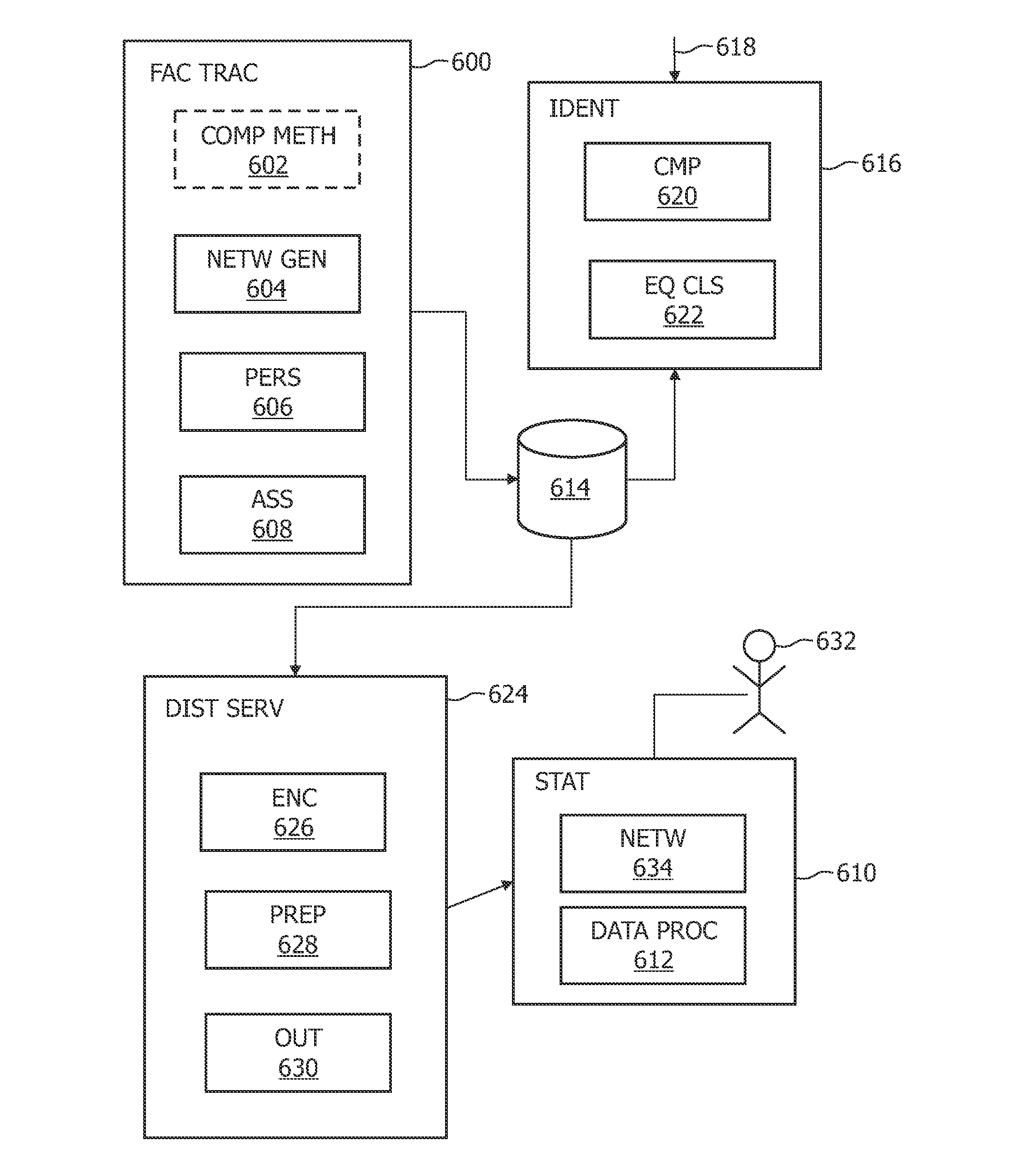

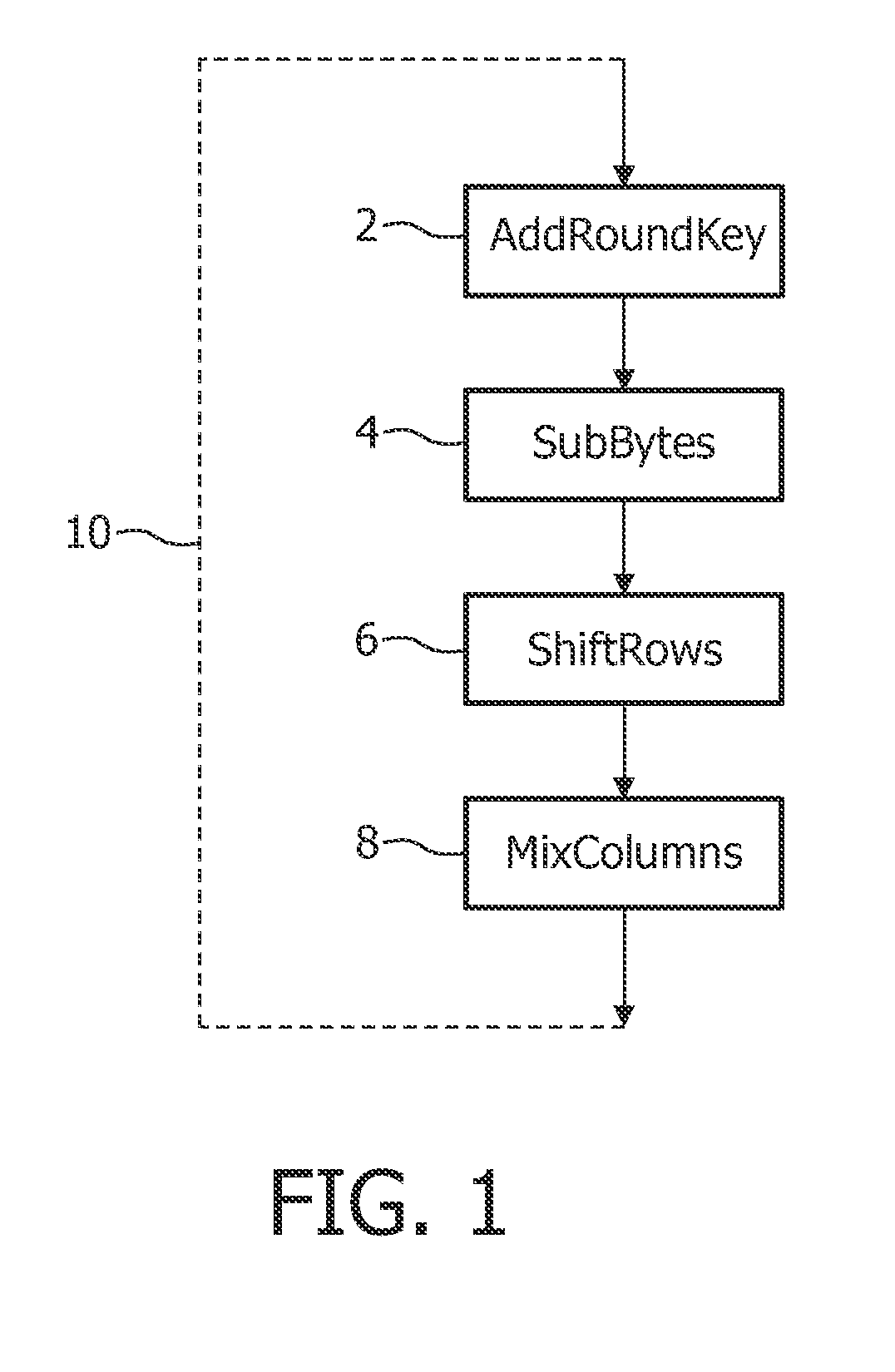

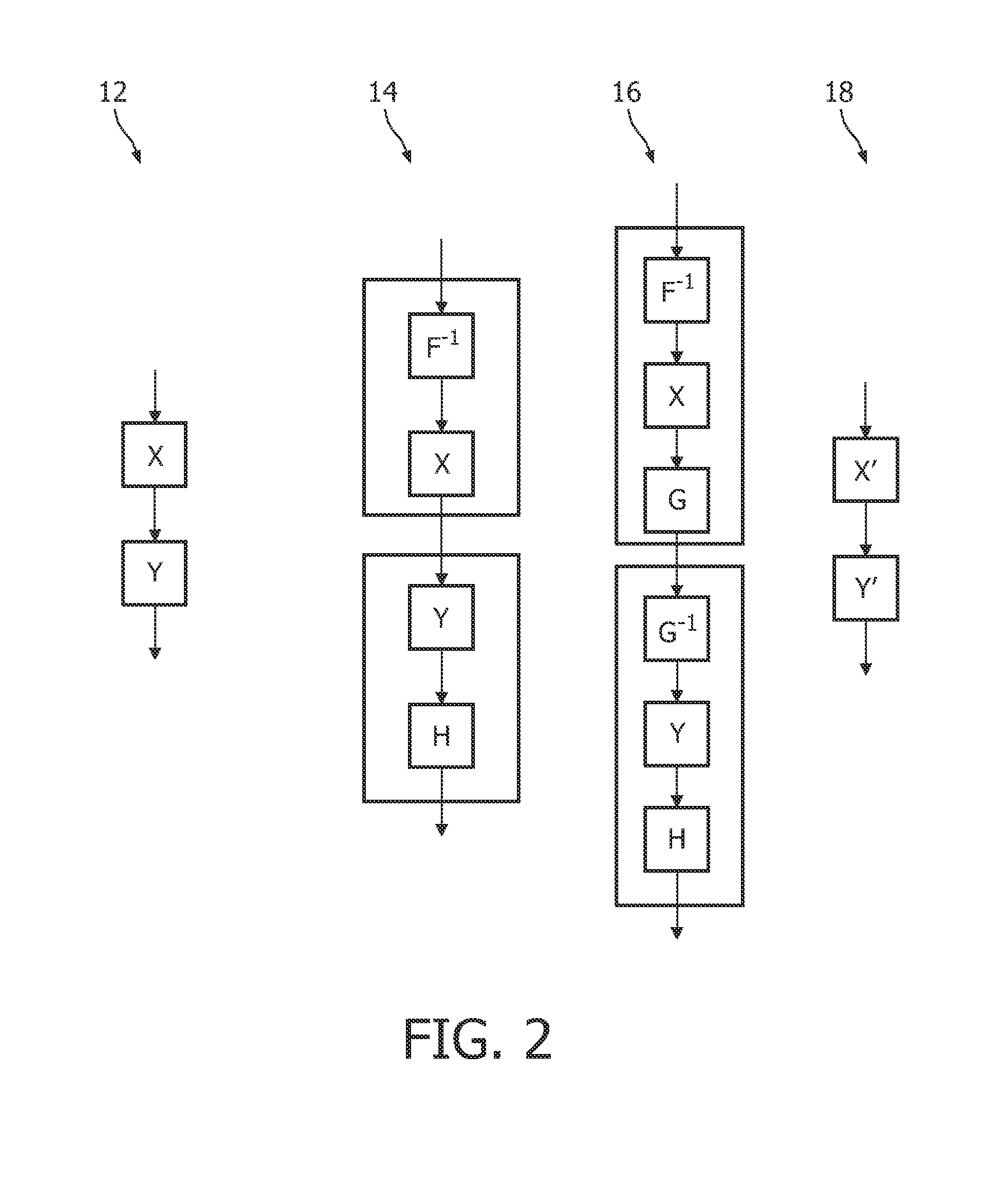

Method and system for tracking or identifying copy of implementation of computational method, and computation system

Inactive | US8306216B2 | Efficient use of; Efficient integration; Handling data according to predetermined rules; Analogue secrecy/subscription systems; Algorithm; Theoretical computer science

A method and system are provided for tracing copies of an implementation of a computational method. Different versions of a network of look-up tables representing steps of the computational method are generated and stored in a memory, each version being unique, so that the output encodings and/or input decodings of the white-box implementations of the computational method differ between versions. The network is formed by using an output value of a first look-up table as an input value of a second look-up table. The different versions are generated by changing at least one value in the network, while the end results of each version for a relevant domain of input values remain substantially the same. A method and system are also provided for performing the computation for a user with that user's version stored in the memory, and/or for identifying a copy of an implementation of the computational method.

Owner:IRDETO ACCESS
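
The versioning idea can be sketched with a two-table network in which table 1's output feeds table 2. This is a minimal sketch, assuming each version applies a seeded random permutation as the output encoding of table 1 and its inverse as the input decoding of table 2; the seed-per-user scheme and the helper names `make_version` and `identify` are hypothetical, not Irdeto's construction.

```python
import random


def make_version(t1, t2, seed):
    """Return a re-encoded copy (t1v, t2v) of the two-table network t2[t1[x]]."""
    rng = random.Random(seed)
    perm = list(range(len(t2)))
    rng.shuffle(perm)  # version-specific output encoding of table 1
    inv = [0] * len(perm)
    for x, y in enumerate(perm):
        inv[y] = x
    t1v = [perm[v] for v in t1]                   # table 1 now emits encoded values
    t2v = [t2[inv[u]] for u in range(len(perm))]  # table 2 decodes before its lookup
    return t1v, t2v


def evaluate(t1, t2, x):
    """The output of the first table is the input of the second."""
    return t2[t1[x]]


def identify(leaked_t1, t1, t2, seeds):
    """Return the candidate seeds whose version has exactly this first table."""
    return [s for s in seeds if make_version(t1, t2, s)[0] == leaked_t1]
```

Every version computes the same end result, but its internal tables differ. A leaked first table can therefore be matched back to the seed, and hence to the user, that produced it.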

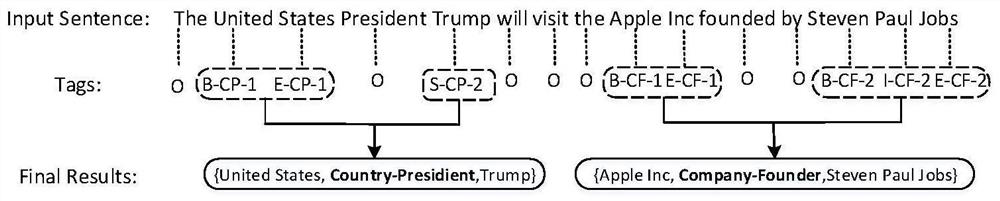

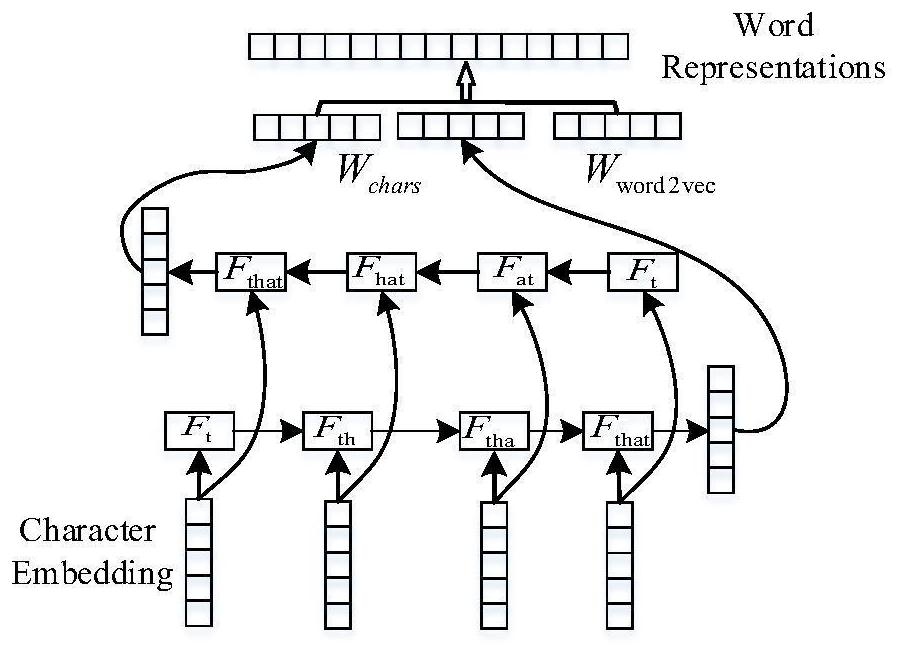

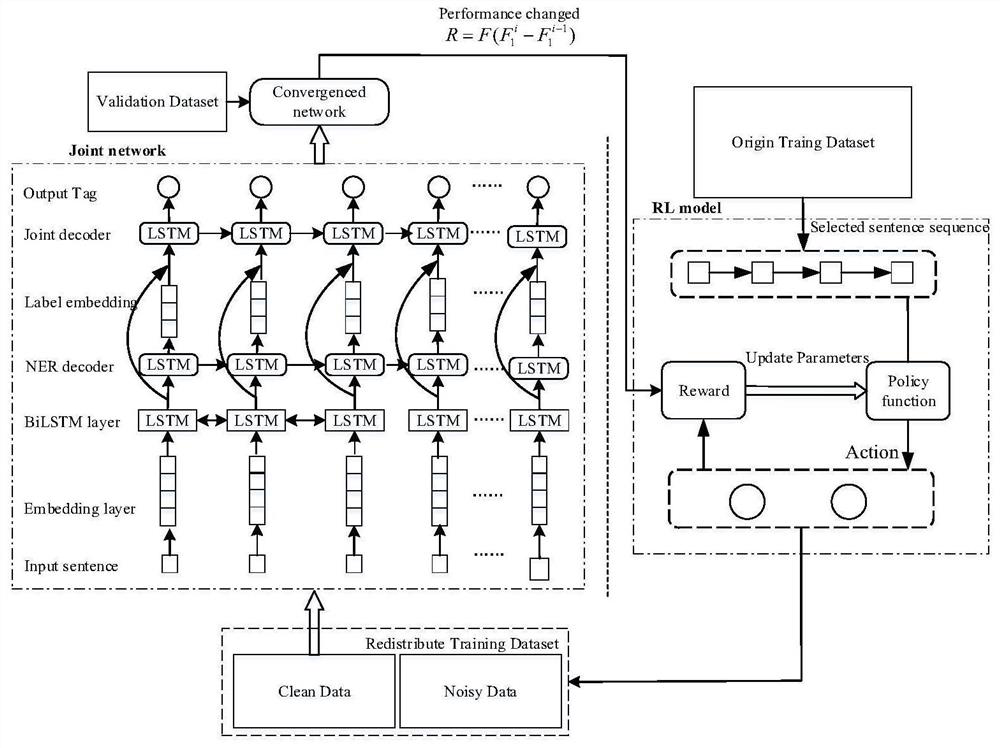

Entity and relationship joint extraction method based on reinforcement learning

The invention discloses a joint information extraction method that jointly extracts entity and relationship information from input text. The method consists of a joint extraction module and a reinforcement learning module. The joint extraction module uses an end-to-end design comprising a word embedding layer, an encoding layer, an entity identification layer and a joint information extraction layer. The word embedding layer combines GloVe pre-trained word embeddings with character-granularity word embedding representations. The encoding layer encodes the input text with a bidirectional long short-term memory network, and the entity identification layer and the joint information extraction layer decode with a unidirectional long short-term memory network. The reinforcement learning module removes noise from the data set, and its policy network is a convolutional neural network. The policy network undergoes a pre-training process and a re-training process: in pre-training, a pre-training data set is used for supervised training of the policy network; in re-training, the policy network is updated using rewards obtained from the joint extraction network, which is an unsupervised learning process.

Owner:SICHUAN UNIV

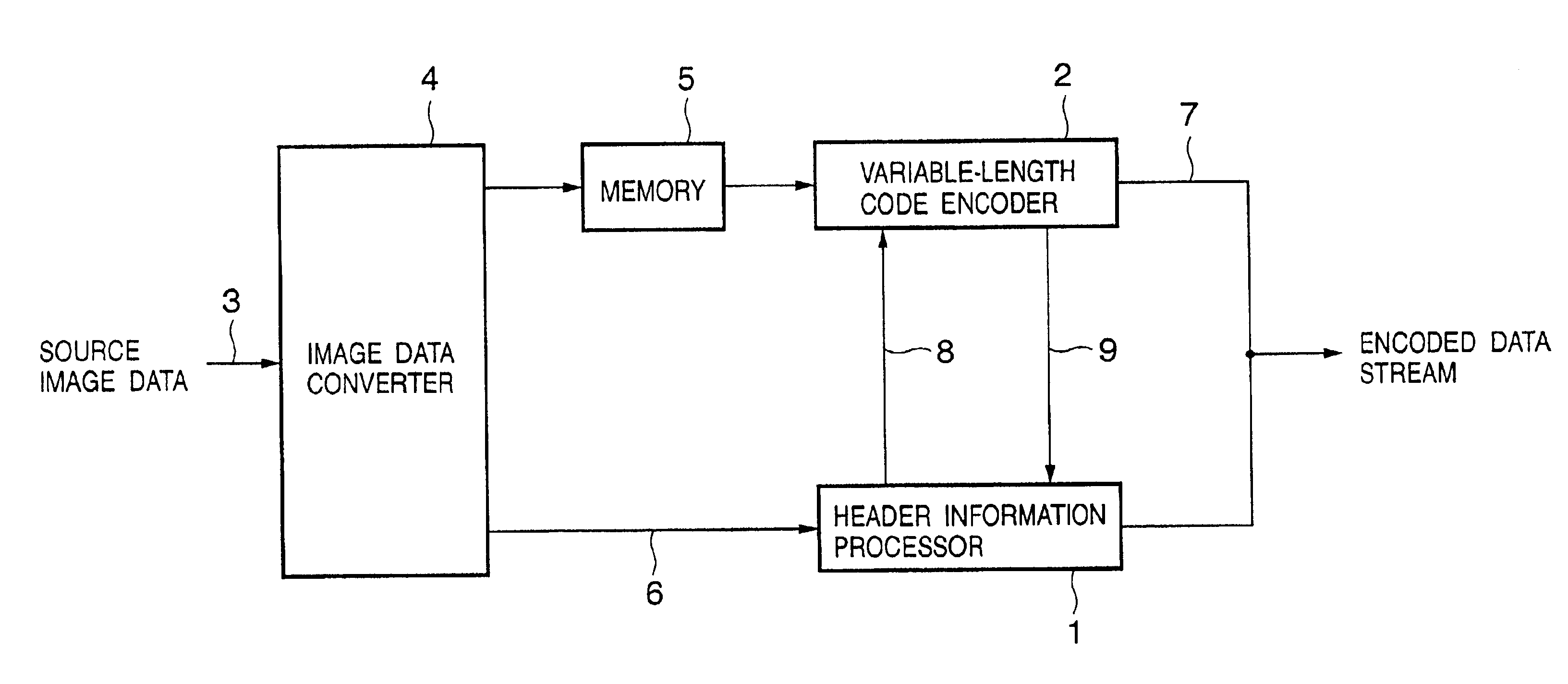

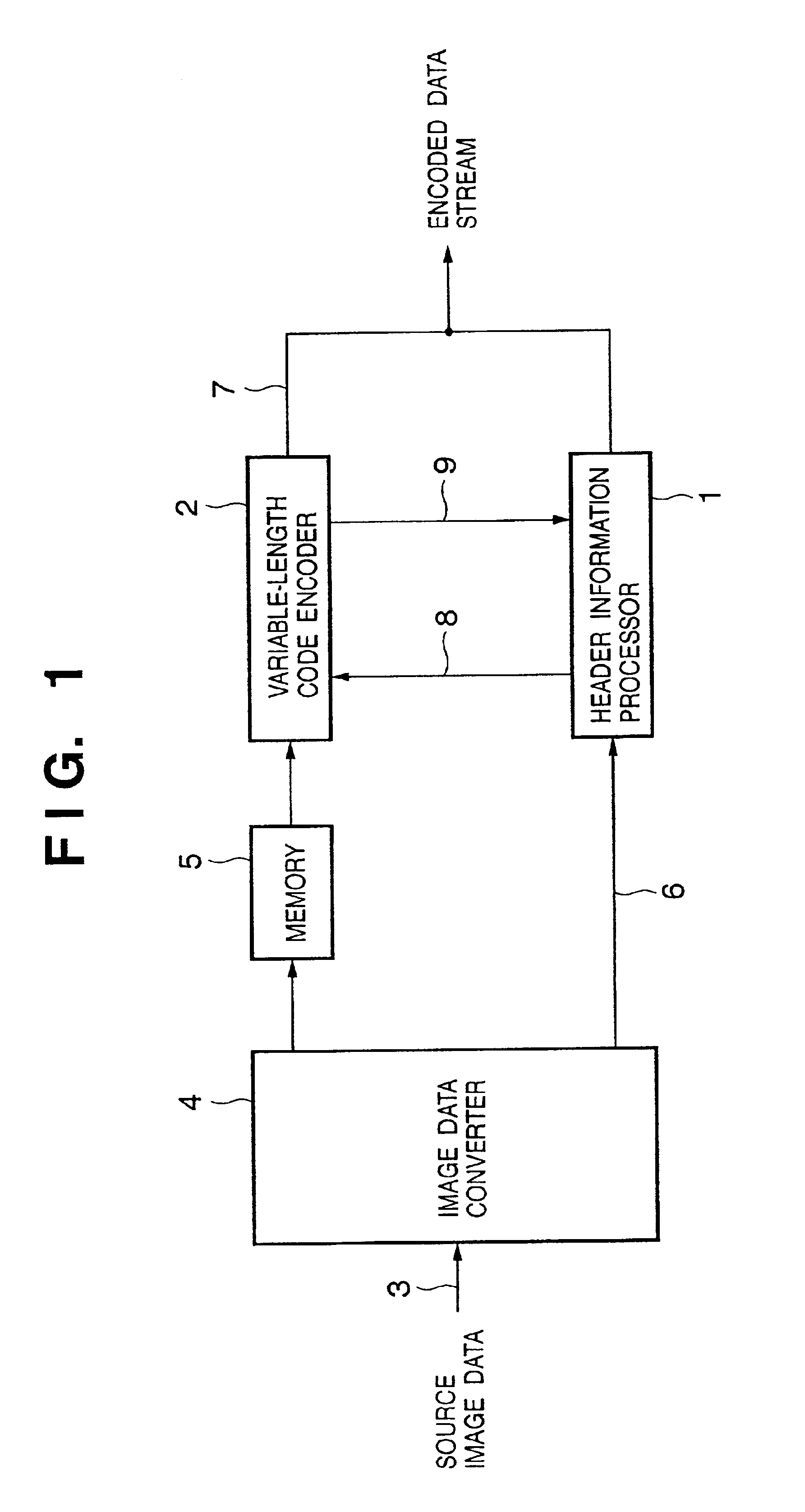

Image processing method, apparatus, and storage medium

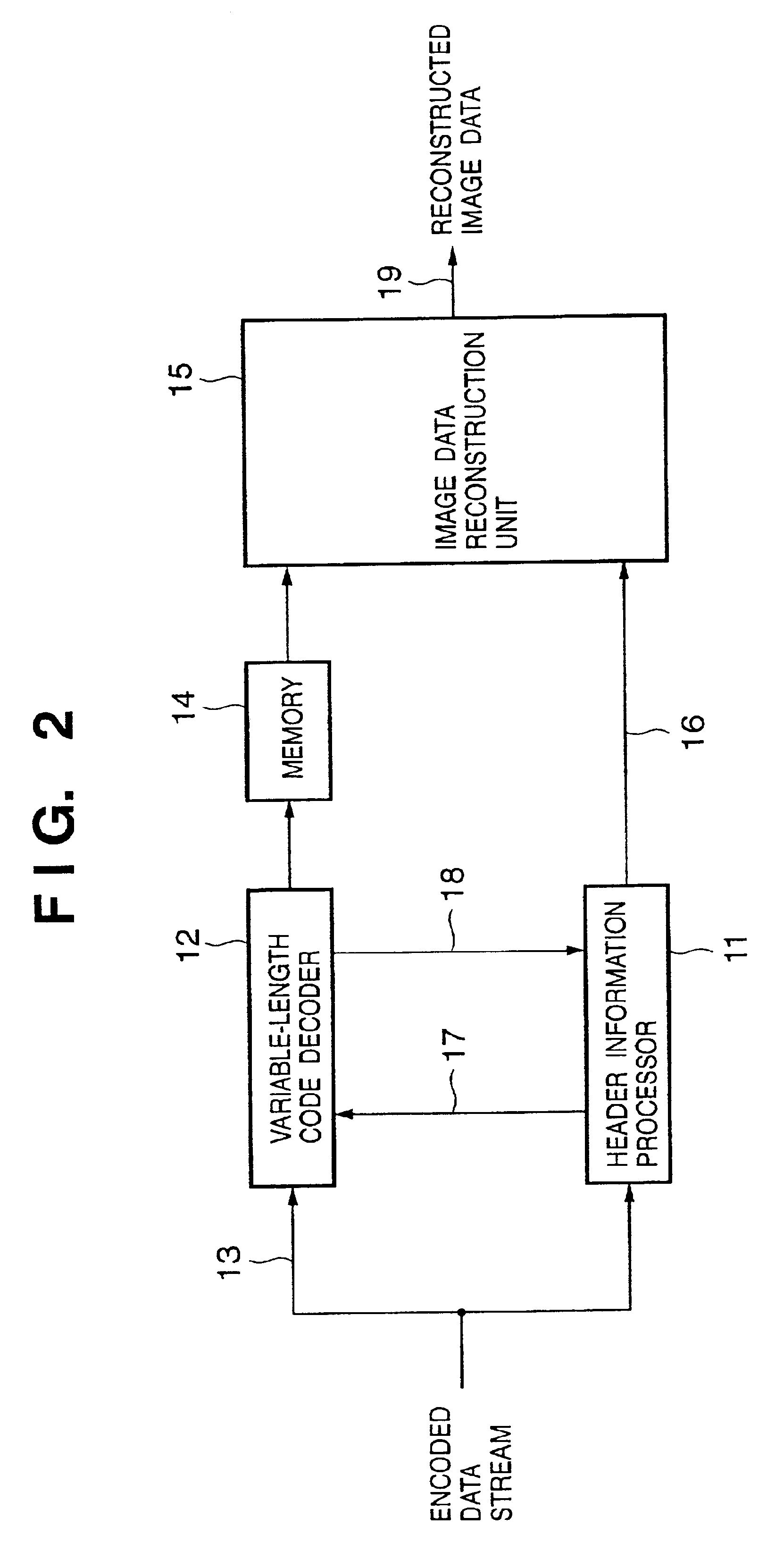

Active | US6947602B2 | Prolonging total processing time; Minimize power consumption; Character and pattern recognition; Television systems; Variable-length code; Imaging processing

Depending on the number of execution steps, the method adaptively selects whether a header information processor enters a program-inactive state as soon as it issues an operation start command for the encoding of a predetermined processing unit to a variable-length code encoder, or only after it completes the execution steps it has to process. A single memory is shared by the header information processor and the variable-length code encoder, and an address input permission means grants one of them at a time permission to input an address to the memory. The memory serves as a work area for the header information processor and as the storage area for a variable-length code table looked up by the variable-length code encoder.

Owner:CANON KK

Image encoding apparatus and method, computer program, and computer-readable storage medium

Inactive | US7454070B2 | Suppress generation of block noise; Character and pattern recognition; Digital video signal modification; Sequence control; Lossless coding

According to this invention, encoded data of a target data amount is generated from a single image input operation while both lossless and lossy encoding are used. For this purpose, an encoding sequence control unit controls a first encoding unit for lossy (JPEG) encoding, a second encoding unit for lossless (JPEG-LS) encoding, first and second memories, and a re-encoding unit, and stores in the first memory encoded data of the target data amount or less that contains both losslessly and lossily encoded data. Among the encoded data stored in the first memory, a correction unit converts isolated encoded data, whose encoding type differs from that of its neighbors, to the type of the neighboring encoded data, and outputs the corrected data.

Owner:CANON KK

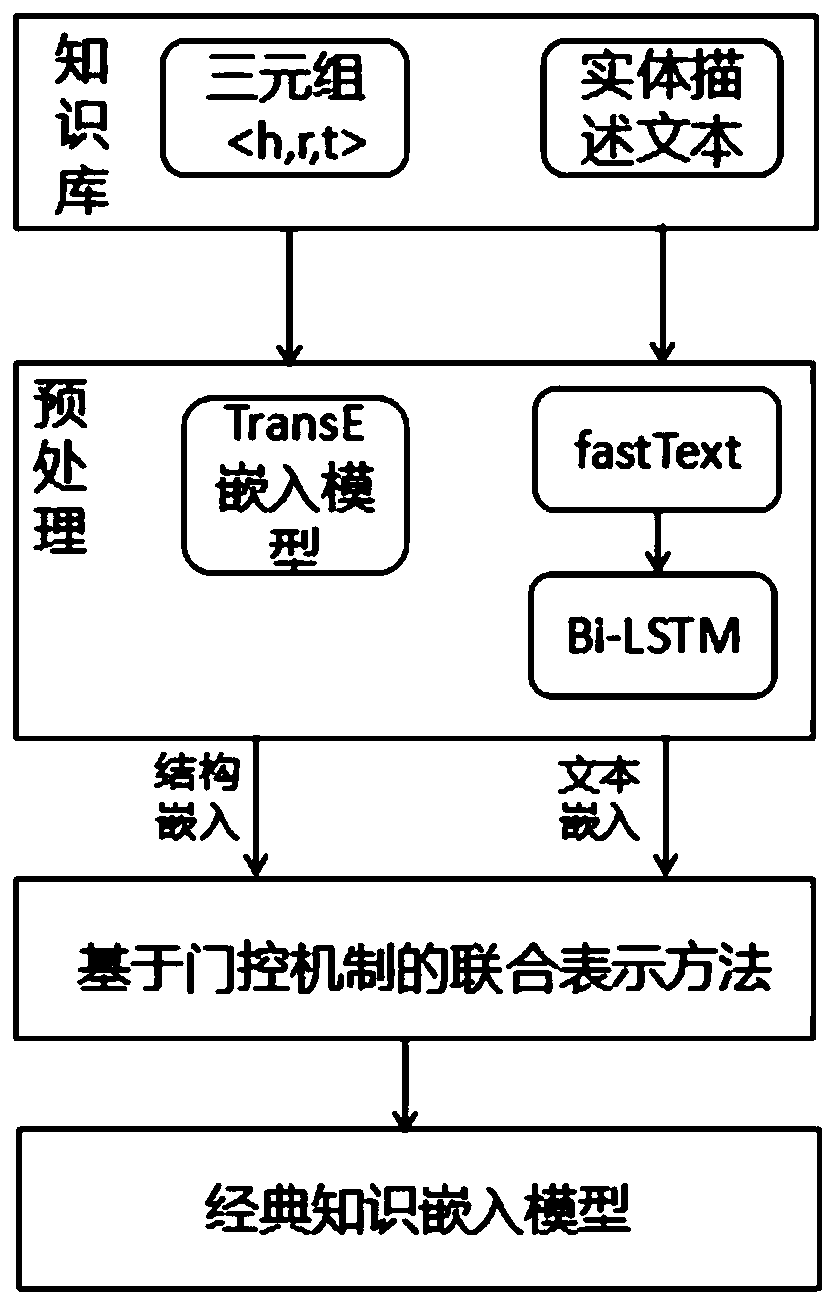

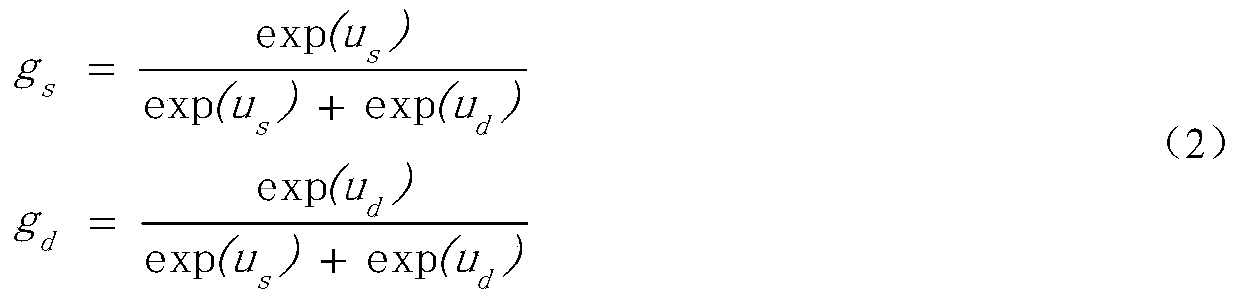

Knowledge representation method based on combination of text embedding and structure embedding

Active | CN110851620A | Improve the ability to distinguish; Easy to complete; Natural language data processing; Energy efficient computing; Structural representation; Algorithm

The invention discloses a knowledge representation method that combines text embedding with structure embedding. The method comprises the following steps: (1) preprocessing the entity description texts in a knowledge base and extracting subject terms from each entity description; (2) encoding each subject term into a term vector with fastText, so that each entity description is expressed as a multi-dimensional term vector; (3) feeding the processed multi-dimensional term vectors into a bidirectional long short-term memory network with an attention mechanism (A-BiLSTM) or a long short-term memory network with an attention mechanism (A-LSTM) for encoding, which reduces the multi-dimensional vector of each entity to a one-dimensional vector (the text representation), and training an existing STransE model to obtain the structural representation of each entity; (4) introducing a gating mechanism and proposing four methods of combining text embedding and structure embedding to obtain the final entity embedding matrix; and (5) feeding the entity embedding matrix into the ConvKB, TransH, TransR, DistMult and HolE knowledge graph embedding models to improve knowledge completion.

Owner:TIANJIN UNIV

Semiconductor device

Inactive | US20050270818A1 | Improve usability; Reduce installation costs; Digital storage; Transmission; Computer hardware; Value set

For each region of the memory information (entry) and of the input information (comparison information or search key), either quaternary information, consisting of a pair of a minimum value and a difference, or ternary information, consisting of a pair of data and a mask, is used as the I/O signal. Two types of encoding and decoding circuits are provided, one for each type of information, and one of them is activated according to the values set in registers that designate the format of each region of the entry and the search key. By selecting the desired register from the plurality of registers in response to external command signals and address signals, encoding and decoding are performed in accordance with the information being processed.

Owner:HITACHI LTD
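
One way to relate the two formats is to expand a (minimum, difference) range into ternary (data, mask) entries. The sketch below uses the standard range-to-prefix expansion, which is a swapped-in, well-known technique and not necessarily the conversion performed by Hitachi's encoding circuits. A mask bit of 1 means "don't care", and `quaternary_to_ternary` is a hypothetical helper name.

```python
def range_to_ternary(lo, hi, width):
    """Cover [lo, hi] with aligned power-of-two blocks, one (data, mask) entry each.
    A 1 bit in mask means "don't care"."""
    if not 0 <= lo <= hi < (1 << width):
        raise ValueError("range must lie within the key width")
    entries = []
    while lo <= hi:
        # Largest block aligned at lo (lowest set bit of lo, or the whole space at 0).
        size = lo & -lo if lo else 1 << width
        while lo + size - 1 > hi:
            size >>= 1  # shrink until the block fits inside the range
        entries.append((lo, size - 1))
        lo += size
    return entries


def ternary_match(key, data, mask):
    """A key matches when it agrees with data on every bit that is not masked."""
    return (key & ~mask) == (data & ~mask)


def quaternary_to_ternary(minimum, difference, width):
    """Hypothetical bridge from a (minimum, difference) pair to ternary entries."""
    return range_to_ternary(minimum, minimum + difference, width)
```

For a 4-bit key, the pair (minimum 1, difference 5) describes the range 1 to 6 and expands into four ternary entries (`0001`, `001x`, `010x`, `0110`). This illustrates why storing a range natively as a minimum and difference can save entries.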

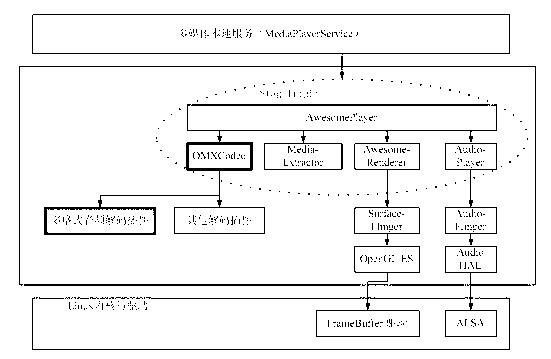

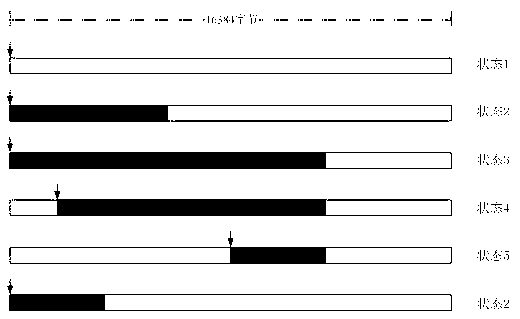

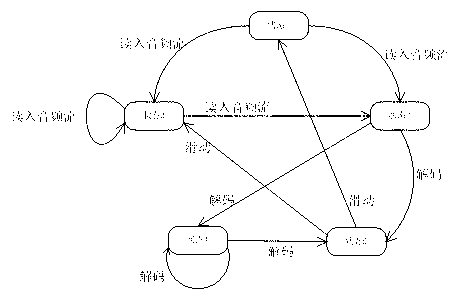

Audio decoding system and method adapted to android stagefright multimedia framework

Active | CN102857833A | Audio Decoding Complexity No.; Improve portability; Selective content distribution; Multimedia framework; Ambient data

The invention discloses an audio decoding system and method adapted to the Android Stagefright multimedia framework. The method comprises the following steps: saving the unpacking (demuxing) component passed in by AwesomePlayer to complete registration of the audio decoders; acquiring the media metadata of the audio and saving it locally; acquiring a context environment data item and allocating memory as the decoding output buffer; according to the context environment, opening and initializing the audio decoder that matches the audio stream format, and allocating memory as the decoding input buffer; reading encoded audio data into the input buffer through the unpacking component and decoding it; updating the sampling rate in the local media metadata so that it serves as the sampling rate of the encoded audio data; and, from the local media metadata, calculating a time stamp for the decoded output data, saving it to the output buffer, and returning the raw audio data with its time stamp from the output buffer. The system and method can extend the audio formats supported by the Android system.

Owner:深圳市佳创软件有限公司 (Shenzhen Jiachuang Software Co., Ltd.)

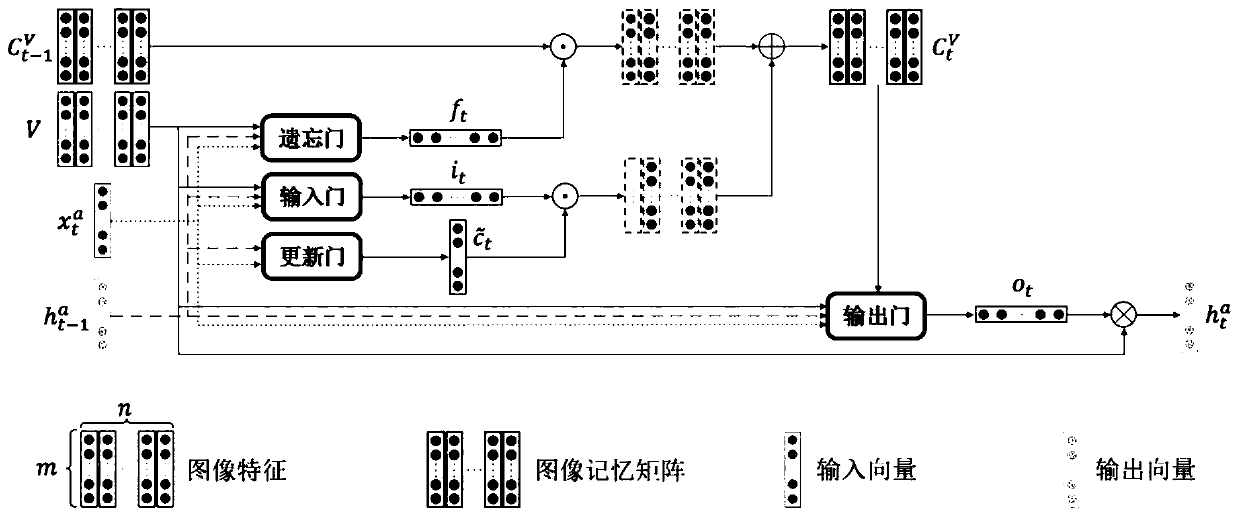

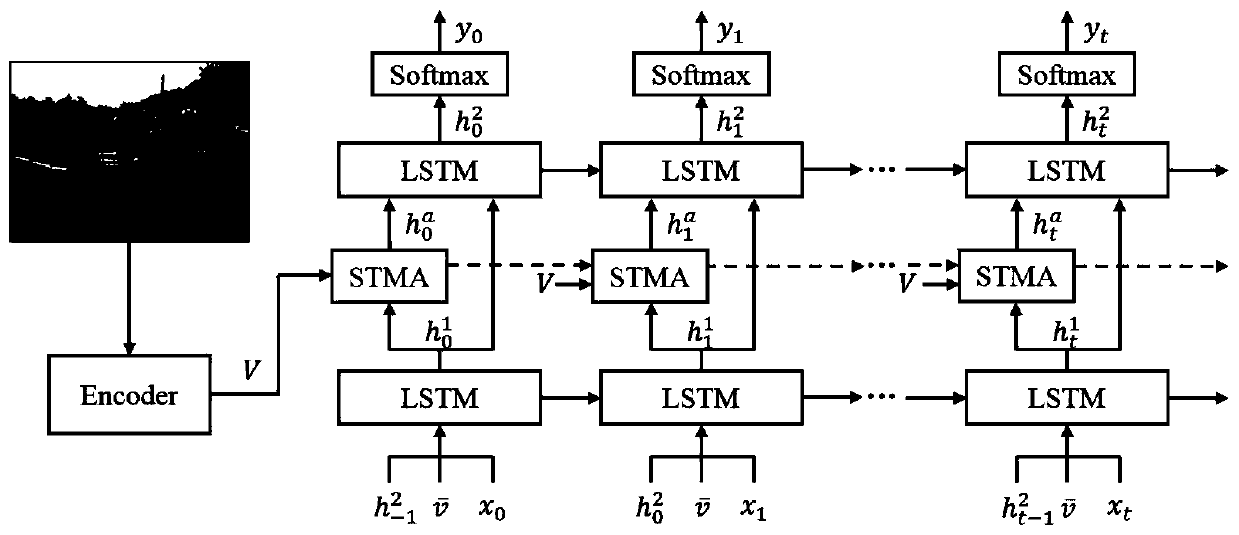

Image description method based on space-time memory attention

Active | CN111144553A | The location of the image focus is accurate; Accurate Image Description Results; Internal combustion piston engines; Neural architectures; Attention model; Data set

The invention discloses an image description method based on spatio-temporal memory attention (STMA). The method comprises: (1) acquiring and preprocessing the MS COCO image description data set; (2) constructing and pre-training an encoder model and encoding the MS COCO image data I to obtain an image feature V; (3) constructing a decoder and decoding the image feature V; and (4) training the model. The model incorporates the gating and memory of the long short-term memory network into the original attention model. Compared with a traditional attention model, the spatio-temporal memory attention model adds a memory matrix that dynamically stores past attention features and continuously updates itself under the control of an input gate, an output gate and a forget gate, finally outputting the relevant attention features across the temporal sequence. Based on the STMA model, the method locates image attention more accurately and produces more accurate image descriptions.

Owner:BEIJING UNIV OF TECH

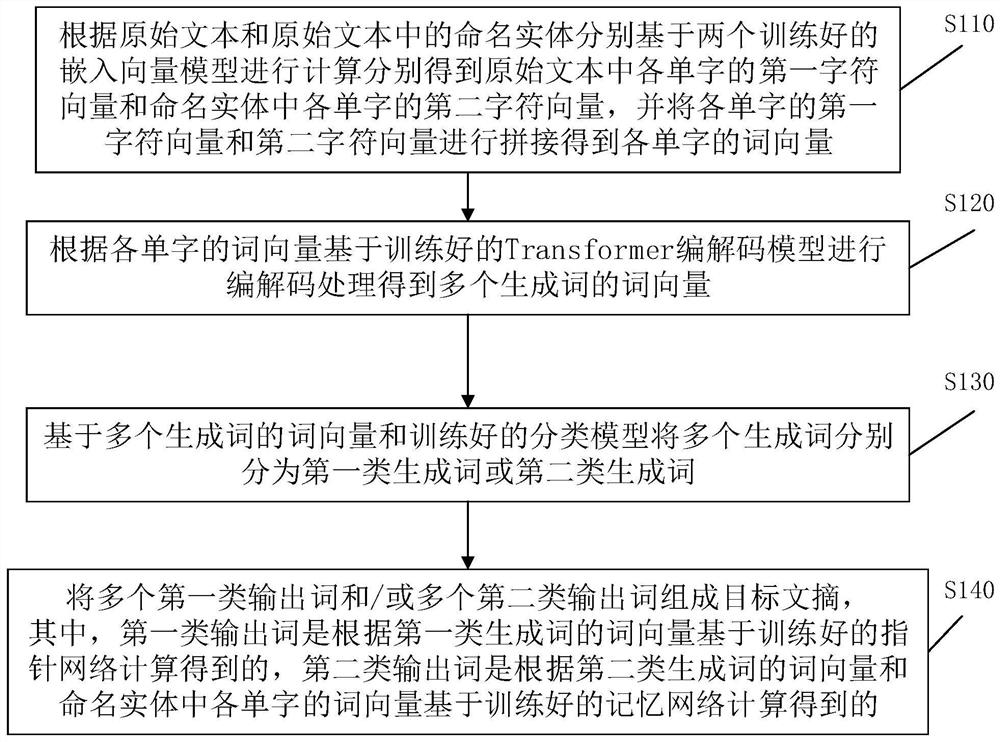

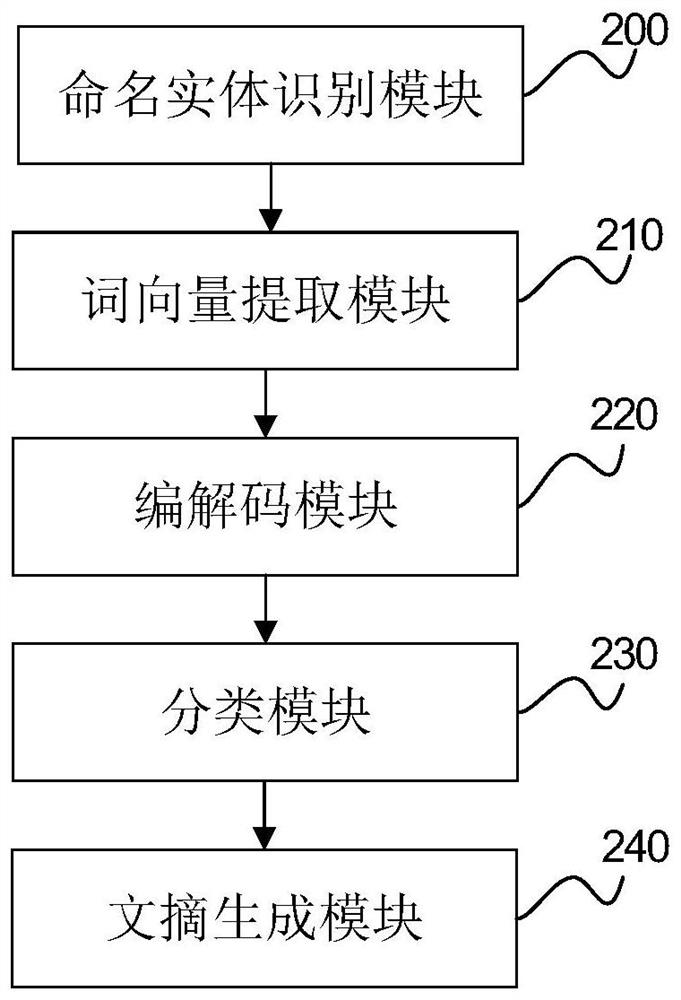

Automatic abstract generation method and device, electronic equipment and storage medium

Pending | CN112183083A | Enhanced Feature Representation; Solve problems that cannot be generated; Natural language data processing; Special data processing applications; Algorithm; Engineering

The invention discloses an automatic abstract generation method and apparatus, an electronic device and a storage medium. Using two trained embedding vector models, the method computes a first character vector and a second character vector for each single character from the original text and the named entities in it, and concatenates them into a word vector for each character. The word vectors of the characters are encoded and decoded by a trained Transformer encoder-decoder model to obtain word vectors for multiple generated words, which strengthens their feature representation, and each generated word is classified as a first-type or a second-type generated word. The first-type and second-type generated words are then processed by a trained pointer network and a trained memory network, respectively, to obtain first-type and second-type output words, and a target abstract is formed from a plurality of first-type and/or second-type output words. This effectively solves the problem that remote named entities cannot be generated.

Owner:杭州远传新业科技股份有限公司 (Hangzhou Yuanchuan Xinye Technology Co., Ltd.)

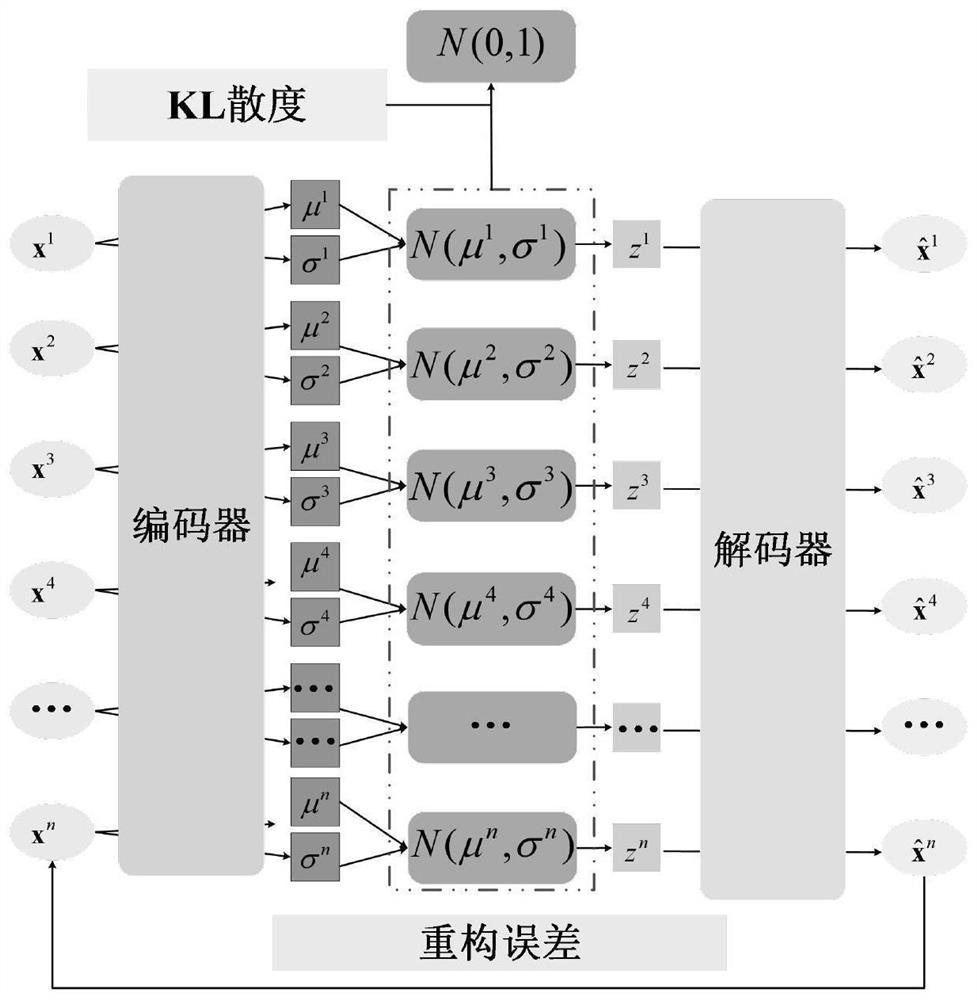

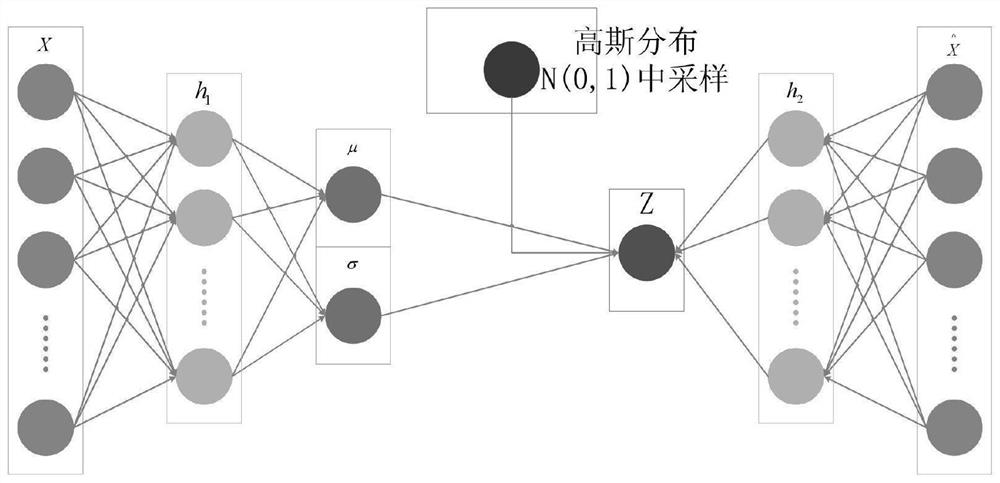

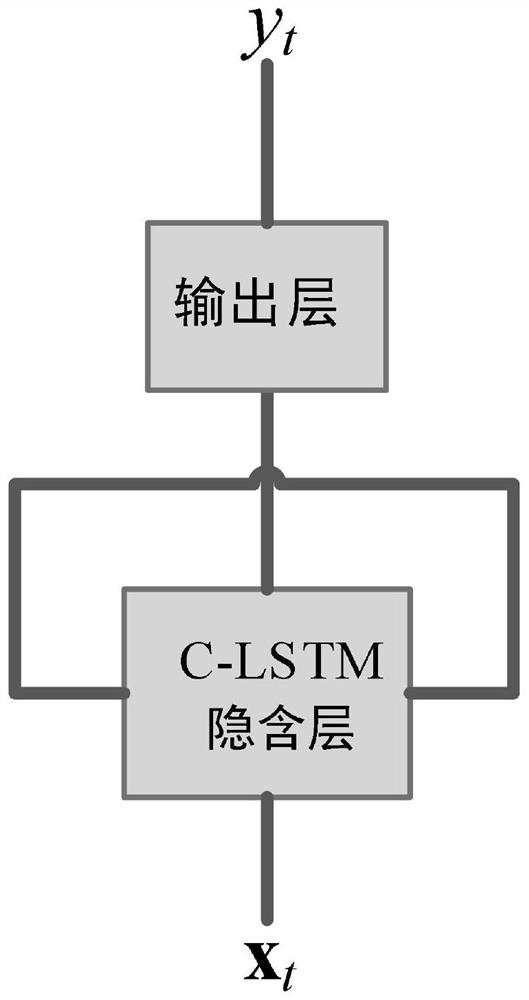

Gear residual life prediction method based on cocktail long-short-term memory neural network

The invention relates to a gear residual life prediction method based on a cocktail long short-term memory neural network, in the technical field of automation. The method comprises the following steps: S1, constructing a gear health index based on variational auto-encoding; S2, defining a cocktail long short-term memory network (C-LSTM); and S3, predicting the residual life of the gear based on the health index constructed by the VAE and the C-LSTM. The method first uses a variational auto-encoder (VAE) to form health indexes that accurately reflect the degradation trend of the gear's health state, then predicts unknown health indexes step by step with the proposed cocktail LSTM network; the predicted remaining useful life (RUL) is obtained when a set threshold is reached.

Owner:CHONGQING UNIV

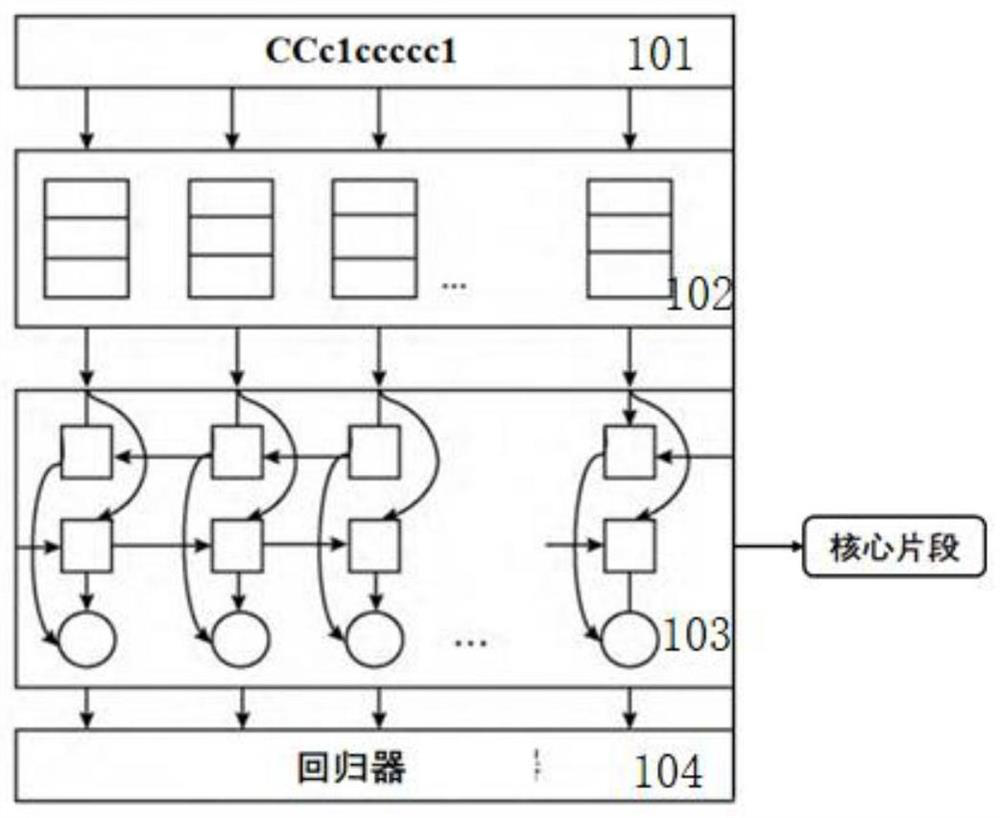

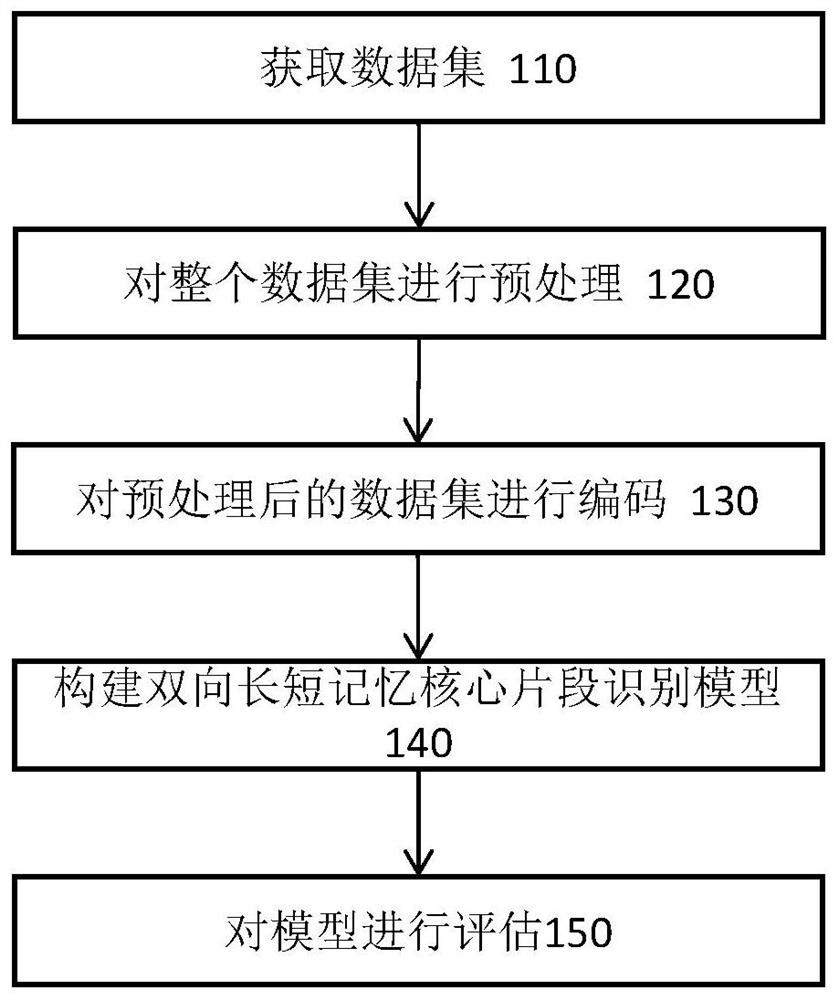

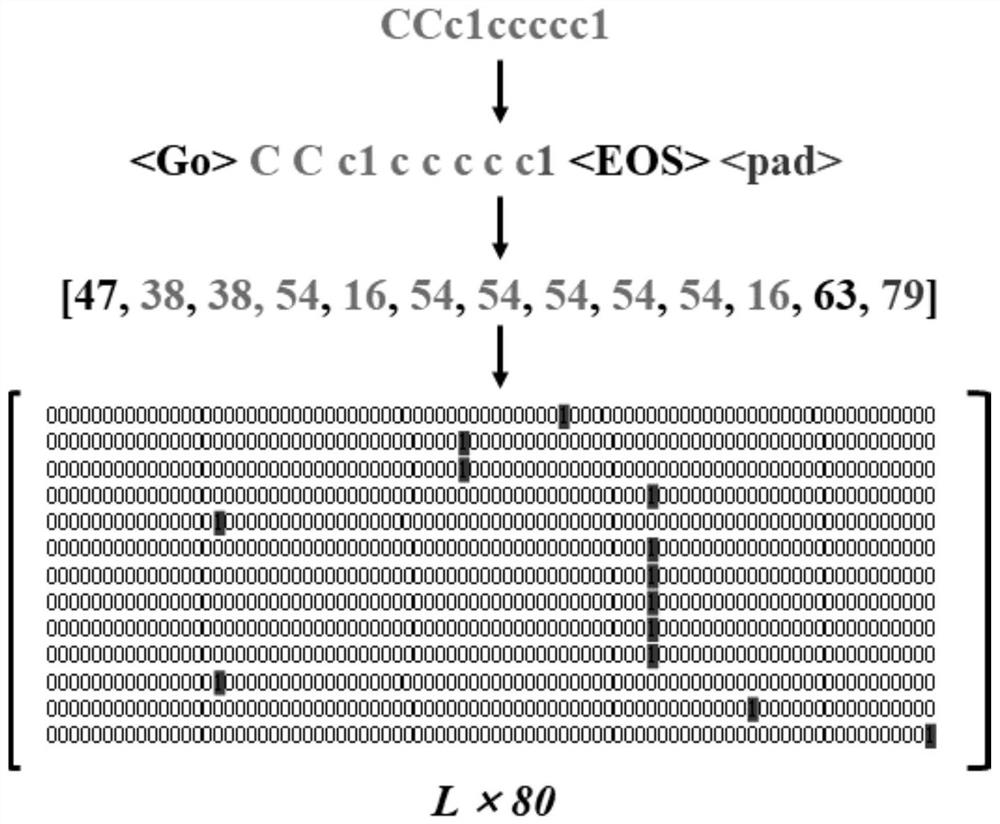

Drug small molecule activity prediction method based on bidirectional long-short memory model

The invention discloses a drug small-molecule activity prediction method based on a bidirectional long short-term memory model. The method comprises: obtaining a data set; preprocessing the data set by expressing all compound molecules in SMILES, standardizing the SMILES expressions of all molecules, unifying the encoding modes and order of atoms, bonds and connection relationships in those expressions, and deduplicating by each molecule's InChIKey; encoding the preprocessed data set, in which a single element, a single number, a single symbol, or an entire square-bracket expression of the SMILES sequence is treated as one token and one-hot encoded, each token carries chemical meaning and directionality, and any combination of tokens complies with chemical rules; constructing a bidirectional long short-term memory core fragment identification model; inputting the encoded data into this model to obtain a hidden state moment; and evaluating the model.

Owner:MINDRANK AI LTD
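The tokenization rule in the abstract (each element symbol, digit and symbol is a token, and a whole square-bracketed expression is a single token) can be sketched with a regular expression followed by one-hot encoding. The pattern, function names and vocabulary construction below are illustrative assumptions, not the patent's implementation; SMILES standardization and InChIKey deduplication are not shown.

```python
import re
from typing import Dict, List, Sequence

# Assumed token pattern: bracket expressions first, then two-letter
# organic-subset elements, two-digit ring closures, single letters,
# single digits, and bond/branch/charge symbols.
SMILES_TOKEN = re.compile(r"\[[^\]]+\]|Br|Cl|%\d{2}|[A-Za-z]|\d|[=#\-+()/\\.@:~*$]")


def tokenize(smiles: str) -> List[str]:
    """Split a SMILES string into tokens; fail if any character is skipped."""
    tokens = SMILES_TOKEN.findall(smiles)
    if "".join(tokens) != smiles:
        raise ValueError(f"untokenizable characters in {smiles!r}")
    return tokens


def build_vocab(token_lists: Sequence[Sequence[str]]) -> Dict[str, int]:
    """Map every distinct token to a column index (sorted for determinism)."""
    symbols = sorted({t for toks in token_lists for t in toks})
    return {t: i for i, t in enumerate(symbols)}


def one_hot(tokens: Sequence[str], vocab: Dict[str, int]) -> List[List[int]]:
    """One row per token, with a single 1 in that token's column."""
    rows = []
    for t in tokens:
        row = [0] * len(vocab)
        row[vocab[t]] = 1
        rows.append(row)
    return rows
```

`tokenize("CC(=O)Cl")` yields `['C', 'C', '(', '=', 'O', ')', 'Cl']`. Two-letter elements (`Br`, `Cl`) are tried before single letters so they are not split, and a bracket atom such as `[NH3+]` stays one token.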

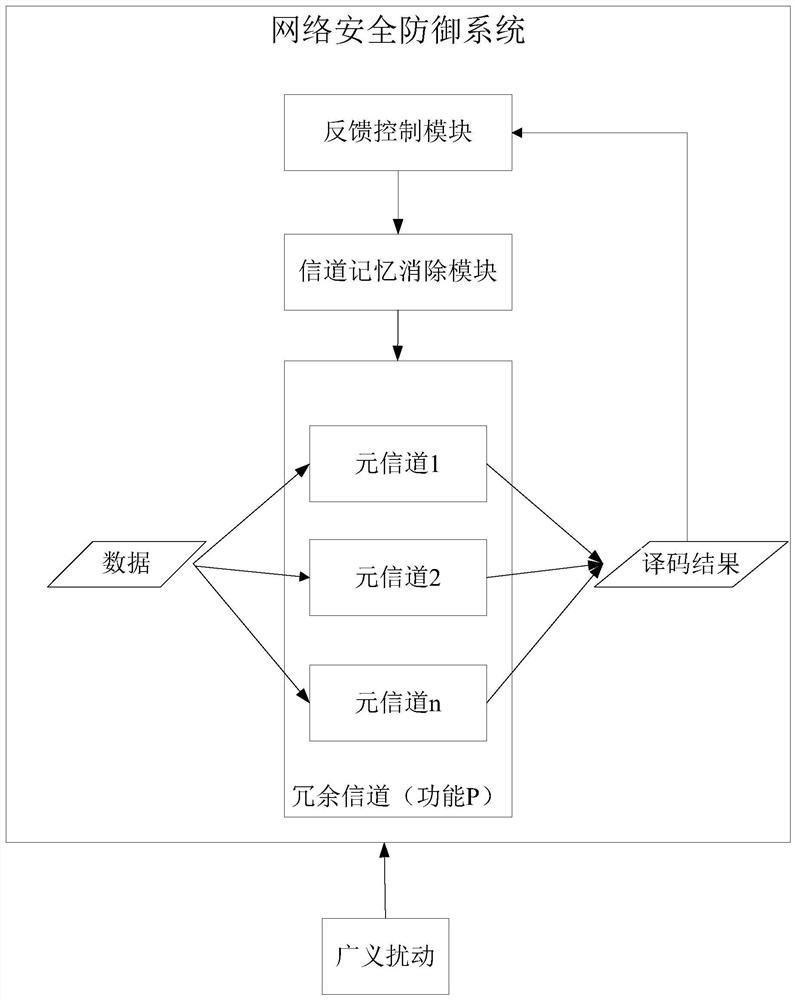

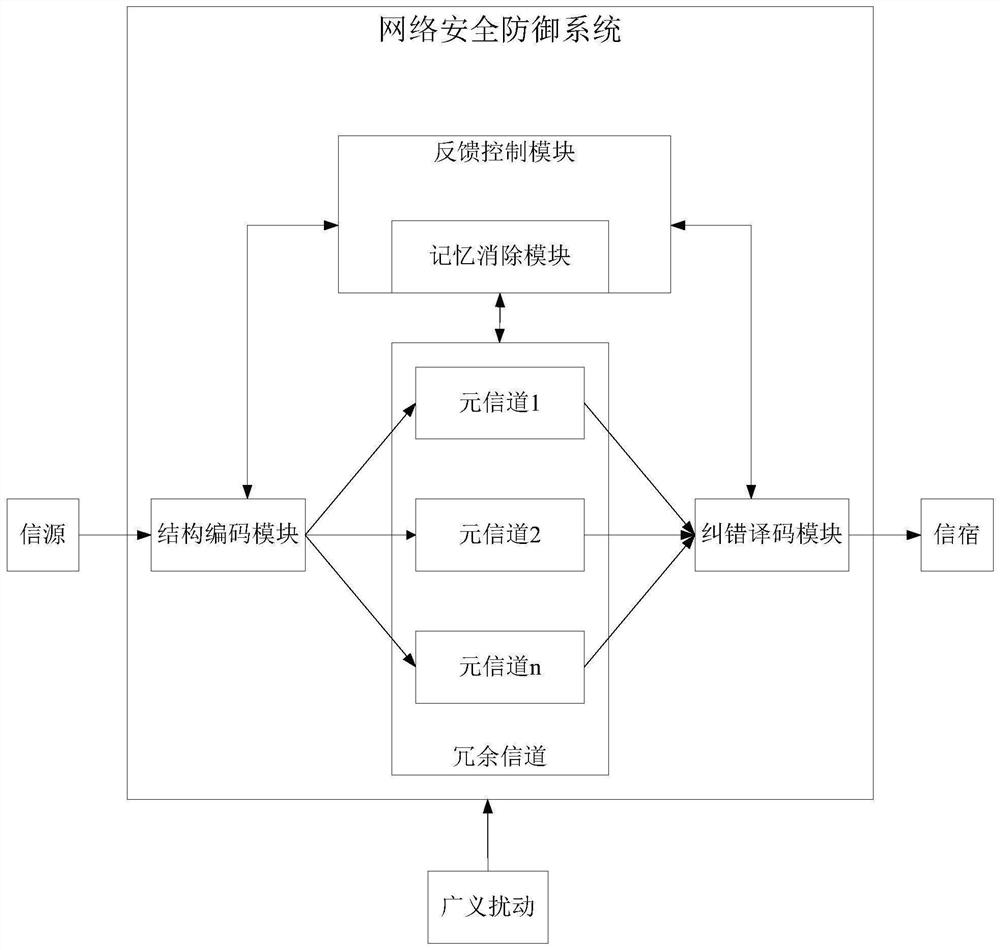

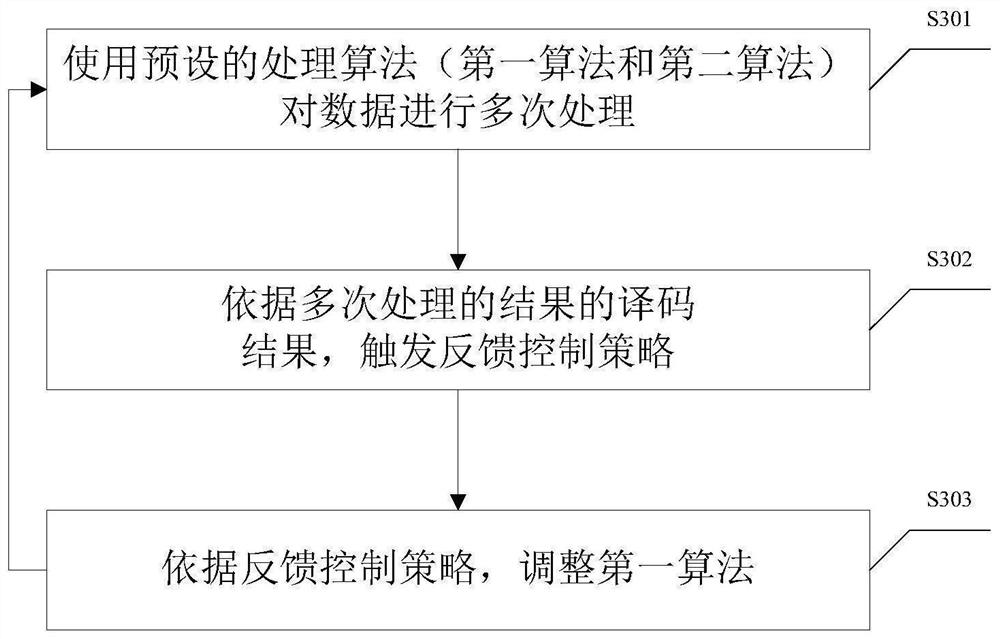

Network security defense method and system, effect evaluation method and related device thereof

According to the technical scheme, a first strategy comprising encoding, decoding and memory elimination strategies eliminates the influence of generalized disturbance on a second strategy, and applying the second strategy to the data achieves correct calculation, storage and communication of the data. Generalized disturbance acts as an external cause that can activate internal faults in the second strategy and produce errors and failures; the errors and failures caused by an activated fault are then corrected through the first strategy. The first strategy itself is obtained by adjusting a feedback control strategy that is triggered by the decoding result of the processed data. These steps therefore form a closed loop, and the endogenous security problem is solved without external software or hardware. In conclusion, the technical scheme provided by the invention solves the endogenous security problem through an endogenous security mechanism.

Owner:CHINA NAT DIGITAL SWITCHING SYST ENG & TECHCAL R&D CENT +1
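The closed loop described in this abstract (encode, disturb, decode, then let the decoding result drive a feedback adjustment of the encoding strategy) can be illustrated with a toy. Everything concrete here is an assumption for illustration only: a repetition code with majority-vote decoding stands in for the patent's encoding/decoding strategy, bit flips stand in for generalized disturbance, and `adjust` is a hypothetical feedback rule that adds redundancy whenever the decoder had to correct errors.

```python
from typing import List, Sequence, Tuple


def encode(bits: Sequence[int], r: int) -> List[int]:
    """Repetition code: repeat every bit r times (r assumed odd)."""
    return [b for b in bits for _ in range(r)]


def disturb(coded: Sequence[int], flip_positions: Sequence[int]) -> List[int]:
    """Simulated disturbance: flip the bits at the given positions."""
    out = list(coded)
    for p in flip_positions:
        out[p] ^= 1
    return out


def decode(coded: Sequence[int], r: int) -> Tuple[List[int], int]:
    """Majority-vote decode; also count groups that needed correction."""
    bits, corrected = [], 0
    for i in range(0, len(coded), r):
        ones = sum(coded[i:i + r])
        bits.append(1 if ones > r // 2 else 0)
        if 0 < ones < r:  # the copies inside this group disagree
            corrected += 1
    return bits, corrected


def adjust(r: int, corrected: int, r_max: int = 9) -> int:
    """Hypothetical feedback rule: add redundancy when errors were seen."""
    return min(r + 2, r_max) if corrected else r


def run_round(bits: Sequence[int], r: int, flips: Sequence[int]) -> Tuple[List[int], int]:
    """One pass around the loop: returns decoded bits and the next r."""
    decoded, corrected = decode(disturb(encode(bits, r), flips), r)
    return decoded, adjust(r, corrected)
```

With `r = 3`, flipping one bit inside a group is corrected by the majority vote, the decoder reports one corrected group, and the feedback rule raises the redundancy to 5 for the next round.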

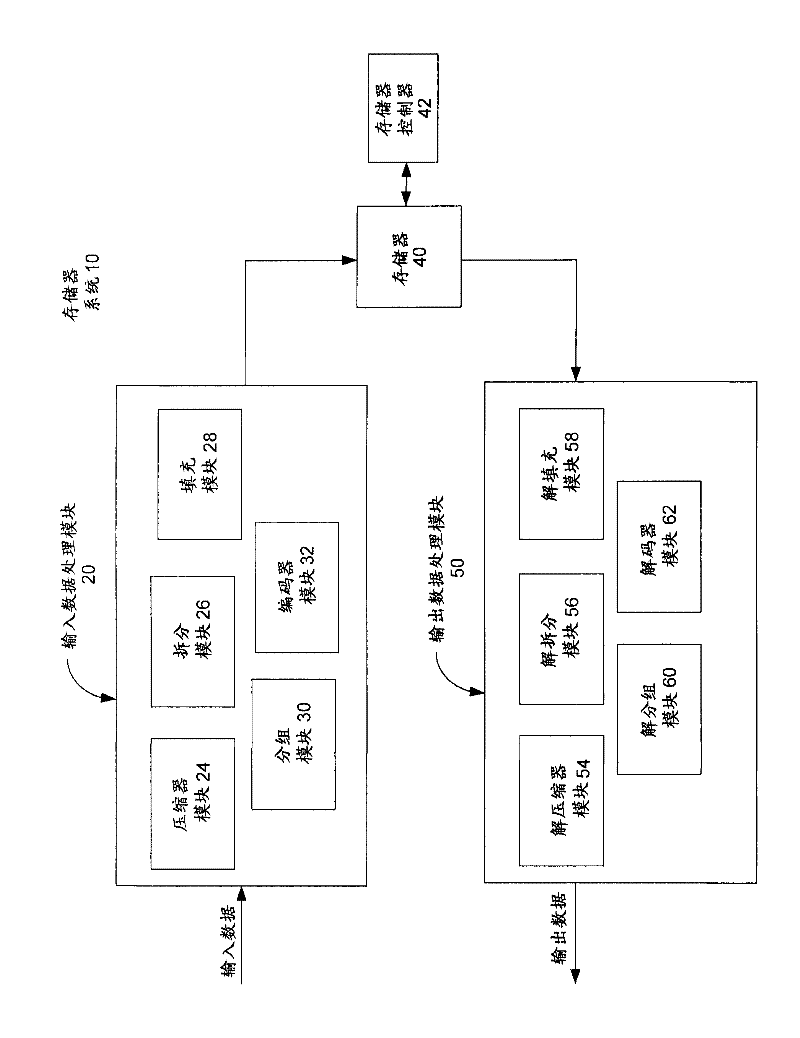

Data compression and encoding in a memory system

Active | CN102541747A | Memory architecture accessing/allocation | Memory addressing/allocation/relocation | Data compression | Data mining

The invention relates to data compression and encoding in a memory system. Embodiments provide a method comprising receiving input data comprising a plurality of data sectors; compressing the plurality of data sectors to generate a corresponding plurality of compressed data sectors; splitting a compressed data sector of the plurality of compressed data sectors to generate a plurality of split compressed data sectors; and storing the plurality of compressed data sectors, including the plurality of split compressed data sectors, in a plurality of memory pages of a memory.

Owner:MARVELL ASIA PTE LTD
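The compress, split and store steps can be sketched as packing compressed sectors into fixed-size pages and splitting any sector that does not fit in the remaining space of the current page. This is a minimal sketch under assumptions not stated in the abstract: `zlib` stands in for whatever compressor the memory system uses, pages are plain byte arrays, and the `layout` map of (page, offset, length) fragments is a hypothetical way to locate split sectors when reading them back.

```python
import zlib
from typing import Dict, List, Sequence, Tuple

Fragment = Tuple[int, int, int]  # (page index, offset within page, length)


def store_sectors(
    sectors: Sequence[bytes], page_size: int
) -> Tuple[List[bytearray], Dict[int, List[Fragment]]]:
    """Compress each sector and append it to pages, splitting at page ends."""
    pages: List[bytearray] = [bytearray()]
    layout: Dict[int, List[Fragment]] = {}
    for idx, sector in enumerate(sectors):
        data = zlib.compress(sector)
        frags: List[Fragment] = []
        while data:
            page = pages[-1]
            free = page_size - len(page)
            if free == 0:  # current page is full: open a new one
                pages.append(bytearray())
                continue
            chunk = data[:free]  # the sector is split here if it does not fit
            frags.append((len(pages) - 1, len(page), len(chunk)))
            page.extend(chunk)
            data = data[free:]
        layout[idx] = frags
    return pages, layout


def load_sector(
    pages: List[bytearray], layout: Dict[int, List[Fragment]], idx: int
) -> bytes:
    """Reassemble a sector's fragments and decompress them."""
    blob = b"".join(bytes(pages[p][off:off + n]) for p, off, n in layout[idx])
    return zlib.decompress(blob)
```

Splitting lets compressed sectors fill pages without padding gaps, at the cost of touching more than one page when a split sector is read.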

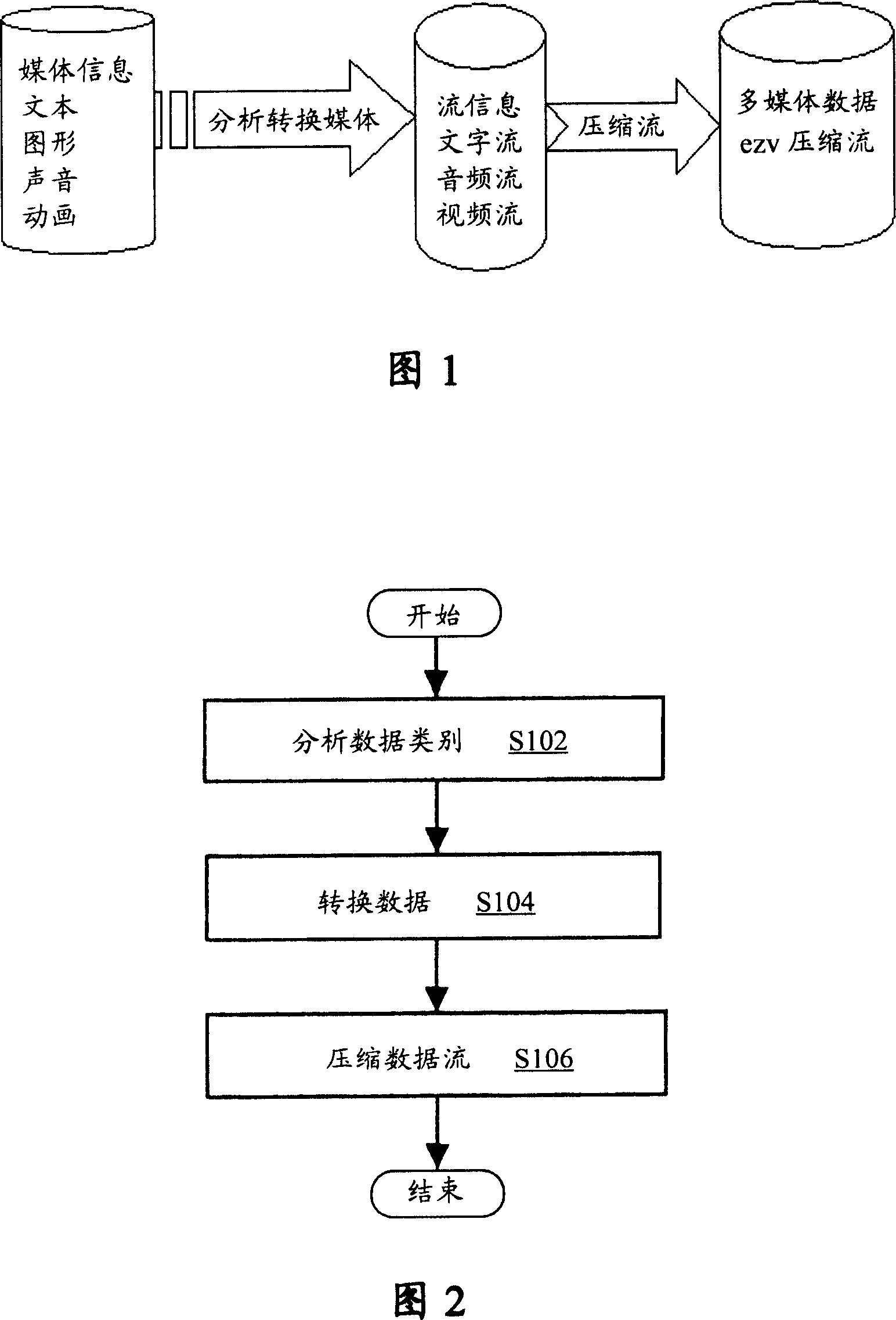

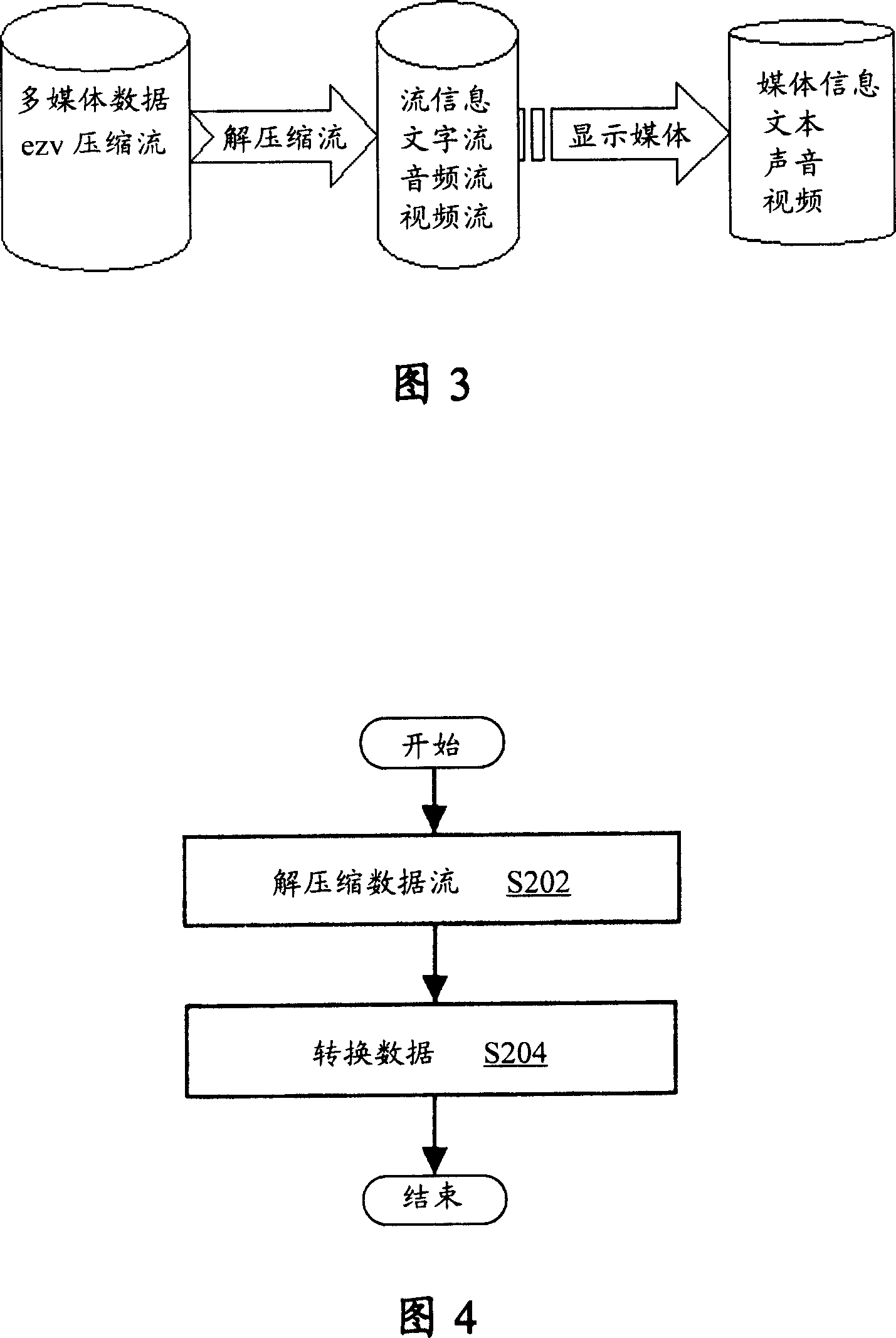

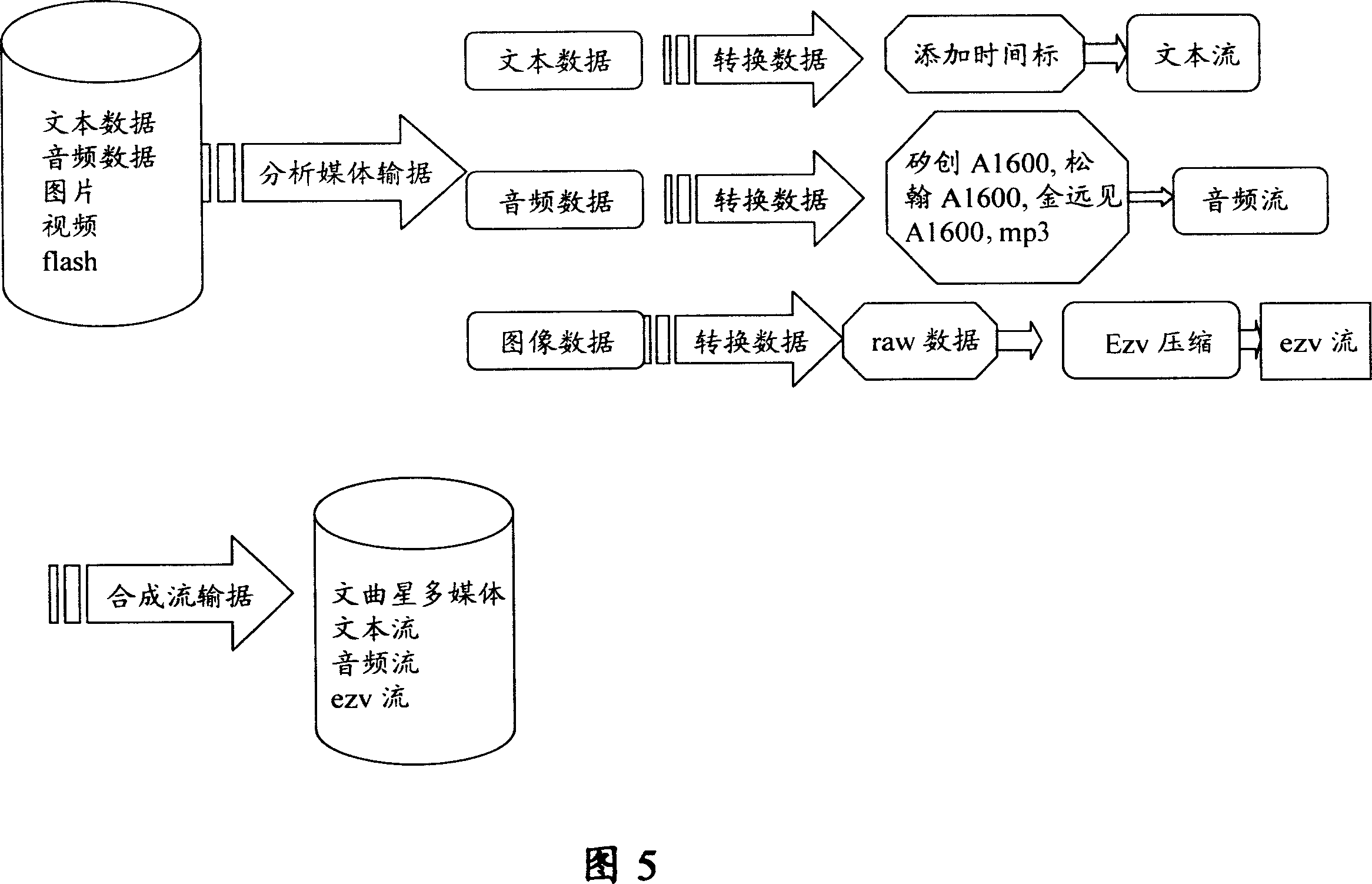

Multimedia coding-decoder and its method

Inactive | CN101026753A | Pulse modulation television signal transmission | Digital video signal modification | Analysis data | Audio frequency

The disclosed method comprises an encoding method and a decoding method. The encoding method comprises the following steps: analyzing data types and dividing the data into text, image, animation, audio and video; converting the data, so that the classified data, together with time-dimension information, are stored as a data stream; and compressing the data stream with the EZV lossless compression algorithm, which is adopted because digital audio and video data are very large while consumer electronic products have relatively small memory capacity. The decoding method comprises the following steps: decompressing the data stream to restore the data stream containing the time-dimension information; and converting the data stream back into text, image, animation, audio, video and so on to recover the original media information. The codec comprises an encoder that runs the encoding method and a decoder that runs the decoding method.

Owner:北京金远见电脑技术有限公司 (Beijing Jinyuanjian Computer Technology Co., Ltd.)
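The encoding and decoding paths can be sketched as a round trip through a time-stamped, typed data stream. The abstract does not describe the EZV algorithm, so `zlib` is used here purely as a lossless stand-in; the record layout (`HEADER`, type codes, millisecond timestamps) is likewise a hypothetical format, and data classification is assumed to have been done already.

```python
import struct
import zlib
from typing import List, Tuple

MEDIA_TYPES = ("text", "image", "animation", "audio", "video")
# Hypothetical record header: type code, timestamp in ms, payload length.
HEADER = struct.Struct(">BQI")

Record = Tuple[str, int, bytes]  # (media type, timestamp in ms, payload)


def encode_stream(records: List[Record]) -> bytes:
    """Serialize typed, time-stamped records, then compress losslessly."""
    stream = bytearray()
    for media, ts_ms, payload in records:
        stream += HEADER.pack(MEDIA_TYPES.index(media), ts_ms, len(payload))
        stream += payload
    return zlib.compress(bytes(stream), 9)  # stand-in for "EZV"


def decode_stream(blob: bytes) -> List[Record]:
    """Decompress, then walk the headers to recover each record."""
    stream = zlib.decompress(blob)
    records: List[Record] = []
    pos = 0
    while pos < len(stream):
        code, ts_ms, n = HEADER.unpack_from(stream, pos)
        pos += HEADER.size
        records.append((MEDIA_TYPES[code], ts_ms, stream[pos:pos + n]))
        pos += n
    return records
```

Because the compressor is lossless, `decode_stream(encode_stream(records))` returns the original records, including their time-dimension information.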

© 2025 PatSnap. All rights reserved.