Neural network low-bit quantization method

A neural network quantization technology in the field of data compression. It addresses the large memory requirements that limit the application of neural networks, with the stated effects of strong practicability, improved quantization efficiency and improved accuracy.


Embodiment Construction

[0023] The specific embodiments of the present invention are described below with reference to the drawings.

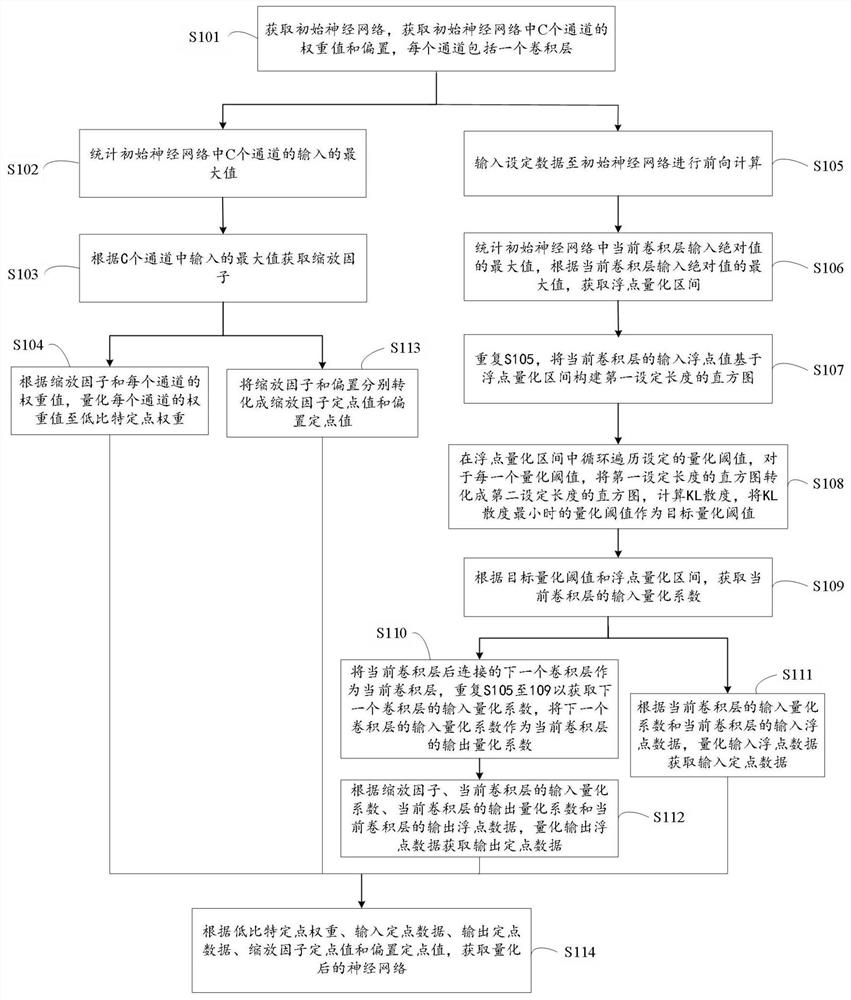

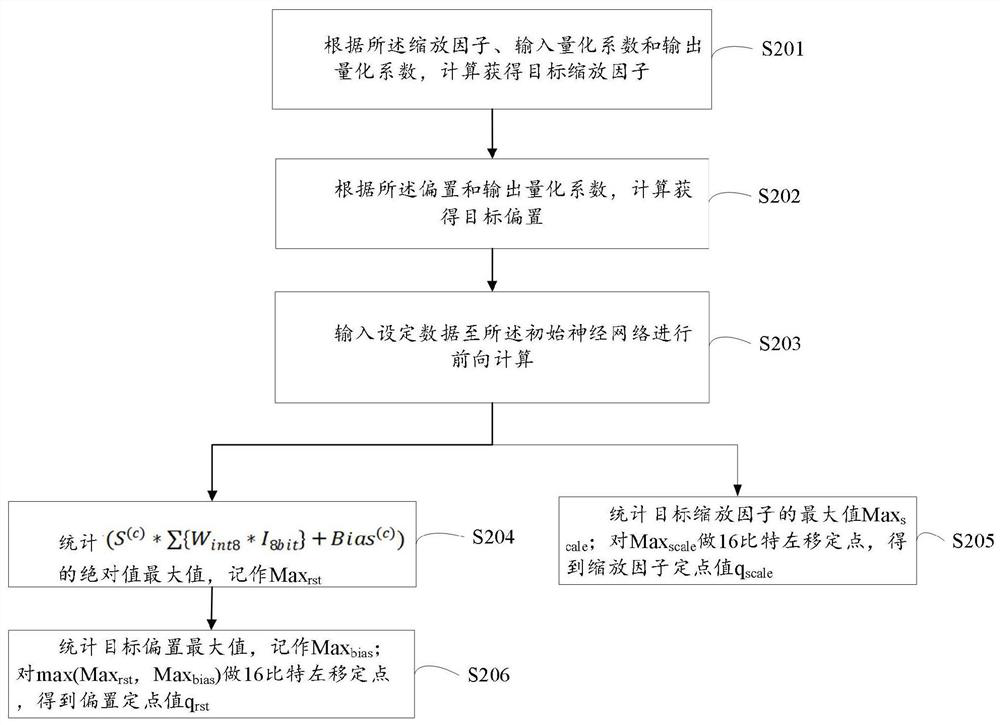

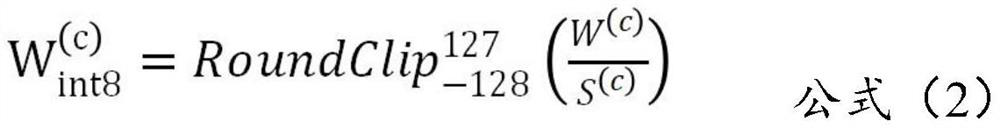

[0024] As shown in Figure 1, an embodiment of the present invention provides a neural network low-bit quantization method comprising steps S101 to S114.

[0025] S101: Obtain an initial neural network and acquire the weights and biases of its C channels, where each channel includes a convolutional layer.
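Step S101 amounts to reading, for each of the C output channels of a convolutional layer, its kernel weights and its bias. The following is a minimal stdlib-only sketch under stated assumptions: the names `ChannelParams`, `split_channels` and `channel_range` are hypothetical, and each channel's kernel is assumed to be already flattened to a list of floats. The per-channel (min, max) range is included only because range statistics are what a typical quantizer consumes next; this excerpt does not say which statistics the later steps of the method use.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ChannelParams:
    """Weights and bias of one output channel (hypothetical container)."""
    weights: List[float]  # flattened kernel: C_in * kH * kW values
    bias: float


def split_channels(weight: List[List[float]], bias: List[float]) -> List[ChannelParams]:
    """Group a conv layer's parameters into C per-channel records.

    `weight` is assumed to be pre-flattened to shape [C][C_in * kH * kW].
    """
    if len(weight) != len(bias):
        raise ValueError("expected one bias per output channel")
    return [ChannelParams(list(w), float(b)) for w, b in zip(weight, bias)]


def channel_range(ch: ChannelParams) -> Tuple[float, float]:
    """Return the (min, max) of one channel's weights."""
    return min(ch.weights), max(ch.weights)


# Toy layer with C = 2 channels and 3 weights each (made-up values).
layer_w = [[0.5, -1.2, 0.3], [2.0, 0.1, -0.4]]
layer_b = [0.05, -0.1]
channels = split_channels(layer_w, layer_b)
for c, ch in enumerate(channels):
    print(c, channel_range(ch), ch.bias)
```

Keeping one record per channel makes it straightforward to give each channel its own quantization parameters later, which is a common choice for convolutional weights.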

[0026] The initial neural network can be applied to tasks such as image classification, object detection and natural language processing, and has already been trained. It stores and computes in floating point: each weight is originally represented as a float32 value, so the initial neural network performs floating-point operations.
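The memory cost that motivates low-bit quantization follows directly from the bit width. A float32 weight occupies 4 bytes, so a hypothetical model with 25 million parameters needs about 100 MB, while 8-bit and 4-bit storage need a quarter and an eighth of that. The helper below (hypothetical name `model_size_bytes`) only does this arithmetic and ignores scale factors or other metadata a quantized model would also have to store.

```python
def model_size_bytes(num_params: int, bits_per_param: int) -> int:
    """Bytes needed to store num_params values at bits_per_param bits each,
    rounded up to a whole byte."""
    return (num_params * bits_per_param + 7) // 8


n = 25_000_000  # hypothetical parameter count
for bits in (32, 8, 4):
    print(bits, model_size_bytes(n, bits) / 1e6, "MB")
# 32 100.0 MB
# 8 25.0 MB
# 4 12.5 MB
```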

[0027] Quantize the initial neural network, that is, convert its floating-point operations into integer storage and operations, thereby compressing the model's ...
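The excerpt breaks off before steps S102 to S114, so the sketch below is not the claimed method. It illustrates a common baseline for converting floating-point weights to integers: per-channel asymmetric uniform (min/max) quantization, where each value is approximated as `scale * (q - zero_point)`. The function names, the 4-bit default and the choice to force 0.0 into the range are assumptions made for illustration.

```python
from typing import List, Tuple


def quantize_channel(values: List[float], num_bits: int = 4) -> Tuple[List[int], float, int]:
    """Baseline asymmetric uniform quantization of one channel to unsigned
    num_bits integers. Returns (q, scale, zero_point) with
    x ~= scale * (q - zero_point)."""
    qmin, qmax = 0, (1 << num_bits) - 1
    # Include 0.0 in the range so that zero maps exactly to an integer.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    if hi == lo:  # only possible when every value is 0.0
        return [0] * len(values), 1.0, 0
    scale = (hi - lo) / (qmax - qmin)
    zero_point = max(qmin, min(qmax, round(qmin - lo / scale)))
    # Round to the nearest grid point and clamp to the integer range.
    q = [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize_channel(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Map integers back to approximate floating-point values."""
    return [scale * (x - zero_point) for x in q]


weights = [0.5, -1.2, 0.3]  # toy channel, made-up values
q, scale, zp = quantize_channel(weights, num_bits=4)
restored = dequantize_channel(q, scale, zp)
print(q, zp)  # [15, 0, 14] 11
```

With this scheme each reconstructed weight lies within `scale / 2` of the original, so the error shrinks as the channel's range narrows or the bit width grows. Methods aimed at better low-bit accuracy typically refine how the range or rounding is chosen.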
