207 results about "Fixed-point arithmetic" patented technology

In computing, a fixed-point number representation is a real data type for a number that has a fixed number of digits after (and sometimes also before) the radix point (after the decimal point '.' in English decimal notation). Fixed-point number representation can be compared to the more complicated (and more computationally demanding) floating-point number representation.
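As a rough illustration of the definition above, the sketch below stores a value as a plain integer scaled by a fixed power of two. The Q16.16 split and the helper names (`to_fixed`, `to_float`, `fx_add`, `fx_mul`) are assumptions made for this example only; none of the listed patents prescribes them.

```python
# Sketch of a binary fixed-point format with 16 fractional bits (Q16.16).
# The 16/16 split and all names here are illustrative assumptions.
FRAC_BITS = 16
SCALE = 1 << FRAC_BITS  # one integer step represents 1/65536


def to_fixed(x: float) -> int:
    """Encode a real number as a scaled integer, rounded to nearest."""
    return round(x * SCALE)


def to_float(q: int) -> float:
    """Decode a scaled integer back to a real number."""
    return q / SCALE


def fx_add(a: int, b: int) -> int:
    """Addition needs no rescaling: both operands share the same scale."""
    return a + b


def fx_mul(a: int, b: int) -> int:
    """The raw product carries 2 * FRAC_BITS fractional bits, so shift back."""
    return (a * b) >> FRAC_BITS
```

Python integers are unbounded, so this sketch never overflows; a hardware or C implementation would also have to choose a word length and an overflow policy.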

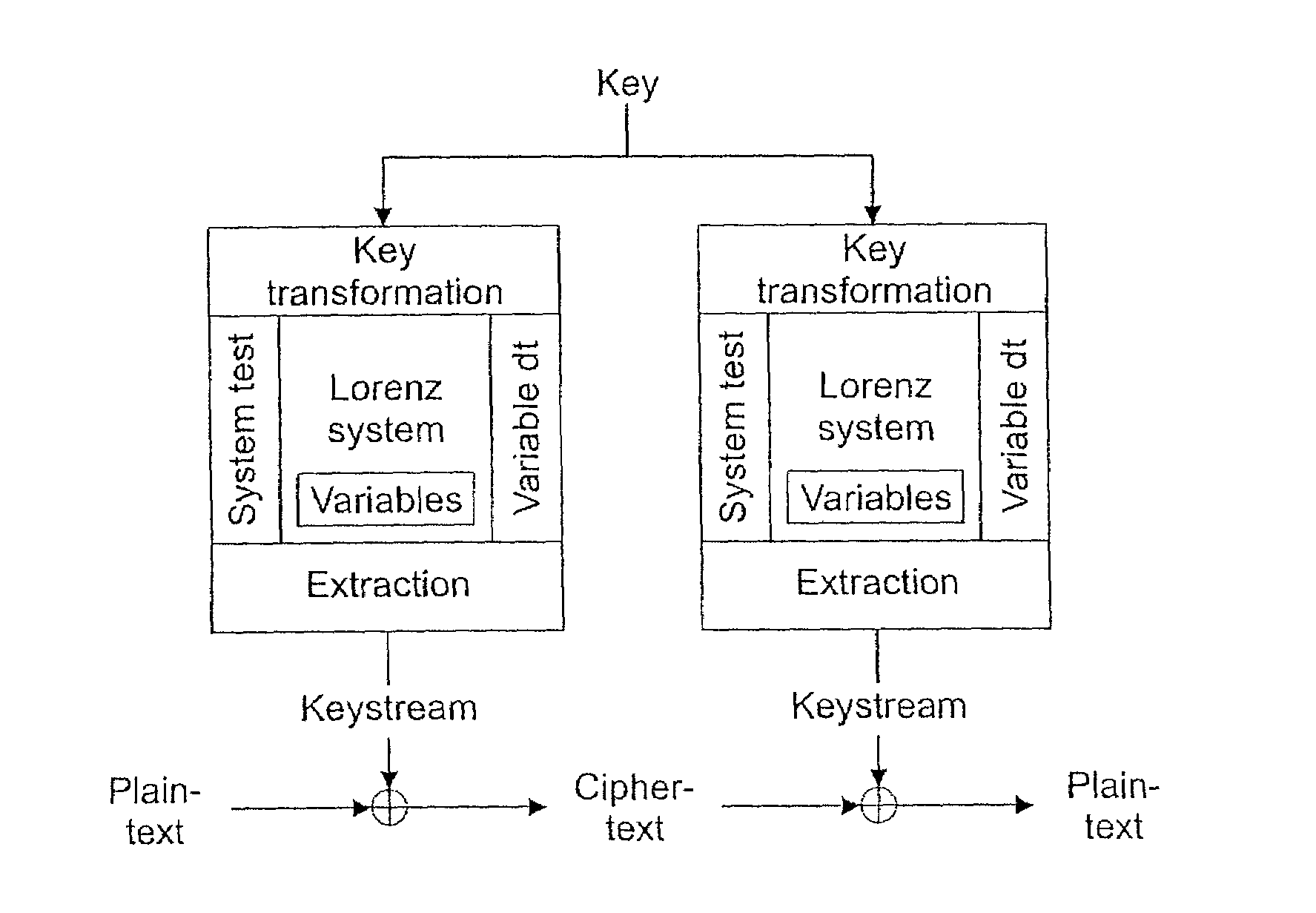

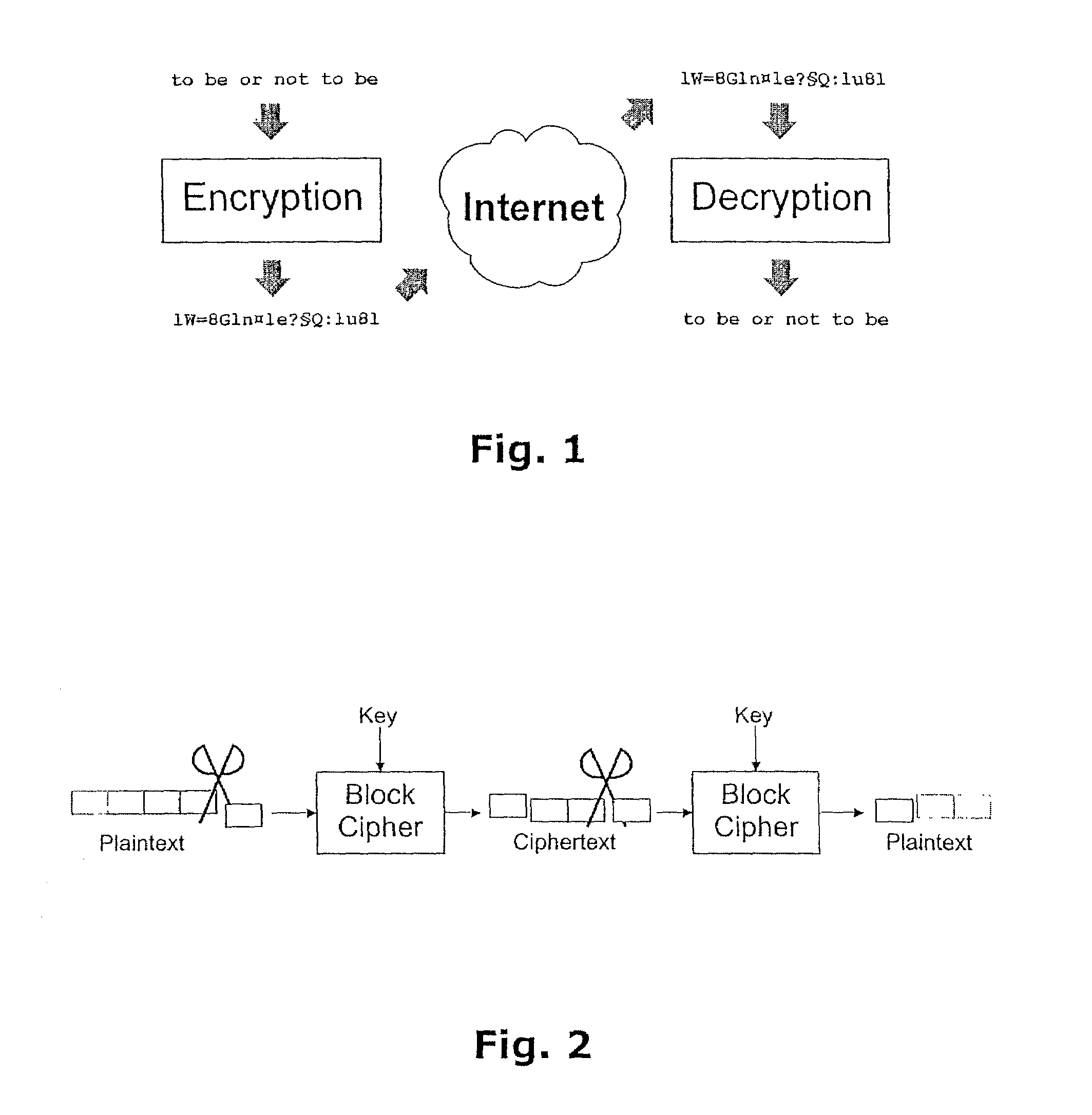

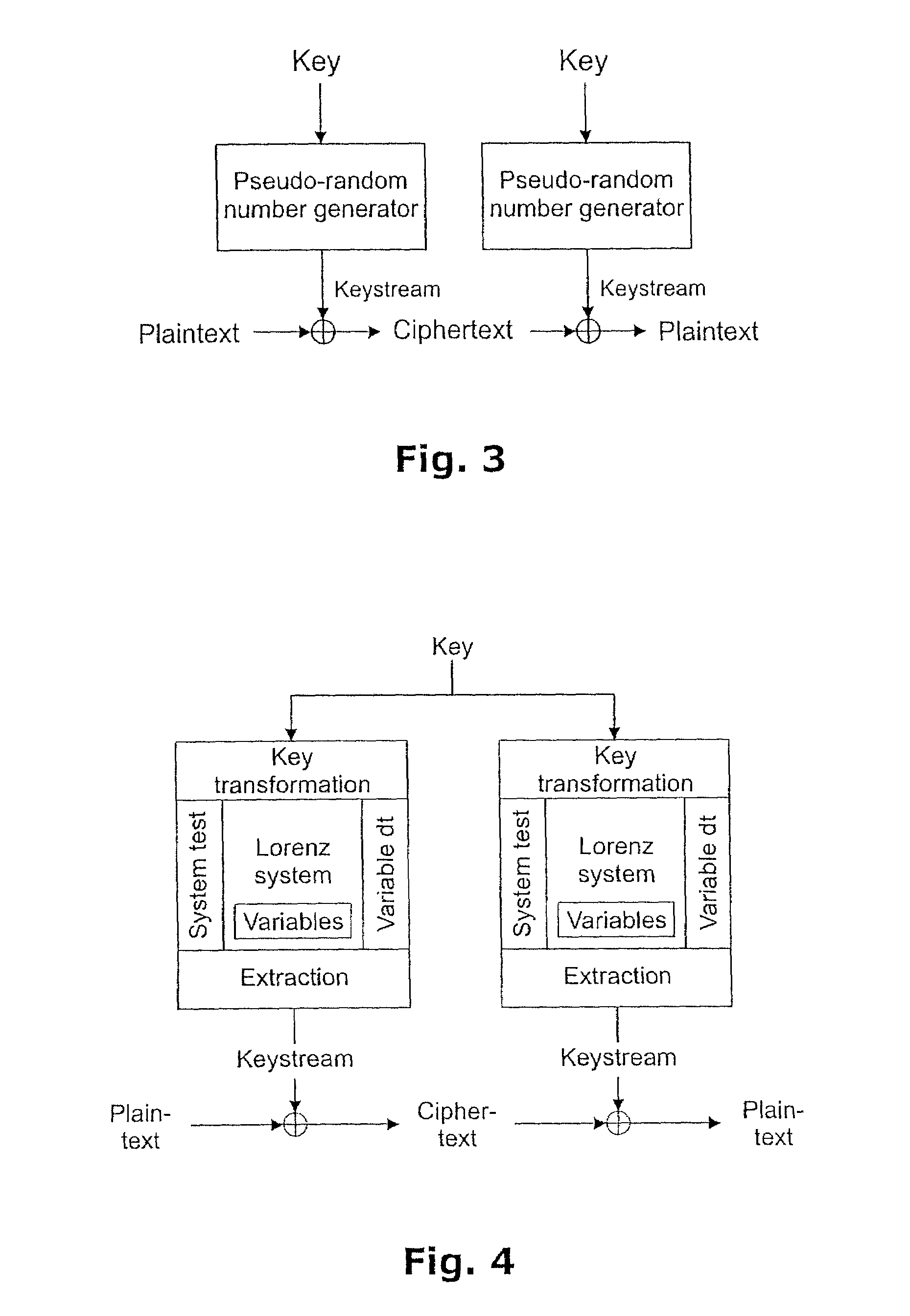

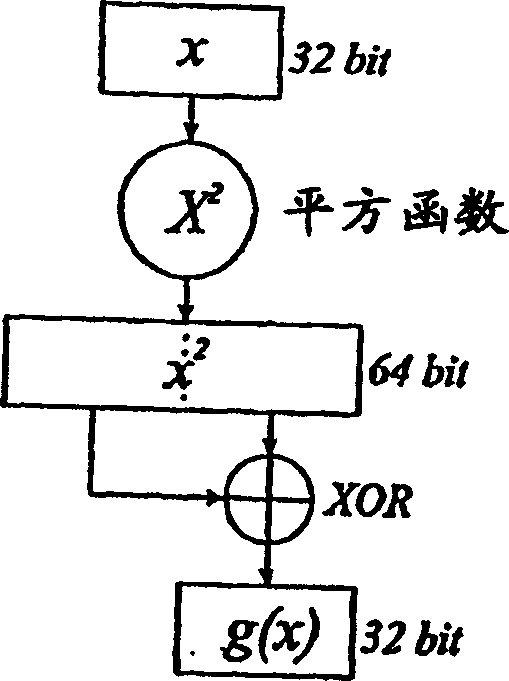

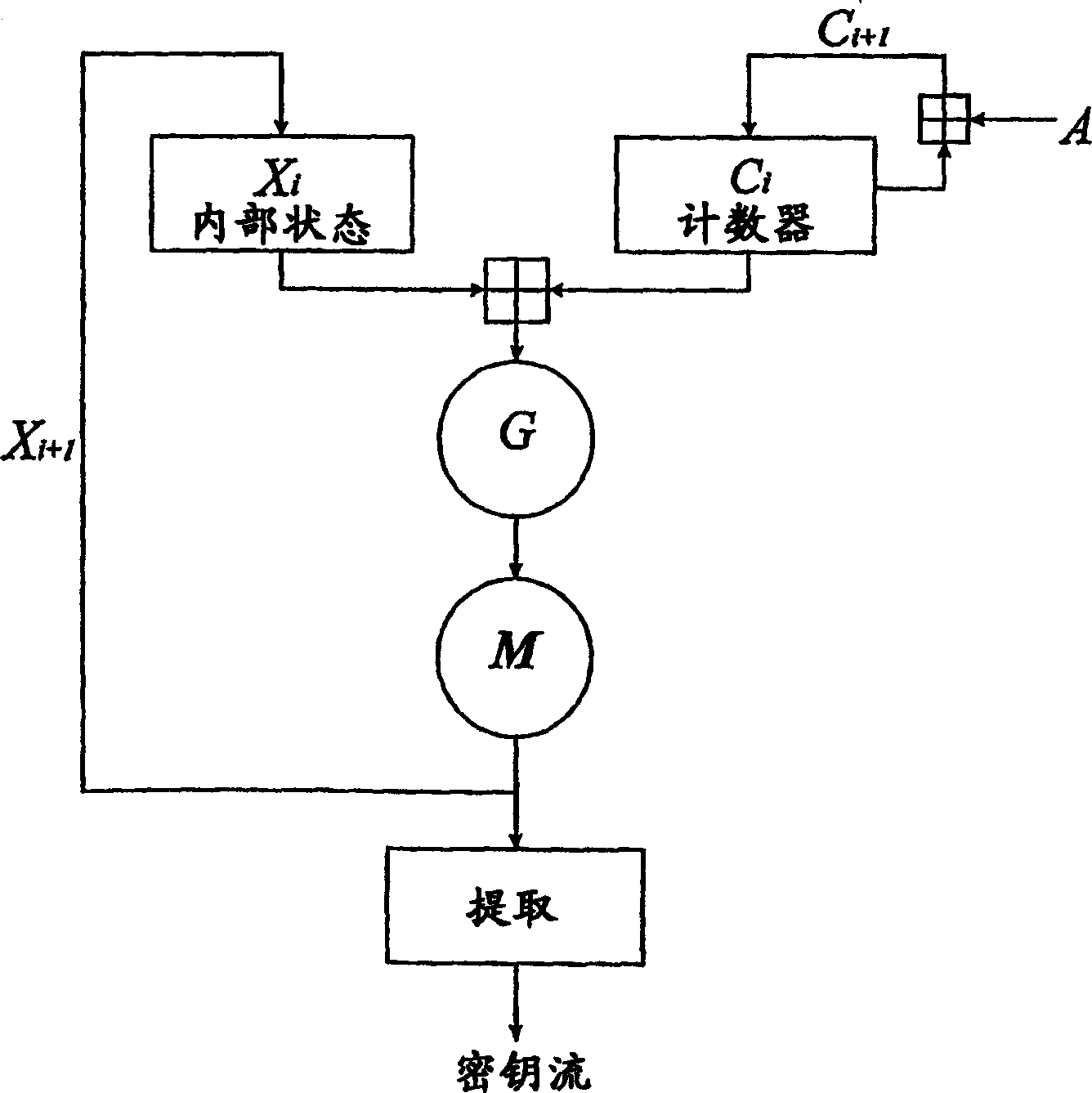

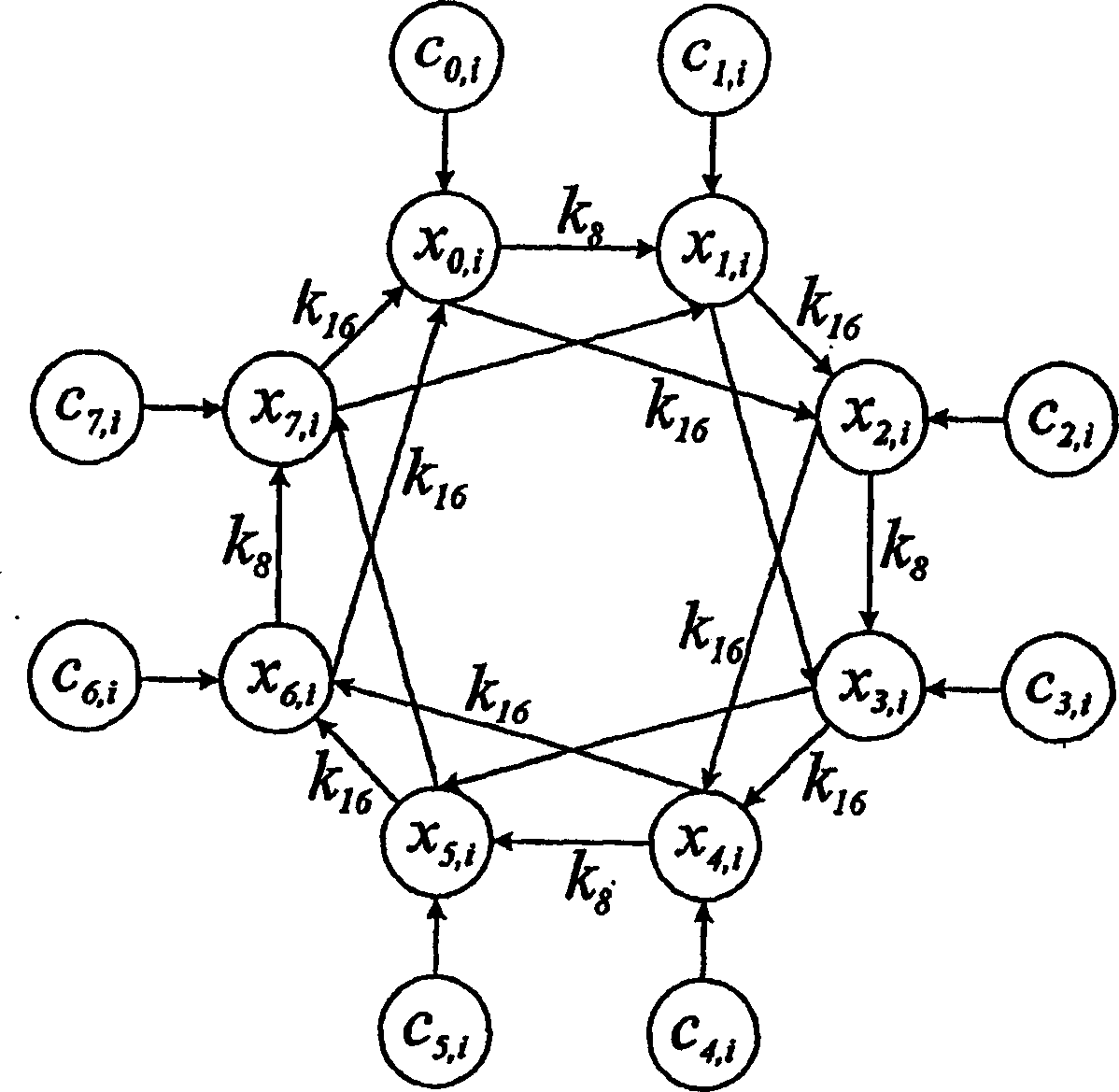

Method of generating pseudo-random numbers in an electronic device, and a method of encrypting and decrypting electronic data

Inactive · US7170997B2 · Compromise safety · Quick build · Random number generators · Secret communication · Theoretical computer science · Electronic data

A method of performing numerical computations in a mathematical system with at least one function, including expressing the mathematical system in discrete terms, expressing at least one variable of the mathematical system as a fixed-point number, performing the computations in such a way that the computations include the at least one variable expressed as a fixed-point number, obtaining, from the computations, a resulting number, the resulting number representing at least one of at least a part of a solution to the mathematical system, and a number usable in further computations involved in the numerical solution of the mathematical system, and extracting a set of data which represents at least one of a subset of digits of the resulting number, and a subset of digits of a number derived from the resulting number.

Owner:CRYPTICO

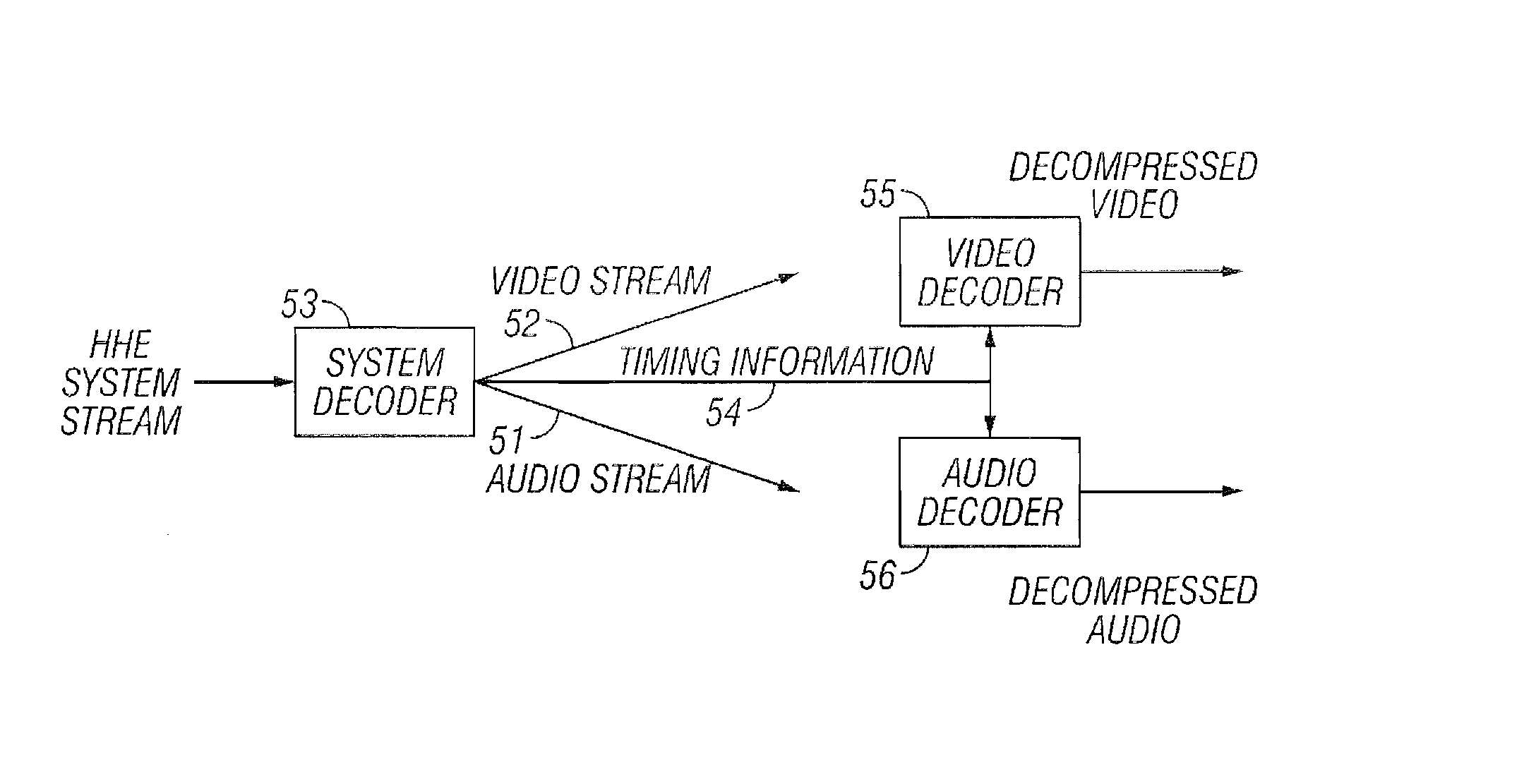

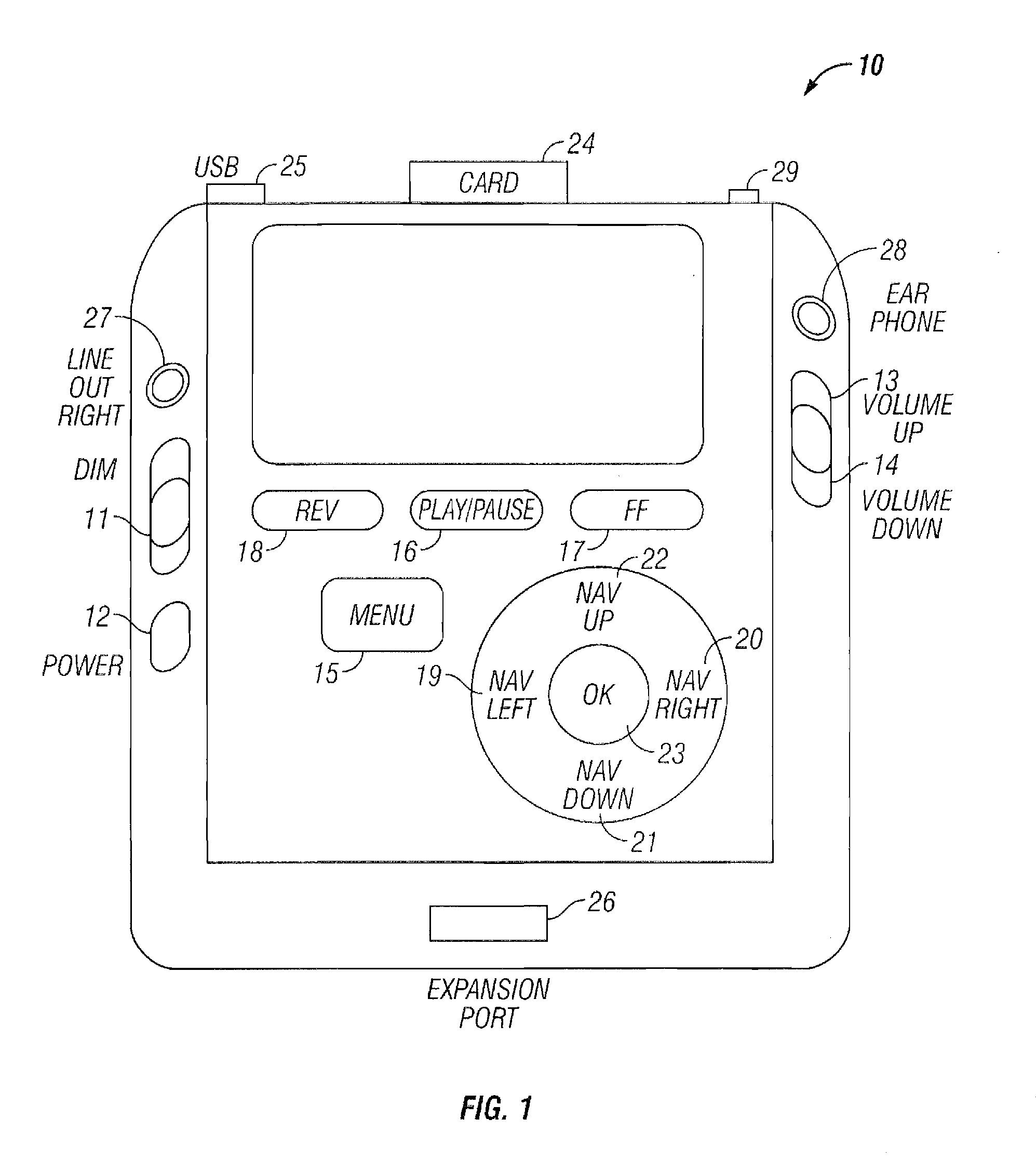

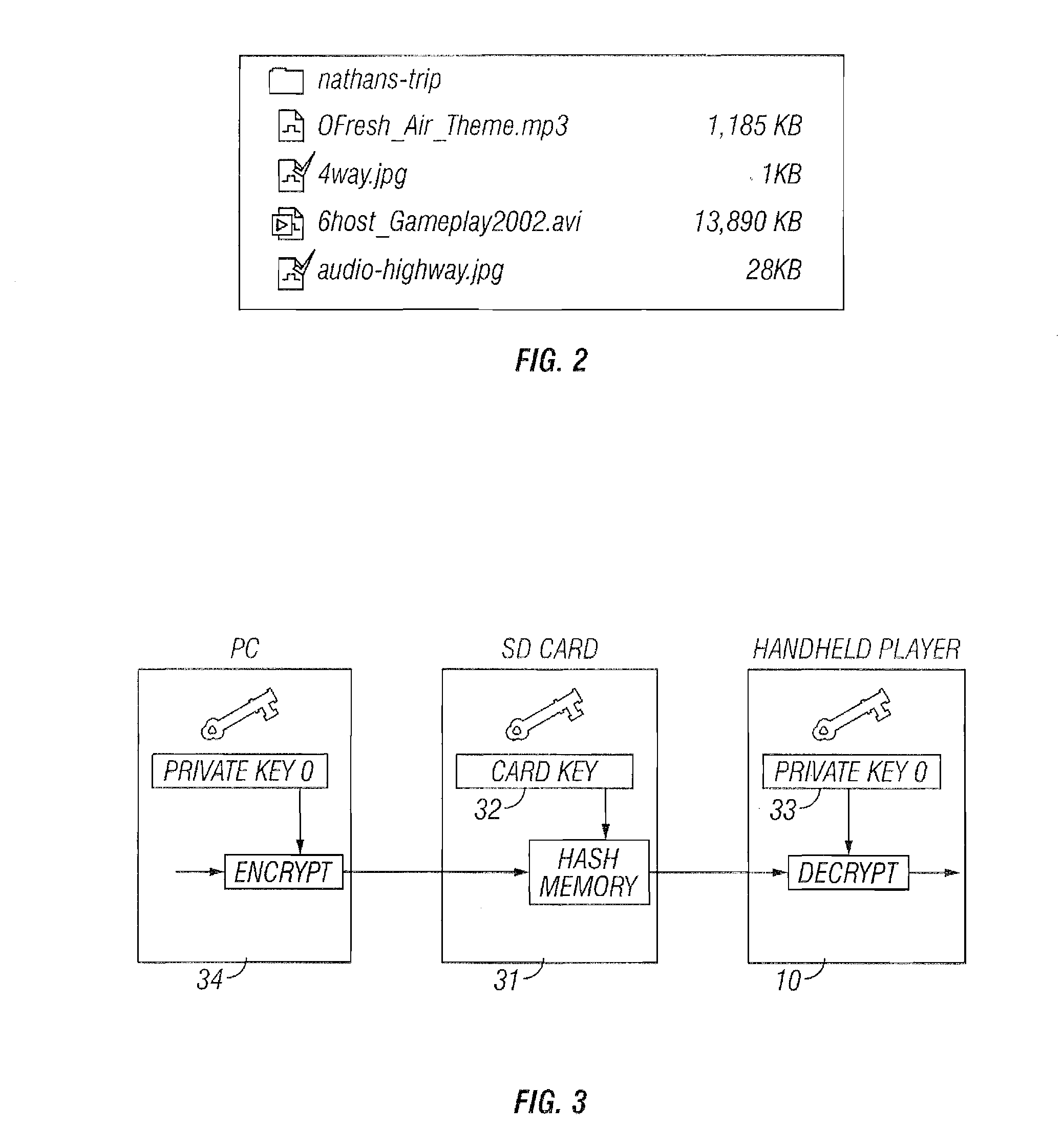

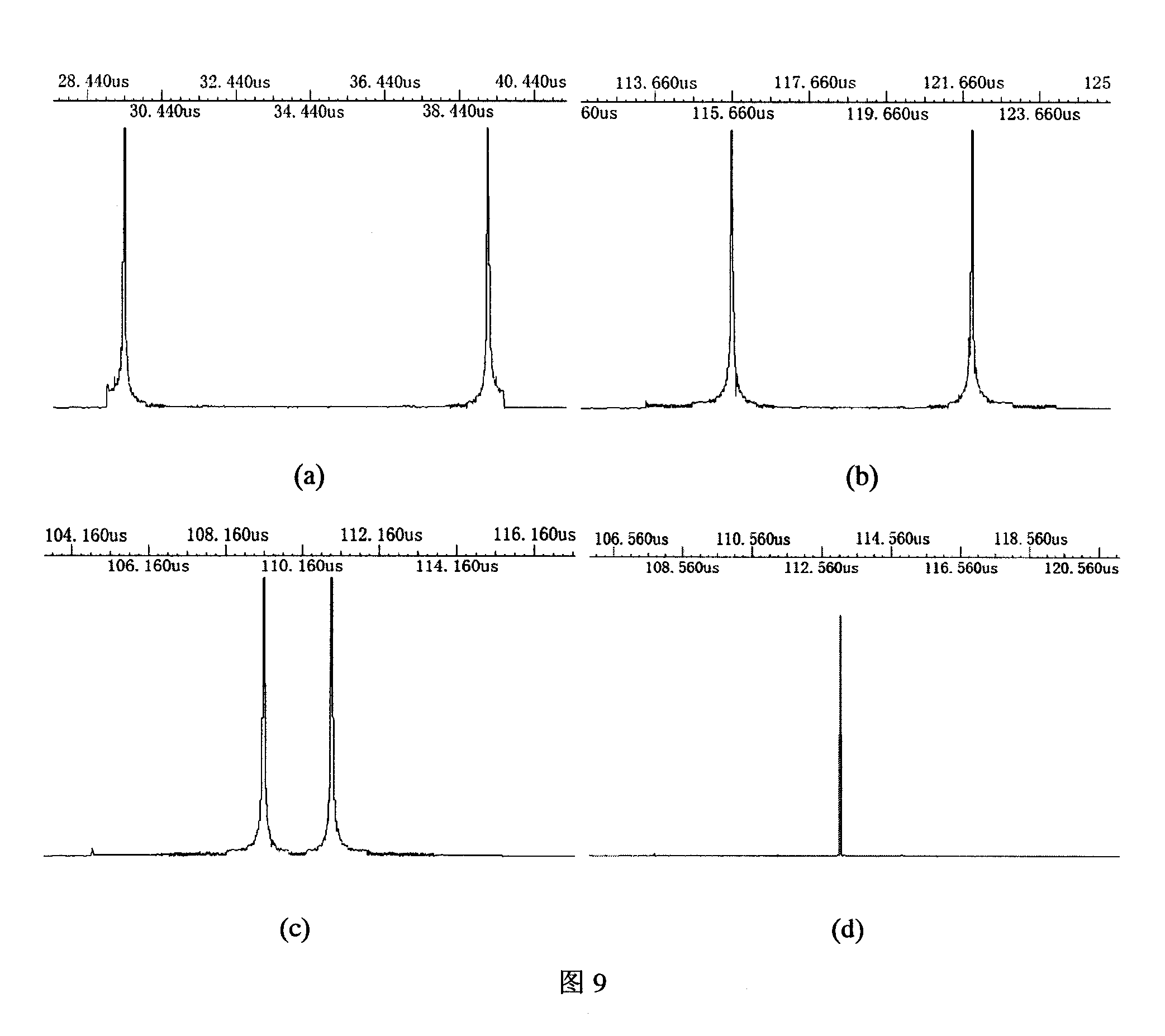

Method and apparatus for coding information

Inactive · US20060274835A1 · Reduce complexity · Television system details · Picture reproducers using cathode ray tubes · Video player · Computer graphics (images)

The invention provides a method and apparatus for coding information that is specifically adapted for smaller presentation formats, such as in a hand held video player. The invention addresses, inter alia, reducing the complexity of video decoding, implementation of an MP3 decoder using fixed point arithmetic, fast YCbCr to RGB conversion, encapsulation of a video stream and an MP3 audio stream into an AVI file, storing menu navigation and DVD subpicture information on a memory card, synchronization of audio and video streams, encryption of keys that are used for decryption of multimedia data, and various user interface (UI) adaptations for a hand held video player that implements the improved coding invention herein disclosed.

Owner:INTERNET ENTERTAINMENT LLC

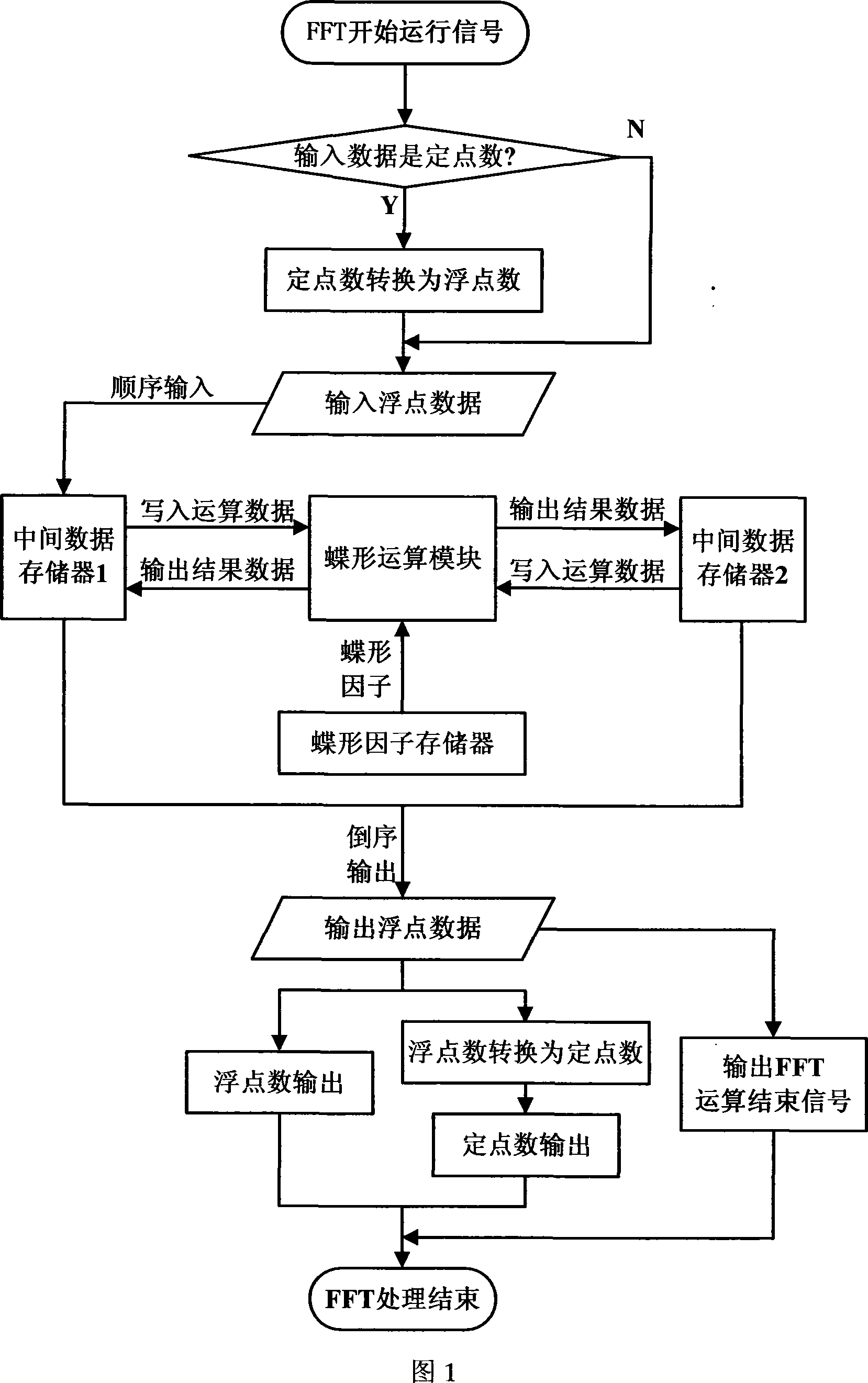

Method for processing floating-point FFT by FPGA

Inactive · CN101231632A · Improve computing efficiency · Improve processing precision · Complex mathematical operations · Digital signal processing · Radar

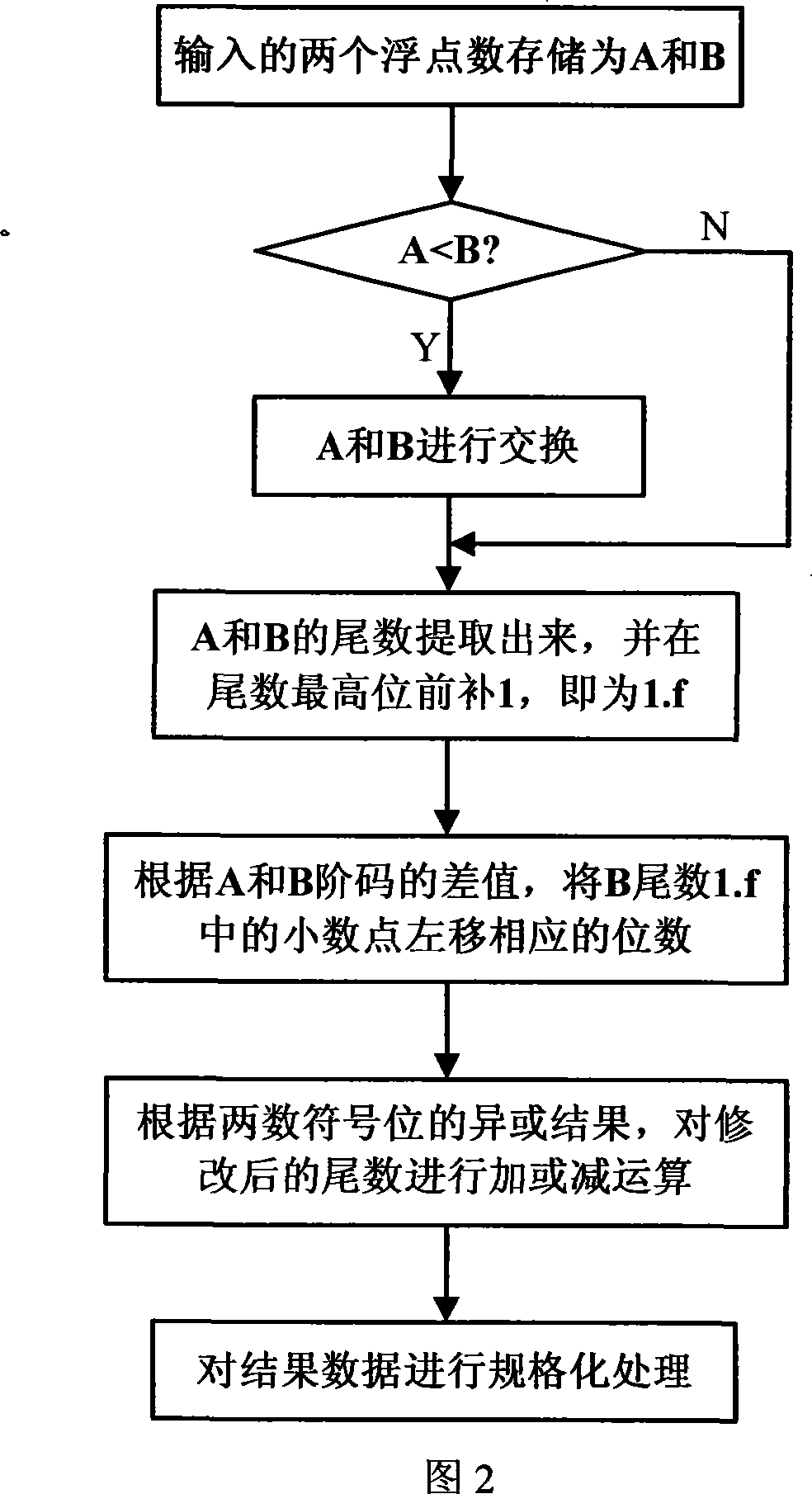

The invention discloses a method for performing floating-point FFT processing on an FPGA, and relates to the technical field of signal processing. The method aims to overcome the deficiencies of prior FFT processing methods, exceeds general-purpose DSP processing in operating efficiency, and can complete the whole FFT in fewer clock cycles. The method proceeds as follows: the input data are converted to floating-point numbers; the floating-point data are stored in order in intermediate data memory 1; the data are read from intermediate data memory 1 for a butterfly operation, and the result is stored in intermediate data memory 2; the data are read from intermediate data memory 2 for a butterfly operation, and the result is stored in intermediate data memory 1; the two operations alternate until the FFT processing is complete; the result is read from intermediate data memory 1 or 2 in inverted address order; the output floating-point data are converted to fixed-point numbers and output together with the floating-point result. The invention is applicable to digital signal processing fields such as radar, communication, and image processing.

Owner:XIDIAN UNIV

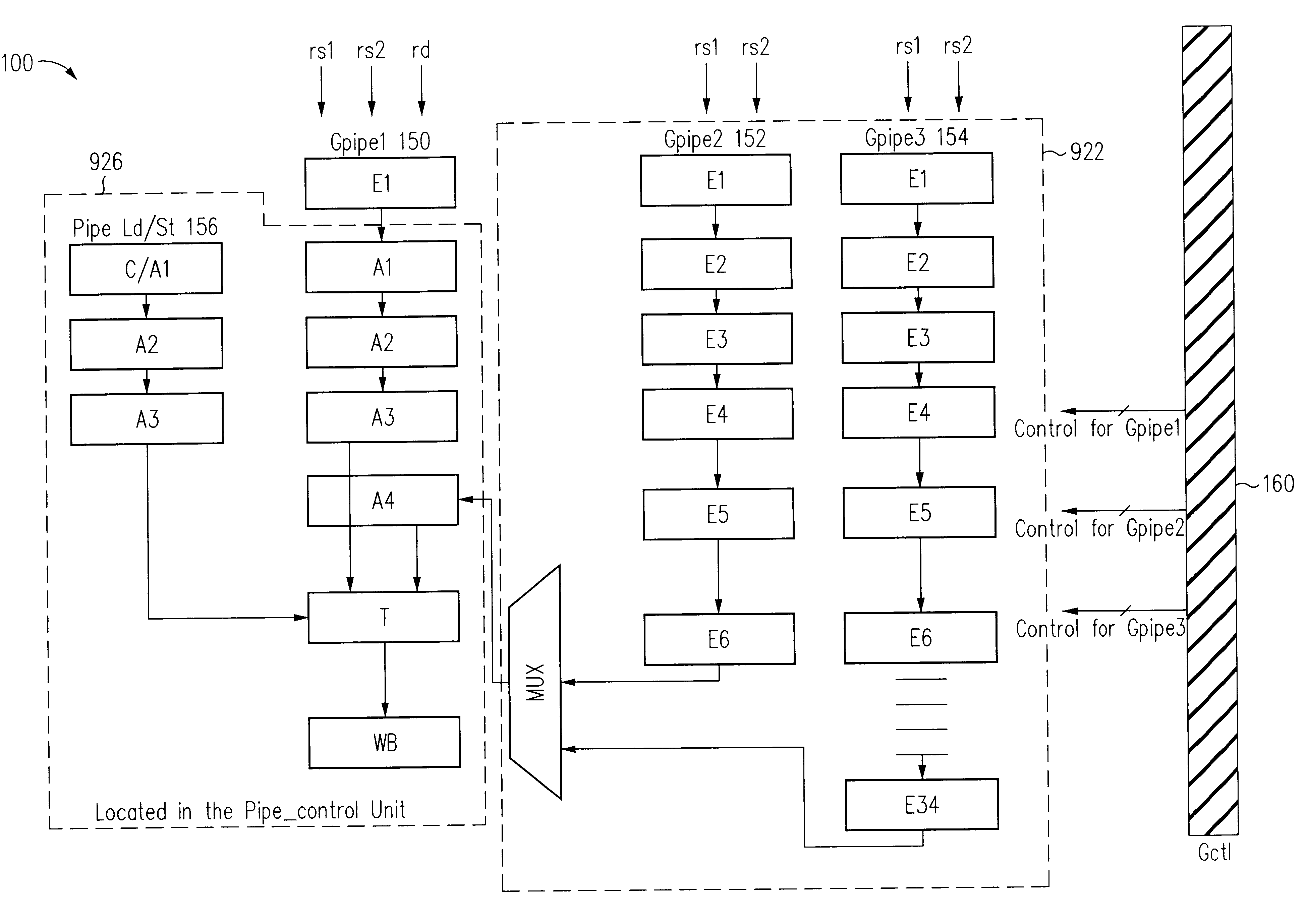

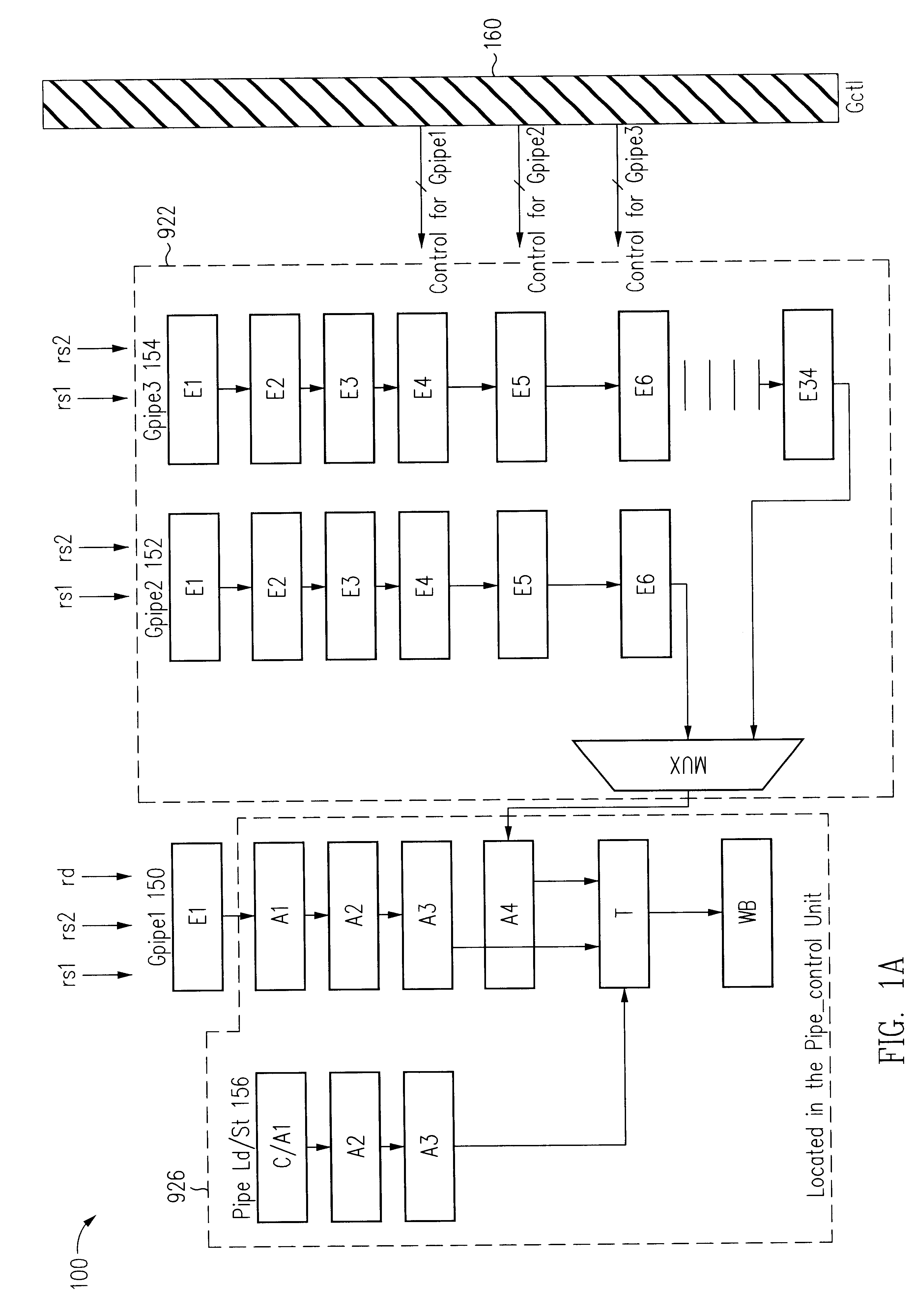

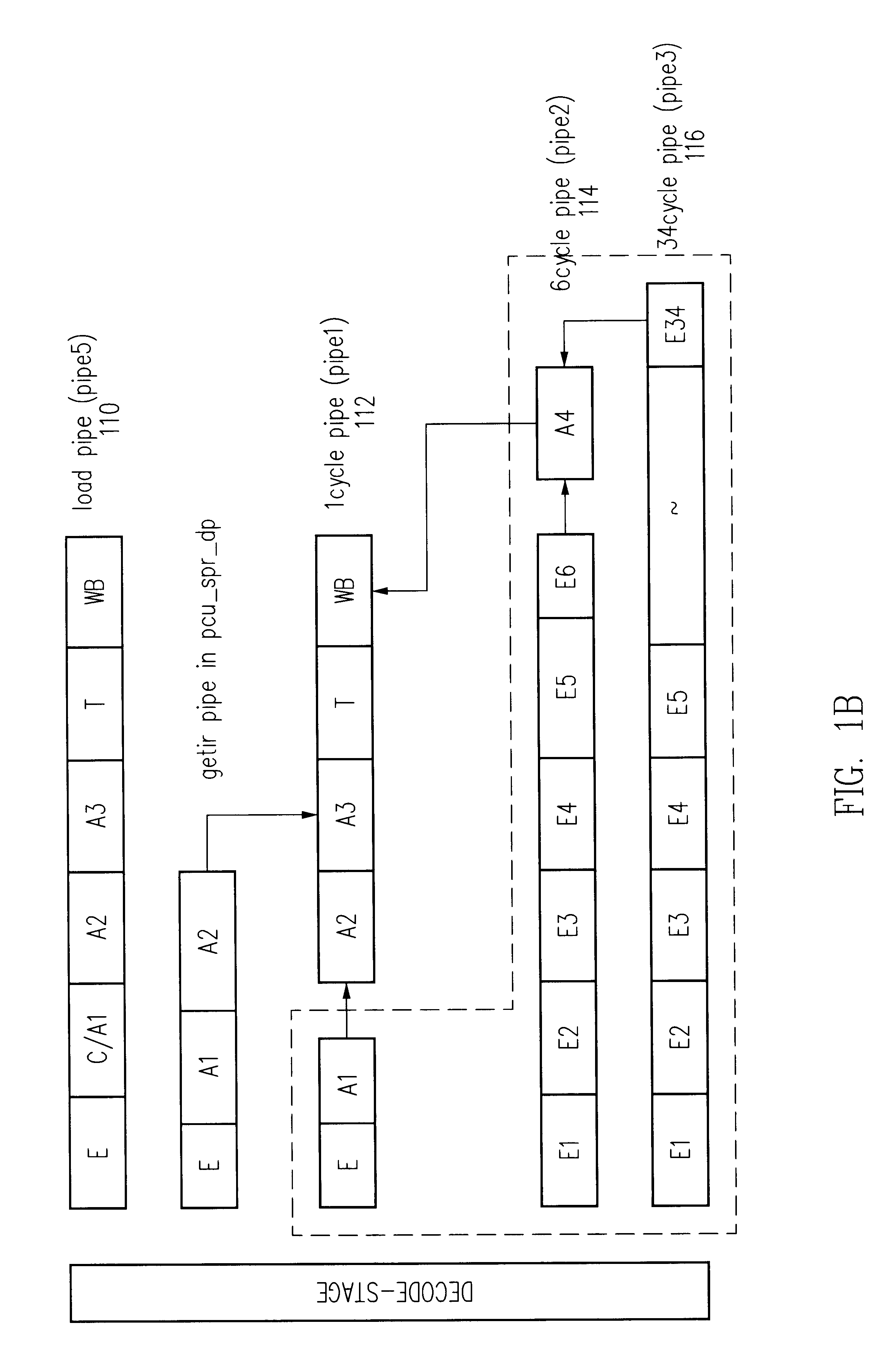

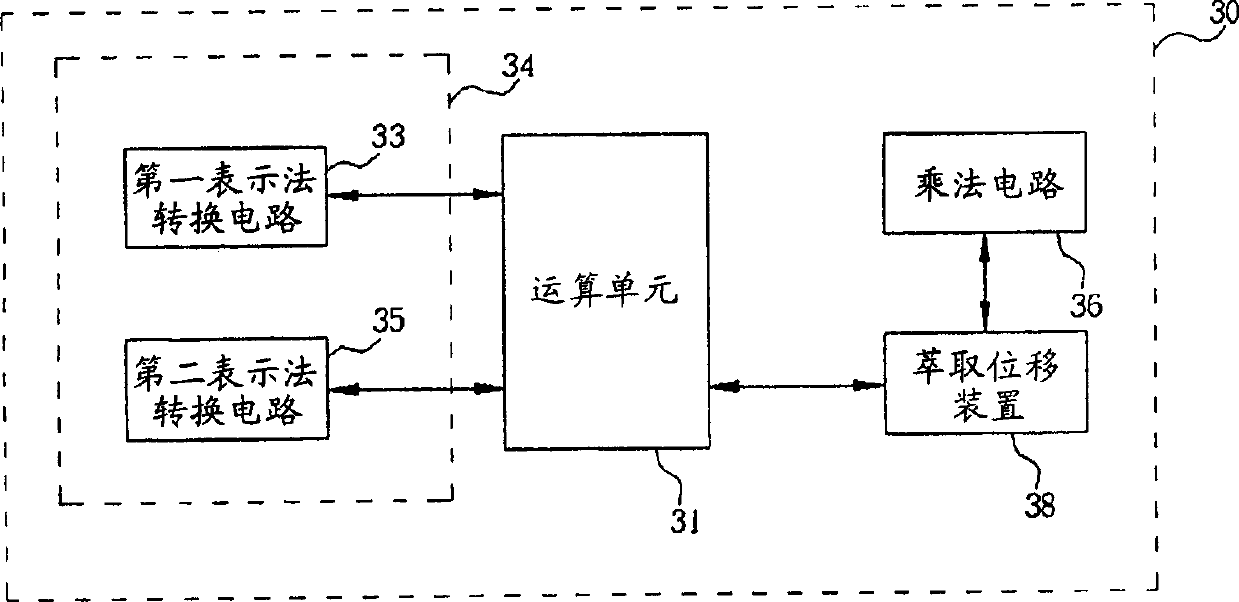

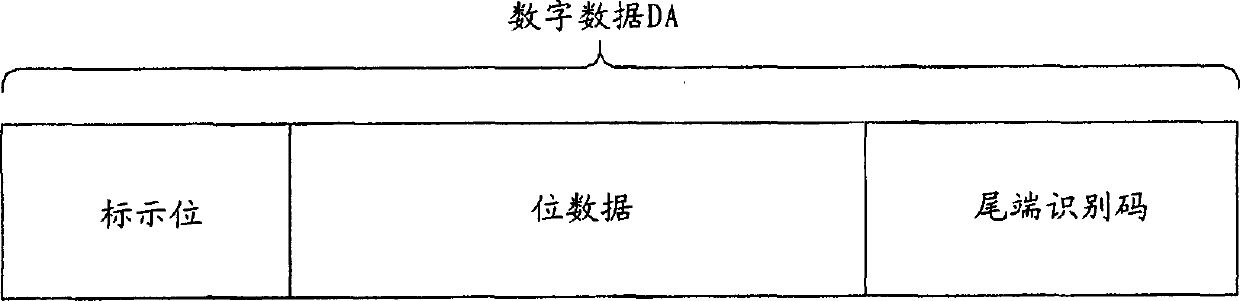

Compound dynamic preset number representation and algorithm, and its processor structure

Inactive · CN1658153B · Save resources · Reduce complexity · Machine execution arrangements · Digital data · Algorithm

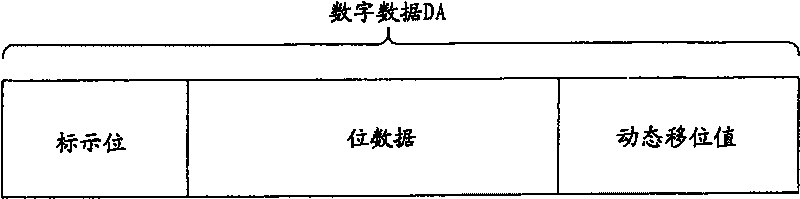

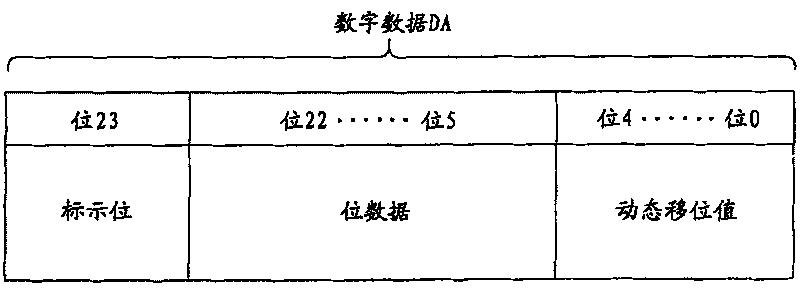

This invention offers a new fixed-point number representation for digital data after it has been numerically converted. The representation has two parts. The first part sets a reserved number of least significant bits in the digital data as a dynamic dislocation value, which represents the number of digits shifted during the numeric conversion. The other part maps all the bits other than the dynamic dislocation value to a subset of the bits of the digital data before numerical conversion. That subset includes at least a most significant bit containing the numeric information.

Owner:MEDIATEK INC

Parallel fixed point square root and reciprocal square root computation unit in a processor

Inactive · US6341300B1 · Register arrangements · Computation using non-contact making devices · Parallel computing · Leading zero

A parallel fixed-point square root and reciprocal square root computation uses the same coefficient tables as the floating point square root and reciprocal square root computation by converting the fixed-point numbers into a floating-point structure with a leading implicit 1. The value of a number X is stored as two fixed-point numbers. In one embodiment, the fixed-point numbers are converted to the special floating-point structure using a leading zero detector and a shifter. Following the square root computation or the reciprocal square root computation, the floating point result is shifted back into the two-entry fixed-point format. The shift count is determined by the number of leading zeros detected during the conversion from fixed-point to floating-point format.

Owner:ORACLE INT CORP

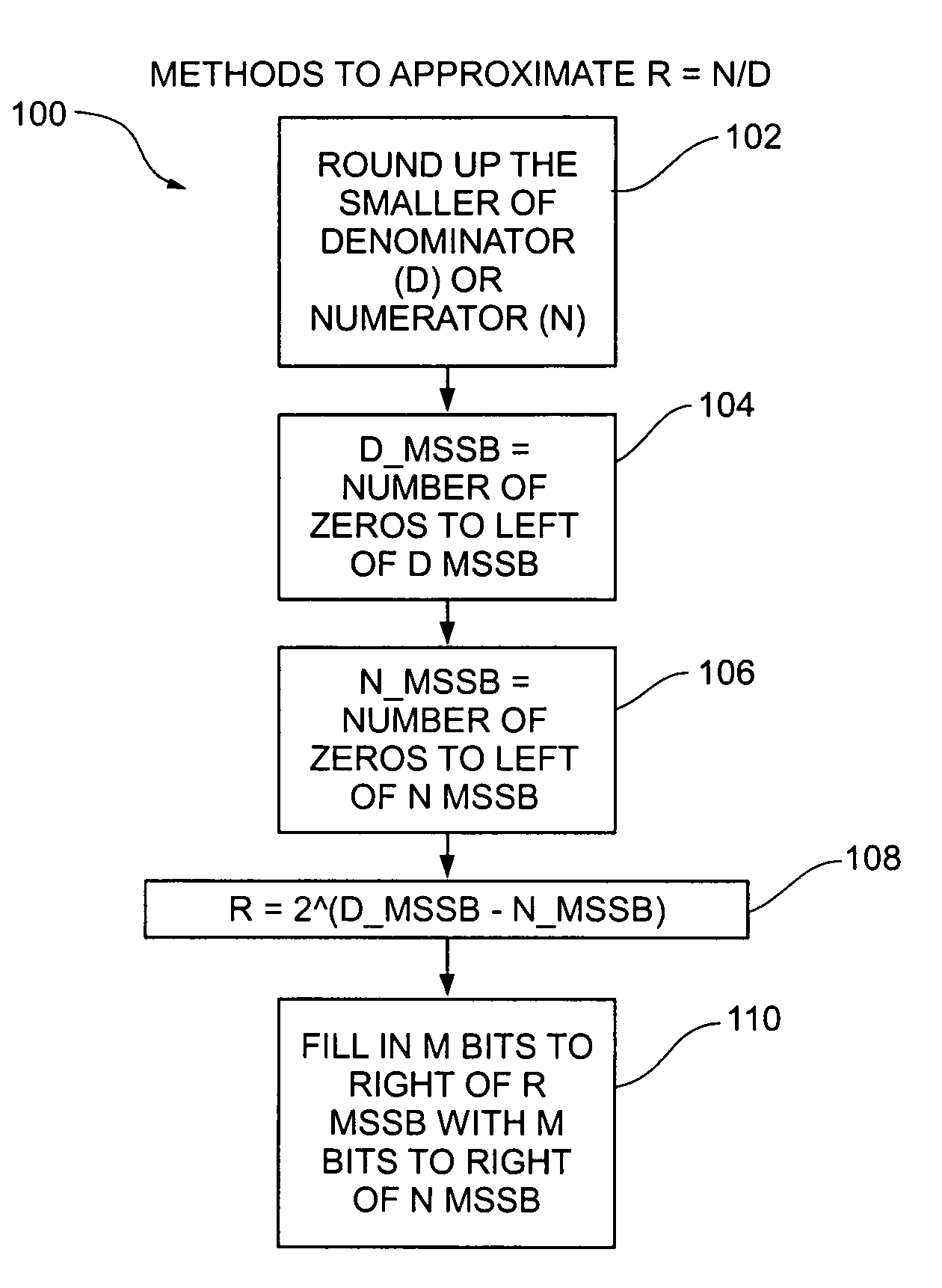

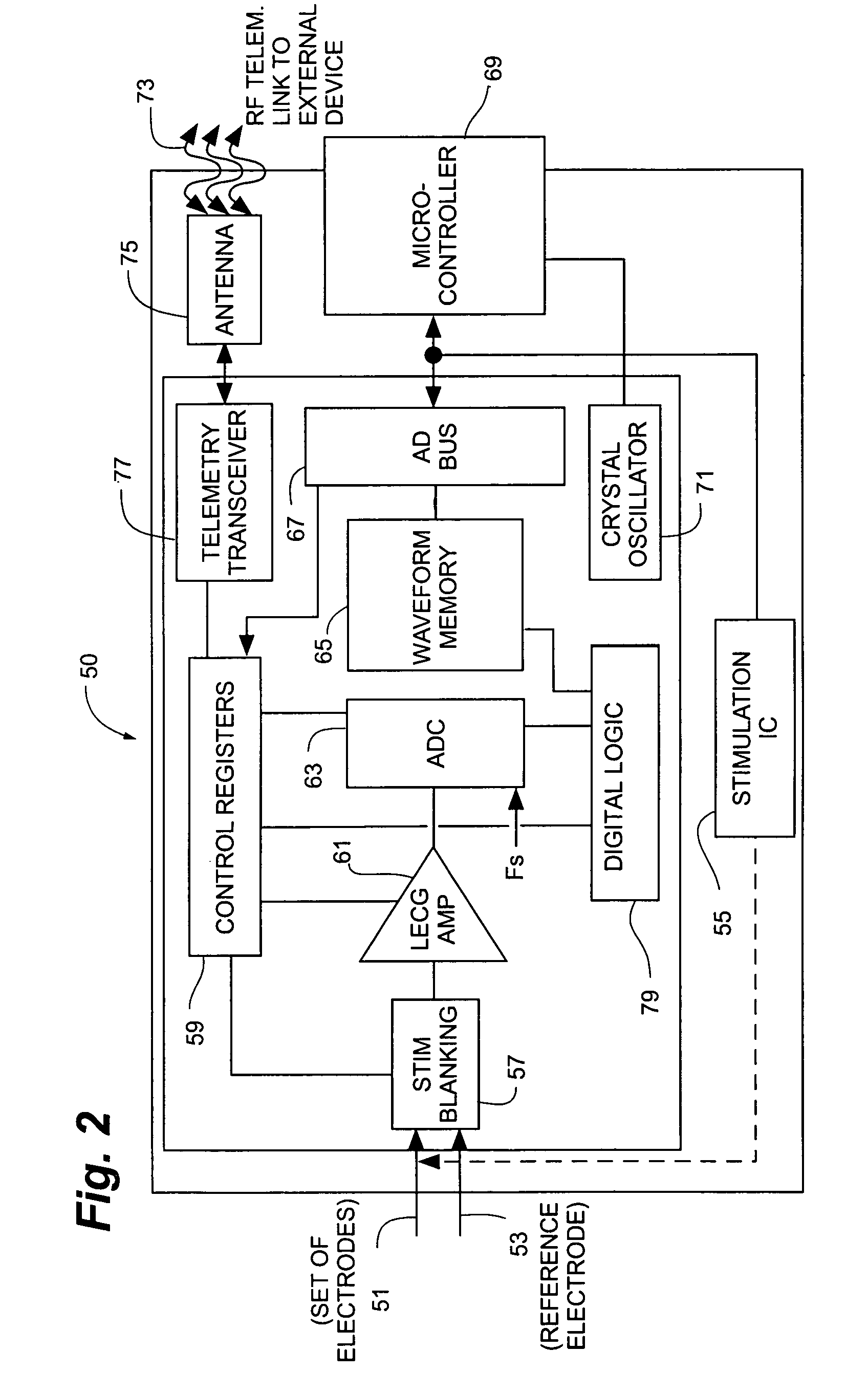

Division approximation for implantable medical devices

Methods and devices for performing division approximation in implantable and wearable self-powered medical devices. The present invention provides rapid methods for performing an approximation of division on fixed point numbers, where the methods are easily implemented in small, low power consumption devices as may be found in implantable medical devices. One example of use is in rapidly determining the approximate ratio between foreground and background activity in seizure detection algorithms. Some methods approximate the ratio of Numerator (N) to Denominator (D) by raising 2 to the power of the difference in the number of zeros to the left of the Most Significant Set Bit (MSSB) of D vs. N. Some methods may also pad bits to the right of the approximate ratio MSSB using bits from the right of the N MSSB, and / or pre-process the smaller of D or N by rounding the value upward. Methods may be implemented in firmware and / or in discrete logic.
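The leading-zero variant described above can be sketched in a few lines. This is only an illustration of the basic idea (2 raised to the difference in leading-zero counts of D and N); the 16-bit register width and the function names are assumptions, and the bit-padding and round-up refinements mentioned in the abstract are not modelled.

```python
# Sketch: approximate N / D as a power of two from leading-zero counts.
# WIDTH and all function names are illustrative assumptions.
WIDTH = 16  # assumed register width in bits


def leading_zeros(x: int, width: int = WIDTH) -> int:
    """Count zero bits to the left of the most significant set bit."""
    if not 0 < x < (1 << width):
        raise ValueError("x must be positive and fit in the register width")
    return width - x.bit_length()


def approx_ratio_exponent(n: int, d: int, width: int = WIDTH) -> int:
    """Exponent k such that N / D is roughly 2**k."""
    return leading_zeros(d, width) - leading_zeros(n, width)


def approx_ratio(n: int, d: int, width: int = WIDTH) -> float:
    """Power-of-two estimate of N / D (float used here only for display)."""
    return 2.0 ** approx_ratio_exponent(n, d, width)
```

For example, with N = 200 and D = 13 the estimate is 2**4 = 16, against a true ratio of about 15.4. In general this bare estimate lies within a factor of two of the true ratio.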

Owner:MEDTRONIC INC

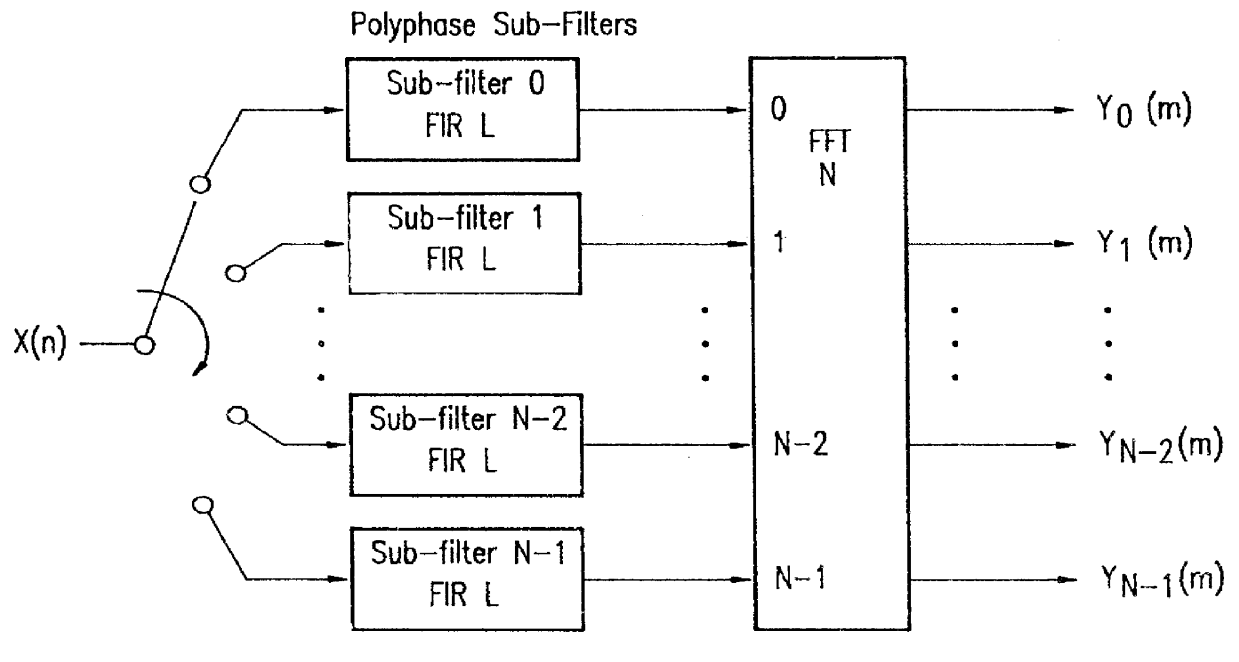

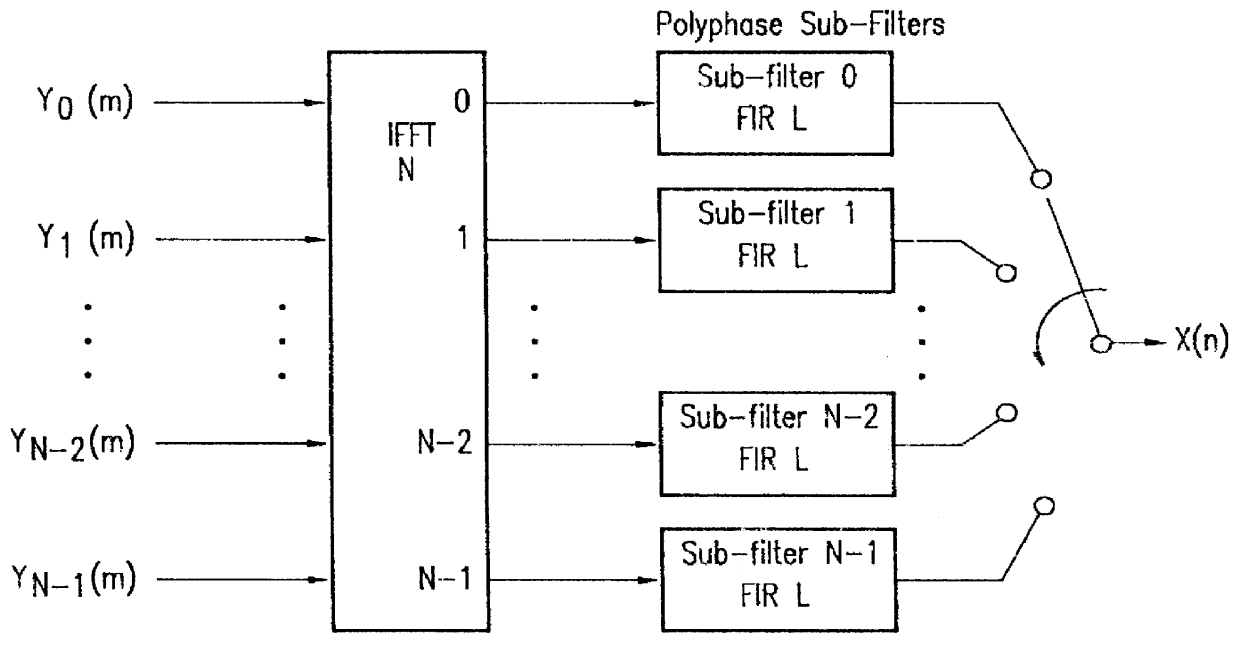

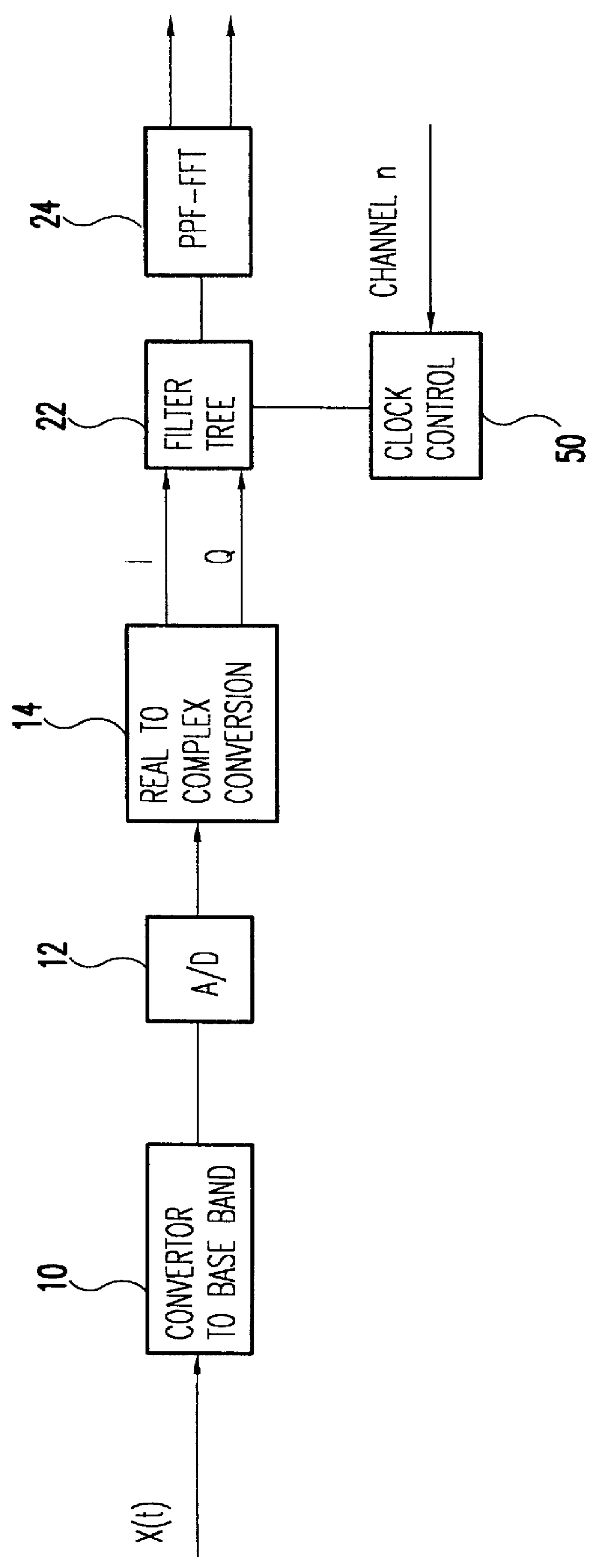

Digital multi-channel demultiplexer/multiplexer (MCD/M) architecture

Inactive · US6091704A · Reduced dimension · Facilitate efficient sub-band definition filtering · Digital technique network · Frequency-division multiplex details · Digital signal processing · Finite impulse response

A digital signal processing system for multiplexing / demultiplexing a large number of closely spaced FDM channels in which sub-band definition filtering divides the FDM spectral band comprised of "N" channels into "K" sub-bands in order to reduce the dimension of the polyphase filter fast Fourier transform structure required to complete the multiplexing / demultiplexing. This reduces the order of the required prototype filter by a factor proportional to K. The number of sub-bands K is chosen so that it is large enough to ensure the polyphase filter, fast Fourier transform structure for each sub-band is realizable within a finite word length, fixed point arithmetic implementation compatible with a low power consumption. To facilitate efficient sub-band definition filtering, the real basebanded composite signal is inputted at a spectral offset from DC equal to one quarter the FDM channel bandwidth for the N channels and the signal is sampled at a frequency 50% greater than the applicable Nyquist rate. The quarter band spectral offset and oversampling by 50% above the theoretical Nyquist rate facilitate the use of computationally efficient bandshift and symmetric half-band Finite Impulse Response (FIR) filtering.

Owner:HANGER SOLUTIONS LLC

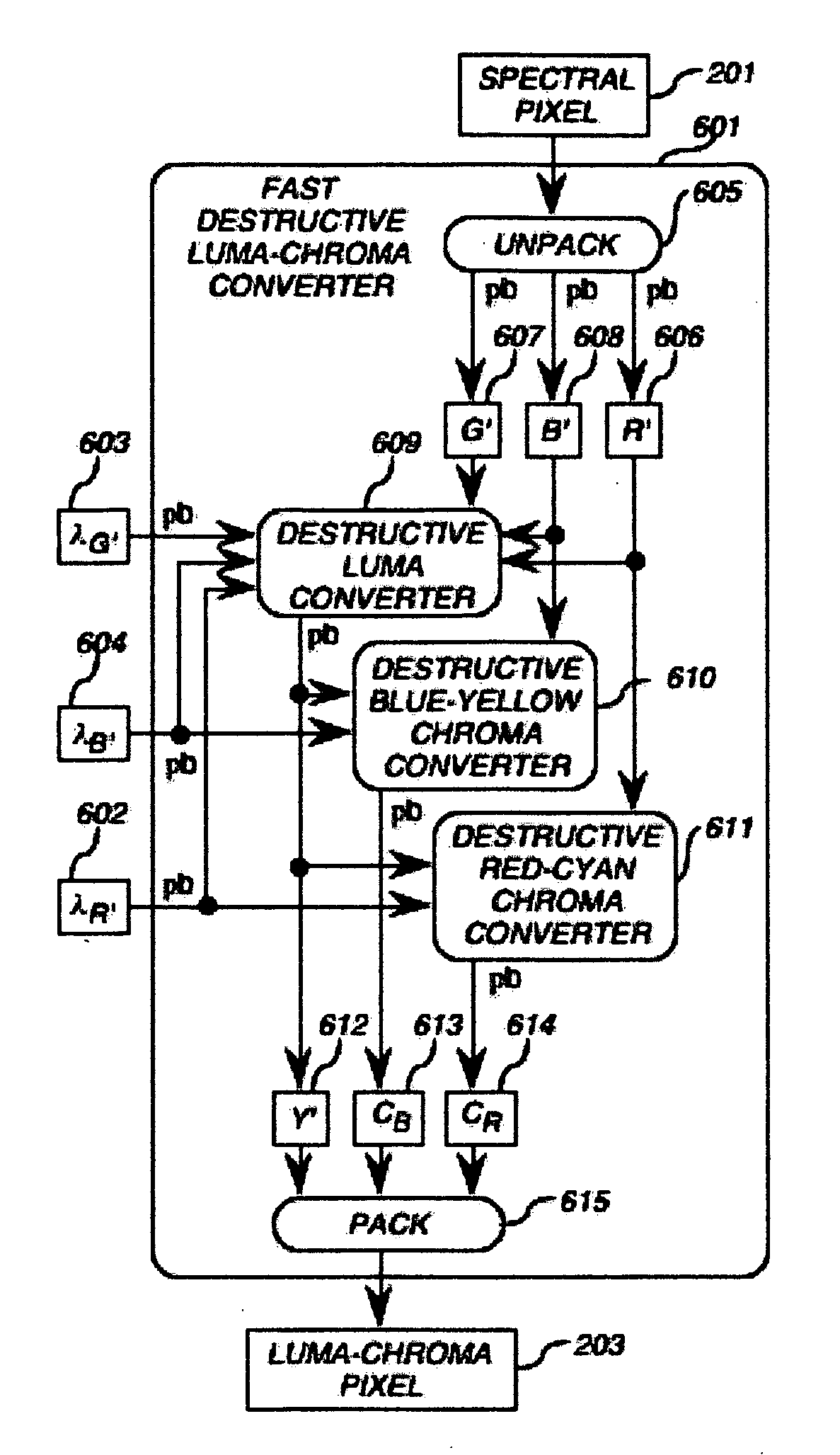

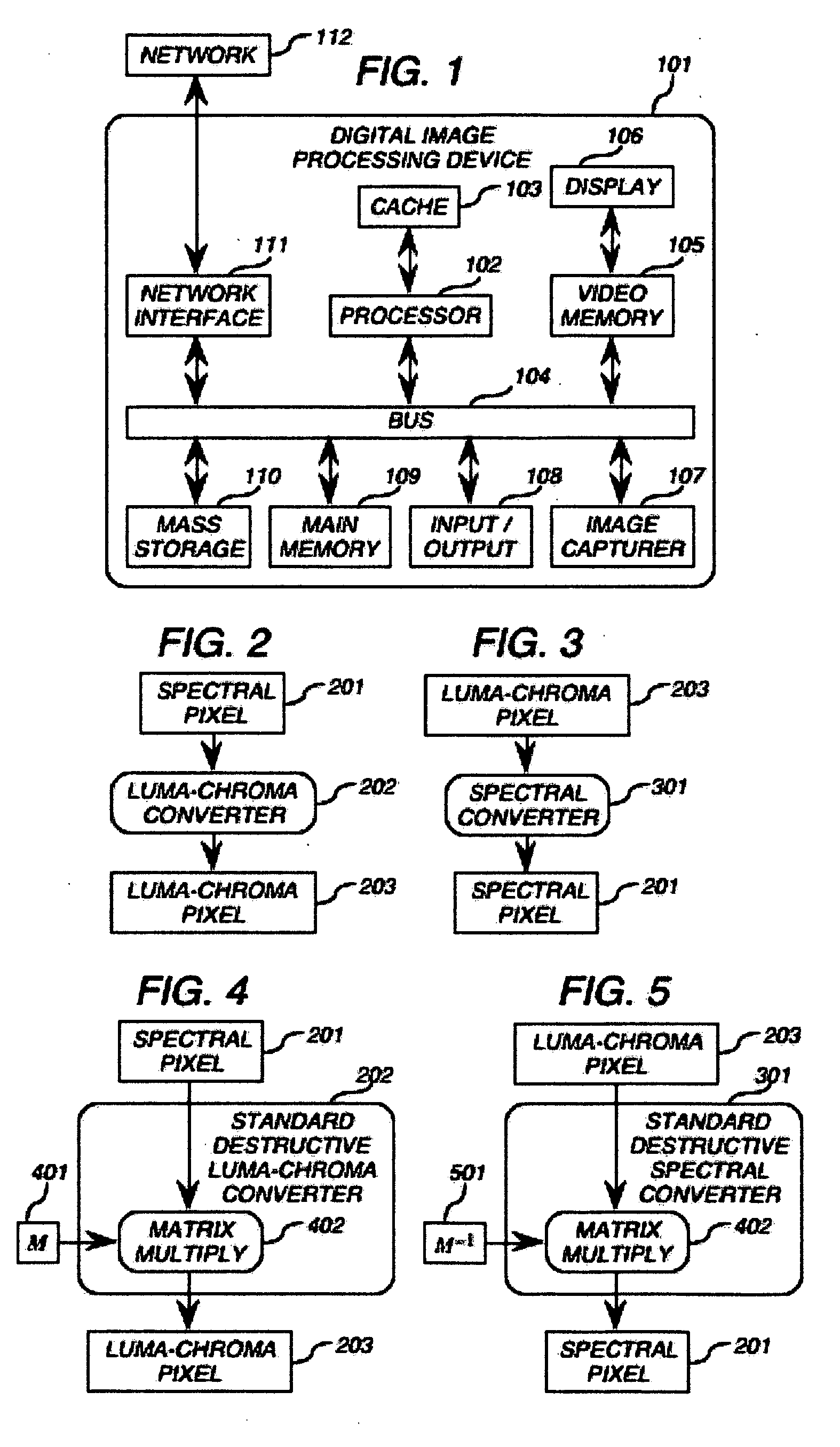

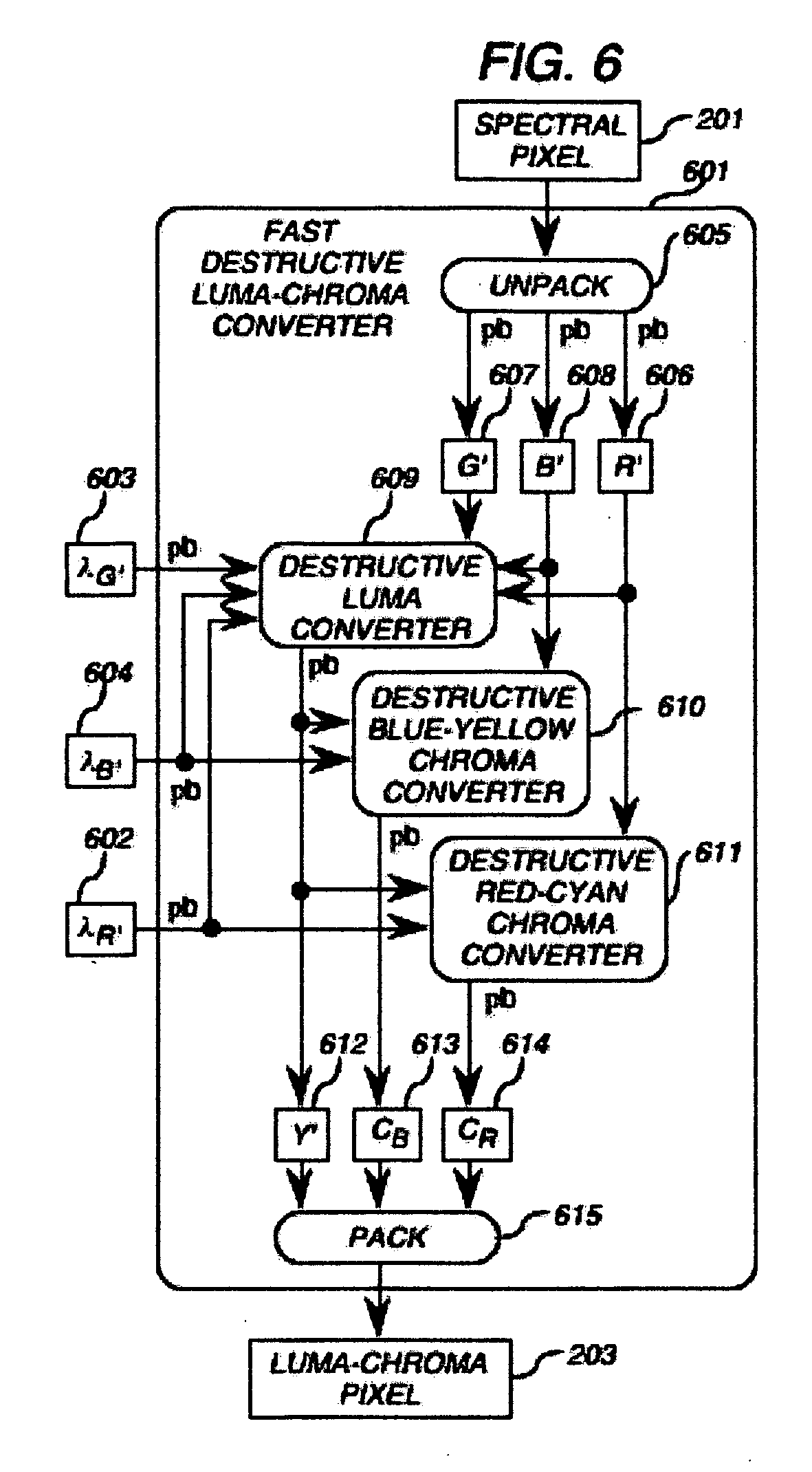

Method and apparatus for lossless and minimal-loss color conversion

Inactive · US20060274335A1 · Zero error · Guaranteed minimal error · Digitally marking record carriers · Color signal processing circuits · Digital video · Color transformation

A method and apparatus for perfectly lossless and minimal-loss interconversion of digital color data between spectral color spaces (RGB) and perceptually based luma-chroma color spaces (Y′CBCR) is disclosed. In particular, the present invention provides a process for converting digital pixels from R′G′B′ space to Y′CBCR space and back, or from Y′CBCR space to R′G′B′ space and back, with zero error, or, in constant-precision implementations, with guaranteed minimal error. This invention permits digital video editing and image editing systems to repeatedly interconvert between color spaces without accumulating errors. In image codecs, this invention can improve the quality of lossy image compressors independently of their core algorithms, and enables lossless image compressors to operate in a different color space than the source data without thereby becoming lossy. The present invention uses fixed-point arithmetic with signed and unsigned rounding normalization at key points in the process to maintain reversibility.

Owner:ANDREAS WITTENSTEIN

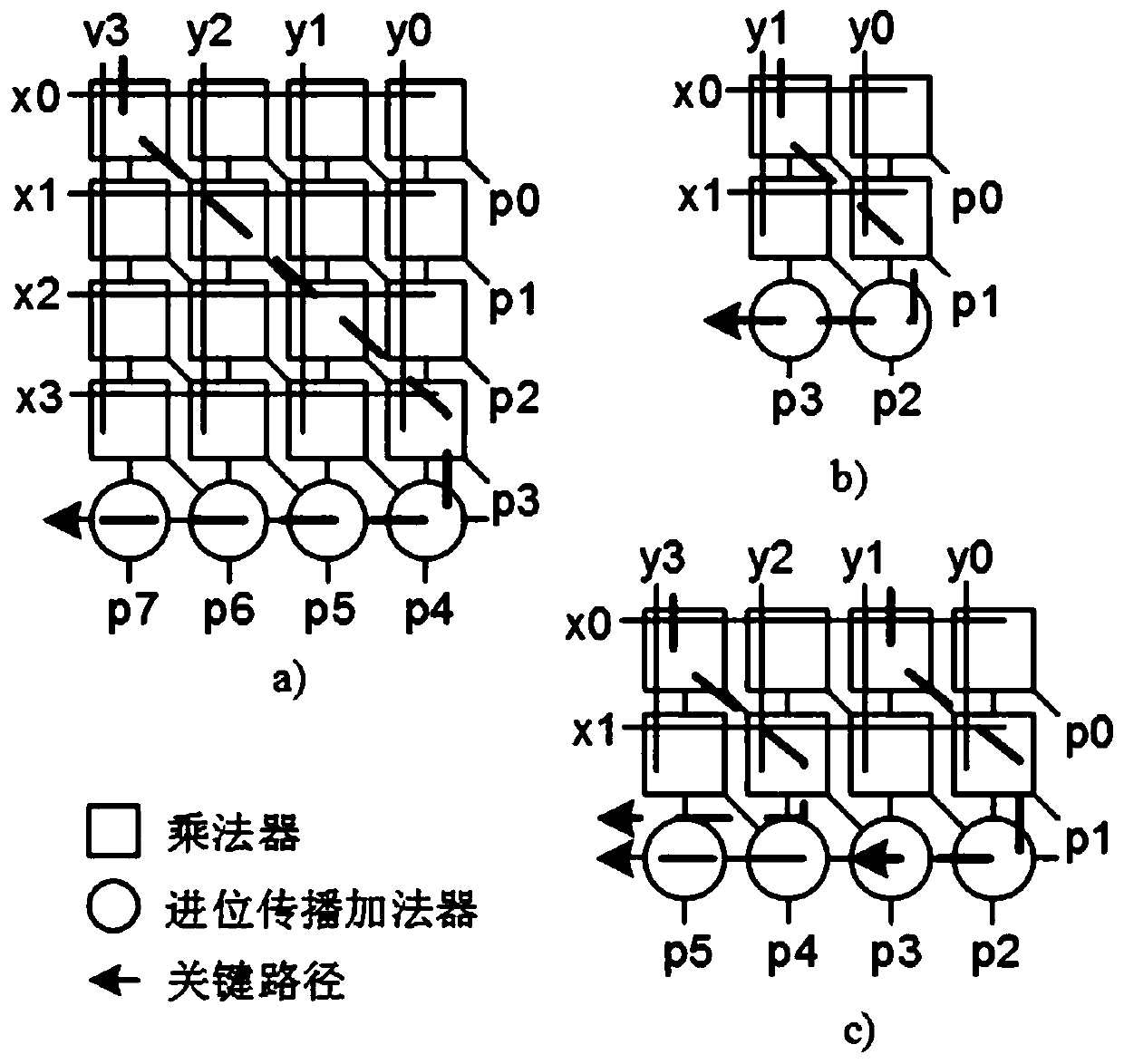

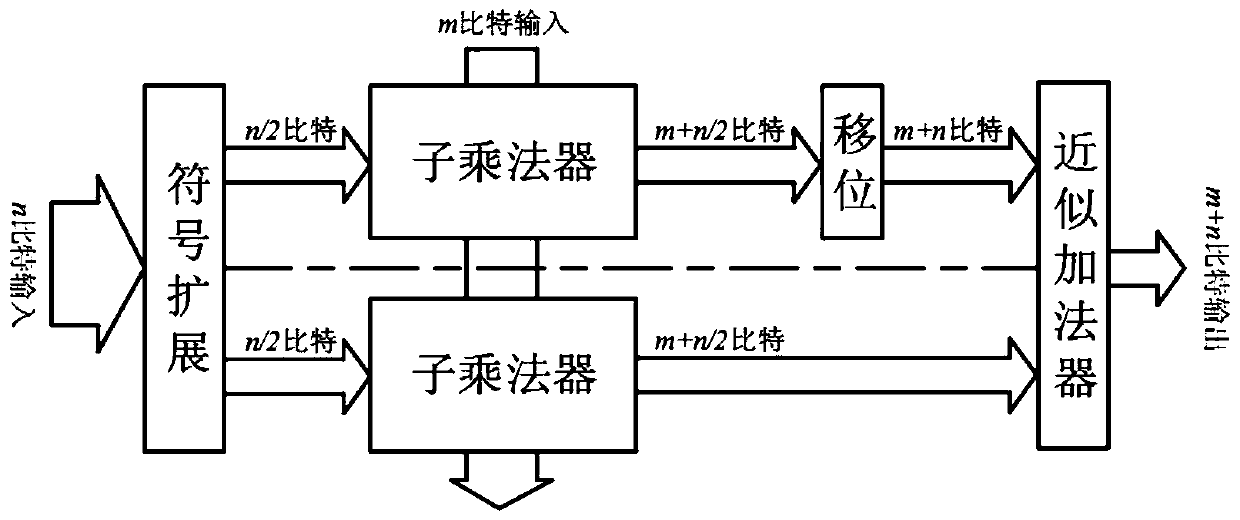

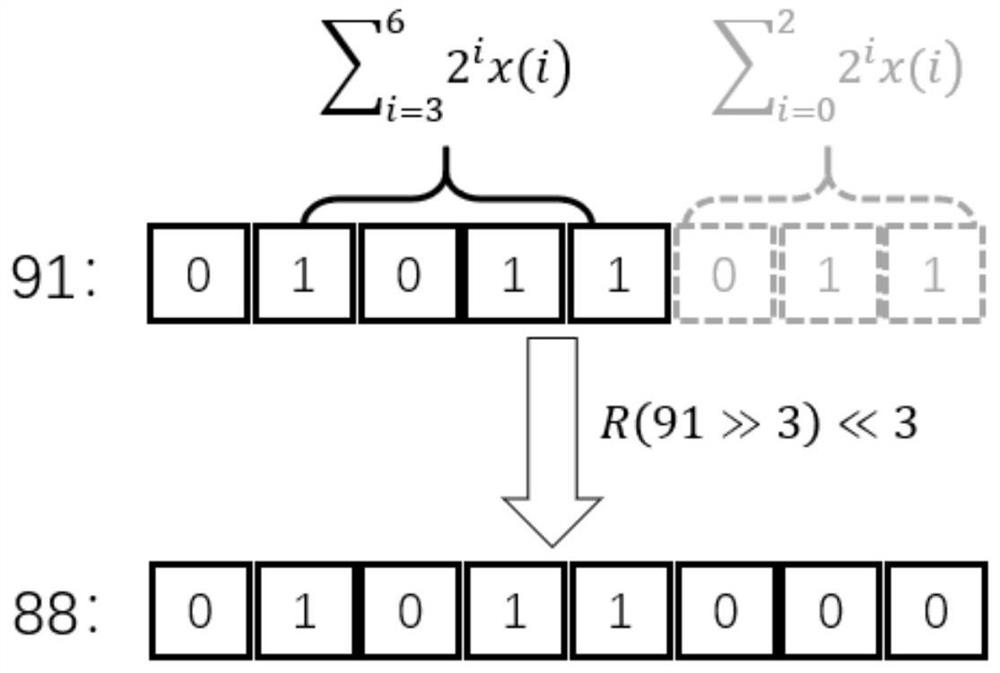

Configurable approximate multiplier for quantizing convolutional neural network and implementation method of configurable approximate multiplier

Active · CN110780845A · Improve computing efficiency · Small area overhead · Computation using non-contact making devices · Physical realisation · Binary multiplier · Theoretical computer science

The invention discloses a configurable approximate multiplier for quantizing a convolutional neural network and an implementation method of the configurable approximate multiplier. The configurable approximate multiplier comprises a sign extension module, a sub-multiplier module and an approximate adder. The sign extension module splits a long-bit-width signed fixed-point number multiplication into two short-bit-width signed fixed-point number multiplications. The sub-multiplier module comprises a plurality of sub-multipliers; each sub-multiplier receives only one signed fixed-point number output by the sign extension module and completes one signed fixed-point number multiplication in combination with the other input. The approximate adder merges the results output by the sub-multiplier modules to obtain the final result of the long-bit-width signed fixed-point number multiplication. For two signed fixed-point number multiplication operations with unequal input bit lengths, the speed and the energy efficiency are obviously improved; in a quantized convolutional neural network with a large number of multiplication operations, the advantages of the method are embodied to the greatest extent.

Owner:ZHEJIANG UNIV

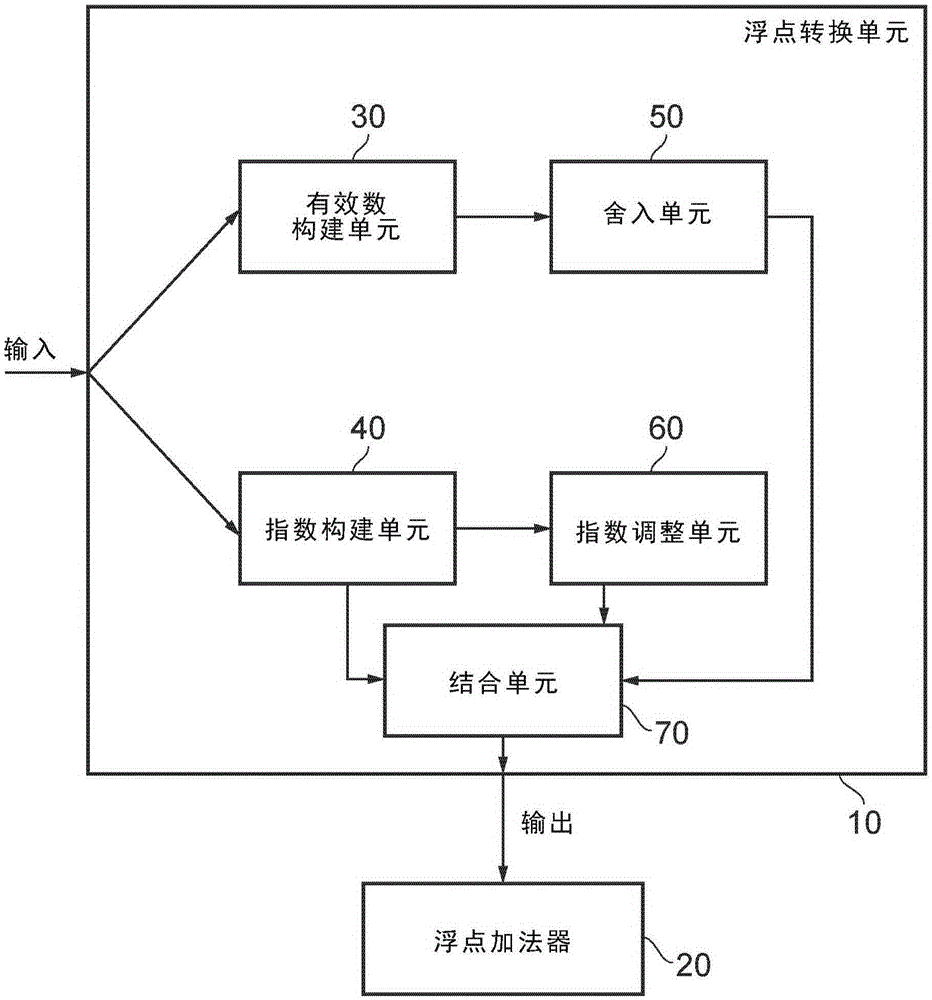

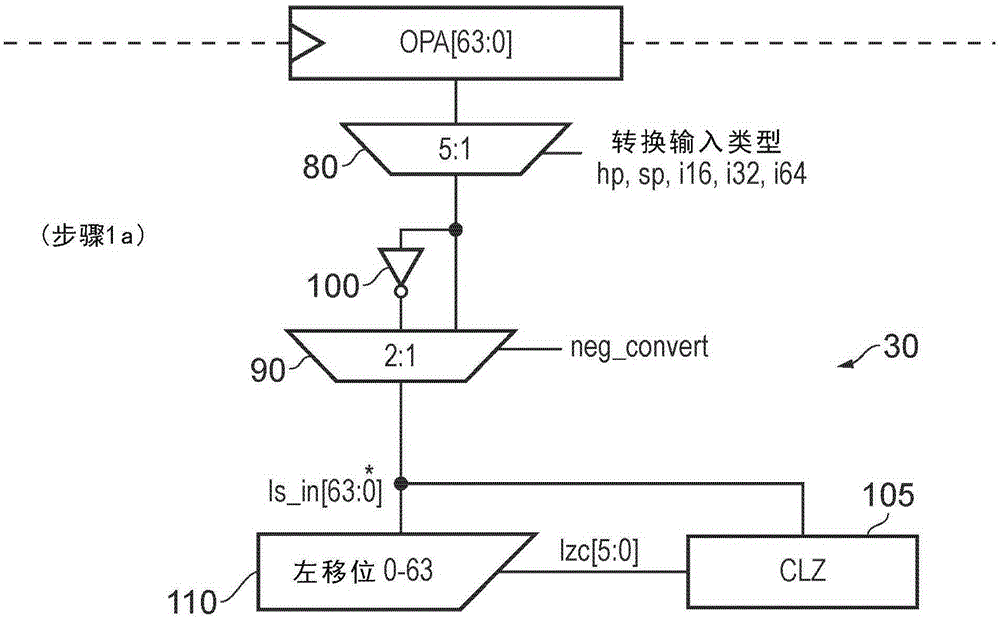

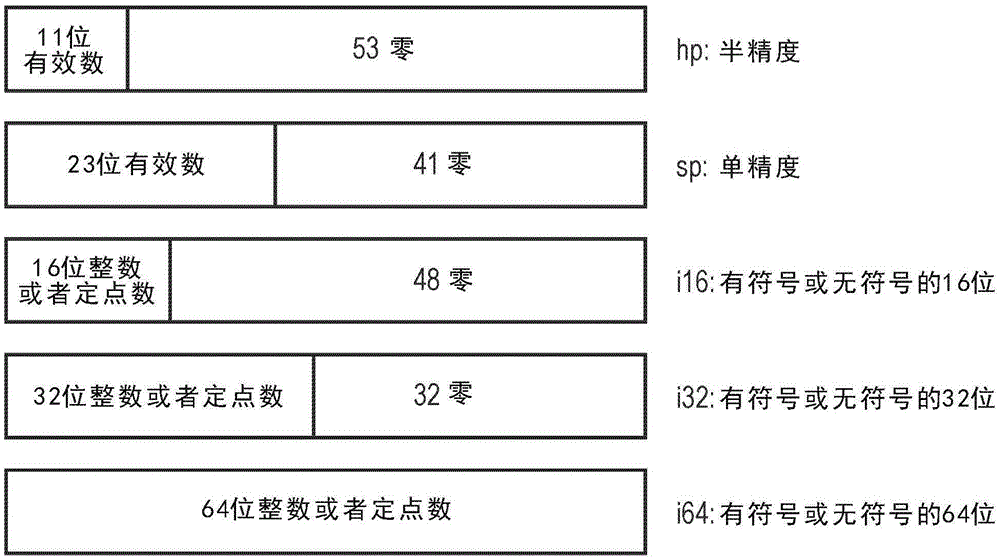

Standalone floating-point conversion unit

The invention relates to a standalone floating-point conversion unit. A data processing apparatus includes floating-point adder circuitry and floating-point conversion circuitry that generates a floating-point number as an output by performing a conversion on any input having a format from a list of formats including: an integer number, a fixed-point number, and a floating-point number having a format smaller than the output floating-point number. The floating-point conversion circuitry is physically distinct from the floating-point adder circuitry.

Owner:ARM LTD

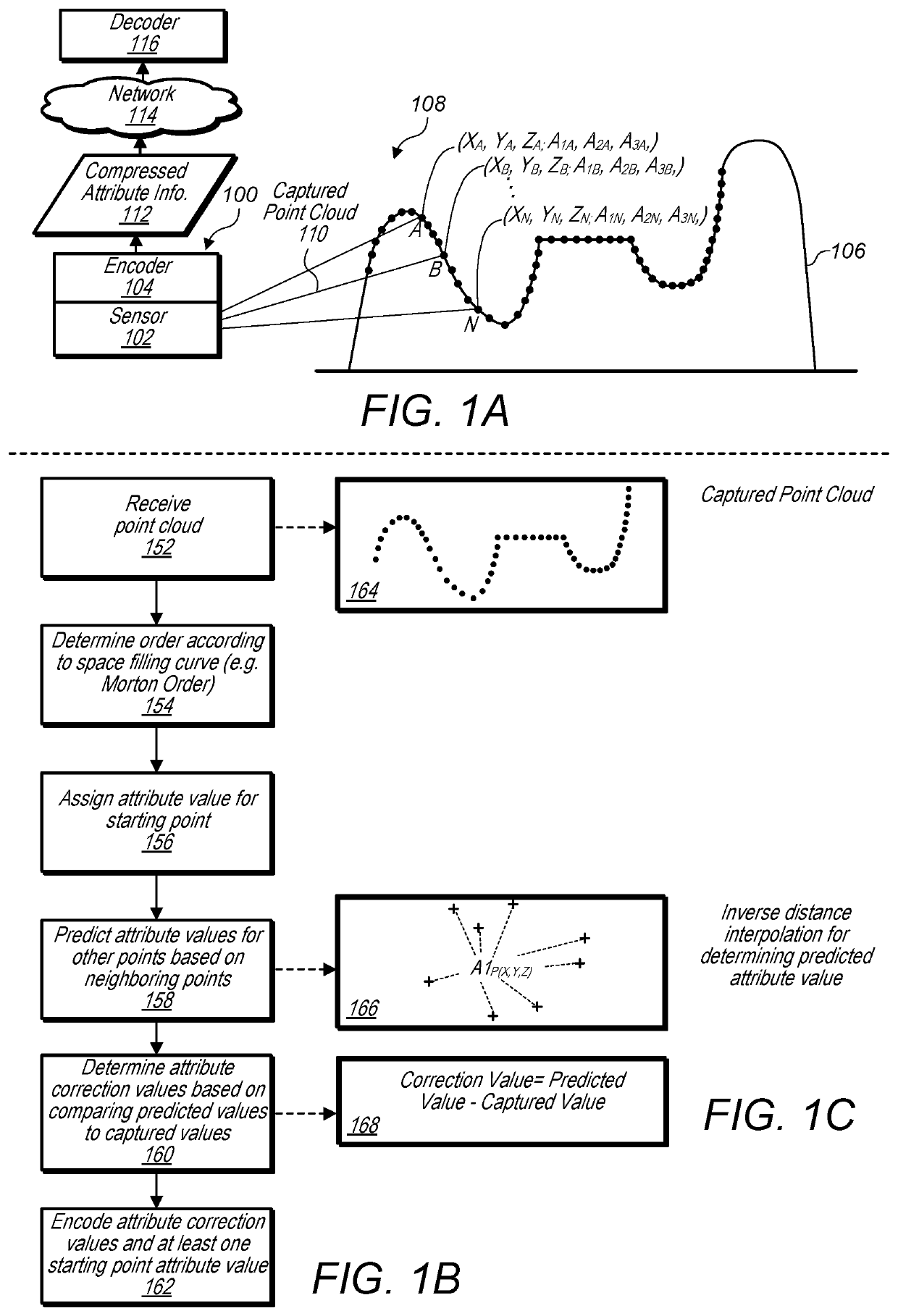

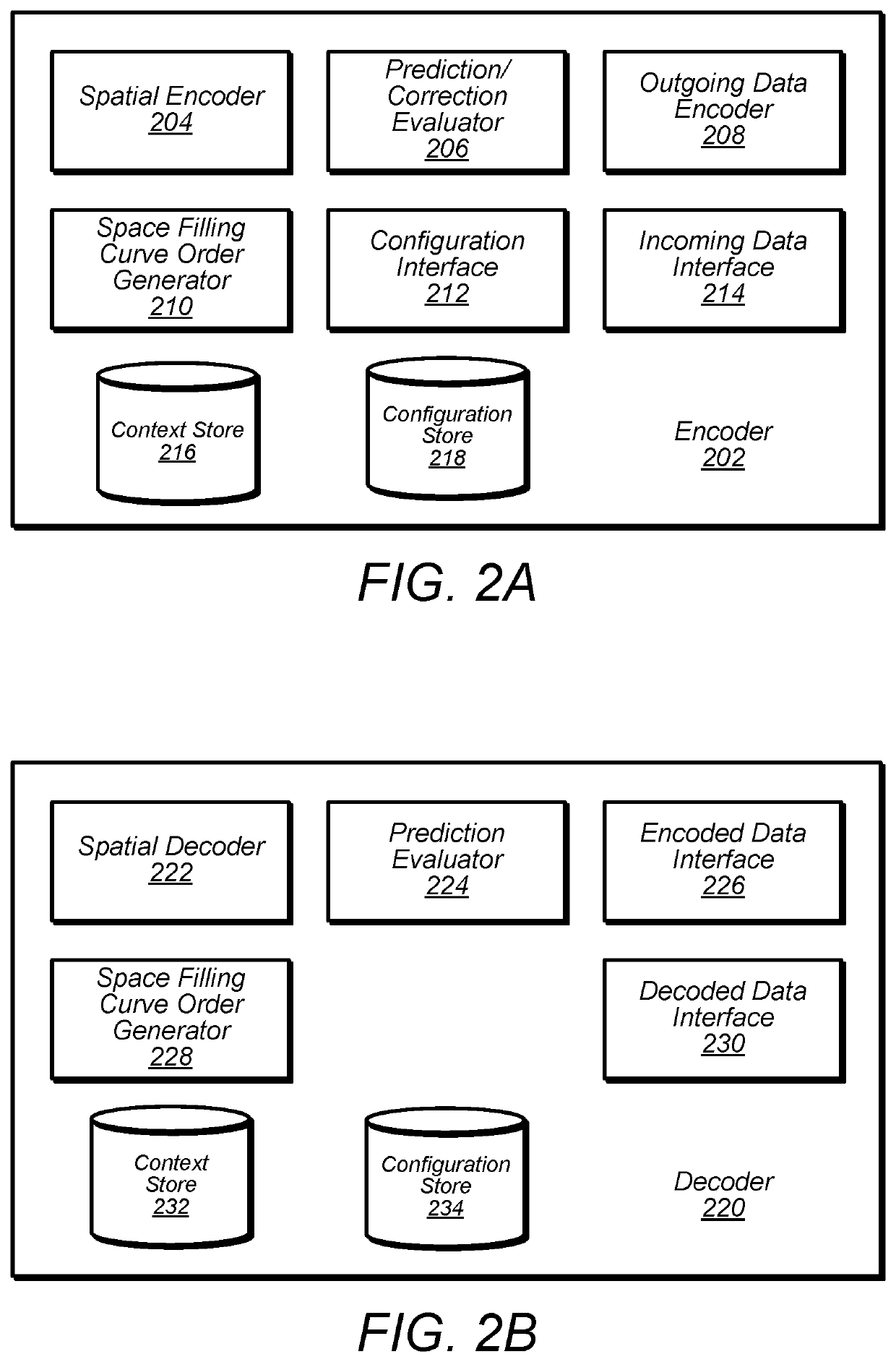

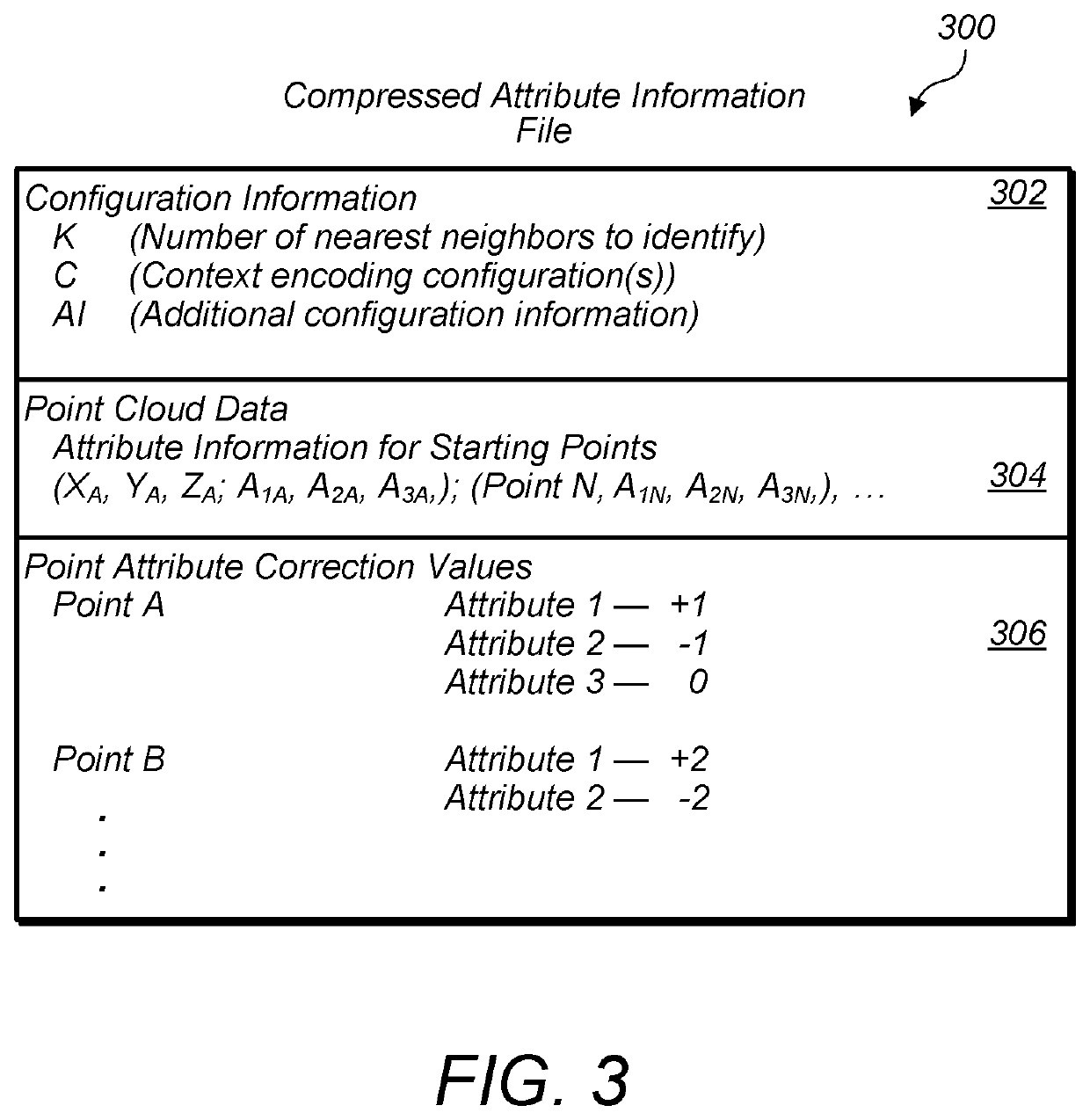

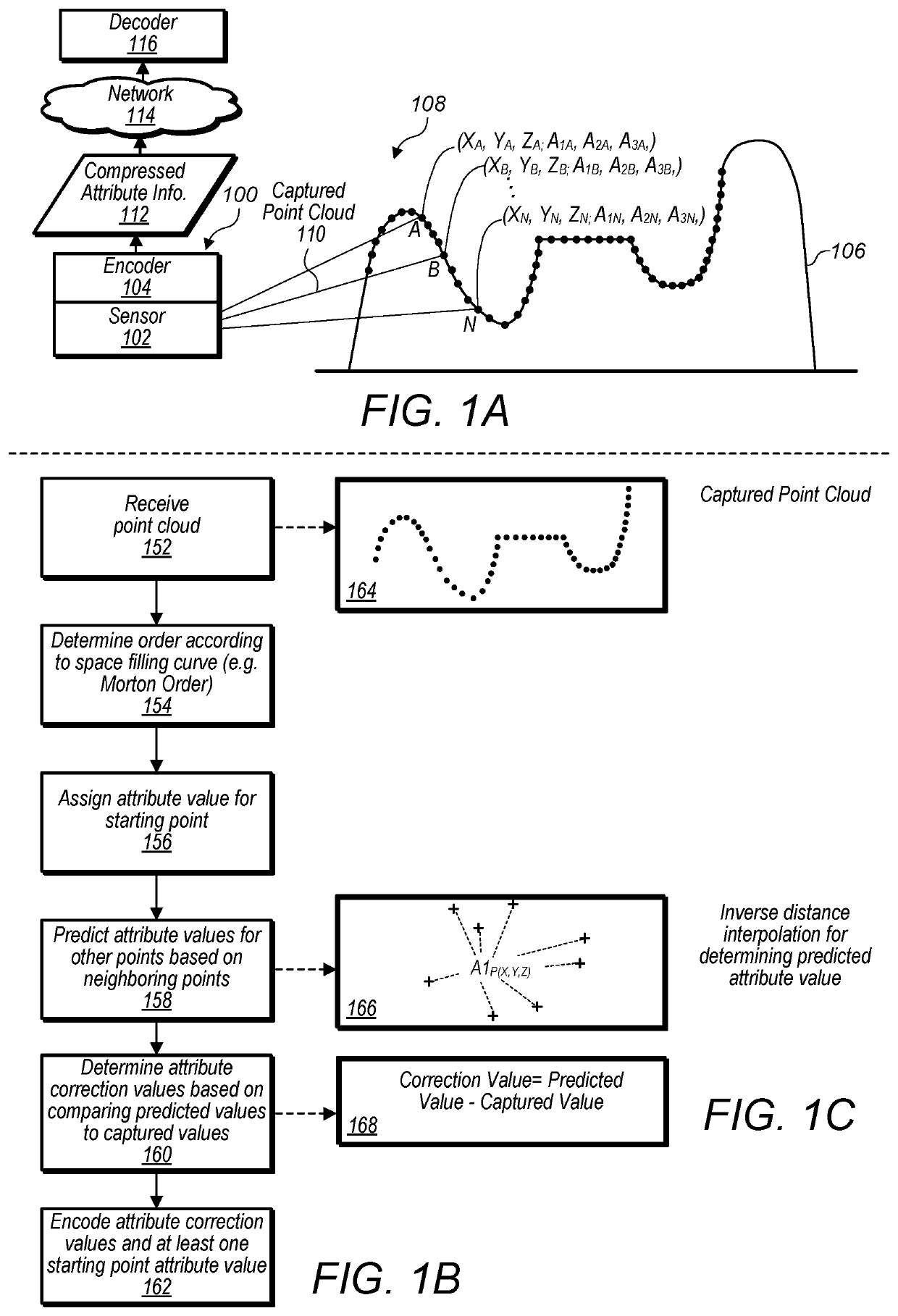

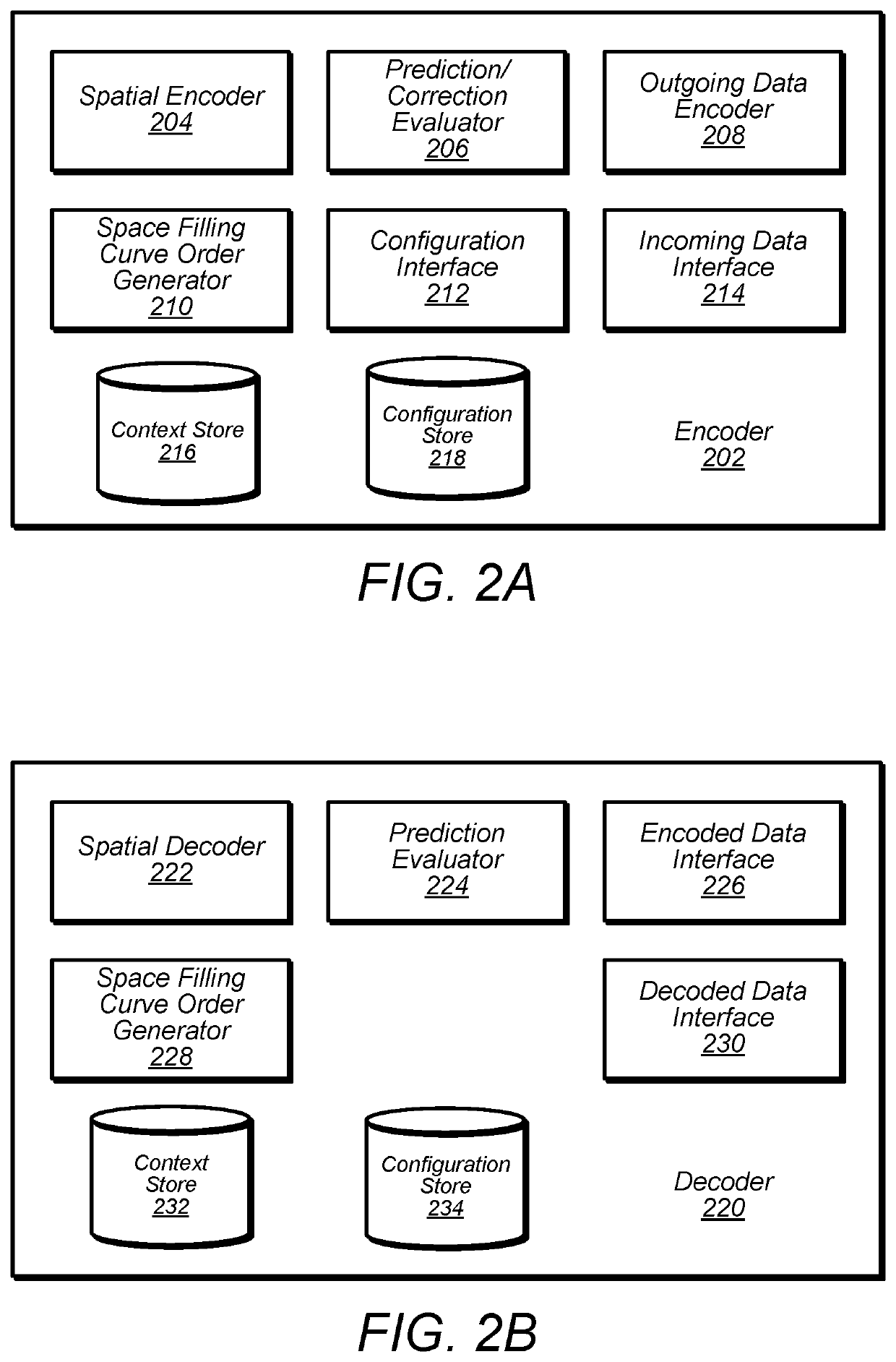

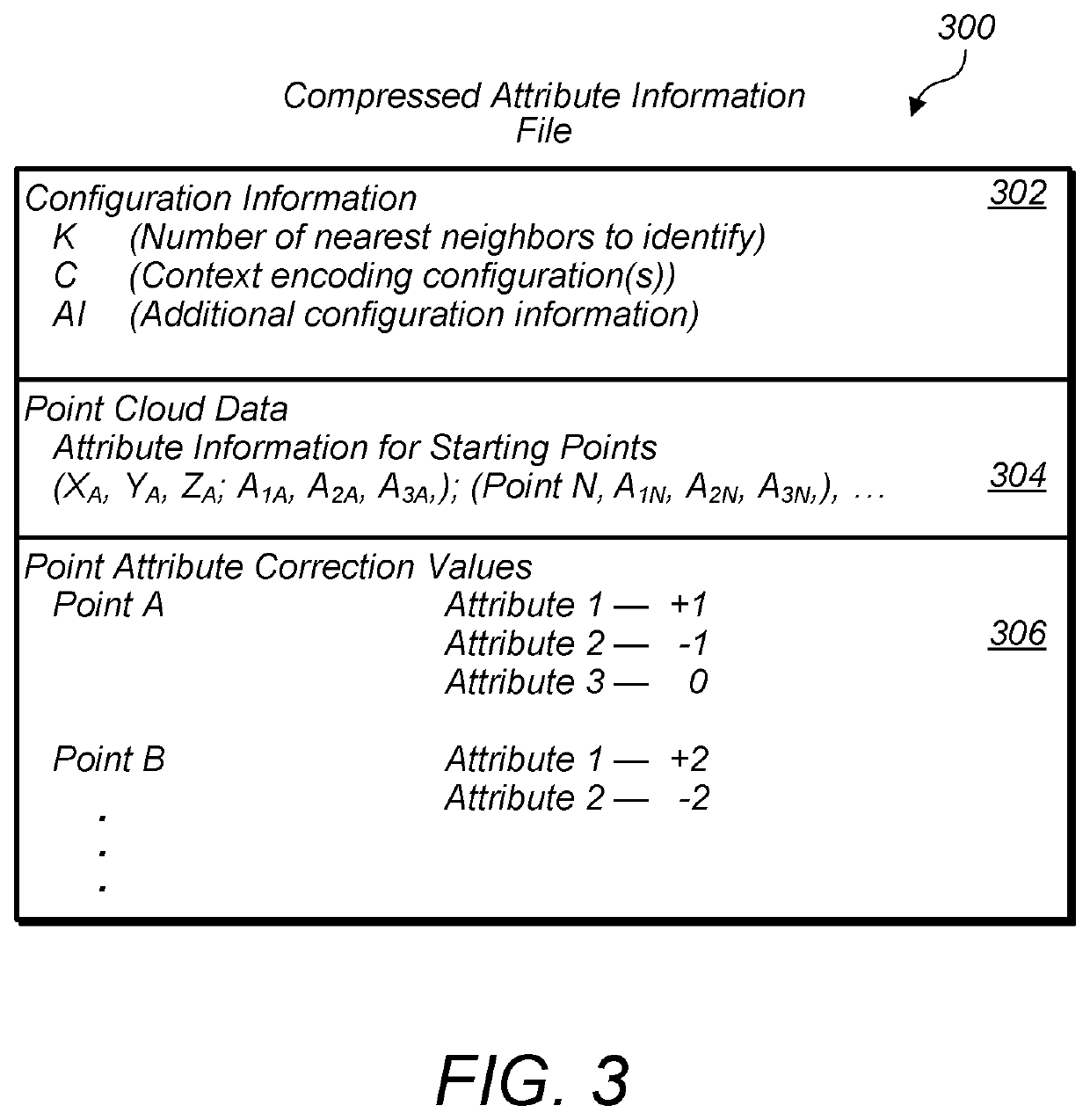

Point cloud compression using fixed-point numbers

A system comprises an encoder configured to compress attribute information for a point cloud and / or a decoder configured to decompress compressed attribute information. Attribute values for at least one starting point are included in a compressed attribute information file and attribute correction values are included in the compressed attribute information file. Attribute values are predicted based, at least in part, on attribute values of neighboring points. The predicted attribute values are compared to attribute values of a point cloud prior to compression to determine attribute correction values. In order to improve computing efficiency and / or repeatability, fixed-point number representations are used when determining predicted attribute values and attribute correction values. A decoder follows a similar prediction process as an encoder and corrects predicted values using attribute correction values included in a compressed attribute information file using fixed-point number representations.

Owner:APPLE INC

Method for improving unpredictability of output of pseudo-random number generators

Inactive · CN1668995A · Good bit mixing · Improve unpredictability · Random number generators · Chaos models · Data set · Theoretical computer science

A method for performing computations in a mathematical system which exhibits a positive Lyapunov exponent, or exhibits chaotic behavior, comprises varying a parameter of the system. When employed in cryptography, such as, e.g., in a pseudo-random number generator of a stream-cipher algorithm, in a block-cipher system or a HASH / MAC system, unpredictability may be improved. In a similar system, a computational method comprises multiplying two numbers and manipulating at least one of the most significant bits of the number resulting from the multiplication to produce an output. A number derived from a division of two numbers may be used for deriving an output. In a system for generating a sequence of numbers, an array of counters is updated at each computational step, whereby a carry value is added to each counter. Fixed-point arithmetic may be employed. A method of determining an identification value and for concurrently encrypting and / or decrypting a set of data is disclosed.

Owner:CRYPTICO (克瑞迪科公司)

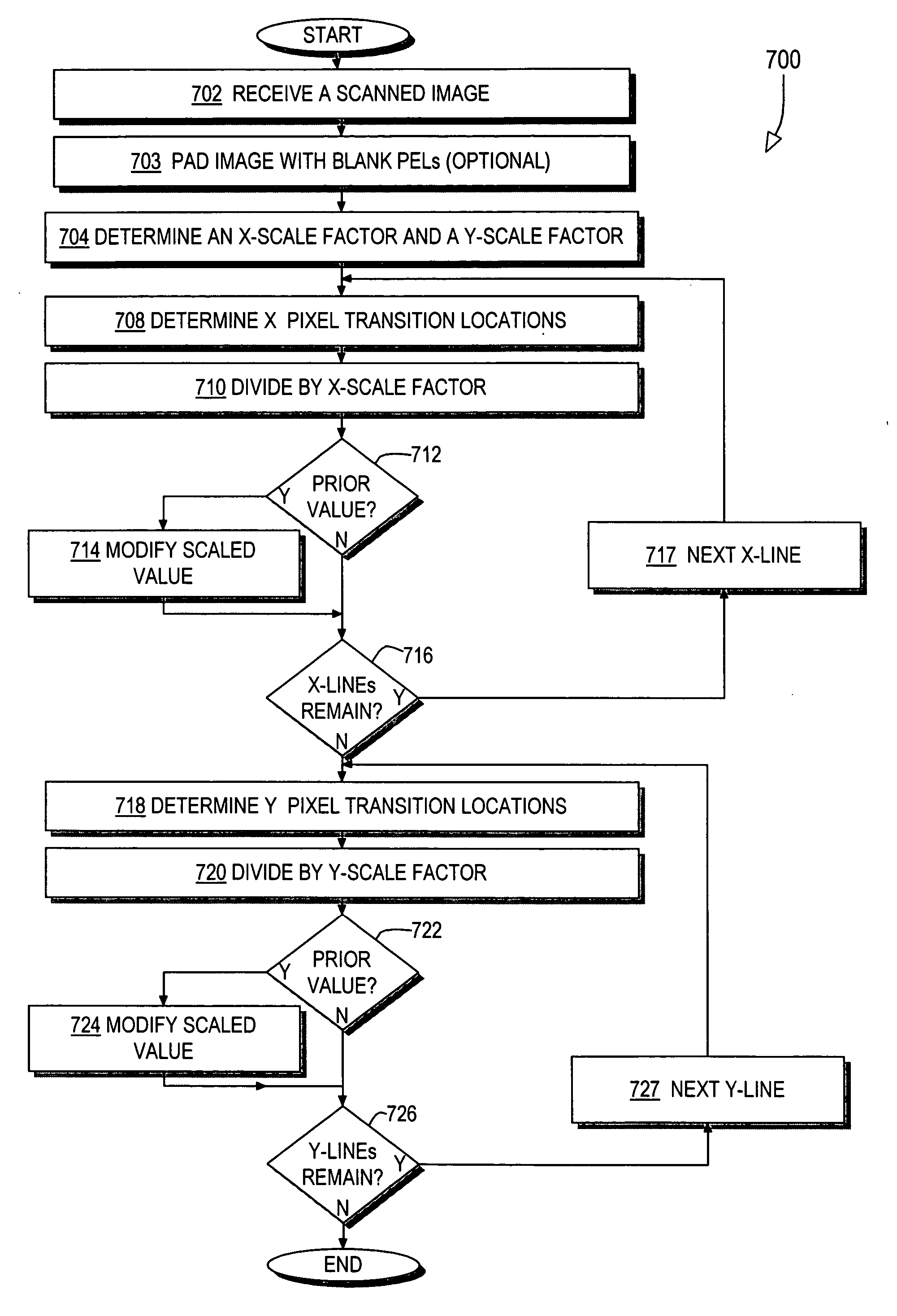

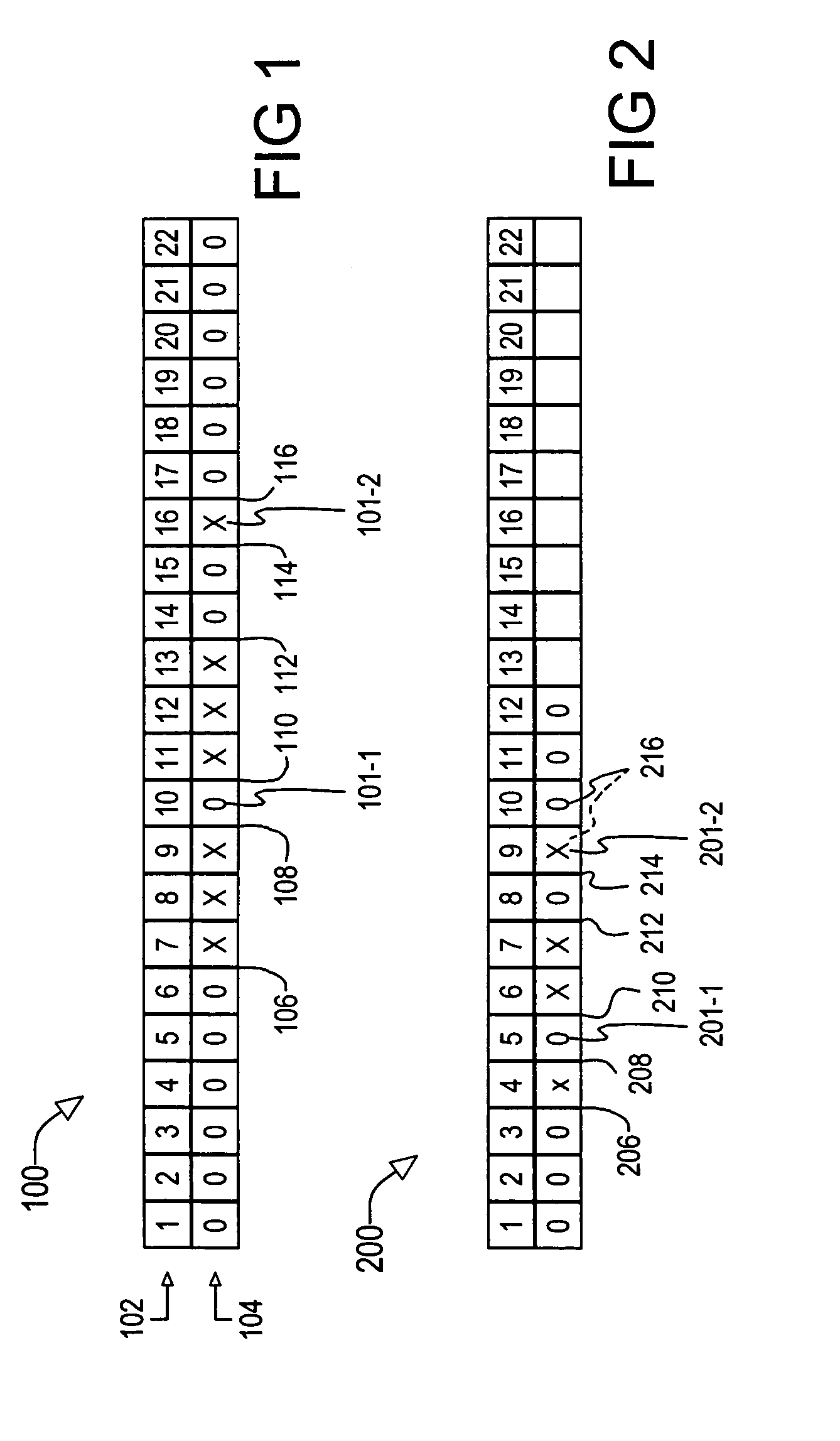

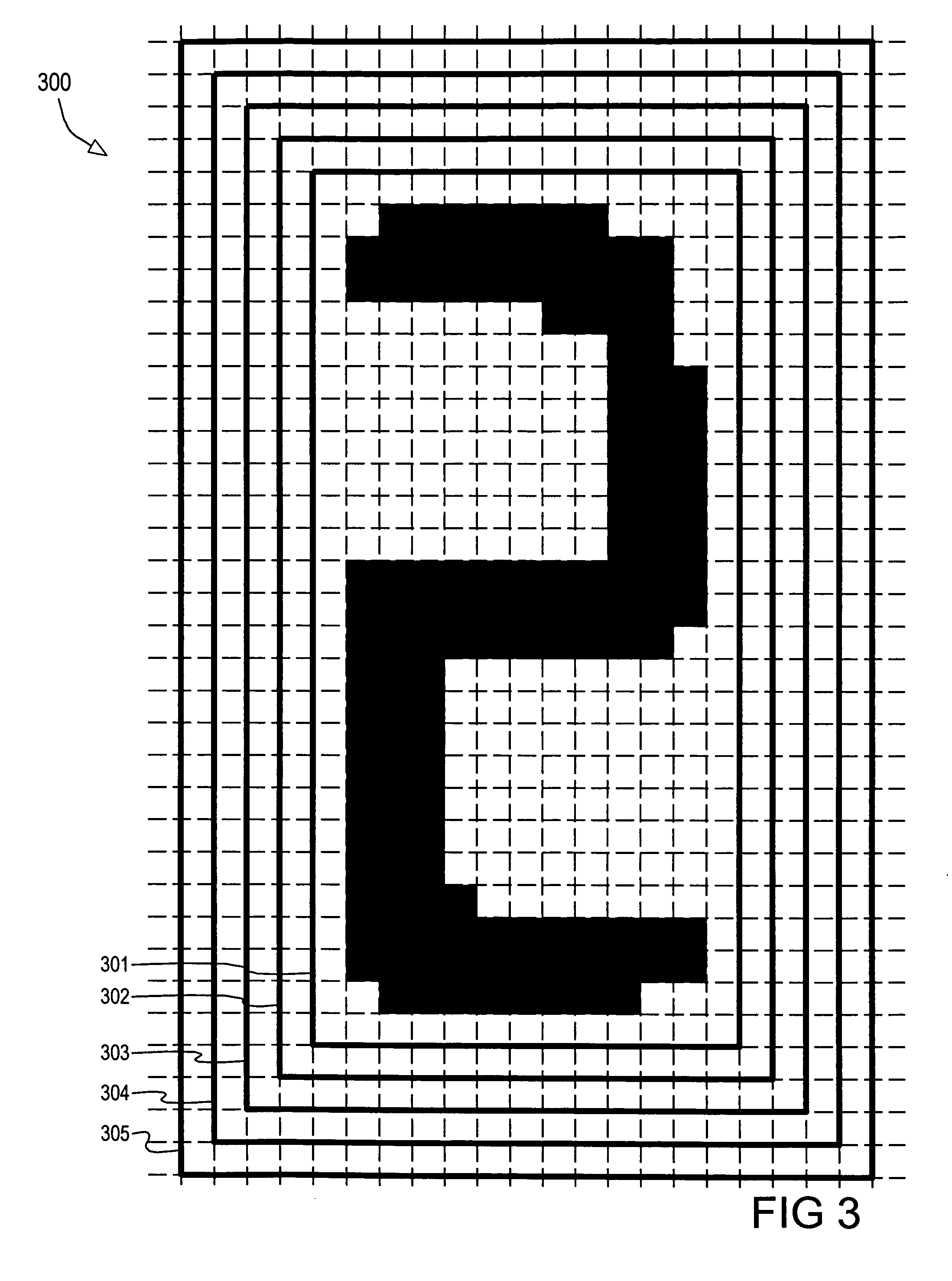

Black white image scaling for optical character recognition

Inactive · US20050196071A1 · Improve readability · Increase probability · Character recognition · Image scale · Fixed-point arithmetic

A system and method for capturing and scaling images includes a scaling engine with the ability to employ a first scaling factor in a first direction and a second scaling factor in a second direction. In addition, the preferred scaling engine manipulates the scaling process so that the scaling calculations are performed using fixed point arithmetic. The preferred scaling engine preserves isolated features such as a single white pixel in a field of black pixels and vice versa. Improved readability is achieved in one embodiment by performing the scaling process multiple times using different degrees of “padding” where padding refers to the technique of surrounding an image with a perimeter of one or more blank (white) pixel elements.
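To make the idea of fixed-point scaling with independent horizontal and vertical factors concrete, here is a minimal nearest-neighbour sketch. It is not the patented engine: it does not preserve isolated pixels or apply padding, and the 16 fractional bits and the names `scaled_indices` and `scale_image` are assumptions for illustration.

```python
# Sketch: nearest-neighbour image scaling using only integer arithmetic.
# FRAC_BITS and both function names are illustrative assumptions.
FRAC_BITS = 16


def scaled_indices(src_len: int, dst_len: int) -> list:
    """Map each destination index to a source index with a fixed-point step."""
    if src_len <= 0 or dst_len <= 0:
        raise ValueError("lengths must be positive")
    # Source pixels advanced per destination pixel, held as a scaled integer.
    step = (src_len << FRAC_BITS) // dst_len
    return [(i * step) >> FRAC_BITS for i in range(dst_len)]


def scale_image(img: list, dst_w: int, dst_h: int) -> list:
    """Scale a list-of-rows image; width and height factors are independent."""
    rows = scaled_indices(len(img), dst_h)
    cols = scaled_indices(len(img[0]), dst_w)
    return [[img[r][c] for c in cols] for r in rows]
```

Because the step is rounded down, the computed source index never runs past the last source pixel, so no bounds clamp is needed in this sketch.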

Owner:TOSHIBA GLOBAL COMMERCE SOLUTIONS HLDG

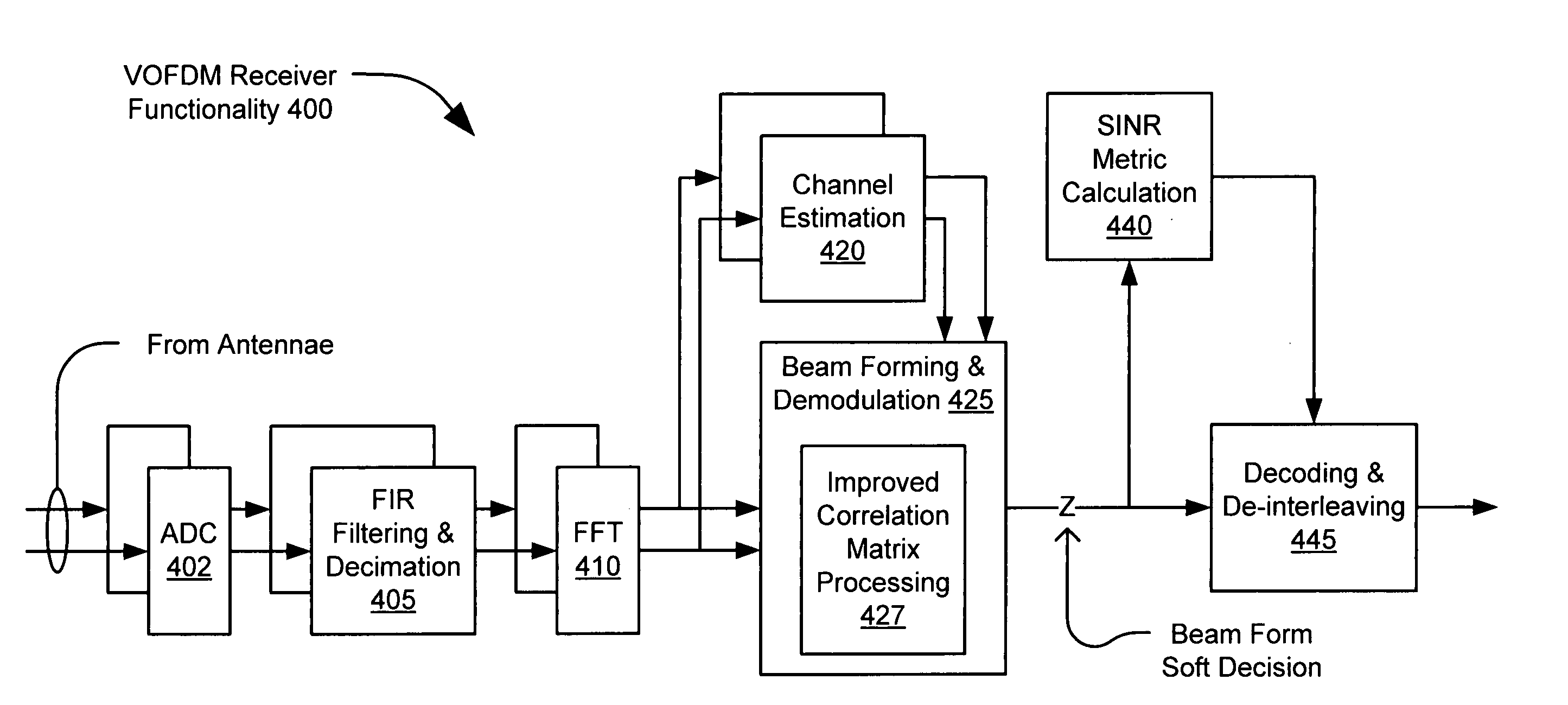

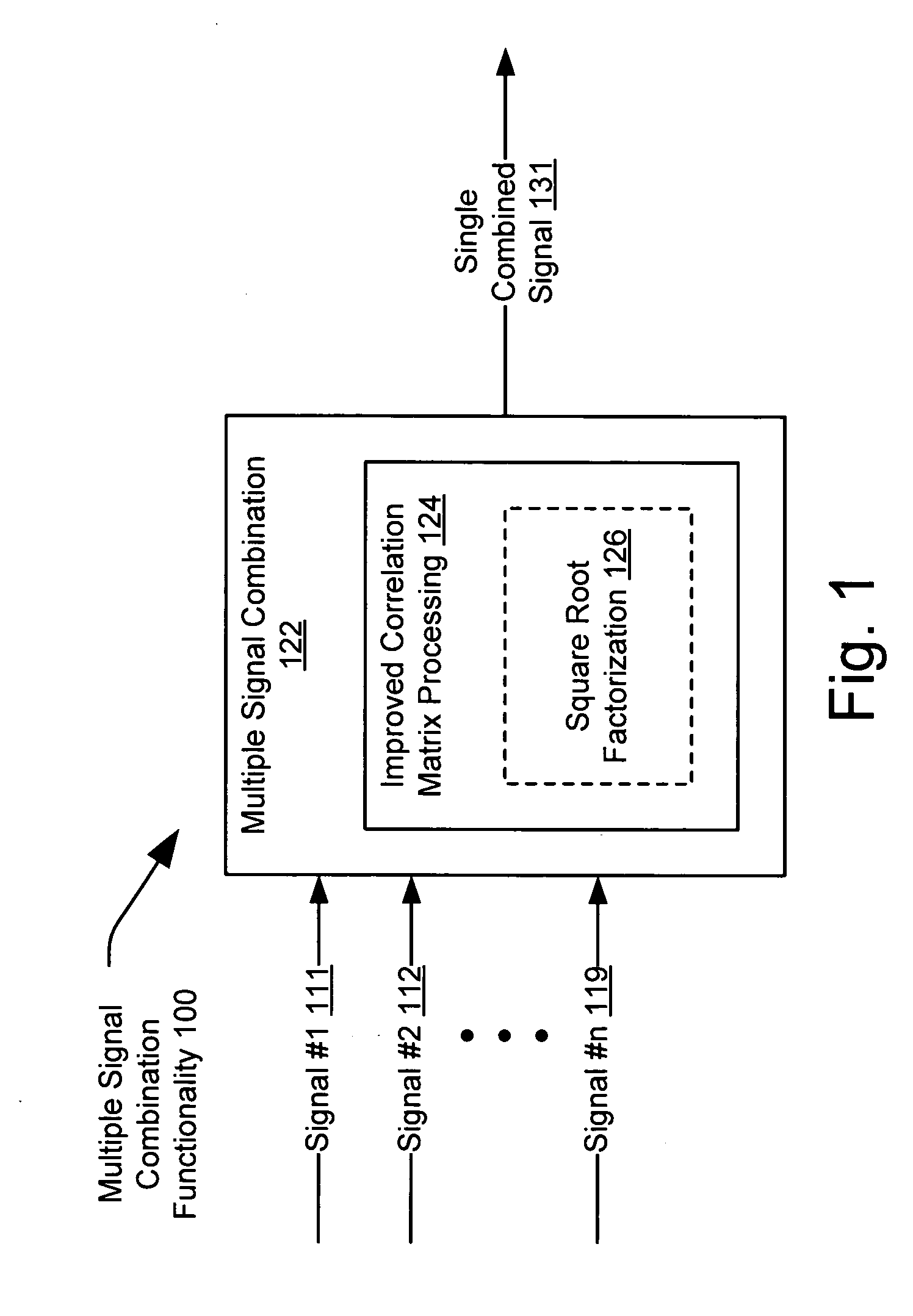

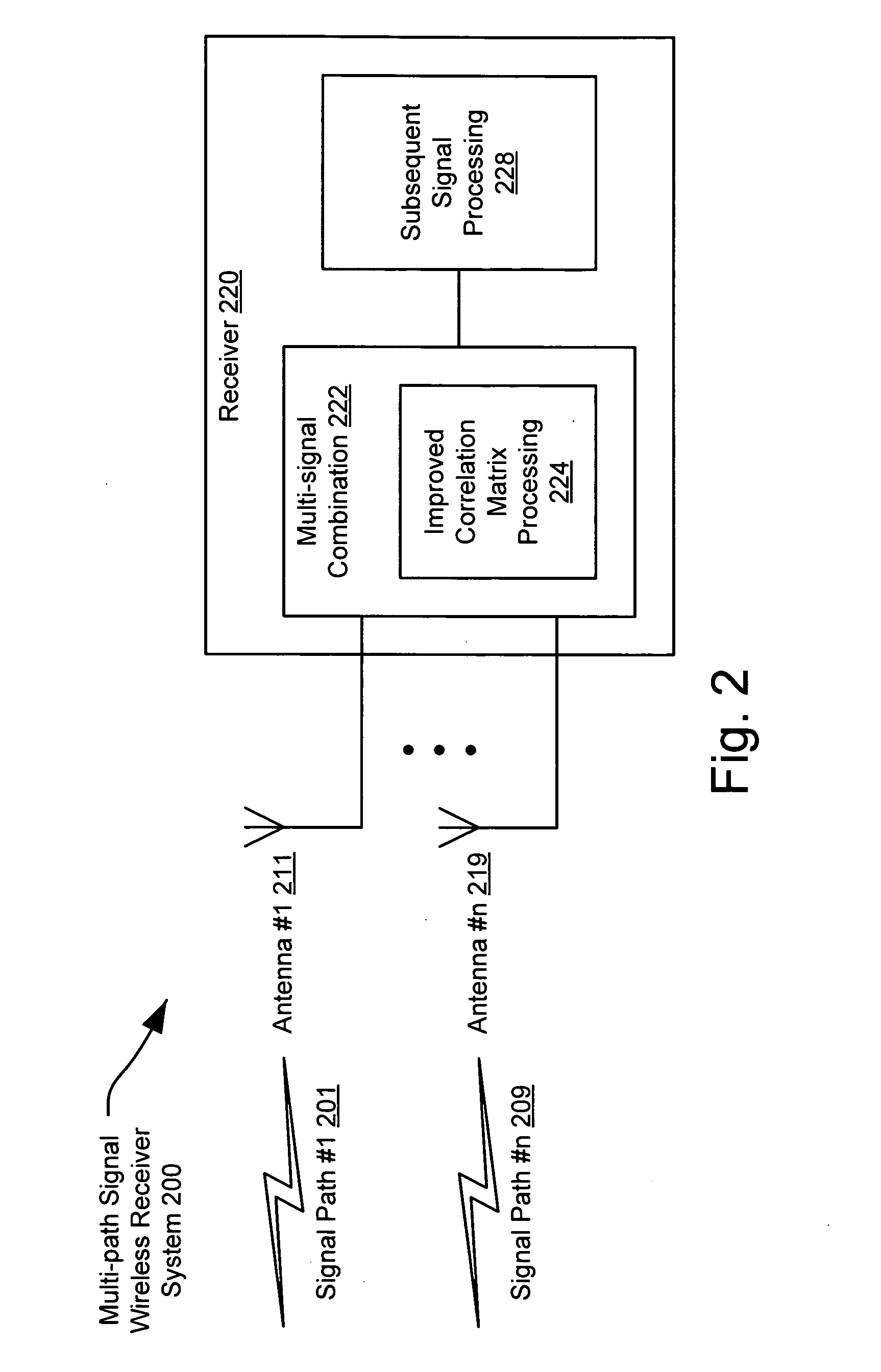

VOFDM receiver correlation matrix processing using factorization

Inactive · US20060008018A1 · Spatial transmit diversity · Polarisation/directional diversity · Algorithm · Intermediate variable

Owner:AVAGO TECH INT SALES PTE LTD

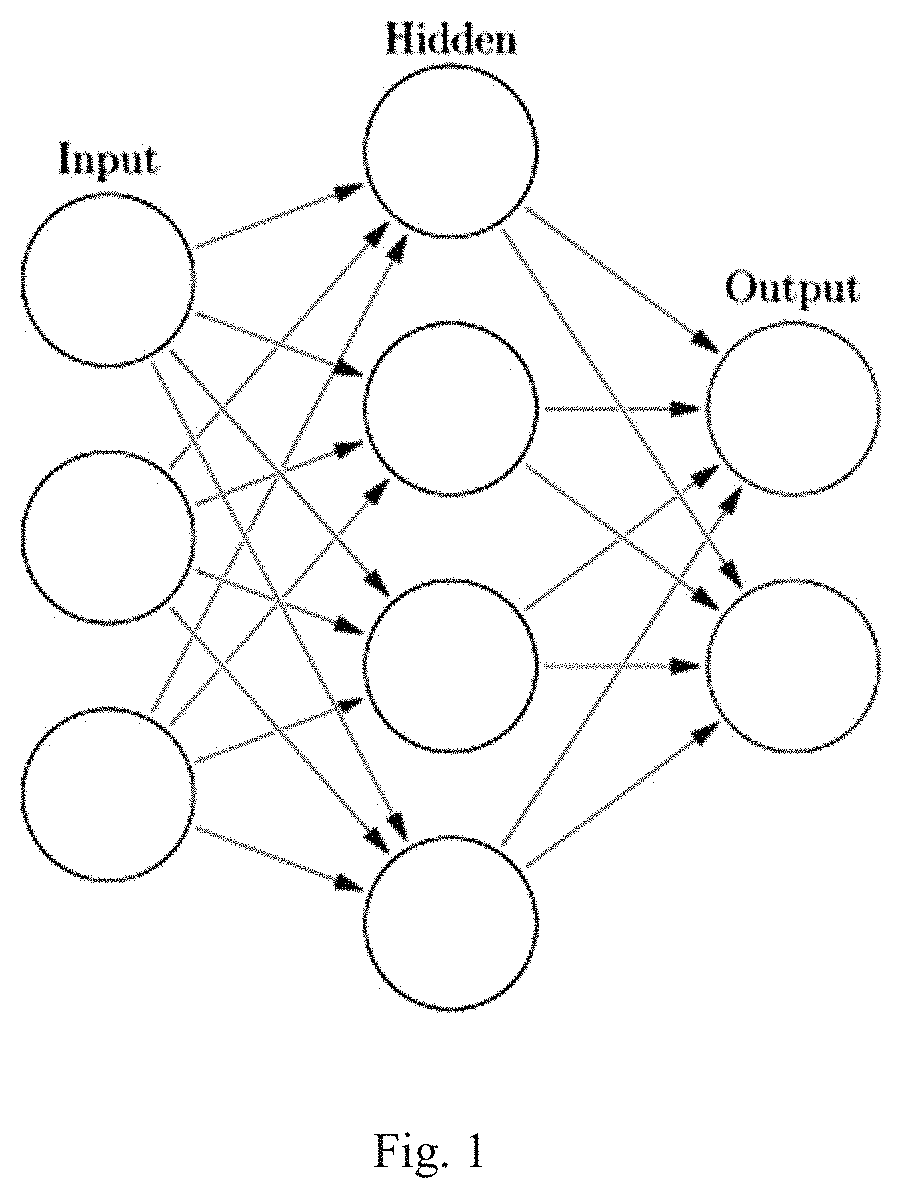

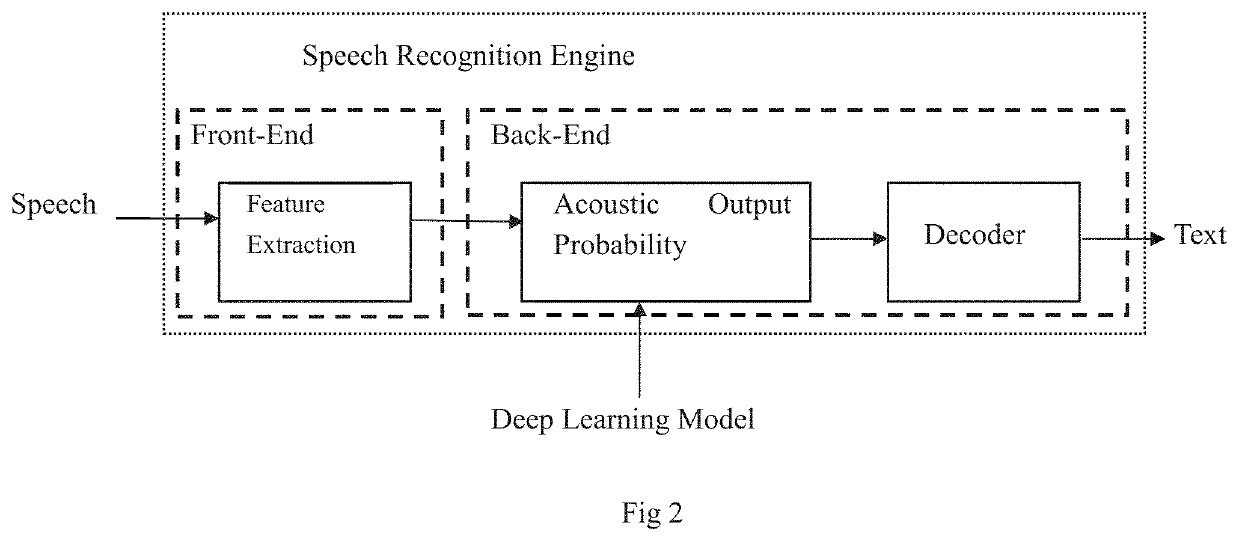

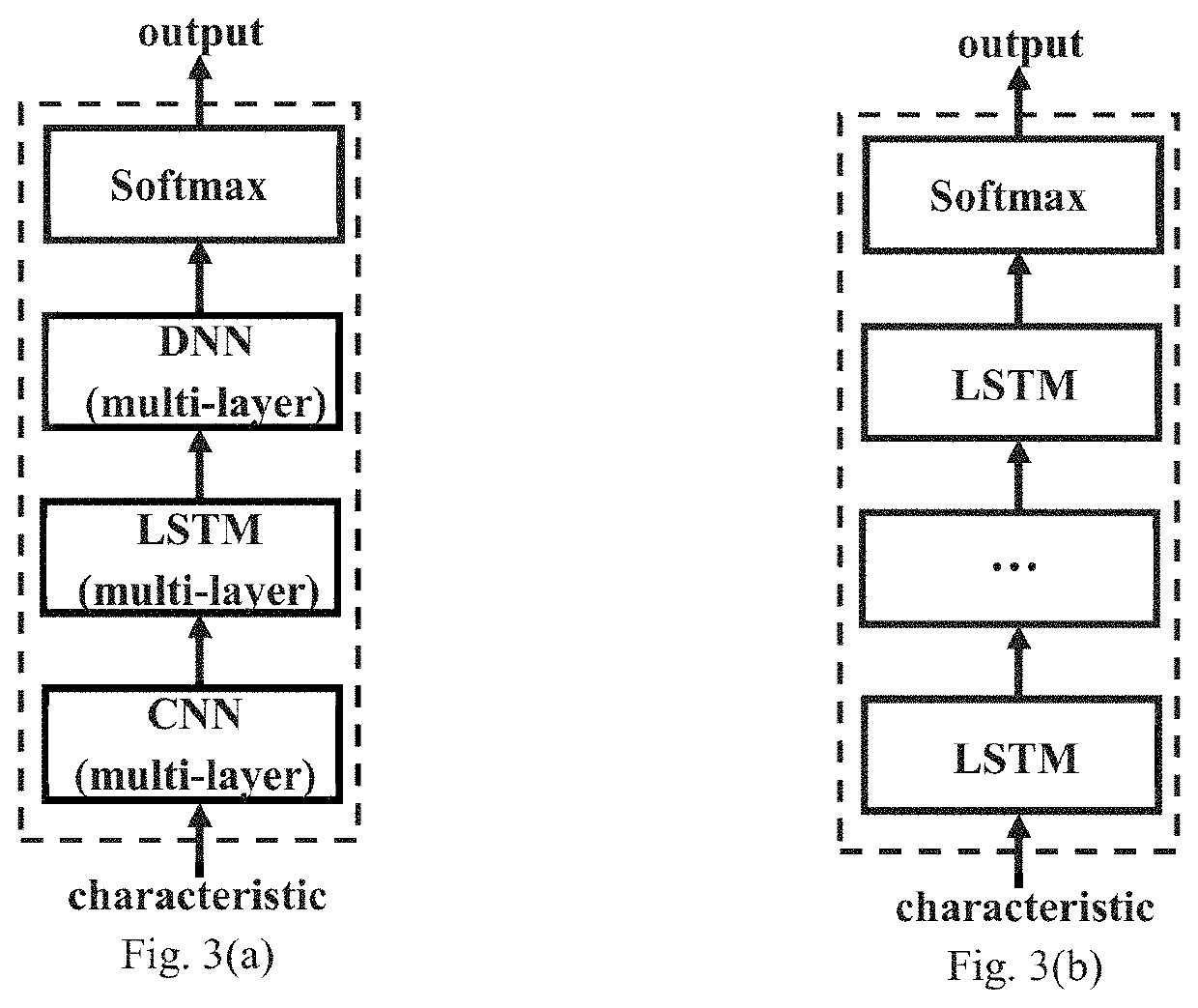

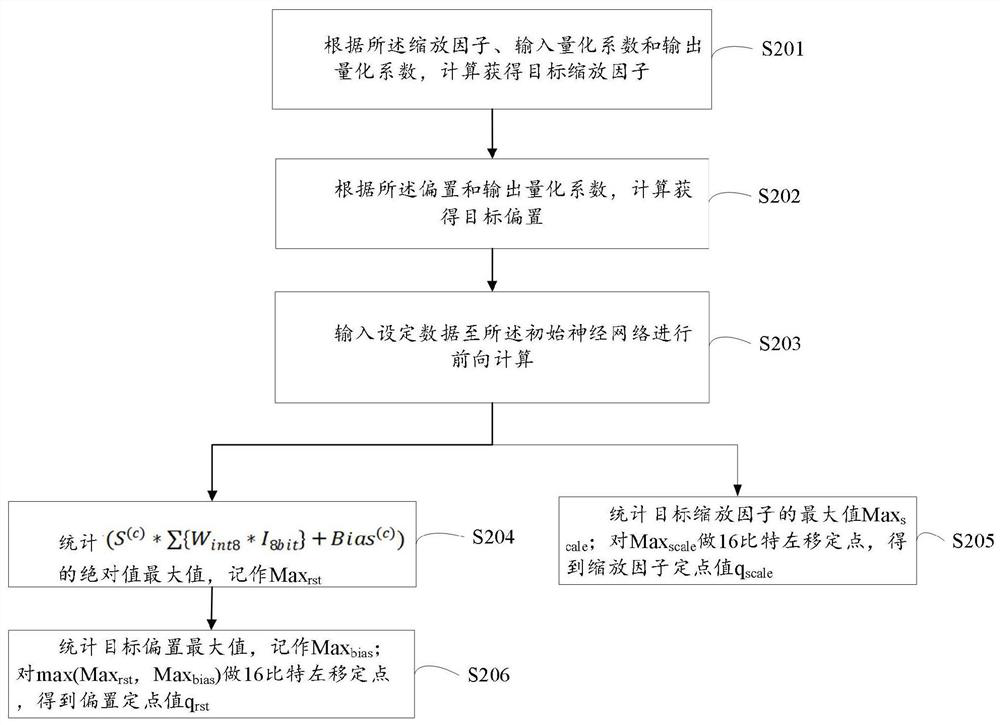

Fixed-point training method for deep neural networks based on dynamic fixed-point conversion scheme

The present disclosure proposes a fixed-point training method and apparatus based on a dynamic fixed-point conversion scheme. More specifically, the present disclosure proposes a fixed-point training method for LSTM neural networks. According to this method, fixed-point numbers are used for the forward calculation during the fine-tuning process of the neural network. Accordingly, within several training cycles, the network accuracy may return to the desired accuracy level achieved under floating-point calculation.

Owner:XILINX TECH BEIJING LTD

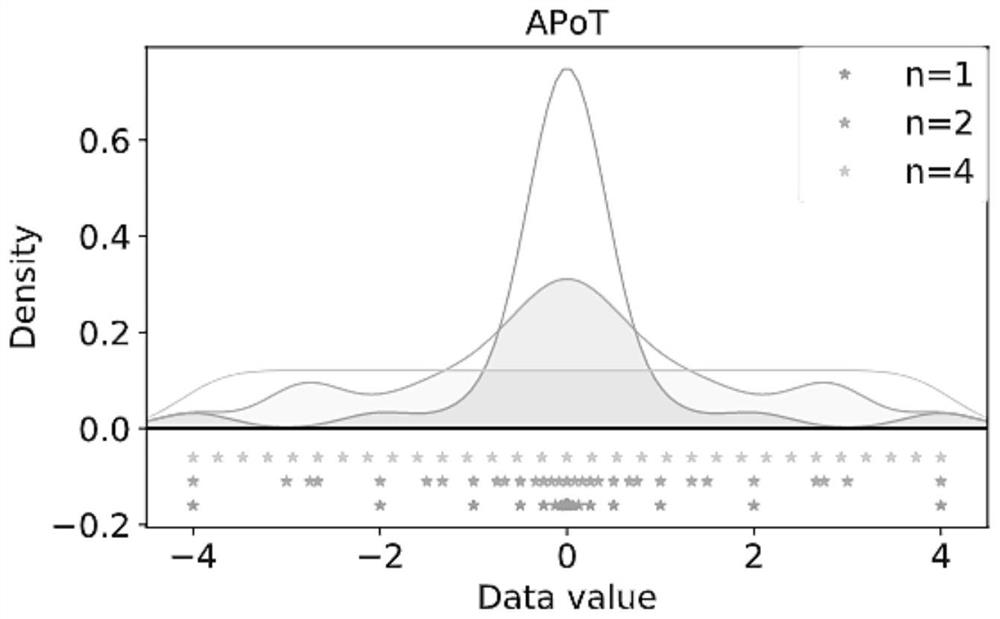

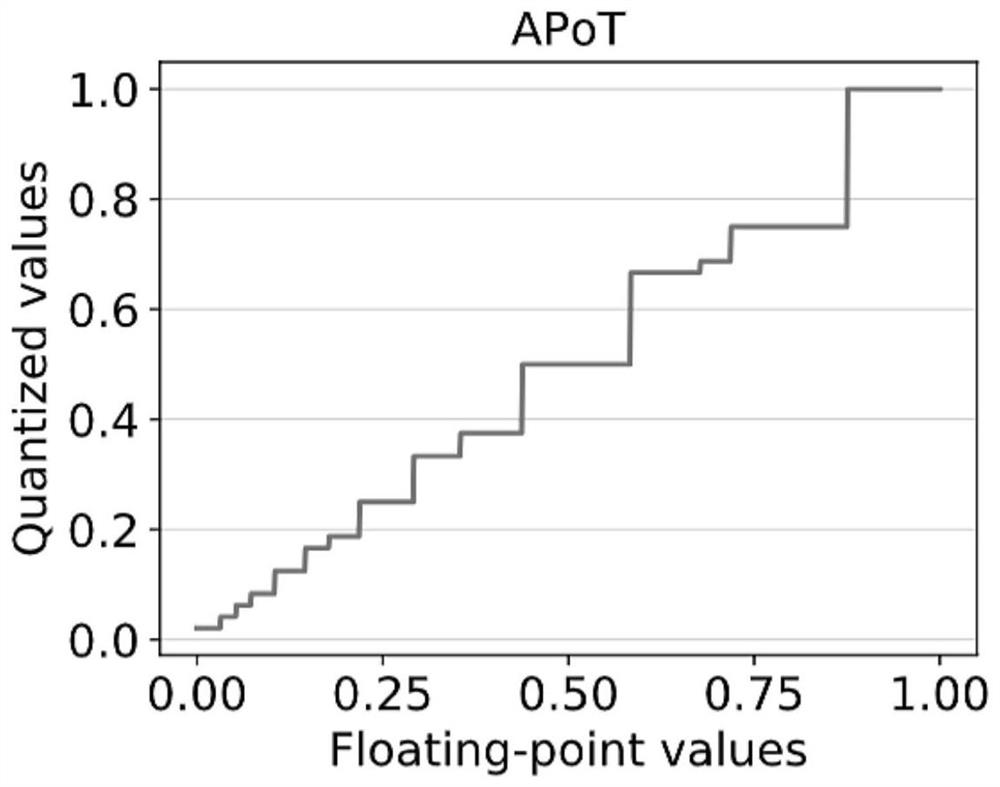

Deep neural network quantification method based on elastic significant bits

Active · CN111768002A · Reduce precision loss · Improve efficiency · Physical realisation · Neural learning methods · Algorithm · Original data

The invention provides a deep neural network quantization method based on elastic significant bits. According to the method, a fixed point number or a floating point number is quantized into a quantized value with elastic significant bits, redundant mantissa parts are discarded, and the distribution difference between the quantized value and original data is quantitatively evaluated in a feasible solution mode. According to the invention, by using the quantized values with elastic significant bits, the distribution of the quantized values can cover a series of bell-shaped distribution from long tails to uniformity through different significant bits to adapt to weight / activation distribution of DNNs, so that low precision loss is ensured; multiplication calculation can be realized by multiple shift addition on hardware, so that the overall efficiency of the quantization model is improved; and the distribution difference function quantitatively estimates the quantization loss brought by different quantization schemes, so that the optimal quantization scheme can be selected under different conditions, low quantization loss is achieved, and the precision of the quantization model is improved.

Owner:NANKAI UNIV

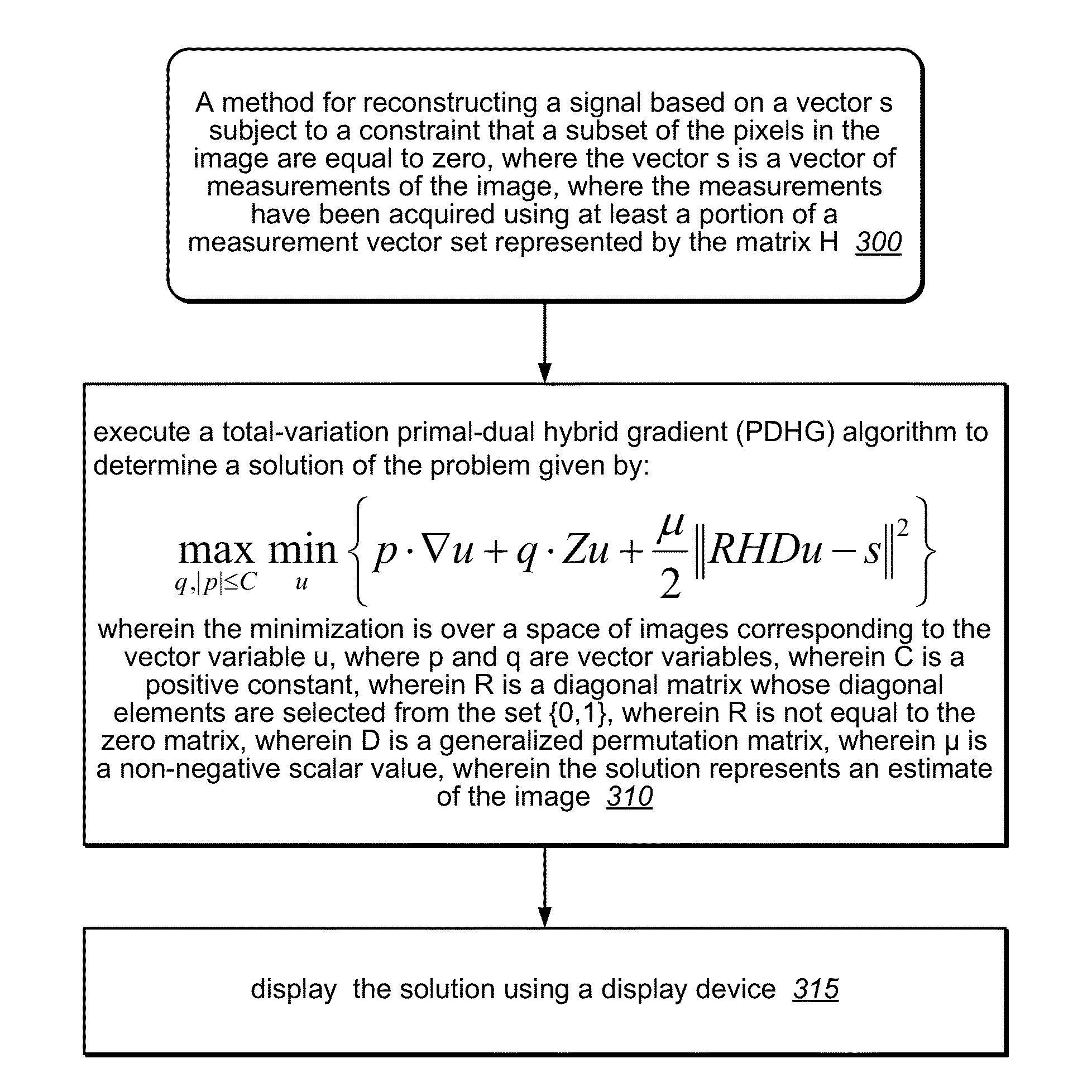

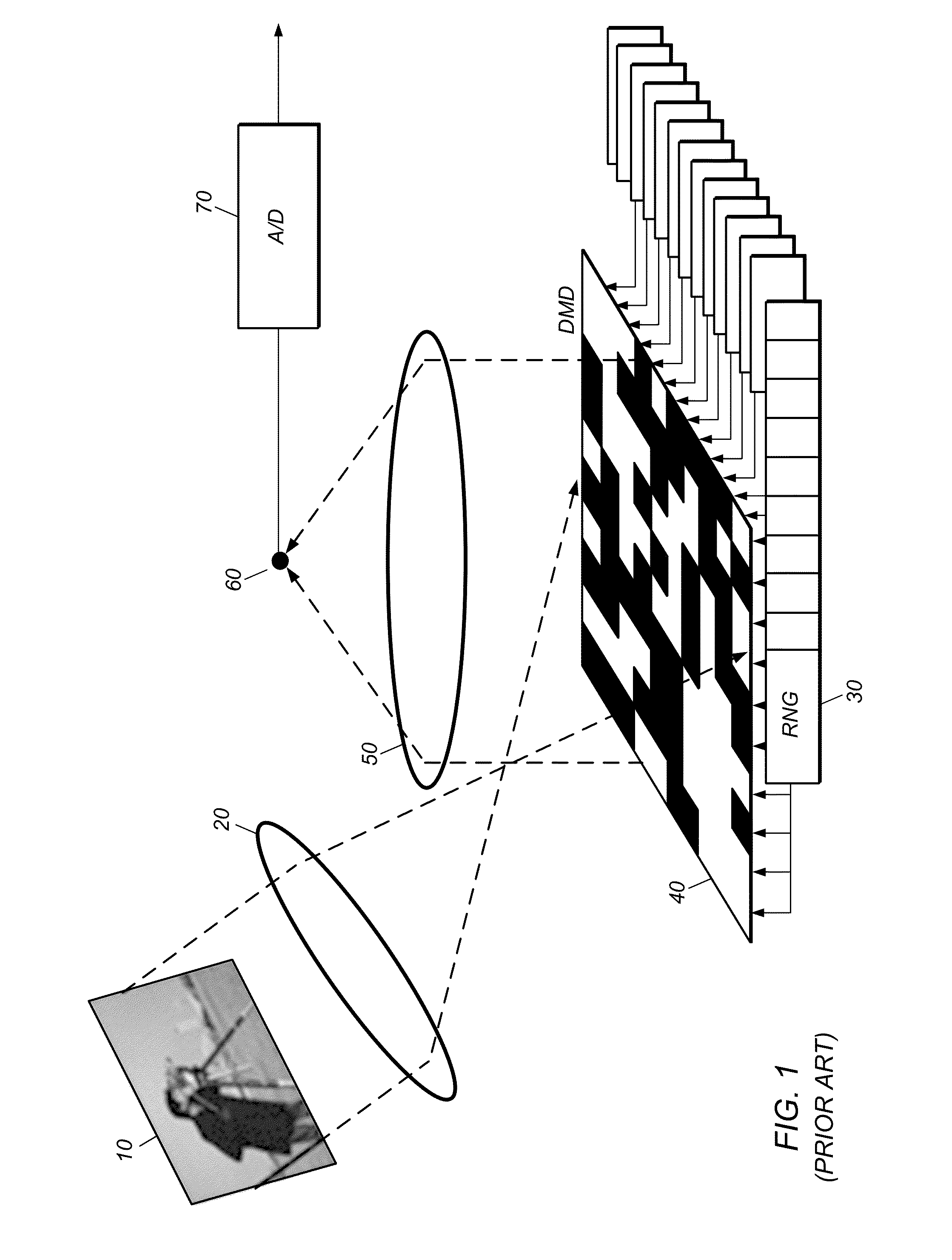

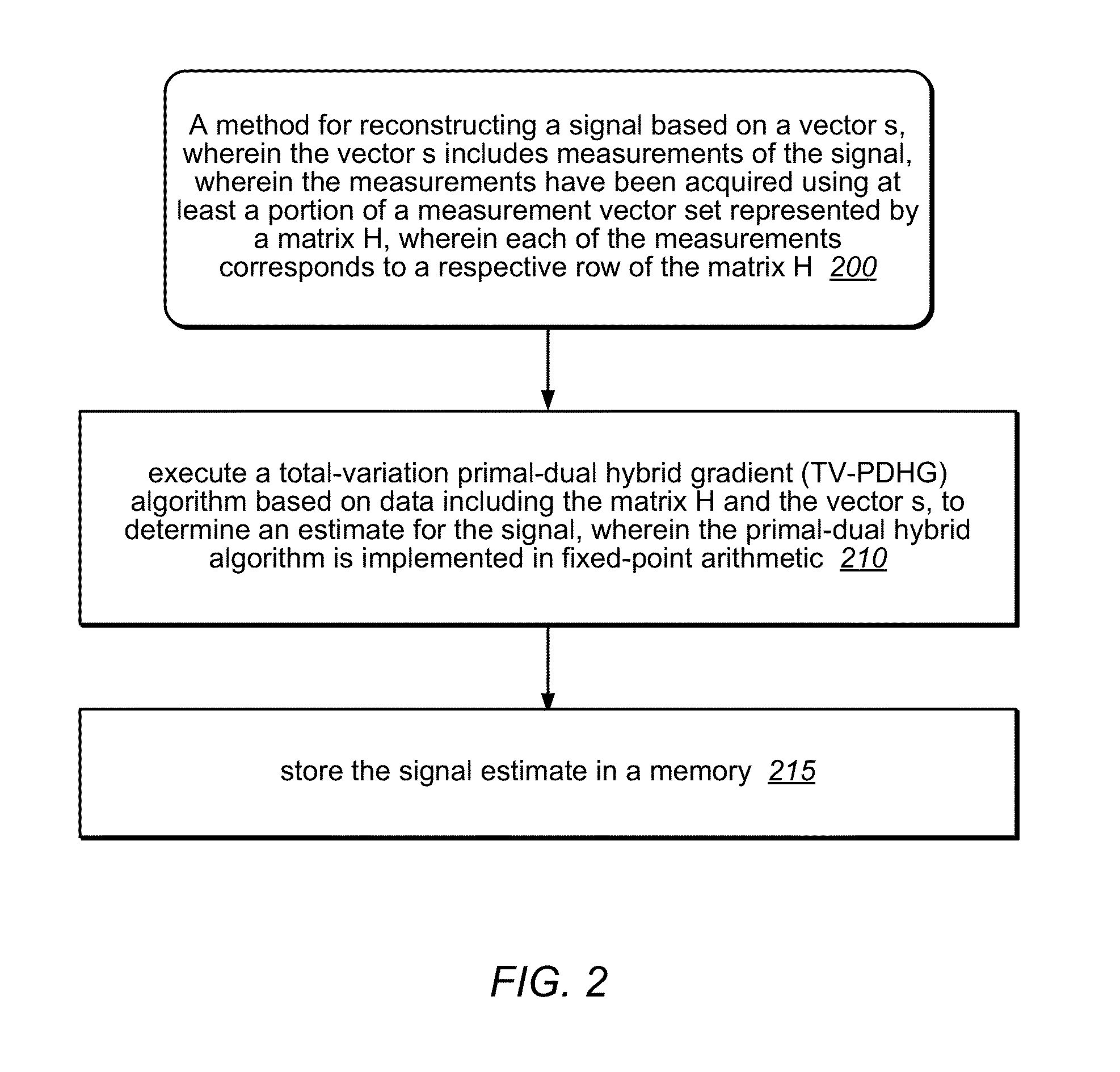

Signal reconstruction using total-variation primal-dual hybrid gradient (TV-PDHG) algorithm

A mechanism for reconstructing a signal (e.g., an image) based on a vector s, which includes measurements of the signal. The measurements have been acquired using at least a portion of a measurement vector set represented by a matrix H. Each of the measurements corresponds to a respective row of the matrix H. (For example, each of the measurements may correspond to an inner product between the signal and a respective row of the matrix product HD, wherein D is a generalized permutation matrix.) A total-variation primal-dual hybrid gradient (TV-PDHG) algorithm is executed based on data including the matrix H and the vector s, to determine an estimate for the signal. The TV-PDHG algorithm is implemented in fixed-point arithmetic.

Owner:INVIEW TECH CORP

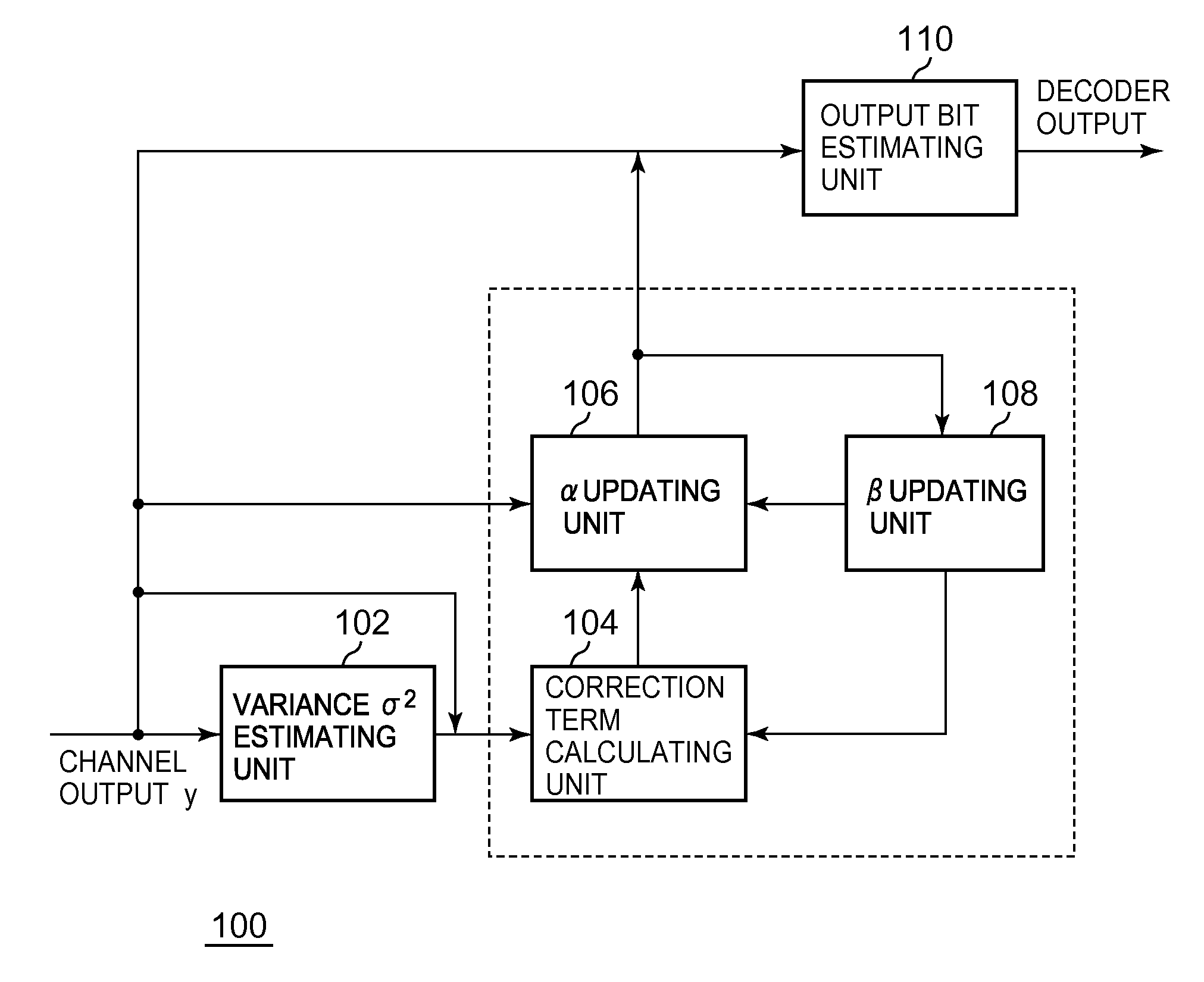

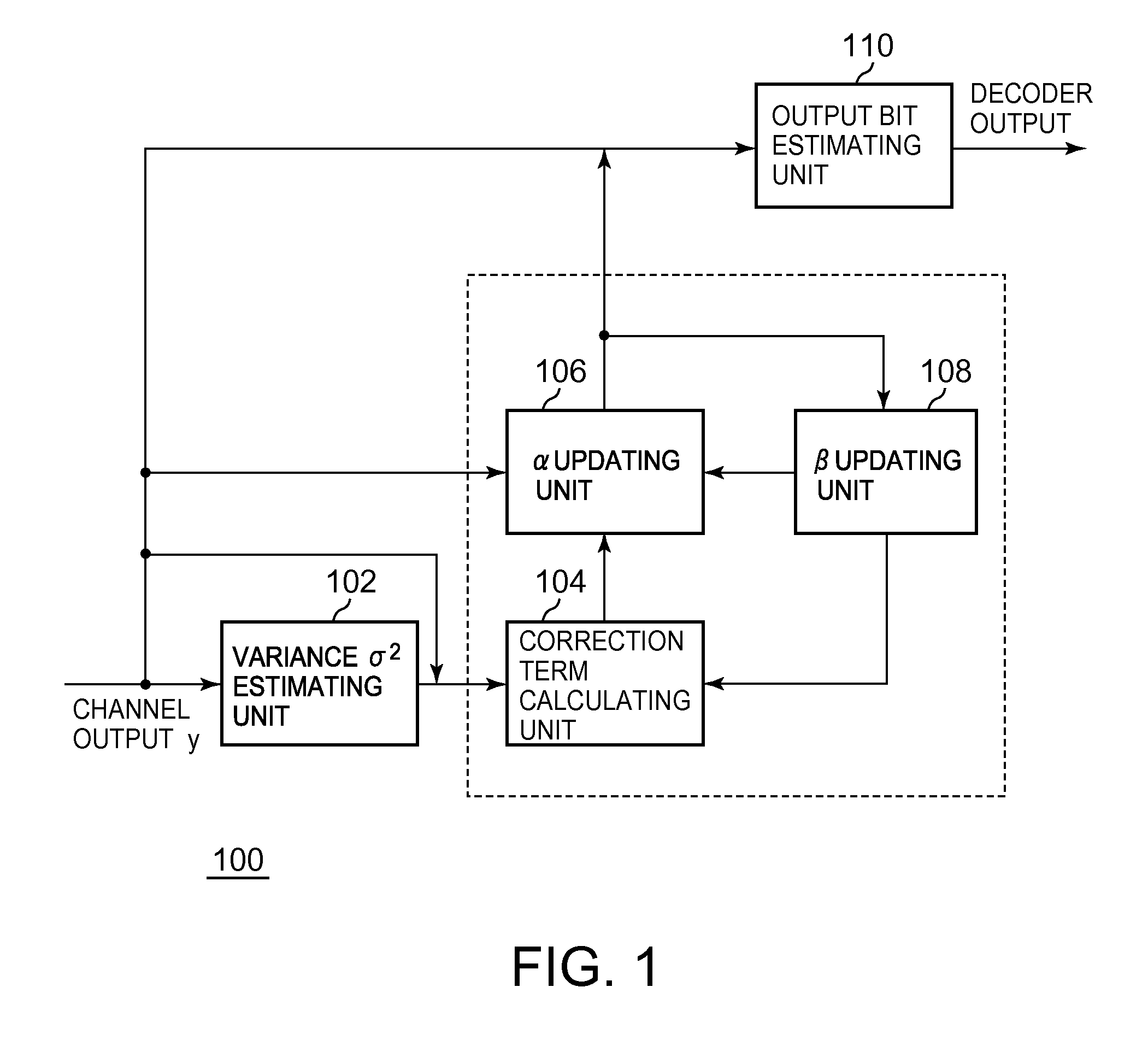

Calculation technique for sum-product decoding method (belief propagation method) based on scaling of input log-likelihood ratio by noise variance

Inactive · US20110145675A1 · Guaranteed uptime · Code conversion · Error correction/detection using block codes · Belief propagation · Log likelihood

One or more embodiments provide a decoding technique (and its approximate decoding technique) enabling a stable operation even if a noise variance is low at the implementation with a fixed-point arithmetic operation having a finite dynamic range. A technique is provided for causing a computer to perform calculation using a sum-product decoding method (belief propagation method) with respect to LDPC or turbo codes. For calculating an update equation of a log extrinsic value ratio from an input, a (separated) correction term is prepared obtained by variable transformation (scale transformation) of the update equation so that the update equation is represented by a sum (combination) of a plurality of terms by transformation of the equation and a communication channel noise variance is a term separated from other terms constituting a sum of a plurality of terms as a term to be a factor (scale factor) by which a log is multiplied. With an estimated communication channel noise variance as an input, the (separated) correction term is approximated by a simple function so as to cause the computer to make calculation (iteration) on the basis of a fixed point on bit strings of finite length (m,f: m is the total number of bits and f is the number of bits allocated to the fractional part).
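The (m, f) notation at the end of this abstract, with m total bits of which f are fractional, can be illustrated with a small quantizer. The sketch assumes a signed two's-complement layout with saturation on overflow; the abstract states neither, and the function names are made up for the example.

```python
# Sketch: quantize a real value to an (m, f) fixed-point bit string.
# Assumptions (not from the source): signed two's complement, saturation.
def quantize(x: float, m: int, f: int) -> int:
    """Return the integer code of x in an m-bit format with f fractional bits."""
    lo = -(1 << (m - 1))      # most negative representable code
    hi = (1 << (m - 1)) - 1   # most positive representable code
    q = round(x * (1 << f))   # Python rounds exact ties to the even integer
    return max(lo, min(hi, q))  # clamp into the finite dynamic range


def dequantize(q: int, f: int) -> float:
    """Recover the real value represented by an integer code."""
    return q / (1 << f)
```

With (m, f) = (8, 4) the representable range is -8.0 to 7.9375 in steps of 0.0625, which shows how narrow the dynamic range can be, and why inputs scaled by a low noise variance can overflow a naive implementation.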

Owner:IBM CORP

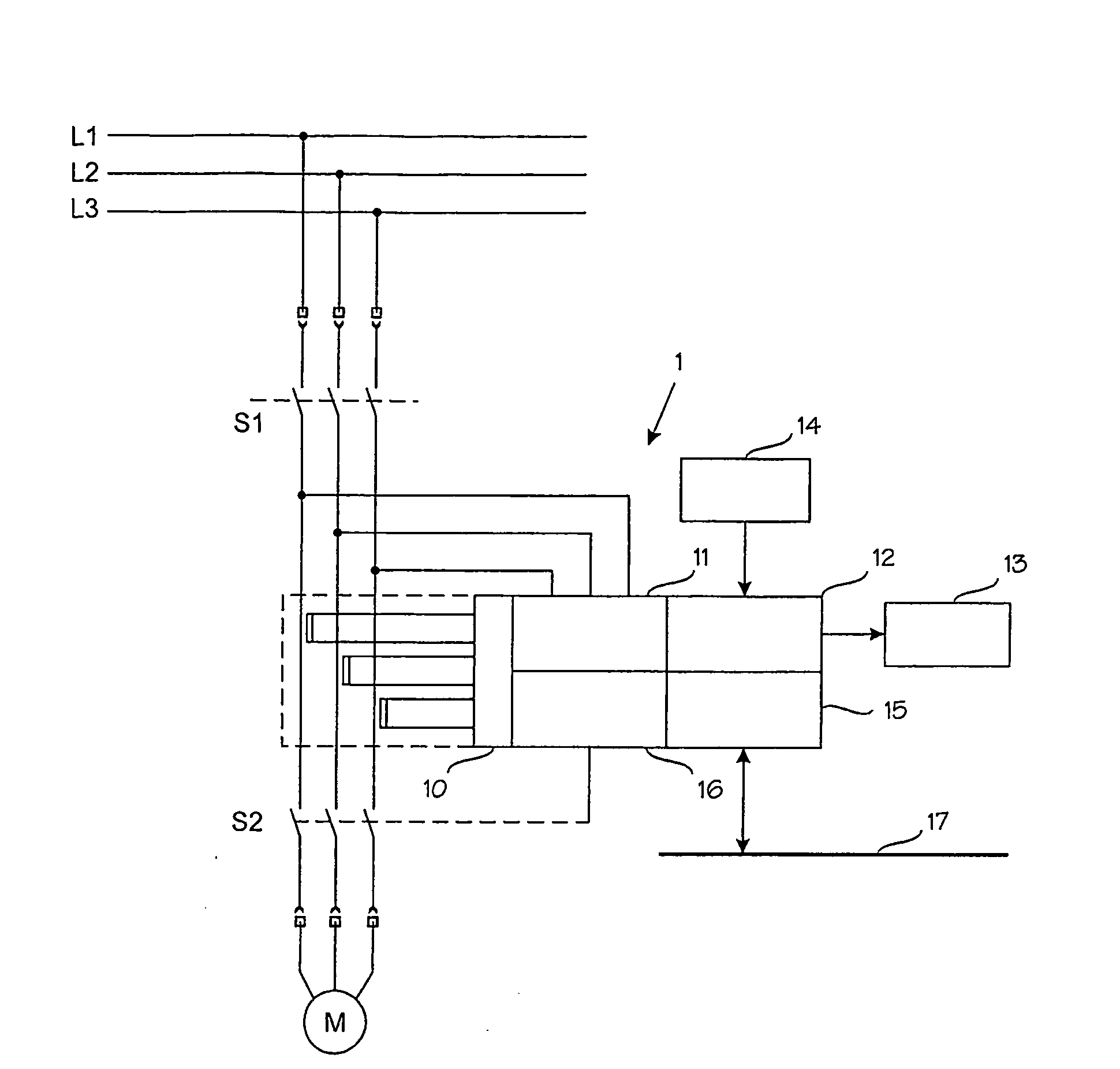

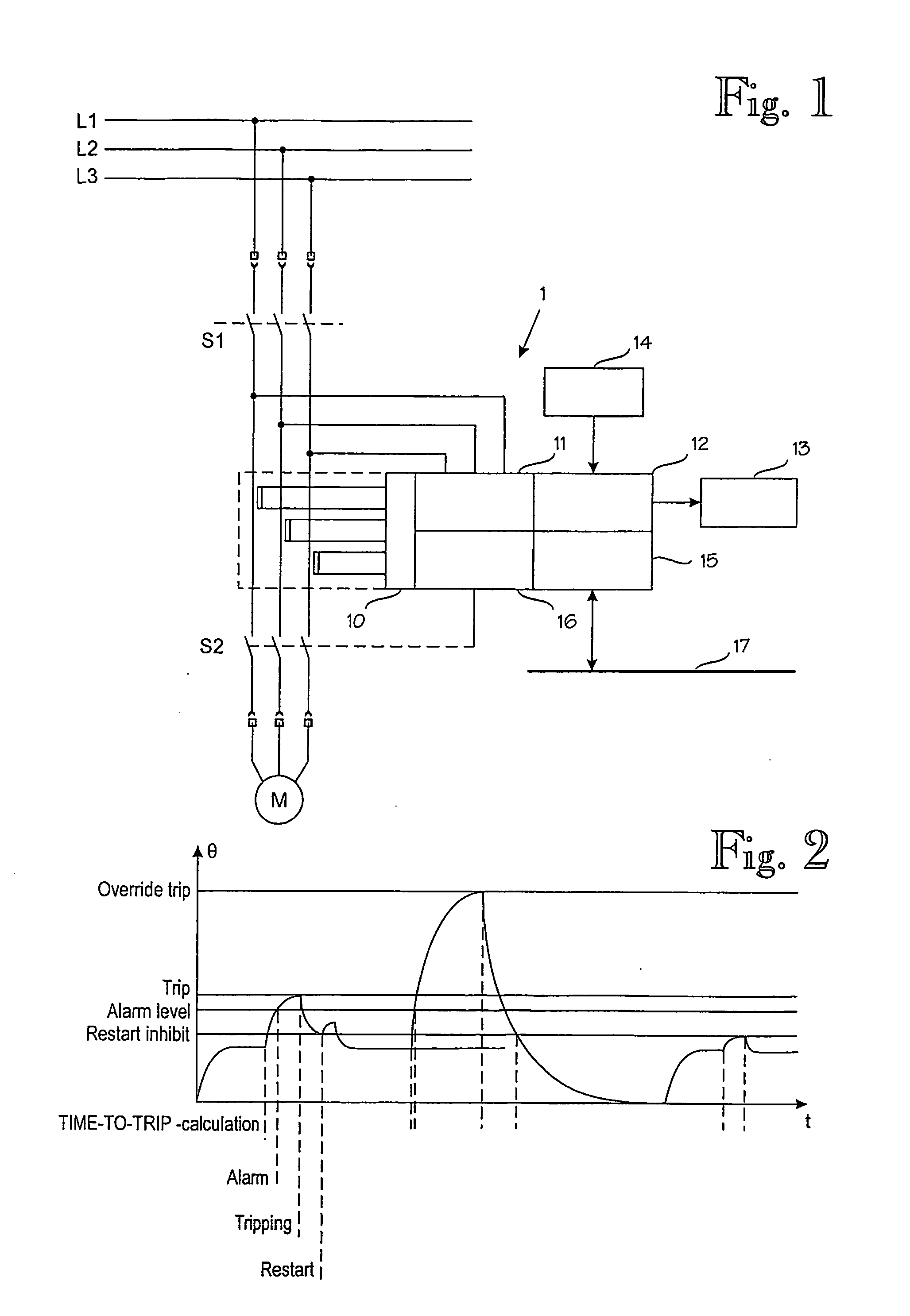

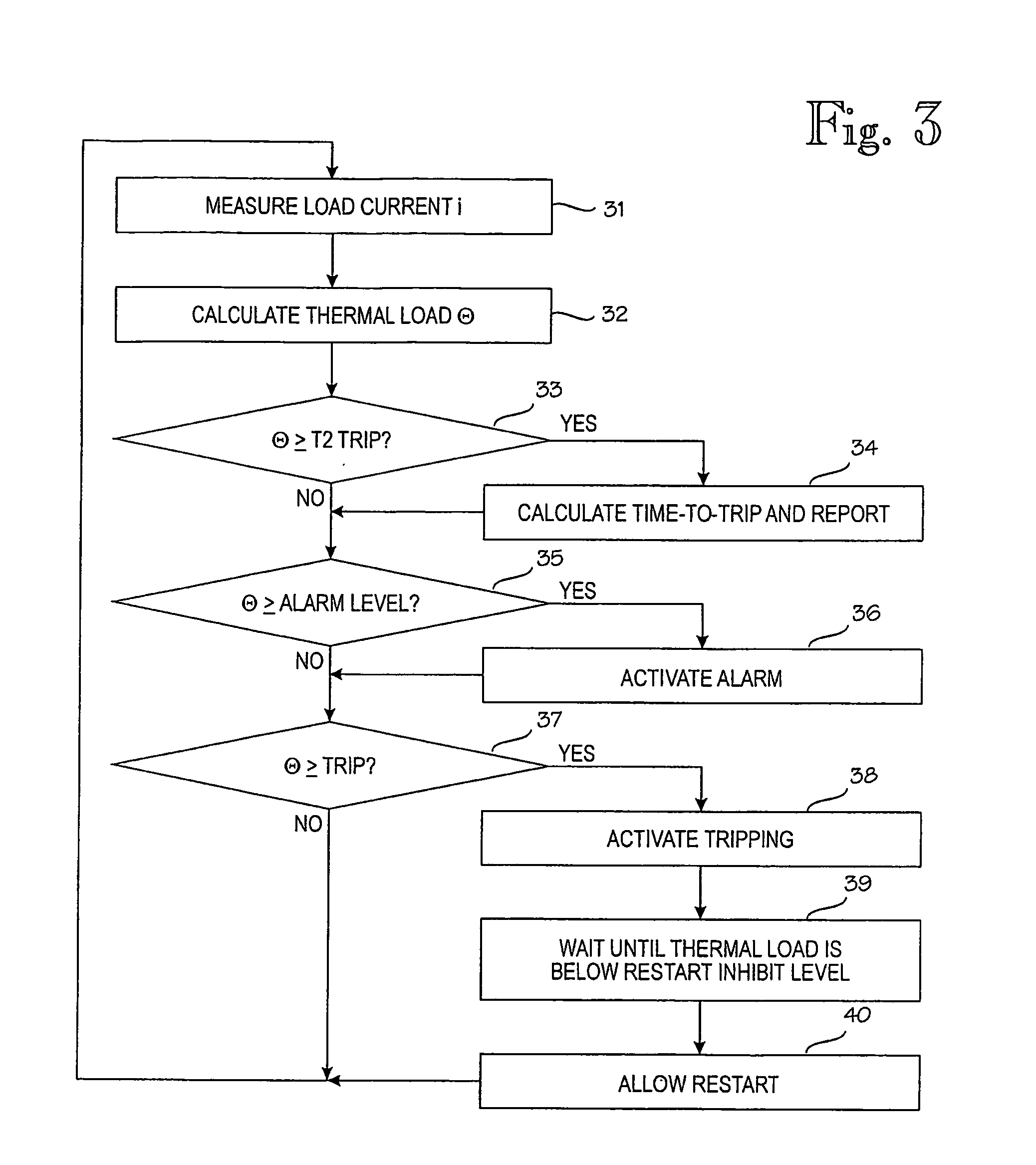

Thermal Overload Protection

Inactive · US20080253041A1 · Reduce power consumption · Low production cost · Single-phase induction motor starters · Motor/generator/converter stoppers · Engineering · Fixed-point arithmetic

A thermal overload protection for an electrical device, particularly an electric motor (M), measures a load current supplied to the electrical device (M), and calculates the thermal load on the electrical device on the basis of the measured load current, and shuts off (S2) a current supply (L1, L2, L3) when the thermal load reaches a given threshold level. The protection comprises a processor system employing X-bit, preferably X=32, fixed-point arithmetic, wherein the thermal load is calculated by a mathematic equation programmed into the microprocessor system structured such that a result or a provisional result never exceeds the X-bit value.

Owner:ABB OY

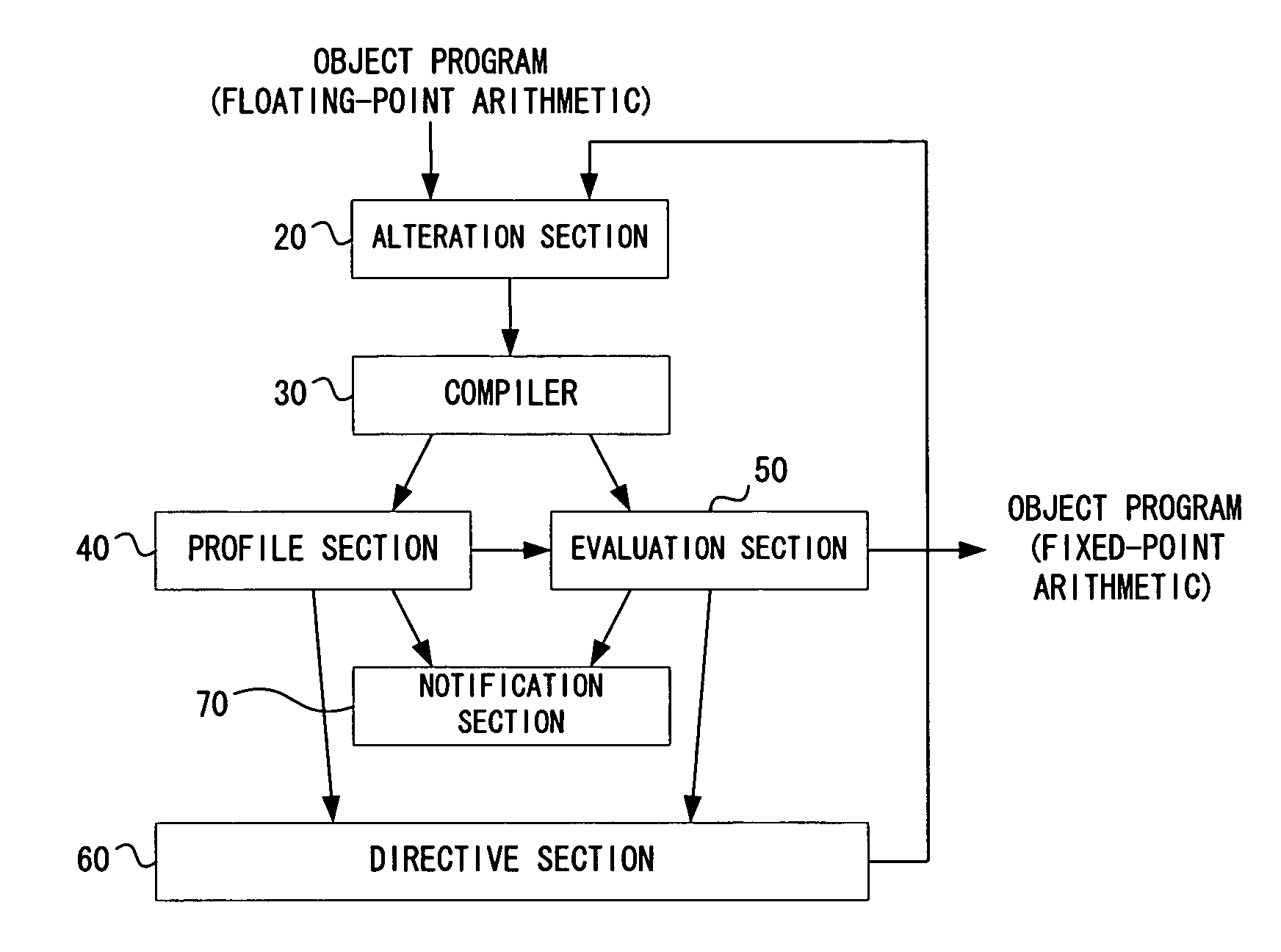

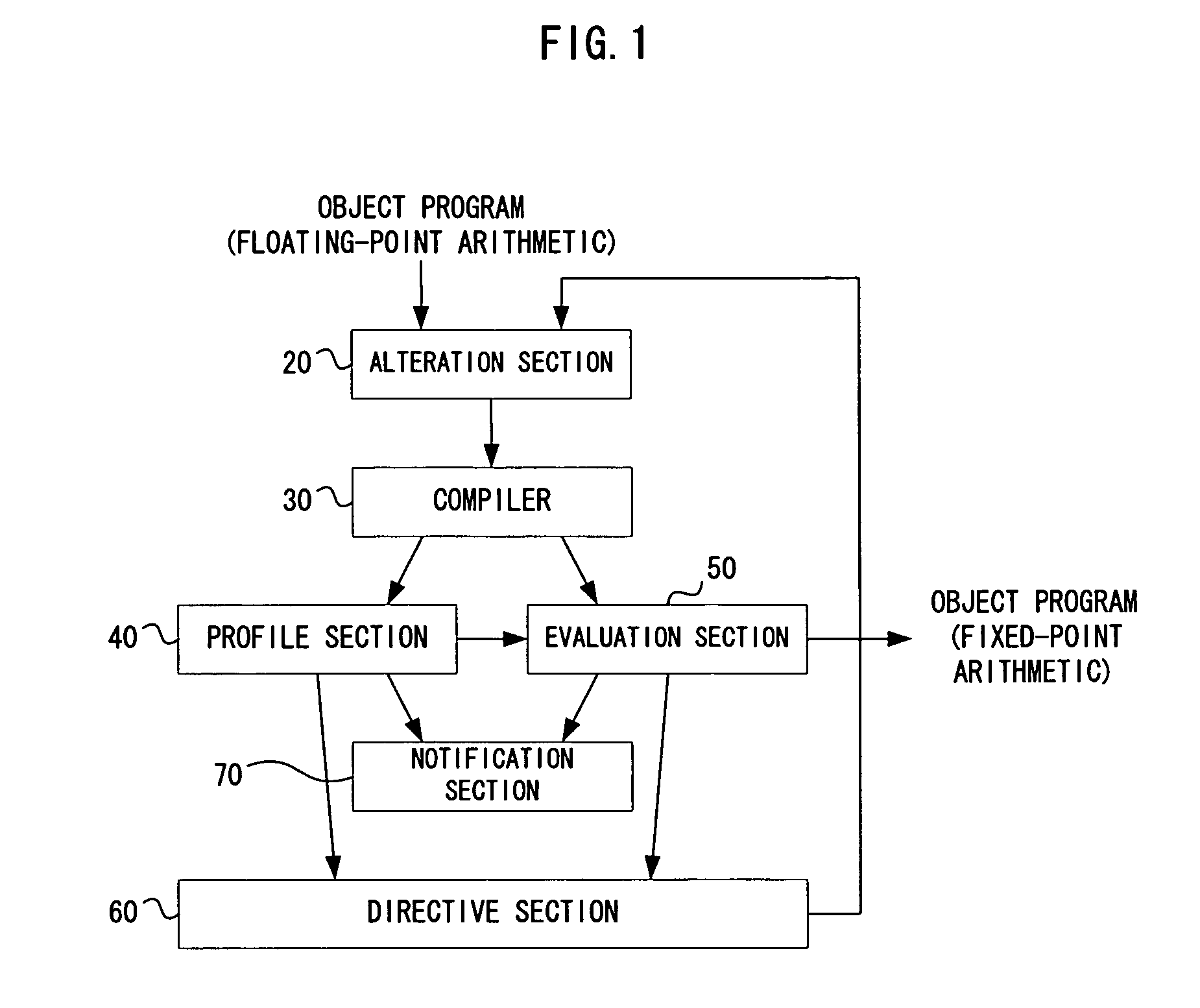

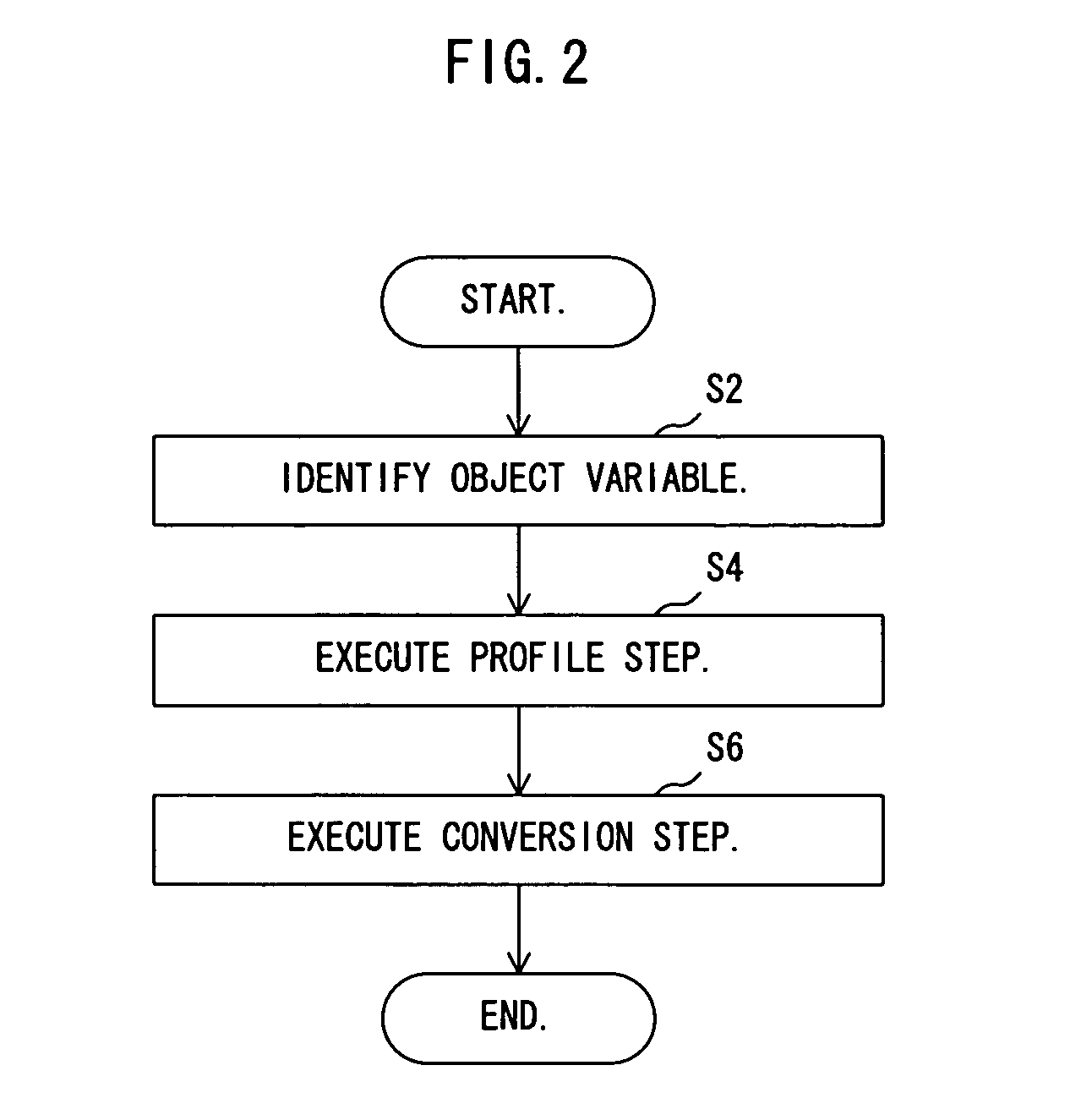

Arithmetic program conversion apparatus, arithmetic program conversion program and arithmetic program conversion method

Inactive · US20080040409A1 · Level accuracy · Level of accuracy can be always maintained · Software engineering · Digital computer details · Parallel computing · Fixed-point arithmetic

An arithmetic program conversion apparatus, an arithmetic program conversion program and an arithmetic program conversion method that can convert the floating-point arithmetic of an arithmetic program into a fixed-point arithmetic without degrading the accuracy. The apparatus comprises a profile section that uses as object variables the floating-point type variables of an arithmetic program for performing floating-point arithmetic operations, alters the arithmetic program so as to output the changes in the values of the object variables as history at the time of executing the arithmetic program in order to provide a first program, executes the first program and detects the range of value of the object variables according to the history obtained as a result of the execution and a conversion section that alters the arithmetic program according to the ranges of value of the object variables as detected by the profile section so as to convert the object variables into fixed-point type variables in order to provide a second program, executes the second program and determines if the accuracy of the outcome of the execution of the second program meets the predefined and required accuracy level or not.

Owner:FUJITSU LTD

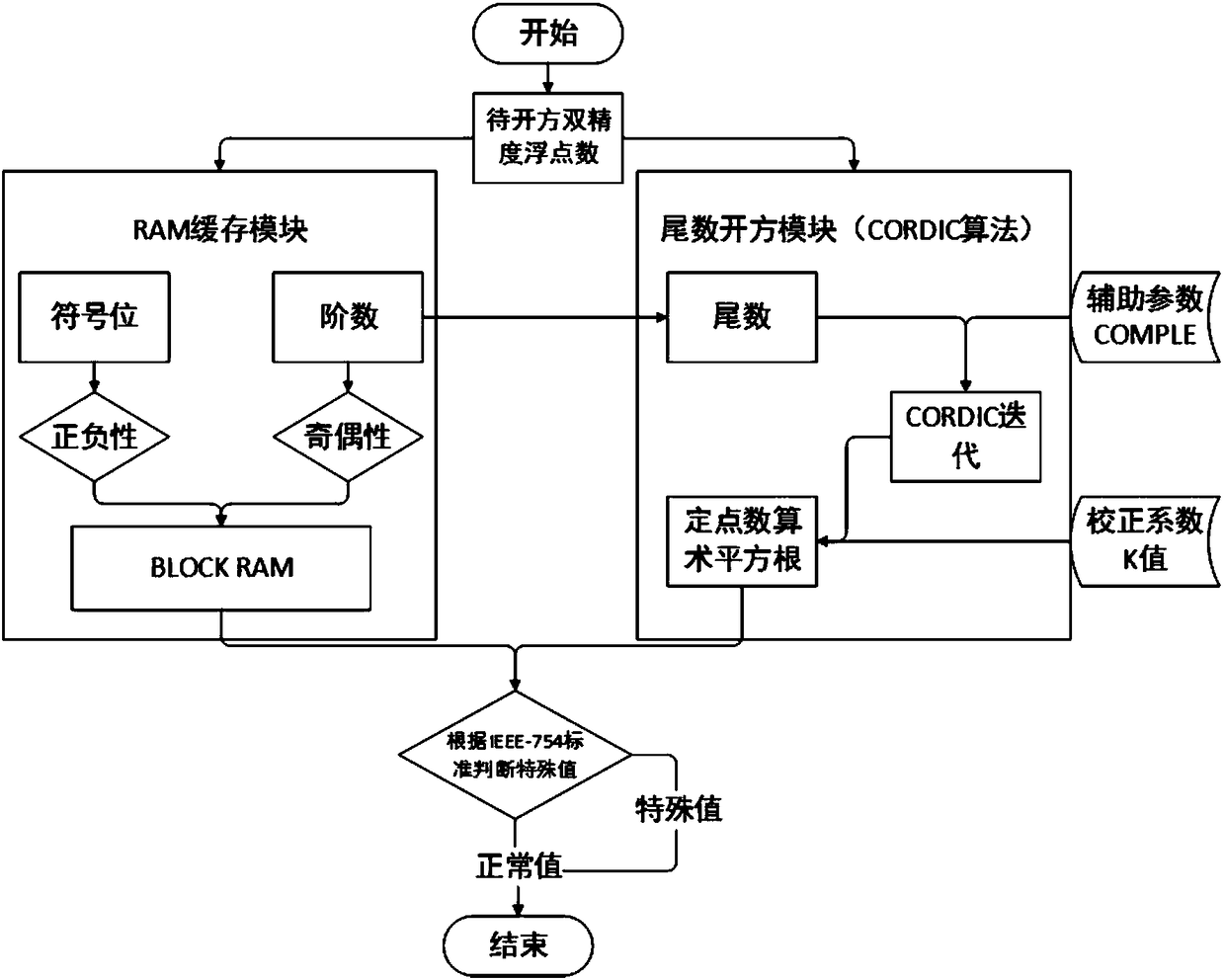

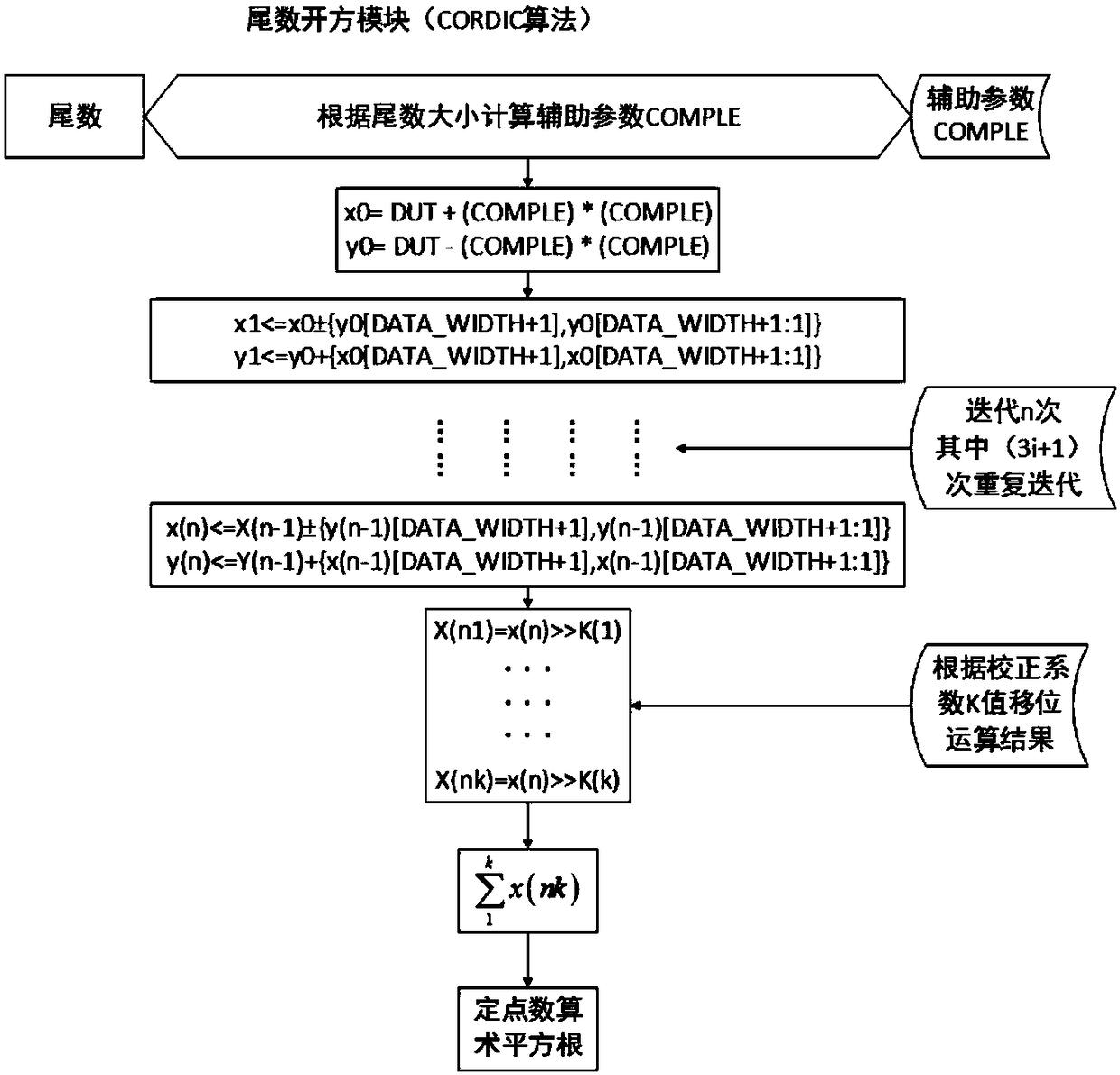

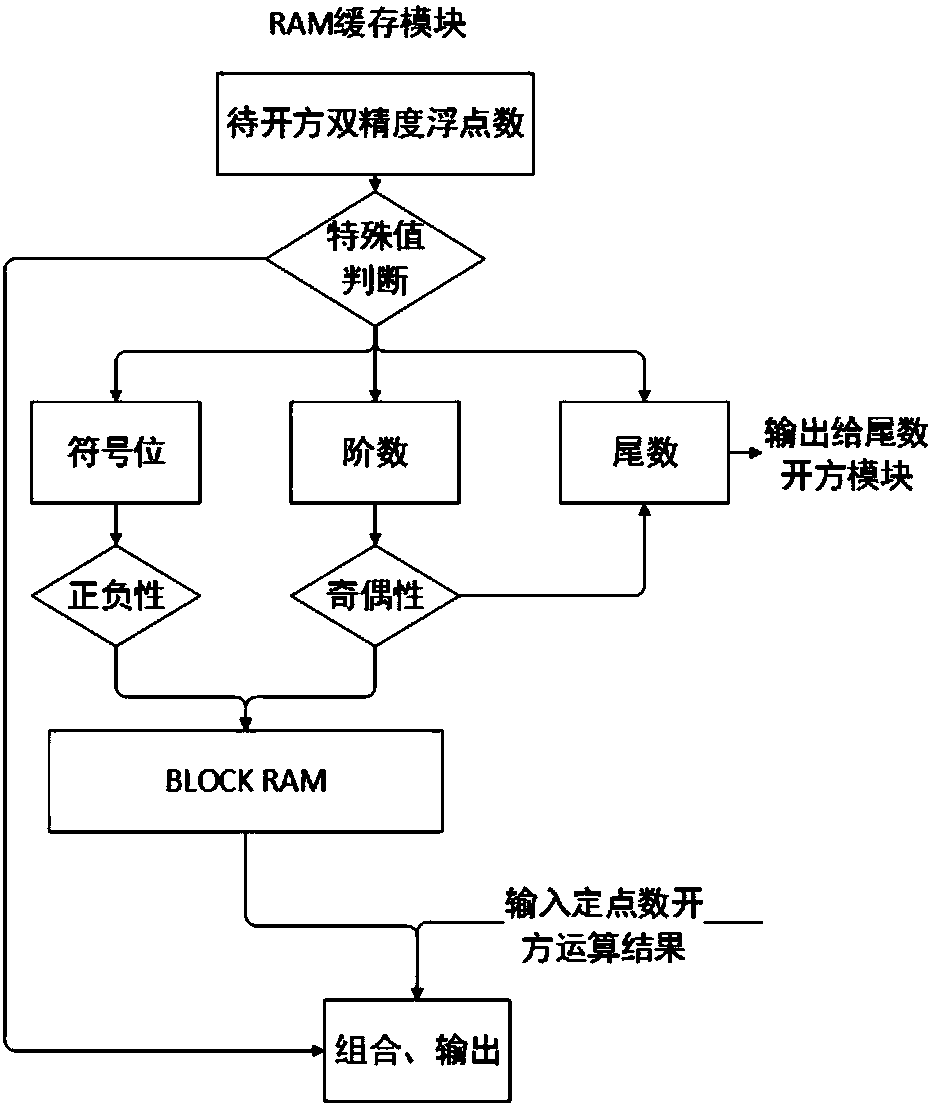

Double-precision floating point extraction operation method and system

Active · CN108196822A · Reduce mistakes · Improve computing efficiency · Digital data processing details · Sign bit · Computer science

The invention relates to a double-precision floating point extraction operation method and system. The method comprises the steps that 1, a 64-bit double-precision floating point number is disintegrated into sign bits, orders and mantissa, a positive / negative value of a to-be-extracted number is judged based on the sign bits, exponent parity of the to-be-extracted number is judged based on the orders, the mantissa is shifted according to the exponent parity, and the sign bits and exponents obtained after operation are stored into an RAM; 2, the mantissa part of the to-be-extracted number is input into an extraction module, extraction operation is performed on a magnified 106-bit fixed-point number through a CORDIC algorithm, a correction coefficient K value is processed through shift operation in an FPGA, an auxiliary parameter COMPLE is calculated according to the value of the mantissa, and meanwhile repeated iteration is performed during partial iterative operation (i,3i+1 times) in operation of the 106-bit fixed-point number; and 3, arithmetic square roots of the mantissa are output, and after special values are isolated, the rest is combined with the sign bits and the exponents in the RAM to complete double-precision floating point number extraction operation. Through the double-precision floating point extraction operation method and system, operation efficiency can be substantially improved.

Owner:BEIJING SATELLITE INFORMATION ENG RES INST

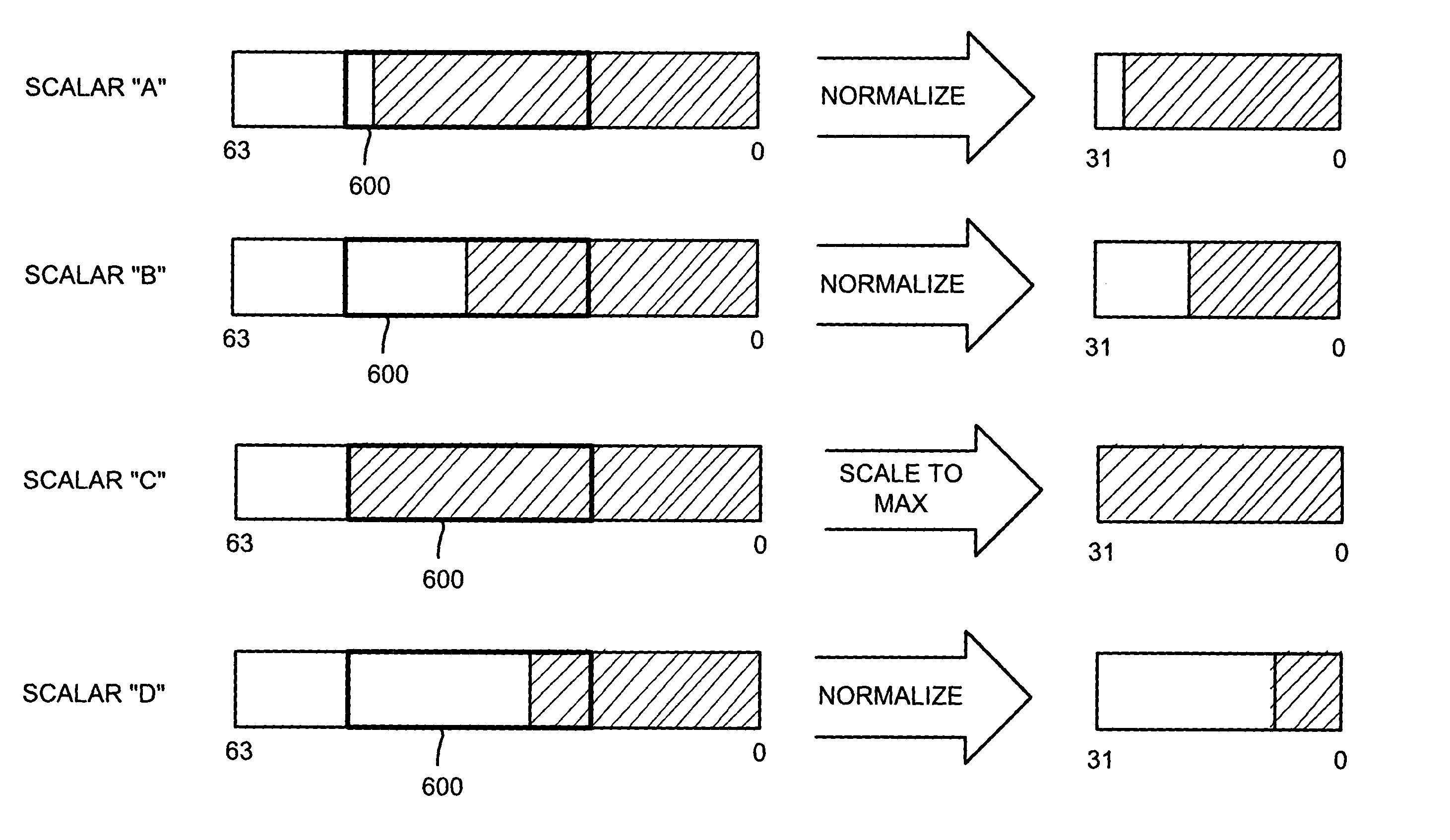

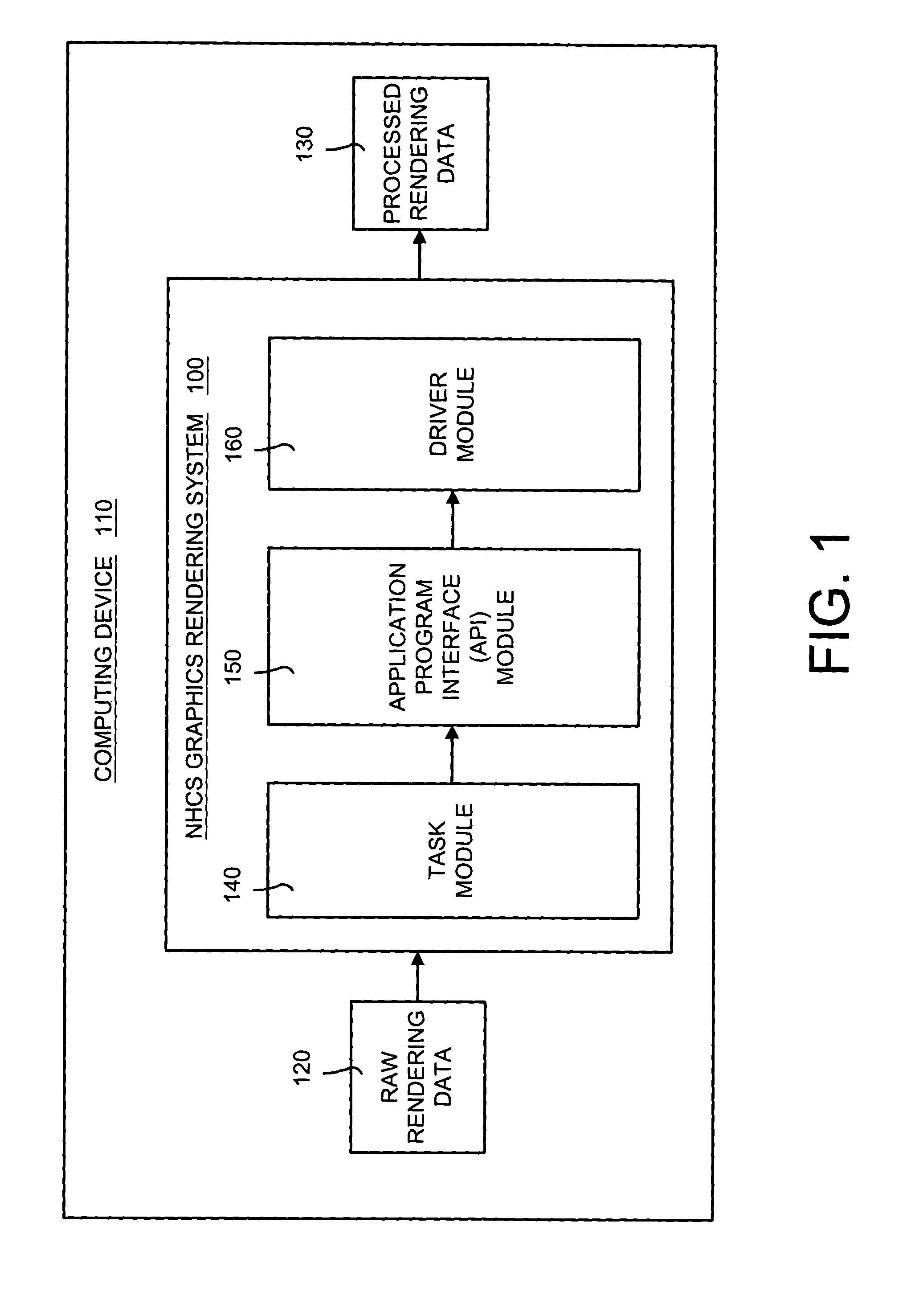

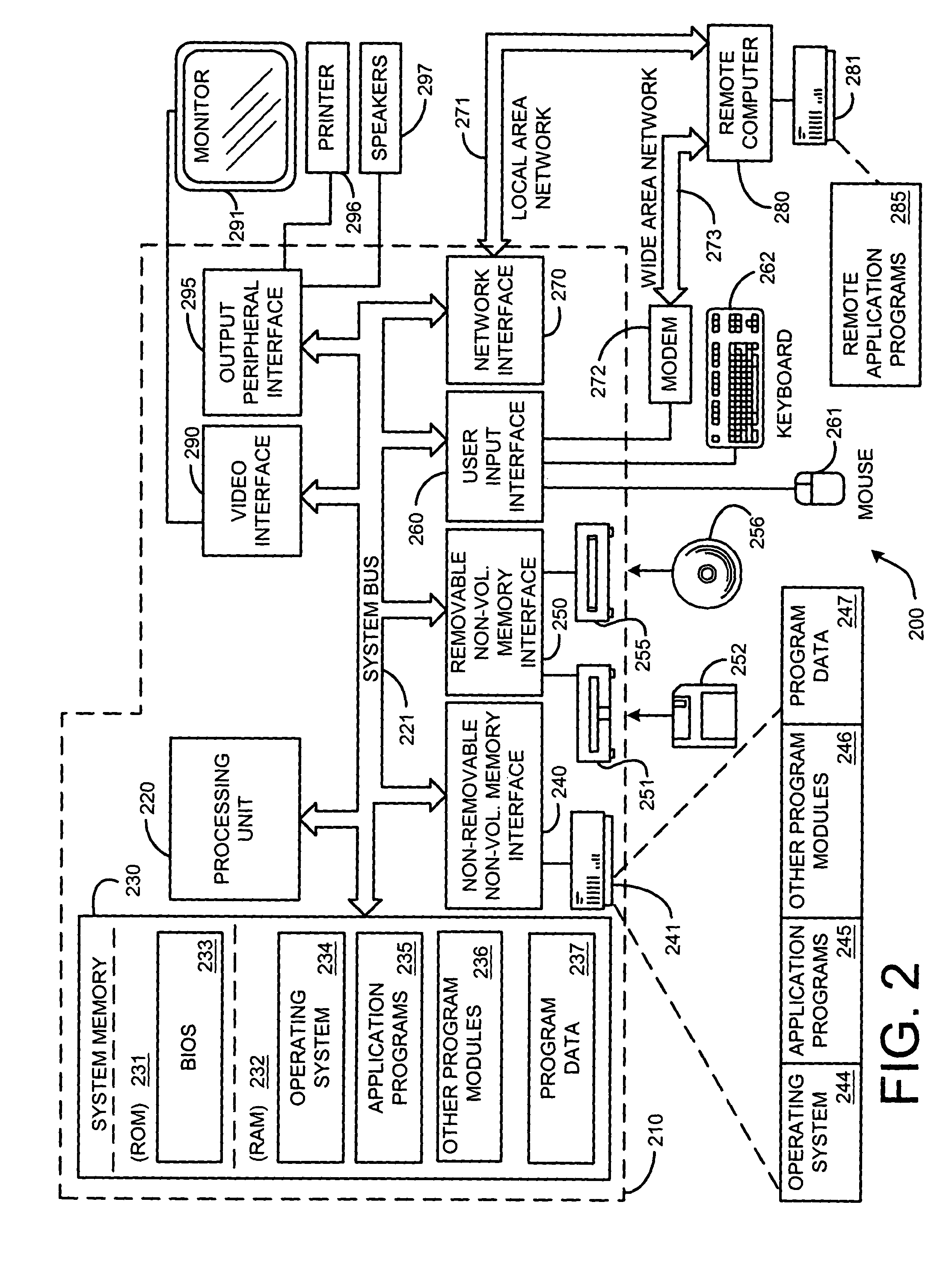

Optimized fixed-point mathematical library and graphics functions for a software-implemented graphics rendering system and method using a normalized homogenous coordinate system

Inactive · US7139005B2 · Less memory · Fast and efficient · Cathode-ray tube indicators · Image generation · Computational science · Graphics

A software-implemented graphics rendering system and method designed and optimized for embedded devices (such as mobile computing devices) using fixed-point operations including a variable-length fixed point representation for numbers and a normalized homogenous coordinates system for vector operations. The graphics rendering system and method includes a fixed-point mathematics library and graphics functions that includes optimized basic functions such as addition, subtraction, multiplication, division, all vertex operations, matrix operations, transform functions and lighting functions, and graphics functions. The mathematical library and graphics functions are modified and optimized by using a variable-length fixed-point representation and a normalized homogenous coordinate system (NHCS) for vector operations.

Owner:MICROSOFT TECH LICENSING LLC

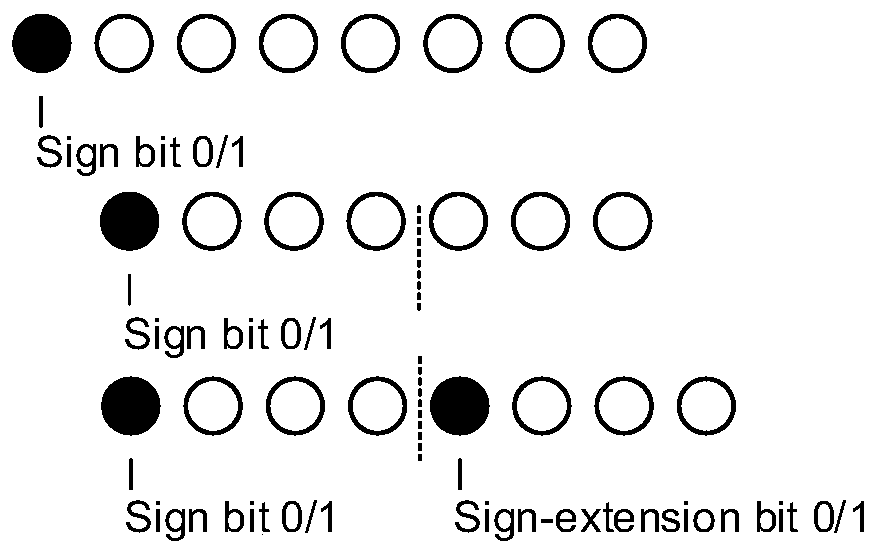

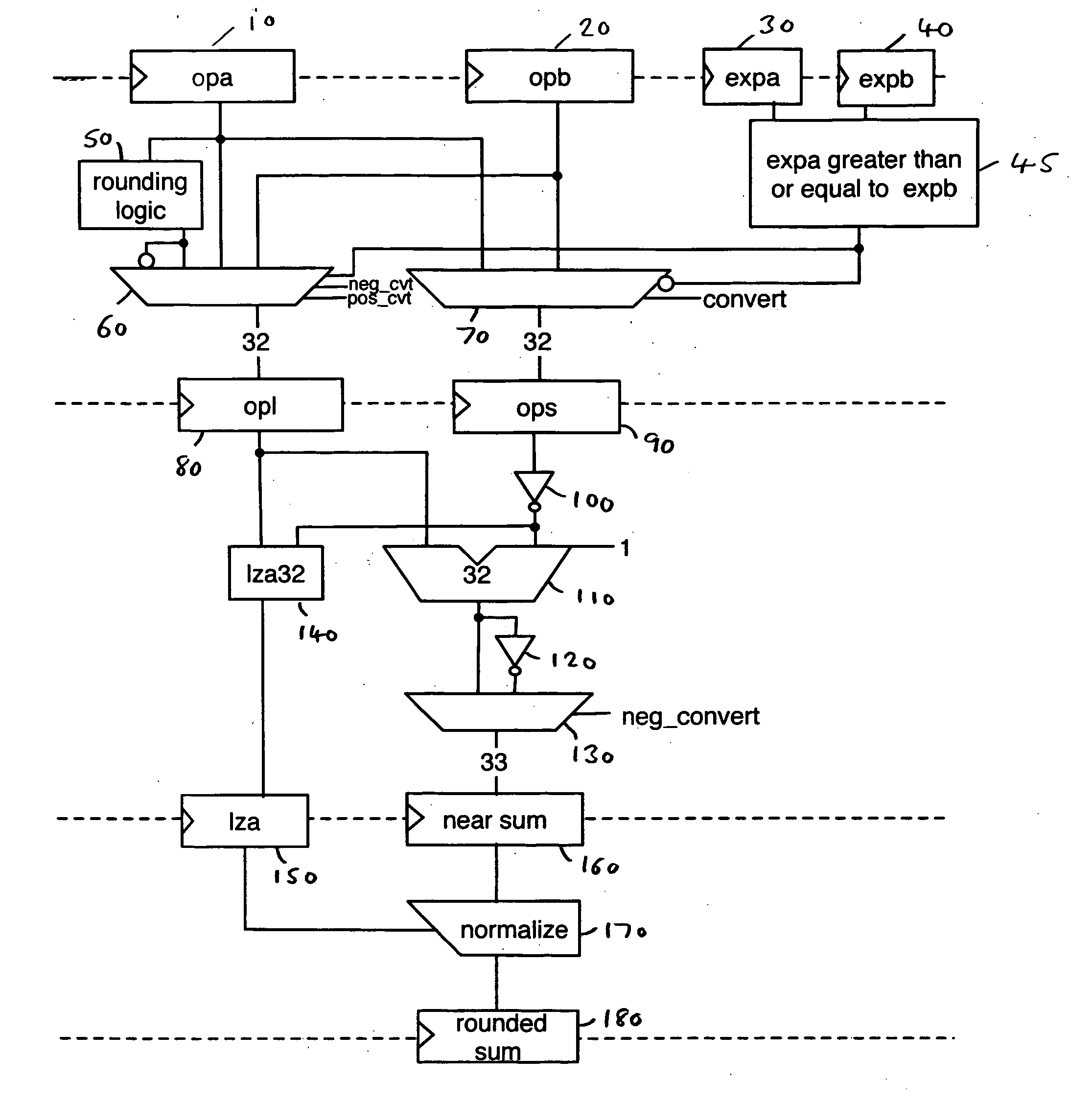

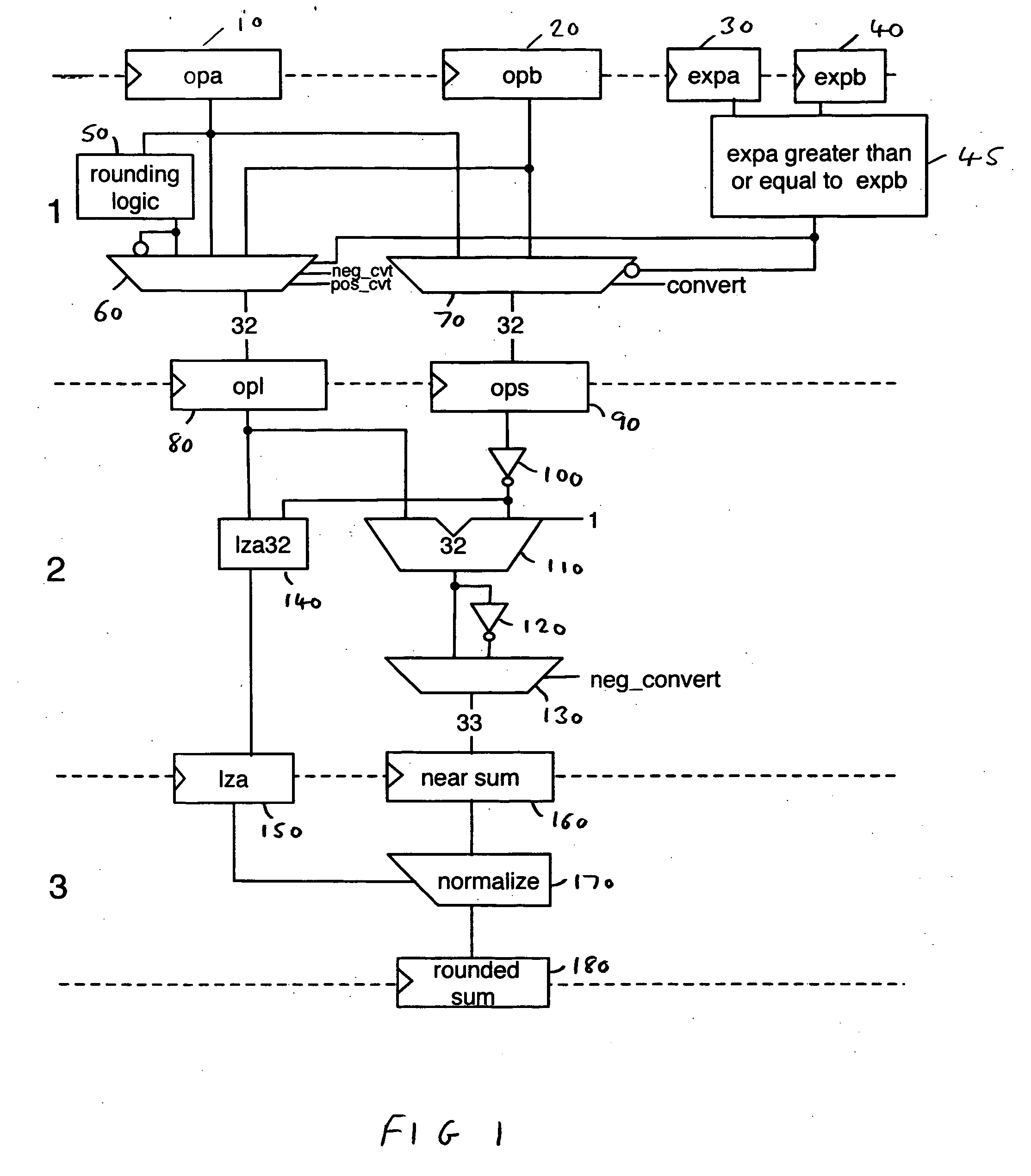

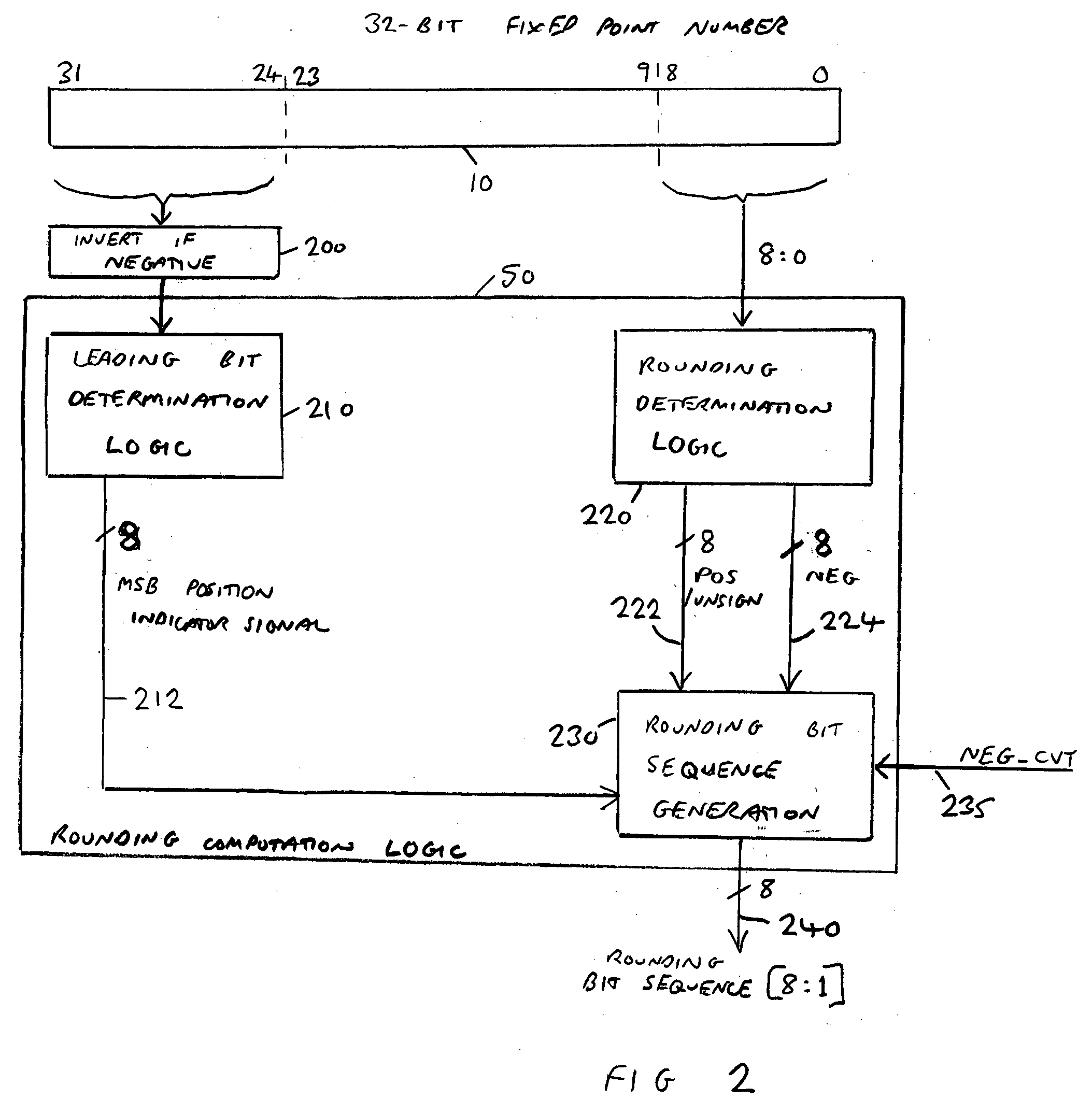

Data processing apparatus and method for converting a fixed point number to a floating point number

Active · US20060136536A1 · Sequencing is required · Digital data processing details · Digital computer details · Least significant bit · Fixed-point arithmetic

A data processing apparatus and method are provided for converting an m-bit fixed point number to a rounded floating point number having an n-bit significand, where n is less than m. The data processing apparatus comprises determination logic for determining the bit location of the most significant bit of the value expressed within the m-bit fixed point number, and low order bit analysis logic for determining from a selected number of least significant bits of the m-bit fixed point number a rounding signal indicating whether a rounding increment is required in order to generate the n-bit significand. Generation logic is then arranged in response to the rounding signal to generate a rounding bit sequence appropriate having regard to the bit location determined by the determination logic. Adder logic then adds the rounding bit sequence to the m-bit fixed point number to generate an intermediate result, whereafter normalisation logic shifts the intermediate result to generate the n-bit significand. At this point, due to the incorporation of the rounding information prior to the addition, the generated n-bit significand is correctly rounded.
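The general pattern of "add the rounding bits first, then normalise" can be sketched as follows. This is a simplified illustration, not the claimed logic: it handles positive inputs only, uses round-half-up rather than any specific IEEE rounding mode, and the function name `fixed_to_significand` is hypothetical.

```python
# Sketch: reduce a positive fixed-point integer to an n-bit significand,
# injecting the rounding increment before the normalising shift.
# Round-half-up and the function name are illustrative assumptions.
def fixed_to_significand(x: int, n: int) -> tuple:
    """Return (significand, shift) with x approximately significand * 2**shift."""
    if x <= 0 or n <= 0:
        raise ValueError("x and n must be positive")
    msb = x.bit_length() - 1   # bit location of the most significant set bit
    shift = msb - (n - 1)      # low-order bits that must be dropped
    if shift <= 0:
        return x << -shift, shift  # value already fits: exact, pad with zeros
    rounded = x + (1 << (shift - 1))  # half an output ULP at the right place
    if rounded.bit_length() - 1 > msb:
        shift += 1             # rounding carried into a new top bit
    return rounded >> shift, shift
```

For instance, 23 (binary 10111) with n = 3 yields significand 6 and shift 2, representing 24. Because the increment is added before shifting, a single normalising shift produces the final significand even when the addition carries into a new top bit.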

Owner:ARM LTD

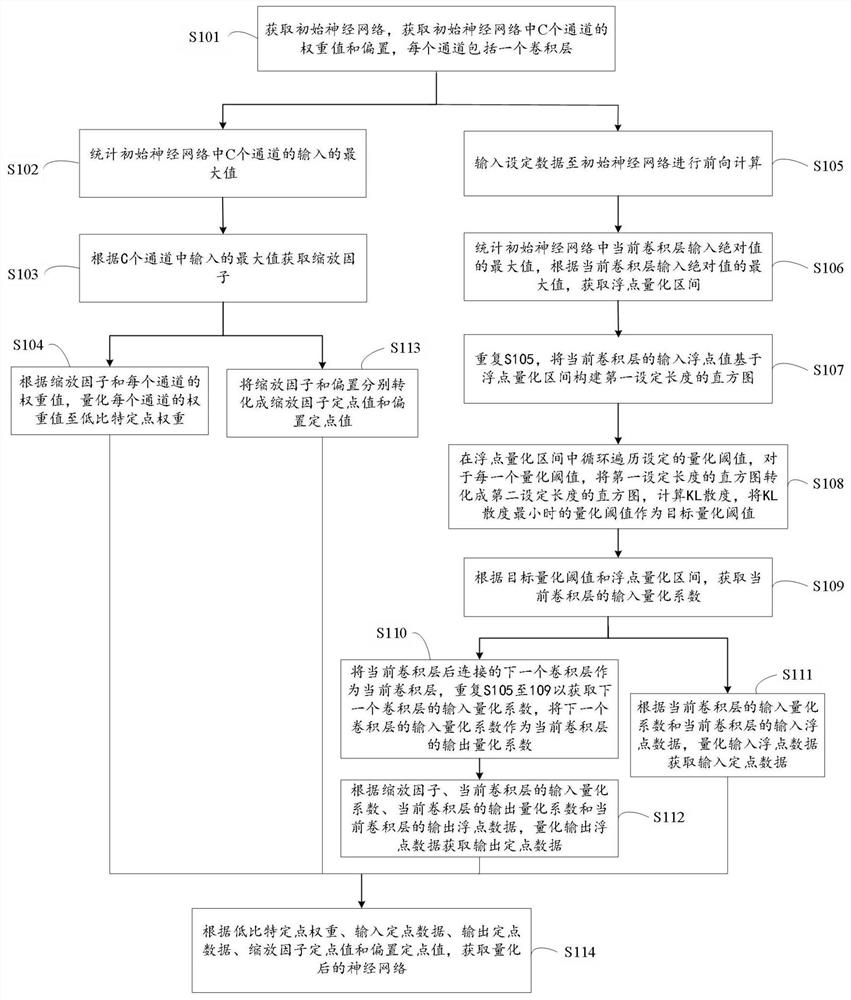

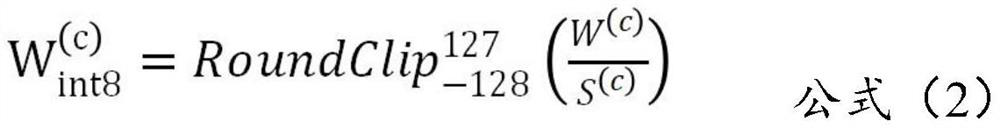

Neural network low-bit quantization method

Pending · CN112381205A · Practical · Improve Quantization Efficiency · Neural architectures · Physical realisation · Algorithm · Quantized neural networks

The invention relates to a neural network low-bit quantification method. The weight value of each channel of a neural network is quantified to a low-bit fixed-point weight. And the method also includes obtaining an input quantization coefficient of the current convolution layer according to the target quantization threshold and the floating point quantization interval; taking the input quantization coefficient of the next convolution layer as the output quantization coefficient of the current convolution layer; quantizing the input floating point data to obtain input fixed point data; and quantizing the output floating point data to obtain output fixed point data; and converting the scaling factor and the bias into a scaling factor fixed-point value and a bias fixed-point value respectively; according to the low-bit fixed-point weight, the input fixed-point data, the output fixed-point data, the scaling factor fixed-point value and the offset fixed-point value, obtaining a neural network, and applying the quantized neural network model to embedded equipment.

Owner:BEIJING TSINGMICRO INTELLIGENT TECH CO LTD

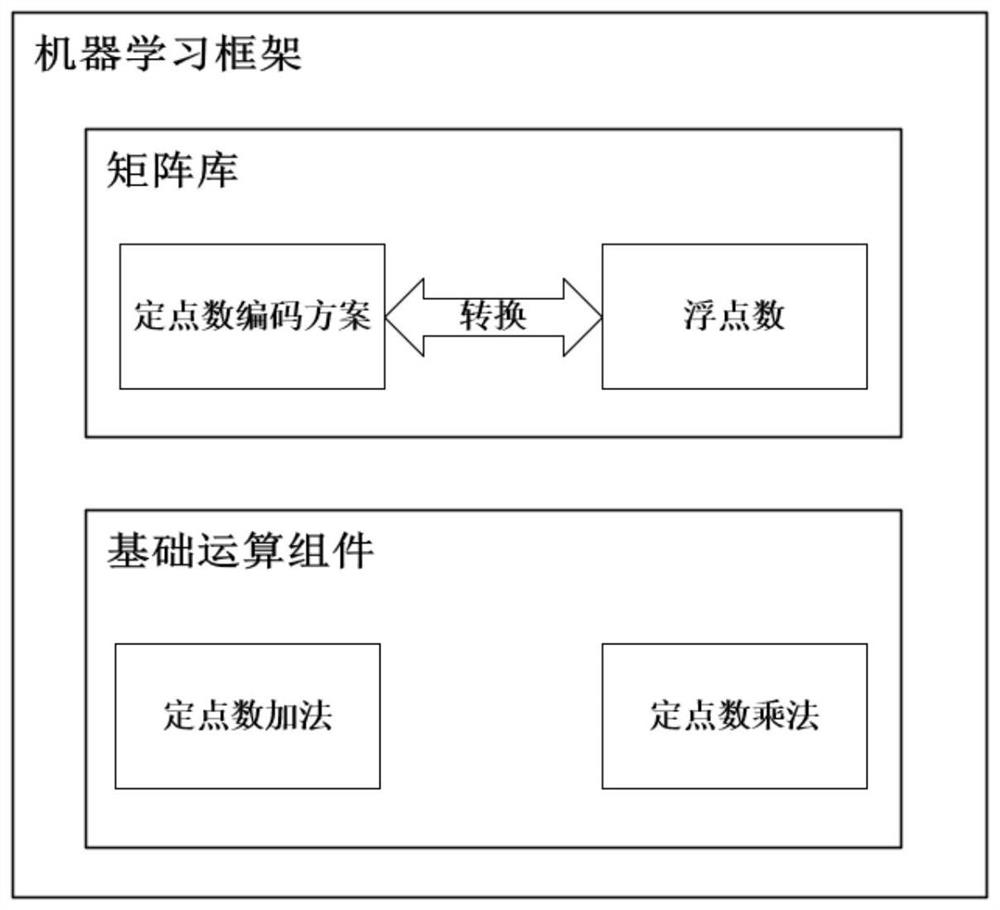

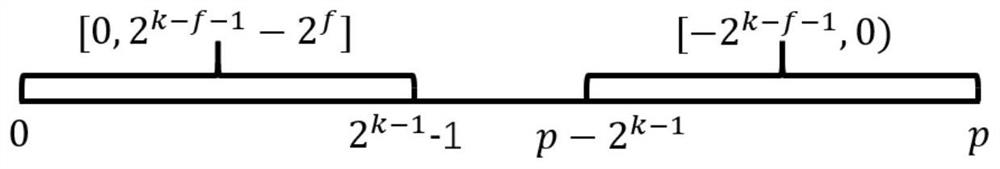

Fixed-point number coding and operation system for privacy protection machine learning

Active · CN111857649A · Safe and efficient fixed-point representation · Safe and Efficient Computing Support · Computation using non-contact making devices · Neural architectures · Algorithm · Privacy protection

The invention belongs to the technical field of network space safety, and particularly relates to a fixed-point number coding and operation system for privacy protection machine learning. The system comprises a fixed point number representation module, according to the fixed-point number encoding method, the fixed-point number encoding mode in the finite field is applied to privacy protection machine learning, the purpose of providing an overall solution of fixed-point number encoding and operation in privacy protection machine learning is achieved, and a fixed-point number encoding scheme and an operation mechanism in privacy protection machine learning are achieved. Compared with an existing machine learning framework, models (such as linear regression, logistic regression, a BP neural network and an LSTM neural network) trained by the machine learning framework for representing the fixed-point number through the system can execute prediction and classification tasks with almost the same precision.

Owner:FUDAN UNIV
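
One common way to encode fixed-point numbers in a finite field is sketched below. It is not taken from the patent: the prime `P`, the 16 fractional bits and all function names are assumptions, and the truncation after multiplication is done in the clear, whereas a real privacy-preserving protocol would perform it on secret shares.

```python
P = 2**61 - 1      # assumed field modulus (a Mersenne prime)
FRAC_BITS = 16     # assumed number of fractional bits


def encode(x):
    """Scale a real number by 2**FRAC_BITS and reduce it into the field."""
    return round(x * (1 << FRAC_BITS)) % P


def to_signed(a):
    """Interpret the upper half of the field as negative values."""
    return a - P if a > P // 2 else a


def decode(a):
    """Recover the real number represented by a field element."""
    return to_signed(a) / (1 << FRAC_BITS)


def add(a, b):
    """Addition is plain field addition; the scale is unchanged."""
    return (a + b) % P


def mul(a, b):
    """The field product carries 2*FRAC_BITS fractional bits, so truncate once.

    Valid only while the true product stays within (-P/2, P/2).
    """
    raw = to_signed((a * b) % P)
    return (raw >> FRAC_BITS) % P


if __name__ == "__main__":
    print(decode(add(encode(1.5), encode(-2.25))))
    print(decode(mul(encode(1.5), encode(-2.0))))
```

Negative values wrap around the modulus, which is why decoding first maps the element back to a signed integer before removing the scale.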

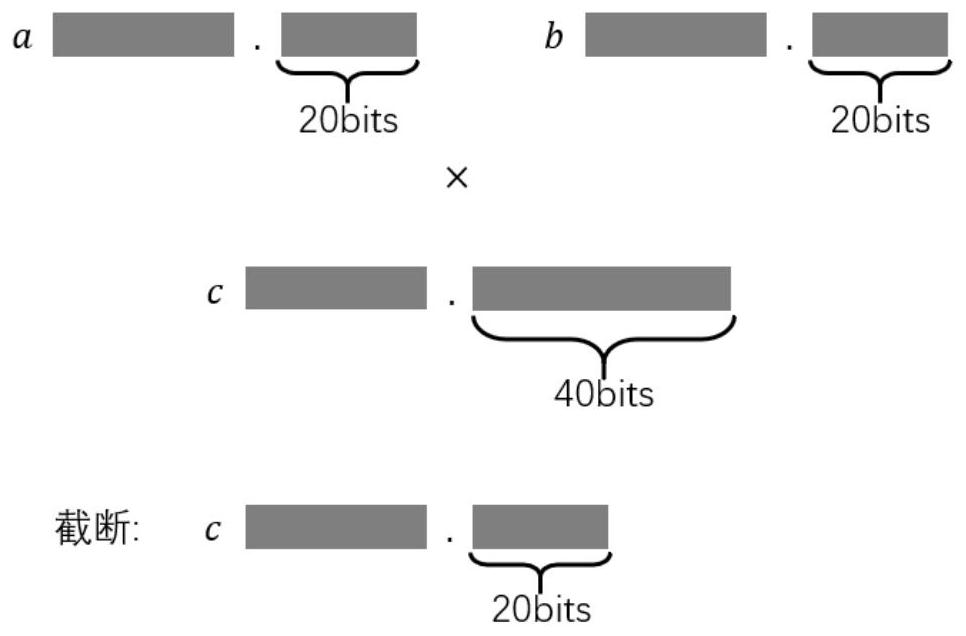

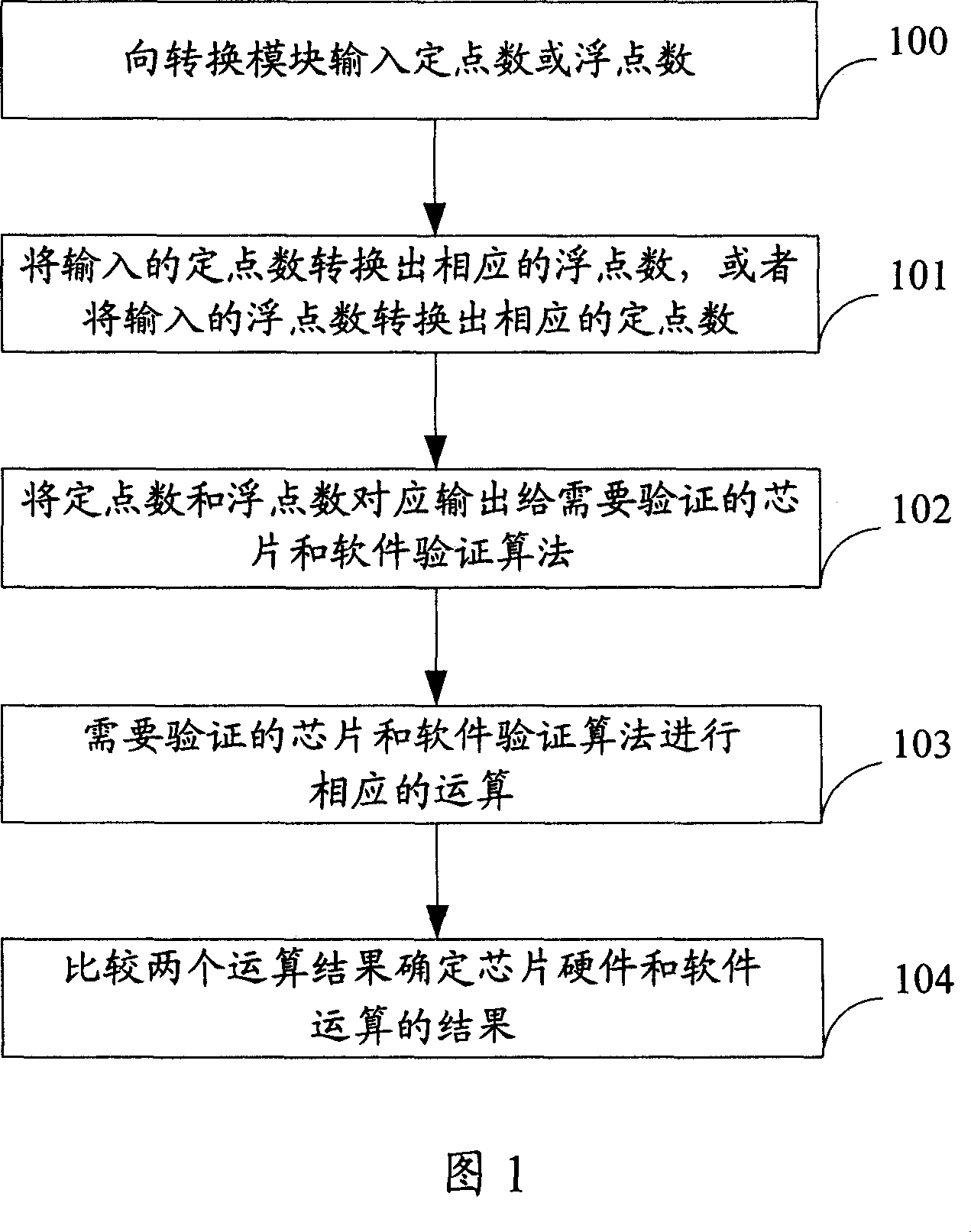

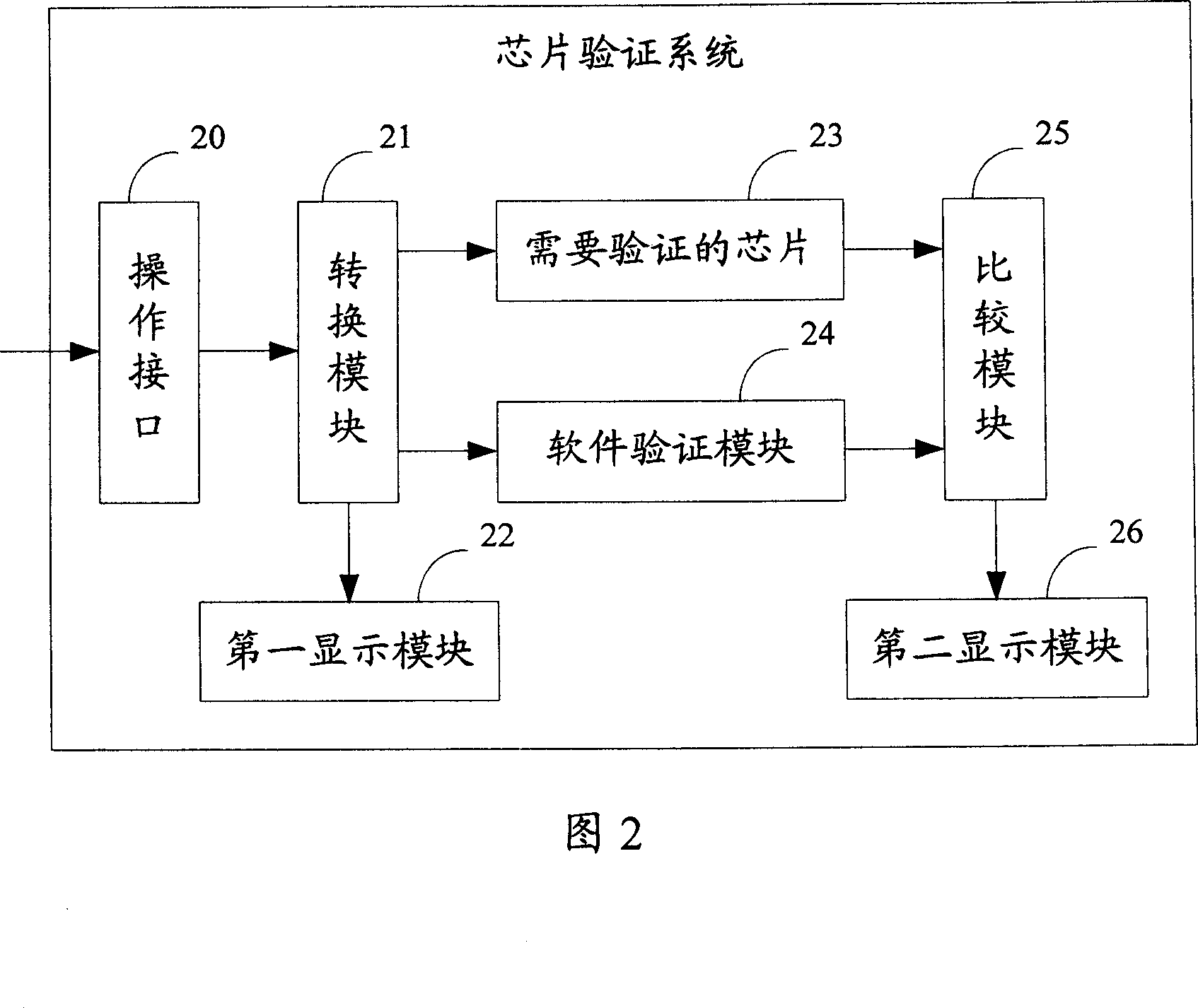

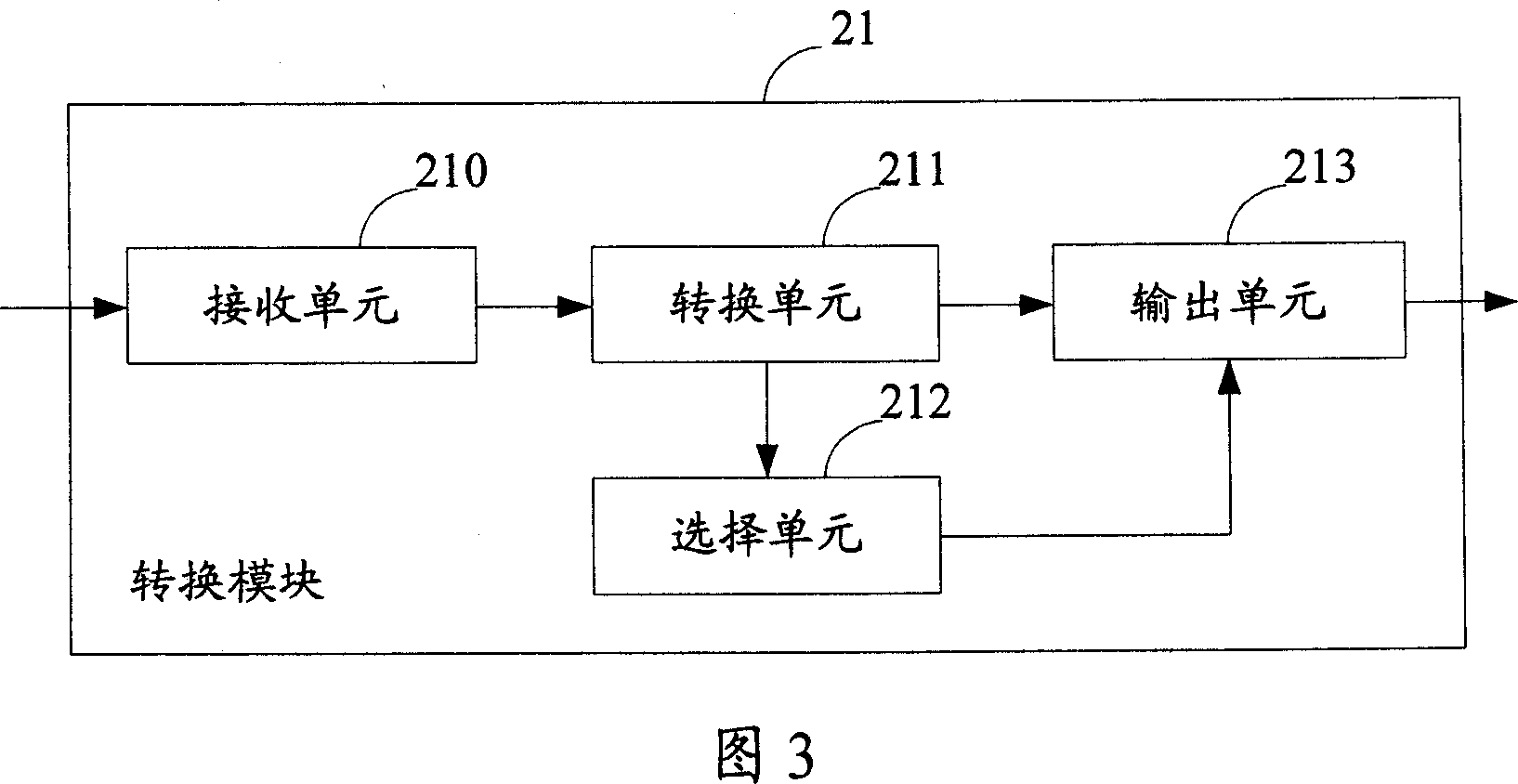

Method and system of chip checking

InactiveCN1949184AImprove verification efficiencyHigh precisionDetecting faulty computer hardwareFixed-point arithmeticSoftware

The invention discloses a chip verification method and system. The method includes the following steps: using an operating interface to input a fixed-point number or a floating-point number to a conversion device; converting the fixed-point number into the corresponding floating-point number according to its sign flag, word length and scaling, or converting the floating-point number into a fixed-point number; outputting the numbers to the chip under verification and to a software verification algorithm, each of which performs the corresponding operation; and comparing the chip's result with the algorithm's result. When the two results are the same, the hardware and software operation of the chip is correct. The invention can be used to increase chip verification efficiency and precision.

Owner:VIMICRO CORP
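
The conversion step can be sketched as follows. The sketch assumes two's-complement storage and takes the scaling parameter to be the number of fractional bits; the function names and the saturation behaviour are illustrative choices, not details from the patent.

```python
def fixed_to_float(raw, word_length, frac_bits, signed=True):
    """Interpret a raw bit pattern as a fixed-point value."""
    raw &= (1 << word_length) - 1                  # keep only word_length bits
    if signed and raw >> (word_length - 1):        # sign bit set: negative
        raw -= 1 << word_length
    return raw / (1 << frac_bits)


def float_to_fixed(value, word_length, frac_bits, signed=True):
    """Round a float to the nearest fixed-point bit pattern, saturating."""
    scaled = round(value * (1 << frac_bits))
    if signed:
        lo, hi = -(1 << (word_length - 1)), (1 << (word_length - 1)) - 1
    else:
        lo, hi = 0, (1 << word_length) - 1
    scaled = max(lo, min(hi, scaled))              # saturate instead of wrapping
    return scaled & ((1 << word_length) - 1)       # two's-complement bit pattern


if __name__ == "__main__":
    raw = float_to_fixed(-1.5, word_length=16, frac_bits=8)
    print(hex(raw), fixed_to_float(raw, word_length=16, frac_bits=8))
```

The same bit pattern decodes to different values depending on the sign flag, which is why all three parameters are needed to compare a chip's output against a floating-point software model.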

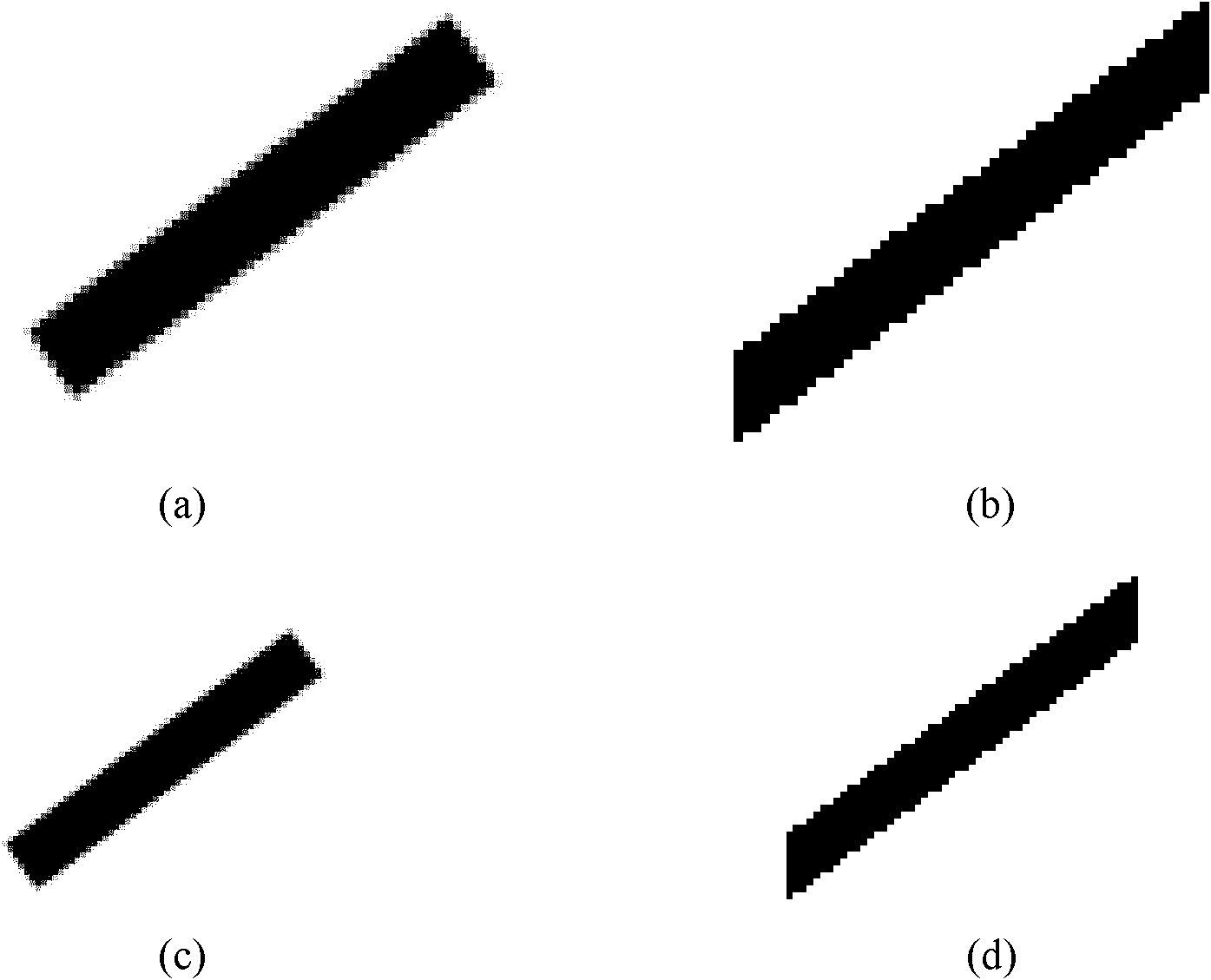

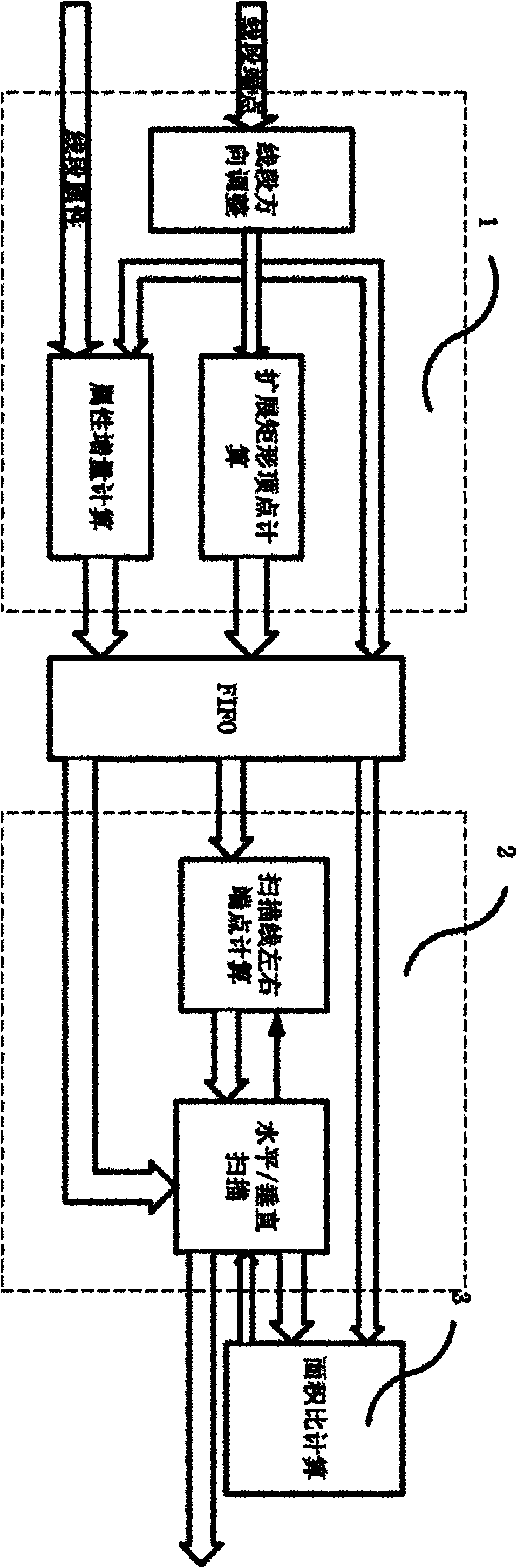

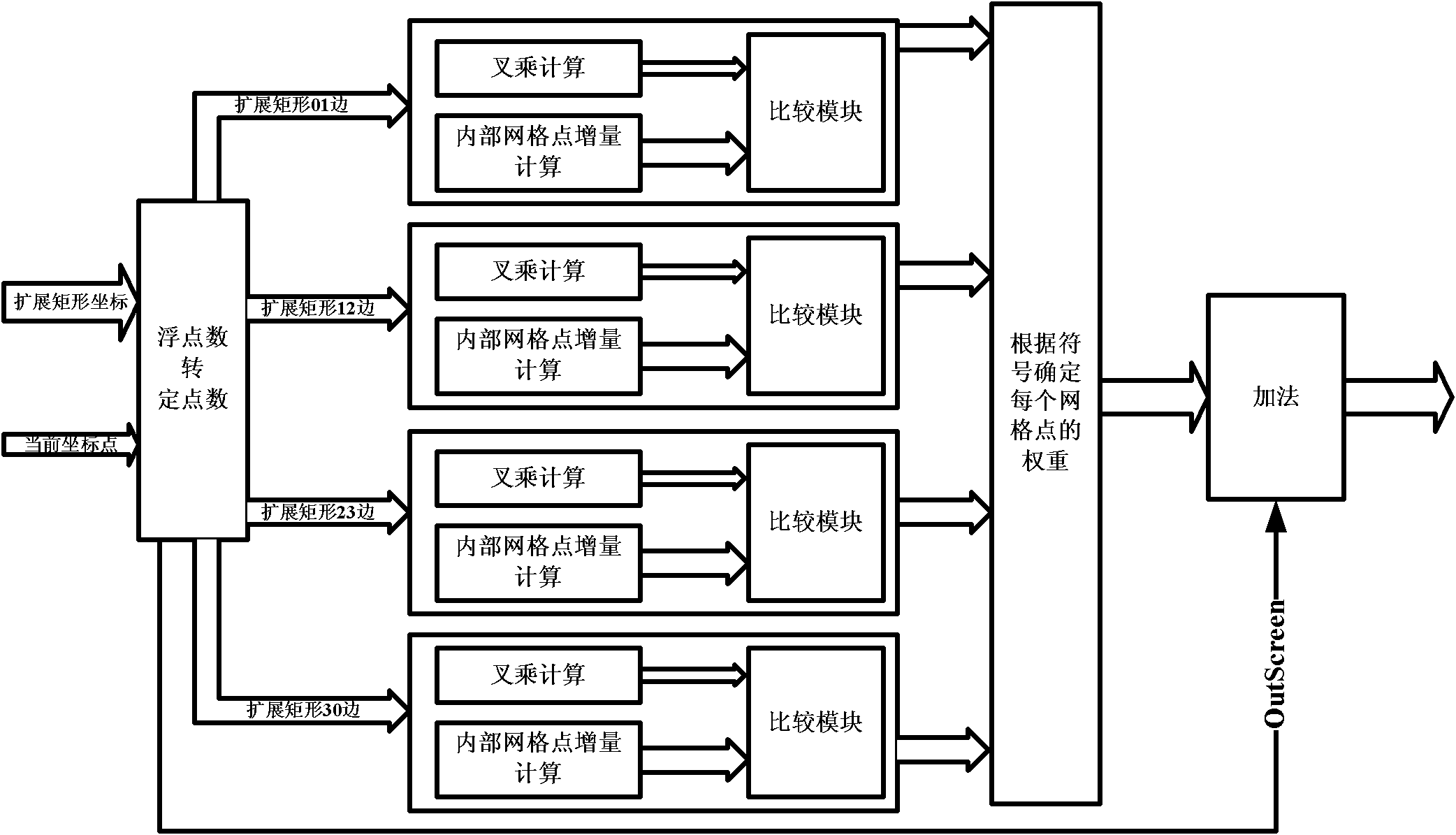

Method for realizing anti-aliasing of line segment integrating floating points and fixed points by using supersampling algorithm

InactiveCN102063523AGuaranteed efficiencySimple logicSpecial data processing applicationsAnti-aliasingAlgorithm

The invention discloses a method for anti-aliasing a line segment with a supersampling algorithm that combines floating-point and fixed-point arithmetic. The method comprises the steps of: adjusting the endpoint order of the line segment; expanding the line segment into a rectangle; calculating the attribute increments of the line segment; writing data into a FIFO (first-in, first-out) buffer; calculating the boundaries of each scan line; generating pixel coordinates; calculating the area ratio; and finally, after the coordinates generated by scanning and the attributes have been delayed by the number of cycles needed to calculate the area ratio, outputting and fusing the coordinates, the attributes and the area ratio. By combining floating-point and fixed-point precision, the coordinates of the four vertices of the expanded rectangle are calculated in floating point, the coordinate points generated along each scan line are turned into fixed-point numbers by adding two fractional digits, and the vertices of the expanded rectangle are converted into fixed-point numbers with two fractional digits. The area ratio can therefore be calculated in fixed point, which saves a large amount of resources at a small loss of accuracy.

Owner:CHANGSHA JINGJIA MICROELECTRONICS

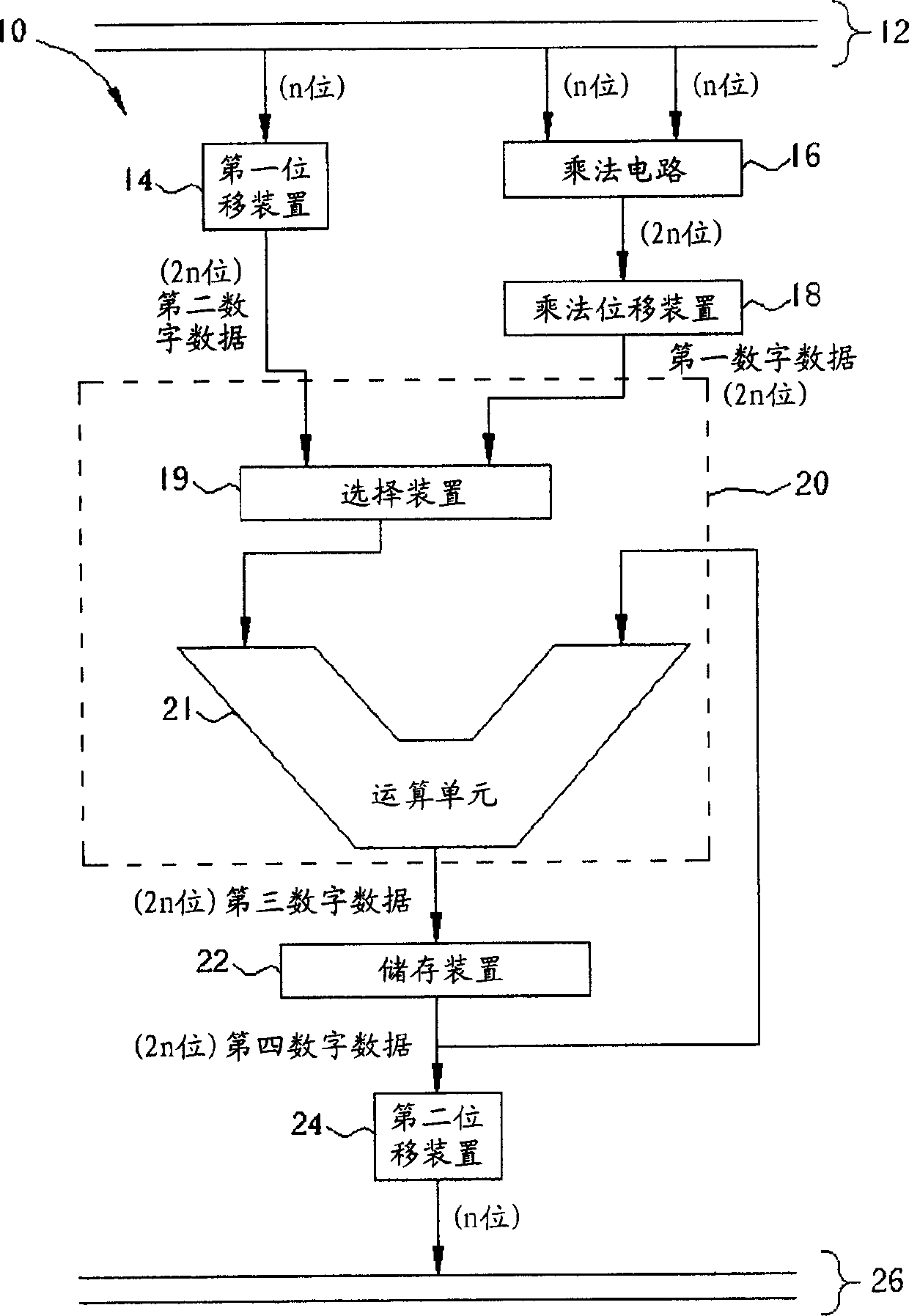

Digital signal processor applying skip type floating number operational method

InactiveCN1570847AComputation using non-contact making devicesData conversionDigital signal processingDigital data

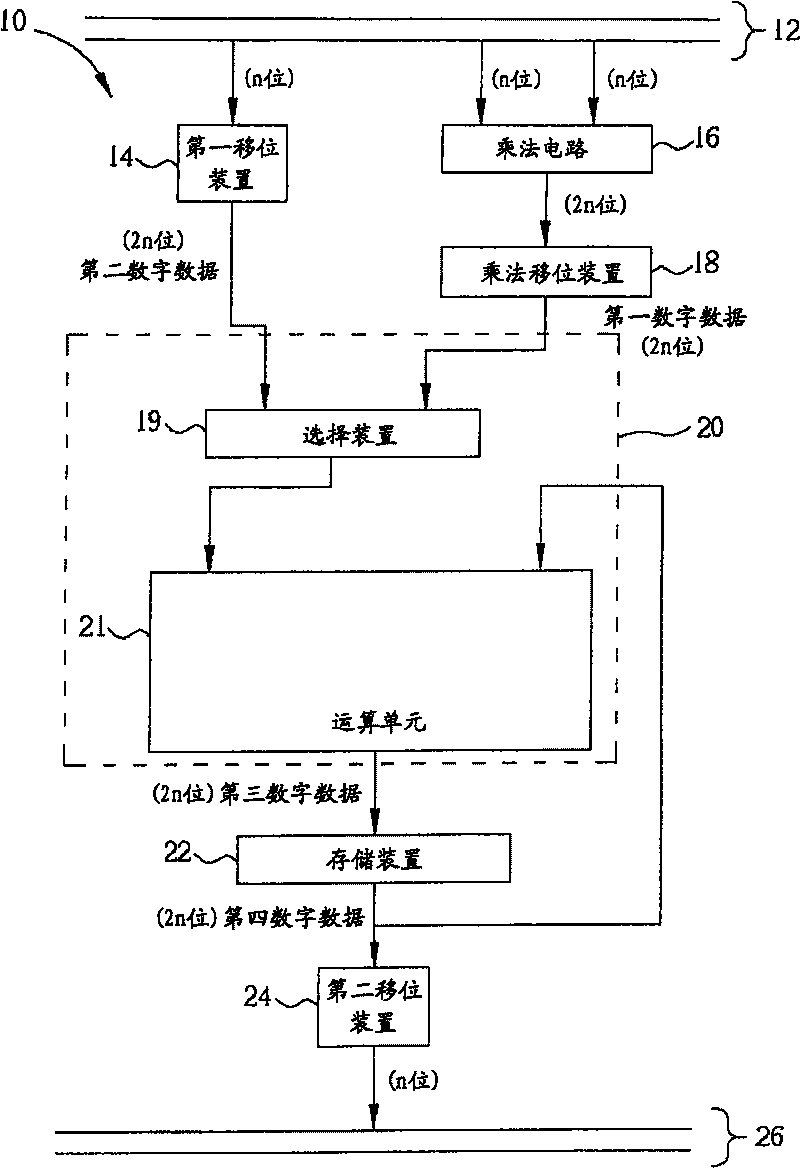

This invention provides a digital signal processor for processing multiple digital data items that have either a specific fixed-point notation or a skip-type floating-point notation. The digital signal processor comprises a multiplying circuit, an extractive shifting device, multiple notation transformation circuits and an arithmetic element. The multiplying circuit multiplies two low-order digital data items to obtain a high-order digital data item. The extractive shifting device is electrically connected to the multiplying circuit and can convert a high-order digital data item in the skip-type floating-point notation into a high-order digital data item in the fixed-point notation. Each notation transformation circuit can convert digital data between the fixed-point notation and the skip-type floating-point notation. The arithmetic element is used to perform computations on multiple digital data items.

Owner:MEDIATEK INC
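
The idea of multiplying two narrower operands into a wider product and then extracting and shifting the useful bits can be illustrated with ordinary Q15 fixed-point arithmetic. This sketch does not model the skip-type floating-point notation, which the abstract does not define; the Q15 format, the rounding and the saturation are assumptions chosen for illustration.

```python
Q15_MIN, Q15_MAX = -32768, 32767   # range of a signed 16-bit Q15 value


def q15_mul(a, b):
    """Multiply two Q15 integers and return a Q15 result.

    The raw product of two 16-bit Q15 values is a 32-bit Q30 value, so the
    useful bits are extracted by rounding and shifting right by 15.
    """
    prod = a * b                    # wide (Q30) intermediate product
    prod += 1 << 14                 # add half an LSB to round to nearest
    result = prod >> 15             # drop 15 fractional bits: Q30 -> Q15
    return max(Q15_MIN, min(Q15_MAX, result))   # saturate on overflow


if __name__ == "__main__":
    half = 1 << 14                  # 0.5 in Q15
    print(q15_mul(half, half))      # 0.25 in Q15
```

Saturation matters for the single overflowing case, -1.0 times -1.0, whose exact result +1.0 is not representable in Q15.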

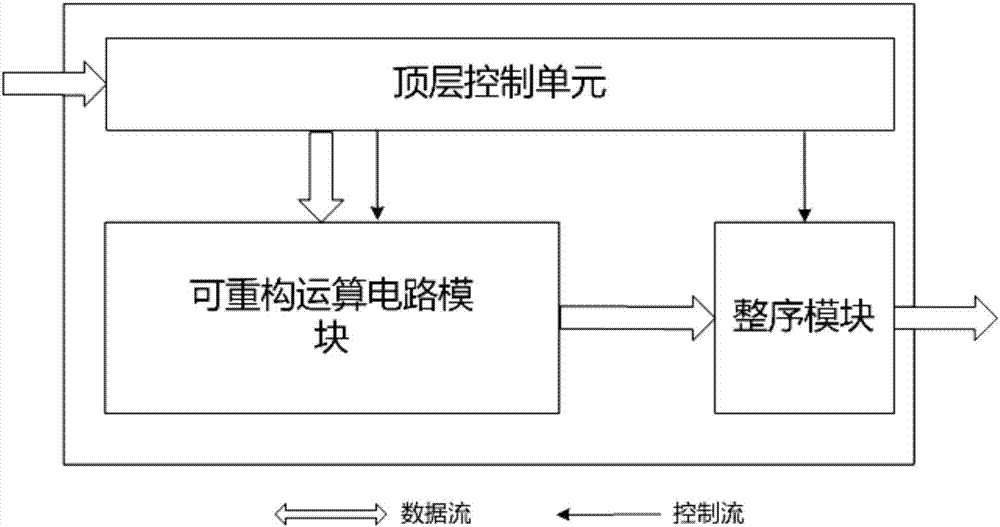

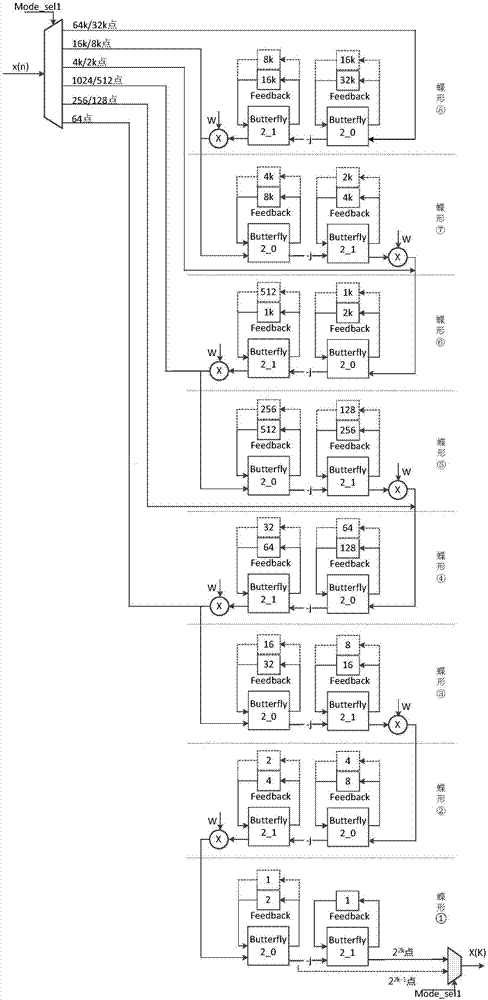

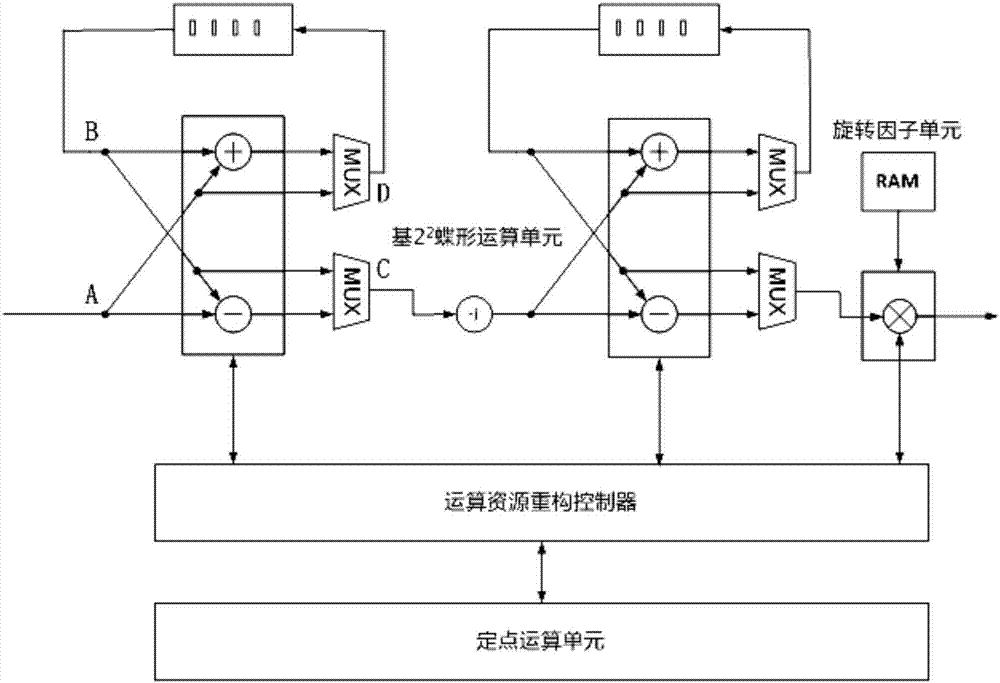

Reconfigurable universal fixed floating-point FFT processor

InactiveCN106951394AImprove versatilitySave resourcesDigital data processing detailsComplex mathematical operations18-bitComputer architecture

The invention provides a reconfigurable universal fixed-point and floating-point FFT processor, which can perform both 18-bit fixed-point FFT operations and 32-bit single-precision floating-point FFT operations. In the processor, a fixed-point arithmetic device (comprising a multiplier and an adder) is separated from the main body structure and can be reconfigured as a single-precision floating-point arithmetic device. The processor main body completes fixed-point or floating-point FFT operations by calling either the fixed-point arithmetic device or the single-precision floating-point arithmetic device formed by reconfiguration. The processor adopts a mixed-radix pipeline structure and supports both fixed-point and floating-point calculation as well as a configurable number of FFT points, so that the universality of the processor is effectively improved while accuracy and data throughput are maintained.

Owner:NANJING UNIV

