Configurable approximate multiplier for quantizing convolutional neural network and implementation method of configurable approximate multiplier

A technology in the field of convolutional neural networks and multipliers, applied to configurable approximate multipliers. It addresses the problems of poor efficiency, small bit width, and DAS multipliers not being used, and improves computing efficiency while reducing area overhead.

Embodiment Construction

[0034] The present invention will be described in further detail below in conjunction with the accompanying drawings and specific embodiments.

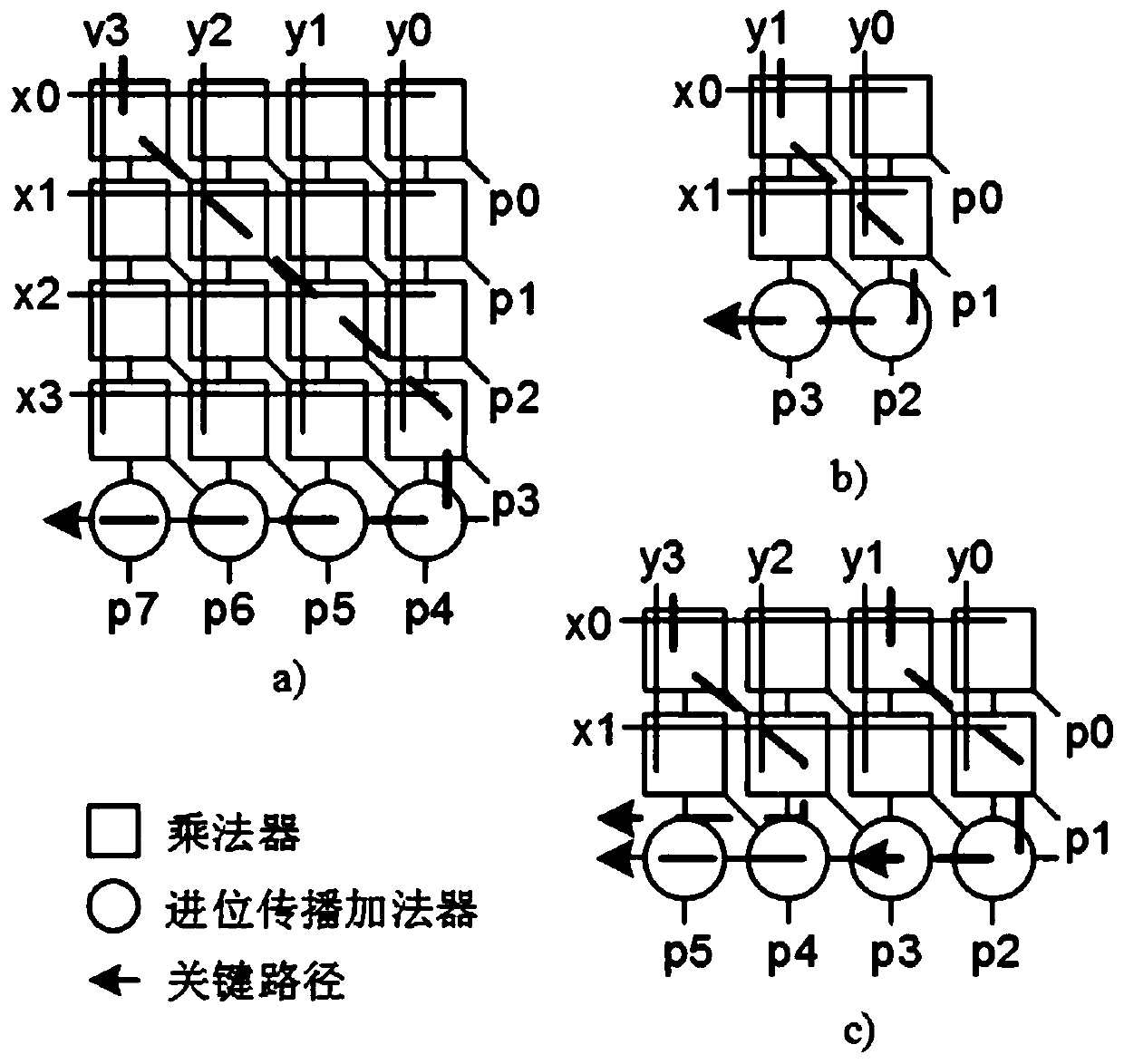

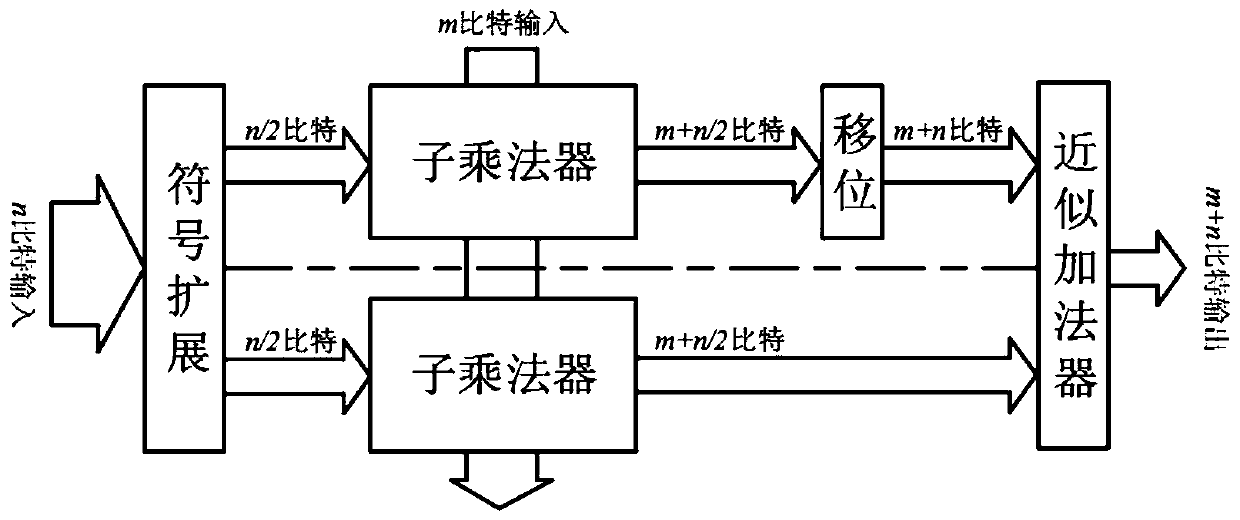

[0035] As shown in Figure 2, the present invention proposes a configurable approximate multiplier for quantized convolutional neural networks, comprising the following modules:

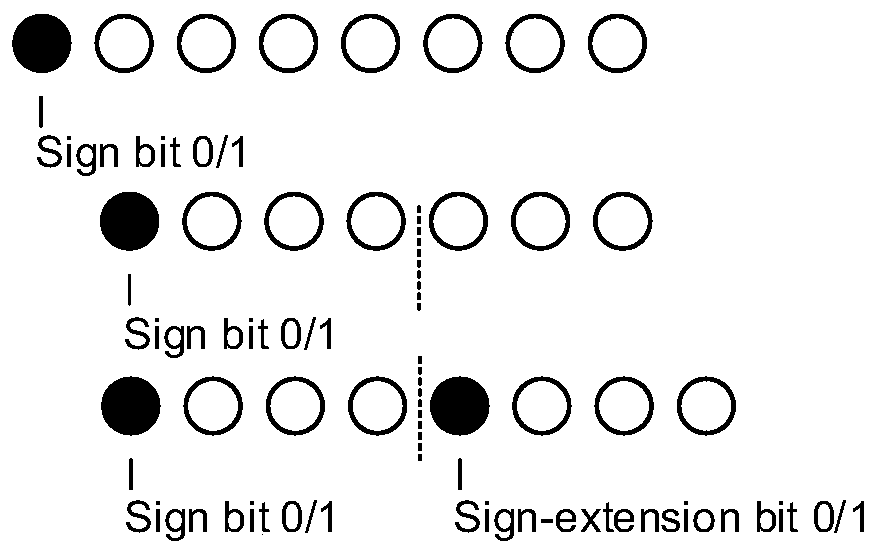

[0036] (1) Sign extension module: an n-bit signed fixed-point number whose value lies in the range $-2^{n-2}$ to $2^{n-2}-1$ is represented as two n/2-bit signed fixed-point numbers. When the n-bit number is non-negative, its lowest n/2-1 bits are taken, a 0 is prepended above their most significant bit, and the resulting n/2-bit value is used as the input of the low-bit multiplier. The remaining n/2 bits above them (after one of the two leading sign bits is dropped) are used as the input of the high-bit multiplier.
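The non-negative case can be sketched in Python as follows. This is a minimal illustration, not the patented circuit: it assumes n is even and the input is an integer in $[0, 2^{n-2}-1]$, and the function name `split_nonnegative` is hypothetical. Because the top two bits of such a number are both 0, the split satisfies $x = H \cdot 2^{n/2-1} + L$, where H and L are the high-bit and low-bit multiplier inputs.

```python
def split_nonnegative(x: int, n: int) -> tuple[int, int]:
    """Split a non-negative n-bit signed fixed-point integer into two
    n/2-bit signed parts (high, low) with x == high * 2**(n//2 - 1) + low.

    Hypothetical helper for the non-negative case of the sign extension
    module only; the negative case is not modeled here.
    """
    if n % 2 != 0 or n < 4:
        raise ValueError("n must be an even bit width >= 4")
    if not 0 <= x <= 2 ** (n - 2) - 1:
        raise ValueError("x must lie in [0, 2**(n-2) - 1] for this case")
    k = n // 2 - 1              # number of low bits routed to the low-bit multiplier
    low = x & ((1 << k) - 1)    # lowest n/2-1 bits; the implicit leading 0 makes it n/2 bits
    high = x >> k               # remaining bits; always fits in n/2 bits with a leading 0
    return high, low


high, low = split_nonnegative(45, 8)  # 45 = 0b0010_1101
print(high, low)                      # prints "5 5"
```

Both parts always carry a leading 0, so they stay valid non-negative n/2-bit signed operands for the two smaller multipliers.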

[0037] When n=8, the split method is:

[0038] 00XX_XXXX=0XXX_XXX→0XXX_0XXX
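The n = 8 bit pattern above can be reproduced at the string level with the sketch below, which mirrors the notation of [0038]: format the value as 8 bits, drop one redundant sign bit to get 7 bits, send the upper 4 bits to the high-bit multiplier, and prepend a 0 to the lowest 3 bits for the low-bit multiplier. The helper name `split_pattern_n8` is hypothetical, and only the non-negative range 0 to 63 is handled.

```python
def split_pattern_n8(x: int) -> str:
    """Render the mapping 00XX_XXXX = 0XXX_XXX -> 0XXX_0XXX for a
    non-negative 8-bit value x (hypothetical illustration helper)."""
    if not 0 <= x <= 63:
        raise ValueError("x must satisfy 0 <= x <= 63 (top two bits are 00)")
    bits8 = format(x, "08b")    # 8-bit form, e.g. '00101101'
    bits7 = bits8[1:]           # drop one redundant sign bit: '0101101'
    high = bits7[:4]            # upper n/2 = 4 bits -> high-bit multiplier
    low = "0" + bits7[4:]       # lowest n/2-1 = 3 bits with a 0 prepended -> low-bit multiplier
    return f"{bits8[:4]}_{bits8[4:]} = {bits7[:4]}_{bits7[4:]} -> {high}_{low}"


print(split_pattern_n8(45))  # prints "0010_1101 = 0101_101 -> 0101_0101"
```

Reading the two 4-bit fields back as integers gives H = 5 and L = 5 for this input, and 5 · 8 + 5 = 45 recovers the original value.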

[0039] When the n-bit signed fixed-point number is negative, if the decimal value is less than -(2 n...
