109 results about "Quantized neural networks" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

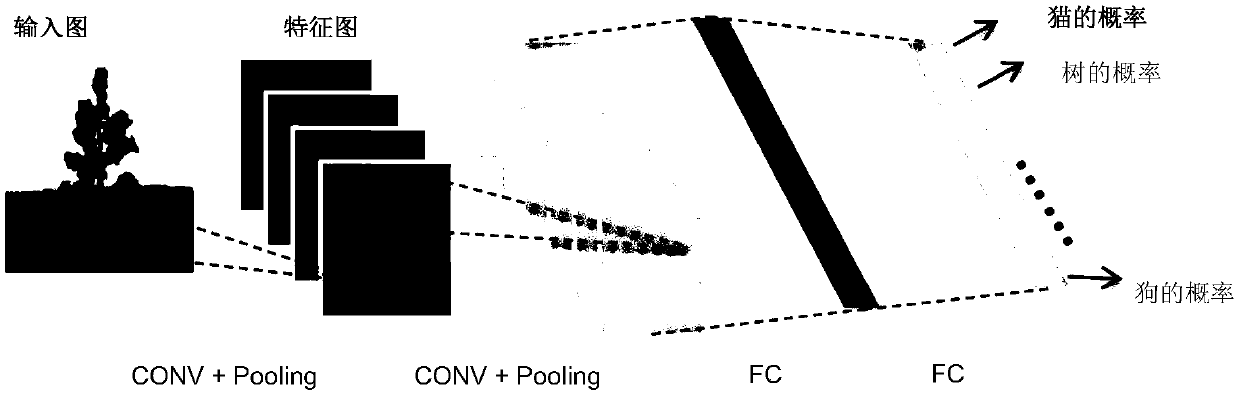

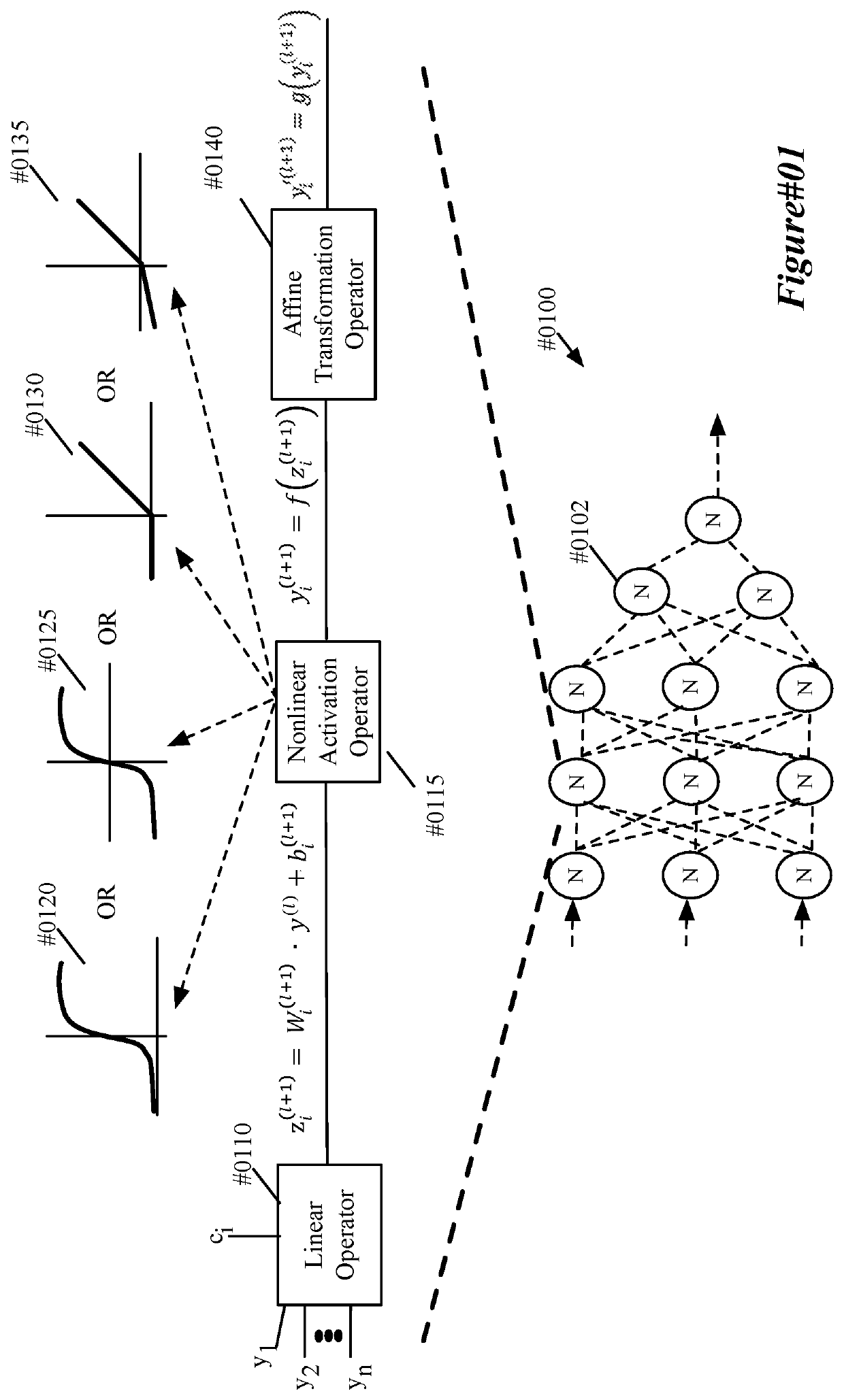

Quantized-CNN is a novel convolutional neural network (CNN) framework that simultaneously accelerates computation and compresses the model at test time.

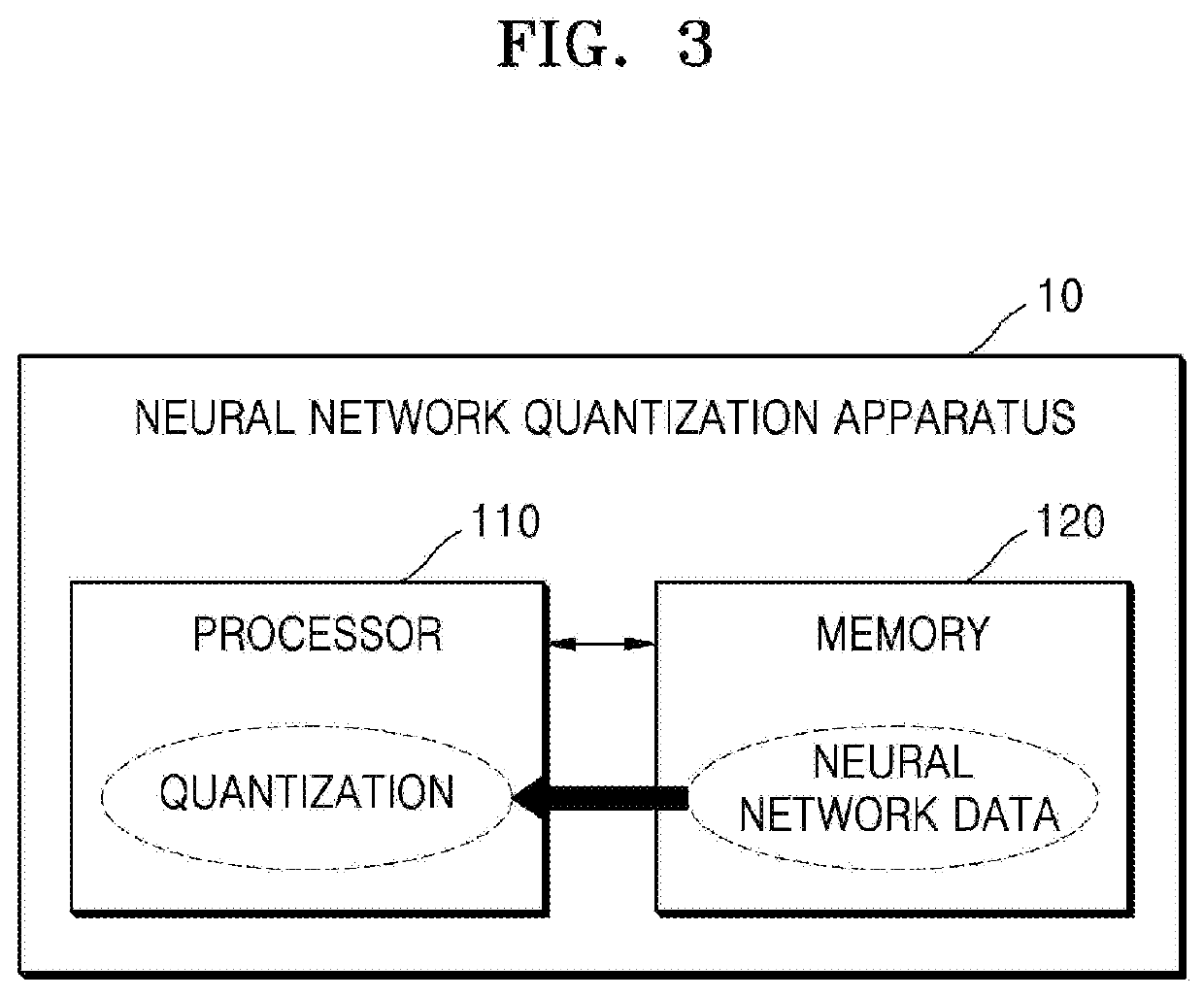

Method and apparatus for generating fixed-point quantized neural network

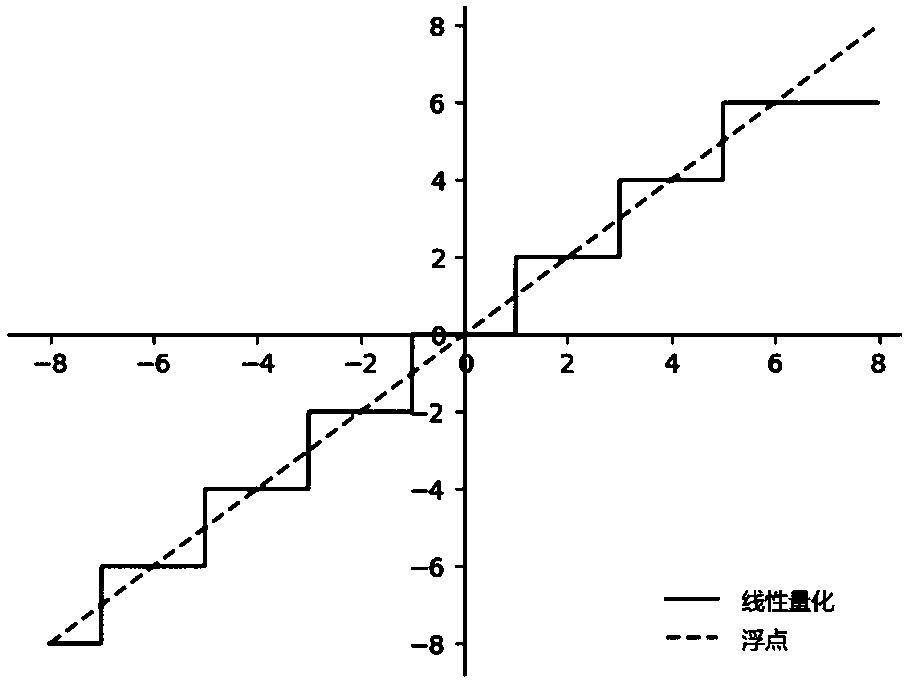

Status: Active | Publication: US20190042948A1 | Tags: Well formed; Digital data processing details; Code conversion; Quantized neural networks; Floating point

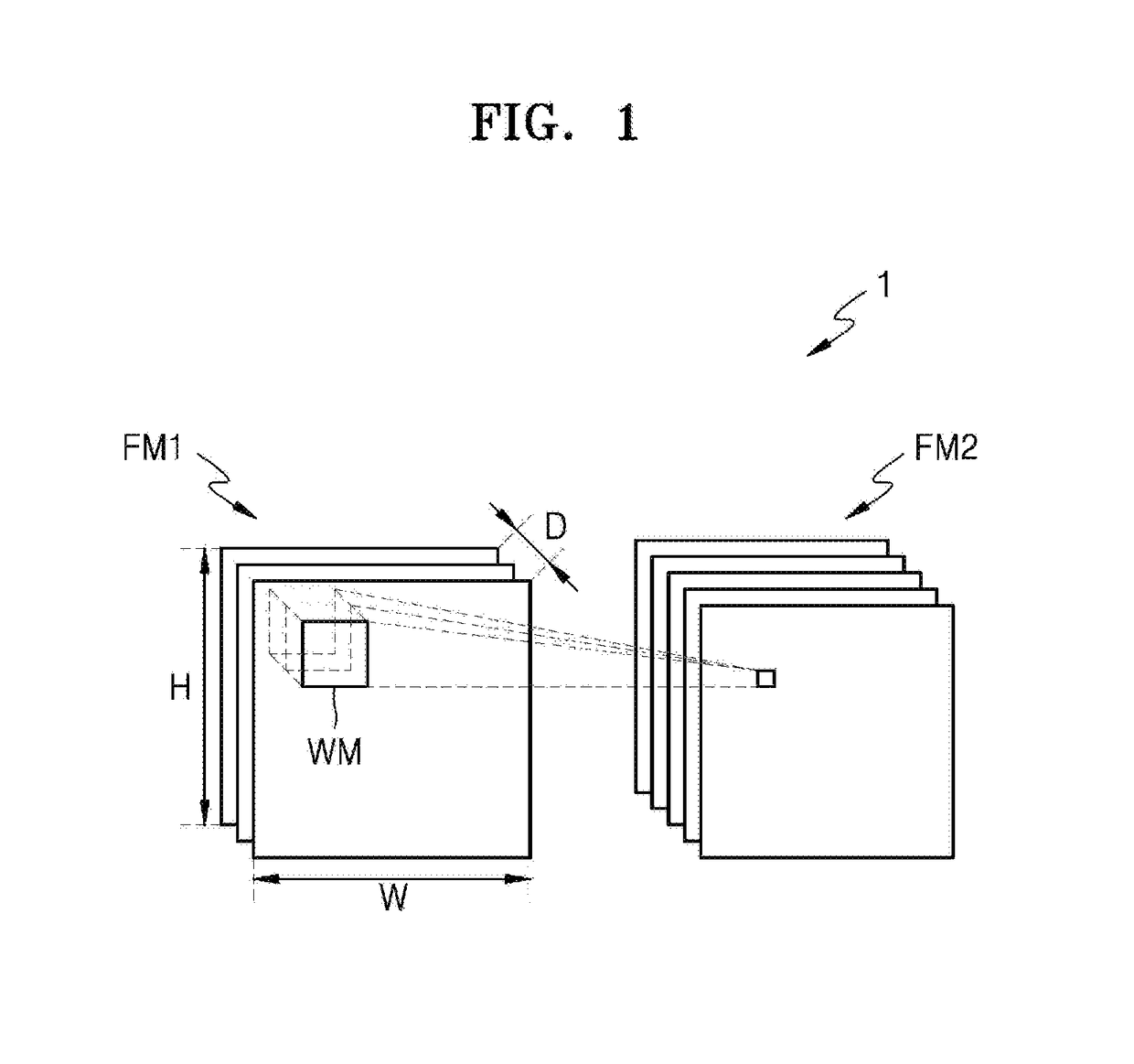

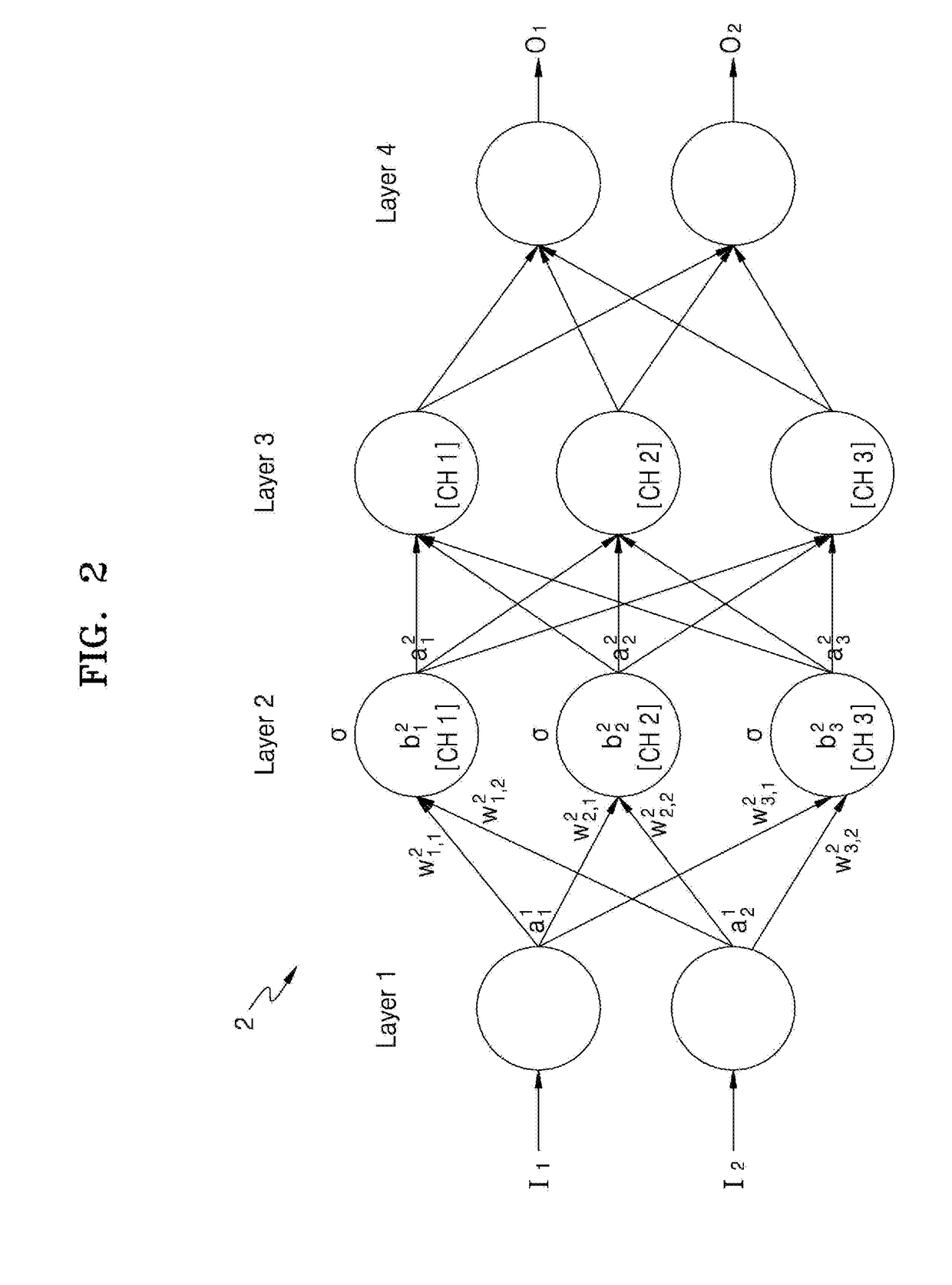

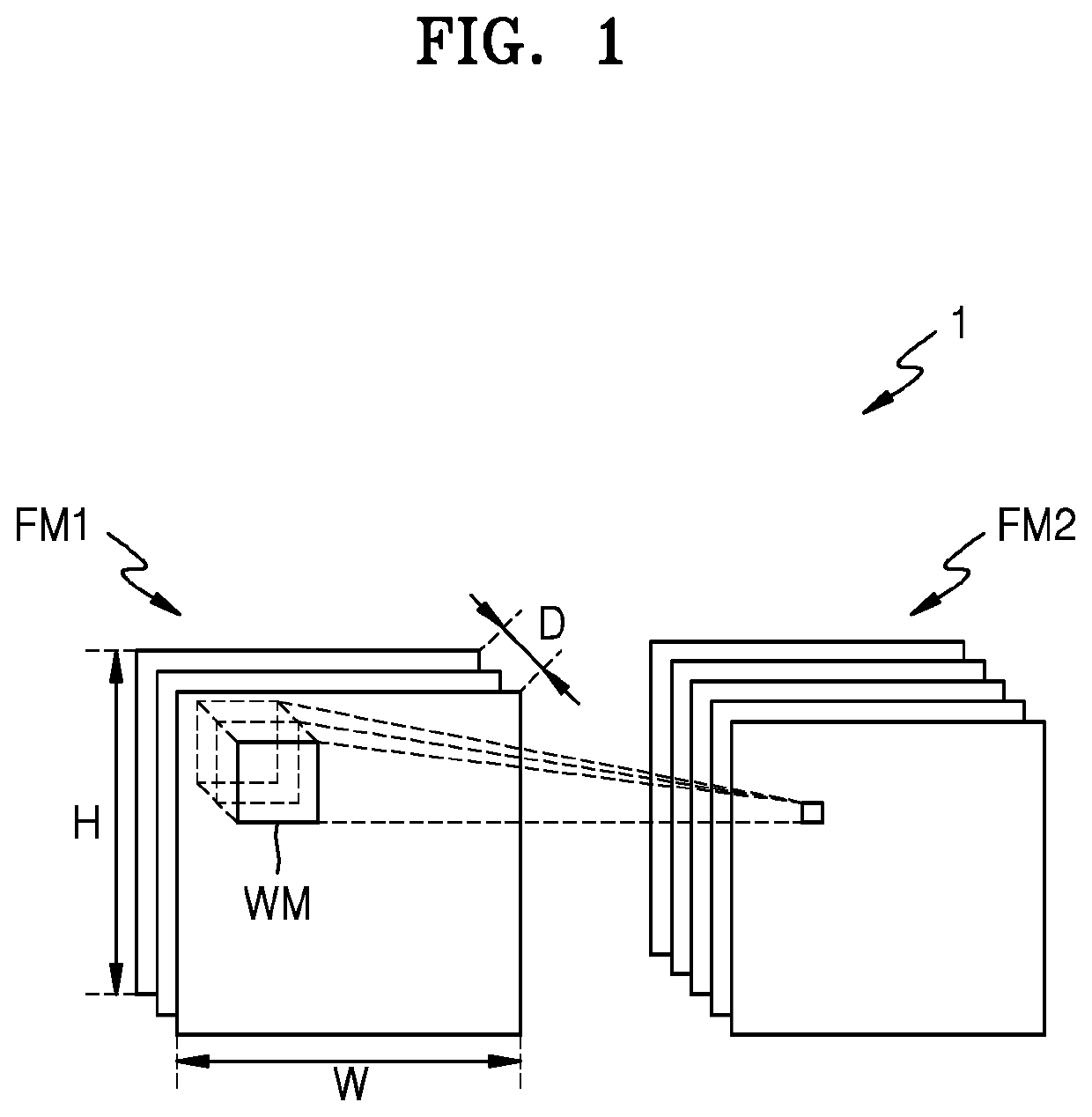

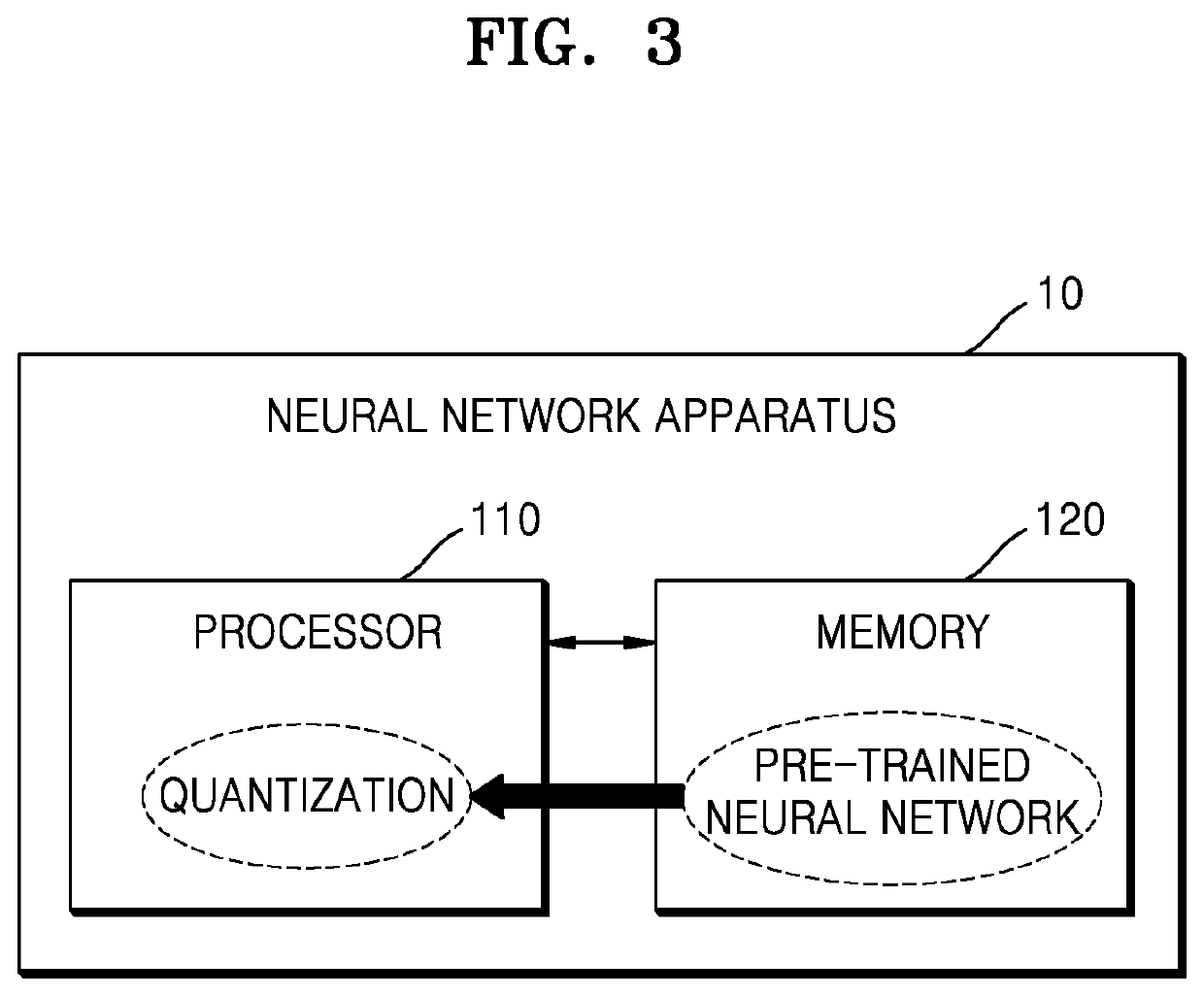

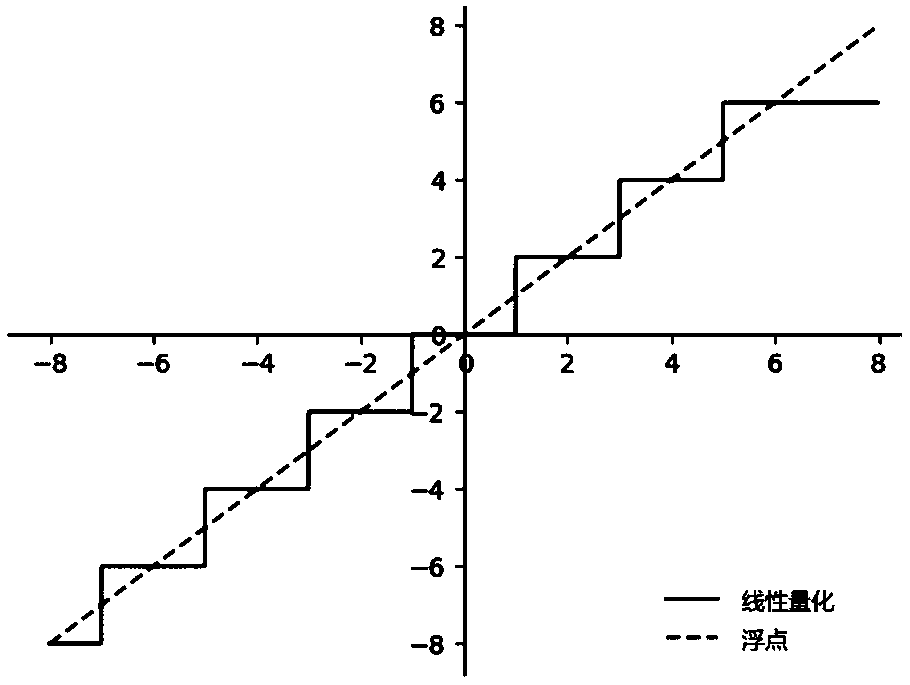

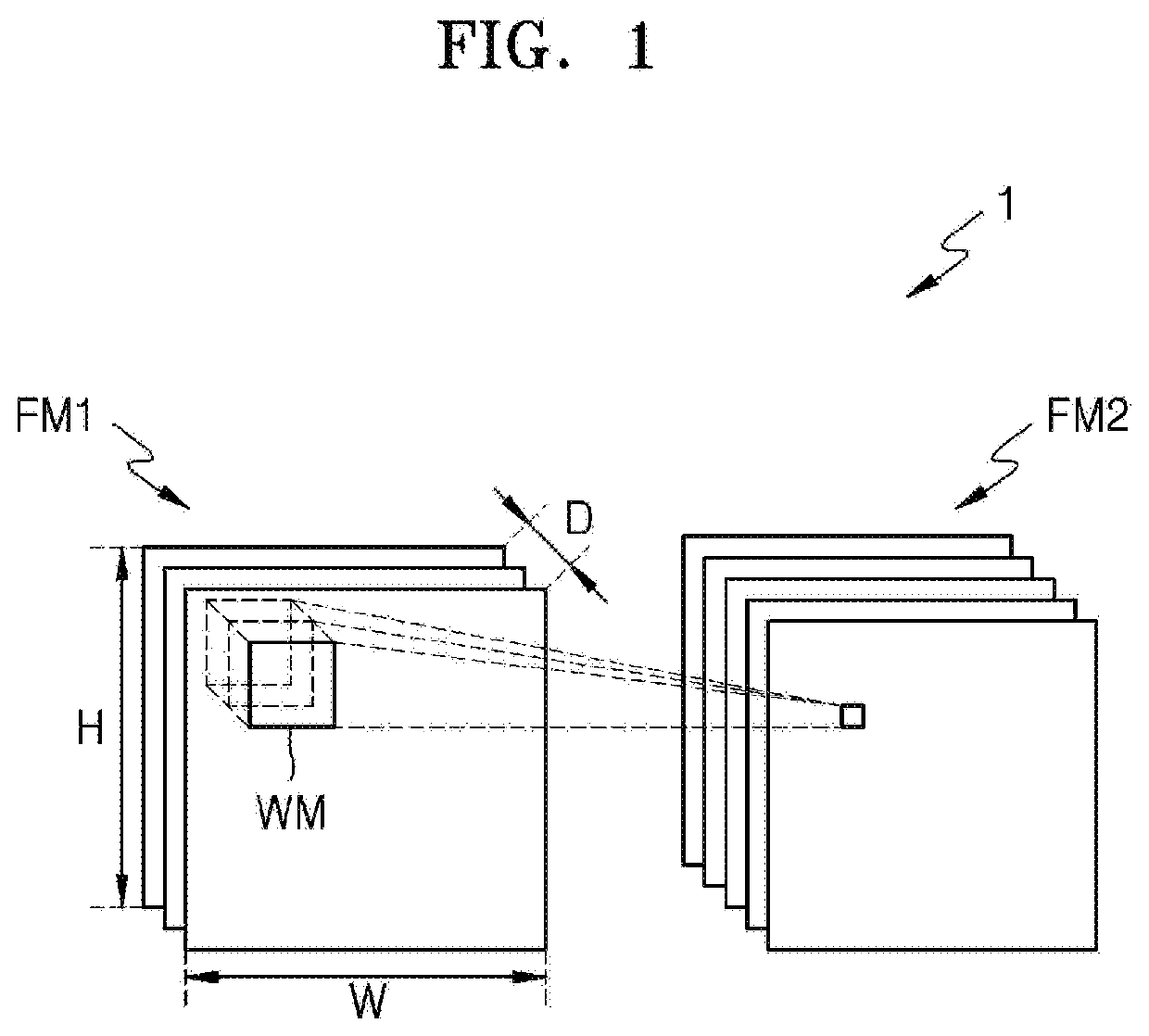

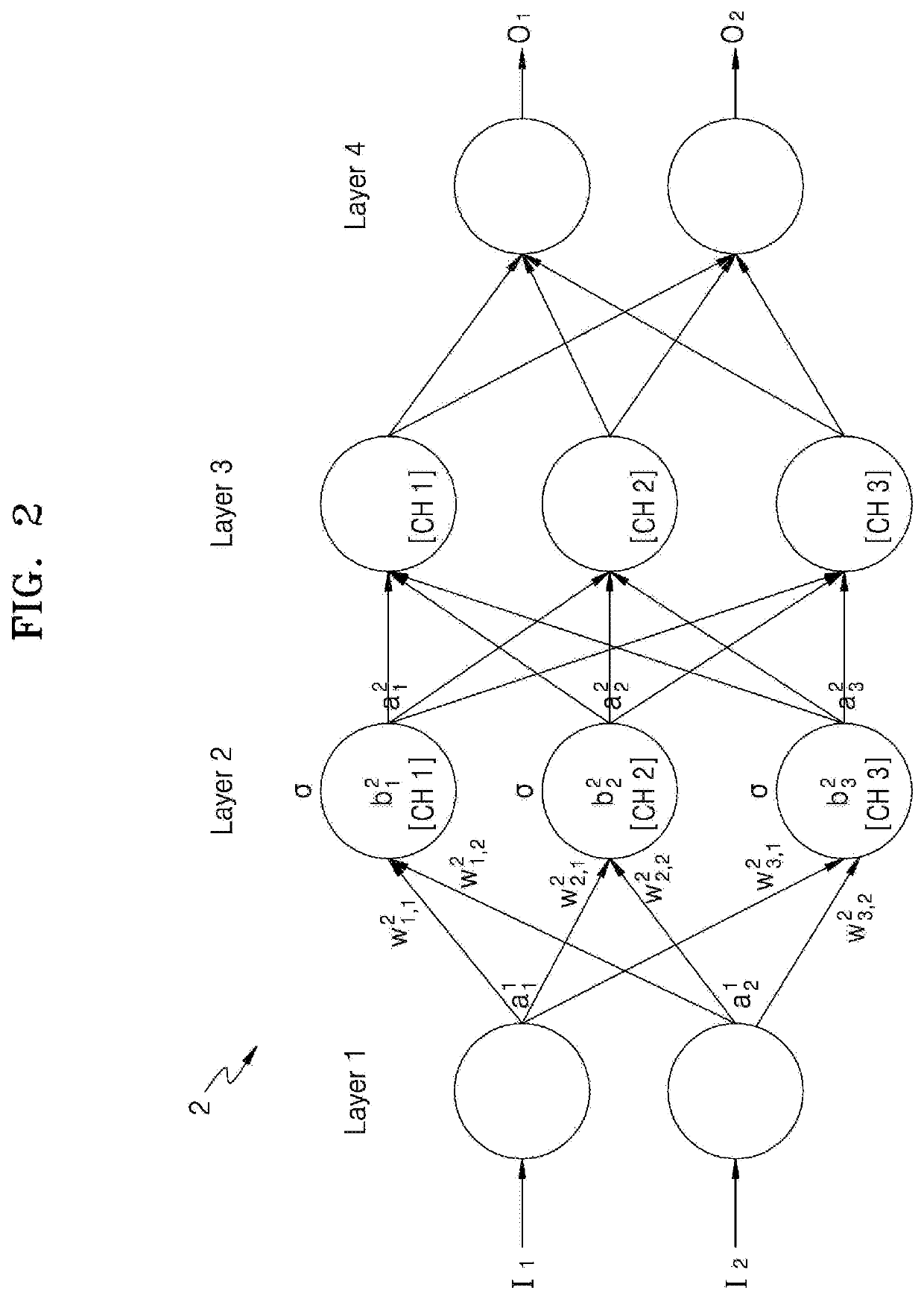

A method of generating a fixed-point quantized neural network includes analyzing a statistical distribution for each channel of floating-point parameter values of feature maps and a kernel for each channel from data of a pre-trained floating-point neural network, determining a fixed-point expression of each of the parameters for each channel statistically covering a distribution range of the floating-point parameter values based on the statistical distribution for each channel, determining fractional lengths of a bias and a weight for each channel among the parameters of the fixed-point expression for each channel based on a result of performing a convolution operation, and generating a fixed-point quantized neural network in which the bias and the weight for each channel have the determined fractional lengths.

Owner:SAMSUNG ELECTRONICS CO LTD
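
A minimal Python sketch of the per-channel idea, under stated assumptions: signed fixed-point numbers, a coverage rule of mean ± k·σ with a hypothetical k = 3, and a bias fractional length equal to the sum of the input and weight fractional lengths (the accumulator format). The patent instead derives the bias and weight fractional lengths from the result of a convolution operation, which is not reproduced here; all function names are hypothetical.

```python
import math
import statistics


def fractional_length(values, bit_width=8, k=3.0):
    """Fractional length of a signed fixed-point format whose range covers
    mean +/- k * std of `values` (a statistical, not worst-case, range)."""
    mu = statistics.fmean(values)
    sigma = statistics.pstdev(values)
    bound = max(abs(mu - k * sigma), abs(mu + k * sigma), 1e-12)
    # Largest magnitude is about 2 ** (bit_width - 1 - fl); pick the largest
    # fl that still satisfies 2 ** (bit_width - 1 - fl) >= bound.
    return bit_width - 1 - math.ceil(math.log2(bound))


def to_fixed_point(x, fl, bit_width=8):
    """Round x onto the grid 2 ** -fl and saturate to the signed integer range."""
    lo, hi = -(2 ** (bit_width - 1)), 2 ** (bit_width - 1) - 1
    return max(lo, min(hi, round(x * 2 ** fl)))


def per_channel_formats(weights_by_channel, input_fl, bit_width=8):
    """Weight fractional length per channel; the bias uses the accumulator's
    fractional length, assumed here to be input_fl + weight_fl."""
    formats = {}
    for channel, weights in weights_by_channel.items():
        w_fl = fractional_length(weights, bit_width)
        formats[channel] = {"weight_fl": w_fl, "bias_fl": input_fl + w_fl}
    return formats
```

For example, a channel whose values spread to about ±1.5 gets a weight fractional length of 6 at 8 bits, and with `input_fl = 6` its bias fractional length becomes 12.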

Neural-network-model compression method, system and device and readable storage medium

Status: Inactive | Publication: CN108229681A | Tags: Small size; No loss of precision; Neural architectures; Neural learning methods; Algorithm; Quantized neural networks

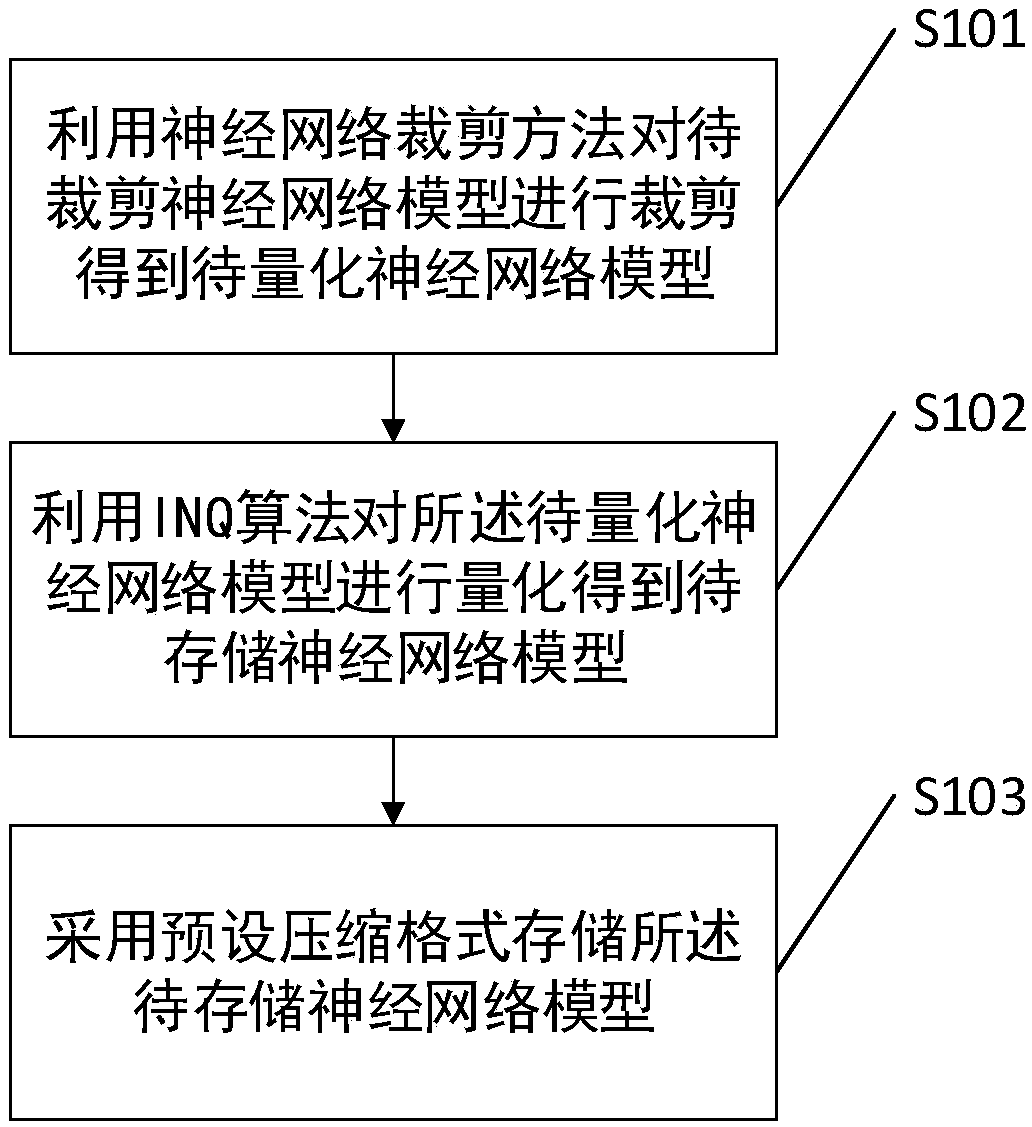

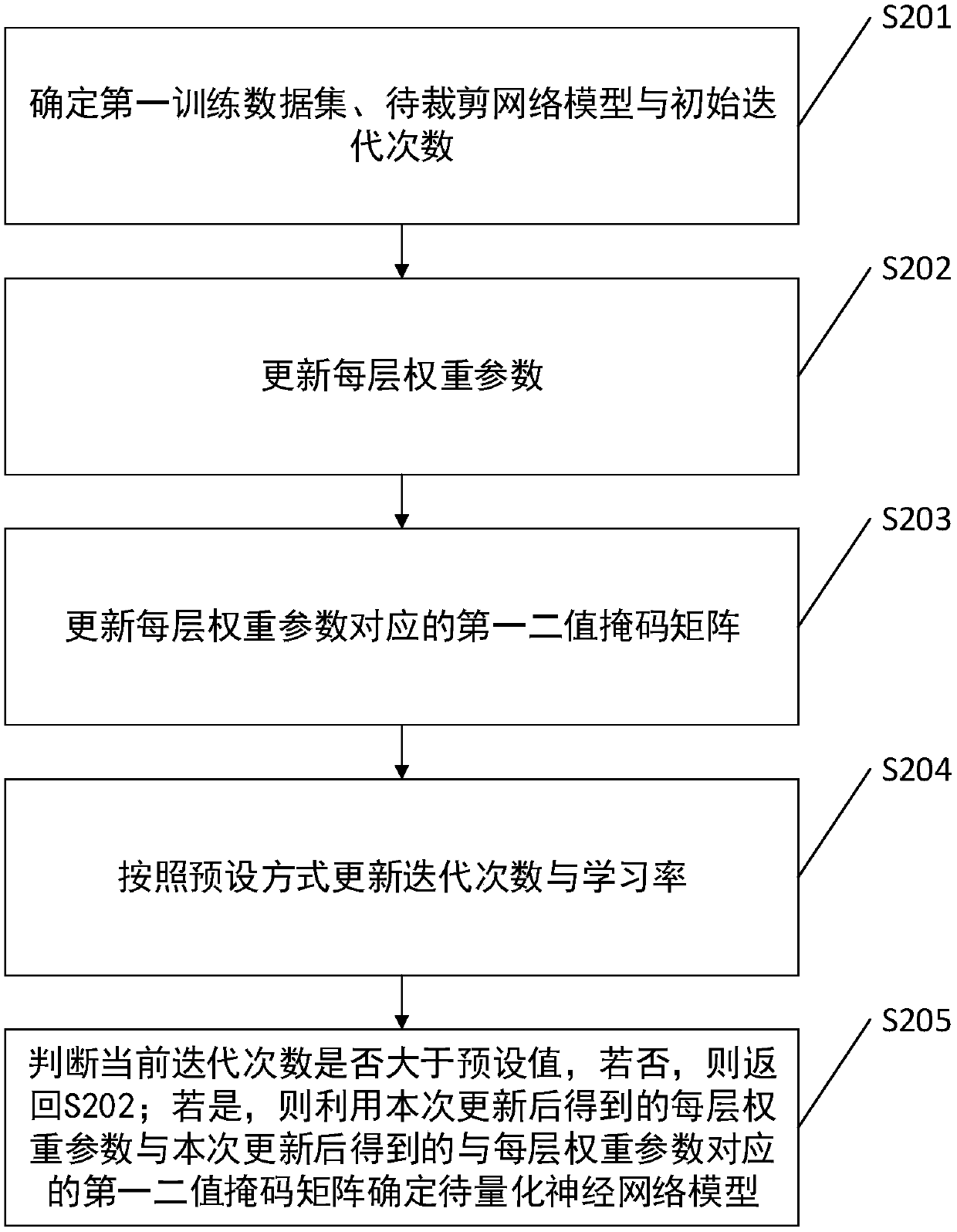

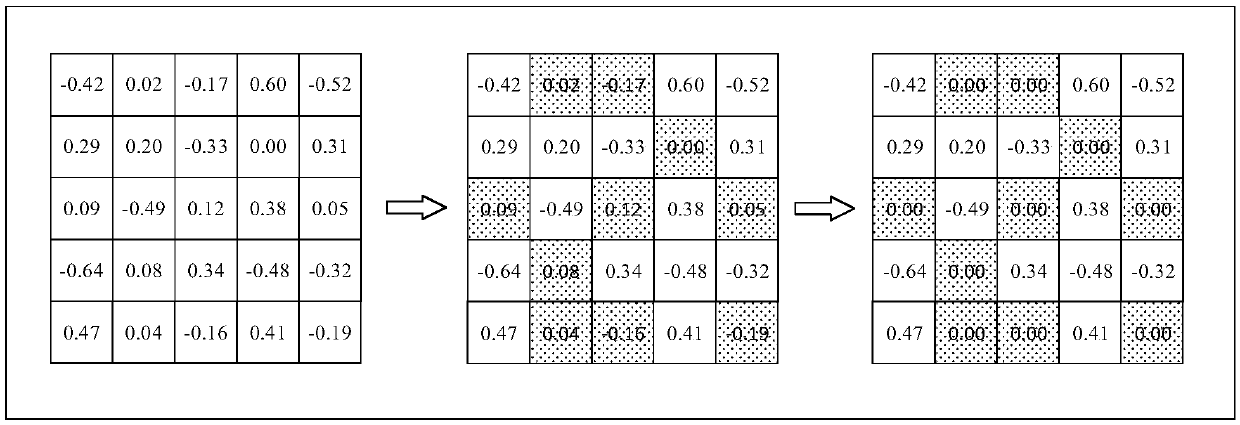

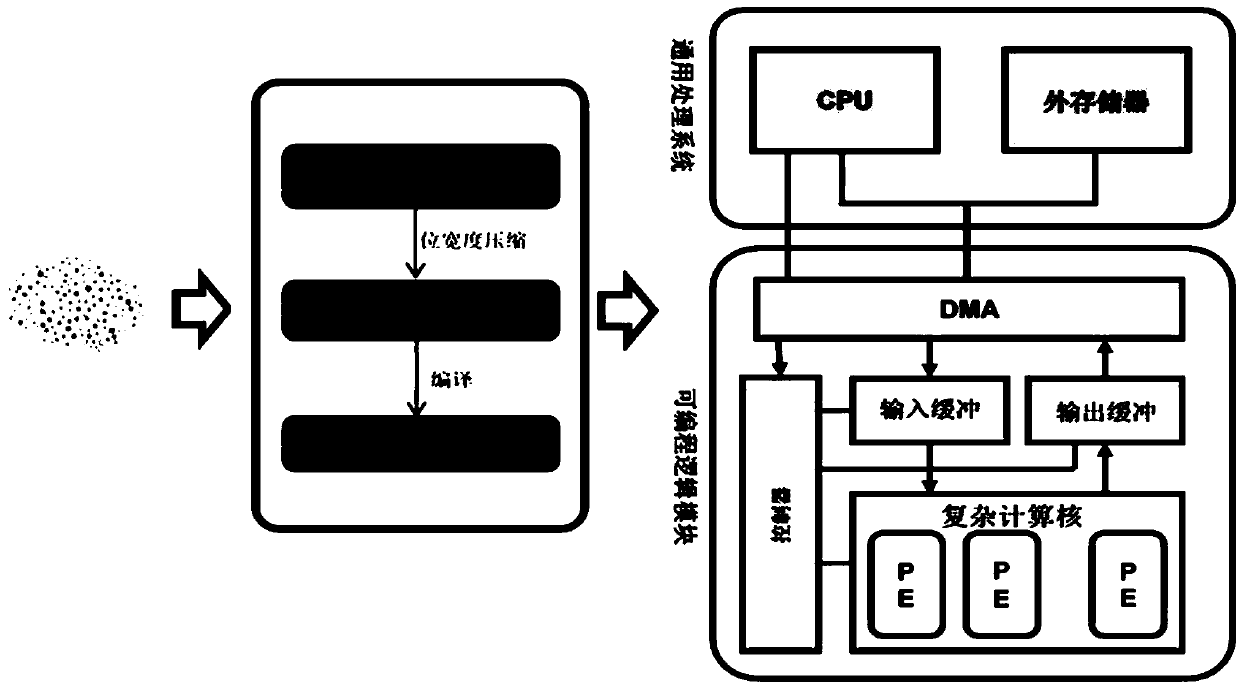

The invention discloses a neural-network-model compression method, system and device, and a computer-readable storage medium. The method includes: clipping a to-be-clipped neural network model with a neural-network clipping method to obtain a to-be-quantized neural network model; quantizing the to-be-quantized model with the INQ algorithm to obtain a to-be-stored neural network model; and storing the to-be-stored model in a compression format. Because the model is first clipped and then quantized with the INQ algorithm, its size can be reduced while effectively guaranteeing no loss of model precision after compression; this addresses excessive resource consumption and accelerates computation.

Owner:ZHENGZHOU YUNHAI INFORMATION TECH CO LTD
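
The INQ step can be sketched as follows, assuming INQ refers to Incremental Network Quantization, in which weights are constrained to zero or signed powers of two and a growing fraction of them is frozen after quantization while the remainder is retrained. The level set and interval rule follow our reading of the INQ paper; the retraining between steps, the clipping step and the compressed storage format from the abstract are omitted, and the function names are hypothetical.

```python
import math


def pow2_levels(max_abs, bits=5):
    """Candidate magnitudes 2**n2 .. 2**n1 with n1 = floor(log2(4 * s / 3)) and
    n2 = n1 + 1 - 2**(bits - 1) / 2; zero is handled separately."""
    n1 = math.floor(math.log2(4 * max_abs / 3))
    n2 = n1 + 1 - 2 ** (bits - 1) // 2
    return [2.0 ** n for n in range(n2, n1 + 1)]


def quantize_pow2(w, levels):
    """Map w to 0 or +/- a power of two using the interval rule
    (alpha + beta) / 2 <= |w| < 3 * beta / 2 for adjacent levels alpha < beta."""
    a = abs(w)
    if a >= 3 * levels[-1] / 2:
        return math.copysign(levels[-1], w)  # saturate above the largest level
    grid = [0.0] + levels
    for alpha, beta in zip(grid, grid[1:]):
        if (alpha + beta) / 2 <= a < 3 * beta / 2:
            return math.copysign(beta, w)
    return 0.0  # below half of the smallest level


def inq_partition_step(weights, fraction, bits=5):
    """Quantize the largest-magnitude `fraction` of weights and mark them frozen;
    the others stay real-valued and would be retrained before the next step."""
    levels = pow2_levels(max(abs(w) for w in weights), bits)
    k = int(round(fraction * len(weights)))
    order = sorted(range(len(weights)), key=lambda i: -abs(weights[i]))
    frozen = set(order[:k])
    new_weights = [quantize_pow2(w, levels) if i in frozen else w
                   for i, w in enumerate(weights)]
    return new_weights, [i in frozen for i in range(len(weights))]
```

Calling `inq_partition_step` repeatedly with fractions such as 0.5, 0.75 and 1.0, retraining the unfrozen weights in between, would complete the incremental schedule; for simplicity the sketch recomputes the levels from the current weights on each call.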

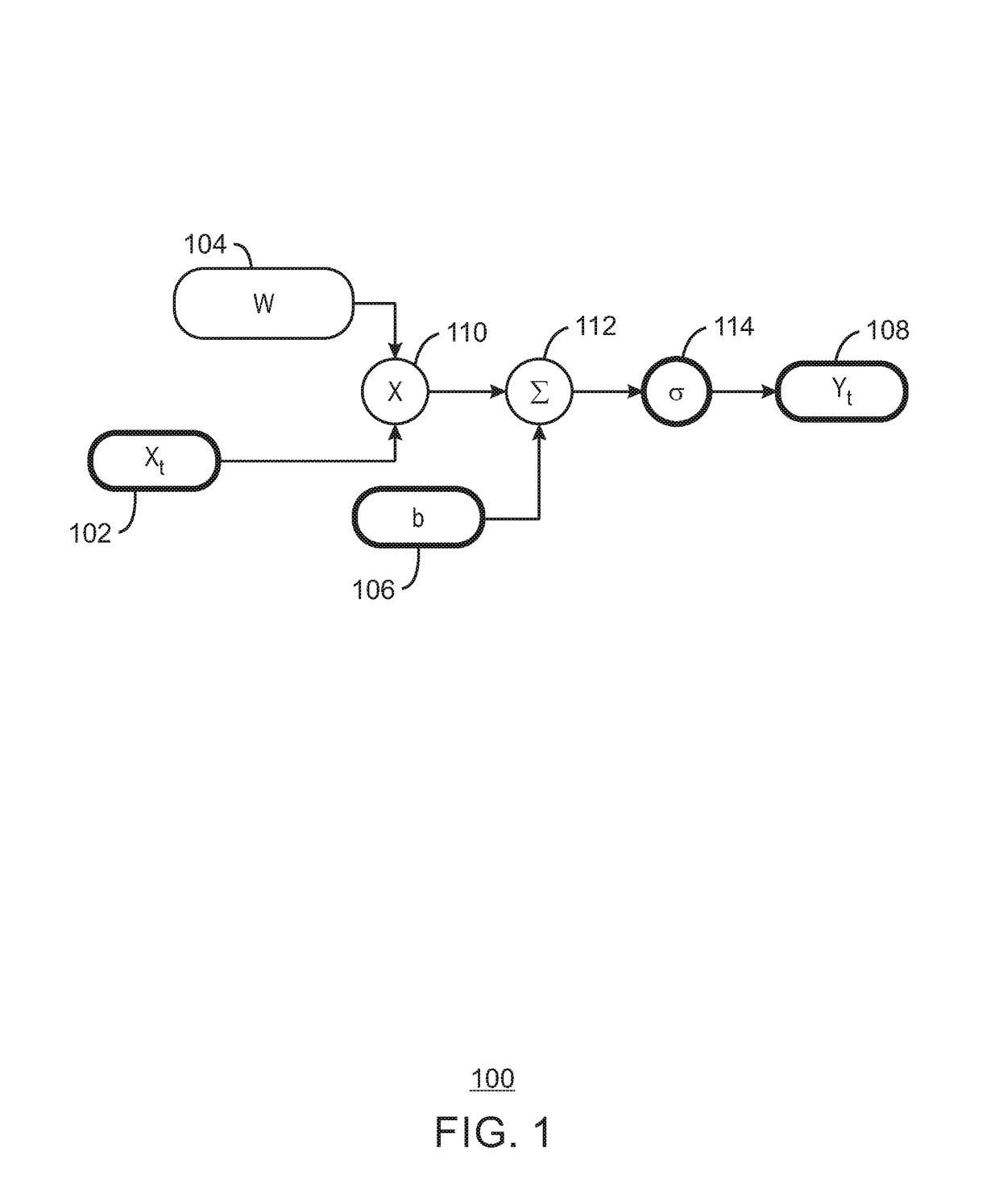

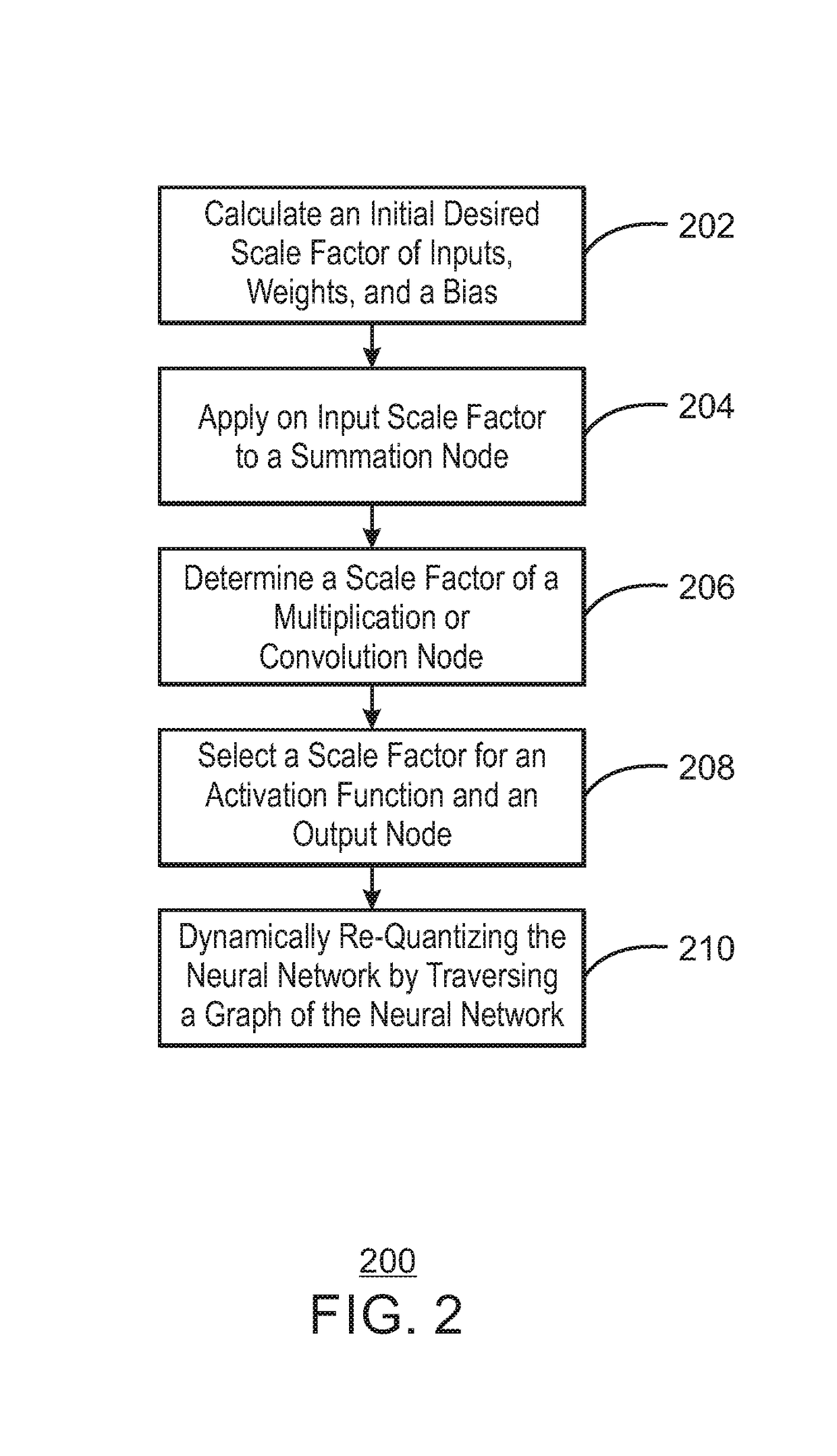

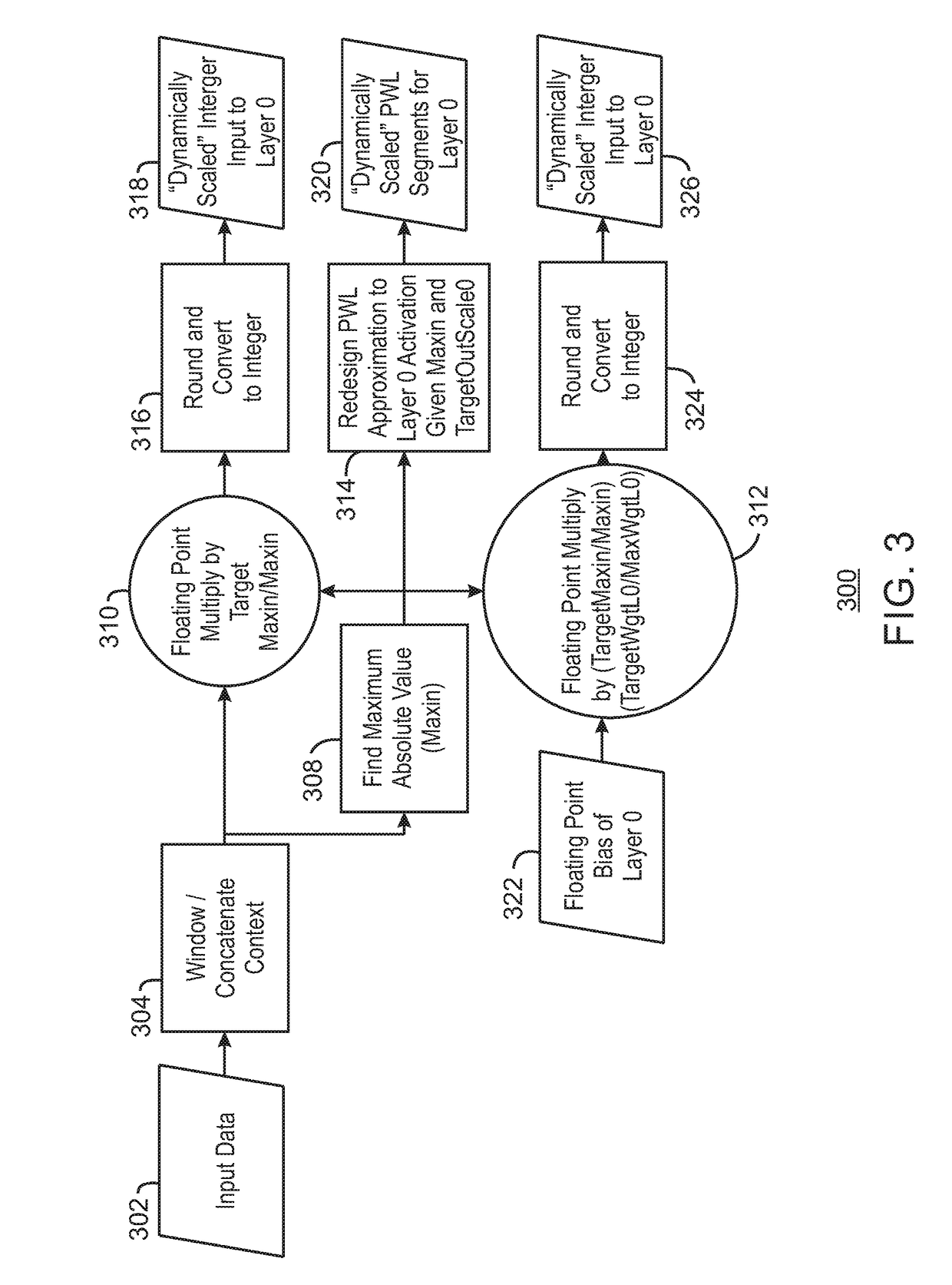

Dynamic quantization of neural networks

An apparatus for applying dynamic quantization of a neural network is described herein. The apparatus includes a scaling unit and a quantizing unit. The scaling unit is to calculate initial desired scale factors of a plurality of inputs, weights and a bias, and apply the input scale factor to a summation node. Also, the scaling unit is to determine a scale factor for a multiplication node based on the desired scale factors of the inputs and select a scale factor for an activation function and an output node. The quantizing unit is to dynamically requantize the neural network by traversing a graph of the neural network.

Owner:INTEL CORP
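
A minimal sketch of the scale bookkeeping described above for a single neuron, assuming symmetric int8 quantization with scales computed at run time from each tensor's peak magnitude. The multiplication node's scale is the product of the input and weight scales, and the bias is converted to that scale before the summation node. Graph traversal, activation-function scales and output requantization are not modeled; names are hypothetical.

```python
def symmetric_scale(values, qmax=127):
    """Scale chosen at run time from the observed range (the 'dynamic' part)."""
    peak = max(abs(v) for v in values)
    return qmax / peak if peak else 1.0


def quantized_neuron(x, w, bias, qmax=127):
    s_x = symmetric_scale(x, qmax)
    s_w = symmetric_scale(w, qmax)
    qx = [round(v * s_x) for v in x]
    qw = [round(v * s_w) for v in w]
    # Multiplication node: each integer product carries the scale s_x * s_w.
    s_mul = s_x * s_w
    acc = sum(a * b for a, b in zip(qx, qw))
    # Summation node: the bias must be expressed at the same scale before adding.
    acc += round(bias * s_mul)
    return acc / s_mul  # back to real units; a full pipeline would requantize instead
```

With `x = [0.5, -1.0, 0.25]`, `w = [0.2, 0.4, -0.8]` and a bias of 0.1, the integer path lands within a few thousandths of the floating-point result, -0.4.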

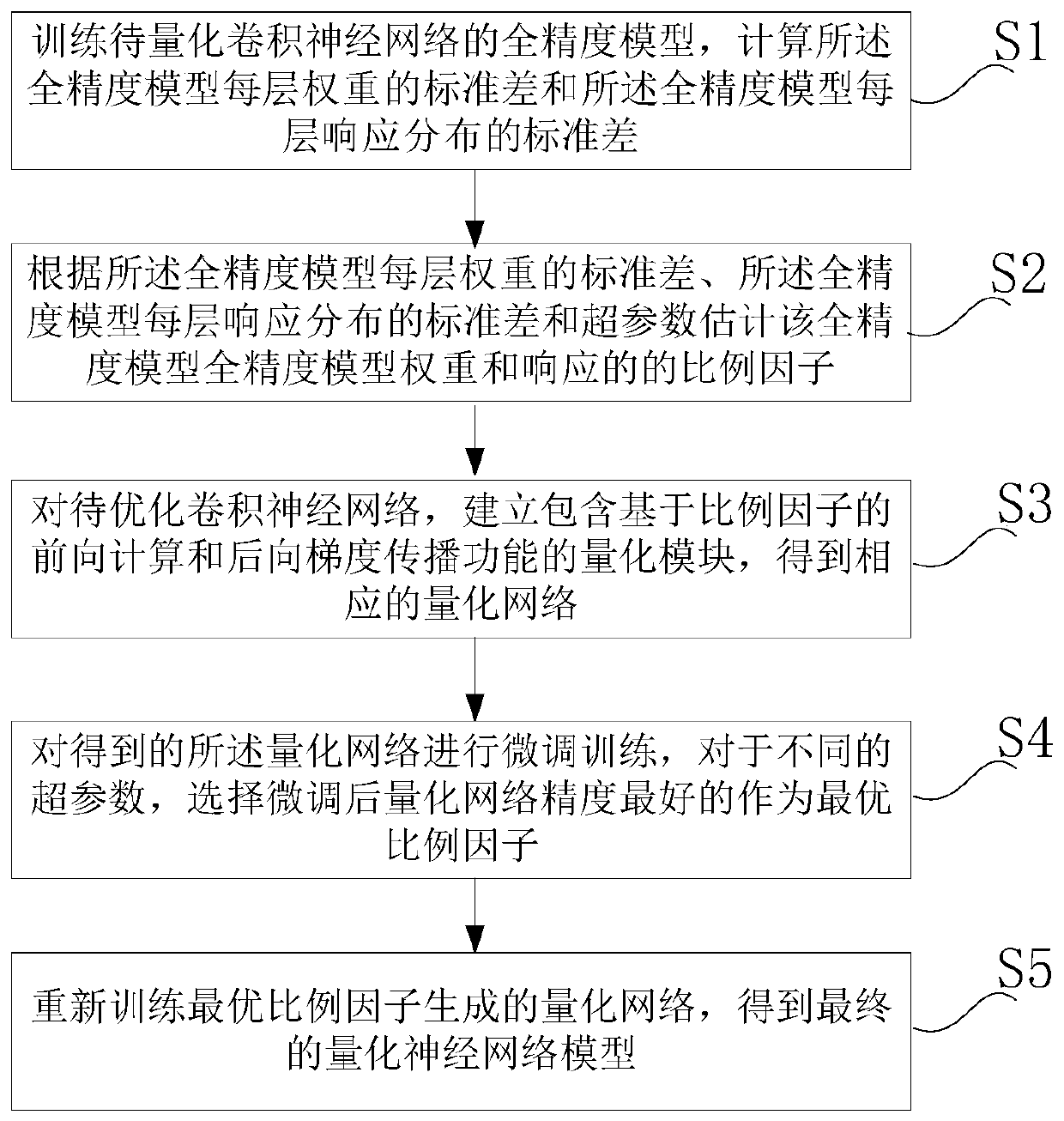

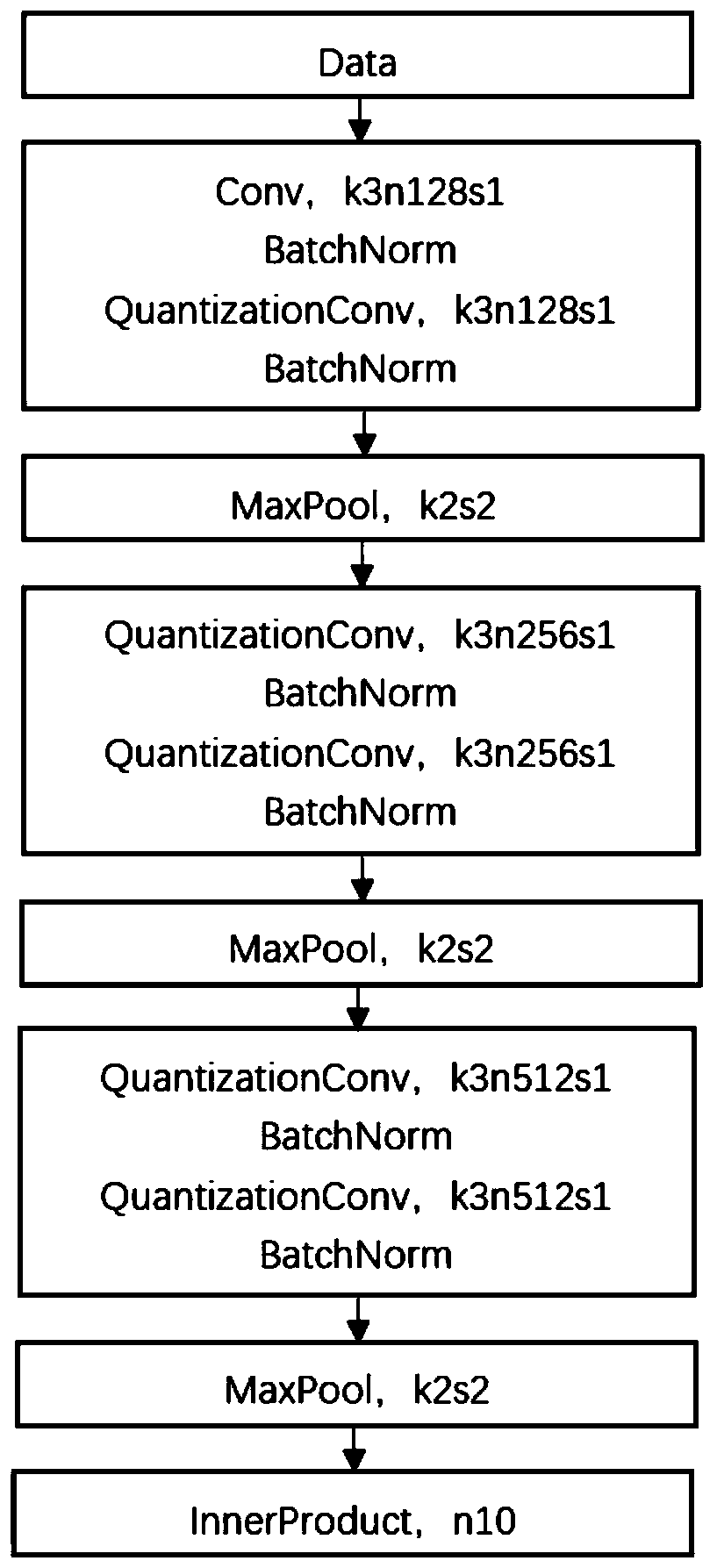

Convolutional neural network quantification method and device, computer and storage medium

Status: Inactive | Publication: CN110363281A | Tags: Accelerate the effect; Taking into account the compression effec; Neural architectures; Neural learning methods; Computation complexity; Quantized neural networks

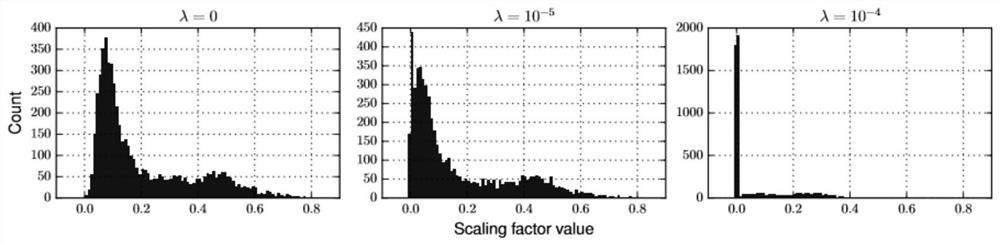

The invention provides a convolutional neural network quantization method, which comprises the steps of: training a full-precision model of the convolutional neural network to be quantized, and calculating the standard deviation of the weight and response distributions of each layer of the full-precision model; estimating scale factors for the parameters and features of the full-precision model from these standard deviations and hyper-parameters; for the convolutional neural network to be optimized, establishing a quantization module with scale-factor-based forward calculation and backward gradient propagation functions to obtain a corresponding quantization network; fine-tuning the quantization network to determine an optimal scale factor; and retraining the quantization network generated with the optimal scale factor to obtain the final quantized neural network model. The invention further provides a convolutional neural network quantization device, a computer and a storage medium. The invention alleviates the complex implementation and high computational complexity of existing model quantization methods.

Owner:SHANGHAI JIAO TONG UNIV
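
The scale-factor estimate and the quantization module's forward and backward functions might look like the sketch below. The clipping multiple `alpha` plays the role of the hyper-parameter, and the backward pass uses a straight-through estimator; that choice is an assumption, since the abstract only says the module has scale-factor-based forward and backward functions. The fine-tuning search for the optimal scale factor is omitted, and the names are hypothetical.

```python
import statistics


def estimate_scale(values, alpha, bits=8):
    """Clip threshold = alpha * std of the layer's distribution; the scale maps it to qmax."""
    qmax = 2 ** (bits - 1) - 1
    return alpha * statistics.pstdev(values) / qmax


def fake_quantize(x, scale, bits=8):
    """Forward pass: round to the integer grid, clip, and return the dequantized value."""
    qmax = 2 ** (bits - 1) - 1
    q = max(-qmax, min(qmax, round(x / scale)))
    return q * scale


def fake_quantize_grad(x, scale, upstream, bits=8):
    """Backward pass (straight-through estimator): pass the upstream gradient
    inside the clipping range and zero it outside."""
    qmax = 2 ** (bits - 1) - 1
    return upstream if abs(x) <= qmax * scale else 0.0
```

For a layer with standard deviation 1 and `alpha = 3`, values beyond ±3 saturate in the forward pass and receive no gradient in the backward pass.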

Neural network method and appartus with parameter quantization

Status: Pending | Publication: US20200026986A1 | Tags: Reduce errors; Neural architectures; Neural learning methods; Data set; Normal density

A neural network method of parameter quantization includes obtaining channel profile information for first parameter values of a floating-point type in each channel included in each of the feature maps, based on an input from a first dataset to a pre-trained neural network with floating-point parameters; determining, for each channel, a probability density function (PDF) type appropriate for the channel profile information, using a classification network that receives the channel profile information as a dataset; determining, for each channel, a fixed-point representation based on the determined PDF type that statistically covers the distribution range of the first parameter values; and generating a fixed-point quantized neural network based on the fixed-point representation determined for each channel.

Owner:SAMSUNG ELECTRONICS CO LTD

Model generation method and device

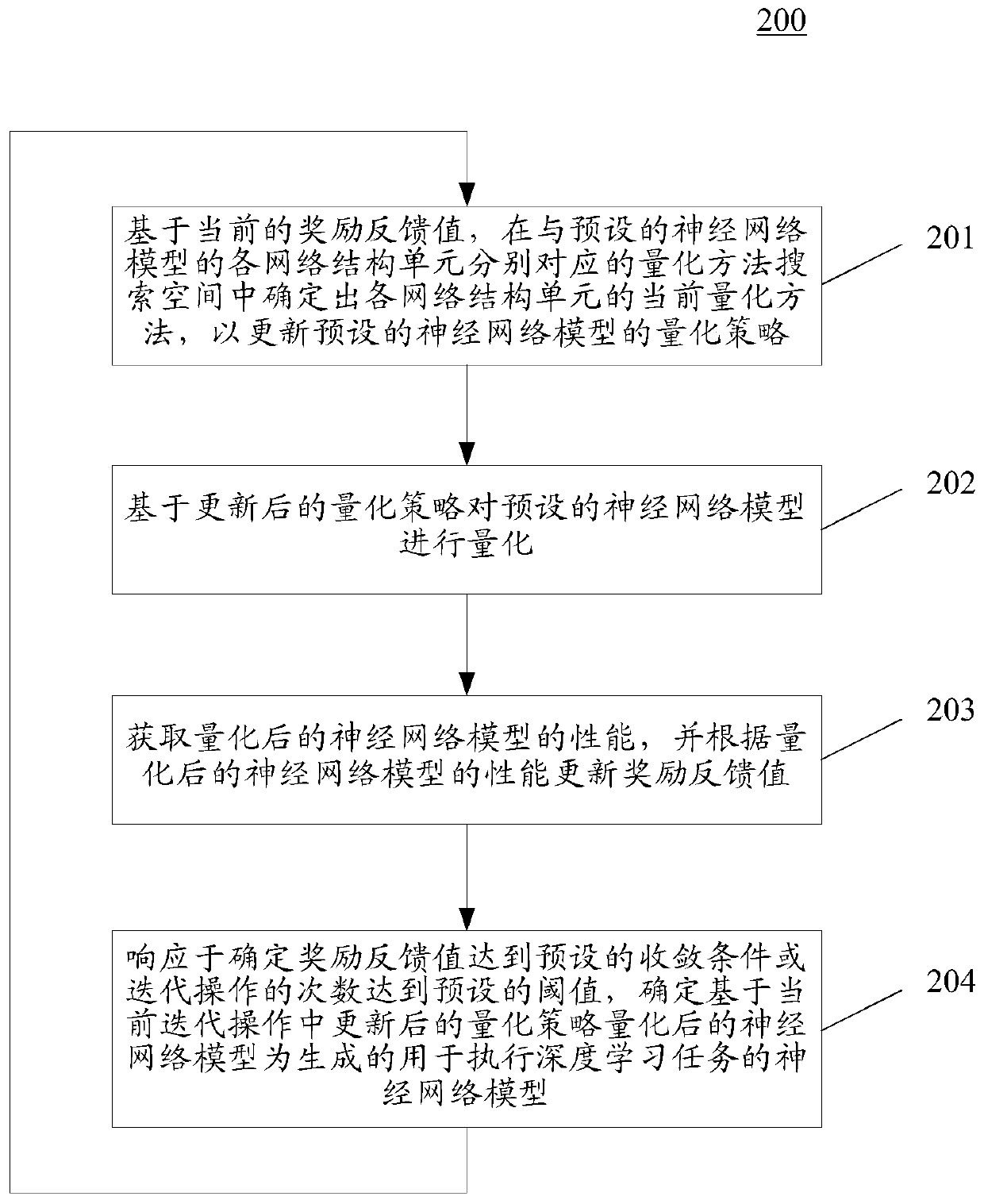

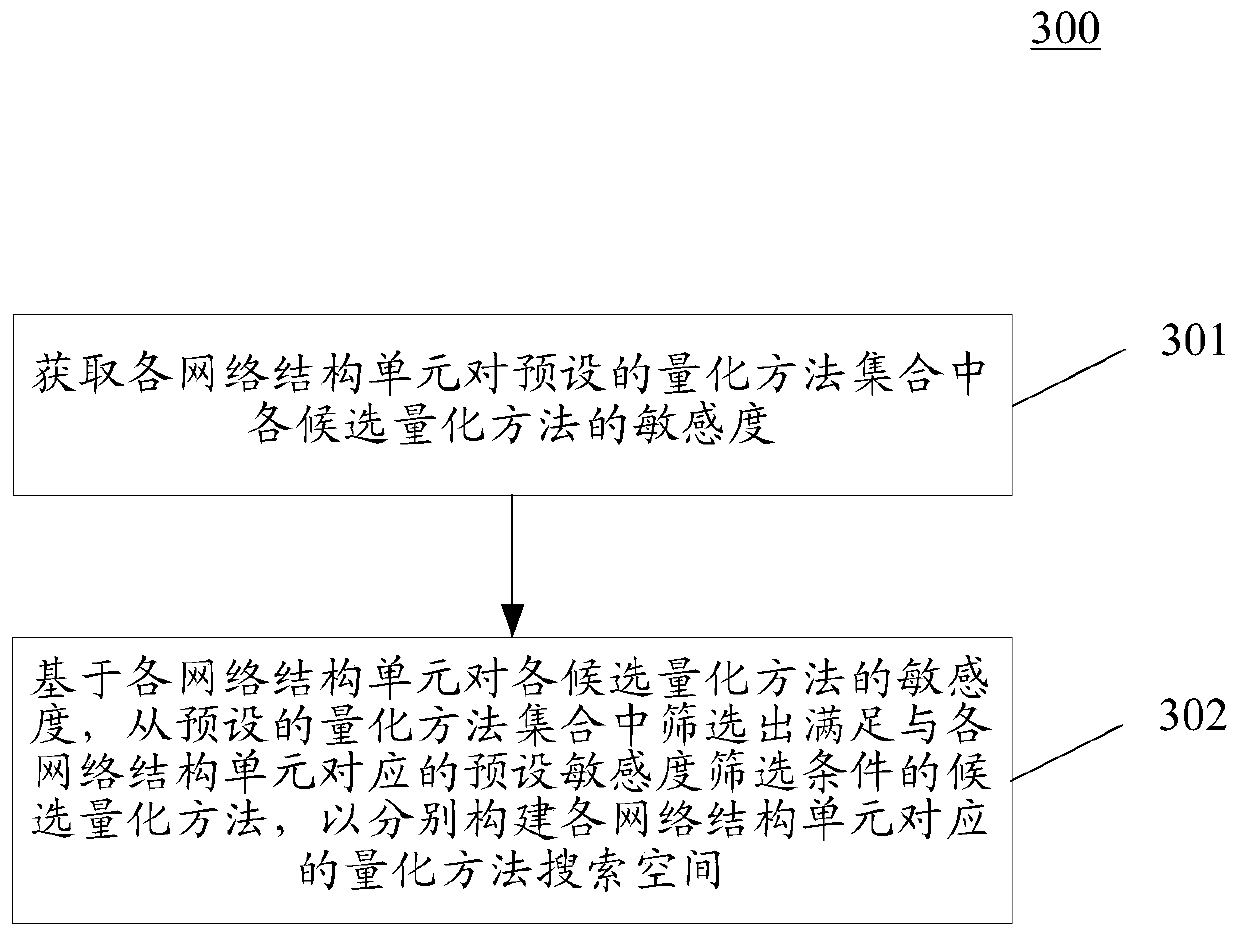

Status: Active | Publication: CN110852421A | Tags: Guaranteed accuracy; Improve search efficiency; Neural architectures; Neural learning methods; Algorithm; Network structure

The invention relates to the field of artificial intelligence. An embodiment discloses a model generation method and device. The method generates a neural network model for executing a deep learning task by sequentially executing multiple iterative operations. Each iterative operation comprises: based on the current reward feedback value, determining a current quantization method for each network structure unit of a preset neural network model from the quantization method search space corresponding to that unit, so as to update the quantization strategy of the preset model; quantizing the preset model with the updated quantization strategy; and obtaining the performance of the quantized neural network model and updating the reward feedback value. In response to determining that the reward feedback value reaches a preset convergence condition, or that the number of iterative operations reaches a preset threshold, the current quantized neural network model is taken as the generated neural network model for the deep learning task. The method can reduce the memory space occupied by the neural network model.

Owner:BEIJING BAIDU NETCOM SCI & TECH CO LTD

Training method for quantizing the weights and inputs of a neural network

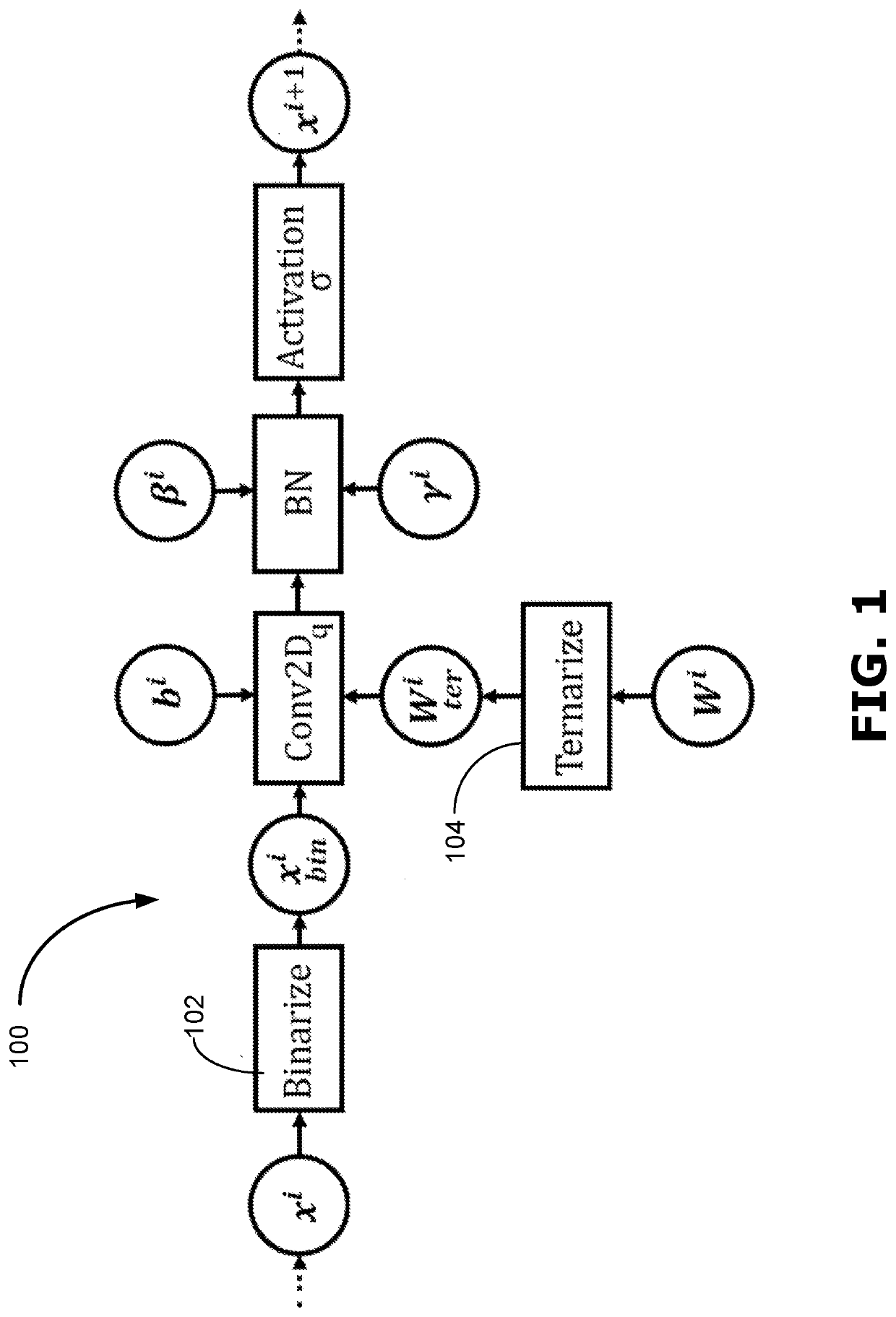

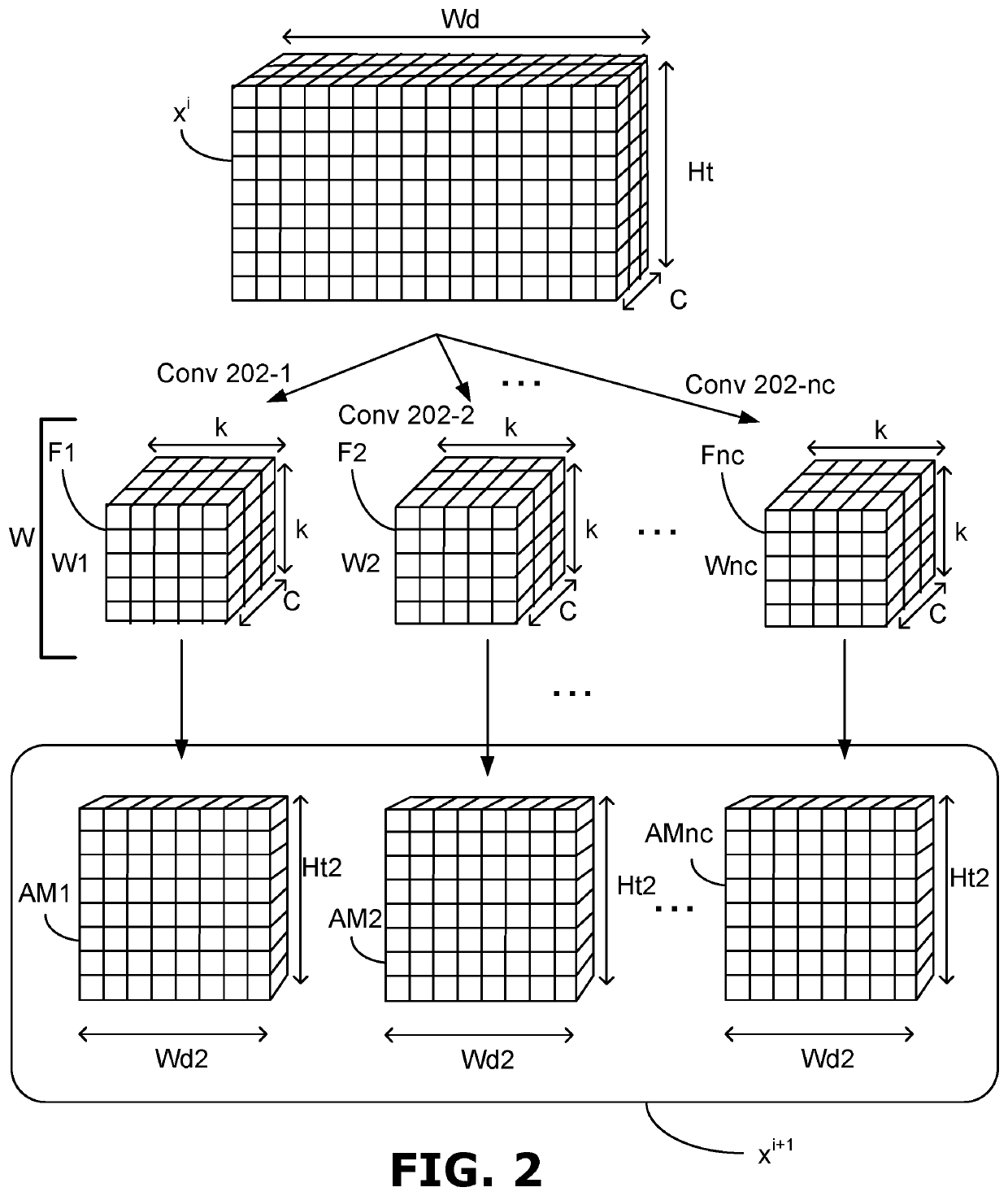

Status: Pending | Publication: US20210089925A1 | Tags: Reduce the amount required; Reduce computing operations; Neural architectures; Inference methods; Pattern recognition; Algorithm

A method and processing unit for training a neural network to selectively quantize weights of a filter of the neural network as either binary weights or ternary weights. A plurality of training iterations are performed, each of which comprises: quantizing a set of real-valued weights of a filter to generate a corresponding set of quantized weights; generating an output feature tensor based on matrix multiplication of an input feature tensor and the set of quantized weights; computing, based on the output feature tensor, a loss based on a regularization function that is configured to move the loss towards a minimum value when either (i) the quantized weights move towards binary weights, or (ii) the quantized weights move towards ternary weights; computing a gradient with an objective of minimizing the loss; and updating the real-valued weights based on the computed gradient. When the training iterations are complete, a set of weights quantized from the updated real-valued weights is stored as either a set of binary weights or a set of ternary weights.

Owner:HUAWEI TECH CO LTD
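
A toy sketch of the training loop described above, for a single filter and a linear model. It keeps real-valued weights, uses binary or ternary weights in the forward pass, and applies the gradient with respect to the quantized weights to the real-valued ones (a straight-through estimator). The scaling rules (mean |w| for binary; a 0.7 · mean |w| threshold for ternary, as in ternary weight networks) are swapped-in conventions, and the patent's regularization term that steers weights toward either the binary or the ternary set is not reproduced. Function names are hypothetical.

```python
def binarize(w):
    """Binary weights {-a, +a}, with a = mean |w| (a common scaling choice)."""
    a = sum(abs(v) for v in w) / len(w)
    return [a if v >= 0 else -a for v in w]


def ternarize(w, delta_ratio=0.7):
    """Ternary weights {-a, 0, +a}: zero out |w| <= delta, delta = 0.7 * mean |w|."""
    delta = delta_ratio * sum(abs(v) for v in w) / len(w)
    kept = [abs(v) for v in w if abs(v) > delta]
    a = sum(kept) / len(kept) if kept else 0.0
    return [0.0 if abs(v) <= delta else (a if v > 0 else -a) for v in w]


def train(xs, ys, w, quantizer, lr=0.05, steps=100):
    """Forward with quantized weights; update the real-valued weights with the
    gradient taken w.r.t. the quantized ones (straight-through estimator)."""
    w = list(w)
    for _ in range(steps):
        wq = quantizer(w)
        grad = [0.0] * len(w)
        for x, y in zip(xs, ys):
            err = sum(a * b for a, b in zip(x, wq)) - y  # one row of the matrix product
            for i, xi in enumerate(x):
                grad[i] += 2 * err * xi / len(xs)  # d(mean squared error) / d(wq_i)
        w = [wi - lr * g for wi, g in zip(w, grad)]
    return w, quantizer(w)
```

After training, whichever of the two quantizers is selected produces the stored binary or ternary weight set.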

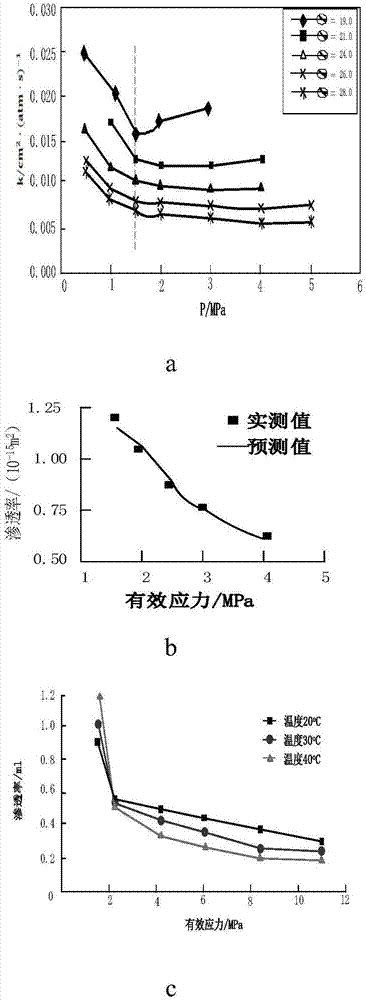

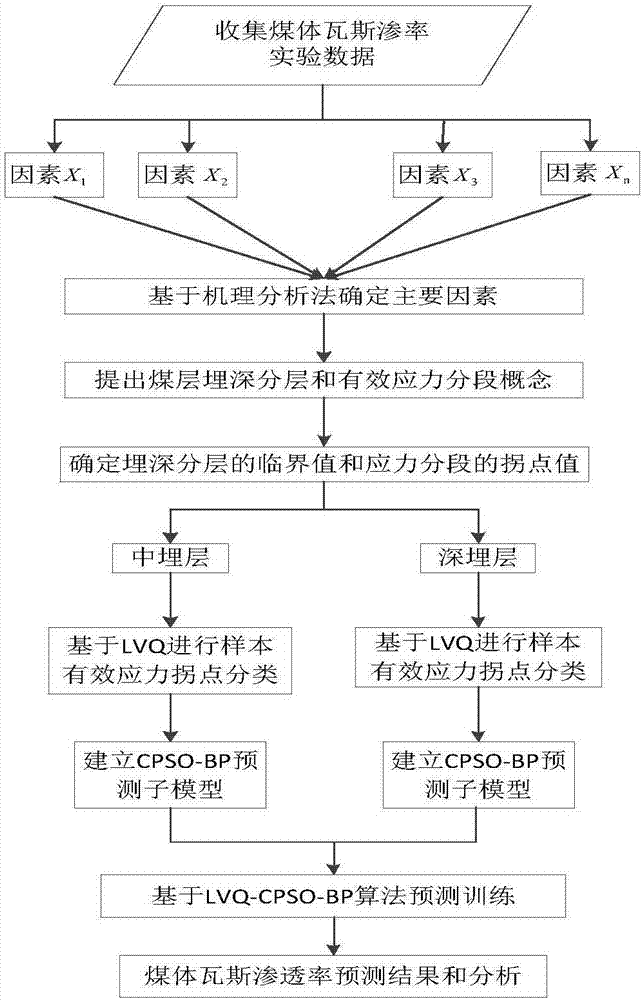

Coal-body gas permeability predicting method based on LVQ-CPSO-BP algorithm

Status: Inactive | Publication: CN106869990A | Tags: High degree of fit; Improve forecast accuracy; Design optimisation/simulation; Gas removal; Quantized neural networks; Euclidean vector

A coal-body gas permeability prediction method is provided that combines learning vector quantization (LVQ) neural-network classification, chaos particle swarm optimization (CPSO), and back-propagation (BP) neural-network prediction. A critical value is determined, and the burial depth of the coal seam is divided into two layers. Based on the inflection-point relation between effective stress and gas permeability, an inflection-point value is determined, and the effective stress is divided into two sections. Four microscopic sample parameters are classified and identified by the LVQ network according to the inflection-point characteristic; a BP neural network is then trained and outputs the prediction, with its weights and thresholds optimized by CPSO. Finally, the predictions of the resulting LVQ-CPSO-BP algorithm are verified and compared with those of the BP, GA-BP and PSO-BP algorithms.

Owner:XINJIANG UNIVERSITY
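
Here "LVQ" is learning vector quantization, a prototype-based classifier rather than a weight-quantization scheme; the same family appears in the intrusion-prevention and tramcar entries below. A minimal sketch of the basic LVQ1 update, which could serve as the classification stage; the CPSO-optimized BP predictor is not shown, and the names are hypothetical.

```python
def _dist2(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def lvq1_train(samples, labels, prototypes, proto_labels, lr=0.1, epochs=20):
    """LVQ1: move the nearest prototype toward a sample of its own class and
    away from a sample of another class; the learning rate decays linearly."""
    protos = [list(p) for p in prototypes]
    for epoch in range(epochs):
        rate = lr * (1 - epoch / epochs)
        for x, y in zip(samples, labels):
            j = min(range(len(protos)), key=lambda k: _dist2(x, protos[k]))
            sign = 1.0 if proto_labels[j] == y else -1.0
            protos[j] = [p + sign * rate * (xi - p) for p, xi in zip(protos[j], x)]
    return protos


def lvq_predict(x, protos, proto_labels):
    """Label of the nearest prototype."""
    j = min(range(len(protos)), key=lambda k: _dist2(x, protos[k]))
    return proto_labels[j]
```

Each class can own several prototypes; the classifier then partitions the input space into the Voronoi cells of the prototypes.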

Method and device for adjusting artificial neural network

Status: Active | Publication: CN110555508A | Tags: Guaranteed accuracy; Avoid convergence; Neural architectures; Neural learning methods; Algorithm; Quantized neural networks

A method and a device for adjusting an artificial neural network (ANN) are provided. The ANN comprises at least a plurality of layers, and the method comprises: obtaining a neural network model to be trained; training the model with high-bit fixed-point quantization to obtain a trained high-bit fixed-point quantized neural network model; fine-tuning the high-bit fixed-point quantized model with low bits to obtain a trained low-bit fixed-point quantized neural network model; and outputting the trained low-bit fixed-point quantized model. This training scheme, which gradually reduces the bit width, takes both training and deployment of the neural network into account, so that computation precision comparable to that of a floating-point network can be achieved at extremely low bit widths.

Owner:XILINX TECH BEIJING LTD

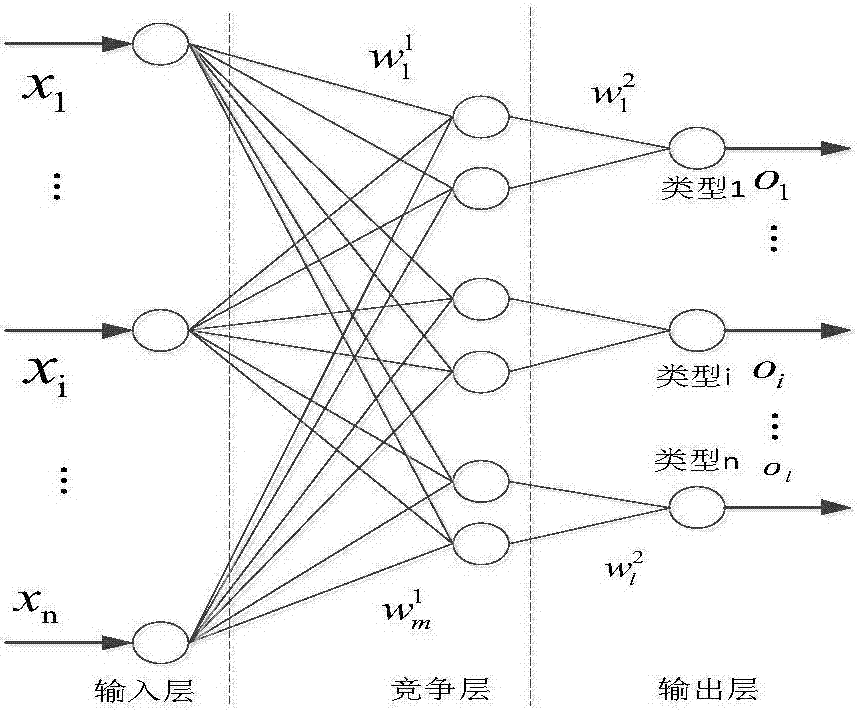

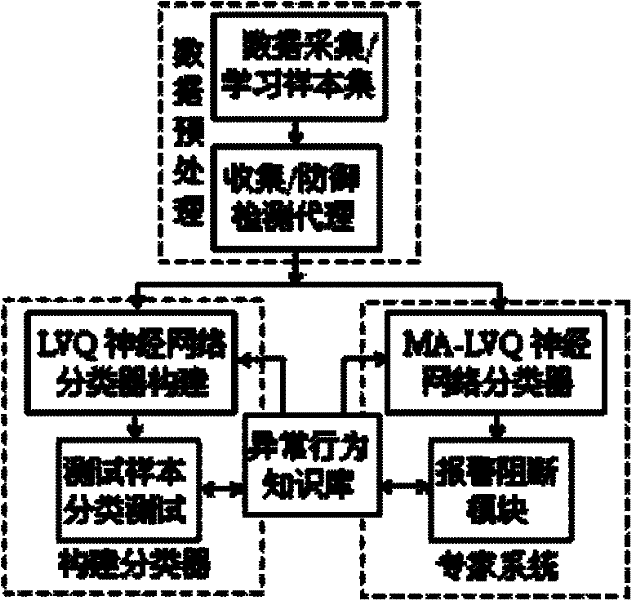

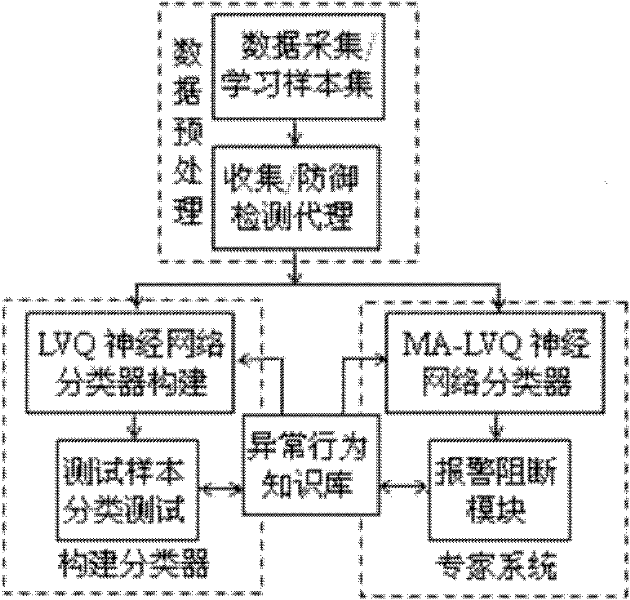

Intelligent NIPS (Network Intrusion Prevention System) framework for quantifying neural network based on mobile agent (MA) and learning vector

Status: Inactive | Publication: CN102195975A | Tags: Improve classification effect; Wide range of applications; Biological neural network models; Transmission; Data stream; Anomalous behavior

The invention discloses an intelligent NIPS (Network Intrusion Prevention System) framework based on a mobile agent (MA) and a learning vector quantization neural network. The framework comprises a data preprocessing unit, a classifier construction unit, an expert system unit and a knowledge base. The data preprocessing unit collects network data streams and selects input samples and test samples for the neural network from them; the classifier construction unit trains an MA-LVQ (Mobile Agent-Learning Vector Quantization) neural network classifier on the input learning samples and performs class tests to form the knowledge base; the expert system unit interacts with the knowledge base according to a known security policy, comparing and classifying the actions carried by the data streams against the action descriptions in the knowledge base to determine an output result; and the knowledge base contains normal and abnormal action descriptions and is updated through interaction with the expert system unit. With this framework, a linear network achieves a better classification effect, and the competitive layer effectively avoids the strict linear-separability requirement that a linear network otherwise places on the data; the framework is also more practical and more widely applicable.

Owner:SHANGHAI DIANJI UNIV

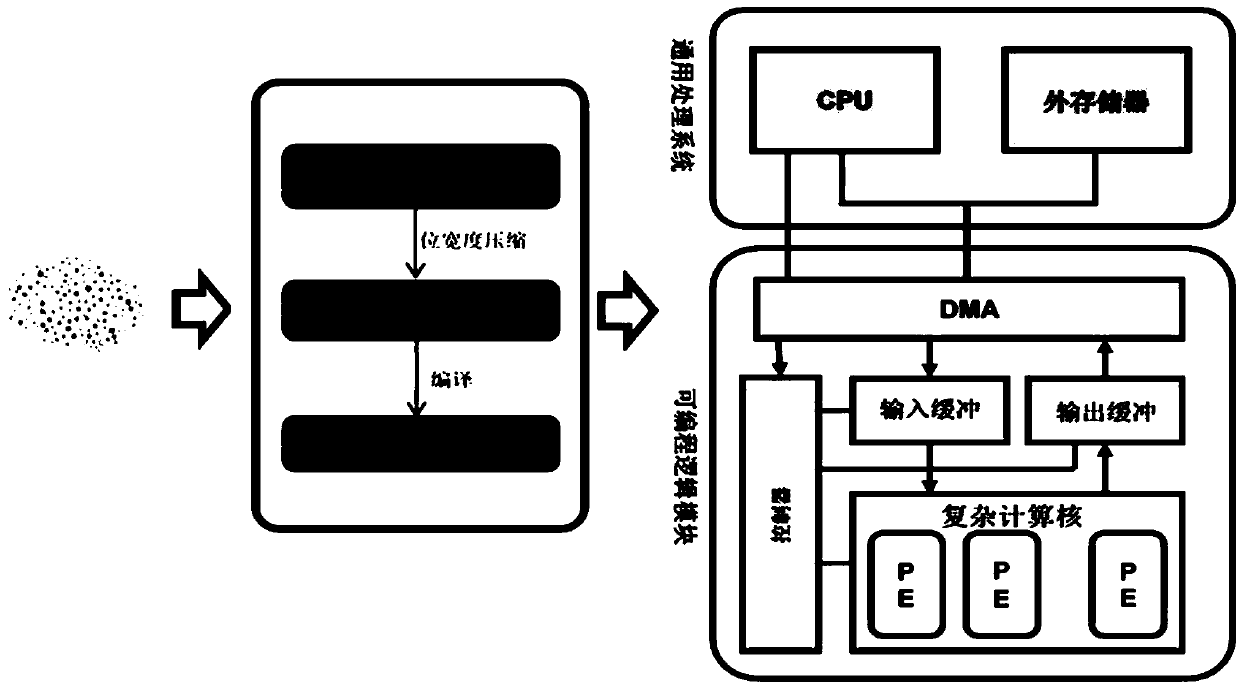

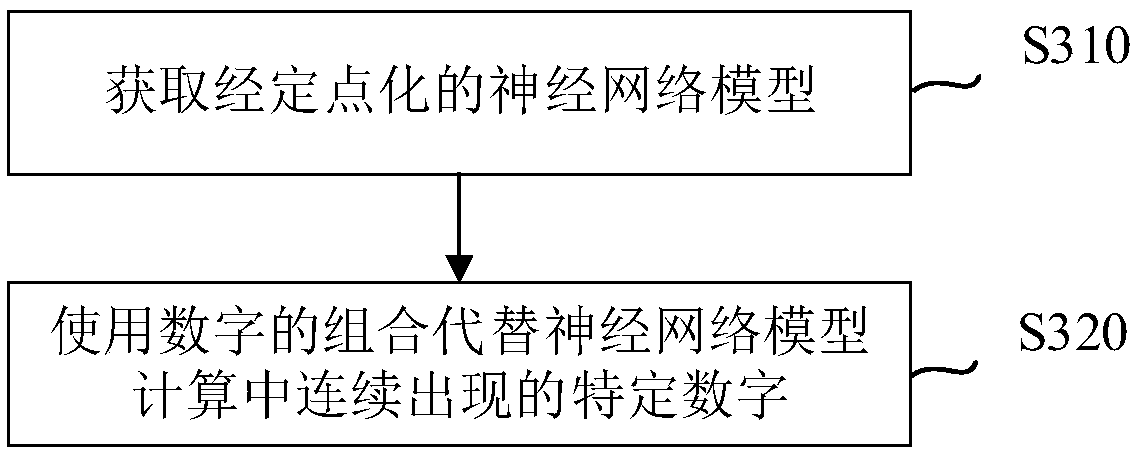

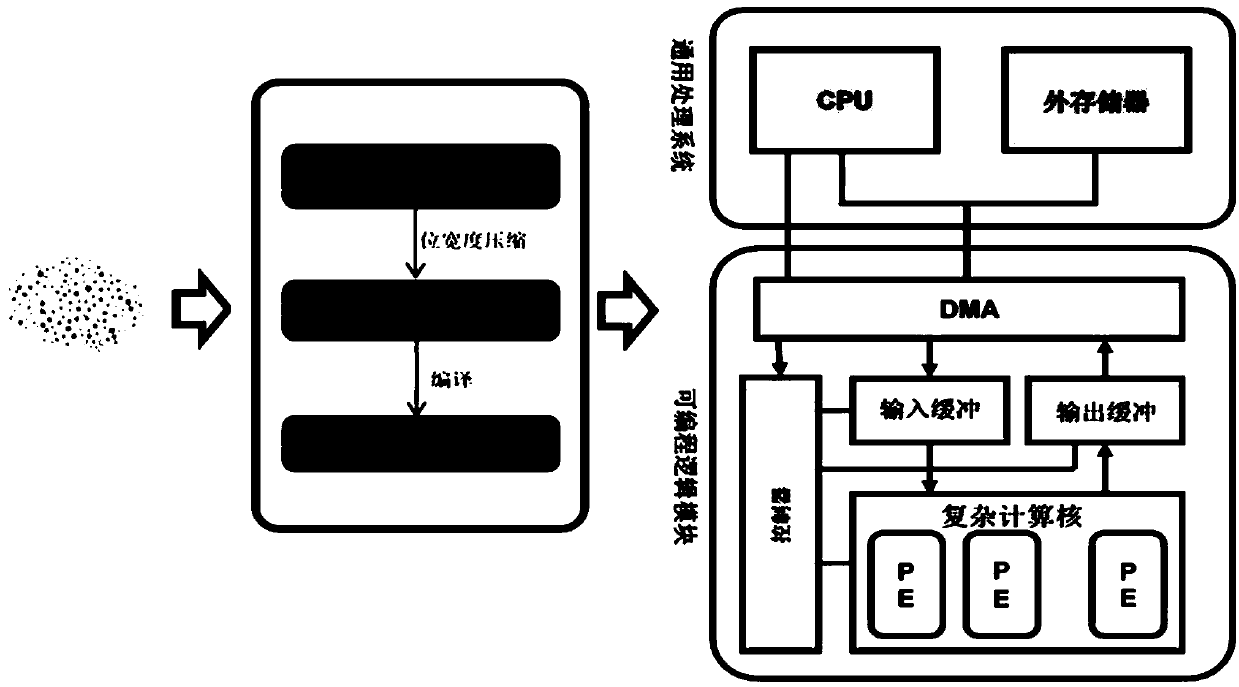

Neural network data compression and related calculation method and device

Status: Inactive | Publication: CN110490310A | Tags: Compression is fast and efficient; Increase profit; Neural architectures; Physical realisation; Data compression; Simulation

The invention discloses a neural network data compression method, a related calculation method, and corresponding devices. The data compression method comprises: acquiring a fixed-point neural network model; and replacing specific numbers that appear consecutively in the model's calculations with a combination of two or more numbers. Because a large number of identical numbers inherently appear consecutively in quantized neural network calculations, they can be replaced with a fixed number combination, which conveniently and simply achieves high data compression.

Owner:XILINX TECH BEIJING LTD
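
One simple way to realize "replace consecutively repeated numbers with a fixed combination of numbers" is a run-length code, sketched below under two assumptions: the repeated value is 0, and each run is replaced by the pair (marker, run length) with marker -128, a value that symmetric int8 data in [-127, 127] never uses. The abstract does not specify the actual combination, and the names are hypothetical.

```python
MARKER = -128  # assumed never to occur: symmetric int8 data lies in [-127, 127]


def encode_runs(values, target=0, max_run=127):
    """Replace each run of `target` with the pair (MARKER, run_length)."""
    if MARKER in values:
        raise ValueError("marker value collides with data")
    out, i = [], 0
    while i < len(values):
        if values[i] == target:
            j = i
            while j < len(values) and values[j] == target and j - i < max_run:
                j += 1
            out.extend([MARKER, j - i])  # run length fits in int8
            i = j
        else:
            out.append(values[i])
            i += 1
    return out


def decode_runs(encoded, target=0):
    """Expand every (MARKER, run_length) pair back into a run of `target`."""
    out, i = [], 0
    while i < len(encoded):
        if encoded[i] == MARKER:
            out.extend([target] * encoded[i + 1])
            i += 2
        else:
            out.append(encoded[i])
            i += 1
    return out
```

An isolated zero grows from one entry to two, so a practical encoder would only substitute runs above some minimum length.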

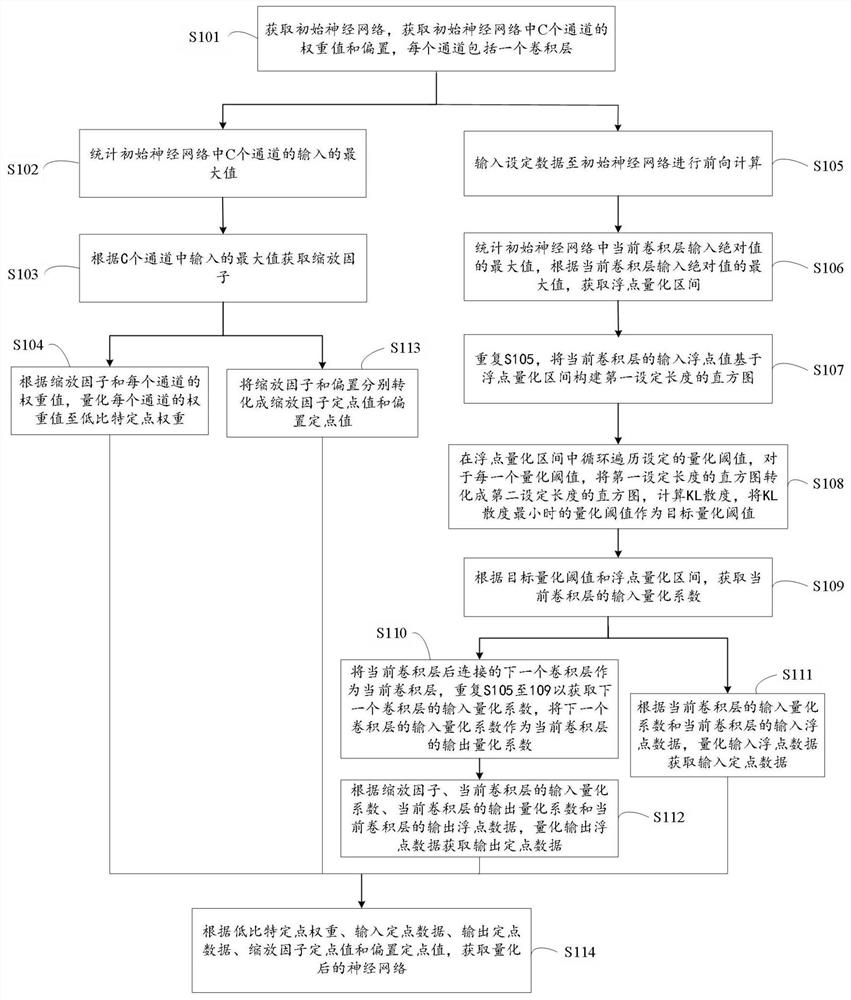

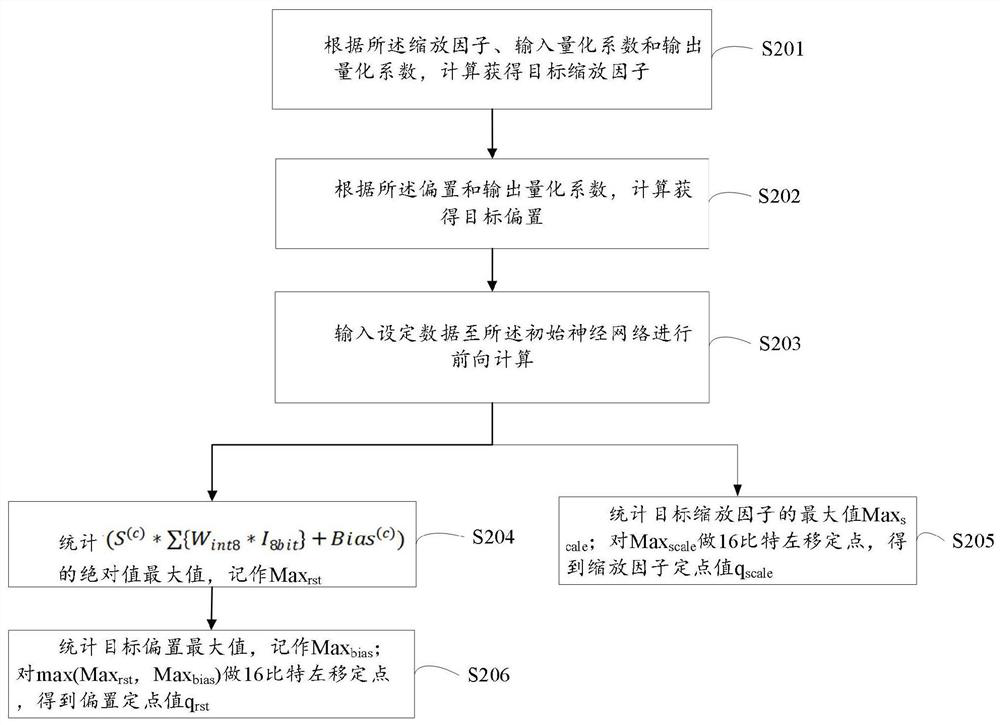

Neural network low-bit quantization method

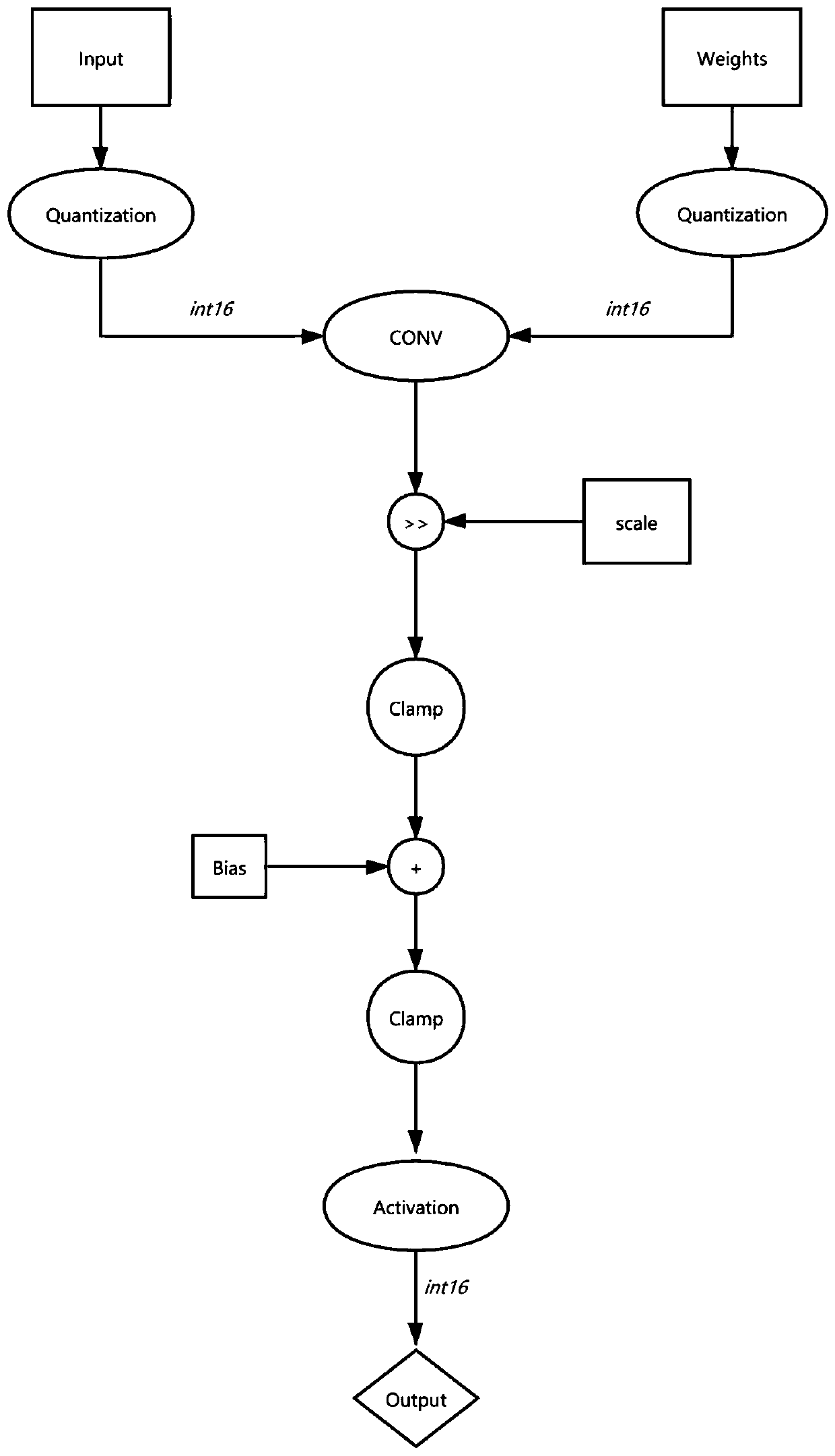

Status: Pending | Publication: CN112381205A | Tags: Practical; Improve Quantization Efficiency; Neural architectures; Physical realisation; Algorithm; Quantized neural networks

The invention relates to a low-bit quantization method for neural networks. The weights of each channel of a neural network are quantized to low-bit fixed-point weights. The method further includes: obtaining the input quantization coefficient of the current convolution layer from the target quantization threshold and the floating-point quantization interval; taking the input quantization coefficient of the next convolution layer as the output quantization coefficient of the current convolution layer; quantizing the input floating-point data to obtain input fixed-point data, and quantizing the output floating-point data to obtain output fixed-point data; converting the scaling factor and the bias into a fixed-point scaling factor and a fixed-point bias, respectively; and obtaining the quantized neural network from the low-bit fixed-point weights, the input and output fixed-point data, and the fixed-point scaling factor and bias, then applying the quantized neural network model to embedded devices.

Owner:BEIJING TSINGMICRO INTELLIGENT TECH CO LTD
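
The integer-only arithmetic behind converting the scaling factor to a fixed-point value can be sketched as follows, assuming symmetric per-channel weight quantization and a combined real-valued rescale M = s_in · s_w / s_out stored as an integer multiplier plus a right shift. The patent's target quantization threshold and floating-point quantization interval are not modeled, and all names are hypothetical.

```python
def quantize_per_channel(weights_by_channel, bits=4):
    """Symmetric per-channel weight quantization; returns integer weights and scales."""
    qmax = 2 ** (bits - 1) - 1
    q_weights, scales = [], []
    for w in weights_by_channel:
        scale = max(abs(v) for v in w) / qmax or 1.0  # avoid a zero scale
        q_weights.append([max(-qmax, min(qmax, round(v / scale))) for v in w])
        scales.append(scale)
    return q_weights, scales


def to_fixed_point(value, frac_bits=15):
    """Represent a real scaling factor as an integer multiplier and a right shift."""
    return round(value * (1 << frac_bits)), frac_bits


def requantize(acc, bias_q, multiplier, shift, bits=8):
    """Integer-only output: (acc + bias) * multiplier / 2**shift, rounded half up
    via the added 2**(shift - 1), then saturated to the signed output range."""
    qmin, qmax = -(2 ** (bits - 1)), 2 ** (bits - 1) - 1
    y = ((acc + bias_q) * multiplier + (1 << (shift - 1))) >> shift
    return max(qmin, min(qmax, y))
```

For instance, M = 0.0123 becomes the multiplier 403 with a 15-bit shift, and an accumulator value of 1000 requantizes to 12, matching round(1000 · 0.0123).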

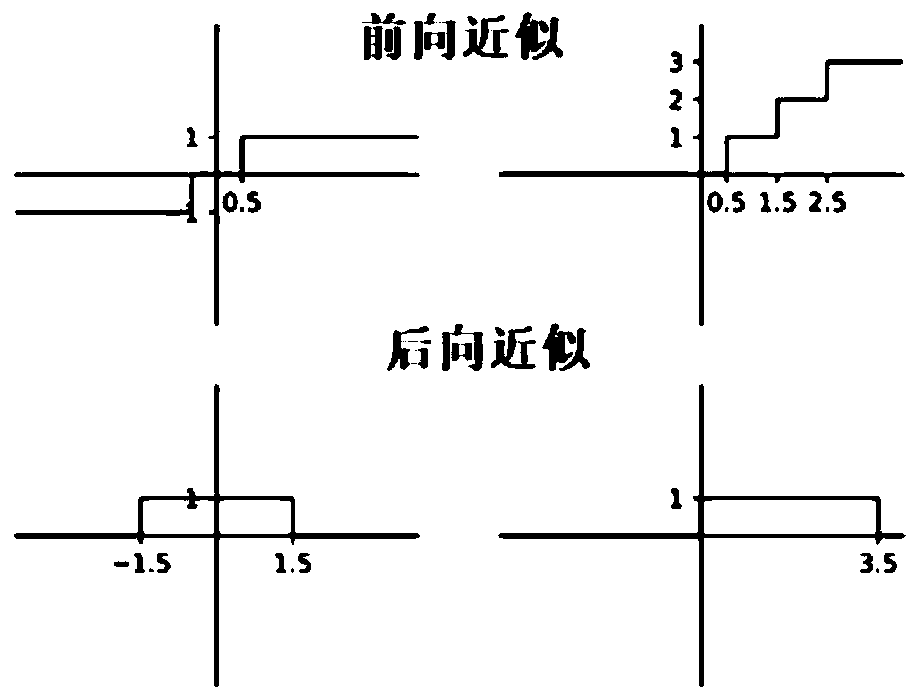

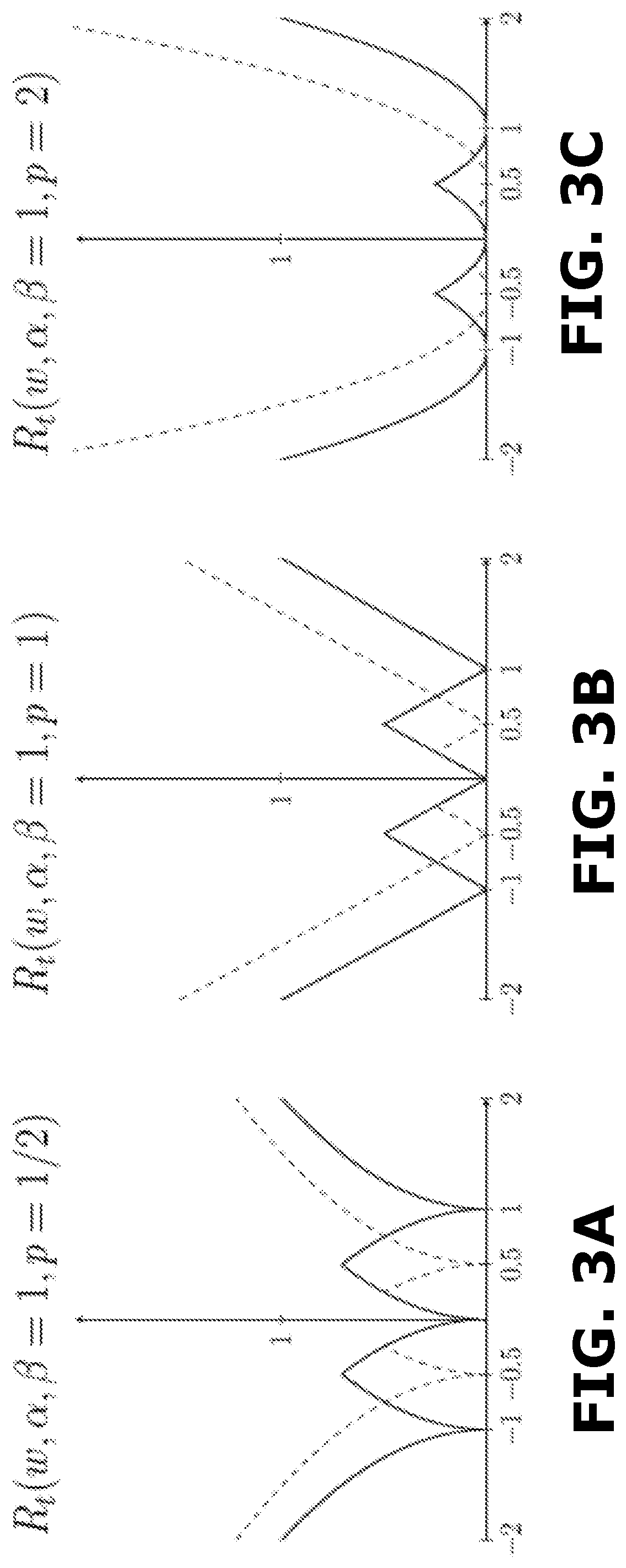

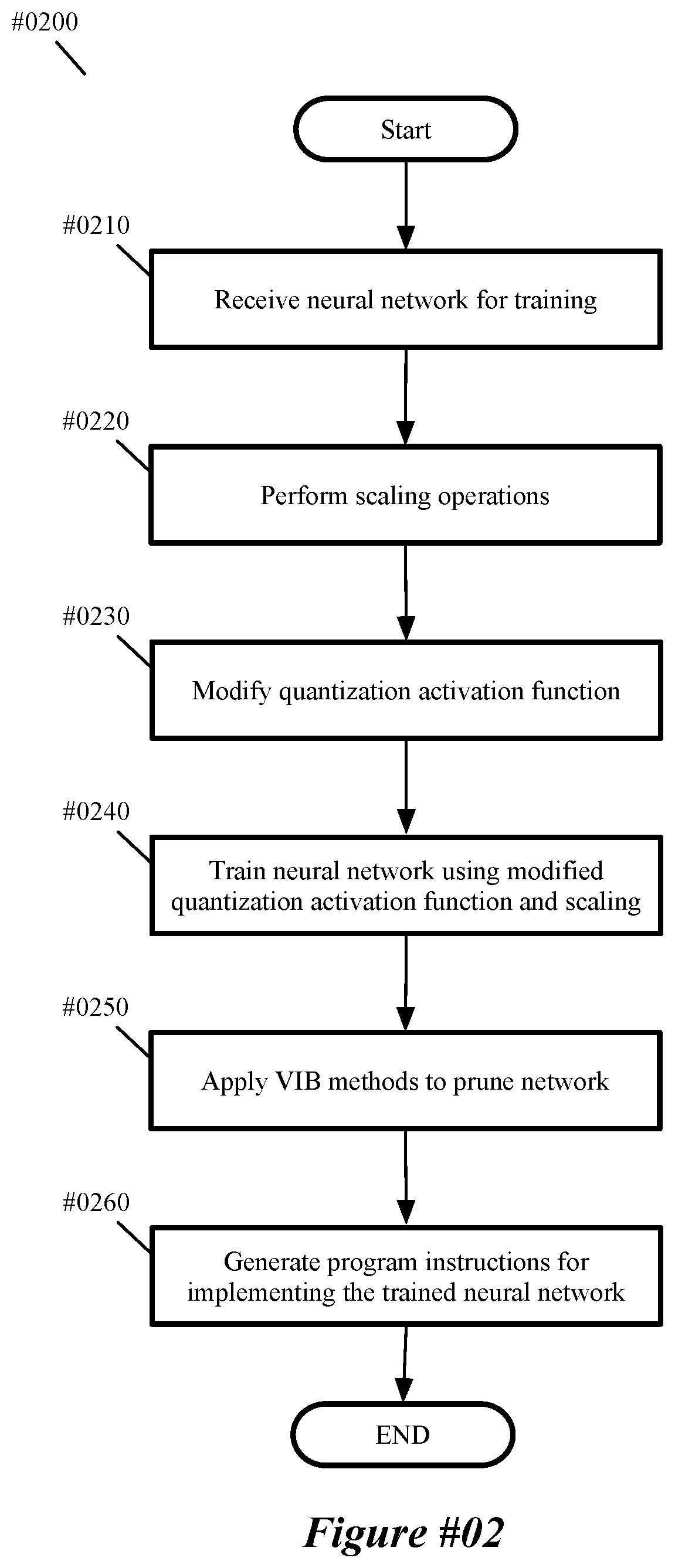

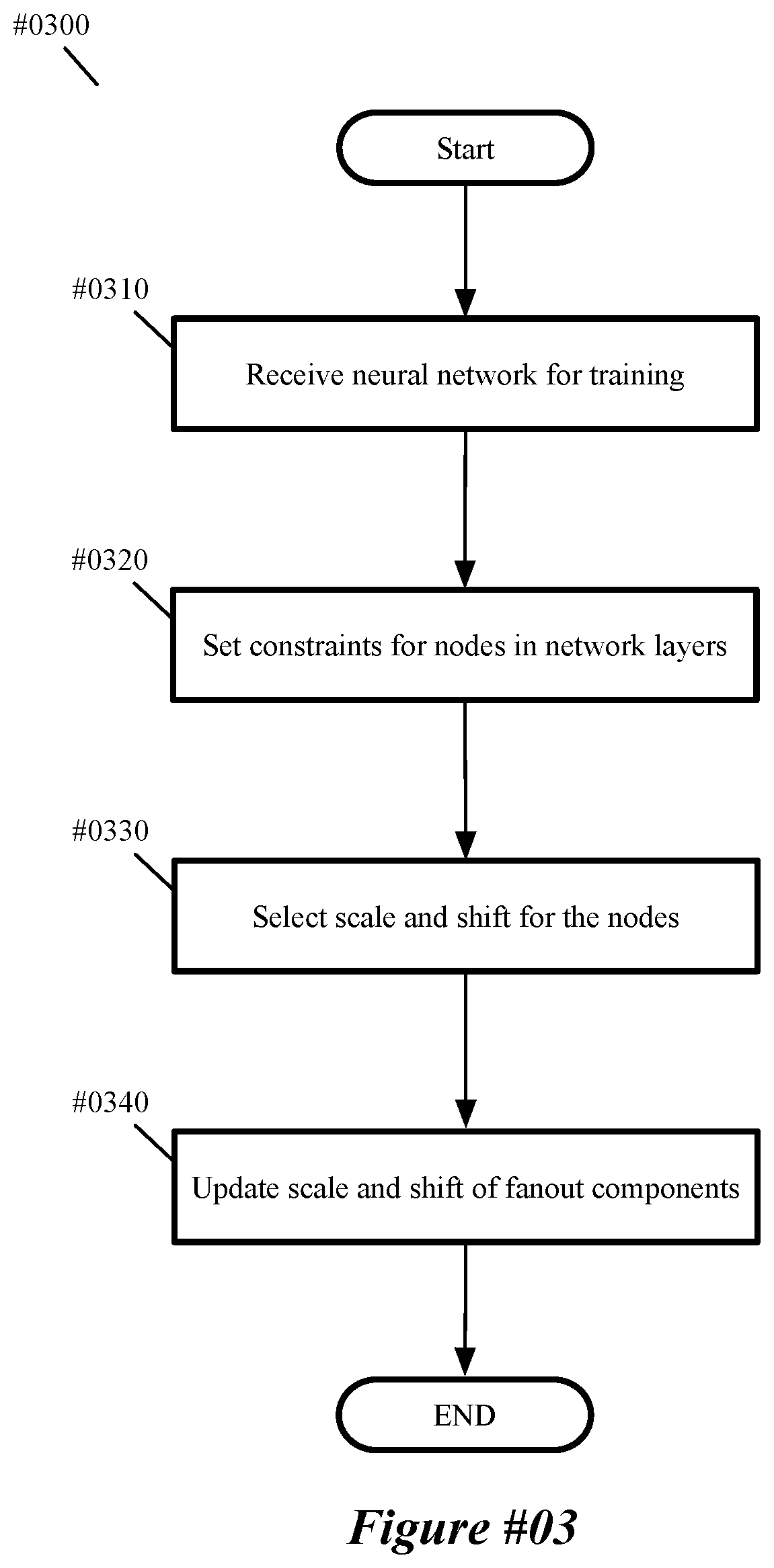

Quantizing neural networks using shifting and scaling

Status: Pending | Publication: US20210034982A1 | Tags: Improve accuracy; Big error; Mathematical models; Digital data processing details; Algorithm; Forward propagation

Some embodiments of the invention provide a novel method for training a quantized machine-trained network. Some embodiments provide a method of scaling a feature map of a pre-trained floating-point neural network in order to match the range of output values provided by quantized activations in a quantized neural network. A quantization function is modified, in some embodiments, to be differentiable to fix the mismatch between the loss function computed in forward propagation and the loss gradient used in backward propagation. Variational information bottleneck, in some embodiments, is incorporated to train the network to be insensitive to multiplicative noise applied to each channel. In some embodiments, channels that finish training with large noise, for example, exceeding 100%, are pruned.

Owner:PERCEIVE CORP

Face recognition neural network adjustment method and device

Status: Active | Publication: CN110555450A | Tags: Avoid extra computing power requirements; Guaranteed accuracy; Character and pattern recognition; Network deployment; Nerve network

The invention provides a method and a device for adjusting and deploying a face recognition neural network. The face recognition neural network comprises at least a plurality of convolution layers and at least one fully connected layer, the last fully connected layer being a classifier. The method comprises: obtaining a neural network model to be trained; training the model with fixed-point quantization to obtain a trained fixed-point quantized neural network model, in which the last fully connected layer remains floating-point during training; and outputting the trained fixed-point quantized model without the last fully connected layer. By exploiting a particular property of face recognition networks, the classifier layer, which strongly affects the overall precision of the network, is kept in floating point during training and is excluded from the deployed network. The trained fixed-point network therefore retains high precision, and extra computing power requirements during deployment are avoided.

Owner:XILINX TECH BEIJING LTD

Neural network quantification method and device, and electronic device

Status: Active | Publication: CN111105017A | Tags: Reduce computational complexity; Neural architectures; Machine learning; Hidden layer; Engineering

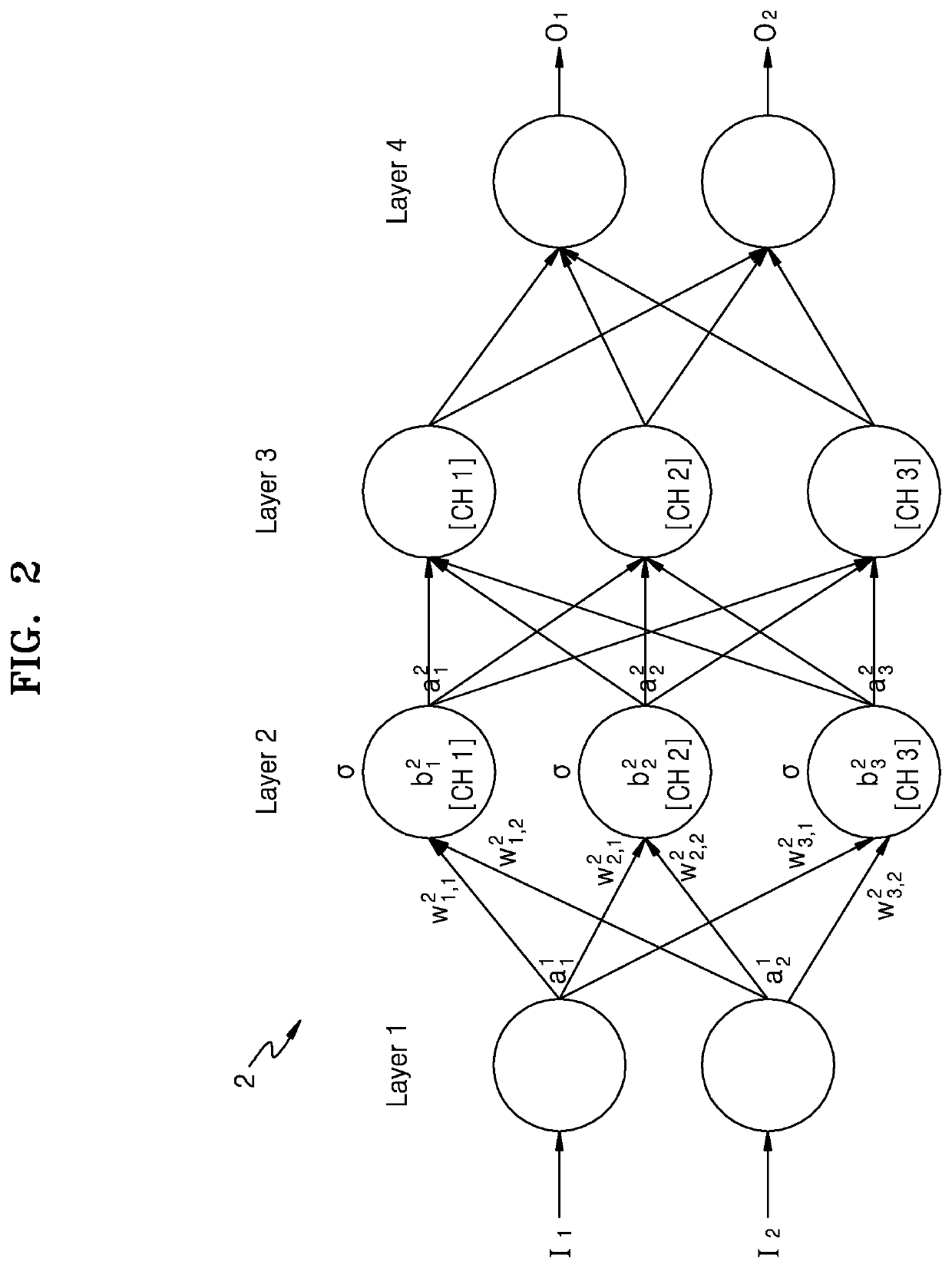

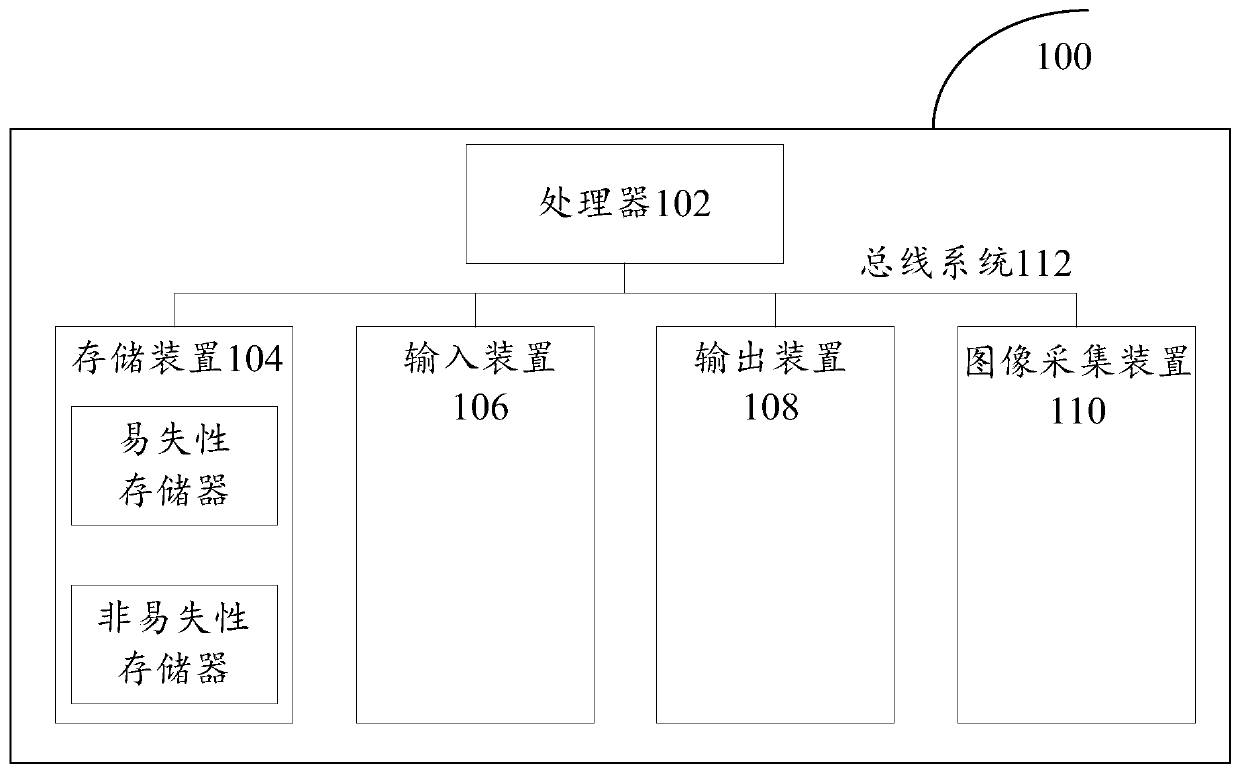

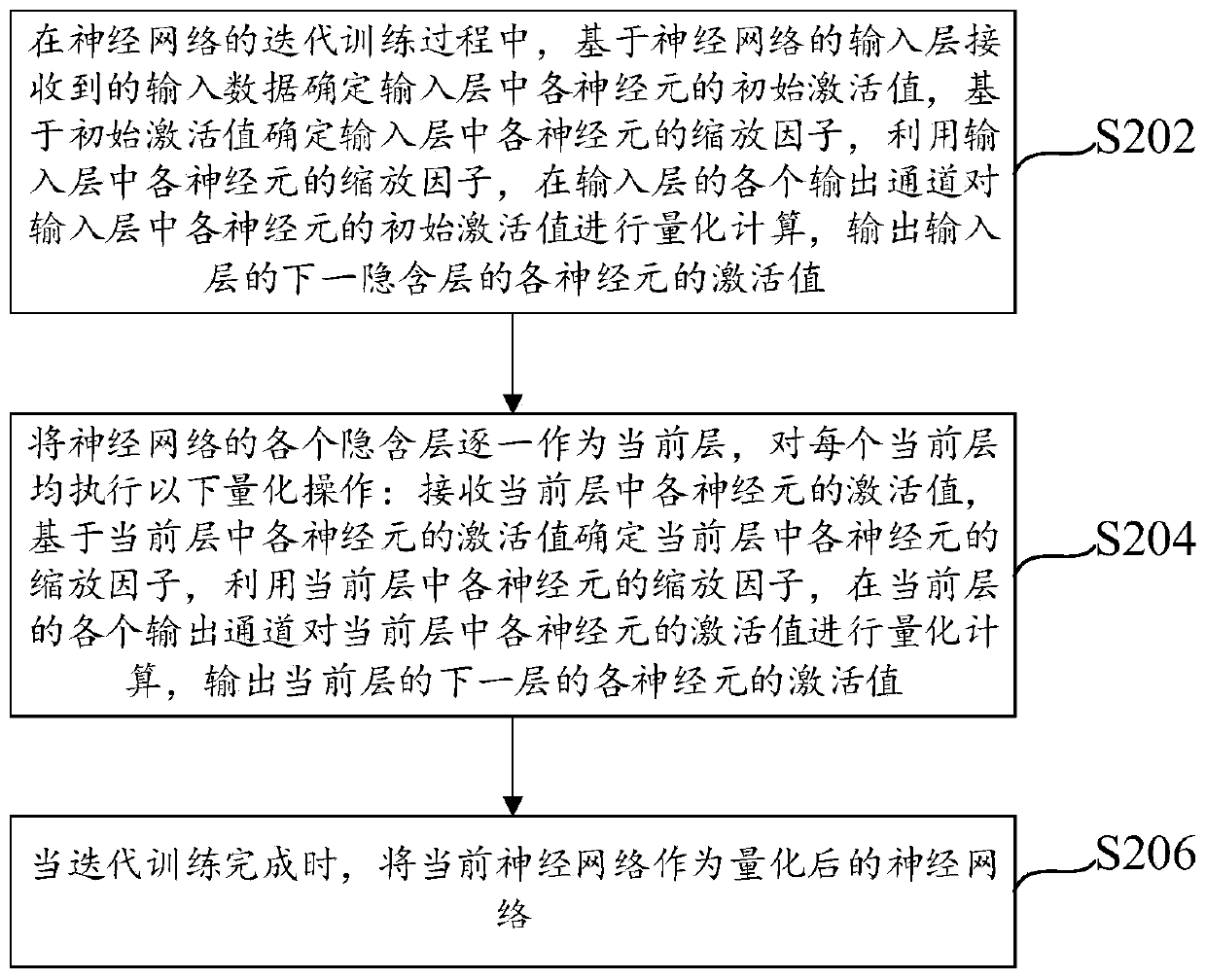

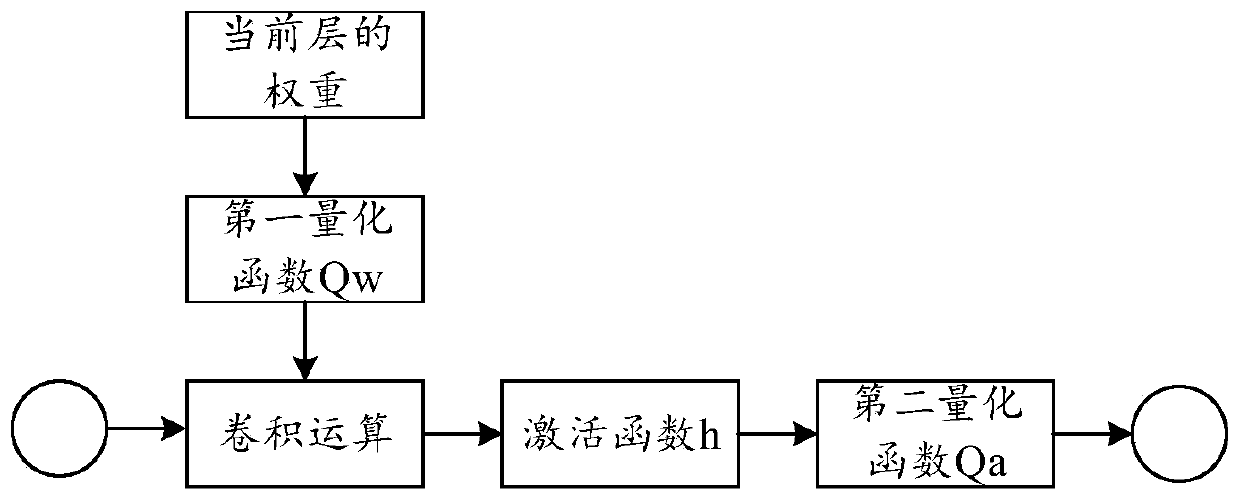

The invention provides a neural network quantization method and device, and an electronic device. The method comprises: during iterative training of the neural network, quantizing the initial activation values of all neurons in the input layer, in all output channels of the input layer, using the scaling factors of those neurons, and outputting the activation values of all neurons in the hidden layer that follows the input layer; taking each hidden layer of the network in turn as the current layer; and executing the following quantization operation on each current layer: determining a scaling factor for each neuron in the current layer from its activation value, quantizing the activation value of each neuron in each output channel of the current layer with that scaling factor, and outputting the activation values of the neurons in the next layer. When the iterative training is completed, the current neural network is taken as the quantized neural network. The recognition precision of the neural network is improved.

Owner:BEIJING KUANGSHI TECH +1

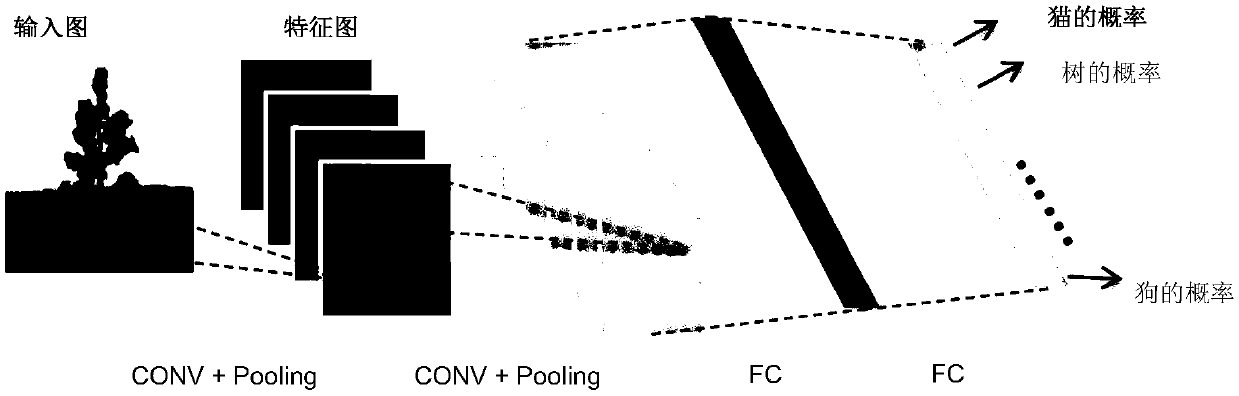

Model construction method and device, image processing method and device, hardware platform and storage medium

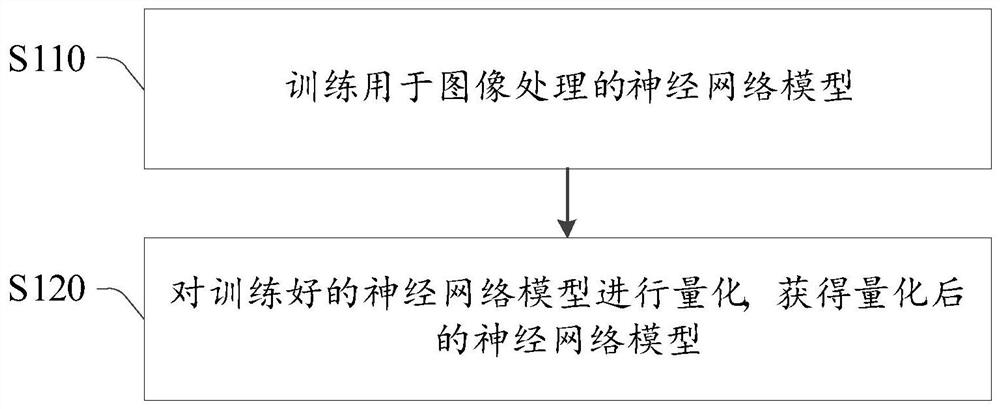

The application relates to the technical field of deep learning and provides a model construction method and device, an image processing method and device, a hardware platform and a storage medium. In the model construction method, a neural network model for image processing is trained, the model comprising at least one depthwise separable convolution module in which a depthwise convolution layer, a pointwise convolution layer, a batch normalization layer and an activation layer are connected in sequence; the trained model is then quantized to obtain a quantized neural network model. First, quantizing the model parameters effectively reduces their data volume, making the model suitable for deployment on NPU devices. Second, unlike prior-art depthwise separable convolution modules, this module places no batch normalization layer or activation layer between the depthwise and pointwise convolution layers, so the values of the model parameters are distributed within a reasonable range and can be quantized with high precision.

Owner:成都佳华物链云科技有限公司

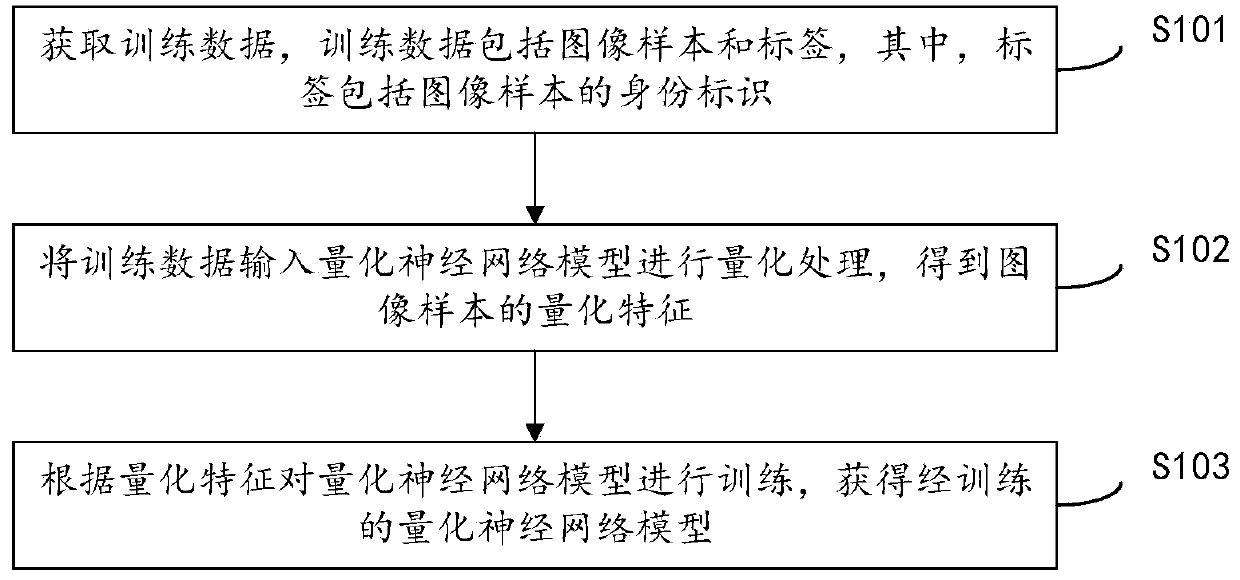

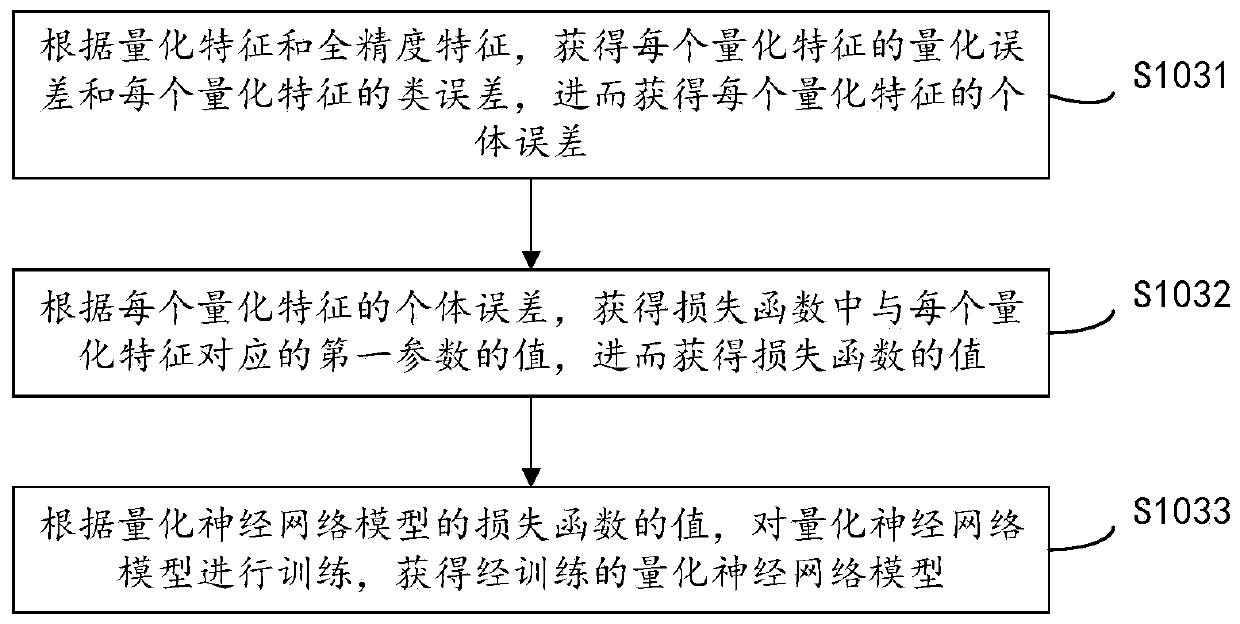

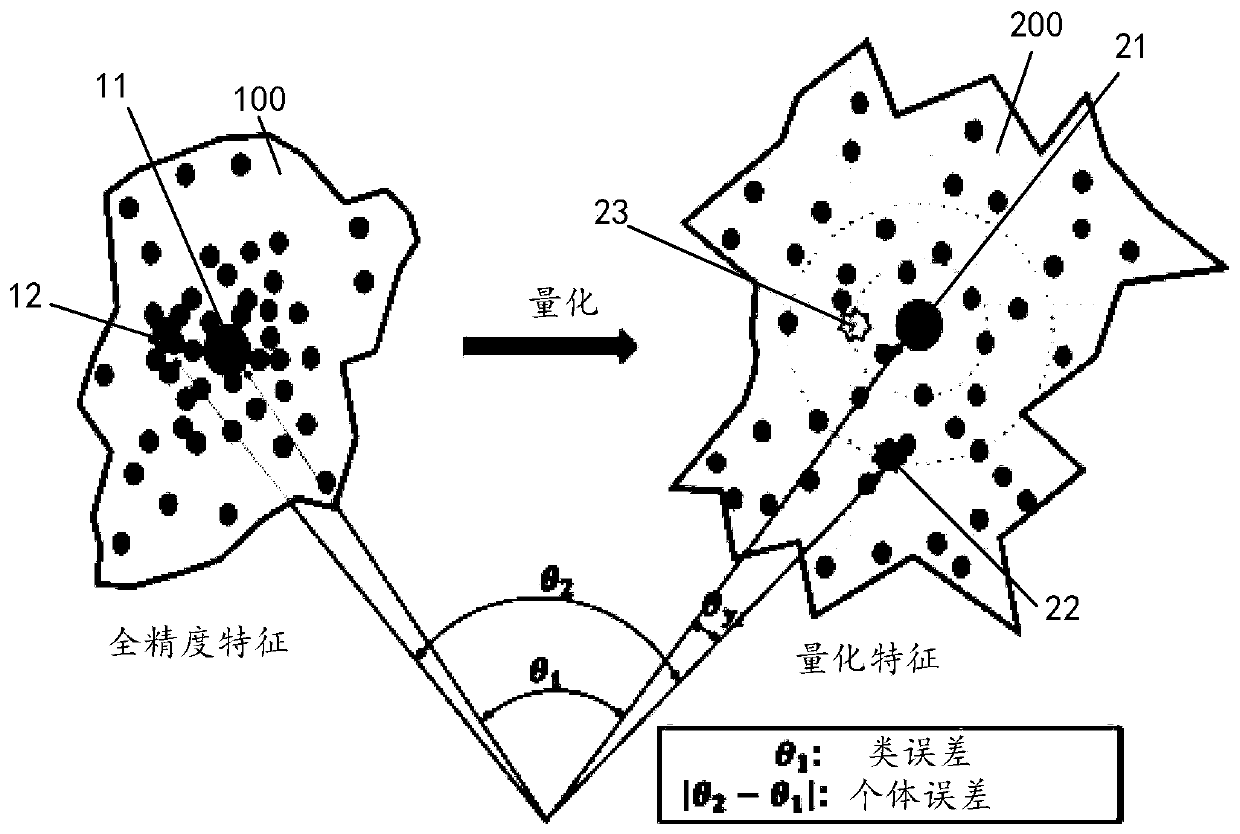

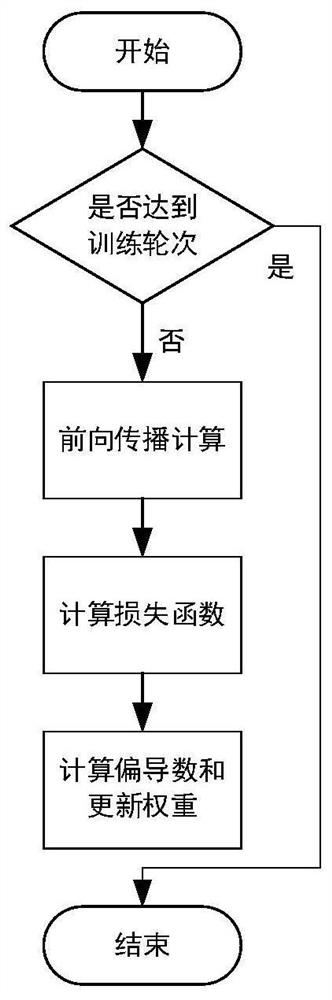

Training and testing method, device and equipment for quantized neural network model

Status: Active | Publication: CN111340226A | Tags: Improve recognition accuracy; Easy to identify; Character and pattern recognition; Neural learning methods; Pattern recognition; Terminal equipment

The embodiment of the invention provides a training and testing method, device and equipment for a quantized neural network model. The training method comprises: acquiring training data comprising image samples and labels, where each label includes an identity label of the image sample; inputting the training data into the quantized neural network model for quantization processing to obtain quantized features of the image samples; and training the quantized neural network model on the quantized features to obtain a trained model. Because the trained model uses low-bit quantization, the amount of computation is reduced and computation speed increases, so the method can more easily be applied to terminal devices. When the quantized model is used for face recognition, quantization error can be minimized and the model's recognition precision improved.

Owner:BEIJING SENSETIME TECH DEV CO LTD

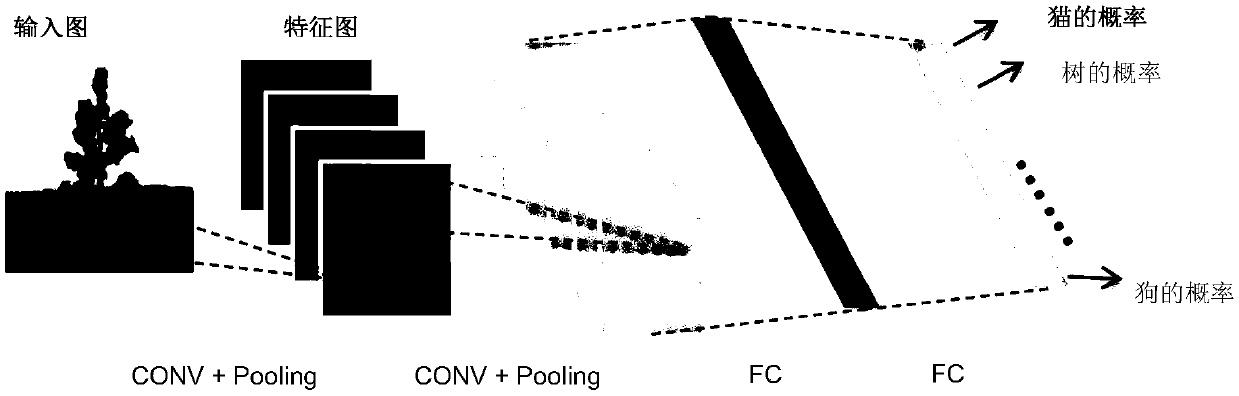

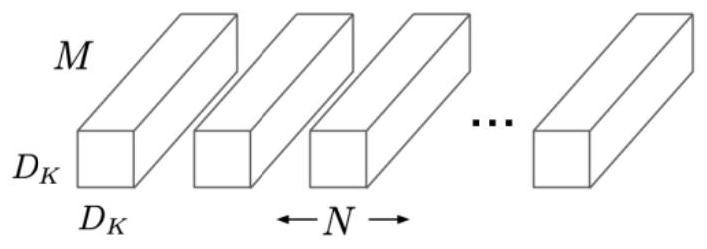

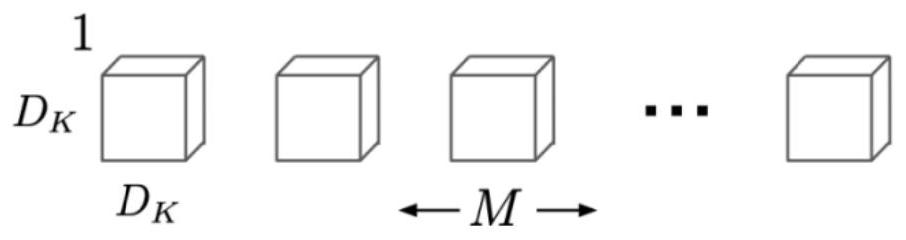

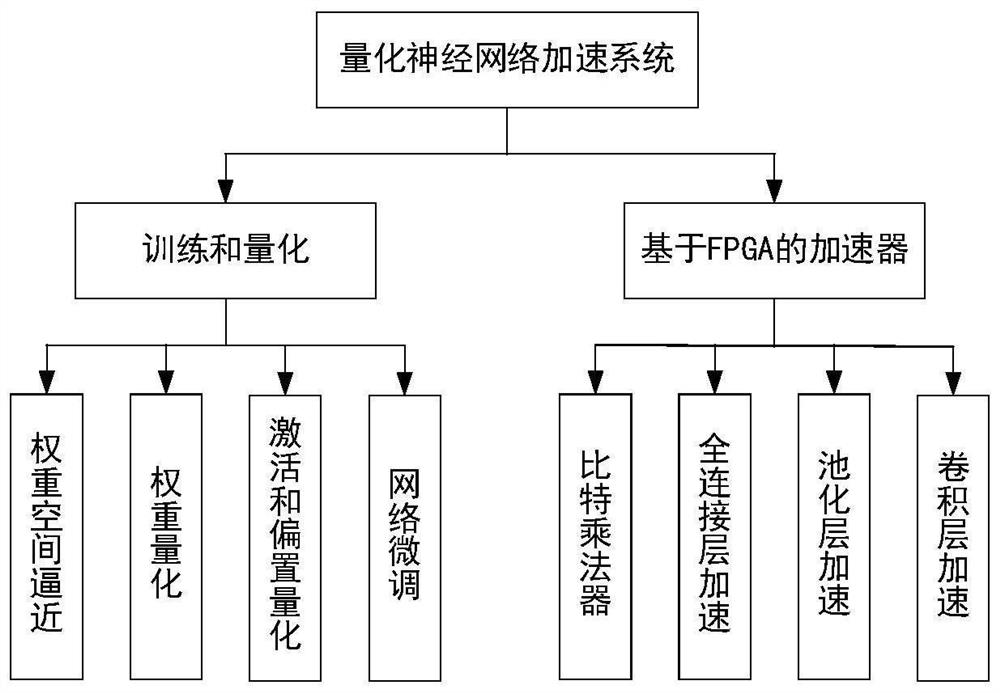

Quantitative neural network acceleration method based on field programmable array

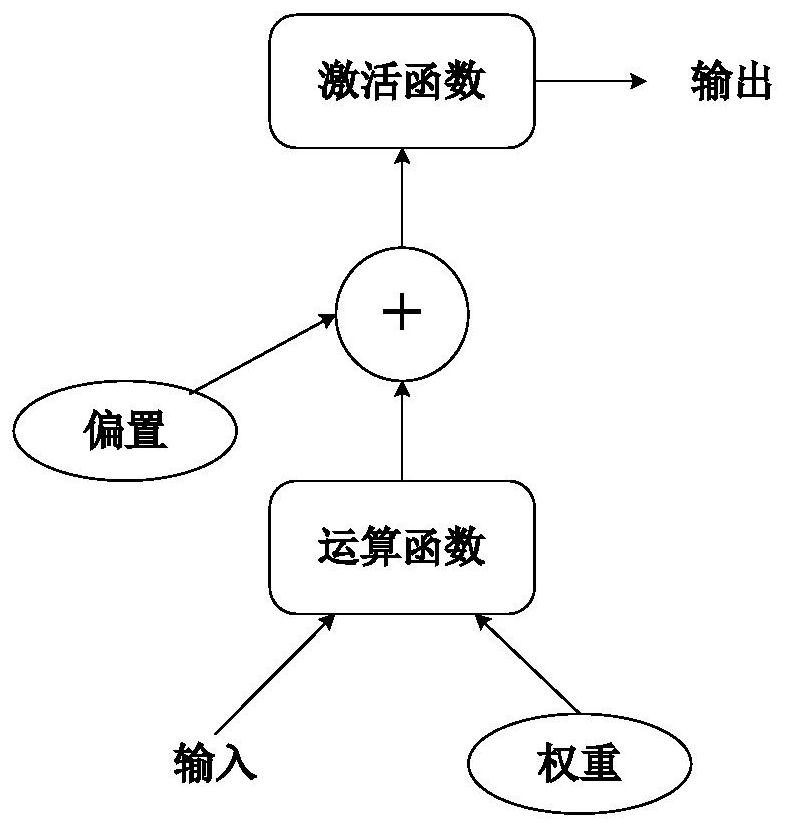

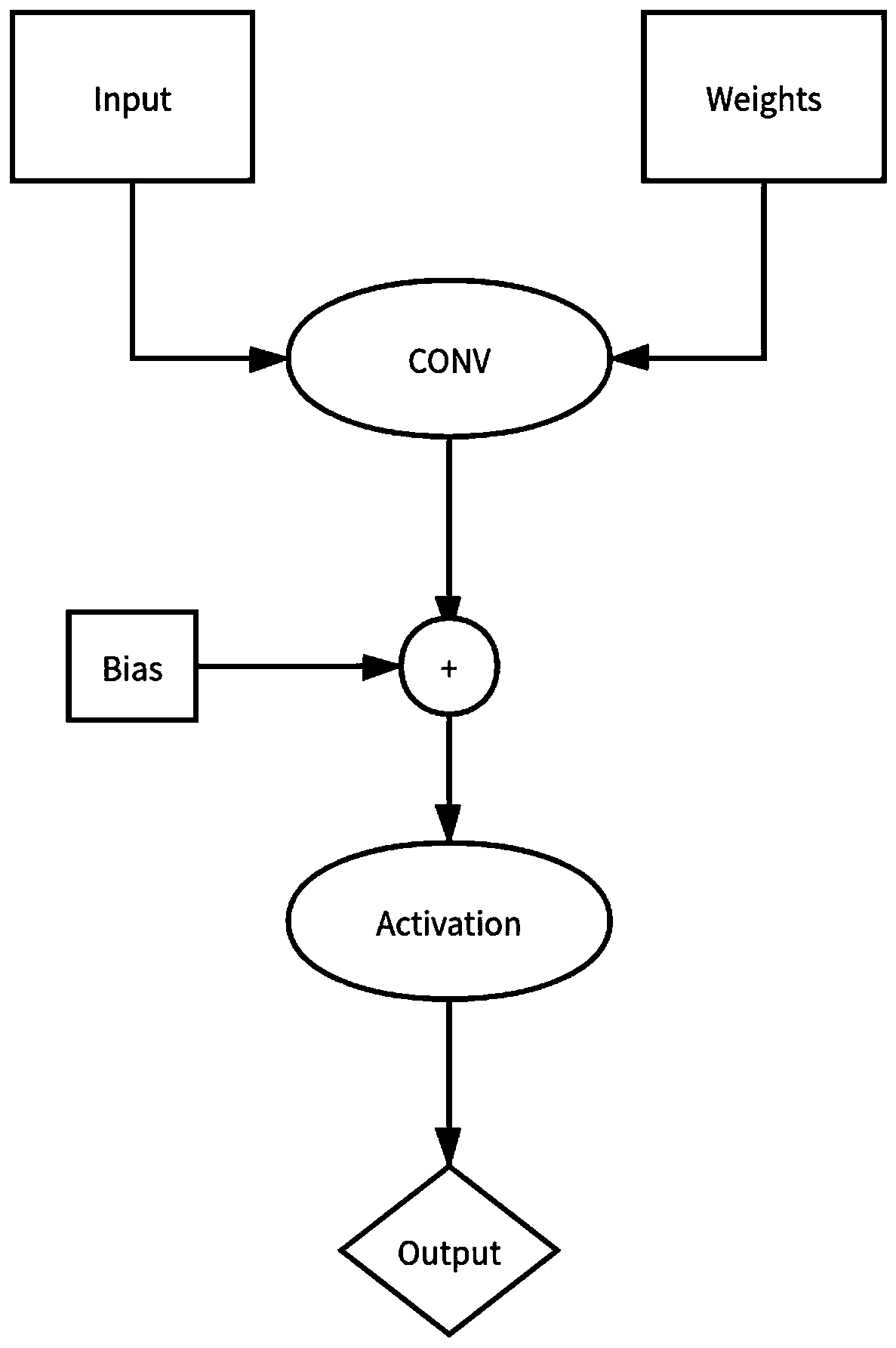

Status: Pending | Publication: CN112633477A | Tags: Fast reasoning; Reduce power consumption; Resource allocation; Neural architectures; Activation function; Imaging processing

The invention discloses a quantized neural network acceleration method based on a field programmable array, applied in the field of image processing, that aims to solve the low image processing efficiency of the prior art. Each layer of a neural network for image processing is expressed as a computation graph: the input and weights undergo a convolution or fully connected computation, a bias is added, and the final output is obtained through an activation function. The weight space is approximated by a sparse discrete space, and the processed weights are numerically quantized to obtain a quantized neural network for image processing. An accelerator matched to this quantized network is then designed, and each layer of the quantized network is computed on the corresponding accelerator to obtain the image processing result. With this method, image processing applications can be deployed on resource-limited embedded systems with fast inference and low power consumption.

Owner:UNIV OF ELECTRONICS SCI & TECH OF CHINA

Neural network quantization method and device, image recognition method and device and computer equipment

Status: Active | Publication: CN110443165A | Tags: Avoid problems that severely degrade forecast accuracy; Reduce computation; Character and pattern recognition; Neural architectures; Pattern recognition; Algorithm

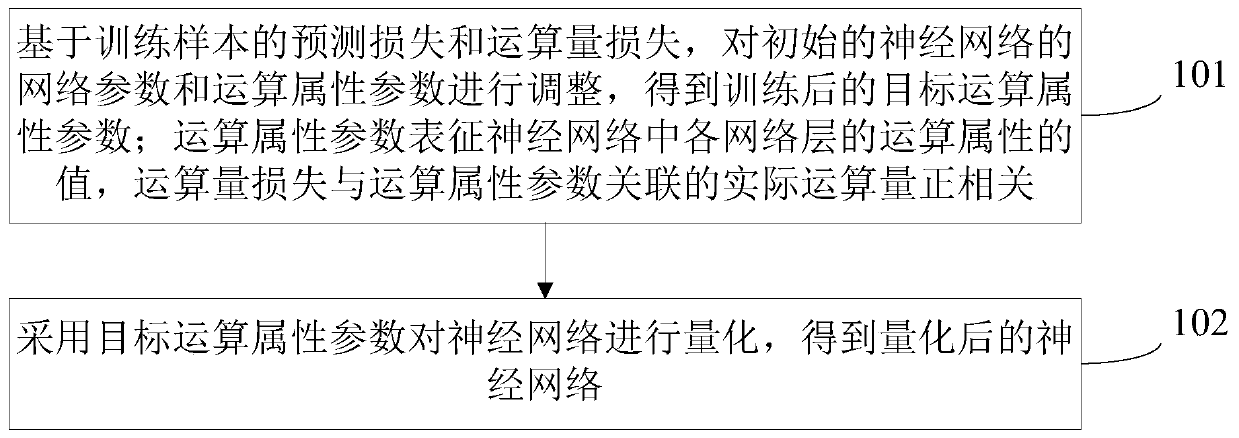

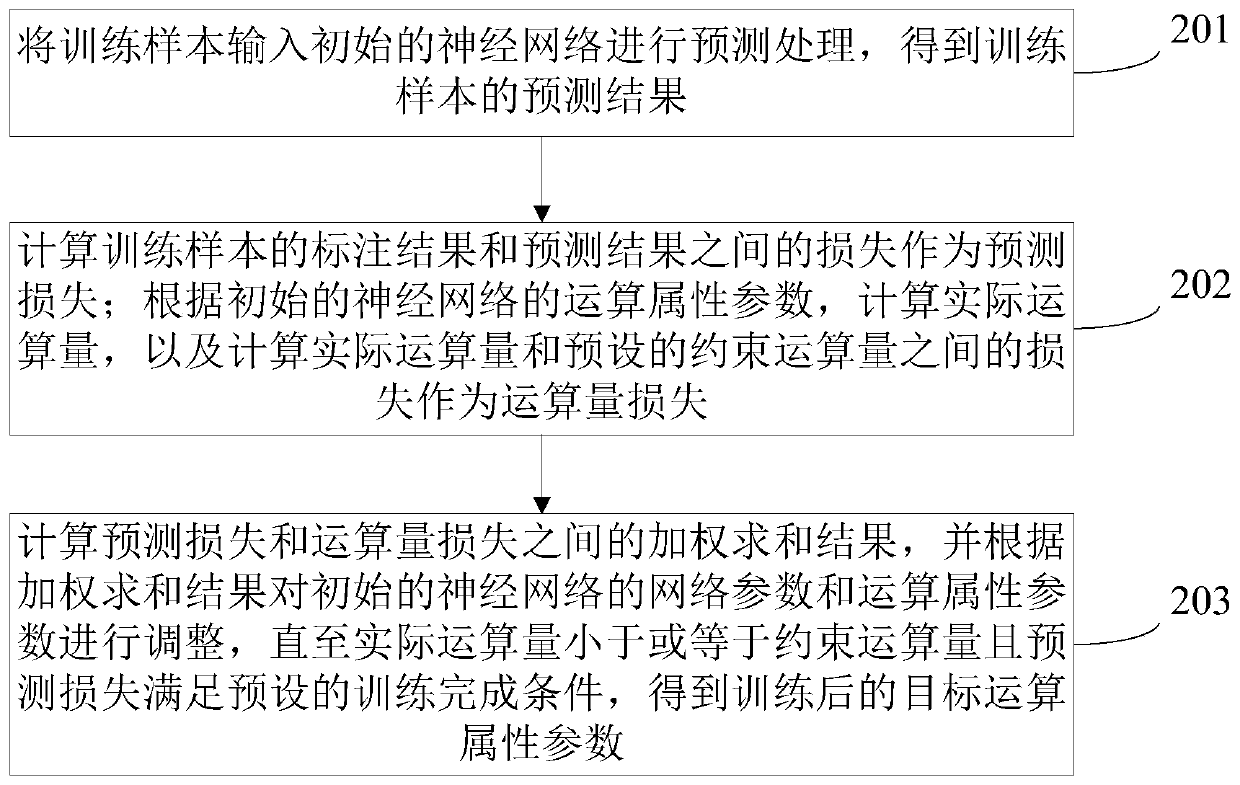

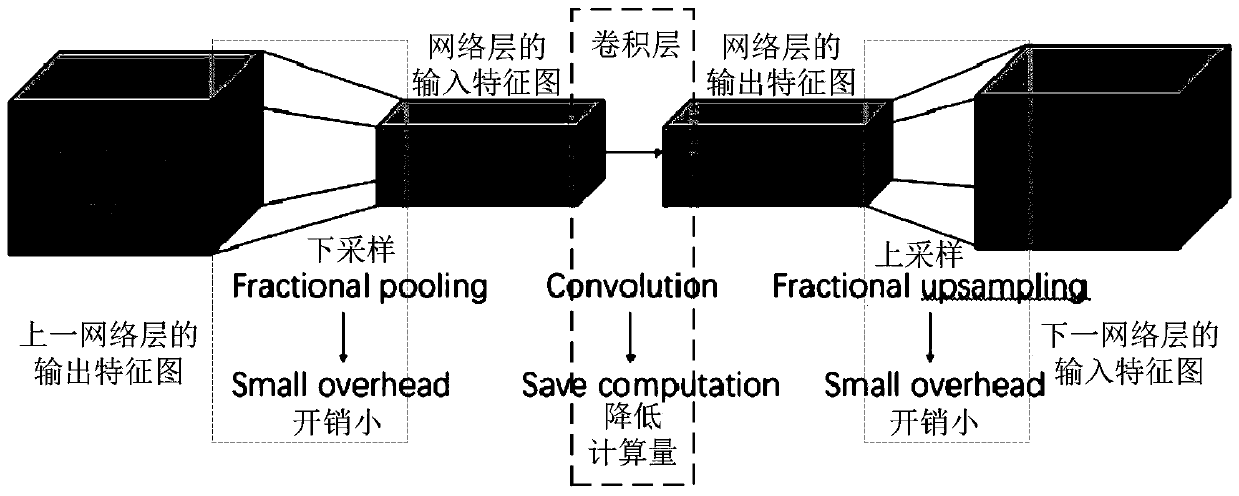

The invention relates to a neural network quantization method, an image recognition method, a neural network quantization device, an image recognition device, computer equipment and a readable storage medium. The method comprises: adjusting the network parameters and operation attribute parameters of an initial neural network based on the prediction loss and the operand loss on training samples, to obtain trained target operation attribute parameters, where the operation attribute parameters represent the values of the operation attributes of each network layer, and the operand loss is positively correlated with the actual amount of computation associated with the operation attribute parameters; and quantizing the neural network with the target operation attribute parameters to obtain the quantized neural network. The method avoids a severe drop in the prediction accuracy of the quantized neural network and achieves a reasonable quantization.

Owner:MEGVII BEIJINGTECH CO LTD

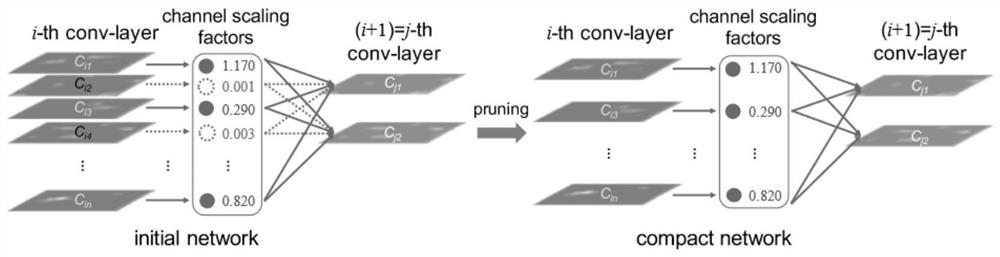

Structured pruning method and device for lightweight neural network, medium and equipment

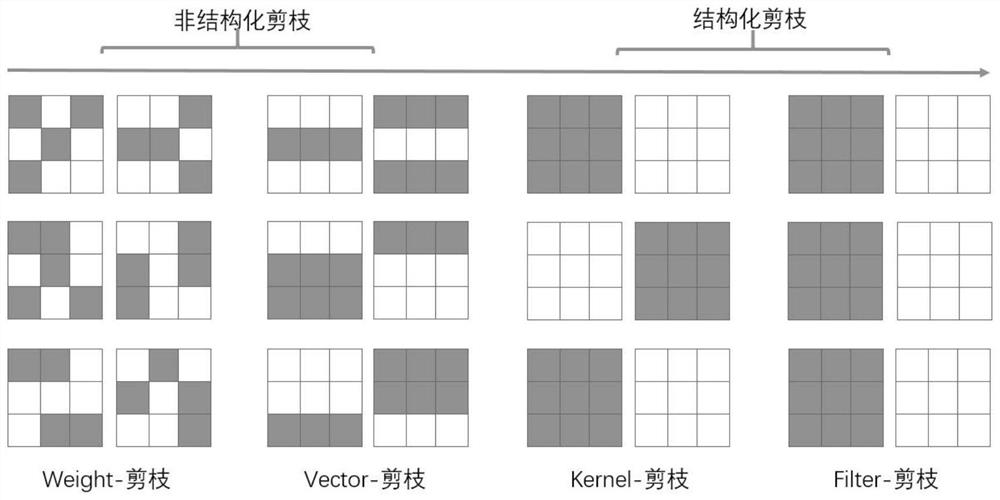

Status: Inactive | Publication: CN112241789A | Tags: Reduce redundancy; There is no unstructured sparsity; Character and pattern recognition; Neural learning methods; Pattern recognition; Engineering

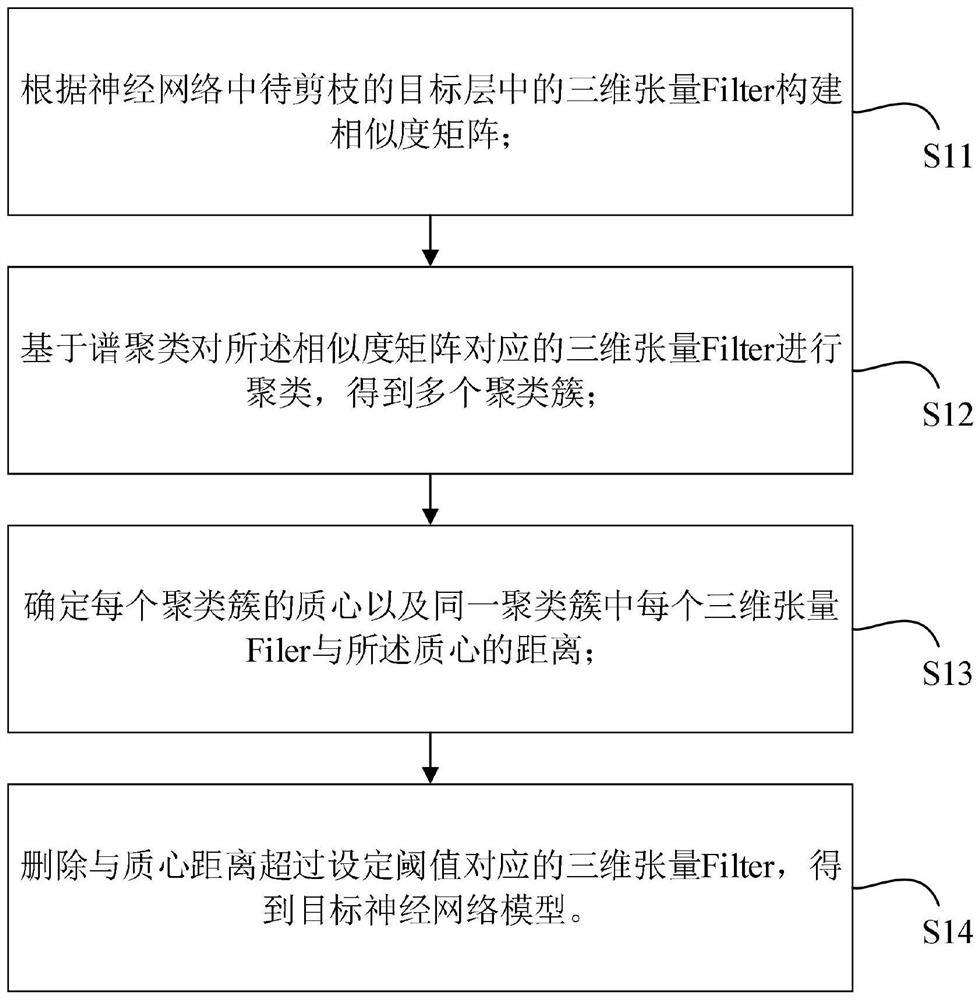

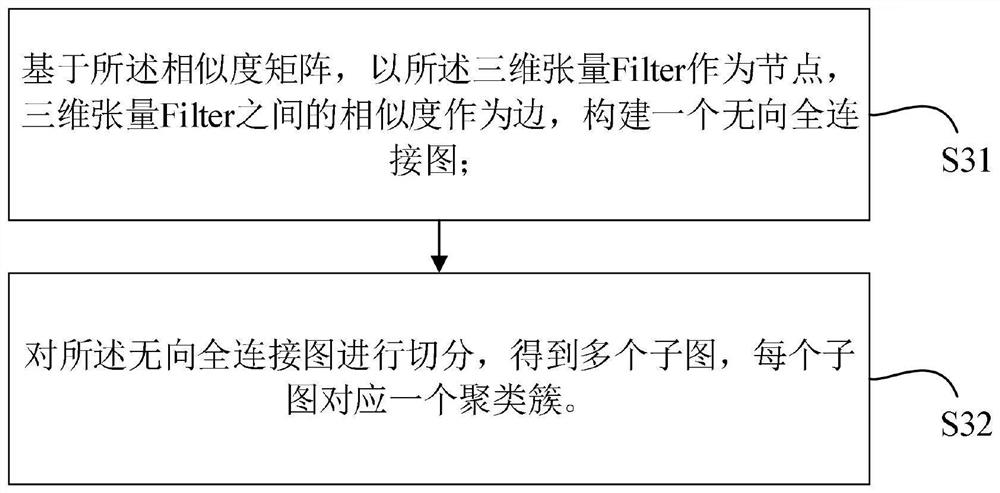

The invention discloses a structured pruning method for a lightweight neural network. The method comprises: constructing a similarity matrix from the three-dimensional tensor filters in a target layer of the neural network to be pruned; clustering the filters corresponding to the similarity matrix by spectral clustering to obtain a plurality of clusters; determining the centroid of each cluster and the distance between each filter in the cluster and the centroid; and deleting the filters whose distance to the centroid exceeds a set threshold to obtain the target neural network model. Because the pruning is structured, the pruned weight matrices exhibit no unstructured sparsity, so existing software and hardware can be used directly for acceleration, and the method can be naturally combined with other lightweight techniques such as knowledge distillation and weight quantization to further reduce network redundancy.

Owner:广州云从凯风科技有限公司

Method and apparatus for neural network quantization

Status: Active | Publication: US20200218962A1 | Tags: Well formed; Neural architectures; Physical realisation; Neural network analysis; Quantized neural networks

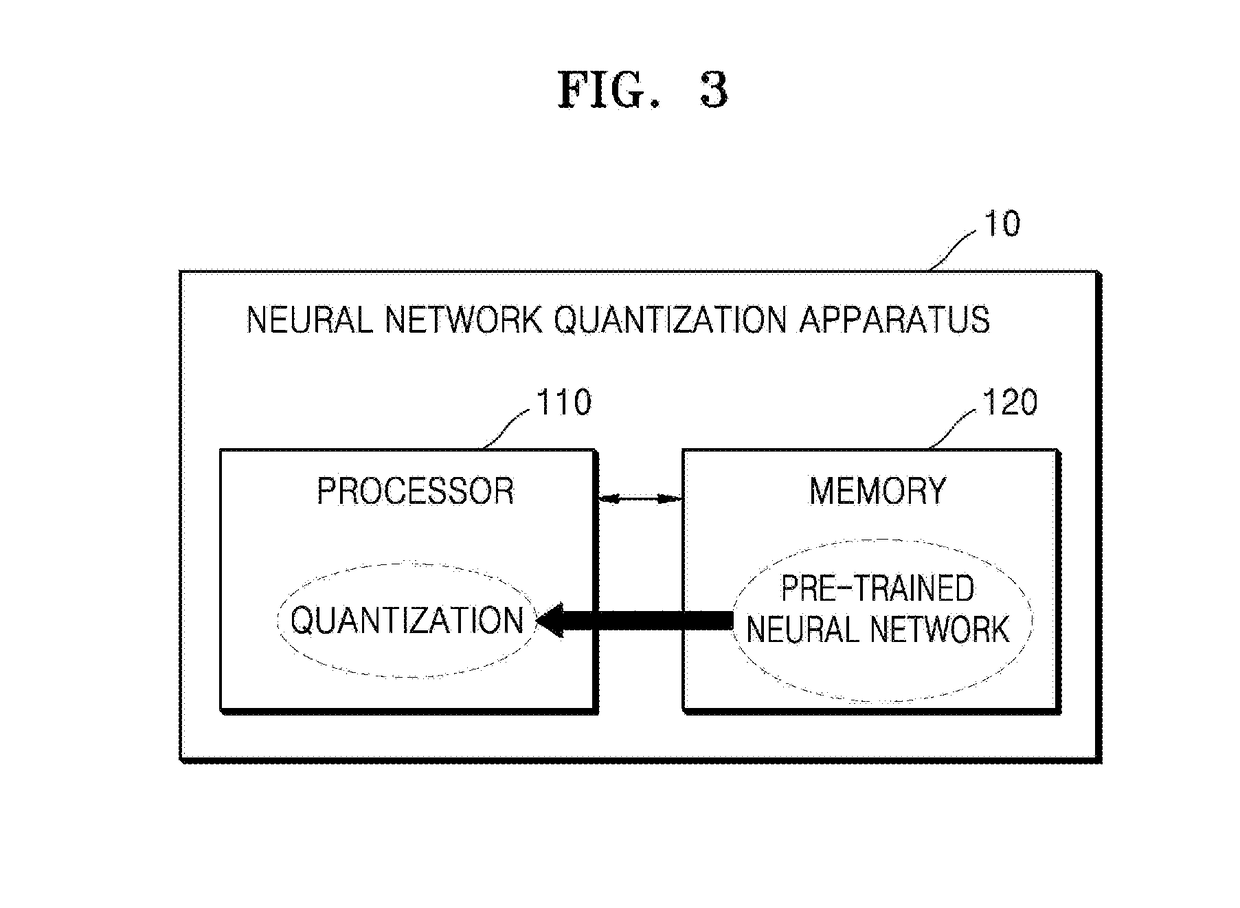

According to a method and apparatus for neural network quantization, a quantized neural network is generated by performing learning of a neural network, obtaining weight differences between an initial weight and an updated weight determined by the learning of each cycle for each of layers in the first neural network, analyzing a statistic of the weight differences for each of the layers, determining one or more layers, from among the layers, to be quantized with a lower-bit precision based on the analyzed statistic, and generating a second neural network by quantizing the determined one or more layers with the lower-bit precision.

Owner:SAMSUNG ELECTRONICS CO LTD
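
A minimal sketch of the layer-selection step, assuming the statistic is the mean absolute weight change per layer (computed here from a single before/after snapshot rather than aggregated over every learning cycle) and assuming that the layers whose weights change least are the ones quantized at lower precision. The abstract does not state which direction of the statistic is used; the names are hypothetical.

```python
import statistics


def rank_layers_for_low_bit(initial, updated, num_low_bit):
    """Per layer, average |updated - initial| over its weights; the layers whose
    weights moved least are *assumed* to tolerate lower-bit precision."""
    stats = {
        name: statistics.fmean([abs(u - i) for i, u in zip(initial[name], updated[name])])
        for name in initial
    }
    ranked = sorted(stats, key=stats.get)  # smallest mean change first
    return ranked[:num_low_bit], stats
```

The selected layers would then be re-quantized at the lower bit width to form the second neural network.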

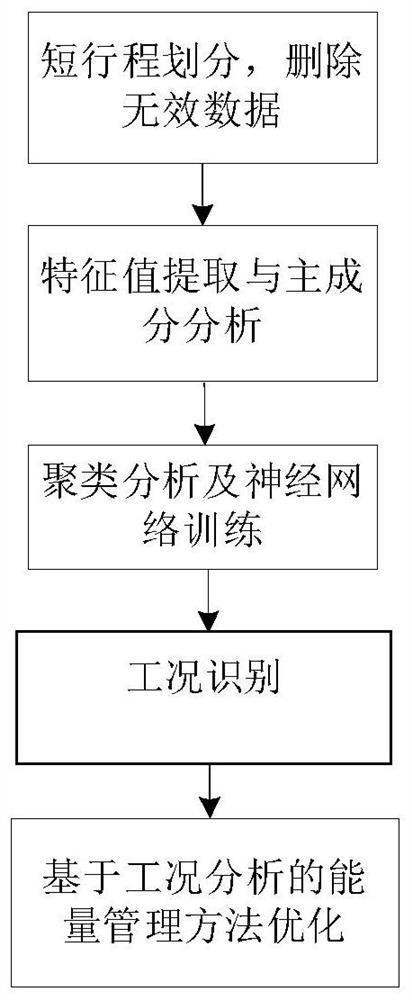

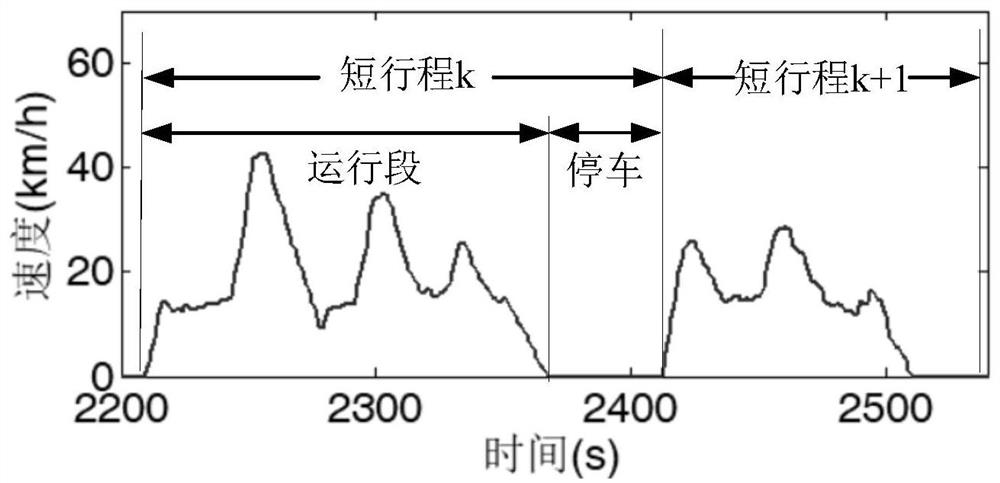

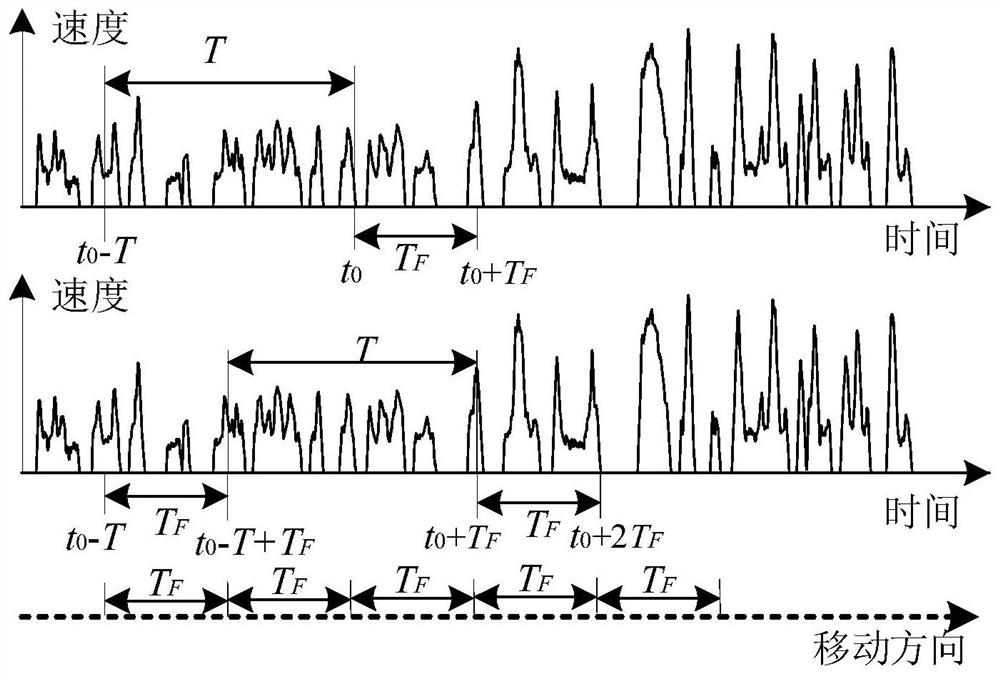

Modern tramcar hybrid energy storage system energy management method based on working condition analysis

Status: Pending | Publication: CN112668848A | Tags: Realize real-time optimal control; Realize the goal of electrical energy saving; Forecasting; Character and pattern recognition; Nerve network; Principal component analysis

The invention relates to an energy management method, based on working-condition analysis, for the hybrid energy storage system of a modern tramcar. The method comprises: 1) organizing historical data obtained from tramcar operation, dividing it into short trips, screening the short trips that meet the conditions into a candidate trip library, and deleting those that do not; 2) extracting characteristic values of each short trip and selecting 13 of them; 3) reducing the dimensionality of the characteristic values by principal component analysis; 4) classifying the operating conditions by cluster analysis; 5) identifying the actual operating condition online with a learning vector quantization (LVQ) neural network; and 6) optimizing the energy management method separately for each identified working condition. The invention achieves electrical energy savings in rail transit: because the energy management strategy is optimized according to the working-condition analysis, each operating mode of the vehicle can be optimized separately, improving the strategy's effect in every mode.

Owner:BEIJING JIAOTONG UNIV

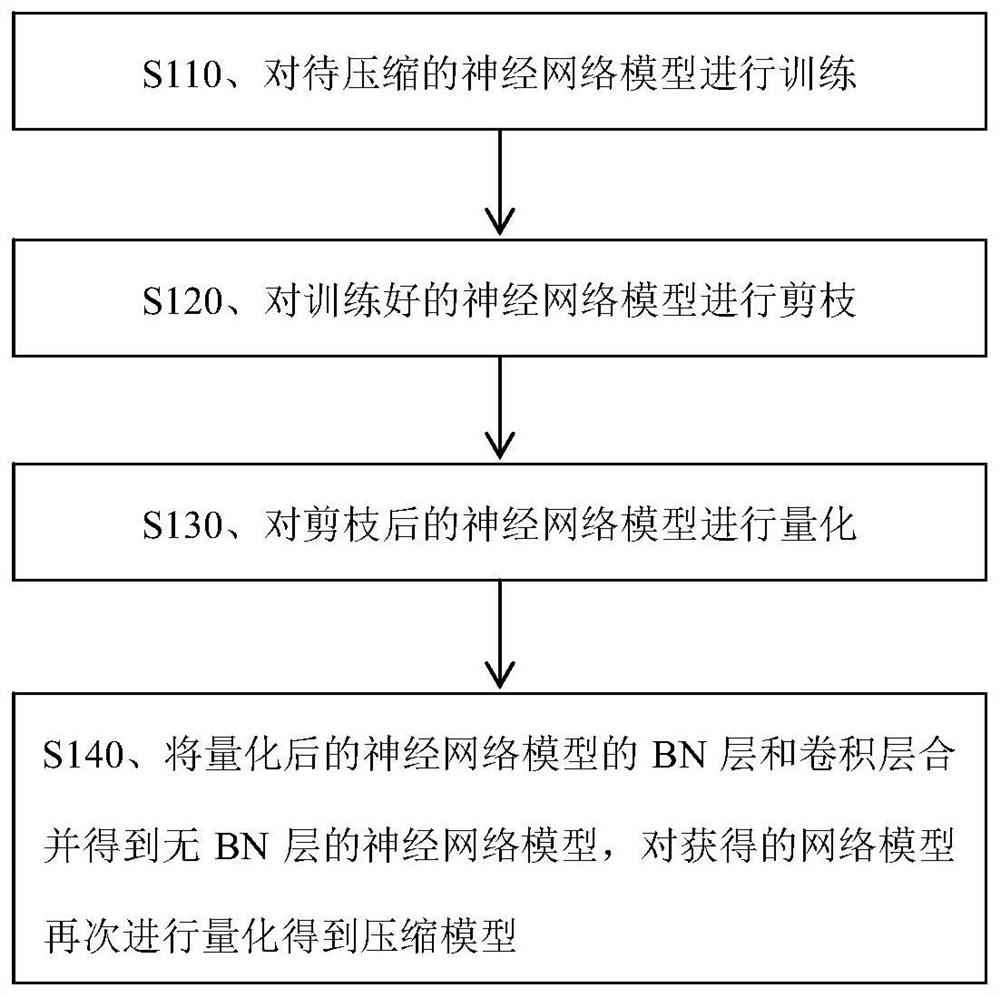

Neural network model compression method and system based on mass spectrum data set

Status: Pending | Publication: CN112329922A | Tags: Precise yet thin and compact; Precision thin and compact; Neural architectures; Neural learning methods; Data set; Engineering

The embodiment of the invention discloses a neural network model compression method and system based on a mass spectrum data set. The method comprises: training the neural network model to be compressed; pruning the trained model; quantizing the pruned model; merging the BN (batch normalization) layers into the convolutional layers of the quantized model to obtain a model without BN layers; and quantizing the resulting model again to obtain the compressed model. Taking a large-scale network as the input model, the method automatically identifies and prunes irrelevant channels, removes redundancy in the parameter bit widths of the convolutional and fully connected layers, and discards the BN layers, producing a thin, compact (efficient) model of equivalent precision.

Owner:PEKING UNIV
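
Merging a BN layer into the preceding convolution relies on the standard folding identity, shown here per output channel as a minimal Python sketch. The function name is hypothetical, and the pruning and the two quantization passes from the abstract are not shown.

```python
import math


def fold_batchnorm(weight, bias, gamma, beta, mean, var, eps=1e-5):
    """Fold y = gamma * (conv(x) - mean) / sqrt(var + eps) + beta into the conv:
    w' = w * gamma / sqrt(var + eps),  b' = (b - mean) * gamma / sqrt(var + eps) + beta.
    All arguments describe one output channel; `weight` lists its kernel values."""
    factor = gamma / math.sqrt(var + eps)
    return [w * factor for w in weight], (bias - mean) * factor + beta
```

Because the folded layer computes the same function, the second quantization pass only has to handle the new weights and biases, with no separate BN parameters left to quantize.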

Method and system capable of switching bit-wide quantized neural network on line

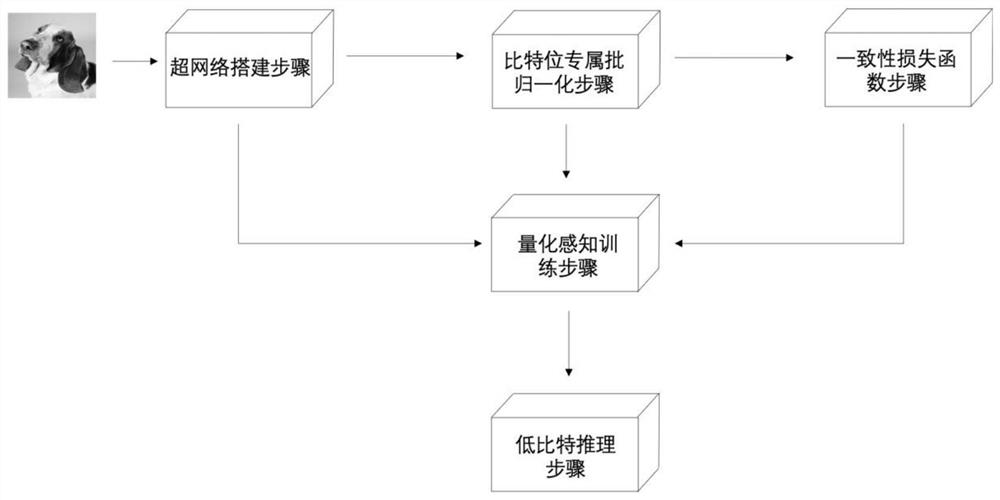

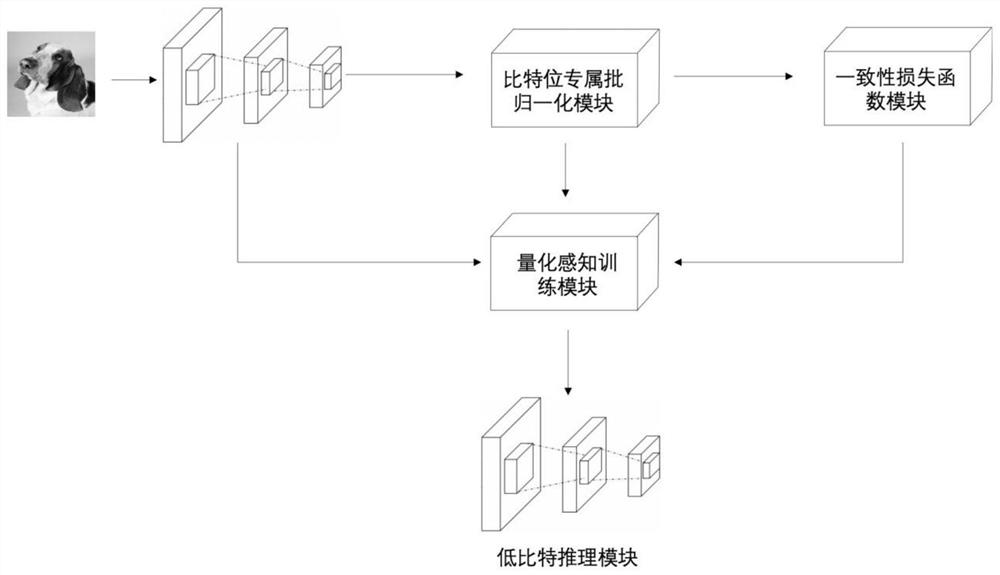

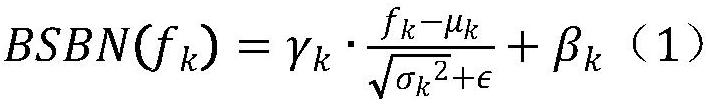

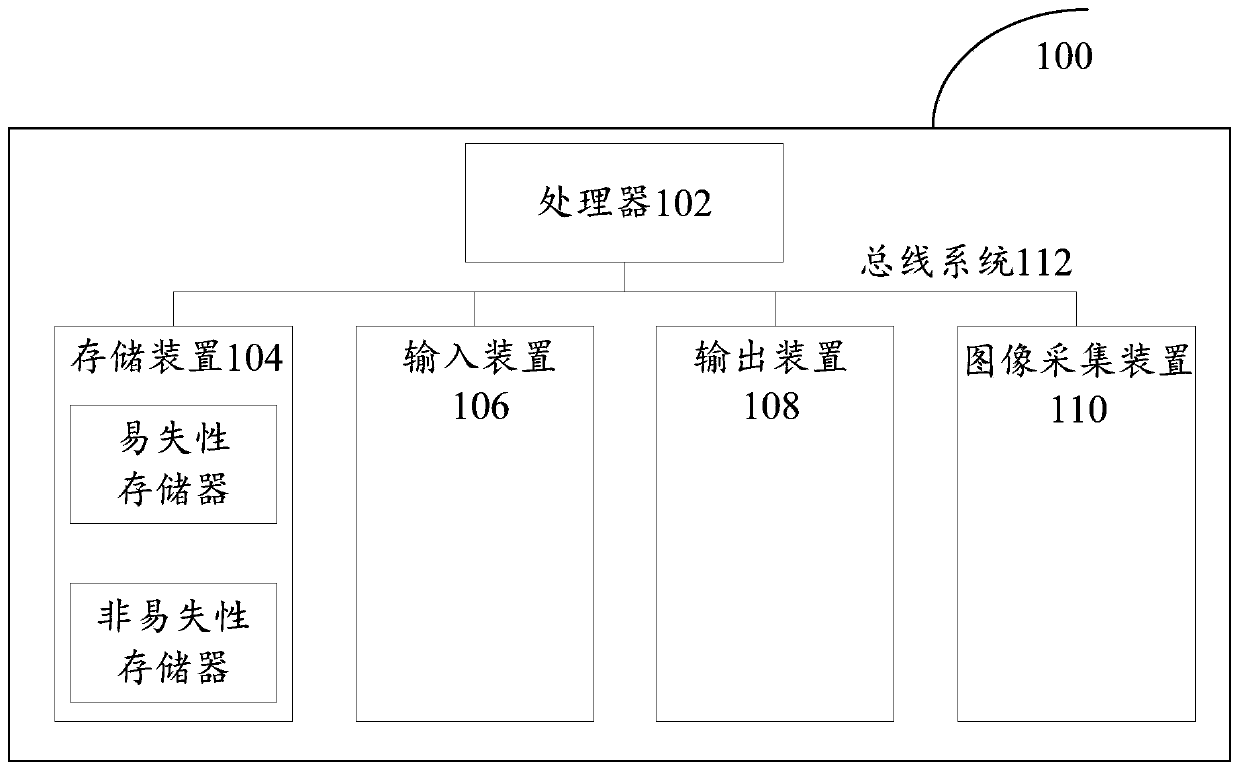

Status: Pending | Publication: CN112101524A | Tags: Easy to use; Easy landing; Neural architectures; Neural learning methods; Network architecture; Network on

The invention provides a method and a system for switching the bit width of a quantized neural network online. The method comprises: integrating deep neural networks of different bit widths into one super network, in which all bit widths share the same network architecture; running the super network at each bit width, obtaining the corresponding intermediate-layer features for that bit width, and processing each set of intermediate features with its own batch normalization layer; training the super network through supervised learning while simulating quantization noise during training, until a consistency loss between the low-bit and high-bit modes converges; and extracting the quantized network of the target bit width from the trained super network with a preset quantizer to perform low-bit inference. The bit width can then be switched at will, without retraining the network, to suit different hardware deployment environments.

Owner:SHANGHAI JIAO TONG UNIV
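
The core idea of the super network, shared weights combined with private batch-normalization parameters per bit width, can be sketched for a single linear unit. The sketch assumes a symmetric uniform quantizer as the "preset quantizer" and a squared-difference consistency loss; the class name, quantizer, loss, and default BN values are hypothetical, and the training loop with simulated quantization noise is omitted.

```python
class SwitchableLayer:
    """One shared float weight vector; a private BN (scale, shift) per bit width."""

    def __init__(self, weights, bit_widths):
        self.weights = list(weights)
        # Separate BN parameters for every supported bit width (identity defaults).
        self.bn = {b: (1.0, 0.0) for b in bit_widths}

    def quantized_weights(self, bits):
        """Symmetric uniform quantization of the shared weights (assumed quantizer)."""
        qmax = 2 ** (bits - 1) - 1
        scale = (max(abs(w) for w in self.weights) or 1.0) / qmax
        return [max(-qmax, min(qmax, round(w / scale))) * scale for w in self.weights]

    def forward(self, x, bits):
        """Run the unit at the requested bit width with that width's own BN."""
        w = self.quantized_weights(bits)
        y = sum(wi * xi for wi, xi in zip(w, x))
        gamma, beta = self.bn[bits]
        return gamma * y + beta


def consistency_loss(layer, x, low_bits, high_bits):
    """Squared gap between low-bit and high-bit outputs (assumed loss form)."""
    return (layer.forward(x, low_bits) - layer.forward(x, high_bits)) ** 2


layer = SwitchableLayer([0.3, -0.7, 1.0], bit_widths=[2, 4, 8])
print(layer.quantized_weights(2))  # [0.0, -1.0, 1.0]
```

Switching bit width at deployment is then just a different `bits` argument; no weights are retrained, which matches the claim in the abstract.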

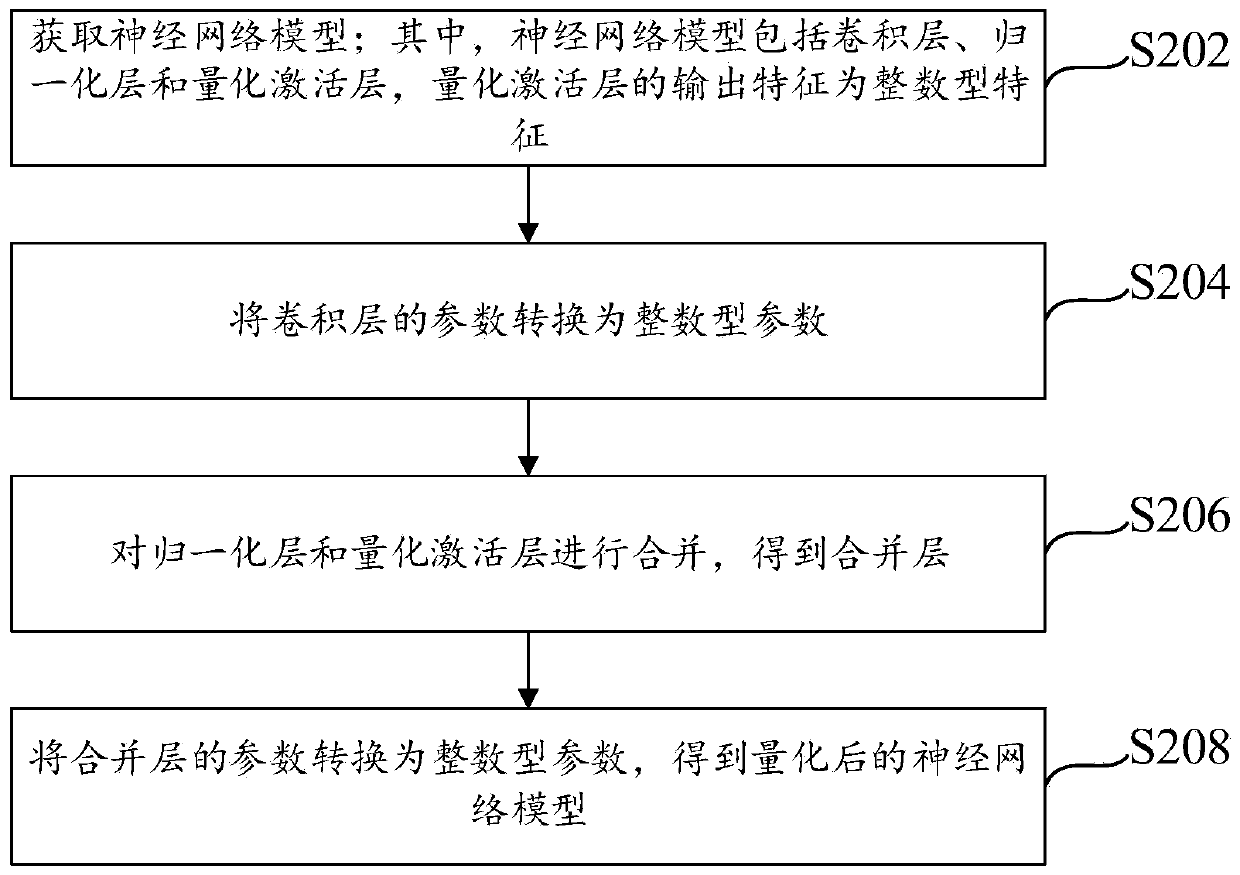

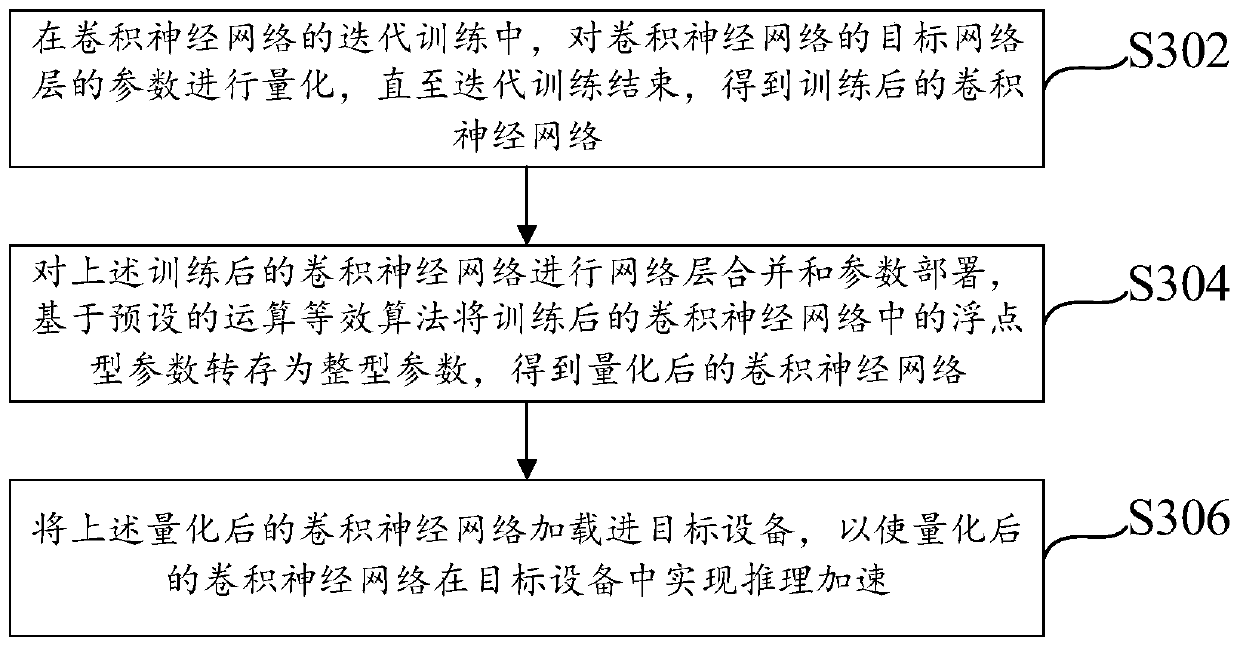

Neural network model quantization method and device, and electronic equipment

Pending | CN111401550A | Reduce operational complexity | Run fast | Neural architectures | Neural learning methods | Algorithm | Engineering

The invention provides a neural network model quantization method and device, and electronic equipment, in the technical field of machine learning. The method comprises: obtaining a neural network model that comprises a convolution layer, a normalization layer and a quantized activation layer, where the output features of the quantized activation layer are integers; converting the parameters of the convolution layer into integer parameters; merging the normalization layer and the quantized activation layer into a combined layer; and converting the parameters of the combined layer into integer parameters to obtain the quantized neural network model. The method reduces the computational complexity of the model, so that the quantized model runs faster on target devices that support only fixed-point or low-bit-width arithmetic.

Owner:MEGVII BEIJINGTECH CO LTD
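
One way a normalization layer followed by a quantized activation can collapse into a purely integer layer is to precompute, for each output level, the smallest integer convolution output that reaches it. The sketch below assumes a positive BN scale (`gamma > 0`), round-half-up activation quantization, and integer convolution outputs; the patent does not disclose its merging formula, so this threshold formulation is a swapped-in, illustrative technique.

```python
import math


def fold_bn_act_to_thresholds(gamma, beta, mean, std, act_scale, act_bits):
    """Fold BN + uniform quantized activation into integer thresholds.

    Activation level = clip(floor(bn(x) / act_scale + 0.5), 0, 2**act_bits - 1),
    with bn(x) = gamma * (x - mean) / std + beta. For integer x and gamma > 0,
    level >= q exactly when x >= thresholds[q - 1].
    """
    if gamma <= 0:
        raise ValueError("this sketch only handles gamma > 0")
    levels = 2 ** act_bits - 1
    return [math.ceil(mean + ((q - 0.5) * act_scale - beta) * std / gamma)
            for q in range(1, levels + 1)]


def merged_layer(x, thresholds):
    """Integer-only evaluation: the output level is the number of thresholds reached."""
    return sum(1 for t in thresholds if x >= t)


def reference(x, gamma, beta, mean, std, act_scale, act_bits):
    """Floating-point BN followed by the quantized activation, for comparison."""
    bn = gamma * (x - mean) / std + beta
    level = math.floor(bn / act_scale + 0.5)
    return max(0, min(2 ** act_bits - 1, level))


params = dict(gamma=0.5, beta=0.1, mean=2.0, std=1.5, act_scale=0.25, act_bits=2)
th = fold_bn_act_to_thresholds(**params)
print(th)  # [3, 3, 4]
```

At inference time only integer comparisons remain, which suits hardware that supports fixed-point operations only; a negative `gamma` would flip the comparisons and needs separate handling.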

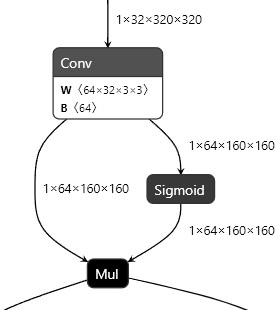

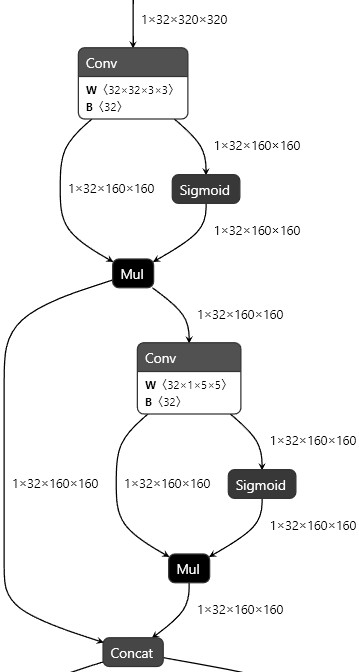

Image target detection method and system based on lightweight neural network model

Pending | CN114332666A | Reduce size | Run fast | Character and pattern recognition | Neural architectures | Pattern recognition | Mobile end

The invention provides an image target detection method and system based on a lightweight neural network model, in the technical field of image target detection, and addresses the inaccuracy of current image recognition. The method comprises: inputting the path of the picture or video to be examined; computing, with the lightweight neural network model, the confidence of every class in the current image; selecting the highest confidence to obtain the final recognition box; and drawing that box on the original image to complete detection. While preserving model precision, the method greatly increases inference speed, so the model can be deployed smoothly on small devices and mobile terminals and meets the real-time and accuracy requirements of smoking detection in everyday scenes.

Owner:QILU UNIV OF TECH
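
The selection step ("obtaining a final recognition frame by selecting the highest confidence coefficient") reduces to an arg-max over candidate boxes and their class scores. This is a minimal sketch; the candidate format, the `min_score` cut-off, and the class names are assumptions for illustration, and the model that produces the candidates is not shown.

```python
def select_detection(candidates, min_score=0.0):
    """Pick the (box, label, score) triple with the highest class confidence.

    candidates: list of (box, class_scores), where box = (x1, y1, x2, y2) and
    class_scores maps class name -> confidence. Returns None if nothing
    reaches min_score.
    """
    best = None
    for box, scores in candidates:
        label, score = max(scores.items(), key=lambda kv: kv[1])
        if score >= min_score and (best is None or score > best[2]):
            best = (box, label, score)
    return best


candidates = [
    ((0, 0, 10, 10), {"smoking": 0.3, "background": 0.6}),
    ((5, 5, 20, 20), {"smoking": 0.9, "background": 0.1}),
]
print(select_detection(candidates))  # ((5, 5, 20, 20), 'smoking', 0.9)
```

The chosen box would then be drawn onto the original frame; for multiple simultaneous targets, a non-maximum suppression step would replace this single arg-max.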

Composite insulator real-time segmentation method and system based on DeepLabV3+

Pending | CN114549563A | Reduce computing time | Reduce the amount of parameters | Image enhancement | Image analysis | Pattern recognition | Data set

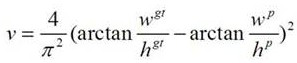

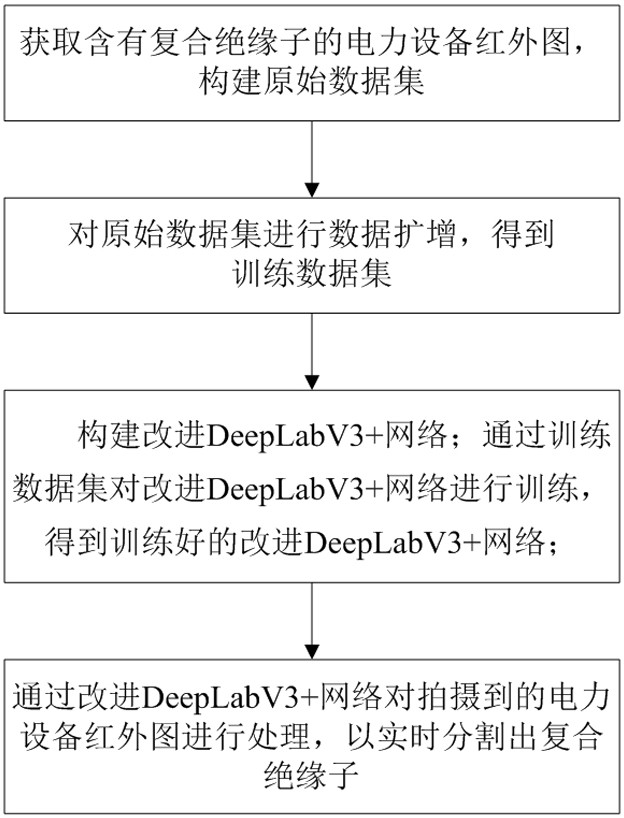

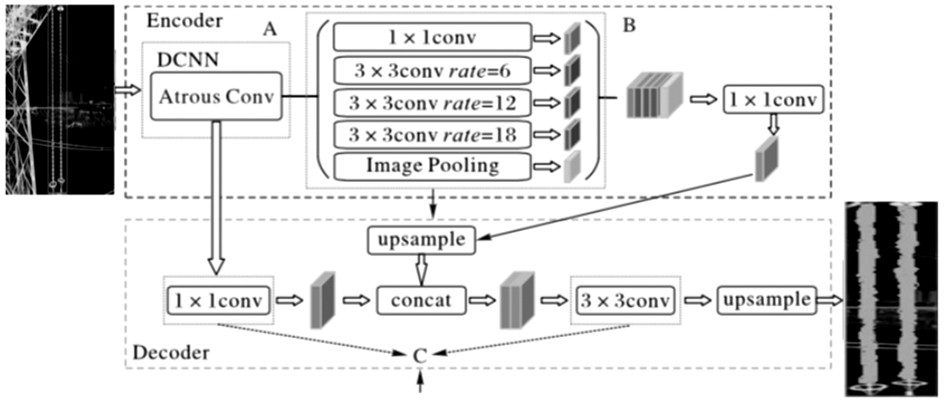

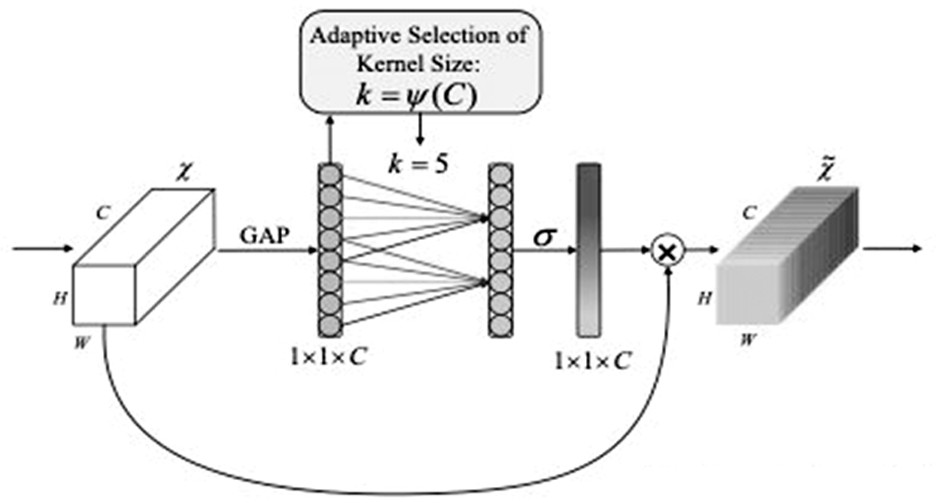

The invention relates to a composite insulator real-time segmentation method and system based on DeepLabV3+. The method comprises the following steps: S1, obtaining infrared images of power equipment containing composite insulators, taken during power inspection, and constructing an original data set; S2, augmenting the original data set to obtain a training data set; S3, constructing an improved DeepLabV3+ network by replacing the DeepLabV3+ backbone with the lightweight MobileNetV2 network to improve real-time performance, introducing the lightweight Efficient Channel Attention (ECA) module to realize local cross-channel interaction without dimensionality reduction, and adding a Point fine-segmentation module at the output of DeepLabV3+ as post-processing to further refine the semantic segmentation result, then training the improved network on the training data set; and S4, processing captured infrared images of power equipment with the trained improved DeepLabV3+ network to segment composite insulators in real time. The method and system improve both the real-time performance and the accuracy of composite insulator segmentation.

Owner:FUJIAN UNIV OF TECH
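
The ECA module mentioned in step S3 can be shown on a plain nested-list feature map: global average pooling per channel, a 1-D convolution across neighbouring channels (no dimensionality reduction), a sigmoid gate, and channel-wise rescaling. The adaptive kernel-size rule follows the ECA-Net paper (gamma = 2, b = 1); the conv weights here are placeholders rather than trained values, and how the patent places the module inside DeepLabV3+ is not shown.

```python
import math


def eca_kernel_size(channels, gamma=2, b=1):
    """Adaptive 1-D kernel size from ECA-Net: nearest odd |log2(C)/gamma + b/gamma|."""
    t = int(abs(math.log2(channels) / gamma + b / gamma))
    return t if t % 2 else t + 1


def eca(feature_map, conv_weights):
    """Efficient channel attention on a C x H x W nested list.

    1. Global average pooling gives one descriptor per channel.
    2. A zero-padded 1-D convolution (no bias) mixes neighbouring channels.
    3. A sigmoid gate rescales every channel by its attention weight.
    """
    C = len(feature_map)
    k = len(conv_weights)
    pad = k // 2
    pooled = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_map]
    attn = []
    for c in range(C):
        s = 0.0
        for j in range(k):
            idx = c + j - pad
            if 0 <= idx < C:
                s += conv_weights[j] * pooled[idx]
        attn.append(1.0 / (1.0 + math.exp(-s)))
    return [[[v * attn[c] for v in row] for row in feature_map[c]] for c in range(C)]


fm = [[[1.0, 2.0], [3.0, 4.0]], [[2.0, 2.0], [2.0, 2.0]]]
print(eca_kernel_size(64))  # 3
print(eca(fm, [0.0, 0.0, 0.0]))  # every value halved, since sigmoid(0) = 0.5
```

The module adds only `k` weights per insertion point, which is why it fits a real-time, MobileNetV2-based segmentation network.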

Neural network quantization method and system

Pending | CN111178514A | Save resources | Fast operation | Neural architectures | Neural learning methods | Forward propagation | Engineering

The embodiment of the invention provides a neural network quantization method and system. The method comprises: (1) obtaining the neural network parameters, fusing each convolution layer with its BN layer in the forward propagation of the original network to obtain new weights and biases to be quantized, and storing the activation output of each layer at the same time; (2) quantizing the fused parameters and the data distributions; and (3) building a new quantized network whose forward propagation uses the quantization parameters obtained in step (2). The method effectively balances the problems caused by a quantization scale that is too large or too small, and increases inference speed while saving hardware resources.

Owner:ASR SMART TECH CO LTD
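
Step (1), fusing a convolution with its BN layer, follows from rewriting the BN expression as a new weight and bias. The sketch shows it for one output channel of a 1x1 convolution (a dot product), followed by a simple max-abs int8 quantizer. The max-abs scale is an assumption and does not reproduce the patent's method for balancing over-large and over-small scales.

```python
import math


def fuse_bn(weights, bias, gamma, beta, mean, var, eps=1e-5):
    """Fold BN into the preceding layer for one output channel.

    y = gamma * (w.x + b - mean) / sqrt(var + eps) + beta
      = (k * w).x + (k * (b - mean) + beta),  where k = gamma / sqrt(var + eps)
    """
    k = gamma / math.sqrt(var + eps)
    return [k * w for w in weights], k * (bias - mean) + beta


def quantize_int8(values):
    """Symmetric int8 quantization with a max-abs scale (assumed scale choice)."""
    scale = max(abs(v) for v in values) / 127 or 1.0  # fall back to 1.0 for all-zero input
    return [max(-127, min(127, round(v / scale))) for v in values], scale


w_fused, b_fused = fuse_bn([0.5, -1.0], 0.2, gamma=2.0, beta=0.1, mean=0.3, var=4.0)
x = [1.0, 2.0]
fused_out = sum(wi * xi for wi, xi in zip(w_fused, x)) + b_fused
```

Because the fusion is exact algebra, the fused layer matches convolution-then-BN up to floating-point rounding; quantization error enters only at the `quantize_int8` step.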

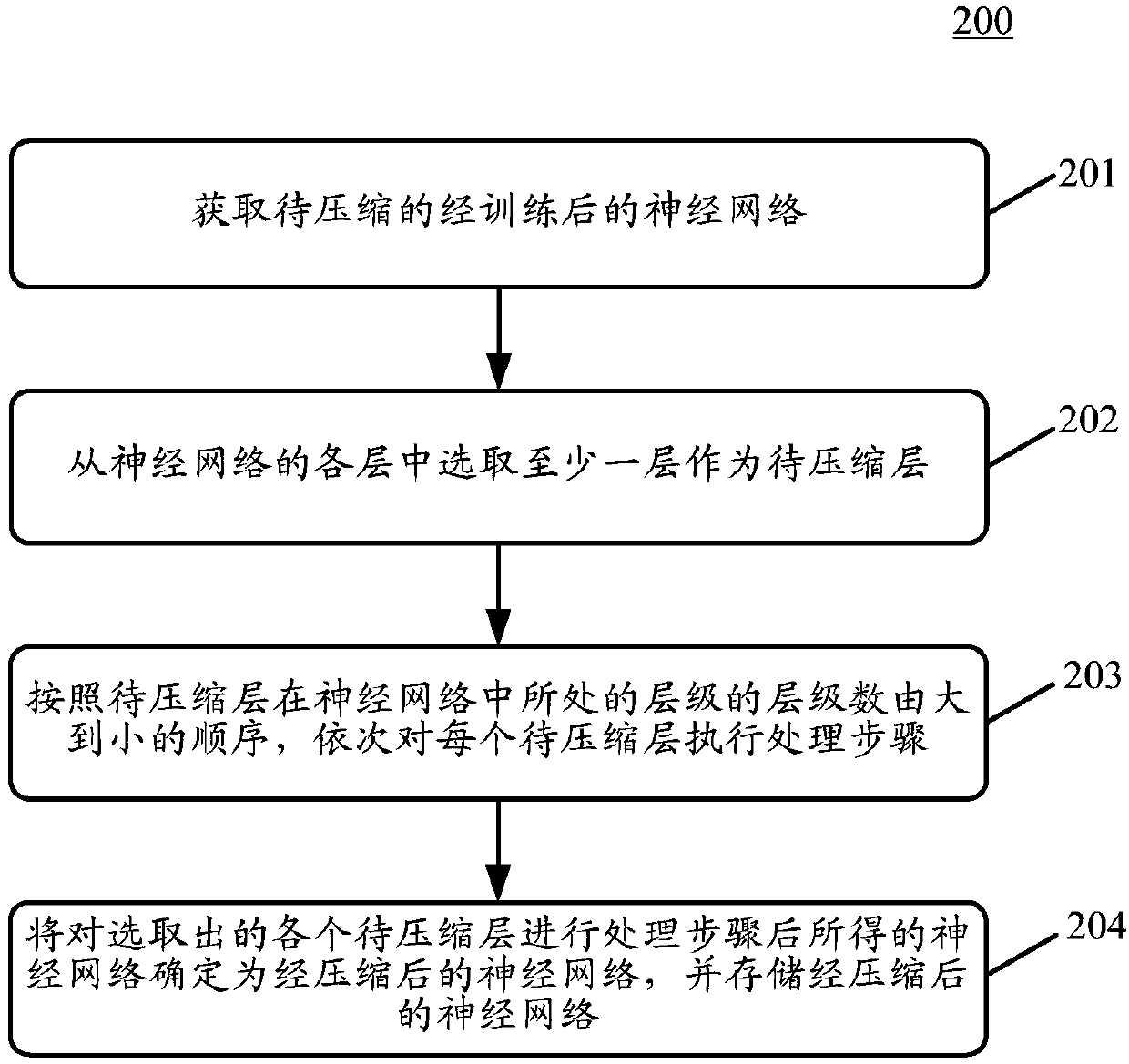

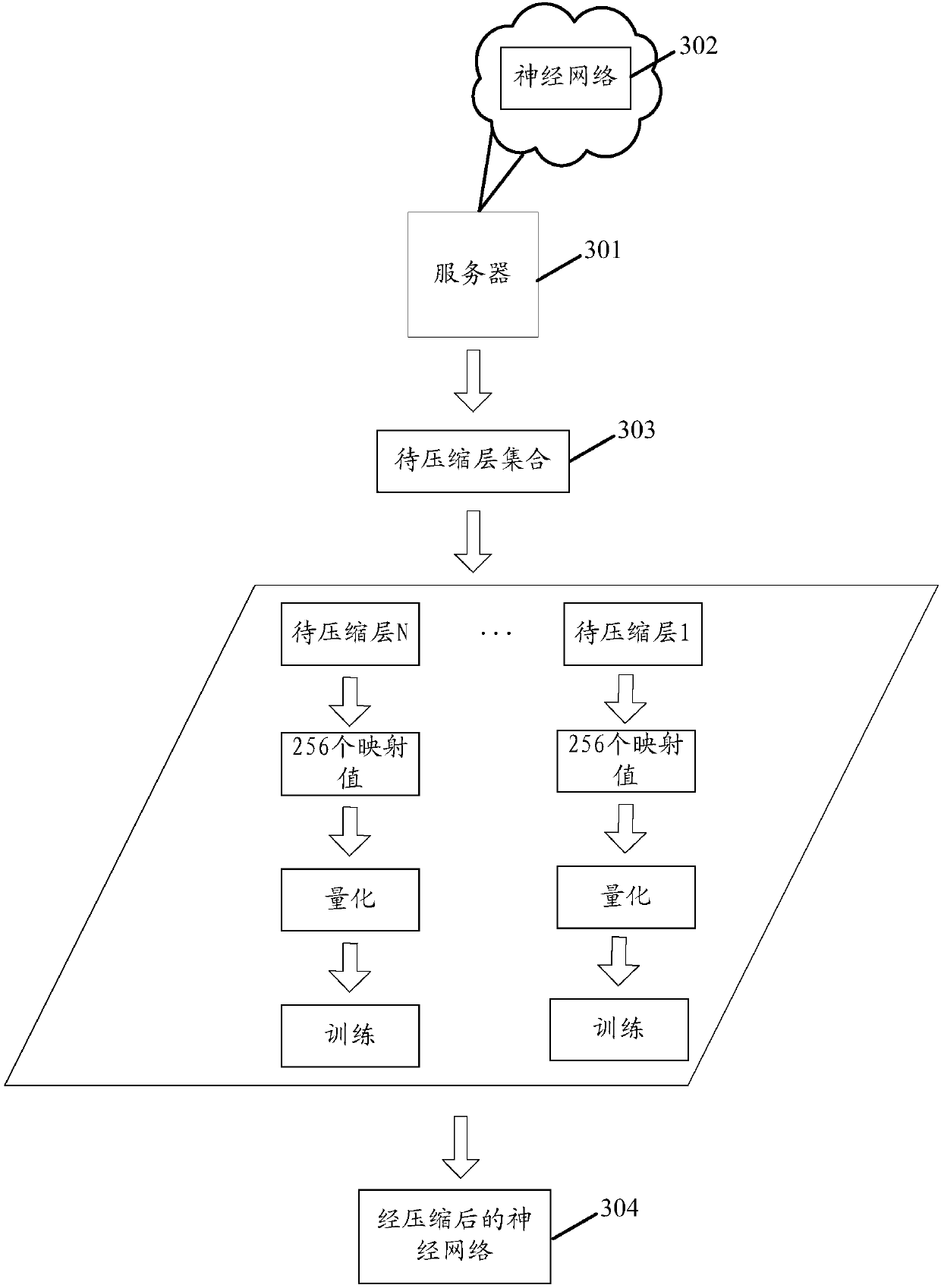

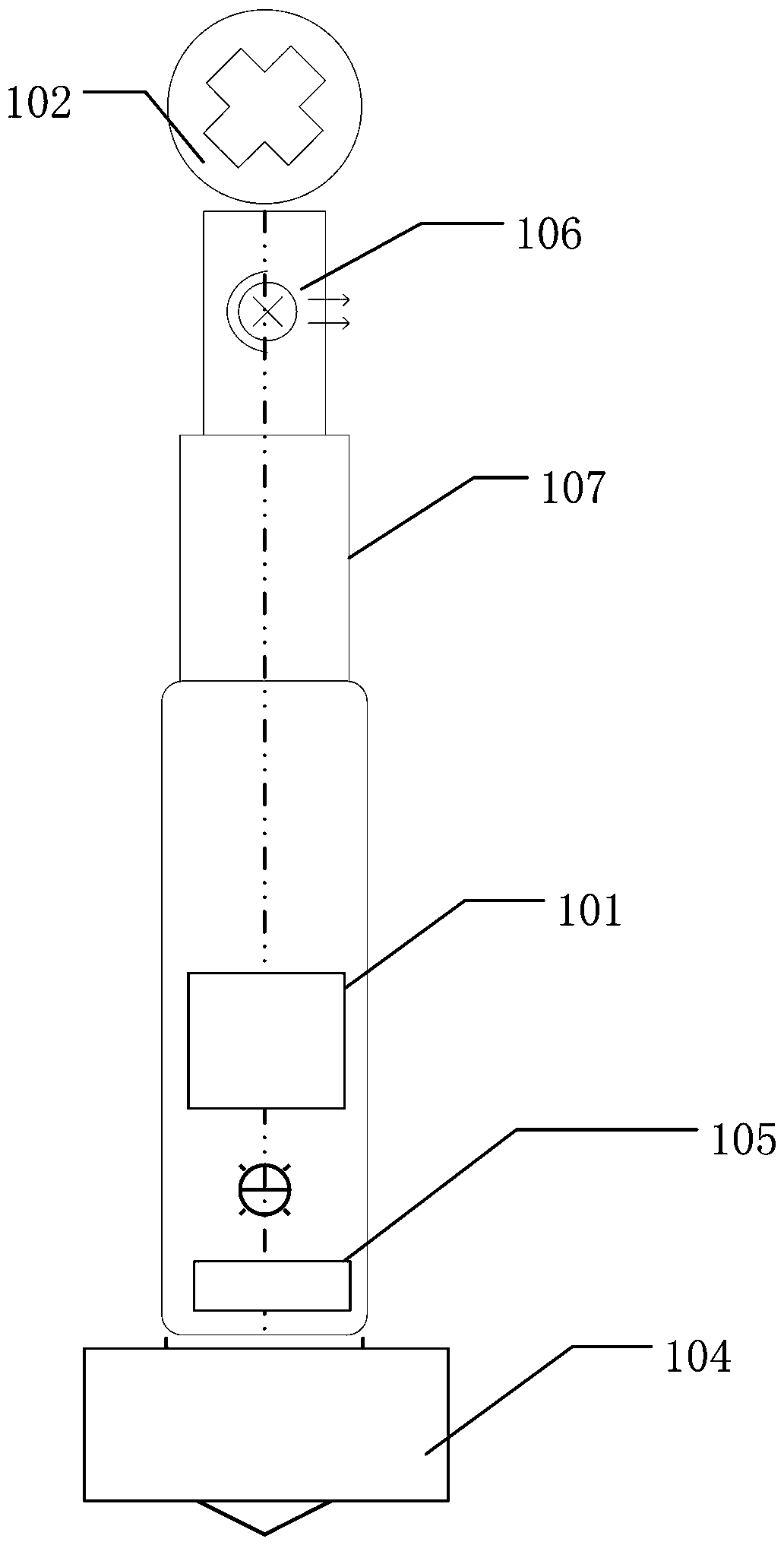

A method and apparatus for compressing a neural network

Pending | CN109993298A | Efficient compression | Neural architectures | Physical realisation | Nerve network | Quantized neural networks

The embodiment of the invention discloses a method and a device for compressing a neural network. In one specific embodiment, the method comprises: obtaining a trained neural network to be compressed; selecting at least one of its layers as a layer to be compressed; processing the layers to be compressed one at a time, in descending order of their layer index in the network, by quantizing the parameters of the layer based on a specified number and then training the quantized network on preset training samples with a machine learning method; and taking the network obtained after every selected layer has been processed as the compressed neural network, and storing it. The embodiment thereby achieves effective compression of the neural network.

Owner:BAIDU ONLINE NETWORK TECH (BEIJING) CO LTD
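
The layer-by-layer schedule can be sketched as a loop that visits the selected layers from deepest to shallowest, quantizes each one, and then retrains. The "specified number" is read here as the number of distinct quantization levels, snapped onto an evenly spaced grid; that reading, the function names, and the `fine_tune` callback (a stand-in for real retraining) are all hypothetical.

```python
def quantize_to_levels(values, num_levels):
    """Snap values onto num_levels evenly spaced points spanning [min, max]."""
    if num_levels < 2:
        raise ValueError("need at least two levels")
    lo, hi = min(values), max(values)
    if hi == lo:
        return list(values)  # already a single value
    step = (hi - lo) / (num_levels - 1)
    return [lo + round((v - lo) / step) * step for v in values]


def compress_layerwise(layers, selected, num_levels, fine_tune):
    """Quantize the selected layers in descending layer order, retraining after each.

    layers: dict mapping layer index -> list of weights (updated in place).
    fine_tune: hypothetical callback that retrains the whole network.
    Returns the order in which the layers were processed.
    """
    order = sorted(selected, reverse=True)
    for idx in order:
        layers[idx] = quantize_to_levels(layers[idx], num_levels)
        fine_tune(layers)
    return order


layers = {0: [0.0, 0.4, 1.0], 1: [-1.0, 0.1, 0.9, 1.0], 2: [2.0, 2.0]}
calls = []
order = compress_layerwise(layers, [0, 2, 1], 2, lambda net: calls.append(len(net)))
print(order)  # [2, 1, 0]
```

Processing the deepest layers first means that each retraining pass can still adjust the shallower, not-yet-quantized layers to compensate.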

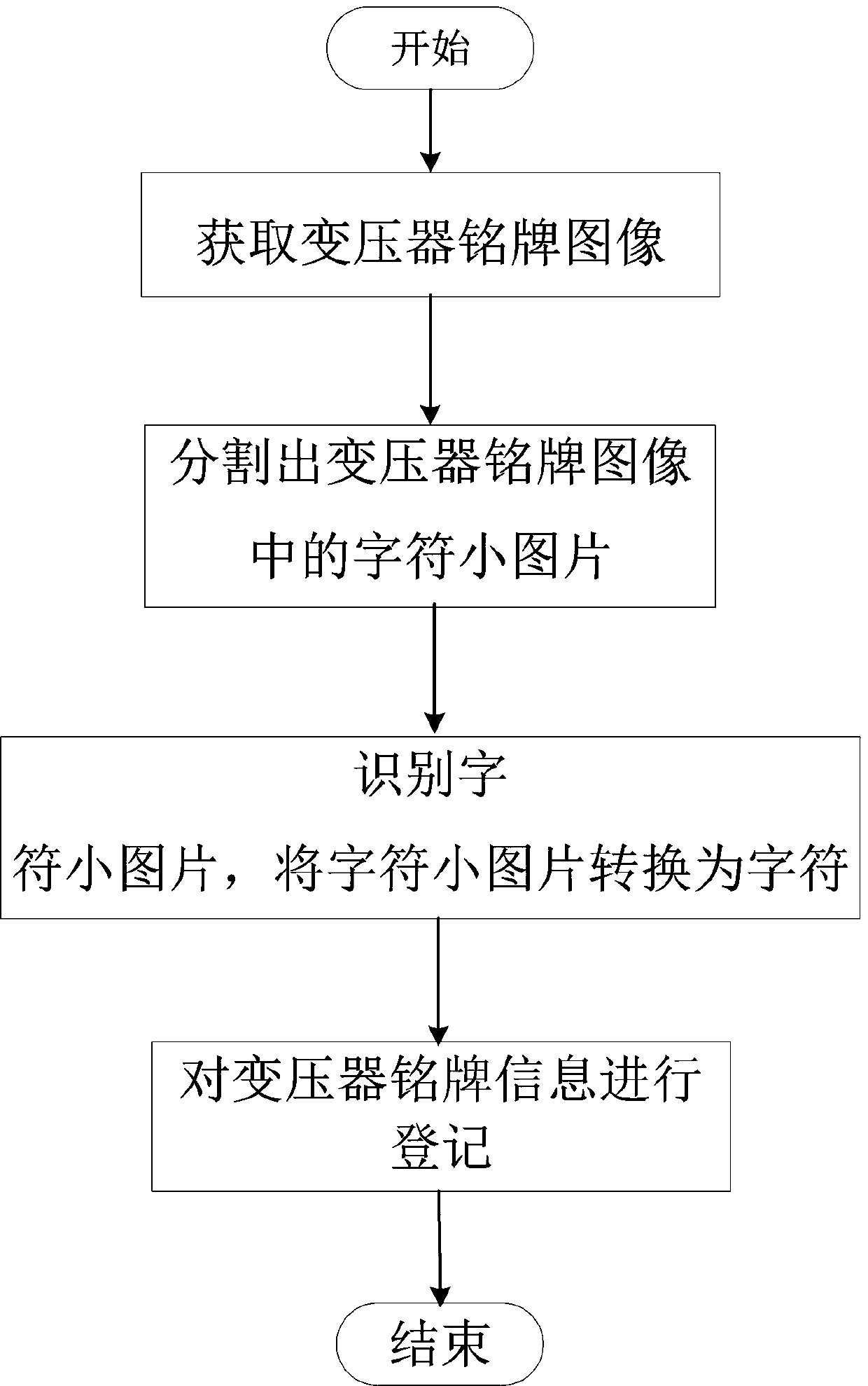

Transformer nameplate information acquisition method and intelligent acquisition system

Active | CN110895697A | Easy to check | Efficient identification | Neural architectures | Character recognition | Nerve network | Engineering

The invention discloses a transformer nameplate information acquisition method comprising the following steps: 1) acquiring an image of the transformer nameplate with camera equipment; 2) at the transformer image acquisition site, running a lightweight neural network image recognition program that segments the nameplate image into small character pictures; 3) recognizing the small character pictures from step 2 with a PCANet program and converting them into characters; and 4) registering the transformer nameplate information obtained in step 3. The invention also discloses two intelligent acquisition systems for transformer nameplate information. Because nameplate recognition is split into two stages after on-site image capture, the amount of transmitted image data is greatly reduced and character recognition is more efficient; the back-end recognition result is returned to the acquisition site, where it is easy to check, ensuring that the acquired nameplate information is accurate and error-free.

Owner:CHINA THREE GORGES UNIV


© 2025 PatSnap. All rights reserved.