Quantized neural network acceleration method based on field programmable gate array

This neural network and array technology, applied in the field of neural network-based image processing, addresses problems such as low energy efficiency and the difficulty of meeting both the high performance requirements of neural networks and the low energy consumption requirements of mobile terminals. Effects: reduced power consumption, fast inference, and reduced storage requirements.

Detailed Description of Embodiments

[0021] To help those skilled in the art understand the technical content of the present invention, the invention is further explained below with reference to the accompanying drawings.

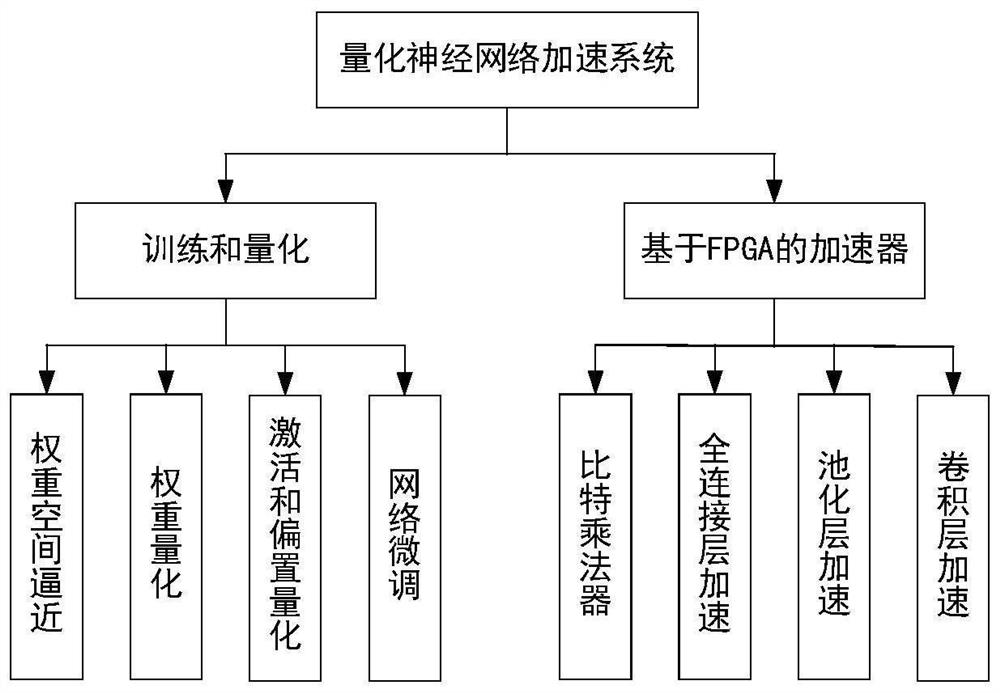

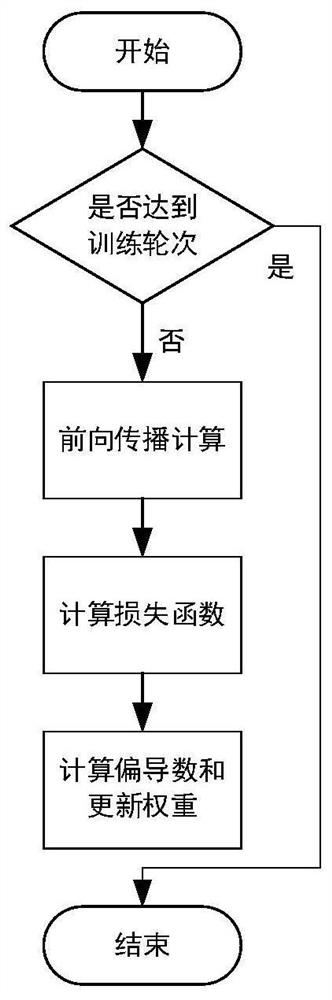

[0022] As shown in Figure 1, the method of the present invention comprises:

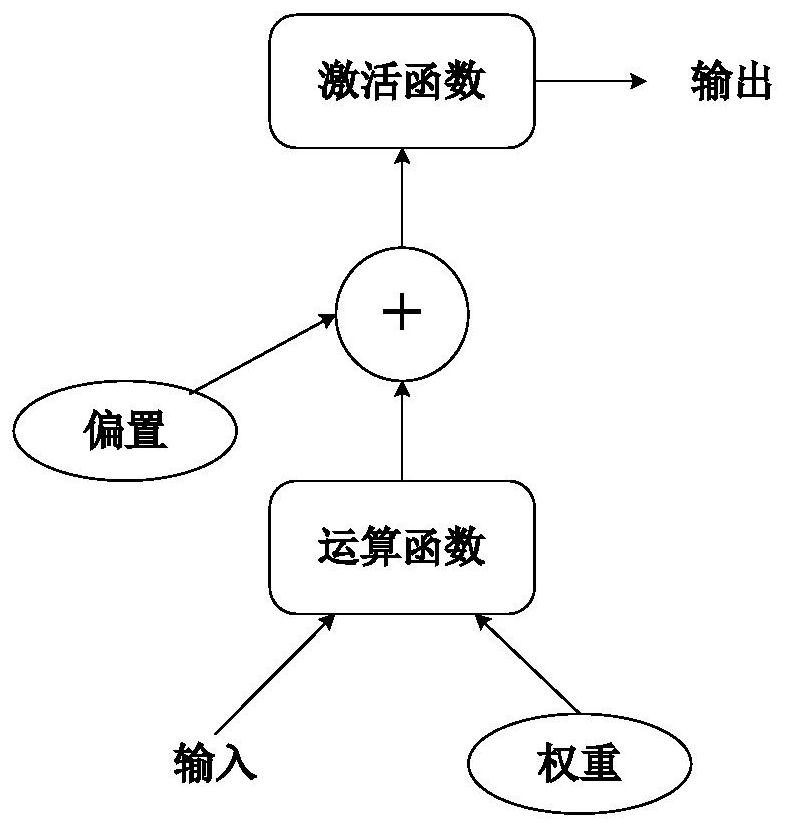

[0023] Step 1: Neural network weight space approximation. Given a neural network for image processing, the weights play an important role in the final result. Each layer of the neural network is represented as a computation graph: the input and the weights are combined by a convolution (CONV) or fully connected (FC) operation, the bias value is added, and the final output is obtained through the activation function. The original weight space is a continuous, complex real-valued space, whereas the quantized weight space is expected to contain only the three values 1, -1 and 0. Therefore, it is necessary to approximate the weight space to a spars...
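The weight space approximation in Step 1 can be sketched as follows. This is a minimal illustration under stated assumptions, not the method claimed in the patent. The excerpt is truncated before the actual approximation rule, so several details here are assumptions:

- The threshold rule (delta = 0.7 × mean |w|) is a heuristic borrowed from Ternary Weight Networks.
- The ReLU activation is assumed.
- The function names `ternarize`, `fc_neuron_ternary` and `fc_neuron_reference` are hypothetical.

The sketch maps a real-valued weight vector onto {-1, 0, 1}. It then evaluates one fully connected output (weighted sum, plus bias, then activation) using only additions and subtractions, because multiplying by -1, 0 or 1 needs no multiplier.

```python
from typing import List


def ternarize(weights: List[float], ratio: float = 0.7) -> List[int]:
    """Map real-valued weights onto the ternary set {-1, 0, 1}.

    Assumption: threshold delta = ratio * mean(|w|), a Ternary Weight
    Networks heuristic; the patent's own rule is not given in this excerpt.
    """
    if not weights:
        return []
    delta = ratio * sum(abs(w) for w in weights) / len(weights)
    # Large positive -> 1, large negative -> -1, small magnitude -> 0 (sparse)
    return [1 if w > delta else -1 if w < -delta else 0 for w in weights]


def fc_neuron_ternary(x: List[float], w_t: List[int], bias: float) -> float:
    """One FC output, ReLU(sum_i w_i * x_i + bias), using add/subtract only."""
    acc = bias
    for xi, wi in zip(x, w_t):
        if wi == 1:
            acc += xi      # weight 1: add the input
        elif wi == -1:
            acc -= xi      # weight -1: subtract the input
        # weight 0: the input is skipped entirely
    return max(0.0, acc)   # assumed activation: ReLU


def fc_neuron_reference(x: List[float], w_t: List[int], bias: float) -> float:
    """Same output computed with ordinary multiplications, for comparison."""
    return max(0.0, sum(xi * wi for xi, wi in zip(x, w_t)) + bias)
```

For example, `ternarize([0.9, -0.05, -1.2, 0.3, 0.02, -0.6])` yields `[1, 0, -1, 0, 0, -1]`. Only the two largest-magnitude negative weights and the largest positive weight survive, and the other three become zeros that the ternary neuron skips. The threshold value is a free design choice. A larger `ratio` produces more zeros, which gives more sparsity but a coarser approximation.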
