Neural network quantization method and device, and electronic device

A neural network quantization technology, applied in the field of neural network quantization methods, devices, and electronic equipment, that addresses problems such as training difficulty, insufficient expressive ability, and degraded recognition accuracy of the neural network.

Examples

Embodiment 1

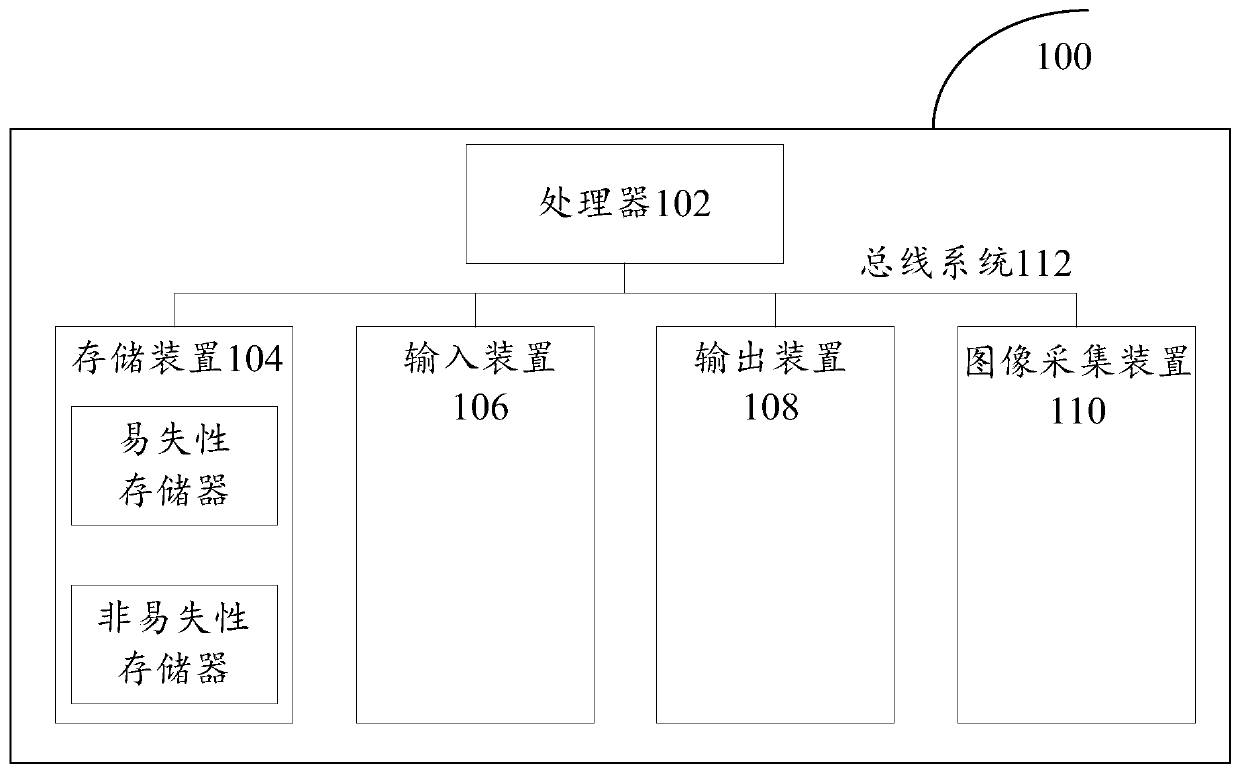

[0035] First, an example electronic device 100 for implementing the neural network quantization method and device according to an embodiment of the present invention is described with reference to Figure 1.

[0036] Figure 1 is a schematic structural diagram of the electronic device. The electronic device 100 includes one or more processors 102, one or more storage devices 104, an input device 106, an output device 108, and an image acquisition device 110, which are interconnected through a bus system 112 and/or other forms of connection mechanisms (not shown). It should be noted that the components and structure of the electronic device 100 shown in Figure 1 are exemplary only, not limiting; the electronic device may have other components and structures as required.

[0037] The processor 102 can be implemented in at least one of the following hardware forms: a digital signal processor (DSP), a field-programmable gate array (FPGA), and a programmable logic arr...

Embodiment 2

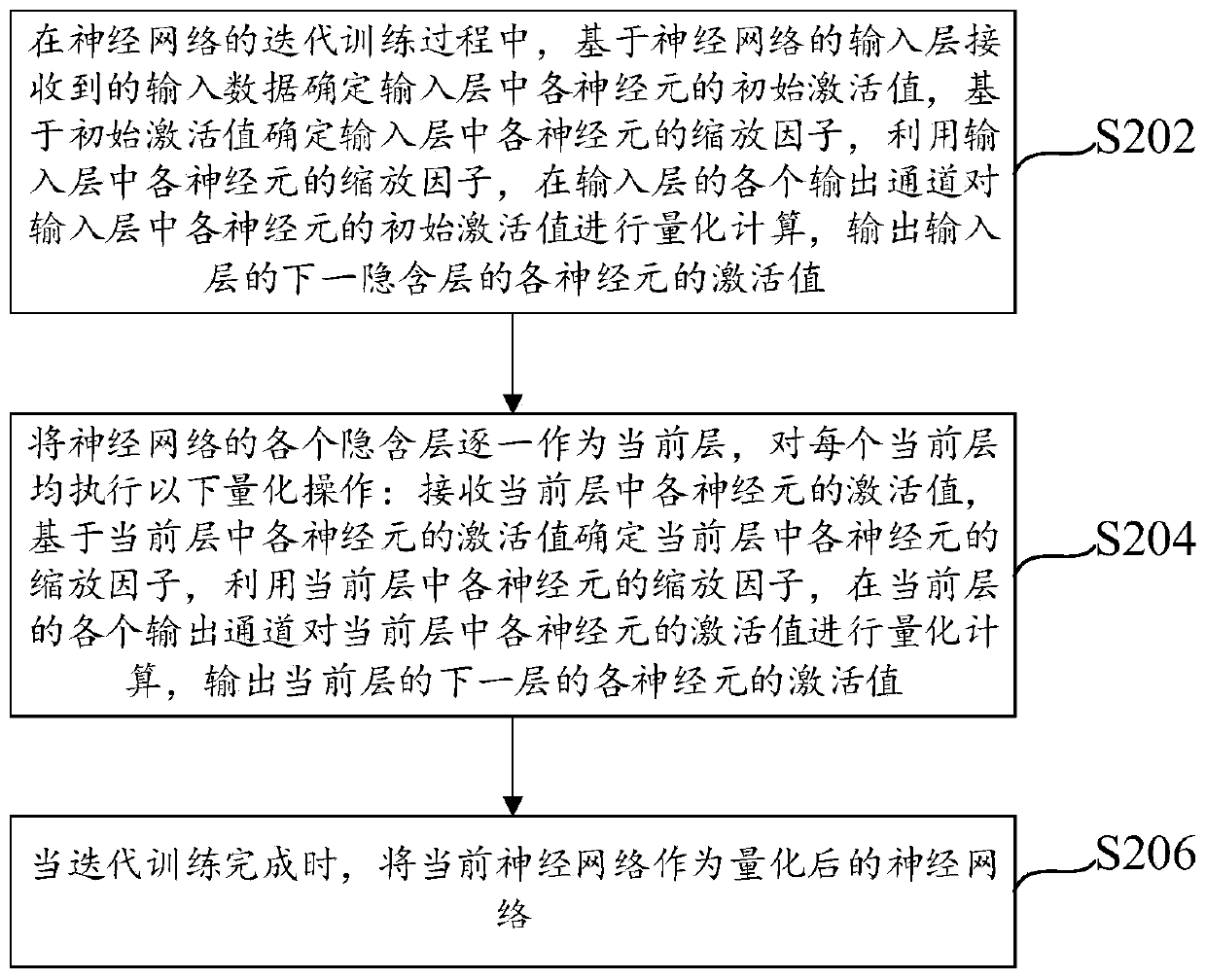

[0044] Referring to the flow chart of the neural network quantization method shown in Figure 2, the method can be executed by an electronic device such as the one described above. In one embodiment, the electronic device may be a processing device (such as a server or computer) configured with a neural network model. The method mainly includes the following steps S202 to S206:

[0045] Step S202: during the iterative training of the neural network, determine the initial activation value of each neuron in the input layer based on the input data received by the input layer; determine the scaling factor of each neuron in the input layer based on its initial activation value; using these scaling factors, quantize the initial activation values of the input-layer neurons in each output channel of the input layer, and output the activation value of each neuron in the hidden layer that follows the input layer. ...
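Step S202 states that each channel is quantized with its own scaling factor but does not specify the quantizer. As a rough illustration only, the sketch below assumes a symmetric uniform k-bit quantizer applied channel by channel, with each channel's scaling factor taken from its maximum absolute activation. The names `quantize_per_channel` and `max_abs_scales`, the bit width, and the max-abs rule are hypothetical stand-ins, not the patent's method; the fully-connected-layer embodiment described later derives the scaling factors differently.

```python
from typing import List


def max_abs_scales(acts: List[List[float]], bits: int = 4) -> List[float]:
    """Hypothetical per-channel scale: max |activation| / largest integer level."""
    qmax = 2 ** (bits - 1) - 1  # e.g. 7 for a signed 4-bit grid
    scales = []
    for channel in acts:
        m = max(abs(x) for x in channel)
        # An all-zero channel gets scale 1.0 to avoid division by zero.
        scales.append(m / qmax if m > 0 else 1.0)
    return scales


def quantize_per_channel(
    acts: List[List[float]], scales: List[float], bits: int = 4
) -> List[List[float]]:
    """Fake-quantize each channel with that channel's scaling factor.

    Assumption: symmetric uniform quantization with round-to-nearest
    and clipping; the source only says a per-neuron scale is used.
    """
    qmax = 2 ** (bits - 1) - 1
    out = []
    for channel, s in zip(acts, scales):
        q_channel = []
        for x in channel:
            q = round(x / s)              # map onto the integer grid
            q = max(-qmax, min(qmax, q))  # clip to the representable range
            q_channel.append(q * s)       # dequantize back to floating point
        out.append(q_channel)
    return out
```

For example, with `acts = [[0.7, -0.32, 0.1], [2.0, 1.2, -2.0]]` and 4 bits, the first channel gets a scale of about 0.1 and `-0.32` is mapped to about `-0.3`, while the second channel uses its own coarser scale of 2/7. Keeping one scale per channel lets channels with very different activation ranges share the same bit width.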

Implementation 1

[0070] Implementation 1: In this implementation, the hidden layers of the neural network include a fully connected layer.

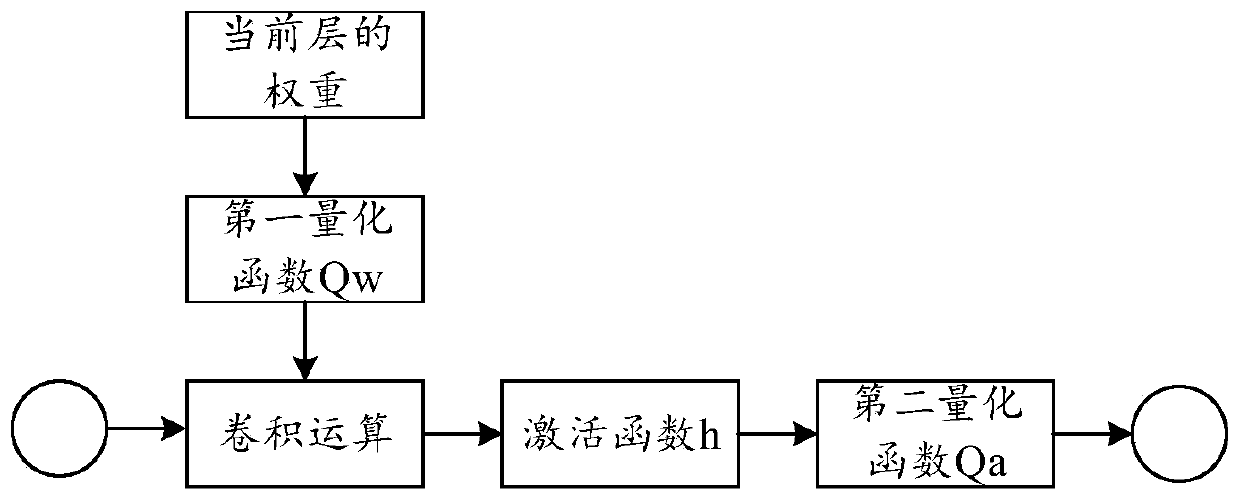

[0071] Perform the following operations on the intermediate activation values of each neuron in the current layer: perform a global average pooling operation on the intermediate activation values to obtain a pooling result; obtain the first floating-point weight of the first fully connected layer in the neural network, and input the pooling result and the first floating-point weight into a preset second nonlinear activation function; obtain the second floating-point weight of the second fully connected layer in the neural network, and input the output of the second nonlinear activation function, together with the second floating-point weight, into a preset third nonlinear activation function to obtain the scaling factor of each neuron in the current layer; wherein the first fully connected layer and the second fully connected layer are any two layers in t...
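The scaling-factor computation in [0071] has the same shape as a squeeze-and-excitation block: pool, fully connected layer, nonlinearity, fully connected layer, nonlinearity. A minimal sketch follows, assuming ReLU for the "second nonlinear activation function", sigmoid for the "third", and bias-free fully connected layers implemented as matrix-vector products. The source names neither activation function, and every identifier here (`fc_scaling_factors`, `w1`, `w2`, and the helpers) is hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def global_avg_pool(feature_maps: List[List[float]]) -> List[float]:
    """feature_maps[c] holds the flattened spatial activations of channel c."""
    return [sum(ch) / len(ch) for ch in feature_maps]


def matvec(w: Matrix, x: List[float]) -> List[float]:
    """Bias-free fully connected layer: one output per row of w."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]


def relu(v: List[float]) -> List[float]:
    return [max(0.0, a) for a in v]


def sigmoid(v: List[float]) -> List[float]:
    return [1.0 / (1.0 + math.exp(-a)) for a in v]


def fc_scaling_factors(
    feature_maps: List[List[float]], w1: Matrix, w2: Matrix
) -> List[float]:
    """Per-channel scaling factors from intermediate activations.

    w1: floating-point weight of the first fully connected layer.
    w2: floating-point weight of the second fully connected layer.
    """
    pooled = global_avg_pool(feature_maps)  # global average pooling result
    hidden = relu(matvec(w1, pooled))       # assumed "second" nonlinearity
    return sigmoid(matvec(w2, hidden))      # assumed "third" nonlinearity
```

With `feature_maps = [[1.0, 3.0], [-2.0, 0.0]]`, an identity `w1`, and `w2 = [[0.0, 0.0], [1.0, 0.0]]`, the pooled vector is `[2.0, -1.0]` and the resulting scaling factors are `0.5` and roughly `0.881`. Under the sigmoid assumption every factor lies in (0, 1), so it can be multiplied into the corresponding channel before quantization.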

© 2025 PatSnap. All rights reserved.