Structured pruning method and device for lightweight neural network, medium and equipment

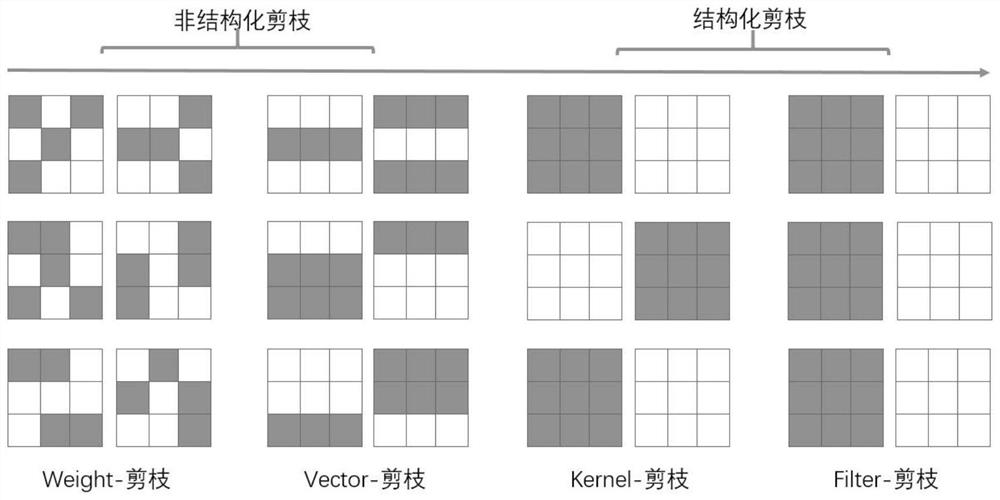

A neural network and structured technology, applied in neural learning methods, biological neural network models, instruments, etc., can solve the problems of large pruning granularity, too much pruning, too little pruning, etc., to achieve less storage space, reduce Video memory space, the effect of reducing network redundancy

- Summary

- Abstract

- Description

- Claims

- Application Information

AI Technical Summary

Problems solved by technology

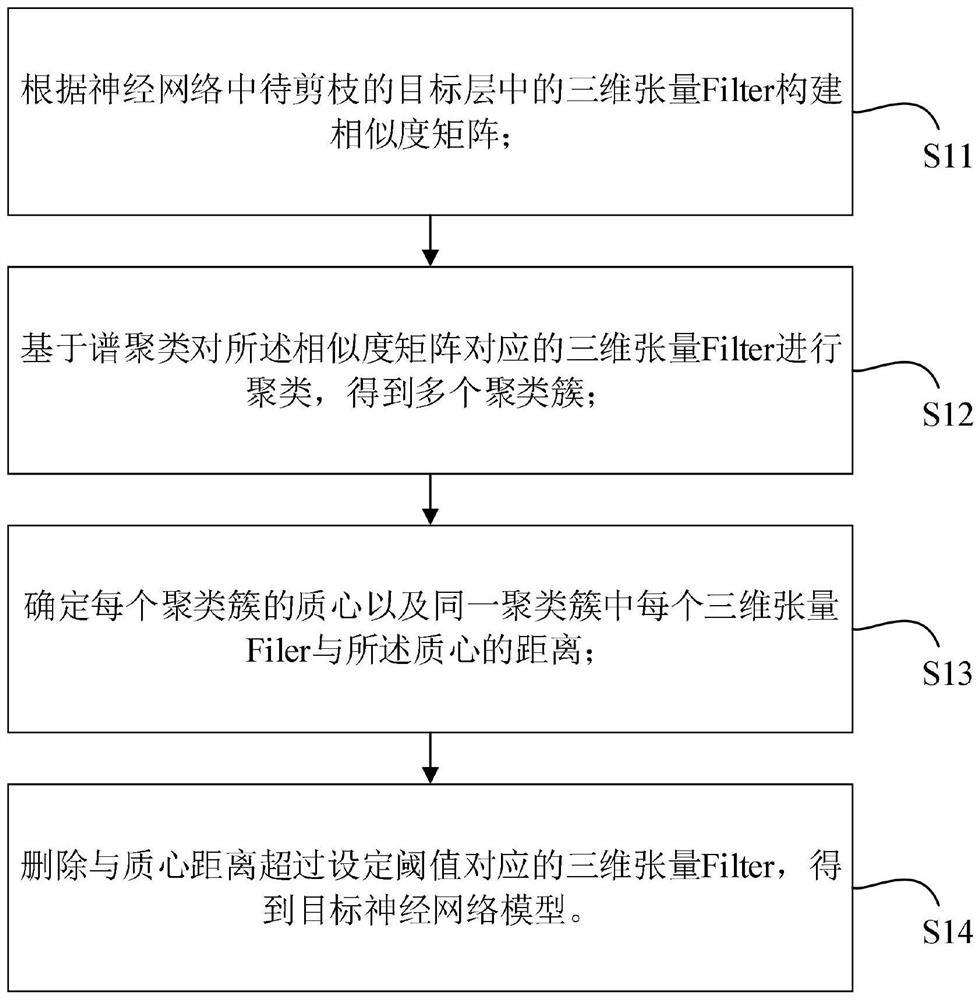

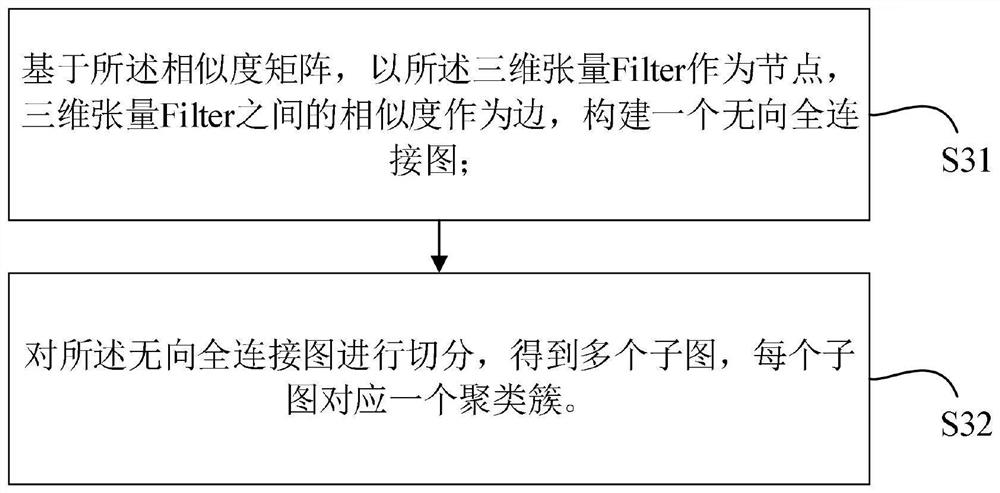

Method used

Image

Examples

Embodiment Construction

[0074] Embodiments of the present invention are described below through specific examples, and those skilled in the art can easily understand other advantages and effects of the present invention from the content disclosed in this specification. The present invention can also be implemented or applied through other different specific implementation modes, and various modifications or changes can be made to the details in this specification based on different viewpoints and applications without departing from the spirit of the present invention. It should be noted that, in the case of no conflict, the following embodiments and features in the embodiments can be combined with each other.

[0075] It should be noted that the diagrams provided in the following embodiments are only schematically illustrating the basic ideas of the present invention, and only the components related to the present invention are shown in the diagrams rather than the number, shape and shape of the compo...

PUM

Login to View More

Login to View More Abstract

Description

Claims

Application Information

Login to View More

Login to View More - R&D

- Intellectual Property

- Life Sciences

- Materials

- Tech Scout

- Unparalleled Data Quality

- Higher Quality Content

- 60% Fewer Hallucinations

Browse by: Latest US Patents, China's latest patents, Technical Efficacy Thesaurus, Application Domain, Technology Topic, Popular Technical Reports.

© 2025 PatSnap. All rights reserved.Legal|Privacy policy|Modern Slavery Act Transparency Statement|Sitemap|About US| Contact US: help@patsnap.com