Neural network compilation method for an integrated storage-computing platform

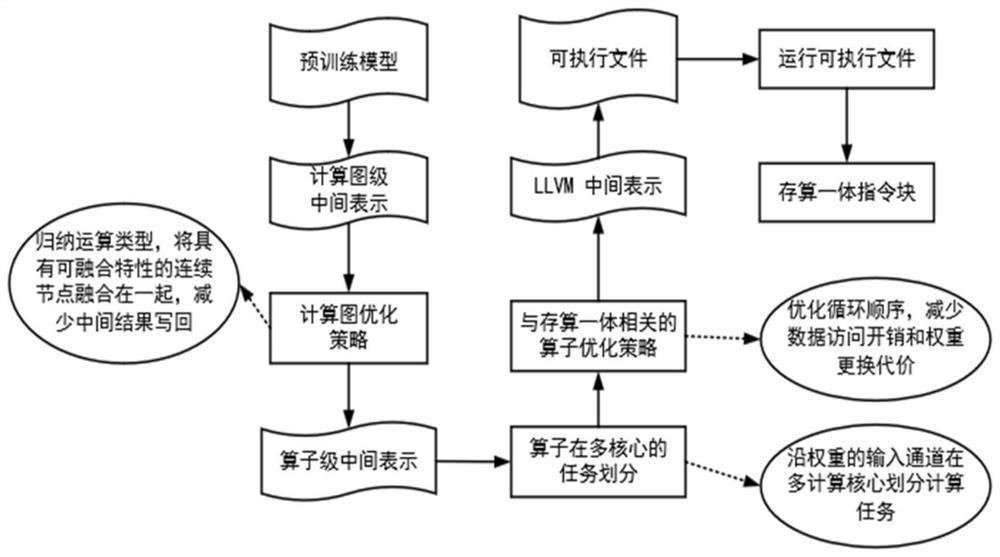

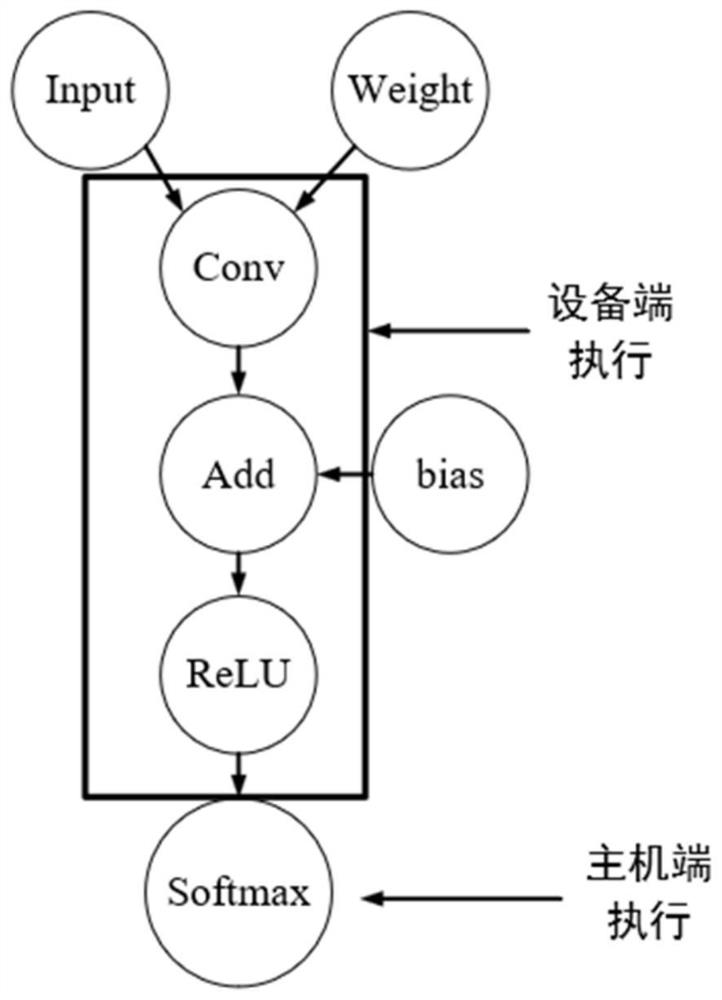

A neural network compilation method applied in the field of integrated storage and computing. It addresses problems such as difficult mapping, low hardware execution efficiency, and the failure to consider weight updates, and thereby reduces the number of weight remappings, balances the computing load, and improves parallel efficiency.

Embodiment Construction

[0049] The following describes several preferred embodiments of the present invention with reference to the accompanying drawings, so as to make the technical content clearer and easier to understand. The present invention can be embodied in many different forms, and its protection scope is not limited to the embodiments mentioned herein.

[0050] In the drawings, components with the same structure are denoted by the same numerals, and components with similar structures or functions are denoted by similar numerals. The size and thickness of each component shown in the drawings are shown arbitrarily, and the present invention does not limit the size and thickness of each component. In order to make the illustration clearer, the thickness of parts is appropriately exaggerated in some places in the drawings.

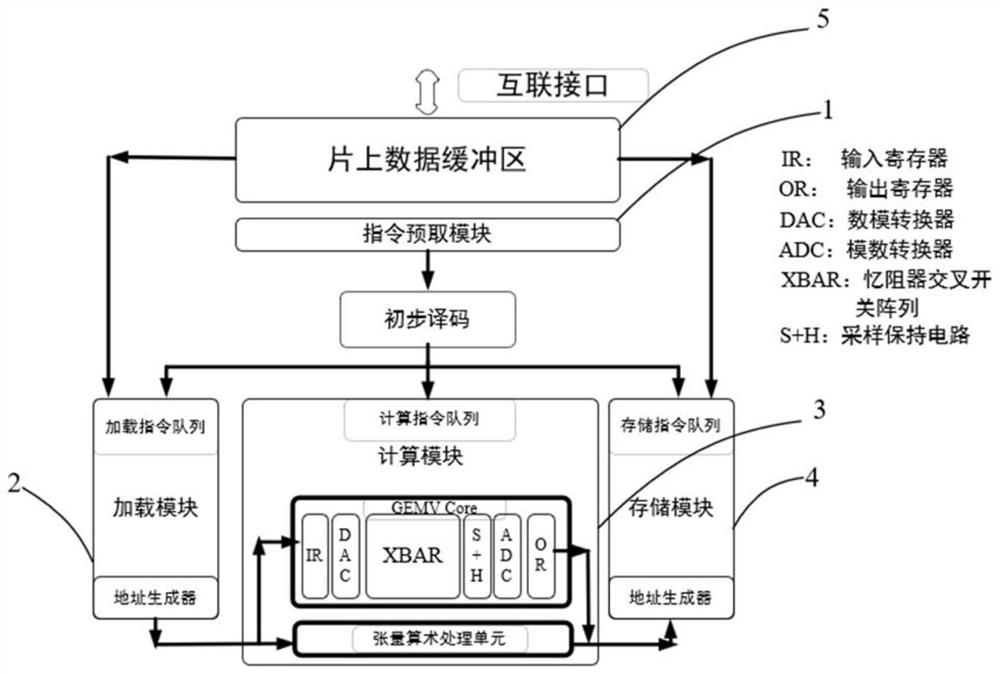

[0051] The Core hardware structure of the integrated storage-computing accelerator shown in Figure 2 is taken as an example to illustra...
