End-to-end unsupervised optical flow estimation method based on event camera

An unsupervised, event-based technique in the field of computer vision. It addresses three problems: raw event data are unsuitable as direct network input, energy consumption is high, and raw event data lack ground-truth optical flow. It achieves the effect of improving network performance.


Examples

Embodiment 1

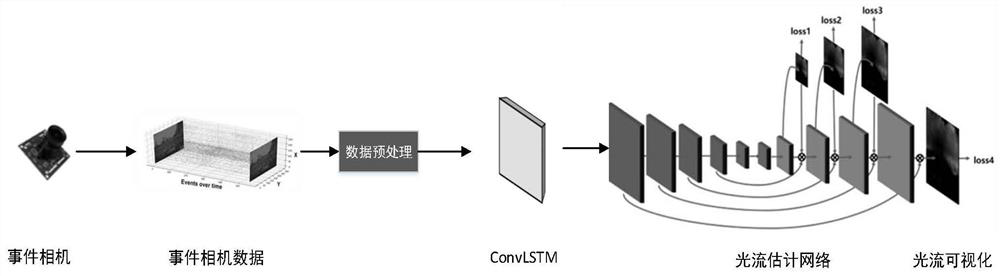

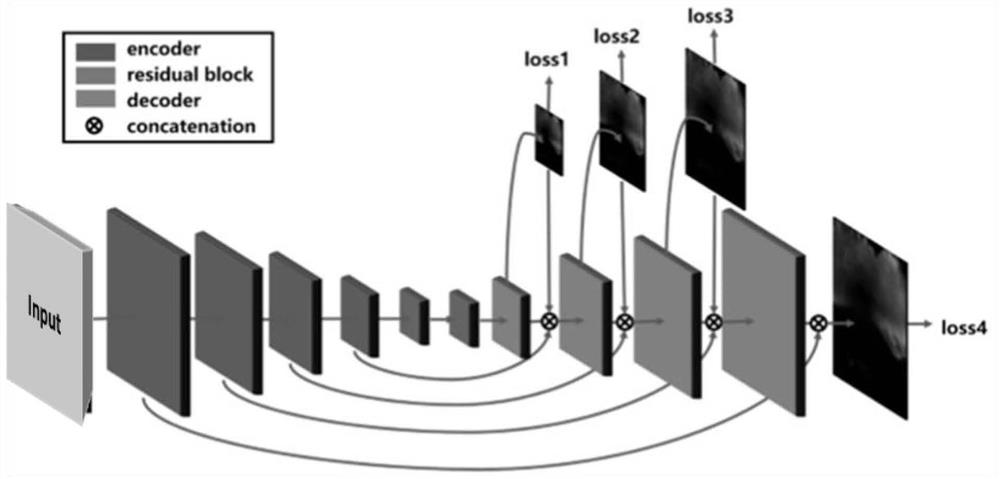

[0025] Example 1: Referring to Figure 1, this embodiment provides an end-to-end unsupervised optical flow estimation method based on an event camera. As shown in Figure 2, the method includes the following steps:

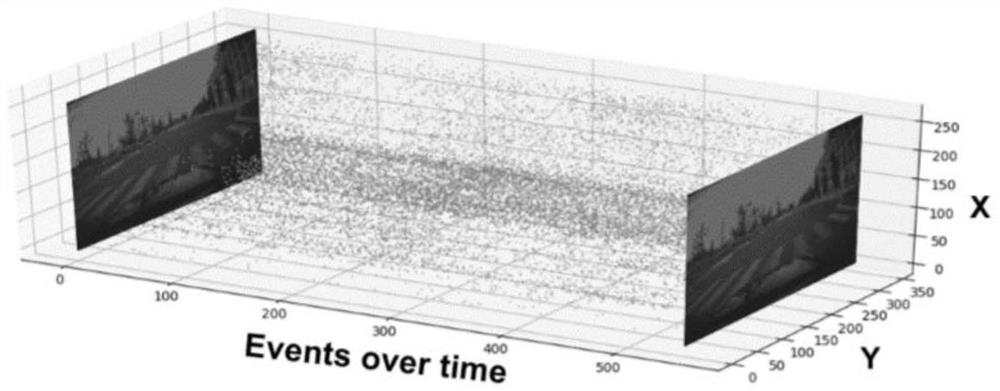

[0026] Step 1. Obtain the event-camera optical flow estimation dataset MVSEC: download the raw ROS bag packages from the dataset homepage and extract the event stream data and the grayscale frame data. A single event contains pixel coordinates (x, y), a timestamp t_e and a polarity p. A grayscale frame contains a timestamp t_i, an image height H_i and an image width W_i. Figure 1 visualizes the event camera output.
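The data items listed in Step 1 can be sketched as plain records. This is a minimal illustration only. The class names, the ±1 polarity encoding and the `in_bounds` helper are hypothetical and are not taken from the patent or from any MVSEC tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """One event from Step 1: pixel coordinates, timestamp t_e and polarity p."""
    x: int
    y: int
    t: float  # timestamp t_e
    p: int    # polarity; assumed here to be encoded as +1 / -1 (hypothetical)


@dataclass(frozen=True)
class GrayFrame:
    """Metadata of one grayscale frame: timestamp t_i, height H_i and width W_i."""
    t: float
    height: int
    width: int


def in_bounds(ev: Event, frame: GrayFrame) -> bool:
    """Hypothetical sanity check: the event's pixel must lie inside the frame area."""
    return 0 <= ev.x < frame.width and 0 <= ev.y < frame.height
```

For example, with `GrayFrame(t=0.0, height=260, width=346)`, an event at (10, 20) is in bounds, while one at x = 346 is not.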

[0027] Step 2. First, preprocess the dataset. Discard the data recorded before the first grayscale frame. Take the events between grayscale frames $I_{t_i}$ and $I_{t_{i+6}}$ as one sample, where $t_i$ is the timestamp of the corresponding grayscale frame, and convert the event timestamps of each sample to seconds. For data augmentation, the second sample is taken from $I_{t_{i+1}}$ to $I_{t_{i+7}}$. In this cla...
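The sample slicing in Step 2 can be sketched as a sliding window over grayscale frame timestamps. Each window spans six frame intervals and advances by one frame, which produces the augmented, overlapping samples. This sketch rests on several assumptions that do not come from the patent:

* Events are sorted by time.
* Each window is half-open, [t_i, t_{i+6}).
* Frame and event timestamps share the same raw clock.
* The hypothetical `time_scale` parameter converts raw timestamps to seconds. Its default assumes nanoseconds.

The function name `make_samples` is also hypothetical.

```python
from bisect import bisect_left
from typing import List, Sequence, Tuple

# (x, y, t, p); t is in raw clock units until converted to seconds.
EventTuple = Tuple[int, int, float, int]


def make_samples(events: Sequence[EventTuple], frame_times: Sequence[float],
                 span: int = 6, stride: int = 1,
                 time_scale: float = 1e-9) -> List[List[EventTuple]]:
    """Slice a time-sorted event stream into samples of `span` frame intervals.

    Sample k covers [frame_times[k*stride], frame_times[k*stride + span]).
    `time_scale` (hypothetical) converts raw timestamps to seconds.
    """
    times = [e[2] for e in events]
    samples: List[List[EventTuple]] = []
    # Every window starts at a frame timestamp, so events recorded before the
    # first grayscale frame never enter any sample.
    for start in range(0, len(frame_times) - span, stride):
        lo = bisect_left(times, frame_times[start])
        hi = bisect_left(times, frame_times[start + span])
        # Convert this sample's event timestamps to seconds.
        samples.append([(x, y, t * time_scale, p) for (x, y, t, p) in events[lo:hi]])
    return samples
```

Setting `stride=1` reproduces the augmentation described above: the first sample runs from frame i to frame i+6, the second from frame i+1 to frame i+7, and so on. Consecutive samples therefore share five of their six frame intervals.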
