Neural network filter pruning method based on batch feature heat map

A neural network filter pruning technique, applied in the fields of neural computing optimization, artificial intelligence, and computer vision, that addresses problems with model accuracy and model size. It compresses the model, improves inference speed, and prunes filters efficiently.



Embodiment Construction

[0045] To illustrate the purpose, technical solutions, and advantages of the present invention more clearly, the invention is described in further detail below with reference to the accompanying drawings and specific embodiments:

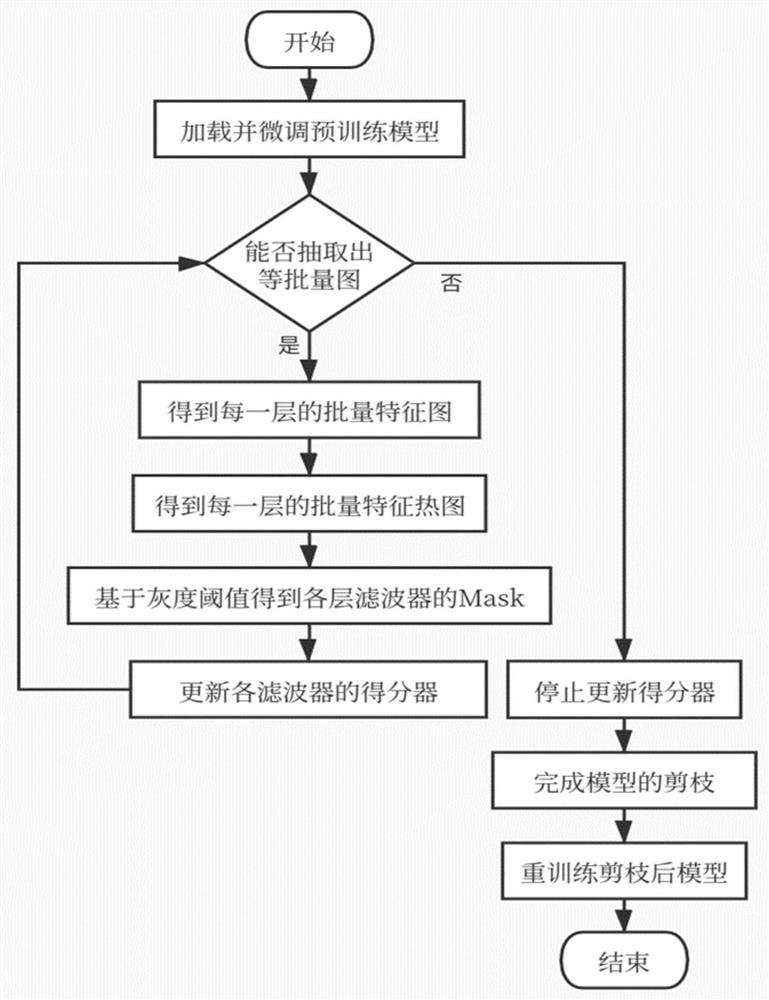

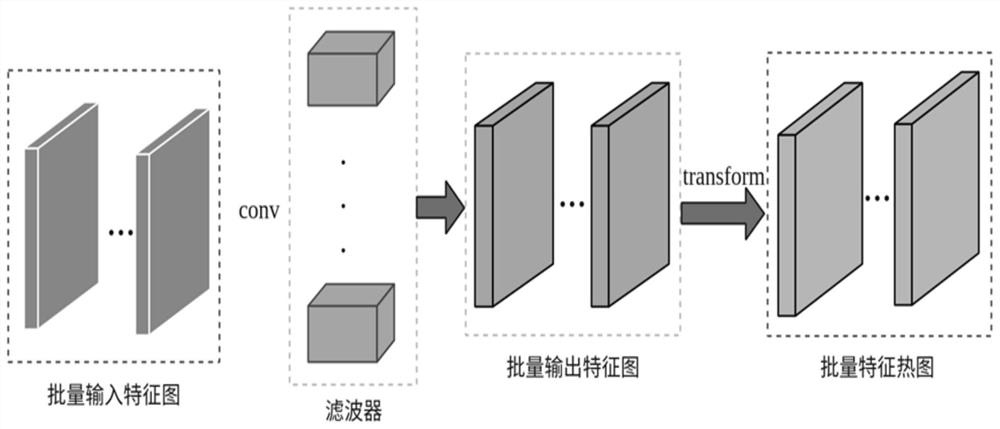

[0046] Taking the public dataset CIFAR-10 and the classic network model VGG16, pre-trained on ImageNet, as examples, the specific implementation of the neural network filter pruning method based on batch feature heat maps of the present invention is described in further detail with reference to the accompanying drawings. Figure 1 is a flow chart of the overall structure for filter pruning according to the present invention.

[0047] Step 1: Load the pre-trained convolutional neural network model VGG16 and its related configuration files, then fine-tune the pre-trained model on the CIFAR-10 dataset for dozens of epochs to obtain the fine-tuned model.

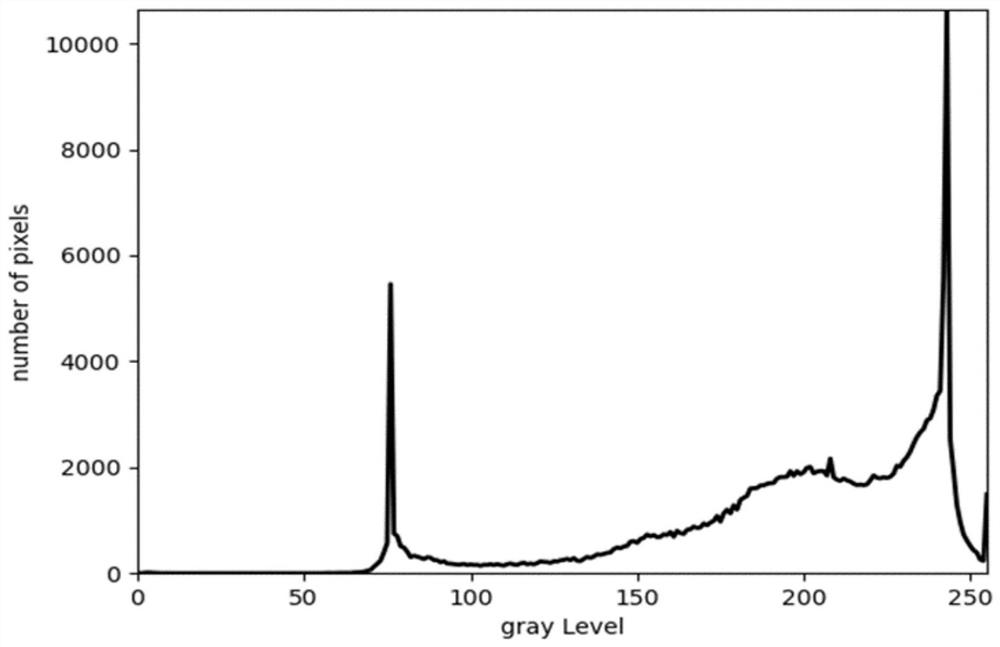

[0048] Step 2: Randomly extract 128 images from the CIFA...
