Finger vein recognition method based on multi-semantic feature fusion network

A finger vein recognition method based on feature fusion, applied in the fields of biometric recognition, information security, and finger vein image recognition. It addresses problems such as network overfitting and the inability to extract semantic features, and improves the recognition rate.

Embodiment Construction

[0025] The specific embodiments of the present invention will be further described below in conjunction with the accompanying drawings.

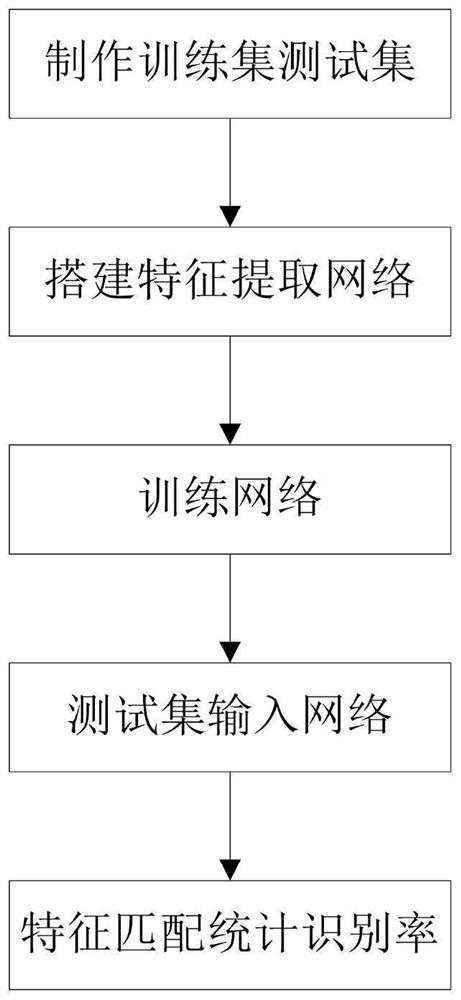

[0026] As shown in Figure 1, the finger vein recognition method based on the multi-semantic feature fusion network includes the following steps:

[0027] S1. Collect finger vein images, apply data augmentation to them, and build the training and test sets. For the trained convolutional neural network model to classify well, the training set must reach a sufficient size and the samples must be evenly distributed across categories: with too few samples, the model cannot generalize well, and with an uneven distribution, recognition of categories with few samples suffers. The existing finger vein images are therefore processed by rotation, translation, scaling,...
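Step S1 names rotation, translation and scaling as augmentations (the list is cut off) and asks for a training set that is balanced across categories. The sketch below illustrates one way to do this and is not the patent's implementation. It assumes grayscale images stored as 2-D NumPy arrays, nearest-neighbour resampling with zero fill, and arbitrary augmentation ranges (±10°, ±4 px, scale 0.9 to 1.1). The helpers `affine_augment`, `stratified_split` and `balance_classes` are hypothetical names.

```python
import numpy as np


def affine_augment(img, angle_deg=0.0, shift=(0.0, 0.0), scale=1.0):
    """Rotate about the image centre, scale, then translate by shift=(dy, dx).

    Uses inverse mapping with nearest-neighbour sampling; output pixels whose
    source falls outside the image are set to 0. Assumes a 2-D grayscale image.
    """
    h, w = img.shape
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    theta = np.deg2rad(angle_deg)
    cos_t, sin_t = np.cos(theta), np.sin(theta)
    ys, xs = np.indices((h, w), dtype=float)
    # Undo the translation and move the origin to the image centre.
    y = ys - cy - shift[0]
    x = xs - cx - shift[1]
    # Apply the inverse rotation and inverse scaling to find source coordinates.
    src_x = (cos_t * x + sin_t * y) / scale + cx
    src_y = (-sin_t * x + cos_t * y) / scale + cy
    src_xi = np.rint(src_x).astype(int)
    src_yi = np.rint(src_y).astype(int)
    valid = (src_xi >= 0) & (src_xi < w) & (src_yi >= 0) & (src_yi < h)
    out = np.zeros_like(img)
    out[valid] = img[src_yi[valid], src_xi[valid]]
    return out


def stratified_split(images, labels, test_fraction, rng):
    """Split per class so every class appears in both the training and test sets."""
    train_idx, test_idx = [], []
    for cls in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == cls))
        n_test = max(1, int(round(len(idx) * test_fraction)))
        test_idx.extend(idx[:n_test])
        train_idx.extend(idx[n_test:])
    return images[train_idx], labels[train_idx], images[test_idx], labels[test_idx]


def balance_classes(images, labels, target_per_class, rng):
    """Add random affine copies until each class has at least target_per_class samples."""
    out_imgs, out_labels = [], []
    for cls in np.unique(labels):
        cls_imgs = [im for im, lb in zip(images, labels) if lb == cls]
        out_imgs.extend(cls_imgs)
        out_labels.extend([cls] * len(cls_imgs))
        # Cycle through the originals so every image contributes augmented copies.
        for k in range(target_per_class - len(cls_imgs)):
            base = cls_imgs[k % len(cls_imgs)]
            aug = affine_augment(
                base,
                angle_deg=rng.uniform(-10, 10),          # assumed range
                shift=tuple(rng.uniform(-4, 4, size=2)),  # assumed range (pixels)
                scale=rng.uniform(0.9, 1.1),              # assumed range
            )
            out_imgs.append(aug)
            out_labels.append(cls)
    return np.stack(out_imgs), np.array(out_labels)
```

In this sketch, the split is made before augmentation and only the training set is balanced. This keeps augmented copies of a test image out of the training set. The patent text lists augmentation first, so this ordering is a design choice of the example, not of the source.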