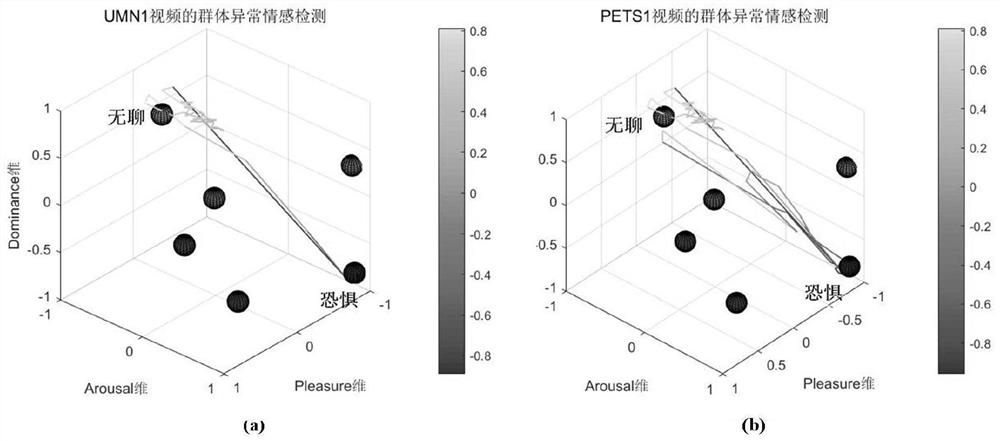

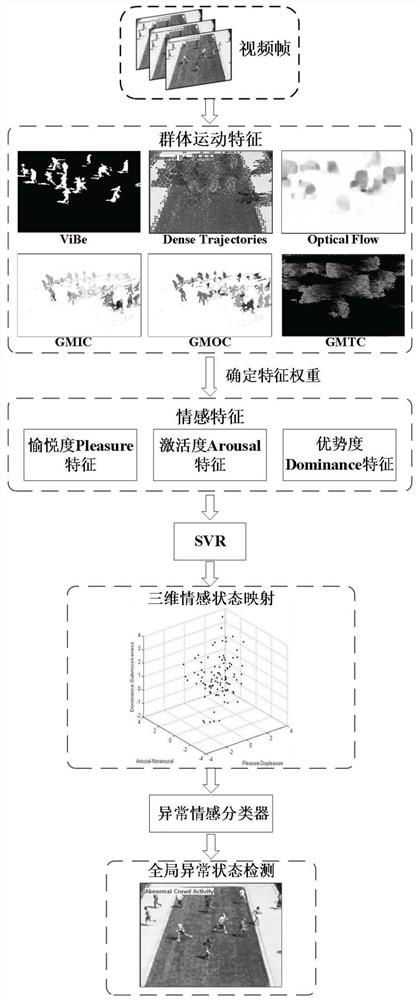

Group emotion recognition and abnormal emotion detection method based on a dimensional emotion model

A group emotion recognition and abnormal emotion detection method, applied in character and pattern recognition, acquisition/recognition of facial features, instruments, etc. It addresses the limited expressiveness of discrete emotion models and achieves a more accurate expression of emotion.

Examples

Embodiment 1

[0086] In step S2, an emotion labeling system is designed according to the manual labeling strategy. The system expresses the P-dimension value through the facial expression of a character model, the A-dimension value through the intensity of a heart's vibration, and the D-dimension value through the size of a small figure.

[0087] There are two main ways of constructing emotion datasets: acting and excerpting. In the acted approach, a performer (preferably with professional training) simulates a typical emotion type (joy, panic, sadness) through body movements. This approach gives clear emotional contrast and strong expressiveness, but a gap remains between acted and real emotion, and it demands considerable skill from the performers, so it does not generalize well. The excerpting approach uses manual labeling of video clips from real scenes to evaluate the emotional st...

Embodiment 2

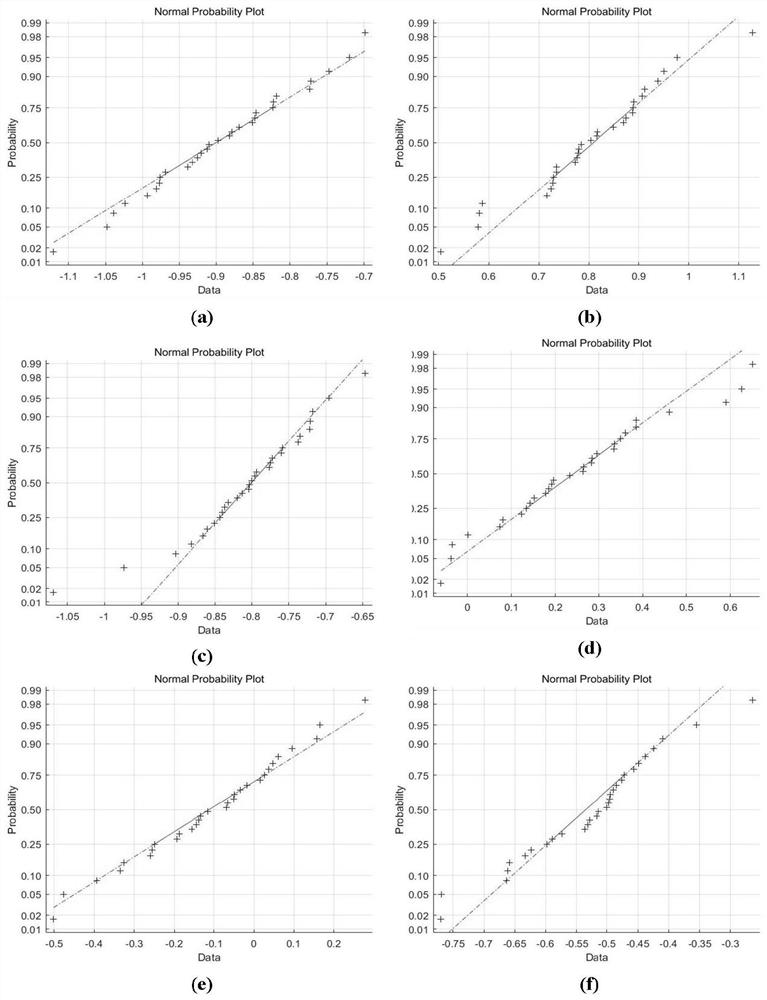

[0094] Consistency in step S4 is judged by computing the coefficient of variation. Three indicators of the PAD data are calculated and evaluated: the sample mean μ, the sample standard deviation σ, and the coefficient of variation CV, where the coefficient of variation is defined as:

[0095] $$CV = \frac{\sigma}{\mu}$$

[0096] If the coefficient of variation is small, the consistency of the verification label data is high; otherwise, the consistency of the verification label data is low.

[0097] For the PAD data of different video clips, the label values in the same dimension are analyzed statistically. A large coefficient of variation reflects a large dispersion relative to the mean, indicating that the consistency and certainty of the volunteers' scoring for this group are low; conversely, a small coefficient of variation indicates that the consistency and certainty of the volunteers' scoring for this group are higher. Generally speaking, for videos ...
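As a minimal sketch of the consistency check described in step S4, the three indicators can be computed for one PAD dimension of one video clip as follows. The function name `pad_consistency` and the sample scores are hypothetical. The division by the absolute value of the mean is an assumption made here so that signed PAD scales give a non-negative result; the source does not state how negative means are handled.

```python
import statistics


def pad_consistency(scores):
    """Return (mean, sample_std, cv) for one PAD dimension of one clip.

    scores: numeric ratings given by different volunteers to the same clip.
    """
    mu = statistics.mean(scores)
    sigma = statistics.stdev(scores)  # sample standard deviation (n - 1)
    if mu == 0:
        raise ValueError("coefficient of variation is undefined for a zero mean")
    # Assumption: use |mu| so the ratio stays non-negative on signed scales.
    cv = sigma / abs(mu)
    return mu, sigma, cv


# Hypothetical ratings of the same dimension for two clips.
tight = pad_consistency([4, 5, 6])
spread = pad_consistency([1, 5, 9])
print(tight[2] < spread[2])  # the tighter ratings have the smaller CV
```

A smaller CV for a clip means the volunteers' ratings agree more closely relative to their mean, which is the sense in which the text treats it as higher consistency.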

Embodiment 3

[0100] The extraction of group motion features in step S5 includes extraction of the foreground area, extraction of the optical flow feature, extraction of the trajectory feature, and graphical expression of the motion feature. The foreground area is extracted with the improved ViBe+ algorithm; after detection, the foreground region of the t-th frame is denoted R_t. The optical flow feature is extracted and visualized using the Gunnar Farneback dense optical flow field; for the t-th frame image, the optical flow offsets of the pixel (x, y) in the horizontal and vertical directions are u and v respectively. The trajectory feature is extracted with the iDT algorithm, which densely samples video pixels and uses optical flow to determine the position of each tracked point in the next frame, thereby forming a tracking trajectory expressed as T(p_1, p_2, …, p_L), where L ≤ 15. The graphical expression of the motion feature adopts the...
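The trajectory step can be illustrated with a simplified sketch: a point is moved from frame to frame by the optical flow offset (u, v) at its current position, and the track is cut off at L ≤ 15 points. This is not the iDT algorithm itself, which also involves dense sampling and further processing not shown here. The function `track_point` and the nested-list flow layout `flows[t][y][x] = (u, v)` are assumptions made for illustration.

```python
MAX_TRACK_LEN = 15  # the text bounds the trajectory length by L <= 15


def track_point(start, flows, max_len=MAX_TRACK_LEN):
    """Follow one point through successive dense flow fields.

    flows[t][y][x] is the (u, v) offset of pixel (x, y) from frame t to t + 1
    (hypothetical layout). Returns [p_1, ..., p_L] as (x, y) tuples, L <= max_len.
    """
    x, y = start
    trajectory = [(x, y)]
    for flow in flows:
        if len(trajectory) >= max_len:
            break  # trajectory has reached its maximum length
        height, width = len(flow), len(flow[0])
        xi, yi = int(round(x)), int(round(y))
        if not (0 <= xi < width and 0 <= yi < height):
            break  # the point has left the frame, so the track ends
        u, v = flow[yi][xi]
        x, y = x + u, y + v  # predicted position in the next frame
        trajectory.append((x, y))
    return trajectory


# Hypothetical input: 20 frames of uniform motion on a 40 x 40 grid.
uniform = [[[(1.0, 0.5)] * 40 for _ in range(40)] for _ in range(20)]
track = track_point((0, 0), uniform)
print(len(track), track[-1])
```

In practice the flow fields would come from a dense optical flow estimator such as the Farneback method named in the text; plain nested lists are used here only to keep the sketch self-contained.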
