Downstream task processing method and model for question answering tasks

A downstream task processing technology, applied in neural network learning methods, electrical digital data processing, and biological neural network models, that addresses problems such as the failure to perform semantic matching.


Detailed Description of the Embodiments

[0073] The present invention will be further described in detail below in conjunction with the accompanying drawings.

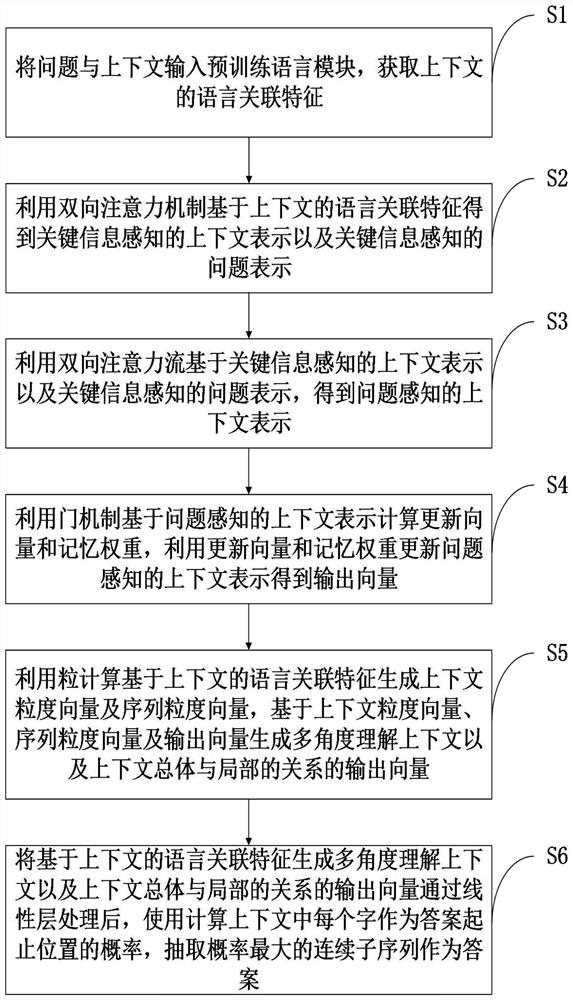

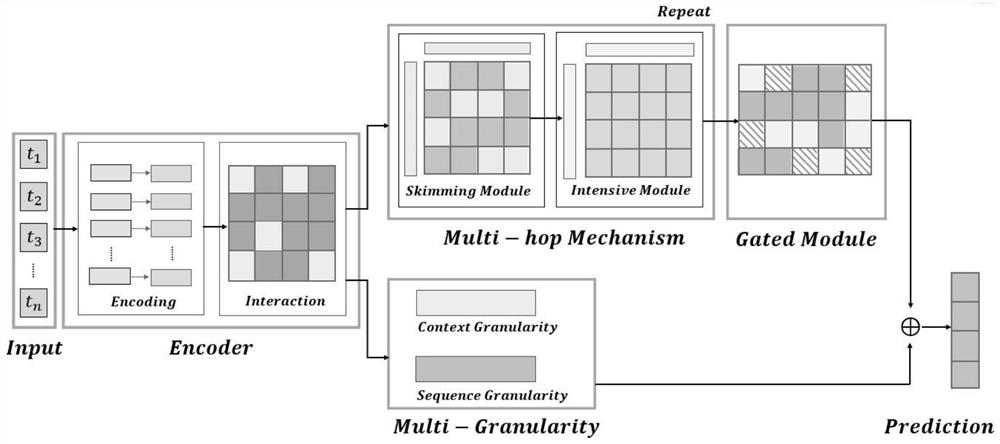

[0074] As shown in Figure 1, the present invention discloses a downstream task processing method for a question answering task, comprising the following steps:

[0075] S1. Input the question and the context into the pre-trained language module to obtain the language association features of the context;

[0076] Specifically, the question and the context are concatenated into a single sequence, which is fed into the pre-trained language module (i.e., the encoder) for encoding.
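The concatenation in S1 can be sketched as follows. This is a minimal illustration assuming a BERT-style input layout (`[CLS]` question `[SEP]` context `[SEP]`, with segment ids); the patent does not name the pre-trained model, its special tokens, or its tokenizer, and whitespace splitting stands in for a real subword tokenizer. The helper names `build_input_sequence` and `slice_context_features` are hypothetical.

```python
from typing import List, Sequence, Tuple

# Assumed BERT-style special tokens; the source does not specify them.
CLS, SEP = "[CLS]", "[SEP]"


def build_input_sequence(question: str, context: str) -> Tuple[List[str], List[int], Tuple[int, int]]:
    """Concatenate question and context into one encoder input.

    Returns the token list, segment ids (0 = question part, 1 = context part)
    and the half-open [start, end) span of the context tokens, so that the
    encoder outputs at those positions can be taken as the context features.
    """
    # Whitespace tokenization is a stand-in for a real subword tokenizer.
    q_tokens = question.split()
    c_tokens = context.split()
    tokens = [CLS] + q_tokens + [SEP] + c_tokens + [SEP]
    # [CLS], question tokens and the first [SEP] belong to segment 0.
    segment_ids = [0] * (len(q_tokens) + 2) + [1] * (len(c_tokens) + 1)
    start = len(q_tokens) + 2
    end = start + len(c_tokens)
    return tokens, segment_ids, (start, end)


def slice_context_features(features: Sequence, span: Tuple[int, int]) -> list:
    """Select the encoder output vectors that belong to the context tokens."""
    start, end = span
    return list(features[start:end])
```

For example, `build_input_sequence("who wrote it", "it was written by Ada")` places the five context tokens at positions 5 through 9, so the encoder outputs at those positions would serve as the context features.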

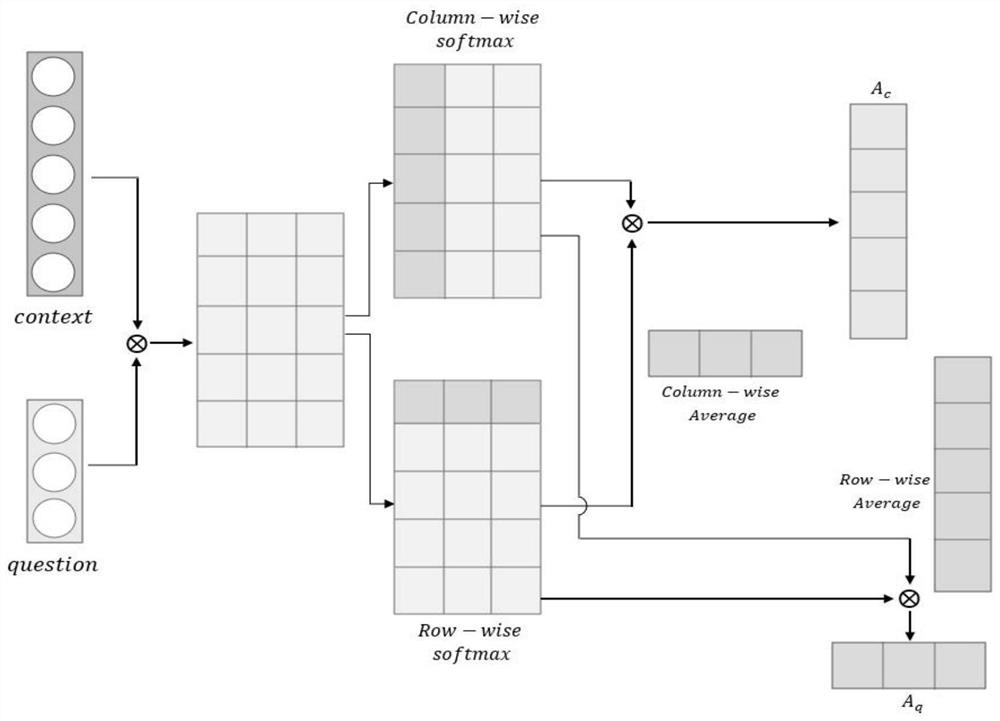

[0077] S2. Based on the language association features of the context, use a bidirectional attention mechanism to obtain the key-information-aware context representation $H_{CKey}$ and the key-information-aware question representation $H_{QKey}$;
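The source does not give the formulas of the bidirectional attention in S2. A minimal sketch, assuming scaled dot-product attention in each direction (each context token attends over the question, each question token attends over the context) followed by a residual addition, could look like this. The function names and the residual combination are assumptions for illustration, not the patented design.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def attend(queries: Matrix, keys: Matrix) -> Matrix:
    """Scaled dot-product attention in which the keys also serve as values."""
    d = len(keys[0])
    out = []
    for q in queries:
        weights = softmax([dot(q, k) / math.sqrt(d) for k in keys])
        out.append([sum(w * k[t] for w, k in zip(weights, keys)) for t in range(d)])
    return out


def key_aware_representations(context: Matrix, question: Matrix) -> Tuple[Matrix, Matrix]:
    """Return (H_CKey, H_QKey): attention in both directions plus a residual add (assumed)."""
    c2q = attend(context, question)  # each context token gathers question information
    q2c = attend(question, context)  # each question token gathers context information
    h_ckey = [[c + a for c, a in zip(row, att)] for row, att in zip(context, c2q)]
    h_qkey = [[q + a for q, a in zip(row, att)] for row, att in zip(question, q2c)]
    return h_ckey, h_qkey
```

Both outputs keep the shapes of their inputs, so $H_{CKey}$ has one row per context token and $H_{QKey}$ one row per question token.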

[0078] S3. Using bidirectional attention flow based on the key-information-aware context representation $H_{CKey}$ and the key information Pe...
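S3 is truncated in the source. To illustrate what a bidirectional attention flow layer computes, the sketch below follows the standard BiDAF formulation (Seo et al.): a trilinear similarity $S_{tj} = w^\top [h_t; u_j; h_t \circ u_j]$, context-to-question and question-to-context attention, and the fused output $G_t = [h_t; \tilde{u}_t; h_t \circ \tilde{u}_t; h_t \circ \tilde{h}]$. Feeding $H_{CKey}$ as the context input and assuming the second input is $H_{QKey}$ are assumptions; the weight vector `w` would be learned in practice and is passed in fixed here. The function name `bidaf_attention_flow` is hypothetical.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[Vector]


def softmax(xs: Vector) -> Vector:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_sum(weights: Vector, rows: Matrix) -> Vector:
    return [sum(w * r[k] for w, r in zip(weights, rows)) for k in range(len(rows[0]))]


def bidaf_attention_flow(h: Matrix, u: Matrix, w: Vector) -> Matrix:
    """BiDAF-style attention flow layer.

    h: T context vectors of size d (assumed here to be H_CKey)
    u: J question vectors of size d (assumed here to be H_QKey)
    w: weight of size 3d for the trilinear similarity (learned in practice)
    Returns G with T rows of size 4d.
    """
    d = len(h[0])
    assert len(w) == 3 * d, "w must have size 3d"
    # Similarity matrix S[t][j] = w . [h_t ; u_j ; h_t * u_j]
    s = []
    for ht in h:
        row = []
        for uj in u:
            features = ht + uj + [a * b for a, b in zip(ht, uj)]
            row.append(sum(wk * x for wk, x in zip(w, features)))
        s.append(row)
    # Context-to-question: each context position attends over the question vectors.
    u_tilde = [weighted_sum(softmax(row), u) for row in s]
    # Question-to-context: weight context positions by their best match with any question vector.
    b = softmax([max(row) for row in s])
    h_tilde = weighted_sum(b, h)  # one vector, shared (tiled) across all context positions
    g = []
    for ht, ut in zip(h, u_tilde):
        g.append(ht + ut
                 + [x * y for x, y in zip(ht, ut)]
                 + [x * y for x, y in zip(ht, h_tilde)])
    return g
```

Because the question-to-context vector $\tilde{h}$ depends only on each context position's best match, it is the same for every row of $G$, which is why it is computed once and reused.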
